Contents

Editorial

Editorial: Who Owns The Front Door to AI? If it isn't you, it’s game over.

Essay

AI

‘You will have AI friends’: Character.ai bets on companionship chatbots

The New AI Data Trade: Web Publishers and Startups Look to Cash In

Meta Restructures AI Group Again in Pursuit of Superintelligence

Alphabet hits intraday record high on $10B Meta cloud deal, Apple Gemini talks

AI Mode in Search gets new agentic features and expands globally

OpenAI Crosses $12 Billion ARR: The 3-Year Sprint That Redefined What’s Possible in Scaling Software

Google’s AI Mode expands globally, adds new agentic features

Venture

China

Interview of the Week

Startup of the Week

Post of the Week

Editorial

Who Owns The Front Door to AI? If it isn’t you, it’s game over.

This week’s readings suggest that we are evolving toward “the browser inside AI” rather than “AI inside the browser.” The chat interface becomes the place we start, the place we finish, and the ledger where credit and money move. If you don’t own that front door, you’re not relevant to the future.

Chat, not the browser, is becoming the default interface to AI. A prompt is no longer a curiosity machine; it’s a work order. Tell the assistant what you want, and it reads, routes, fetches, compares, books, and returns a result—often with a receipt rather than a pageview. The old browser assumed human eyes on HTML. The new interface assumes an agent on your behalf. We are shifting from “look here” to “do this.” The browser may well survive, but only if it ceases to be a browser as we have known it.

What the browser gave us—address bar, tabs, history, bookmarks, extensions—now migrates into the assistant. Chrome’s AI Mode from Google is precisely that. The address bar collapses into an intent bar. Tabs become threads. Bookmarks become memory. History becomes context. Passwords become keys held by the agent. Extensions become tools. That migration is not cosmetic; it reassigns power. The surface where a session begins and ends is the place that captures trust, preference, and payment. That used to be the browser. It’s now the chat window.

Links don’t die; they change jobs. We will still click, but less to discover and more to consummate the end of an AI conversation with a reference or a purchase. The link will verify and hand off. A link becomes a function, not the product. Agents will generate lightweight, ephemeral “views” when we need to inspect a document, compare two items, buy something, or sign off on a decision. The assistant owns the flow; destinations supply the evidence.

The unit of value flips from pageviews to completions. When an assistant reads and acts for us, attribution is not a courtesy; it’s the spine of the economy. We need content and products with durable identity; we need usage logs that travel with the work; we need money that moves when those logs do. The winner here is not whoever renders the prettiest page; it’s whoever runs the job router, surfaces the content or item, and operates the settlement rail behind it.

Attachment becomes the moat. The first assistant that gets your taste and constraints right becomes habit; the tenth is a demo. An AI that can deliver this will be hard to give up. Switching costs aren’t just technical anymore; they’re cognitive and emotional. You will not want to fire the assistant that holds your preferences, workflows, and memory, because it is your muscle memory. That’s a different kind of lock-in than the web’s link-based gravity, and it lives through your preferred front door.

This is why the browser can’t “host” AI and keep control. Embedding a model into a browser UI doesn’t change the logic of the session. The assistant determines which sources to consult, what to buy, how to book, and when to escalate to a human view. The browser becomes a renderer of last resort: useful, but subordinate. To survive, the browser has to morph into this new thing. It will no longer be a URL entry box delivering a web page, although it may be able to do that as well.

The benefits we loved in browsers survive, but they’ll live inside the assistant’s fabric: identity and permissions, sandboxing and safety, extensibility and performance. The front door moves; the furniture comes with it. That is why Google’s extension of AI Mode to 180 countries this week is both good and bad. Good that Google understands the importance of AI Mode. Bad that it believes a browser is the best place to deliver it. I predict it will redefine the browser even more, and soon.

Links in AI are their own subject. The rails for this need to be built, and they are unglamorous and decisive. We’ve invested in models but under-invested in the infrastructure required to transform the internet into an AI-native internet. What is an AI-native internet? It is one where content is sourced from AI conversations. The article below about historical documents and AI is another example of a gap to be filled.

Agents need a rights-aware registry so that their training knowledge has SKUs and the ability to surface links to them, with provenance, freshness, and permission encoded at the source. They need a real-time link graph so product, price, and availability are queryable without scraping. They need auditable logs and standardized receipts so creators, publishers, and merchants actually get paid. Without these rails, we’re just pasting a chat box onto the old web and praying.
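To make those rails a little more concrete, here is a minimal sketch of what a registry entry, a receipt, and an auditable usage log might look like. Everything in it is an assumption for illustration: the class names, the fields, the per-use fee, and the example publisher are hypothetical and do not describe any existing standard or product.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class RegistryEntry:
    """Hypothetical rights-aware record: durable ID plus provenance, freshness, permission."""
    sku: str                  # durable identifier for a piece of content or a product
    source_url: str           # provenance: where the item lives
    owner: str                # who should be paid when the item is used
    last_updated: date        # freshness
    allowed_uses: frozenset   # permission, e.g. {"quote", "summarize"}
    fee_cents: int            # illustrative per-use fee


@dataclass
class Receipt:
    """Hypothetical standardized record of one use of one registered item."""
    sku: str
    owner: str
    use: str
    fee_cents: int


@dataclass
class UsageLog:
    """Append-only list of receipts from which settlement can be computed."""
    receipts: list = field(default_factory=list)

    def record(self, entry: RegistryEntry, use: str) -> Receipt:
        # Refuse any use the owner has not permitted.
        if use not in entry.allowed_uses:
            raise PermissionError(f"{use!r} not permitted for {entry.sku}")
        receipt = Receipt(entry.sku, entry.owner, use, entry.fee_cents)
        self.receipts.append(receipt)
        return receipt

    def settle(self) -> dict:
        # Sum what is owed to each owner, in cents.
        owed = {}
        for r in self.receipts:
            owed[r.owner] = owed.get(r.owner, 0) + r.fee_cents
        return owed


# Illustrative usage with made-up values.
article = RegistryEntry(
    sku="sku-001",
    source_url="https://example.com/article",
    owner="Example Publisher",
    last_updated=date(2025, 8, 20),
    allowed_uses=frozenset({"quote", "summarize"}),
    fee_cents=5,
)
log = UsageLog()
log.record(article, "summarize")
log.record(article, "quote")
print(log.settle())
```

The point of the sketch is the coupling: permission is checked where the item is registered, every use produces a receipt, and payment is derived from the log rather than from pageviews.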

There is a lot of noise about the poor scraping-to-visitor ratio of AI crawlers compared with search crawlers. This will be fixed, guaranteed. Publishers and merchants aren’t doomed; they’re unprepared. The assistant can increase, not cannibalize, the value of destinations if it sends fewer but better visits: intent-qualified traffic with usage records attached. But that future requires exposing structured endpoints (catalogs, prices, inventory, licensing) and accepting that the conversation is where discovery and decision happen. If your business depends on being the place where the journey begins, prepare to be disintermediated. If your business wins when the right buyer arrives with the right intent, agents are a gift, provided attribution and settlement are real. A new infrastructure for link-rich content, as part of both training and real-time RAG, is required here.

Speed is table stakes; correctness is the game. The “move fast” era produced dazzling demos; the durable winners will ship routing that is consistently right: the right source, tool, and policy every time. That demands governance, observability, and predictable behavior under constraints. It looks like operations, not theater—and it’s exactly what earns the authority to sit at the front door.

Voice and ambient computing finish the job. The more natural the interface, the less we tolerate detours. If asking is faster than hunting, the assistant wins every time—on phones, in cars, through earbuds, across desktops. The browser becomes a specialized instrument we pull out when a human view is the point. Most days, it won’t be.

So let’s say the quiet part out loud. The chat interface replaces the browser as the primary user interface for computing on the web. The browser is morphing into that. So Google still has a chance to own the front door if it is decisive, but OpenAI and Anthropic are the current leaders.

The browser’s benefits—security, portability, extensibility—don’t vanish; they’re absorbed. The question isn’t whether a browser can bolt on AI. It’s whether your assistant can absorb the browser’s best features while running the economy of completions: identity, permission, routing, and settlement—at scale, with receipts.

Own the front door, and you set the rules for trust, traffic, and payment. Fail, and you will keep shipping ever-better pages into someone else’s conversation, praying to be surfaced by a concierge you don’t control. The web taught us to think in clicks. The next web will count completions. The front door is the chat window. The rest is furniture.

Bottom line: Winners will own a trusted front door backed by standards, auditing, and settlement, and will help teams change how they work and help consumers find what they want without dethroning content owners. Everyone else will keep shipping demos into a narrowing feed.

Essay

Opinion | AI Is the Future, but It Knows Little of the Past

WSJ • August 20, 2025

AI•Data•Digitization

The technology is limited to those pages it can see, and most original records aren’t yet digitized.

John Masko rightly observes that “AI Won’t Replace Historians” (op-ed, Aug. 18) because a computer can’t replicate “the human spark.” But another dynamic currently makes it impossible for AI to do historical research. According to the U.S. National Archives and Records Administration, only 421.8 million pages out of an estimated 12 billion in their collections have been digitized. This is merely one of thousands of archives around the world, most of which are also largely undigitized and not likely to become fully available online anytime soon.

AI can be an asset to historians. Researchers at Columbia’s History Lab, for example, have begun developing AI tools to augment historians’ analysis of digitized sources. These resources may allow historians to produce ground-breaking scholarship. But AI is limited to those pages it can see. Most original records—key sources of new insight—are accessible only to humans. That means historical research will, for the foreseeable future, require real people to travel to real places and sift through real pages.

Digitization is a net good and should be accelerated. It democratizes access to records and helps historians study them in new ways. But since most historical evidence exists only in the physical world, we need to train, employ and fund more human historians to study a past that is invisible to AI.

Unresolved debates about the future of AI

Helentoner • Helen Toner • June 30, 2025

Essay•AI•Scaling•Reasoning•AIImprovingAI

The problem that made me want to give this talk today was a recurring one.

An early instance was in 2022 when we had, very close to each other, headlines about deep learning hitting a wall and then ChatGPT launching a whole new revolution.

Last year, we had coverage from the Wall Street Journal—really good reporting—about real challenges inside OpenAI with scaling up their pre-trained models and how difficult that was and how they weren't happy with the results, and then on the literal same day we had the release of o3, the next generation of their reasoning model, and François Chollet—who's famously skeptical—saying that it was a significant breakthrough on his ARC-AGI benchmark. So these very contradictory takes, both of which had some truth to them.

Then last month, two big publications that I'm guessing many folks here have seen. One making the case that AI is just a normal technology and it's going to follow the same path we've seen in many previous technologies, the other describing how we might have AGI by 2027, superintelligence by 2030, and an absolutely radically transformed world. Again, very different perspectives, both of which have some truth to them.

So basically, this talk is for anyone who—raise your hand if you’ve ever been personally victimized by the onslaught of AI news and how contradictory it is? Yeah, this talk is for you. If you didn’t raise your hand you can leave, I won’t take offense, it’s fine.

A friend saw the title of the talk and said, "Wait, how did you get them to give you a nine-hour slot to cover all the unresolved debates?" But we're just going to talk about three debates. We’re going to focus on technical debates, meaning how AI will evolve as a technology, not what are we going to do about it or how will it affect society. And debates that are the most relevant in my view or are, according to me, a helpful way of breaking down some of the disagreements.

So in other words, we're not going to talk about timelines to AGI, and we're not going to talk about p(doom), because I think both of those are not as productive.

Here are the three debates that I want to talk about: How far can the current paradigm go? How much can AI improve AI? And will future AI still basically be tools, or will they be something else?

#1: How far can the current paradigm go?

People often talk about "is scale all you need" or "can you scale language models all the way to AGI?" I don't think this is that helpful of a question, or I think it's a little bit misguided. I would rather ask: are we on a good branch of the tech tree?

Is AI a “Normal Technology”?

O’Reilly • August 19, 2025

Essay•AI•Normal Technology

We think we see the world as it is, but in fact we see it through a thick fog of received knowledge and ideas, some of which are right and some of which are wrong. Like maps, ideas and beliefs shape our experience of the world. The notion that AI is somehow unprecedented, that artificial general intelligence is just around the corner and leads to a singularity beyond which everything is different, is one such map. It has shaped not just technology investment but government policy and economic expectations.

But what if it’s wrong?

The best ideas help us see the world more clearly, cutting through the fog of hype. That’s why I was so excited to read Arvind Narayanan and Sayash Kapoor’s essay “AI as Normal Technology.” They make the case that while AI is indeed transformational, it is far from unprecedented. Instead, it is likely to follow much the same patterns as other profound technology revolutions, such as electrification, the automobile, and the internet.

That is, the tempo of technological change isn’t set by the pace of innovation but rather by the pace of adoption, which is gated by economic, social, and infrastructure factors, and by the need of humans to adapt to the changes. (In some ways, this idea echoes Stewart Brand’s notion of “pace layers.”)

What Do We Mean by “Normal Technology”?

Arvind Narayanan is a professor of computer science at Princeton who also thinks deeply about the impact of technology on society and the policy issues it raises. He joined me last week on Live with Tim O’Reilly to talk about his ideas. I started out by asking him to explain what he means by “normal technology.” Here’s a shortened version of his reply. (You can watch a more complete video answer and my reply here.)

There is, it turns out, a well-established theory of the way in which technologies are adopted and diffused throughout society. The key thing to keep in mind is that the logic behind the pace of advances in technology capabilities is different from the logic behind the way and the speed in which technology gets adopted. That depends on the rate at which human behavior can change. And organizations can figure out new business models. And I don’t mean the AI companies.

There’s too much of a focus on the AI companies in thinking about the future of AI. I’m talking about all the other companies who are going to be deploying AI.

So we present a four-stage framework. The first stage is invention. So this is improvements in model capabilities.…The model capabilities themselves have to be translated into products. That’s the second stage. That’s product development.

How Social Media Shortens Your Life

Gurwinder • Gurwinder • August 3, 2025

Essay•Media•Social Media•Time Perception•Attention Economy

I. THE NORMALISATION OF AMNESIA

The most common noun in the English language is “time”. We talk obsessively about time because it’s the most important thing in the universe. Without it, nothing can happen. And yet most of us treat time as if it’s the least important thing. We kick up a fuss when tech giants steal our data, but we’ve been strangely nonchalant as those same companies carry out the greatest heist of our time in history.

One reason for our indifference is that the true scale of the theft has been hidden from us. Social media platforms have been stealing our time using a sneaky trick: they’ve been speeding up our sense of time — effectively shortening our lives — so we think we had less than we did, and don’t notice some of it was pilfered.

Every social media user has experienced the theft of their time. You may log on to quickly check your notifications, and before you know it, half an hour has gone by and you’re still on the platform, unable to account for where the time went. This phenomenon even has a name: the “30-minute ick factor”. It also has empirical support. Experiments have found that people using apps like TikTok and Instagram start to underestimate the time they’re on such platforms after just a few minutes of use, even when they’re explicitly told to keep track of time.

To understand how social media warps time, we must understand time perception, or chronoception. Even outside of our heads, time doesn’t move at a constant pace. It is, for instance, slowed by gravity. This is why the Earth’s core is 2.5 years younger than its surface. Just as massive objects can slow objective time, so weighty experiences can slow subjective time. It’s why people tend to overestimate the duration of earthquakes and accidents (or in fact any scary situation).

Generally, an event feels longer in the moment if it heightens awareness. But we seldom think of time in the moment; the majority of our sense of time is retrospective. And our sense of retrospective time is determined by awareness of the past: in other words, by memory. The more we remember of a certain period, the longer that period feels, and the slower time seems to have passed.

Sometimes an experience can seem brief in the moment but long in memory, and vice versa. A classic example is the “holiday paradox”: while on vacation, time speeds by because you’re so overwhelmed by new experiences that you don’t keep track of time. But when you return from your vacation, it suddenly feels longer in retrospect, because you made many strong memories, and each adds depth to the past.

Conversely, when you’re waiting at a boring airport, you keep checking the clock, and this acute awareness of time causes it to pass slowly in the moment. But since the wait is uneventful, you don’t make strong memories of the experience, and so in retrospect it seems brief.

Now, a sinister thing about social media is that it speeds up your time both in the moment and in retrospect. It does this by simultaneously impairing your awareness of the present and your memory of the past.

We Are Only Beginning to Understand How to Use AI

O’Reilly • August 21, 2025

Essay•AI•GoogleDocs

I once flew to another country for a meeting where we annotated a proposed standard by projecting a Word document and calling out edits, debating each change in the room before accepting or discarding it. This was long after collaborative online editing existed. I kid you not.

It was post–Google Docs (its precursor, Writely, was introduced in 2005), yet the process felt stranded in an earlier era. Many will remember emailing Word attachments, then reconciling divergent versions, habits that persisted for years in many places and in some contexts still do.

I am become human google doc, incorporator of interagency feedback

This lag between invention and understanding how to use it is the point of Arvind Narayanan and Sayash Kapoor’s “AI as Normal Technology.” Early electrified factories simply swapped steam engines for large electric motors without rethinking layout; only later did distributed small motors transform production. New technologies pass through stages—discovery, diffusion, product development, and adaptation—unfolding over time.

James Bessen calls this “learning by doing”: people try things, share what works, and collectively expand the frontier of what’s possible. In 2005, email attachments still ruled until a small team built Writely, revealing how the internet could enable real-time collaborative editing with version control hidden from view. Acquired by Google in 2006, it became Google Docs. Today, with AI, we face a similar choice: retrofit old processes, or explore the technology’s native possibilities.

AI

Will AI Replace Data and Analytics Engineers?

SQL Patterns • August 16, 2025

AI•Jobs•Data Engineering

When ChatGPT first burst on the scene in early 2023, with its ability to give seemingly intelligent answers and write seemingly perfect code, I have to admit I was worried.

I thought I’d become obsolete.

I thought our entire industry would be handed over to AI and I’d have to switch careers.

That’s not exactly what happened but things have definitely changed.

Two years later, with AI hype at an all time high, one thing is clear to me.

Data and analytics engineering work is not going anywhere!

Instead, what’s happening is that data roles are becoming more multi-faceted. Data analysts are doing data science; data scientists are doing data engineering; data engineers are doing software engineering.

As a self-proclaimed data generalist (I’ve done analytics, data engineering and even some data science) this was more than welcome, but what I didn’t expect was for me to get into software engineering this late in my career.

While my background is in computer science, I never wanted to get into software engineering, so when my manager asked me to dive into Python I was a little concerned. I had never written a single line of Python in my life 😁!

Copilot to the rescue!

Once I realized I could use GenAI / LLMs to help me write the code, I noticed something very interesting. Not knowing how to write Python from scratch didn’t hinder me. As long as I could read AI-generated code and understand what it was doing I was fine!

The key skill for me shifted from writing code to writing very clear design docs; and to write very clear design docs, you need to be REALLY good at system design and architecture.

I soon realized data system design was a skill that wasn’t going anywhere. In fact, many of the solutions I’ve built even required embedding LLM capabilities into software.

Sure you can build apps with a single prompt nowadays, but are these apps easy to maintain, debug, update or are they a bunch of AI “slopware?”

Big Tech Is Eating Itself in Talent War

WSJ • August 16, 2025

AI•Jobs•ReverseAcquihire

The scramble by tech companies for top AI talent is using unorthodox methods that imperil Silicon Valley’s startup culture.

Big Tech’s appetite for elite AI researchers has become so intense that it now threatens the very ecosystem that feeds it. Companies are showering candidates with eye-watering compensation and deploying novel tactics to secure scarce expertise—moves that may help in the near-term AI race while weakening the startup engine they depend on.

Along with offers rumored to reach $1 billion, Microsoft, Meta, Amazon and Alphabet are leaning into a “reverse acquihire” playbook: rather than buying startups outright, they recruit founders and core researchers—or sign sweeping tech-licensing deals—leaving the rest of the company to pivot or be absorbed elsewhere.

Microsoft followed this template with Inflection AI, bringing in Mustafa Suleyman to steer Copilot and paying the company a $650 million licensing fee. Meta used a variant in June with Scale AI, pairing a multibillion-dollar investment with the recruitment of CEO Alexandr Wang and a cohort of Scale staffers.

These maneuvers fit the moment. They happen fast in what many view as a once-in-a-generation sprint, avoid the headaches of post-merger integration, and—crucially—don’t require regulatory signoff at a time of heightened antitrust scrutiny for the largest platforms.

Startups have their own incentives. Poached researchers can command salaries on par with star athletes. Venture backers, while rarely seeing blockbuster exits from such deals, often avoid total write-offs, according to industry executives.

But the cultural costs are mounting. Silicon Valley’s bargain has long been extreme risk in exchange for the possibility of outsized rewards—especially for employees who sign on for equity in lieu of cash. When a reverse acquihire hollows out a company, those left behind frequently miss the payoff they were working toward.

One vivid example: after Google struck a $2.4 billion deal that drew key leaders out of Windsurf this July, remaining staffers reportedly wept in the office. OpenAI had earlier discussed a more traditional $3 billion acquisition, but the team that stayed likely didn’t receive the windfall they imagined. The worry among founders and investors is that, if this pattern persists, ambitious builders will skip startups altogether and head straight to Big Tech—shrinking the talent pool that makes the whole system run.

‘You will have AI friends’: Character.ai bets on companionship chatbots

FT • August 16, 2025

AI•Tech•CharacterAI•AICompanions•Dependency

Company argues persona-based chatbots will aid real-life interactions but critics say they create dependency

The chief executive of artificial intelligence chatbot maker Character.ai believes most people will have “AI friends” in the future, as it faces a string of lawsuits over alleged harm to children and advocacy groups call for a ban on “companionship” apps.

The San Francisco-based start-up — backed by top Silicon Valley investors such as Andreessen Horowitz and a past acquisition target of Meta — is at the vanguard of tech groups building AI-powered chatbots with different personas that interact with people.

It offers AI chatbots that have characters such as “Egyptian pharaoh”, “an HR manager” or a “toxic girlfriend”, which have proved popular with young users. “They will not be a replacement for your real friends, but you will have AI friends, and you will be able to take learnings from those AI-friendly conversations into your real-life conversations,” said Karandeep Anand, who took over as CEO in June.

He was appointed just under a year after Google poached the founders of Character.ai in a $2.7bn deal. The company said it had 20mn monthly active users, with about half of those female and 50 per cent Gen Z or Alpha, people born after 1997.

The New AI Data Trade: Web Publishers and Startups Look to Cash In

WSJ • August 17, 2025

AI•Publishing•DataLicensing•DataBrokers•CreatorEconomy

A podcast series about an emerging ecosystem of data brokers who license or sell content from creators to artificial-intelligence companies.

How AI will change the browser wars

FT • August 21, 2025

AI•Tech•BrowserWars•AIAgents•SearchDisruption

Artificial intelligence is reshaping how people get things done online, and with it, the balance of power in the browser market. For two decades, browsers have been passive windows onto a web designed for humans to click links, scroll pages and see ads. Now AI systems can read, summarize and act on content directly, shrinking the role of manual browsing and threatening the ad-funded economics that sustained the open web.

The battle is shifting from rendering pages quickly to controlling an intelligent gateway that interprets a user’s intent. That helps explain why controlling the browser has become newly strategic. Recent moves — from aggressive AI integrations to headline‑grabbing proposals around who should run the world’s most popular browser — reflect a scramble to shape the default path by which users and AI systems reach information, shops and services.

Three models are emerging. First, the human‑in‑the‑loop browser, which keeps the familiar interface but layers in assistants that summarize pages, fill forms and organize research. Second, chatbot‑led browsing, where a conversational interface does the navigating and returns synthesized answers, with the browser working in the background. Third, fully automated agents that increasingly bypass pages altogether, negotiating via APIs or task‑specific protocols and returning outcomes instead of links.

If AI reduces the time people spend on websites, the value of scarce human attention could rise for destinations that deliver clear utility or community — while generic traffic risks being cannibalized by machine visits. That creates hard questions for publishers and retailers about attribution, licensing and margins when AI intermediaries do the reading. It also raises regulatory issues around defaults, data access and whether one company’s assistant should be allowed to dominate discovery in the same way one search engine once did.

The next phase of the browser wars will be decided less by speed tests and more by who owns the assistant layer, the standards it sets and the commerce it routes — and by whether the “web page” remains the unit of interaction at all.

Meta Restructures AI Group Again in Pursuit of Superintelligence

Bloomberg • August 19, 2025

AI•Tech•Meta

Meta Platforms Inc. is splitting its newly formed artificial intelligence group into four distinct teams and reassigning many of the company’s existing AI employees, an attempt to better capitalize on billions of dollars’ worth of recently acquired talent.

The new structure is meant to “accelerate” the company’s pursuit of so-called superintelligence, according to an internal memo sent Tuesday by Alexandr Wang, the former Scale AI chief executive officer who recently joined Meta as chief AI officer.

“Superintelligence is coming, and in order to take it seriously, we need to organize around the key areas that will be critical to ...

Alphabet hits intraday record high on $10B Meta cloud deal, Apple Gemini talks

YouTube • CNBC Television • August 22, 2025

AI•Tech•Alphabet

Alphabet’s shares touch an intraday record high as investors react to reports that Meta has agreed to a roughly $10 billion cloud deal with Google. The move underscores accelerating demand for AI-ready infrastructure and highlights Google Cloud’s momentum as hyperscalers compete to supply compute, storage and networking for large-scale AI workloads.

The reported agreement positions Meta to expand capacity for training and serving AI systems while diversifying infrastructure partners. For Alphabet, the news reinforces the strategic role of its cloud business in the AI cycle, complementing ongoing investments in models and data center buildouts. Market action reflects optimism that long-duration cloud contracts tied to AI will translate into durable revenue visibility for providers.

In parallel, reports of Apple in talks with Google about using Gemini elevate attention on potential distribution for Google’s generative AI across Apple devices. Any integration discussions emphasize how model access, default placements and on-device versus cloud inference choices could shape user experience and traffic patterns in the broader ecosystem.

Together, a marquee cloud commitment from a leading social platform and high-profile conversations around Gemini signal how AI partnerships are being negotiated across infrastructure, platforms and consumer endpoints. Investors are watching for confirmations, timelines, and the extent to which such deals impact capital spending, gross margins and competitive positioning among Alphabet, Meta and Apple as AI capabilities scale into mainstream products and services.

How AI Upscales Engineers

YouTube • 20VC with Harry Stebbings • August 20, 2025

AI•Work•SoftwareEngineering•DeveloperTools•Productivity

AI Mode in Search gets new agentic features and expands globally

Blog • Robby Stein • August 21, 2025

AI•Tech•GoogleSearch

We’re adding more agentic capabilities and personalized responses to AI Mode in Search so Google can act on your behalf and help you get things done — and rolling it out to more people worldwide.

AI is making Google Search more helpful so you can ask any question and get things done. Starting today, AI Mode gains more advanced agentic and personalized capabilities to help you make progress on tasks and receive information tailored to your interests. We’re also bringing AI Mode to even more people around the world.

Get things done with agentic capabilities in AI Mode. We’re beginning with restaurant reservations and will expand to local service appointments and event tickets.

You can ask for a dinner reservation that fits multiple constraints — party size, date, time, location and preferred cuisine — and AI Mode will search across reservation platforms for real-time availability that matches your needs, then present a curated list of options with available slots. It does the legwork and links you directly to the booking page to finalize your reservation.

Under the hood, AI Mode uses Project Mariner’s live web browsing, direct partner integrations in Search, and the power of the Knowledge Graph and Google Maps. We’re working with partners like OpenTable, Resy, Tock, Ticketmaster, StubHub, SeatGeek, Booksy and more. This new experience is rolling out for Google AI Ultra subscribers in the U.S. through the “Agentic capabilities in AI Mode” experiment in Labs.

People in the U.S. who opt into the AI Mode experiment in Labs will also see results tailored to their preferences, starting with dining-related topics, with controls to adjust personalization at any time. AI Mode is expanding to over 180 new countries and territories in English.

OpenAI Crosses $12 Billion ARR: The 3-Year Sprint That Redefined What’s Possible in Scaling Software

SaaStr • August 20, 2025

AI•Funding•OpenAI

OpenAI just logged its first $1 billion revenue month in July 2025, doubling from roughly $500 million at the start of the year in only seven months. We’ve never seen anything like it—and it’s still accelerating (Anthropic is sprinting too, at a $5B ARR pace).

How OpenAI’s Revenue Sprint Compares to Tech Giants

Time to ~$12B ARR:

OpenAI (2022–2025): ~3 years, from ChatGPT’s 2022 launch to $12B ARR by July 2025, unprecedented in software.

Google/Alphabet: ~8 years (founded 1998; IPO 2004; $10.6B revenue in 2006 via search ads).

Meta/Facebook: ~8 years (founded 2004; $5B revenue in 2012; $12B+ by 2014 via social advertising).

Key Differentiators: infrastructure‑first scaling that demands massive compute; a multi‑modal revenue mix across consumer subscriptions, enterprise, and API; rapid enterprise adoption (three million paying business users); and an AI‑native architecture where every dollar comes from foundation models.

Revenue Acceleration Beyond Expectations: OpenAI has reached $12B ARR in 2025, doubling revenue in the year’s first seven months. Perspective: 2022, $28M; 2023, $2B; 2024, $3.7B; 2025 now tracking $15–$20B based on July’s run‑rate, well ahead of the earlier $12.7B projection.
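The run-rate arithmetic behind these figures can be sketched in a few lines. This is a minimal illustration, assuming ARR here means the latest month's revenue multiplied by 12 and using only the rounded figures quoted above; the function and constant names are hypothetical and do not come from the article.

```python
def annualized_run_rate(monthly_revenue_usd: float) -> float:
    """Project a full year from a single month's revenue (assumed ARR shorthand)."""
    return monthly_revenue_usd * 12


def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


# Rounded monthly revenue figures quoted in the article, in USD.
JAN_2025_MONTHLY = 500e6  # "roughly $500 million at the start of the year"
JUL_2025_MONTHLY = 1e9    # "first $1 billion revenue month in July 2025"

arr_july = annualized_run_rate(JUL_2025_MONTHLY)
multiple = growth_multiple(JAN_2025_MONTHLY, JUL_2025_MONTHLY)

print(f"July run-rate: ${arr_july / 1e9:.0f}B ARR")
print(f"Growth since January: {multiple:.1f}x")
```

A run-rate is a projection from one month, not booked revenue, which is why the full-year estimate is quoted as a range rather than a single number.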

Google’s AI Mode expands globally, adds new agentic features

TechCrunch • August 21, 2025

AI•Tech•Search

Google is launching a global expansion of AI Mode, its feature that allows users to ask complex questions and follow-ups to dig deeper on a topic directly within Search, the company announced on Thursday. The tech giant is also bringing new agentic and personalized capabilities to the feature.

As part of the expansion, Google is bringing AI Mode to 180 new countries in English. Up until now, it’s only been available to users in the U.S., U.K., and India. Google plans to bring the feature to more languages and regions soon.

In terms of the new agentic features, users can now use AI Mode to find restaurant reservations, and in the future, they’ll be able to find local service appointments and event tickets. Users can request dinner reservations based on multiple preferences, such as party size, date, time, location, and preferred cuisine. AI Mode will then search across different reservation platforms to find real-time availability for restaurants that match the inquiry. It then surfaces a curated list of options to choose from.

This new capability is rolling out for Google AI Ultra subscribers in the U.S. through the “Agentic capabilities in AI Mode” experiment in Labs, Google’s experimental arm. (Ultra is Google’s highest-end plan, at $249.99 per month.)

Google says that U.S. users in the AI Mode experiment will also now see search results tailored to their individual preferences and interests. The tech giant is starting with dining-related topics for this capability.

For example, if someone searches, “I only have an hour, need a quick lunch spot, any suggestions?” AI Mode will use their past conversations, along with places they’ve searched for or clicked on in Search and Maps, to offer more relevant suggestions. So, if AI Mode infers that you like Italian food and places with outdoor seating, you’ll get results suggesting options with these preferences.

Google notes that users can adjust their personalization settings in their Google Account.

In addition, AI Mode now lets users share and collaborate with others. A new “Share” button lets users send an AI Mode response to others, allowing them to jump into the conversation. Google says this could be helpful in cases where you need to collaborate with someone else, such as planning a trip or a birthday party.

Venture

Figma rival Canva valued at $42bn as IPO rumours swirl

FT • August 20, 2025

Venture

One of Australia’s most valuable technology companies kicks off employee share sale

Canva, the Australian design software developer that competes with Adobe and Figma, has launched a share sale programme for its staff that it said valued the company at $42bn.

The Sydney-based company said on Wednesday that demand came from existing shareholders and new investors including JPMorgan Asset Management. Founded in 2013, Canva develops web-based design software that is widely used in schools and large companies to prepare presentations. It is one of Australia’s most valuable technology companies, alongside enterprise software developer Atlassian, and is backed by the country’s main venture capital funds including Blackbird, Square Peg and Airtree.

Its latest share sale boosts its valuation from $32bn last October and comes after its rival Figma listed in the US last month, spurring rumours that Canva may be plotting an initial public offering soon.

The company said in June it had 240mn active users a month and annualised revenue — a metric used by start-ups to project full-year revenue based on a recent month’s sales — of $3.3bn.

Figma made $749mn in revenue last year and had 13mn active users a month in the first quarter of this year, according to its IPO filing. The company priced its shares at $33, and the stock now trades at $69.41, valuing it at $34bn.

The Australian company has not commented on its listing plans but has long been expected to float on the Nasdaq, following in the footsteps of Atlassian.

As Funding To AI Startups Increases And Concentrates, Which Investors Have Led?

Crunchbase • August 20, 2025

Venture

As funding to AI companies has grown this year and concentrated into fewer companies, Crunchbase data shows that a mix of private equity and alternative investors, Big Tech companies and venture capital firms have led the way. AI-related companies have raised $118 billion as of Aug. 15, up from $108 billion for all of 2024. Funding to the sector has more than doubled in the same timeframe.

Proportions are also up: 48% of global venture funding year to date has been invested in AI-related companies, up from a third in 2024.

Capital concentrates

A select few companies raised a greater proportion of funding in this sector.

Of that $118 billion, eight companies have collectively raised $73 billion via billion-dollar rounds, representing 62% of funding to AI-related companies. That includes a $40 billion raise by OpenAI. Contrast that with 2024 when 13 AI-related companies raised $47 billion, representing 44% of AI funding that year.

Six of these companies raised billion-dollar rounds in both 2024 and 2025. They include, in order of total funding since 2024, OpenAI, xAI, Scale AI, Anthropic, Anduril Industries and Safe Superintelligence. Meanwhile, billion-dollar rounds accounted for 4% of funding to non-AI companies in 2025 and 5% in 2024.
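The concentration percentages above follow directly from the quoted totals. The sketch below recomputes them, assuming the rounded dollar figures in this summary are the only inputs; the names `share_pct` and `FUNDING` are illustrative and not part of the Crunchbase analysis.

```python
def share_pct(part_bn: float, total_bn: float) -> float:
    """Percentage of `total_bn` represented by `part_bn`."""
    return 100 * part_bn / total_bn


# (funding raised via billion-dollar rounds, total AI funding), in $bn,
# taken from the rounded figures quoted in the text above.
FUNDING = {
    "2025 YTD": (73, 118),
    "2024": (47, 108),
}

shares = {
    period: share_pct(part, total) for period, (part, total) in FUNDING.items()
}

for period, pct in shares.items():
    print(f"{period}: {pct:.1f}% of AI funding came from billion-dollar rounds")
```

Rounded to whole numbers, the results match the 62% and 44% cited in the article.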

Leading the biggest commitments

Investors include a varied mix.

SoftBank leads by a wide margin, thanks to the $40 billion round it led in OpenAI at a $300 billion valuation. Greenoaks led the $2 billion funding to Safe Superintelligence, and Thrive Capital led both a $900 million funding round in Anysphere and $600 million in Isomorphic Labs.

On the corporate side, Meta, SpaceX and Google have committed the most so far this year. Meta invested $14.3 billion in Scale AI at a valuation of $29 billion, a deal that included Scale AI founder Alexandr Wang joining Meta. SpaceX led the $5 billion funding in xAI. Google invested in AI labs, including $1 billion in Anthropic and $300 million in AI21 Labs.

OpenAI Staffers to Sell $6 Billion in Stock to SoftBank, Other Investors

Bloomberg • August 15, 2025

Venture

SoftBank, Dragoneer and Thrive Capital are set to buy OpenAI shares from current and former employees at a roughly $500 billion valuation, according to people familiar with the matter. The deal would take the form of a secondary sale, giving staff and alumni a chance to cash out some holdings without issuing new shares.

The talks are in early stages and key details could still change, the people said. The investor group consists of existing OpenAI backers, and the transaction is aimed at expanding employee liquidity amid intense competition for artificial intelligence talent.

The prospective secondary follows a separate, SoftBank-led primary financing that valued OpenAI around $300 billion earlier this year, indicating robust demand from investors. Pricing the employee sale at a premium highlights expectations for continued growth as generative AI adoption accelerates.

If completed, the transaction would add to the billions in employee share sales that have occurred over the past year, while keeping OpenAI’s overall capitalization and governance unchanged. The company isn’t expected to receive proceeds from the secondary, which instead would go to sellers of the stock.

The employee-focused tender offer underscores how late-stage startups are balancing capital needs with retention and recruitment pressures, particularly in AI. It also signals that large crossover and growth investors remain eager to increase exposure to OpenAI outside of primary rounds, even at higher implied valuations.

On the 5 archetypes of top YC founders

Medium • Jared Heyman • August 21, 2025

Venture

Rebel Fund has proudly invested in 500+ talented YC founders over the past several years across hundreds of startups. Along the way, we’ve built the world’s most comprehensive database of YC startups and outcomes, now tracking millions of data points across every YC company and founder in history, in large part to train our proprietary Rebel Theorem 4.0 ML/AI algorithm to accurately predict YC startup success.

Our algorithm weighs hundreds of YC company and founder characteristics or ‘features’ across 100+ decision trees to ultimately assign a probability of success or failure to every new YC startup. It’s grown so complex that even our data and engineering team can’t fully explain its inner workings.

So, to demystify our model a bit, we simplified¹ it into ~30 key features related to YC founders that give the model ~80% of its predictive power. We then performed a cluster analysis² on these features to divide founders into 5 archetypes that are both human-understandable and predictive of startup outcomes. This blog post will reveal our top YC founder archetypes and share which ones are most likely to build $1B+ companies.
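To make the clustering step concrete, here is a toy sketch of grouping founders by a couple of numeric features. It assumes plain k-means, which the post does not confirm (it says only "cluster analysis"), and it uses two made-up features and six made-up founders rather than the ~30 features and 5 archetypes described. All names and values are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=10):
    """Plain k-means; returns (centroids, labels).

    Centroids start at the first k points to keep this toy deterministic.
    Real implementations typically use random restarts or k-means++.
    """
    centroids = list(points[:k])
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(
                    sum(dim) / len(members) for dim in zip(*members)
                )
    return centroids, labels


# Hypothetical founders: (years of work experience, number of prior job roles).
founders = [(6, 1), (10, 5), (5, 2), (11, 6), (7, 1), (12, 5)]

centroids, labels = kmeans(founders, k=2)
print(labels)
print(centroids)
```

On this toy data the shorter and longer résumés land in separate clusters. With real features on different scales, the inputs would normally be standardized first so that no single feature dominates the distance.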

Archetype #1 — “Charismatic Hustlers”

Successful examples:

Kaarel Kotkas @ Veriff (valuation ~$1.5B)

Jake Loosararian @ Gecko Robotics (valuation ~$1.6B)

One-liner:

Charismatic, quick-moving sellers with minimal prior experience and weak technical/industry fit.

Key characteristics:

These founders win attention and momentum through charisma, speed, persistence, and “hustling” into opportunities. They have shorter resumes than their YC peers, with just ~6 years work experience across few job roles, and rarely at top Silicon Valley employers. Academic backgrounds are less impressive than their peers, and their founder-product fit scores are lower. They’re also less technical and more likely to be female than other archetypes.

They compensate for their less-than-stellar pedigrees with an outgoing personality and leadership style, showing very high levels of Dominance, Expressiveness, Social, and Pace traits, and the lowest levels of Risk Aversion, Skepticism, Leniency, and Pragmatism among all archetypes. This founder is fast, bold, inspiring, and often light on analytics.

Rebel Theorem outlook:

Our “Charismatic Hustlers” are less likely to build successful companies than most other archetypes, but are also less likely to stall out or fail. They can keep their startup alive for years through sheer force of personality, but their companies don’t often become unicorns.

Their natural charisma makes them excellent at startup pitches and raising capital, but investors should be cautious with them.

Archetype #2 — “Bay-Area Value Builders”

Successful examples:

Parker Conrad @ Rippling (valuation ~$19B)

Laks Srini @ ZeroDown (valuation ~$190M)

(Both my YC batchmates while @ Zenefits. Go W13!)

One-liner:

Experienced and well‑connected, with credible signals — the group most likely to produce breakout successes.

Key characteristics:

Their experience, networks, and target markets skew toward the Bay Area, and their track records point to building meaningful company value. They have above‑average work experience (~10 years) prior to founding, more than 5 years of prior experience as co-founders, and more prior job roles and employers than average. They often achieved strong prior startup outcomes as well, having managed larger teams, reached higher prior startup valuations, and raised more funding as co-founders than their peers.

They also have a strong Bay Area and North America footprint, with the longest tenure working in San Francisco. They’re most likely building a B2B company that sells to other startups. These founders’ personality skews moderately assertive and action-oriented, exhibiting slightly lower Leniency than their peers, and variability around Skepticism and Sociability. They are the classic startup founder profile that many VCs gravitate towards, and for good reason….

A Narrative Violation on a Friday Morning

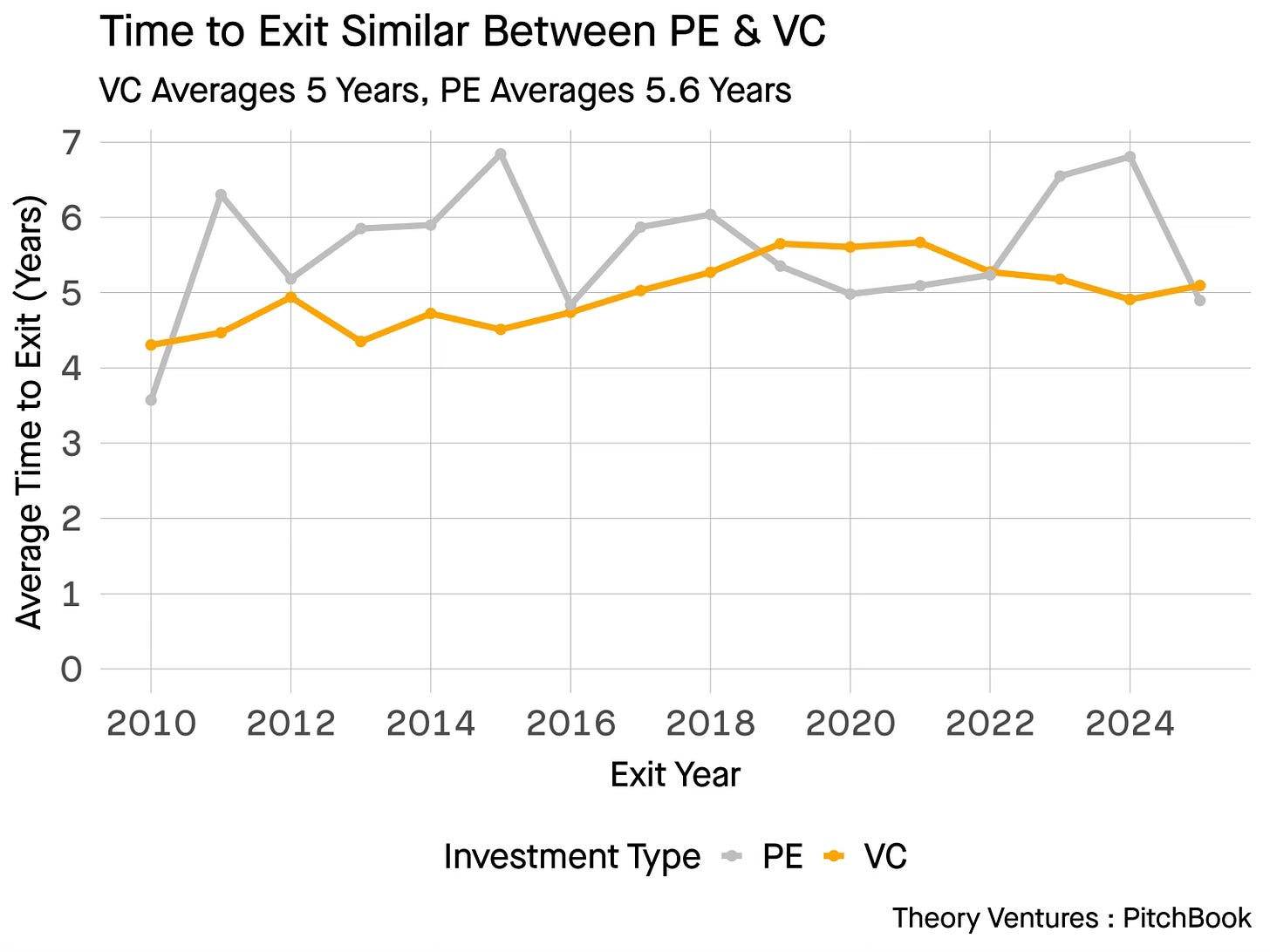

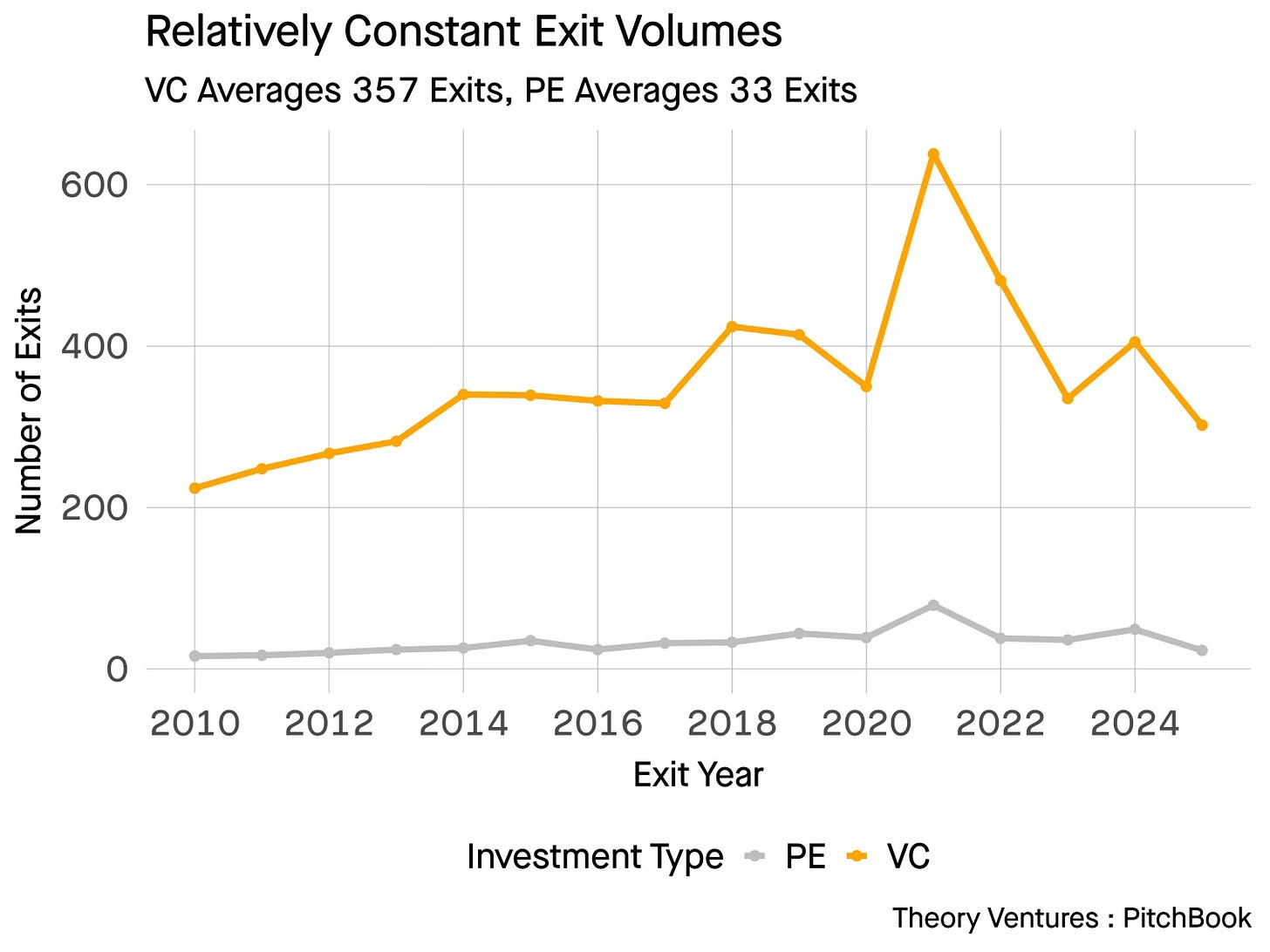

Tom Tunguz • August 21, 2025

Venture

I set out to write a very different post: one with the thesis that venture capital holding periods were lengthening relative to private equity.

But the data violates the narrative!

Conor Quigley at PitchBook ran an analysis on Theory’s behalf to answer the question: How many years from the first funding round to exit on average between PE & VC?

The answer is it’s about the same: 5 years.

There’s no doubt that the exit markets have been extremely quiet until the beginning of the summer of this year. But there is no yawning gap in holding periods between private equity & venture capital.

There is survivorship bias in the data. We are only measuring companies that have exited, not the backlog or the average age of the backlog.

However, looking at the exit counts, we see relatively consistent volumes year over year. This suggests that the data should not be hugely skewed.

Venture capital generates roughly 10 times more exits annually than PE in the software sector. VC exits peaked at 638 companies in 2021, while PE reached just 79 exits that same year. Aside from a big spike in 2021 for both asset classes, the deal volumes have been relatively consistent.

The secondary market in venture capital should continue to grow, but not because VC holding periods are longer than PE. Instead, it should grow because VC generates 10 times more exits annually, creating more liquidity opportunities.

Scale, not latency, drives secondary market potential.

China

Ranking the Chinese Open Model Builders

Interconnects • Nathan Lambert • August 17, 2025

China•Technology•OpenModels

The Chinese AI ecosystem has taken the AI world by storm this summer with an unrelenting pace of stellar open model releases. The flagship releases that got the most Western media coverage are the likes of Qwen 3, Kimi K2, or Zhipu GLM 4.5, but there is a long tail of providers close behind in both quality and cadence of releases.

In this post we rank the top 19 Chinese labs by the quality and quantity of contributions to the open AI ecosystem (this is not a list of raw ability, but outputs), all the way from the top of DeepSeek to the emerging open research labs. For more detailed coverage of all the specific models, we recommend studying our Artifacts Log series, which chronicles all of the major open model releases every month. We plan to revisit this ranking and make note of major new players, so make sure to subscribe.

At the frontier

These companies rival Western counterparts with the quality and frequency of their models.

DeepSeek

DeepSeek needs little introduction. Their V3 and R1 models, and their impact, are still likely the biggest AI stories of 2025 — open, Chinese models at the frontier of performance with permissive licenses and the exposed model chains of thought that enamored users around the world.

With all the attention following the breakthrough releases, a bit more has been said about DeepSeek in terms of operations, ideology, and business model relative to the other labs. They are very innovative technically and have not devoted extensive resources to their consumer chatbot or API hosting (as judged by higher than industry-standard performance degradation).

Over the last 18 months, DeepSeek was known for making “about one major release a month.” Since the updated releases of V3-0324 and R1-0528, many close observers have been surprised by their lack of contributions. This has let other players in the ecosystem close the gap, but in terms of impact and actual commercial usage, DeepSeek is still king.

An important aspect of DeepSeek’s strategy is their focus on improving their core models at the frontier of performance. To complement this, they have experiments using their current generation to make fundamental research innovations, such as theorem proving or math models, which ultimately get used for the next iteration of models. This is similar to how Western labs operate. First, you test a new idea as an experiment internally, then you fold it into the “main product” that most of your users see.

DeepSeekMath, for example, used DeepSeek-Coder-Base-v1.5 7B and introduced the now famous reinforcement learning algorithm Group Relative Policy Optimization (GRPO), which is one of the main drivers of R1. The exception to this (at least today) is Janus, their omni-modal series, which has not been used in their main line.
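For readers unfamiliar with GRPO, its central idea is to score each sampled answer relative to the other answers drawn for the same prompt, instead of relying on a separately learned value model. The sketch below shows only that group-relative normalization step, not the full clipped objective or KL penalty. The rewards are invented, and the use of population standard deviation plus a small epsilon is an assumption made for this illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the group sampled for the same prompt.

    advantage_i = (r_i - mean(group)) / (std(group) + eps)
    `eps` avoids division by zero when every sample gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption for this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt (1.0 = correct).
rewards = [1.0, 0.0, 0.0, 1.0]

advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Correct answers end up with positive advantages and incorrect ones with negative advantages, so the policy update favors answers that beat their own group's average.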

Interview of the Week

Back to the Digital Future: Why the Future of AI Healthcare Might be a Return to the Gig Economy

Keenon • August 19, 2025

AI•Work•Healthcare•Gig Economy•Chronic Disease•Interview of the Week

Might the supposedly revolutionary future of AI healthcare actually be a return to the gig economics of Uber and Airbnb?

That’s the intriguing proposition put forward by former Kaiser Permanente Chief and Stanford Medical School professor Robert Pearl, a prescient observer of the future of his industry.

According to Pearl, we may be returning to the digital future: freelance doctors, he predicts, will train people to use existing AI tools (ChatGPT, Claude, etc.) for managing chronic conditions, essentially "Uberizing" medical AI guidance.

The real question, of course, is whether this will cheer up both doctors and patients. Pearl isn’t sure about either.

But one thing he is certain about is that MAGA government isn’t the answer to fixing America’s healthcare future. Having been cautiously optimistic about RFK Jr six months ago, he now gives the US Secretary of Health and Human Services an “F” for his first six months in office. Maybe we should Uberize RFK Jr. It certainly couldn’t make things worse.

1. Two Competing AI Healthcare Models

Pearl identifies two paths: expensive, FDA-regulated products from tech companies versus affordable, clinician-led training programs that teach patients to use existing AI tools like ChatGPT for chronic disease management—with the second potentially avoiding regulation entirely.

2. AI Could Prevent 30-50% of Medical Deaths

By better managing chronic diseases like hypertension and diabetes (which account for 70% of doctor visits and costs), AI could save $1.5 trillion and prevent massive numbers of deaths from heart disease, cancer, kidney failure, and strokes.

3. The "Uberization" of Medical Care

With 40% of doctors already doing gig work, Pearl envisions freelance physicians training patients to use AI tools for continuous health monitoring—replacing the current system of infrequent office visits with real-time, at-home care management.

4. Insurance Companies Will Welcome AI, Hospitals Will Resist

Insurers will benefit from lower costs and reduced need for prior authorizations, while hospitals and drug companies will see fewer patients and medication sales—making them the primary opponents of AI healthcare adoption.

5. Medical Education Faces Major Disruption

Elite institutions like Stanford will focus on complex procedures (heart transplants, major cancers), while routine medical knowledge becomes commodified. Mid-level healthcare jobs will disappear, similar to what's happening in computer programming.

Startup of the Week

Scaling the 'Cursor for Slides' to $50M ARR - Gamma founder Jon Noronha

YouTube • Sequoia Capital • August 19, 2025

AI•Tech•Gamma•ARR•PresentationAI•Startup of the Week

Before ChatGPT went mainstream, Jon Noronha was building Gamma around a simple insight: most people dislike making slides, yet high‑stakes ideas still demand strong visual communication. Drawing on his Optimizely background, Gamma became an experiment lab for AI models, running extensive tests that revealed complementary strengths—models like Claude excelled at creative “taste,” Gemini offered strong cost efficiency—while heavy “reasoning” models could dampen creativity. By solving their own blank‑page problem, the team discovered they’d solved it for millions of users too: people could leap from a vague idea to a workable draft and spend their time editing rather than starting from scratch.

Founded in 2020, Gamma set out to reinvent the medium rather than clone PowerPoint. The product is block‑first and writing‑forward—“if Notion and Canva had a baby”—with rich themes, diagrams and layouts that AI assembles for you. Quality comes from human taste plus data: designers define what “great” looks like, then Gamma’s systems test layouts, harmonize image palettes and try many design options in seconds. That combination helped the company become cash‑flow positive with tens of millions of users and roughly $50M in ARR, even as incumbents with hundreds of millions of users remain the chief competition.

Noronha’s advice for builders centers on application‑layer advantage: orchestrate models, run relentless A/B tests, and differentiate the medium. Gamma is expanding into adjacent uses like lightweight websites and an API that auto‑generates on‑brand decks from CRM data. The roadmap pushes toward agentic editing, where you can ask the AI to expand sections, redo visuals or transform a five‑slide pitch into twenty—advancing visual storytelling beyond traditional slides.

Here is a set of slides I made using Gamma: https://gamma.app/docs/SignalRank-Technology-Platform-Transforming-Venture-Capital-Throu-djhopa18lj5jml4

Post of the Week

Why Generalists Win in the Age of AI

YouTube • 20VC with Harry Stebbings • August 20, 2025

AI•Work•Generalists•CareerDevelopment•Productivity

A reminder for new readers. Each week, That Was The Week includes a collection of selected essays on critical issues in tech, startups, and venture capital.

I choose the articles based on their interest to me. The selections often include viewpoints I can't entirely agree with. I include them if they make me think or add to my knowledge. Click on the headline, the contents section link, or the ‘Read More’ link at the bottom of each piece to go to the original.

I express my point of view in the editorial and the weekly video.