Contents

AI Native Software and Hardware

Essays

Venture Capital

AI

The Next Trillion Dollar Marketplace Will Put SKUs on Services

OpenAI to Open-Source Some of the A.I. Systems Behind ChatGPT

ElevenLabs launches an AI music generator, which it claims is cleared for commercial use

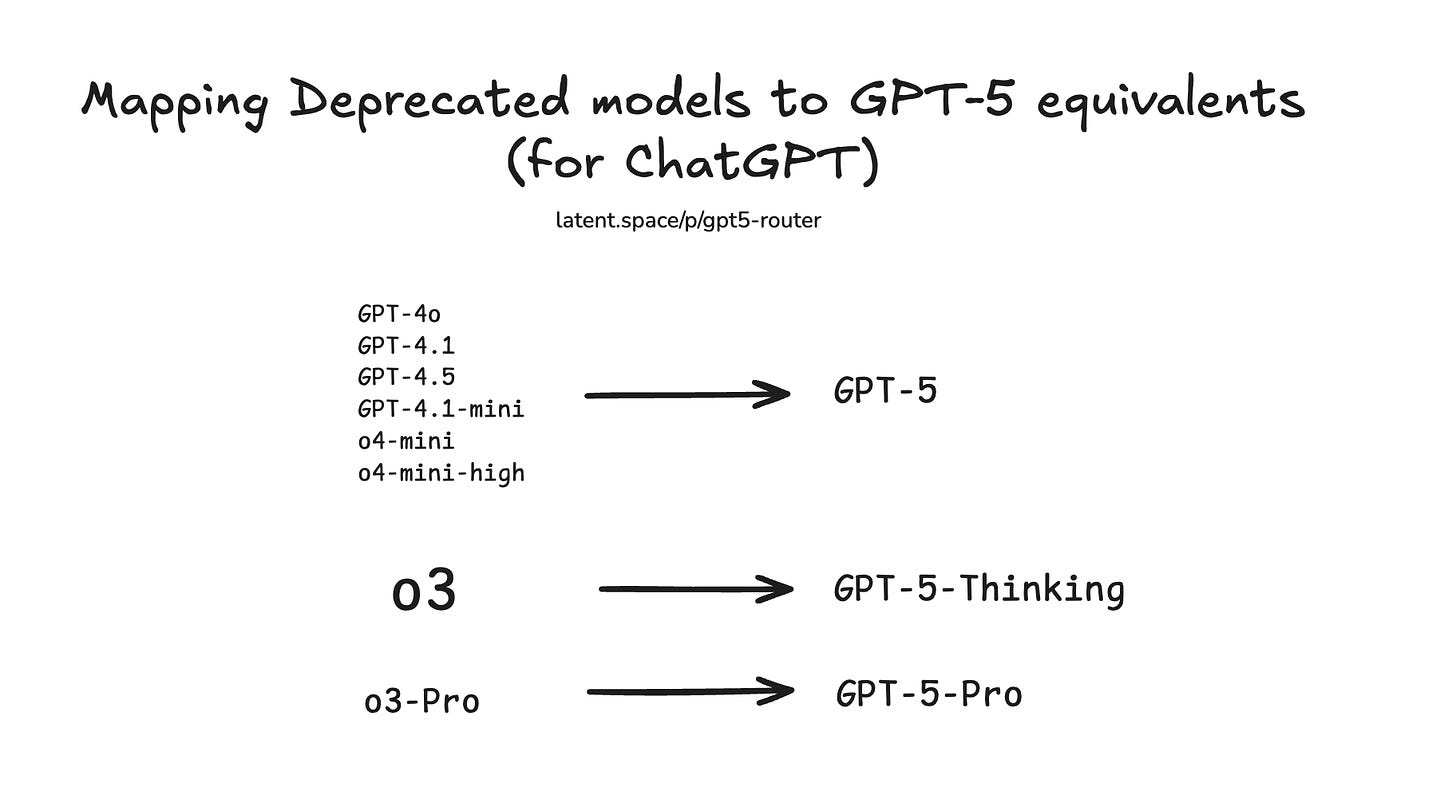

GPT-5's Router: how it works and why Frontier Labs are now targeting the Pareto Frontier

OpenAI in talks for share sale valuing ChatGPT maker at $500bn

Box CEO on OpenAI's GPT-5 launch, AI use in the workplace and the future of the tech

The Cloud Wars Update: Who’s Winning the AI-Driven Growth Battle

AI and Publishers

AI and Jobs

Substack

Geopolitics

Defense Tech

China Tech

Stablecoins

Interview of the Week

Editorial

AI Native is Here

This Week AI Broke Our Software—and Hardware—Assumptions

This advancement establishes a new dimension beyond raw knowledge, allowing AI to move from advice-giving to direct action-taking within complex workflows.

Thus spoke Tomasz Tunguz in his essay “From Knowledge to Action.” The same theme is echoed in “GPT-5 Hands-On: Welcome to the Stone Age” on Latent Space:

The Stone Age marked the dawn of human intelligence, but what exactly made it so significant? What marked the beginning? Did humans win a critical chess battle? Perhaps we proved a very fundamental theorem, that made our intelligence clear to an otherwise quiet universe? Recited more digits of pi?

No. The beginning of the stone age is clearly demarcated by one thing, and one thing only: humans learned how to use tools.

What if the defining assumption of modern tech — that software is something humans write, and other humans use, and hardware is something we buy and use—just became wrong?

This week’s stories say exactly that. OpenAI’s GPT‑5 “just does stuff,” routes work to tools, and slashes latency and energy via a new router; Anthropic keeps shipping pragmatic upgrades that turn models into working colleagues. The net: software is becoming an actor, not an app, and hardware is going to have that embedded. Imagine a child talking to a toy and getting answers back; I can already do that in my Tesla Model 3 using Grok. Or imagine telling the lawn mower to go mow the lawn, and it not only does so but reports back to you.

1) As Tomasz Tunguz states: software has moved from knowledge to action, and budgets will follow

GPT‑5 is not a smarter autocomplete; it’s a workflow engine. Its router policy picks specialized modules, calls tools, and self‑verifies work, yielding ~4x lower latency and half the energy per token. Pair that with hands‑on reports of GPT‑5 proactively spinning up entire apps and collateral (“it just does stuff”), and Tom Tunguz’s framing is here: from advice to execution.
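To make the routing idea concrete, here is a minimal sketch in Python. It is not OpenAI's router, whose internals are not described in these stories; the names (`Route`, `pick_route`, `total_cost`), the keyword heuristic, and the cost figures are all invented for illustration. It only shows the general shape: a cheap policy looks at each request and sends it to a fast path, a heavier reasoning path, or a tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    relative_cost: float  # hypothetical cost units, not measured figures


# Hypothetical routes; a production router would learn its policy
# rather than hard-code it like this.
FAST = Route("fast-model", 1.0)
DEEP = Route("reasoning-model", 8.0)
TOOL = Route("calculator-tool", 0.1)


def pick_route(prompt: str) -> Route:
    """Toy routing policy based on surface features of the prompt."""
    text = prompt.lower()
    # Arithmetic-looking requests go to a tool instead of a model.
    if any(ch.isdigit() for ch in text) and any(op in text for op in "+-*/"):
        return TOOL
    # Long or explicitly multi-step requests get the expensive path.
    if len(text.split()) > 40 or "step by step" in text or "prove" in text:
        return DEEP
    return FAST


def total_cost(prompts: list[str]) -> float:
    """Sum the relative cost of the routes chosen for a batch of prompts."""
    return sum(pick_route(p).relative_cost for p in prompts)
```

The point of the sketch is the economics: if most requests can take the cheap path, the average cost per request falls even though the expensive path is still there when needed.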

Anthropic’s tack is quieter but consequential. Claude Opus 4.1 isn’t splashy; it’s better at code refactors, reasoning, and “agentic” tasks—exactly where enterprises live. As Dario Amodei told John Collison (via Om Malik), code is the early indicator of what’s coming everywhere else.

The result hits pricing and procurement first. Jason Lemkin put it bluntly:

“Every developer… is going to get $10,000 a month of AI credits… Shopify is already there for some of its top developers.”

McKinsey’s 12,000 agents and Box’s “enhance, don’t replace” posture show where Fortune 500s are headed. Meanwhile, the ground truth: non‑coders are building internal tools in hours, ditching pricey SaaS (Every’s $50k‑in‑three‑hours story) as “vibe analysis” lets teams talk to their data and compress cycles 2x to 100x.

2) Hardware is the constraint—and it’s reorganizing the stack

Azure captured ~43% of net new cloud run‑rate, but all three hyperscalers say demand exceeds capacity. Power and chips—not sales—are the gating factor. GPT‑5’s router is thus not just clever; it’s a power policy.

The hardware response is national and local. Apple’s additional $100B U.S. investment includes a Houston facility to build AI servers—a reshoring bet that compute sovereignty becomes strategy.

Edge is back. OpenAI’s open‑weight “gpt‑oss” models run on a single 80GB GPU—or even a laptop (20B on 16GB). If your agents can act locally with near‑o4‑mini capability, you don’t just save cost; you change privacy, latency, and vendor risk. ElevenLabs’ licensed music generator hints at the parallel content supply chain: on‑device generation backed by explicit rights.

3) How can we help AI and also enable revenue streams?

Cloudflare’s charge that Perplexity used stealth, undeclared crawlers to evade robots.txt (Perplexity disputes it) is more than drama; it’s the fault line for an AI‑era web. ChatGPT, Cloudflare notes, respected robots.txt. The market will punish those who stall AI and reward those who help it, but it will also reward AI for figuring out how to channel money to content producers. My 2c is that this needs more than training fees policed by robots.txt.
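For readers who have not looked at robots.txt up close, here is a small sketch of how a well-behaved crawler consults it, using Python's standard `urllib.robotparser`. The robots.txt content, the `ExampleAIBot` user agent, and the `allowed` helper are made up for illustration. Note what the check depends on: the crawler has to announce its real user agent and choose to honor the answer, which is why undeclared crawlers are the crux of the dispute.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks one named AI crawler entirely and
# keeps everyone else out of a private directory.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def allowed(user_agent: str, url: str) -> bool:
    """Return True if robots.txt permits this user agent to fetch the URL."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network call is needed here.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)
```

Nothing in this mechanism authenticates the caller or carries payment terms, which is one reason a paid, auditable access standard would need more than robots.txt.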

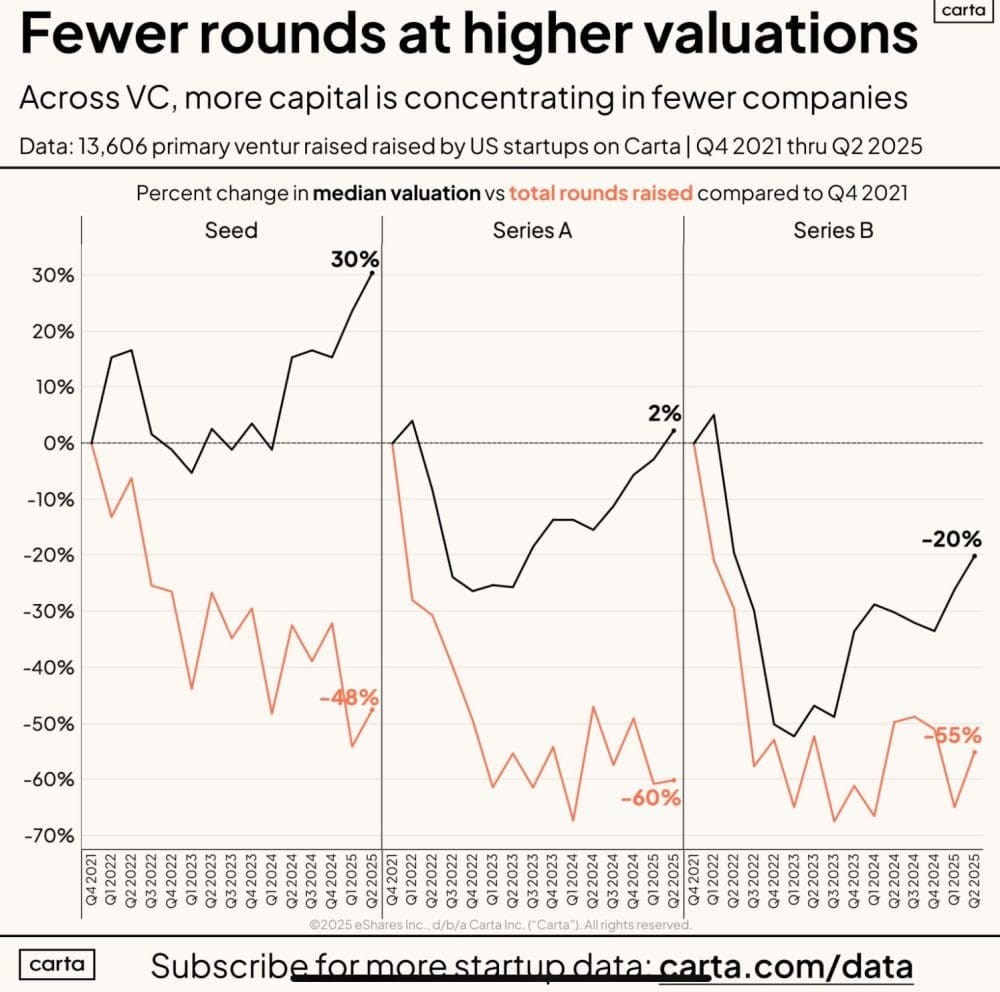

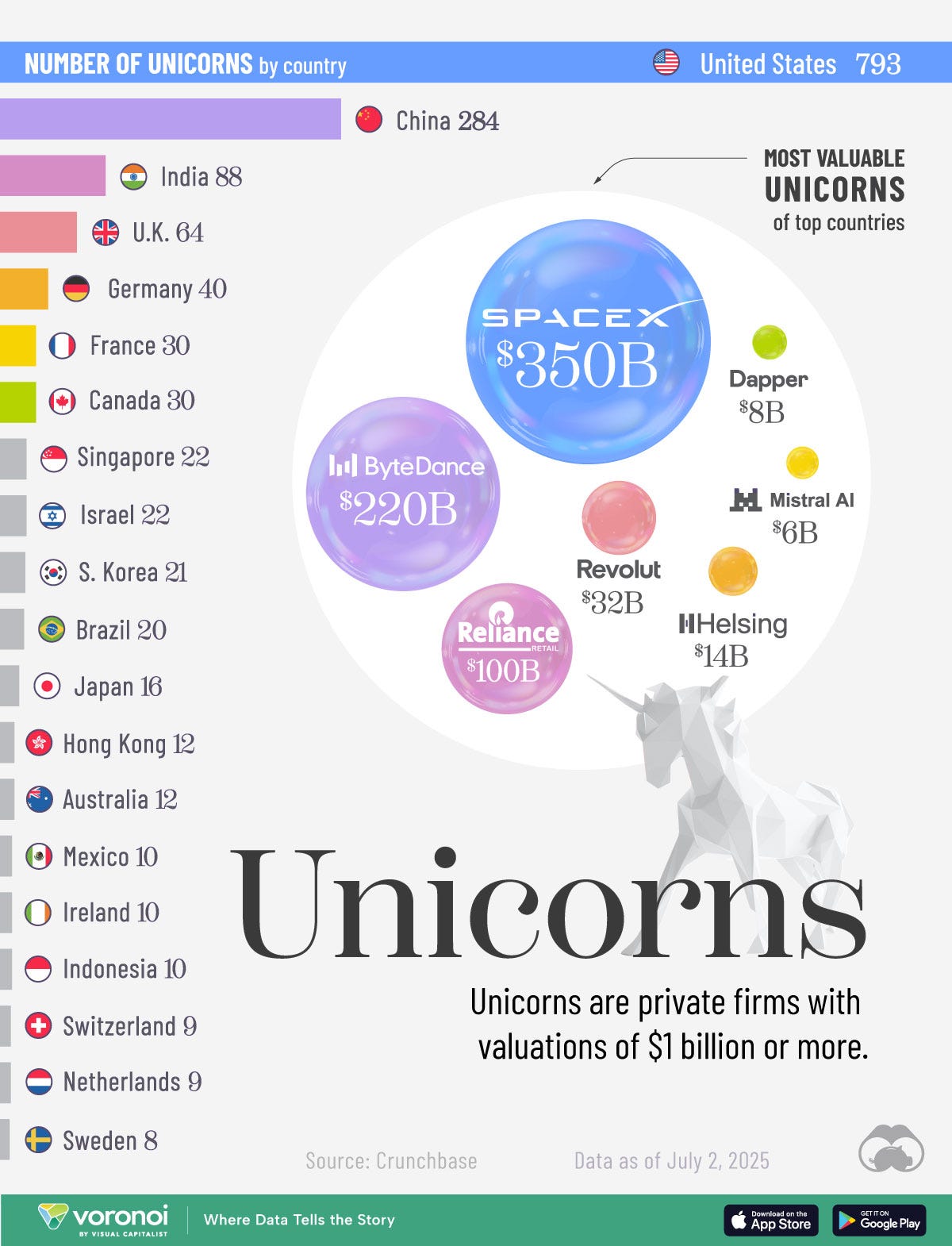

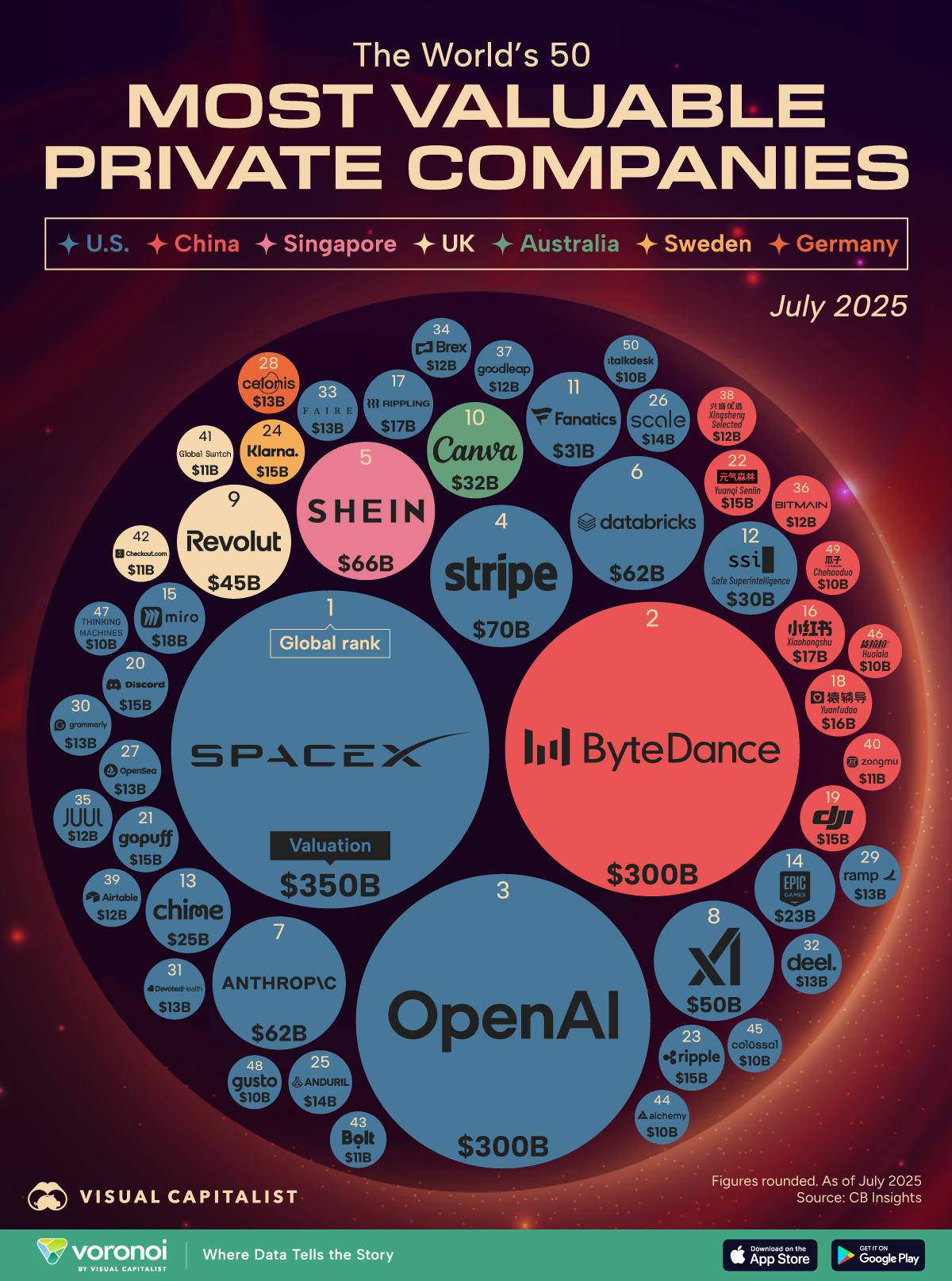

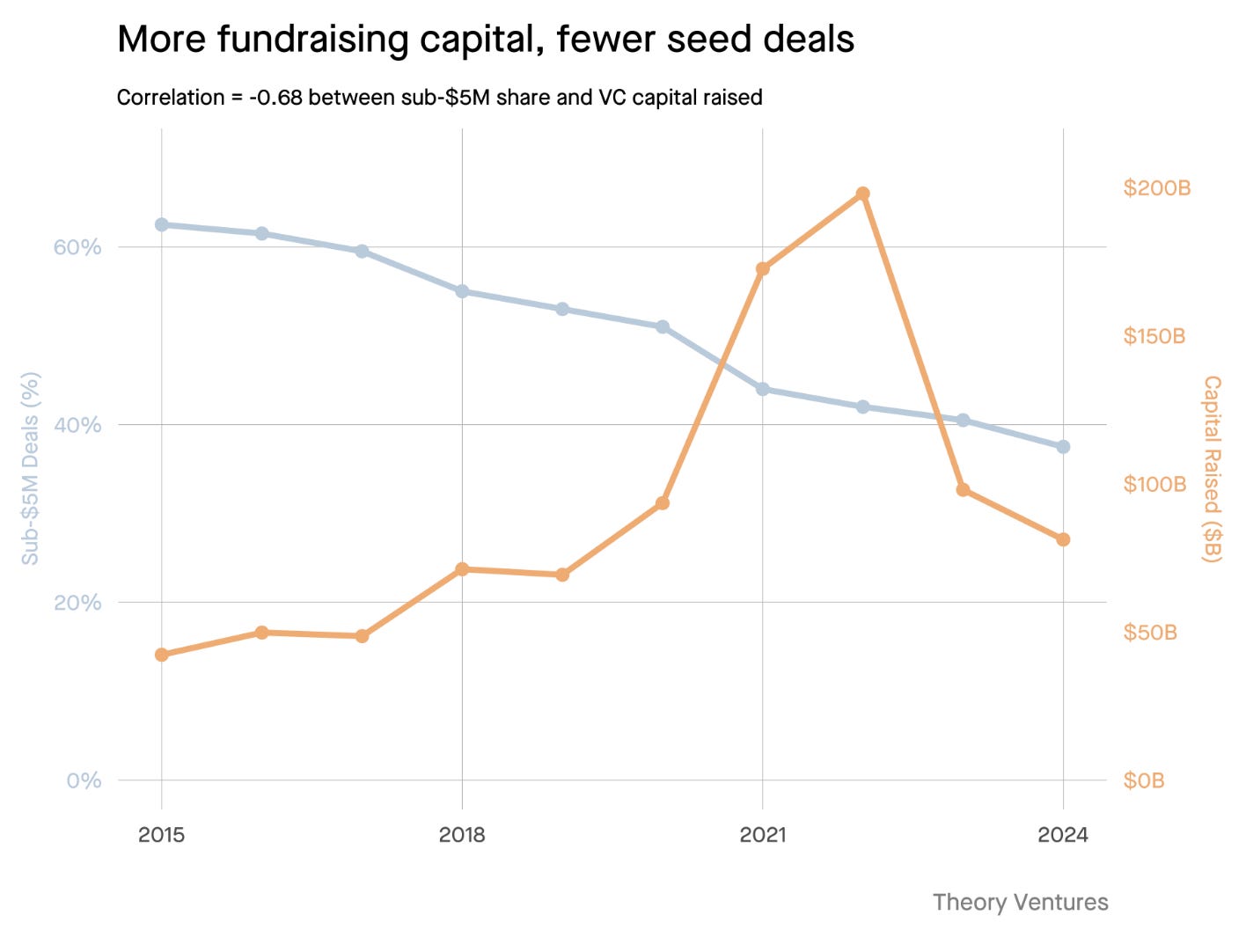

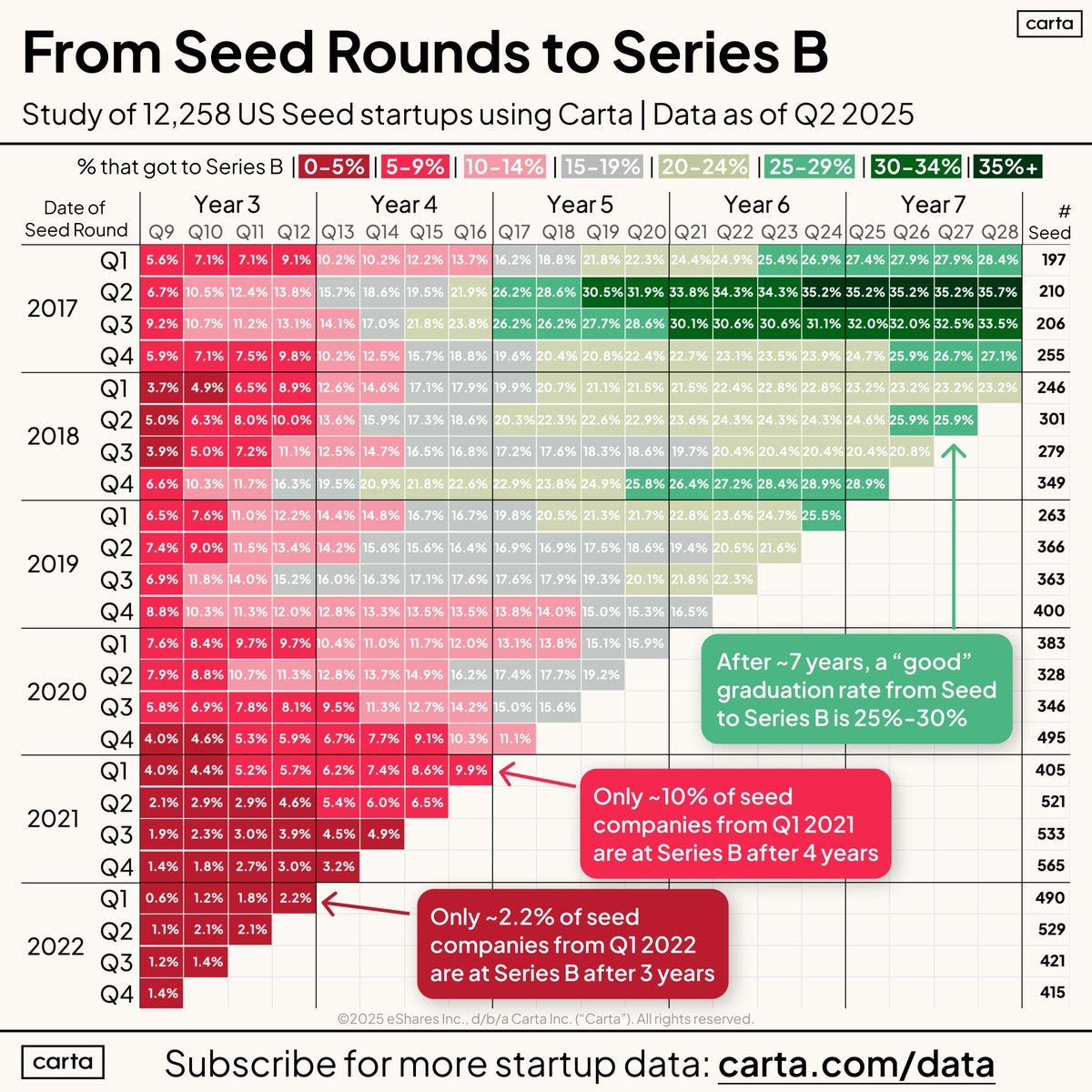

Capital is concentrating around those who can shoulder the capex. Carta shows valuations up 15–25% even as deals fall; Crunchbase highlights $70B flowing to just 11 companies. OpenAI’s prospective $500B secondary, 700M weekly users, and even my $10T hot‑take underscore the winner‑take‑most stakes.

Meanwhile, Meta’s “copy button” culture and Substack vs. Ghost reveal distribution power shifting from brands to platforms and—importantly—back to open infrastructure. China’s push for a global AI governance plan says the rules of this game won’t be written in one capital.

What others are missing: The software story isn’t “apps get AI.” That would simply plug AI use into existing user interfaces.

The real story is that agents plus tool‑calling invert enterprise design.

What to watch next

Will enterprises appoint “agent managers” and codify routing, spend caps, and human‑in‑the‑loop by function? (Compliance, claims, tax are already drawing legal lines.)

Do open‑weight models at the edge erode proprietary moats—or expand them via proprietary data and deep workflow integration?

Does the Cloudflare‑Perplexity fight catalyze a paid, auditable standard for AI access to the web—and who enforces it?

Can clouds expand power faster than AI demand? If not, expect more Apple‑style reshoring and product designs that privilege energy‑aware routing.

If last year was about demos, this week made it operational. Software does the work. Hardware rations the power. Our assumptions should update accordingly.

AI Native Software and Hardware

I Found 12 People Who Ditched Their Expensive Software for AI-built Tools

Every • August 4, 2025

Technology•AI•Software•Automation•NoCode•AI Native Software and Hardware

During Every's recently completed Think Week, the team addressed internal pain points by building tools with AI—often with no coding required. This approach, while not unique to Every, highlights a growing trend. Lewis Kallow found 12 examples of people saving six figures and launching products faster by prompting AI instead of hiring developers. These individuals built what they needed in hours, not months.

I recently heard a founder explain how he saved $50,000 in three hours. He didn’t achieve it by budget-cutting or layoffs but by prompting AI to build his own custom software tool, writing zero lines of code himself.

These stories show people creating powerful internal tools by prompting AI, replacing expensive software, automating workflows, and shipping products faster without writing code. These are internal tools for teams and organizations—the unglamorous software that actually runs businesses. AI has now made these accessible to anyone who can write prompts.

One standout story is Joshua Wöhle, a six-time founder and CEO of Mindstone, who nearly signed a $50,000 SaaS contract for a tool to connect community members but instead built the entire software himself in three hours using AI without manually writing or editing code. This saved him $50,000 with no compromise on quality.

Brian Christner replaced Kajabi, a costly online course platform, by building his own on Replit, cutting his costs to one-tenth and tailoring features exactly to his students’ needs.

Manny Bernabe built an enterprise-grade vendor portal that manages vendors and contracts—a tool that traditionally costs five figures and weeks to develop—mostly by directing AI to generate the code, saving substantial time and money.

Michael Luo, a product manager at Stripe, built a free Docusign alternative compliant with electronic signature laws in a weekend for less than $50, showcasing the potential for quick, affordable solutions.

Matt Palmer automated his tedious UTM tracking workflow in under an hour with zero manual coding, creating an app that manages perfectly formatted tracking parameters automatically.

Other stories include founders and teams building prototypes quickly, automating workflows that once took hours weekly, and transforming ideas into investor-backed startups using AI-powered no-code or low-code platforms. For example, Gustav Linder vibe-coded an AI-powered fashion website with e-commerce functionalities, which attracted investors and full-time attention.

Zinus, a mattress company, automated customer service quality assurance using AI at half the time and cost of traditional developer-built solutions, saving $140,000.

These case studies demonstrate that even those without technical backgrounds can build fully functioning applications by prompting AI, drastically reducing timeframes from months to hours or days, lowering costs, and gaining competitive advantages.

An internal tool doesn’t need to be complex or polished to be valuable. AI agents are proving effective by creating simple tools that save hours and reduce costs, enabling ideas to come to life faster than ever.

The State of AI-First Services Today

Medium • Florian Seemann • July 31, 2025

Technology•AI•AI Native Software and Hardware

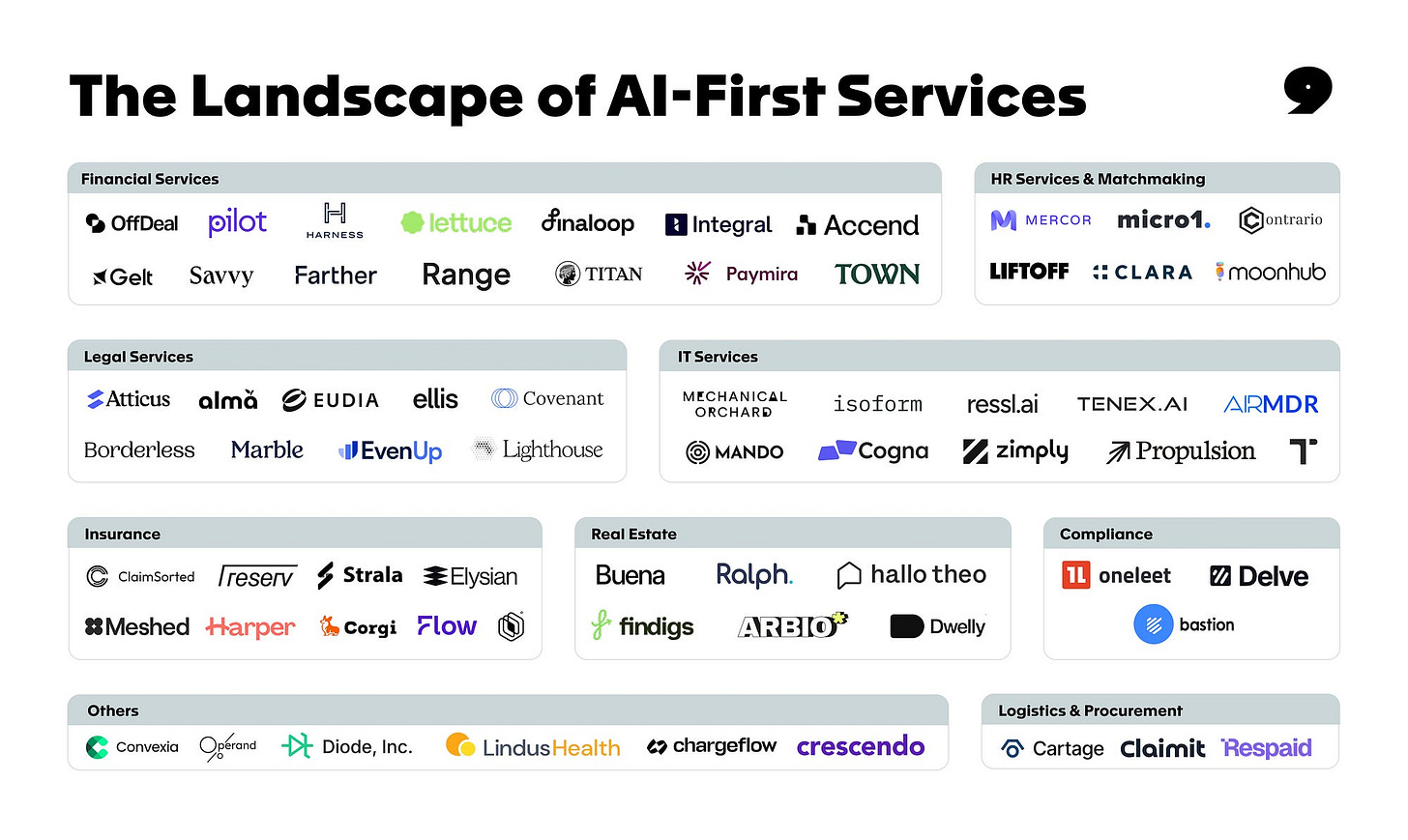

Over the past months, we’ve explored the rise of AI-first service businesses from several angles: Louis started by laying out why we believe foundation models are now performant enough to support full-stack services and that these businesses could become meaningful in ways traditional software can’t. We then mapped the early landscape and looked at how M&A might accelerate their path to scale.

In these earlier explorations, we argued that their operational playbook(s) and value proposition(s) often diverge meaningfully from traditional software businesses and that their addressable markets could often be multiple times larger.

What wasn’t clear to us was how these businesses could balance automation and service quality, how they could scale operations without linear cost growth, how they could build strong (data) moats, or how they could articulate value beyond cost savings.

After dozens of founder conversations, deep dives into emerging sub-verticals, and some early commercial signals from the market, we now have more evidence to revisit those questions. As part of this, we’re also sharing an updated version of the market map to reflect where we’re seeing the most activity and momentum.

We began by mapping verticals to understand where AI-first service businesses are most likely to succeed. The initial pattern suggested that the most promising opportunities lie in under-digitized industries with entrenched, low-NPS incumbents like property management. But what’s proven even more important is the shared structure of the workflows these businesses aim to replace.

Whether it’s insurance claims, tax filings, property management, or immigration law, the underlying work is often highly structured and repeatable, driven by documents, rules, and checklists rather than creative problem-solving. Most of it still runs on PDFs, spreadsheets, and legacy systems.

That shared anatomy makes these categories particularly well-suited to being rebuilt from the ground up:

Structured intake and triage: In insurance or property management, each case starts with a flood of semi-structured inputs, e.g., KYC packs, maintenance tickets, scanned forms. AI-native firms use LLM + OCR pipelines to classify and validate this data automatically, routing only edge cases to humans.

Document-heavy review and cross-referencing: Tax firms or claims processing specialists often rely on junior/lower-qualified staff to search through dense statutes, policy docs, or precedent letters. RAG and vector search now surface the relevant clause in seconds.

Repeatable, rules-based decisioning: Whether it’s approving a claim, closing an alert, or filing a compliance opinion, outcomes often follow fixed logic. AI-first teams train policy agents to apply those rules, explain the outcome, and log it immutably.

Low-value manual tasks at scale: Across verticals, teams still spend hours copying data across systems, reconciling ledgers, or filling out forms. End-to-end automation reduces marginal cost per case to near zero and scales throughput without linear hiring.

Massive untapped historical data: Legacy firms sit on decades of returns, claims, filings, and alerts, mostly untouched. AI-native companies structure this data to train vertical-specific models that improve accuracy and create defensibility.

What emerges is a new class of service business: data-in, judgment-out factories. They run on documents, structured data, and rules-based logic. The output is a decision, classification, or filing, increasingly handled by agents instead of human analysts.
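The "data-in, judgment-out" pattern described above can be sketched in a few lines of Python. This is an illustration only, not any company's system: the `Claim` fields, the thresholds, and the `decide` function are hypothetical, and the in-memory `AuditLog` is a stand-in for whatever tamper-resistant store a real firm would use. It shows fixed rules applied in order, a reason attached to each outcome, edge cases routed to a human, and every decision logged.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    claim_id: str
    amount: float
    has_receipt: bool
    extraction_confidence: float  # 0..1, from a hypothetical OCR/LLM intake step


@dataclass
class Decision:
    claim_id: str
    outcome: str  # "approve", "reject", or "human_review"
    reason: str


@dataclass
class AuditLog:
    _entries: list[Decision] = field(default_factory=list)

    def record(self, decision: Decision) -> None:
        # Append-only by convention: no update or delete methods are offered.
        self._entries.append(decision)

    def entries(self) -> tuple[Decision, ...]:
        return tuple(self._entries)


# Hypothetical policy thresholds, chosen only for the example.
MIN_CONFIDENCE = 0.9
AUTO_APPROVE_LIMIT = 500.0


def decide(claim: Claim, log: AuditLog) -> Decision:
    """Apply fixed rules in order, explain the outcome, and log it."""
    if claim.extraction_confidence < MIN_CONFIDENCE:
        decision = Decision(claim.claim_id, "human_review", "low extraction confidence")
    elif not claim.has_receipt:
        decision = Decision(claim.claim_id, "reject", "missing receipt")
    elif claim.amount <= AUTO_APPROVE_LIMIT:
        decision = Decision(claim.claim_id, "approve", "within auto-approve limit")
    else:
        decision = Decision(claim.claim_id, "human_review", "amount above auto-approve limit")
    log.record(decision)
    return decision
```

In this sketch the human reviewer is simply another outcome of the rules, which fits the hybrid model the article goes on to describe.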

Since our original market map, we’ve seen a noticeable uptick in activity across insurance-related services, e.g., Inca or Elysian in claims processing and Flow or Meshed in brokerage. Financial services remain a strong category, with steady expansion across tax, accounting, and compliance. And we’re beginning to see more unique and complex use cases emerge, like Convexia in drug discovery or Operand in management consulting.

Some things weren’t clear early on, i.e., how far automation could go without hurting quality, what scalable delivery would look like, or how these businesses would differentiate beyond price. We’re starting to see more answers take shape.

Across most verticals we’ve looked at, companies are not aiming for full automation from day one. Instead, they’re building workflows where AI handles the bulk of routine tasks and humans remain involved at key points. Specifically in:

Regulatory compliance: In many verticals, there are legal ceilings on automation. German customs brokers are legally required to manually review filings. Property managers must conduct in-person meetings annually. Tax advisors often need certified oversight. In these contexts, human accountability isn’t optional, and will not become so in the near future.

Accuracy assurance: In high-stakes workflows (claims, filings, tax, security), automation errors are costly. Some of the companies we’ve talked to invest heavily in custom verification layers, reviewer training, and tightly controlled workflows. Control sheets, QA loops, and task-specific overrides ensure that speed doesn’t come at the expense of accuracy.

Trust and relationship management: Some industries are still fundamentally human, e.g., brokers, real estate agents, wealth advisors. These customers often care more about trust and service than technical elegance. Integral, an AI-native tax advisory for German SMBs, doesn’t mention AI once on its homepage. Their customers aren’t looking for sophistication; they’re looking for confidence.

We’re particularly excited about companies that manage to productize parts of their service early, without rushing into full automation too soon, or defaulting to stitching together off-the-shelf tools without real leverage. Getting automation right is less about maximizing coverage and more about sequencing it properly, starting with the aspects of the business that provide the biggest operational leverage.

In AI-first services, growth without automation just means more people. And more people likely lead to margin compression, coordination risk, and brittle operations. We’ve been excited to see some companies starting out by building the systems that allow margins to expand with volume by encoding expertise into infrastructure.

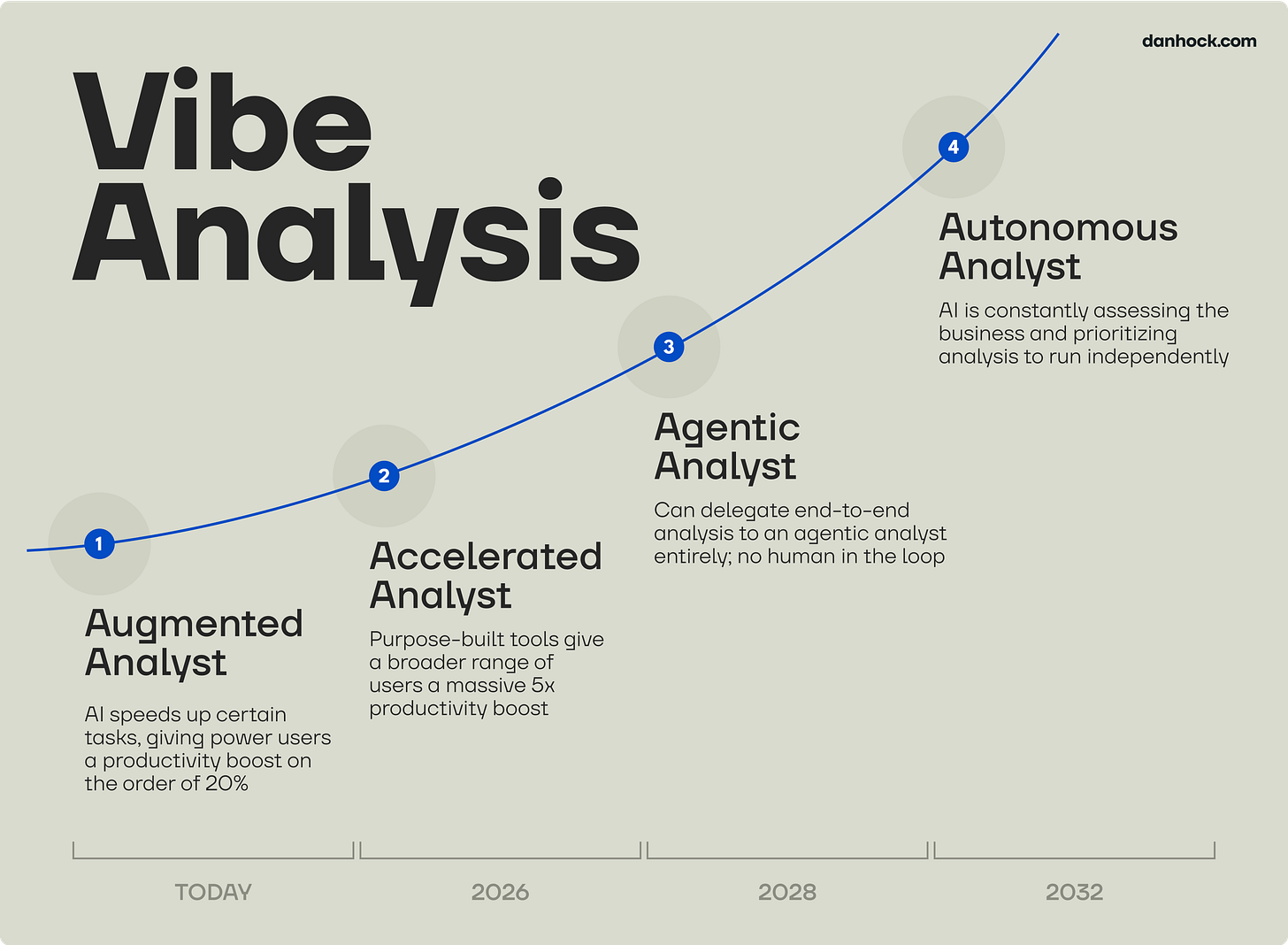

Vibe Analysis

Danhock • Dan Hockenmaier • July 31, 2025

Technology•AI•Data Analysis•Analytics•Automation•AI Native Software and Hardware

Vibe coding is for creating software. Vibe analysis is for creating insights.

Vibe analysis could be an even bigger deal: there are about 2 million software engineers in the US, but at least 5 million people who use data to answer questions every day. That means that in the US alone, we’re spending 10 billion hours a year reporting on business performance, assessing new products and features, and deciding which experiments to ship and which growth opportunities to pursue.
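A quick check of what those two figures imply per person, as a short Python calculation. The 5 million and 10 billion numbers come from the paragraph above; the 50-week working year is an assumption added here to turn the annual figure into a weekly one.

```python
analysts = 5_000_000              # people who use data daily (from the text)
hours_per_year = 10_000_000_000   # total hours per year (from the text)

# Hours per person per year implied by the two figures.
hours_per_person = hours_per_year / analysts

# Assumption: roughly 50 working weeks in a year.
weeks_per_year = 50
hours_per_week = hours_per_person / weeks_per_year
```

Under that assumption the estimate works out to 2,000 hours per person per year, or about 40 hours a week, so it appears to count these people's entire working time as analytical work.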

I worked with some of the team at Faire who are at the edge of applying AI to analytical work — Alexa, Ali, Blake, EB, Jolie, Max, Sam, Tim, and Zach — to shed light on the change that is coming.

We’ll look at both the bull case (how AI could massively increase the efficiency and quality of data analysis) and the bear case (why it will be harder than many people think).

What becomes clear is that no matter how conservative your assumptions, within a few years the way analysis is done and who does it will be unrecognizable from today.

The bull case

Data analysis is full of the kinds of things that humans are bad at and machines are great at. There are basically four components.

The hardest part is often simply knowing what data to use - understanding the schema, how different tables and fields interact, and what is up to date. If you hook up ChatGPT to a data warehouse today, you get a tool that is pretty dumb out of the gate but gets smart quickly as it develops a semantic model of the dataset. Instead of asking each team member to learn this for themselves, you can ask a model to learn it once.

Another component of analysis is writing SQL queries themselves. This is such an obvious use case that off-the-shelf LLMs are already very helpful at quickly cleaning up and generating queries. Cursor is pretty good at it too. Products that are purpose-built for data analysis will be excellent at it.

The third component is manipulating data into a useful format. Some of this can be done through the query, but many forms of analysis require a secondary tool like spreadsheets. New solutions for this are exploding, such as the viral launch of Shortcut (a “superhuman Excel agent”) just a few weeks ago.

Finally, there is visualizing and dashboarding data. This is probably where the tools are weakest today, but there are sparks of genius. All of the charts below were one-shotted by Claude based on some data and a quick description of the format:

As incumbents and startups race to build solutions to these problems, two distinct UIs are emerging:

“Cursor for analytics” where the core workflow is autocomplete, editing, or refactoring of existing code. Startups like NAO and Galaxy are building for this use case and incumbents like Mode are incorporating it into their products.

“Data chatbots” where the core workflow is natural language conversations that output basic datasets and charts. Many incumbents are building this, including Snowflake and Looker.

Today the former is accessible only to more sophisticated users, and the latter has very limited capabilities. There are strong incentives for a tool that does both, because this would allow the work of power users to tune the semantic model for the benefit of everyone else.

This tool will also need built-in visualizations in order to avoid analysts constantly jumping between workflows, and to enable static dashboards and charts for sharing.

The space is begging for a full stack solution which:

Is native to your data warehouse and holds as much of it in context as possible

Constantly updates its semantic model of the data schema as it is used

Provides data and visualizations by default, but exposes SQL to those who want it

Has a built-in visualization tool that allows rapid iteration on charts
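One way to picture a semantic model that is "learned once" and updated as it is used is the Python sketch below. It is a toy under stated assumptions, not how any of the products named above work: the `SemanticModel` class and its methods are hypothetical, and SQLite stands in for a data warehouse. The idea is that notes about what each column means accumulate in one shared place and can be rendered as context for a model.

```python
import sqlite3


class SemanticModel:
    """Notes about what each column means, accumulated as people use the data."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.notes: dict[tuple[str, str], str] = {}

    def columns(self, table: str) -> list[str]:
        # PRAGMA table_info returns one row per column; index 1 is the column name.
        # The table name is interpolated directly, so it is assumed to be trusted.
        rows = self.conn.execute(f"PRAGMA table_info({table})").fetchall()
        return [row[1] for row in rows]

    def annotate(self, table: str, column: str, note: str) -> None:
        """Record (or overwrite) what a column means, e.g. after a power user clarifies it."""
        if column not in self.columns(table):
            raise KeyError(f"{table}.{column} does not exist")
        self.notes[(table, column)] = note

    def context(self, table: str) -> str:
        """Render the schema plus notes as text that could be placed in a prompt."""
        lines = [f"table {table}:"]
        for col in self.columns(table):
            note = self.notes.get((table, col), "no note yet")
            lines.append(f"  {col}: {note}")
        return "\n".join(lines)
```

A power user who annotates a column such as `gmv_cents` once improves the context every later question receives, which is the incentive for a single tool serving both audiences.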

Essays

The risk of letting AI do your thinking

Ft • July 31, 2025

Technology•AI•CognitiveOffloading•CriticalThinking•Education•Essays

Artificial intelligence (AI) has become deeply integrated into daily life, with tools like ChatGPT boasting 700 million weekly users worldwide. These technologies offer significant advantages, such as time savings, accelerated research, and enhanced productivity. However, this widespread adoption raises concerns about "cognitive offloading"—the tendency to rely on AI for tasks like writing and problem-solving—which may lead to diminished memory and critical thinking skills, similar to the “Google effect.”

Early studies indicate that frequent AI use can negatively impact users' intellectual engagement and performance. For instance, research from MIT found that students using AI underperformed at cognitive and linguistic levels. Another study linked heavy AI reliance to weaker critical thinking abilities.

To mitigate these risks, experts recommend reinforcing critical thinking skills in education, encouraging users to treat AI as a tool rather than an infallible authority, and designing AI responses that foster human deliberation. The conclusion urges users to actively engage with AI, using the technology as a collaborator rather than a crutch.

Meta’s Favorite Product Isn’t AI. It’s the Copy Button.

Om • July 31, 2025

Technology•Software•Innovation•BusinessStrategy•IntellectualProperty•Essays

An hour after the initial commentary on Meta's Super Intelligence memo, attention was drawn to a recurring theme in Mark Zuckerberg’s approach: the idea of "copying" or subsuming others’ ideas and phrases as his own. This pattern aligns closely with the company’s internal culture and branding, where replication and iteration seem more core to their strategy than original invention. The article highlights how Zuckerberg himself has embraced concepts like "personal super intelligence" by assimilating terminology introduced by others, emphasizing the broader culture at Meta of integrating external innovations into their product and narrative framework.

The focus then shifts intriguingly to Meta’s actual favorite product, which, contrary to public assumptions around AI advancements, is revealed to be the fundamentally simple “copy button.” This metaphorical highlight points to Meta’s underlying operational philosophy: to replicate, adapt, and incorporate innovations—whether in technology, features, or ideas—from others rapidly and position them centrally within their ecosystem. Such a stance underlines a strategic choice to prioritize incremental improvement and widespread adoption rather than risky original invention. This approach allows Meta to maintain dominance in product functionality and user experience by embedding tested and popular functionalities into their platforms.

This emphasis on copying as a strategic asset carries significant implications for the tech industry and innovation debates. It challenges the conventional emphasis on groundbreaking originality as the sole path to industry leadership. Instead, Meta’s strategy shows that systematically copying and refining can be just as powerful. It raises complex questions about ethics, intellectual property, and the balance between inspiration and outright replication. Critics argue this may stifle true innovation by discouraging smaller creators and startups, while supporters might claim it accelerates technological progress by disseminating successful ideas widely and efficiently.

Furthermore, this logic reflects a broader trend in modern tech giants who leverage vast resources to incorporate emerging technologies, like AI, only after they become proven elsewhere. Meta’s cautious yet assertive replication strategy could be seen as a pragmatic method to manage risks associated with unproven technologies while still leading in market relevance and user engagement.

In summary, Meta’s favorite product being the "copy button" is more than just a symbolic statement—it is a revealing insight into how the company operates and innovates. By centering their strategy around rapidly adopting, adapting, and scaling existing ideas, Meta ensures its platforms remain competitive and feature-rich. While this approach stirs debates over originality and ethics, it undeniably shapes the competitive landscape of technology development, reflecting a nuanced reality where copying can be an effective tool for technological and business success.

Tech Insider Claims OpenAI Will Be Worth $10 Trillion: Has Silicon Valley Finally Gone Totally Bonkers?

Keenon • Andrew Keen • August 1, 2025

Technology•AI•Valuation•Innovation•MarketTrends•Essays

The article explores a strikingly ambitious valuation forecast for OpenAI and its rival Anthropic, as suggested by a tech insider, predicting that OpenAI could soon be worth $10 trillion, with Anthropic valued at $5 trillion. These figures are extraordinary, surpassing the GDP of every country globally except the United States and China, signaling extraordinary optimism or perhaps delusion in Silicon Valley's current valuations. The article questions whether these predictions represent visionary insight or if the tech world has "gone totally bonkers," likening this speculation to the excesses seen during the dot-com bubble.

Key Insights and Market Context

AI Valuations in Fantasy Territory: The valuation estimates place these two AI companies collectively at $15 trillion—a mind-boggling number that far exceeds traditional benchmarks in tech valuation and global economy comparisons. Such figures emphasize the enormous financial expectations being placed on artificial intelligence as a transformative technology.

Tipping Point in AI-Driven Search: The rise of AI-powered search alternatives like Perplexity's Comet browser marks a fundamental shift from traditional search engines dominated by Google. Approximately a quarter of internet users reportedly now prefer AI for search functions, posing a direct threat to Google's advertising-driven business model. This shift could disrupt how search and information retrieval operate across the internet.

San Francisco’s Tech Boom Revived: The AI revolution has reignited San Francisco’s status as the epicenter of tech innovation. Real estate prices and rental demand have surged, paralleling the frenetic tech hiring environment reminiscent of the late 1990s. This surge reflects intense competition among AI firms for the best engineering talent and office spaces, underscoring the AI sector’s rapid growth and influence.

AI Race Is Not Winner-Take-All: Unlike previous tech battlegrounds where dominance belonged to a single company (e.g., Google in search, Amazon in e-commerce), the AI market appears expansive enough to support multiple major players. Anthropic is noted as a formidable competitor to OpenAI, and Chinese AI models are emerging as serious global contenders, suggesting a multipolar competitive landscape rather than a monopoly.

Big Tech’s AI Industry Anxiety: Established tech giants exhibit varied strategies and concerns regarding AI. Facebook is investing billions in retaining AI talent, motivated by recent model shortcomings. Apple, less publicly aggressive, opts to integrate external AI into its products rather than building expensive infrastructure itself. Meanwhile, the U.S. government's conscious choice to avoid regulating AI development reflects a laissez-faire approach that could have broad implications for industry dynamics and societal impact.

Implications and Analysis

The proposed valuations and market dynamics highlight both the transformative potential and the speculative risks surrounding AI today. Investors and companies are betting heavily on AI’s future economic impact, driving valuations that may or may not be sustainable. The shift in how people search for information could revolutionize digital advertising and reshape internet commerce, with far-reaching consequences.

Moreover, the reinvigoration of San Francisco as a tech hub signals renewed economic and social pressures but also opportunities tied directly to AI’s growth trajectory. The competitive landscape’s diversity suggests innovation could accelerate, but it also raises questions about geopolitical technology races, especially with Chinese AI advancements gaining prominence.

Big Tech’s varied responses—ranging from heavy investment to strategic caution—reflect the uncertainty and high stakes in AI development. The absence of government regulation might expedite innovation but could also raise ethical, security, and economic concerns as AI proliferates.

In summary, the article presents a provocative snapshot of Silicon Valley’s current state in AI valuation and competition, capturing the mix of optimism, hype, and strategic positioning that defines this critical moment in technology history.

The Peculiar Persistence of the AI Denialists

Persuasion • Yascha Mounk • August 6, 2025

Technology•AI•ArtificialIntelligence•EconomicImpact•AIdenialism•Essays

A prosthetic hand playing the piano during the World Artificial Intelligence Conference 2025 in Shanghai, China, on July 27, 2025. (Photo by Ying Tang/NurPhoto via Getty Images.)

Some momentous historical events, like the French Revolution or the demise of communism, come with little warning. Few contemporaries were able to predict that they were about to happen, or to foresee how fundamentally they would transform the world.

Other momentous historical events, like the fall of the Roman Empire or the Industrial Revolution, loudly announce their imminent arrival. Once those first factories in the north of England started to appear, the productive capacities of the spinning jenny and the steam engine were so evident that they augured disruption on a mass scale. Any contemporary observer who treated these technological developments as but one among many interesting social, cultural and political developments taking place in early 19th century Europe was, in a manner of speaking, so busy studying molehills that he failed to notice the sudden appearance of a towering mountain.

What we are going through at the moment is, at a conservative estimate, analogous to the Industrial Revolution. The rapid emergence of sophisticated models of artificial intelligence has enormous implications for the future of the human race. If they are harnessed for good, they could liberate humans from hard toil, end material scarcity, and facilitate enormous breakthroughs in areas from medicine to the arts. If they are harnessed for ill, they could lead to mass immiseration, cause war or pestilence on an unprecedented scale, or even make obsolete the human race.

But while all of this is as obvious as the significance of the Industrial Revolution should have been in the Manchester of the early 19th century, an astonishing number of people are choosing to keep studying their little molehills. Yes, every fashionable conference has some panel on AI. Yes, social media is overrun with hypemen trying to alert their readers to the latest “mind-blowing” improvements of Grok or ChatGPT. But even as the maturation of AI technologies provides the inescapable background hum of our cultural moment, the mainstream outlets that pride themselves on their wisdom and erudition—even, in moments of particular self-regard, on their meaning-making mission—are lamentably failing to grapple with its epochal significance.

A recent viral essay in The New Yorker provides an extreme, but not an altogether atypical, illustration of the problem. “A.I. is frankly gross to me,” its author, Jia Tolentino, avows. “It launders bias into neutrality; it hallucinates; it can become ‘poisoned with its own projection of reality.’ The more frequently people use ChatGPT, the lonelier, and the more dependent on it, they become.” At least Tolentino has the honesty to acknowledge the astonishing fact that “I have never used ChatGPT.” Though the author considers herself a progressive, her basic attitude to new technologies resembles that of a reactionary 19th century priest who denounces the railways as the devil’s work—before proudly mentioning that he himself has, of course, never engaged in the sin of riding one.

Mainstream outlets from The New York Times to NPR do have some smart assessments of the state, the stakes, and the likely future of artificial intelligence. But a depressingly large share of the AI coverage you are likely to encounter in those storied publications comes in three graduated forms of what I’ve come to think of as “AI denialism.”

There are the articles which dismiss AI as incompetent, portraying chatbots as perennially prone to hallucinations and incapable of delivering on basic tasks like fact-checking. Then, there are the articles which claim that, far from being truly intelligent, AI is merely a pattern-matching machine, a sort of “stochastic parrot.” And finally, there are the articles which argue that the impact of AI on the economy has been vastly overstated, since its promised productivity gains have not yet materialized.

Hear no progress, speak no progress, see no progress.

“AI is incompetent.”

The first of these three genres constitutes the purest form of denialism, in that at this stage it has to stipulate things which are plainly wrong (as anyone who has actually bothered to use ChatGPT or Claude or Grok or Gemini or DeepSeek would well know). It just about remains true that there are certain specific tasks at which AI chatbots remain surprisingly inept. If you are searching for a particular quote you half remembered (as I often do), it is usually a mistake to ask them for help. For if they are unable to locate the true quotation, they somehow cannot resist the temptation to please you by making up a perfect—albeit fake—little soundbite.

But in most fields of endeavor, AI engines now rival all but the most gifted humans. They are astonishingly good at translating texts and at playing chess, at writing poetry and at teaching you new skills, at coding and at making illustrations, at diagnosing a medical condition and at summarizing a technical research paper in the form of a podcast. To dismiss this astonishing box of varied wonders on the basis of a few tasks the technology has not yet cracked is reminiscent of the well-worn joke about two old Jews who go to the circus. An acrobat crosses a high wire on a unicycle while juggling seven flaming torches and playing a virtuoso piece on the violin. Dismissively, one Jew turns to the other and laments: “Paganini, he isn’t.”

“AI is just a stochastic parrot.”

The second genre of denialism is at once more sophisticated and more hollow. It invokes a supposedly profound technical insight about the nature of AI—but ultimately amounts to little more than dismissive sloganeering, shrewdly disguised behind the cover of a half-understood incantation.

According to an influential 2021 paper, the problem with large language models is that they don’t truly understand the world; rather, they are merely parroting back human language based on a stochastic model of which words are usually associated with which other words in the large data sets on which they are trained. Far from being “intelligent,” AI chatbots turn out, upon further inspection, to be mere “stochastic parrots.”

The idea that AI chatbots are merely “stochastic parrots” is rooted in an uncontested truth about the nature of these technologies: the algorithms really do draw on vast data sets to predict what the next word in a text, or pixel in a painting, or sound in a piece of music might be. But evocative though the invocation of this fact may sound, it does not magically make the prodigious abilities of artificial intelligence disappear. If chatbots fulfill tasks in the blink of an eye over which skilled humans used to labor for weeks, this advance will transform the world—whether for good or ill—irrespective of how the bots are able to do so.

Nor is the observation that chatbots use stochastic reasoning as disqualifying as it first appears. We are about as far from understanding how the human mind works as we are from understanding what exactly makes ChatGPT tick. But there is good reason to believe that our own astonishing ability to comprehend and manipulate the world is itself rooted in our pattern-matching abilities. Indeed, the pattern-matching that supposedly makes artificial intelligence a mere “stochastic parrot” might actually make it more similar to humans than its high-minded critics want to admit.

In May 1997, Garry Kasparov, then the best chess player in human history, lost to Deep Blue, a vast IBM machine spanning several refrigerator-sized cabinets. As he later recounted, he was particularly shaken by one move made by the machine. Kasparov believed that Deep Blue would make a move which offered a big tactical advantage even though he could sense, based on his vast experience, that doing so would ultimately weaken its position. But Deep Blue, which was but a giant calculating machine playing out as many scenarios as far out as possible, did not fall for the trap. Its move was shocking to Kasparov because he realized that a machine was able to come up with the intuitively best option—something that felt quintessentially human—by mere calculation.

Now, what’s fascinating about today’s chatbots, which vastly outperform Deep Blue, is that they work in a completely different manner. Deep Blue “knew” the rules of chess, which allowed the machine to consider millions of possible scenarios through brute-force calculation, and arrive at the right conclusion through a sheer act of calculative might. Today’s large language models, by contrast, draw on their vast database of past chess games to predict which move feels right. In other words, the fact that, unlike Deep Blue, ChatGPT operates like a “stochastic parrot” makes it more, not less, similar to the way in which astonishingly accomplished humans like Garry Kasparov play the game.

“AI won’t have that much impact, anyway.”

The final form of denialism is about the economic impact of this technology. When OpenAI released ChatGPT, built on GPT-3.5, in November 2022, some observers predicted an immediate and devastating effect on white-collar jobs. A few industries have already been hard hit. While economists over the last decade urged career-minded students to learn coding in order to future-proof their careers, computer programmers have rapidly gone from commanding astonishing wages to being more likely than recent graduates of far less “safe” fields like art history or philosophy to be out of a job. But on the whole, the wholesale disruption of white-collar workplaces is so far conspicuous by its absence—as are the promised gains in productivity.

This makes it tempting to predict that the invention of artificial intelligence will, at least in economic terms, turn out to be much less important than meets the eye. Some distinguished economists argue that the job market will for the foreseeable future hardly be impacted by AI. Others argue that the sky-high valuations of companies like OpenAI will prove to be a giant mistake, with the ever-growing costs of training ever-more sophisticated AI models not sufficiently offset by future revenues. In the end, they argue, this moment will be remembered for the irrationality of its collective hubris, just as the DotCom Bubble of 2000 was.

The obvious way to rebut this argument is to point out that the DotCom bubble turned out to be but a temporary downturn. Yes, plenty of useless companies were vastly overvalued before the bubble burst in March 2000. But the hype about the internet has since turned out to be fully justified. A quarter century on from the “bubble,” the NASDAQ is four times higher than it was before it burst, and tech companies make up a huge share of the world’s stock market capitalization. It has become undeniable that the world economy has been fundamentally transformed by digital technology.

The deeper way to rebut skepticism about the economic impact of AI is to point out that technology-induced improvements in productivity require a combination of two things: new technologies which can augment or substitute for human labor; and the organizational changes which allow firms to harness them. Technologies which produce incremental increases in productivity in particular industries are often easy to implement, in part because they tend to be the result of concerted efforts by incumbent firms to expedite existing production processes. Technologies which produce large increases in productivity across industries are often hard to implement, in part because they—as in the case of artificial intelligence—usually come from outside the existing industrial structure and require much more fundamental organizational changes before they can be implemented.

Take one example: Studies suggest that AI bots are now as effective as the most skilled doctors at many key medical tasks, such as interpreting sophisticated test results or diagnosing a patient’s condition based on a diffuse set of symptoms. But because of the extremely strict regulations which govern the health care system—and the power of medical professionals, who have every incentive to avoid being replaced—the actual practice of medicine has so far changed little. This tells us less about the long-term potential of new technologies than it does about how slow complex systems are to adapt to them, especially when the salaries of well-connected professionals in highly protected industries are on the line. As in many previous instances of technological disruption, these forces are proving capable of containing the rising tide for a surprisingly long period of time; but it would be foolish to predict that the dam can hold forever.

Ten years ago, the conventional wisdom held that technological advances would imperil many blue-collar jobs, like those of truck drivers. Now, the astonishing advances in text-based AI have convinced many commentators that white-collar professionals, from paralegals to HR professionals, will be the first to lose their jobs. But it is worth noting that there is another very large hammer which has not yet fallen. While it has turned out to be more difficult to build robots which can maneuver around the physical world with dexterity than to build chatbots that can perform high-level cognitive tasks, there will come a time in the relatively near future in which machines capable of doing both tasks simultaneously will be produced in large numbers. At that point, both white-collar and blue-collar jobs will be imperilled en masse.

This makes me skeptical of the argument that even sophisticated economists now seem to fall back on to downplay the likely impact of artificial intelligence. They like to point out that, despite dire predictions by contemporaries, past technological transformations from the invention of the printing press to the automatization of factory work did not lead to mass unemployment. While certain categories of workers were indeed decimated by these developments, they also gave rise to the need for wholly new categories. There may no longer be scribes who copy books by hand; but (as the state of my inbox can attest) there are now plenty of marketing professionals who earn their living by pitching authors to podcast hosts. Similarly, the number of coal miners may have plummeted over the last decades; but there is now a significantly greater number of professional yoga teachers in the United States.

That argument has so far proven correct at every historical juncture. But that is because we have never before in the history of humanity been faced with an embodied form of general intelligence that outshines the vast majority of humans at the vast majority of tasks. Whether the principle of historical replacement of job categories which has held for past technological innovations can persist in the face of this unprecedented innovation remains at best an open question. Personally, I suspect that the people now claiming that the impact of AI on the job market will resemble that of the steam engine will suffer the same fate as Malthus, whose theory about the dangers of unchecked population growth proved astonishingly informative in describing every historical moment up until the very juncture at which he wrote—but turned out to be badly wrong about everything that happened after.

I have an admission to make.

Intellectually, I have become deeply convinced that the importance of AI is, if anything, underhyped. The sorry attempts to pretend we don’t stand at the precipice of a technological, economic, social and cultural revolution are little more than cope. In theory, I have little patience for the denialism about the impact of artificial intelligence which now pervades much of the public discourse.

But in practice, I too find it hard to act on that knowledge. I am not a computer programmer, so I don’t have all that many useful things to say about the technology. I am not deeply enmeshed in tech circles, so I struggle to identify the best people with whom to talk about these topics. Most articles we publish in Persuasion don’t touch on AI, and the ones that do often get surprisingly little pickup.

But if there is one thing I have learned in my writing career so far, it is that it eventually becomes untenable to bury your head in the sand. For an astonishingly long period of time, you can pretend that democracy in countries like the United States is safe from far-right demagogues or that wokeness is a coherent political philosophy or that financial bubbles are just a figment of pessimists’ imagination; but at some point the edifice comes crashing down. And the sooner we all muster the courage to grapple with the inevitable, the higher our chances of being prepared when the clock strikes midnight.

AI disagreements

Bloodinthemachine • Brian Merchant • August 7, 2025

Technology • AI • Artificial General Intelligence • AI Alignment • AI Doomer • Essays

Hello all,

Well, here’s to another relentless week of (mostly bad) AI news. Between the AI bubble discourse—my contribution, a short blog on the implications of an economy propped up by AI, is doing numbers, as they say—and the AI-generated mass shooting victim discourse, I’ve barely had time to get into OpenAI. The ballooning startup has released its highly anticipated GPT-5 model, as well as its first actually “open” model in years, and is considering a share sale that would value it at $500 billion. And then there’s the New York Times’ whole package of stories on Silicon Valley’s new AI-fueled ‘Hard Tech’ era.

That package includes a Mike Isaac piece on the vibe shift in the Bay Area, from the playful-presenting vibes of the Googles and Facebooks of yesteryear, to the survival-of-the-fittest, increasingly right-wing-coded vibes of the AI era, and a Kate Conger report on what that shift has meant for tech workers. A third, by Cade Metz, about “the Rise of Silicon Valley’s Techno-Religion,” was focused largely on the rationalist, effective altruist, and AI doomer movement rising in the Bay, whose base is a compound in Berkeley called Lighthaven. The piece’s money quote is from Greg M. Epstein, a Harvard chaplain and author of a book about the rise of tech as a new religion. “What do cultish and fundamentalist religions often do?” he said. “They get people to ignore their common sense about problems in the here and now in order to focus their attention on some fantastical future.”

All this reminded me that not only had I been to the apparently secret grounds of Lighthaven (the Times was denied entry), late last year, where I was invited to attend a closed door meeting of AI researchers, rationalists, doomers, and accelerationists, but I had written an account of the whole affair and left it unpublished. It was during the holidays, I’d never satisfactorily polished the piece, and I wasn’t doing the newsletter regularly yet, so I just kind of forgot about it. I regret this! I reread the blog and think there’s some worthwhile, even illuminating stuff about this influential scene at the heart of the AI industry, and how it works. So, I figure better late than never, and might as well publish now.

The event was called “The Curve” and it took place November 22-24th, 2024, so all commentary should be placed in the context of that timeline. I’ve given the thing a light edit, but mostly left it as I wrote it late last year, so some things will surely be dated.

A couple weeks ago, I traveled to Berkeley, CA, to attend the Curve, an invite-only “AI disagreements” conference, per its billing. The event was held at Lighthaven, a meeting place for rationalists and effective altruists (EAs), and, according to a report in the Guardian, allegedly purchased with the help of a seven-figure gift from Sam Bankman-Fried. As I stood in the lobby, waiting to check in, I eyed a stack of books on a table by the door, whose title read Harry Potter and the Methods of Rationality. These are the 660,000-word, multi-volume works of fan fiction written by rationalist Eliezer Yudkowsky, who is famous for his assertion that tech companies are on the cusp of building an AI that will exterminate all human life on this planet.

The AI disagreements encountered at the Curve were largely over that very issue—when, exactly, not if, a super-powerful artificial intelligence was going to arise, and how quickly it would wipe out humanity when it did so. I’ve been to my share of AI conferences by now, and I attended this one because I thought it might be useful to hear this widely influential perspective articulated directly by those who believe it, and because there were top AI researchers and executives from leading companies like Anthropic in attendance, and I’d be able to speak with them one on one.

I told myself I’d go in with an open mind, do my best to check my priors at the door, right next to the Harry Potter fan fiction. I mingled with the EA philosophers and the AI researchers and doomers and tech executives. Told there would be accommodations onsite, I arrived to discover that my having failed to make a reservation in advance meant either sleeping in a pod or shared dorm-style bedding. Not quite sure I could handle the claustrophobia of a pod, I opted for the dorms.

I bunked next to a quiet AI developer who I barely saw the entire weekend and a serious but polite employee of the RAND Corporation. The grounds were surprisingly expansive; there were couches and fire pits and winding walkways and decks, all full of people excitedly talking in low voices about artificial general intelligence (AGI) or superintelligence (ASI) and their waning hopes for alignment—that such powerful computer systems would act in concert with the interests of humanity.

I did learn a great deal, and there was much that was eye-opening. For one thing, I saw the extent to which some people really, truly, and deeply believe that the AI models like those being developed by OpenAI and Anthropic are just years away from destroying the human race. I had often wondered how much of this concern was performative, a useful narrative for generating meaning at work or spinning up hype about a commercial product—and there are clearly many operators in Silicon Valley, even attendees at this very conference, who are sharply aware of this particular utility, and able to harness it for that end. But there was ample evidence of true belief, even mania, that is not easily feigned. There was one session where people sat in a circle, mourning the coming loss of humanity, in which tears were shed.

The first panel I attended was headed up by Yudkowsky, perhaps the movement’s leading AI doomer, to use the popular shorthand, which some rationalists onsite seemed to embrace and others rejected. In a packed, standing-room only talk, the man outlined the coming AI apocalypse, and his proposed plan to stop it—basically, a unilateral treaty enforced by the US and China and other world powers to prevent any nation from developing more advanced AI than what is more or less currently commercially available. If nations were to violate this treaty, then military force could be used to destroy their data centers.

The conference talks were held under Chatham House Rule, so I won’t quote Yudkowsky directly, but suffice to say his viewpoint boils down to what he articulated in a TIME op-ed last year: “If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter.” At one point in his talk, at the prompting of a question I had sent into the queue, the speaker asked everyone in the room to raise their hand to indicate whether or not they believed AI was on the brink of destroying humanity—about half the room believed that, on our current path, destruction was imminent.

This was no fluke. In the next three talks I attended, some variation of “well by then we’re already dead” or “then everyone dies” was uttered by at least one of the speakers. In one panel, a debate between a former OpenAI employee, Daniel Kokotajlo, and Sayash Kapoor, a computer scientist who’d written a book casting doubt on some of these claims, the audience, and the OpenAI employee, seemed outright incredulous that Kapoor did not think AGI posed an immediate threat to society. When the talk was over, the crowd flocked around Kokotajlo, to pepper him with questions, while just a few stragglers approached Kapoor.

I admittedly had a hard time with all this, and just a couple hours in, I began to feel pretty uncomfortable—not because I was concerned with what the rationalists were saying about AGI, but because my apparent inability to occupy the same plane of reality was so profound. In none of these talks did I hear any concrete mechanism described through which an AI might become capable of usurping power and enacting mass destruction, or a particularly plausible process through which a system might develop to “decide” to orchestrate mass destruction, or the ways it would navigate and/or commandeer the necessary physical hardware to wreak its carnage via a worldwide hodgepodge of different interfaces and coding languages of varying degrees of obsolescence and systems that already frequently break down while communicating with each other.

I saw a deep fear that large language models were improving quickly, that the improvements in natural language processing had been so rapid in the last few years that if the lines on the graphs held, we’d be in uncharted territory before long, and maybe already were. But much of the apocalyptic theorizing, as far as I could tell, was premised on AI systems learning how to emulate the work of an AI researcher, becoming more proficient in that field until it is automated entirely. Then these automated AI researchers continue automating that increasingly advanced work, until a threshold is crossed, at which point an AGI emerges. More and more automated systems, and more and more sophisticated prediction software, to me, do not guarantee the emergence of a sentient one. And the notion that this AGI will then be deadly appeared to come from a shared assumption that hyper-intelligent software programs will behave according to tenets of evolutionary psychology, conquering perceived threats to survive, or desirous of converting all materials around it (including humans) into something more useful to its ends. That also seems like a large and at best shaky assumption.

There was little credence or attention paid to recent reports that have shown the pace of progress in the frontier models has slowed—many I spoke to felt this was a momentary setback, or that those papers were simply overstated—and there seemed to be a widespread propensity for mapping assumptions that may serve in engineering or in the tech industry onto much broader social phenomena.

When extrapolating into the future, many AI safety researchers seemed comfortable making guesses about the historical rate of task replacement in the workplace brought about by automation, or how quickly remote workers would be replaced by AI systems (another key road-to-AGI metric for the rationalists). One AI safety expert said, let’s just assume in the past that automation has replaced 30% of workplace tasks every generation, as if this were an unknowable thing, as if there were not data about historical automation that could be obtained with research, or as if that data could be so neatly quantified into such a catchy truism. I could not help but think that sociologists and labor historians would have had a coronary on the spot; fortunately, none seem to have been invited.

A lot of these conversations seemed to be animated displays of mutual bias confirmation, in other words, between folks who are surely quite good at computational mathematics, or understanding LLM training benchmarks, but who all share similar backgrounds and preoccupations, and who seem to spend more time examining AI output than how it’s translating into material reality. It often seemed like folks were excitedly participating in a dire, high-stakes game, trying to win it with the best-argued case for alignment, especially when they were quite literally excitedly participating in a game; Sunday morning was dedicated to a 3-hour tabletop role-playing game meant to realistically simulate the next few years of AI development, to help determine what the AI-dominated future of geopolitics held, and whether humanity would survive.

(In the game, which was played by 20 or so attendees divided into two teams, AGI is realized around 2027, the US government nationalizes OpenAI, Elon Musk is put in charge of the new organization, a sort of new Manhattan Project for AI, and competition heats up with China; fortunately, the AI was aligned properly, so in the end, humanity is not extinguished. Some of the players were almost disappointed. “We won on a technicality,” one said.)

The tech press was there, too—Platformer’s Casey Newton, myself, the New York Times’ Kevin Roose, and Vox’s Kelsey Piper, Garrison Lovely, and others. At one point, some of us were sitting on a couch surrounded by Anthropic guys, including co-founder Jack Clark. They were talking about why the public remained skeptical of AI, and someone suggested it was due to the fact that people felt burned by crypto and the metaverse, and just assumed AI was vaporware too. They discussed keeping journals to record what it was like working on AI right now, given the historical magnitude of the moment, and one of the Anthropic staff mentioned that the Manhattan Project physicists kept journals at Los Alamos, too.

It was pretty easy to see why so much of the national press coverage has been taken with the “doomer” camps like the one gathered at Lighthaven—it is an intensely dramatic story, intensely believed by many rich and intelligent people. Who doesn’t want to get the story of the scientists behind the next Manhattan Project—or be a scientist wrestling with the complicated ethics of the next world-shattering Manhattan Project-scale breakthrough? Or making that breakthrough?

Not possessing a degree in computer science, or having studied natural language processing for years myself, if even a third of my AI sources were so sure that an all-powerful AI is on the horizon, that would likely inform my coverage, too. No one is immune to biases; my partner is a professor of media studies, perhaps that leads me to skew more critical to the press, or to be overly pedantic in considering the role of biases in overly long articles like this one. It’s even possible I am simply too cynical to see a real and present threat to humanity, though I don’t think that’s the case. Of course I wouldn’t.

So many of the AI safety folks I met were nice, earnest, and smart people, but I couldn’t shake the sense that the pervasive AI worry wasn’t adding up. As I walked the grounds, I’d hear snippets of animated chatter: “I don’t want to over-index on regulation” or “imagine 3x remote worker replacement” or “the day you get ASI you’re dead though.” But I heard little to no organizing. There was a panel with an AI policy worker who talked about how to lobby DC politicians to care about AI risk, and a screening of a documentary in progress about SB 1047, the AI safety bill that Gavin Newsom vetoed, but apart from that, there was little sense that anyone had much interest in, you know, fighting for humanity. And there were plenty of employees, senior researchers, even executives from OpenAI, Anthropic, and Google’s DeepMind right there in the building!

If you are seriously, legitimately concerned that an emergent technology is about to exterminate humanity within the next three years, wouldn’t you find yourself compelled to do more than argue with the converted about the particular elements of your end times scenario? Some folks were involved in pushing for SB 1047, but that stalled out; now what? Aren’t you starting an all-out effort to pressure those companies to shut down their operations ASAP? That all these folks are under the same roof for three days, and no one’s being confronted, or being made uncomfortable, or being protested—not even a little bit—is some of the best evidence I’ve seen that all the handwringing over AI Safety and x-risk really is just the sort of amped-up cosplaying its critics accuse it of being.

And that would be fine, if it wasn’t taking oxygen from other pressing issues with AI, like AI systems’ penchant for perpetuating discrimination and surveillance, degrading labor conditions, running roughshod over intellectual property, plagiarizing artists’ work, and so on. Some attendees openly weren’t interested in any of this. The politics in the space appeared to skew rightward, and some relished the way AI promises to break open new markets, free of regulations and constrictions. A former Uber executive, who admitted openly that what his last company did “was basically regulatory arbitrage,” now says he plans on launching fully automated AI-run businesses, and doesn’t want to see any regulation at all.

Late Saturday night, I was talking with a policy director, a local freelance journalist, and a senior AI researcher for one of the big AI companies. I asked the AI developer if it bothered him that if everything said at the conference thus far was to be believed, his company was on the cusp of putting millions of people out of work. He said yeah, but what should we do about it? I mentioned an idea or two, and said, you know, his company doesn’t have to sell enterprise automation software. A lot of artists and writers were already seeing their wages fall right now. The researcher looked a little pained, and laughed bleakly. It was around that point that the journalist shared that he had made $12,000 that year. The AI researcher easily might have made 30 times that.

It echoed a conversation I had with Jack Clark, of Anthropic. It was a bit strange to see him here, in this context; years ago, he’d been a tech journalist, too, and we’d run in some of the same circles. We’d met for coffee some years ago, around when he’d left journalism to start a comms gig at OpenAI, where he’d do a stint before leaving to co-found Anthropic. At first I wonder if it’s awkward because I’m coming off my second mass layoff event in as many media jobs, and he’s now an executive of a $40 billion company, but then I recall that I’m a member of the press, and he probably just doesn’t want to talk to me.

He said that what AI is doing to labor might get government to finally spark a conversation about AI’s power, and to take it seriously. I wondered—wasn’t his company profiting from selling the automation services that were threatening labor in the first place? Anthropic does not, after all, have to partner with Amazon and sell task-automating software. Clark says that’s a good point, a good question, and they’re gathering data to better understand exactly how job automation is unfolding, and he hopes to be able to make it public. “I want to release some of that data, to spark a conversation,” he said.

I press him about the AGI business, too. Given he is a former journalist, I can’t help but wonder if on some level he doesn’t fully buy the imminent super-intelligence narrative either. But he doesn’t bite. I ask him if he thinks that AGI, as a construct, is useful in helping executives and managers absolve themselves and their companies of actions that might adversely affect people. “I don’t think they think about it,” Clark said, excusing himself.

The contradictions were overwhelming, and omnipresent. Yet relatively few people here were disagreeing. AGI was an inexorable force, to be debated, even wept over, as it risked destroying us all. I do not intend to demean these concerns, just question them, and what’s really going on here. It was all thrown into even sharper relief for me, when, just two weeks after the Curve, I attended a conference in DC on nuclear security, and listened to a former Commander of Stratcom discuss plainly how close we are to the brink of nuclear war, no AI required, at any given time. A phone call would do the trick.

I checked out of the Curve confident that there is no conspiracy afoot in Silicon Valley to convince everyone AI is apocalyptically powerful. I left with the sense that there are some smart people in AI—albeit often with apparently limited knowledge of real-world politics, sociology, or industrial history—who see systems improving, have genuine and deep concerns, and other people in AI who find that deep concern very useful for material purposes. Together, they have cultivated a unique and emotionally charged hyper-capitalist value system with its own singular texture, one that is deeply alienating to anyone who has trouble accepting certain premises. I don’t know if I have ever been more relieved to leave a conference.

The net result, it seems to me, is that the AGI/ASI story imbues the work of building automation software with elevated importance. Framing the rise of AGI as inexorable helps executives, investors, and researchers, even the doom-saying ones, to effectively minimize the qualms of workers and critics worried about more immediate implications of AI software.

You have to build a case, an AI executive said at a widely attended talk at the conference, comparing raising concerns over AGI to the way that the US built its case for the invasion of Iraq.

But that case was built on faulty evidence, an audience member objected.

It was a hell of a demo, though, the AI executive said.

Why AI Disrupts Software First

Om • Om Malik • August 6, 2025

Technology•AI•Software•Startups•Innovation•Essays

A few days ago, when decoding Microsoft CEO Satya Nadella’s memo to his company about layoffs and artificial intelligence, I said that “this is not just about Microsoft, but pretty much every software company will be hit hard by this wave of transformation.” The point I was making in the piece was that AI is coming, and the first domino to fall will be software.

In this excellent conversation between Stripe co-founder John Collison and Anthropic CEO Dario Amodei, the latter eloquently outlines non-financial reasons why the software sector is getting (and will keep getting) disrupted by AI. Anthropic is one of the fastest-growing businesses — with over $4 billion in annual recurring revenue — and one of the key cornerstones of its growth is Claude Code. “We’ve actually managed to make Claude good in a way that’s relevant to what people actually use,” Amodei said in the conversation.

“The people who write code are very socially and technically adjacent to the folks who develop AI models, and so the diffusion is very fast,” Amodei told Collison. “They’re also the kind of people who are early adopters, who are used to new technology.”

“The big growth in code, you know, I would say the biggest cause of that is just that the people doing it and the startups devoted to it are fast adopters who understand the technology super well,” Amodei said.

“I think code is maybe an early indicator,” he said, describing software as “like a premonition of what’s going to happen everywhere else.” Amodei argued that traditional enterprises are slower to change. Large entities such as banks, insurance companies, and pharmaceutical firms have organizational inertia, but in time they will adapt. This vision of widespread AI adoption fuels Amodei’s ambition. He dreams of making Anthropic into a “one-stop shop for AI,” adding, “We think of ourselves as a platform company first.”

“One of the fundamental experiences and uncertainties of working at or running something like Anthropic is you kind of don’t know. You make this exponential projection. It sounds crazy. It might be crazy. But also, it might not be crazy because that trend line has followed before.”

Of all Amodei’s recent interviews, this conversation most clearly outlines where we stand with AI and what the long-term vision is for Anthropic, the maker of Claude and OpenAI’s main competitor. It’s worth watching!

From Knowledge to Action

Tomtunguz • August 7, 2025

Technology•AI•Machine Learning•Workflow Automation•Tool Integration•Essays

GPT-5 represents a significant evolution in artificial intelligence, marking a shift from purely knowledge-based capabilities toward actionable intelligence. While previous models excelled at retrieving and reorganizing information, GPT-5's revolutionary strength lies in its ability to execute tasks through tool-calling and strategic model selection. This advancement establishes a new dimension beyond raw knowledge, allowing AI to move from advice-giving to direct action-taking within complex workflows.

The benchmarks GPT-5 has achieved—94.6% on AIME 2025 and 74.9% on SWE-bench—highlight its exceptional knowledge prowess, but these benchmarks also signal an approaching saturation point where future incremental improvements in knowledge alone yield diminishing returns. The real differentiator for GPT-5 and future models is their capacity to orchestrate workflows and integrate with external systems through tool-calling. This feature mitigates two fundamental limitations of pure language models. First, workflow orchestration: unlike single-shot responses, GPT-5 can manage multi-step, stateful processes, maintain context over multiple operations, handle errors, and keep track of progress. Second, system integration: by calling external tools like databases, APIs, and enterprise software, it can translate natural language commands into executable actions outside the text-only environment of language models.
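To make the orchestration idea concrete, here is a minimal sketch of a multi-step, stateful tool-calling loop. It is not how GPT-5 works internally, which the essay does not describe. The tool names (`lookup_customer`, `create_invoice`), the `WorkflowState` class, and the `run_workflow` helper are all hypothetical, and the "tools" are local stubs standing in for real databases or APIs.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools: local stubs standing in for a database and a billing API.
def lookup_customer(args: dict) -> dict:
    return {"customer_id": 42, "name": args["name"]}

def create_invoice(args: dict) -> dict:
    if args["amount"] <= 0:
        raise ValueError("amount must be positive")
    return {"invoice_id": "INV-1", "customer_id": args["customer_id"]}

TOOLS: dict[str, Callable[[dict], dict]] = {
    "lookup_customer": lookup_customer,
    "create_invoice": create_invoice,
}

@dataclass
class WorkflowState:
    context: dict = field(default_factory=dict)    # results carried between steps
    completed: list = field(default_factory=list)  # progress tracking
    errors: list = field(default_factory=list)     # (tool name, message) pairs

def run_workflow(steps, state=None):
    """Run steps in order, feeding each step the context built so far."""
    state = state or WorkflowState()
    for tool_name, build_args in steps:
        try:
            result = TOOLS[tool_name](build_args(state.context))
        except Exception as exc:
            # Record the failure and stop, so later steps never run on bad state.
            state.errors.append((tool_name, str(exc)))
            break
        state.context.update(result)
        state.completed.append(tool_name)
    return state

steps = [
    ("lookup_customer", lambda ctx: {"name": "Acme"}),
    ("create_invoice", lambda ctx: {"customer_id": ctx["customer_id"], "amount": 100}),
]
state = run_workflow(steps)
print(state.completed, state.context["invoice_id"])
```

The second step depends on the first step's output, which is the difference between a stateful workflow and a single-shot response. In a real system the model would choose the steps; here they are fixed by hand.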

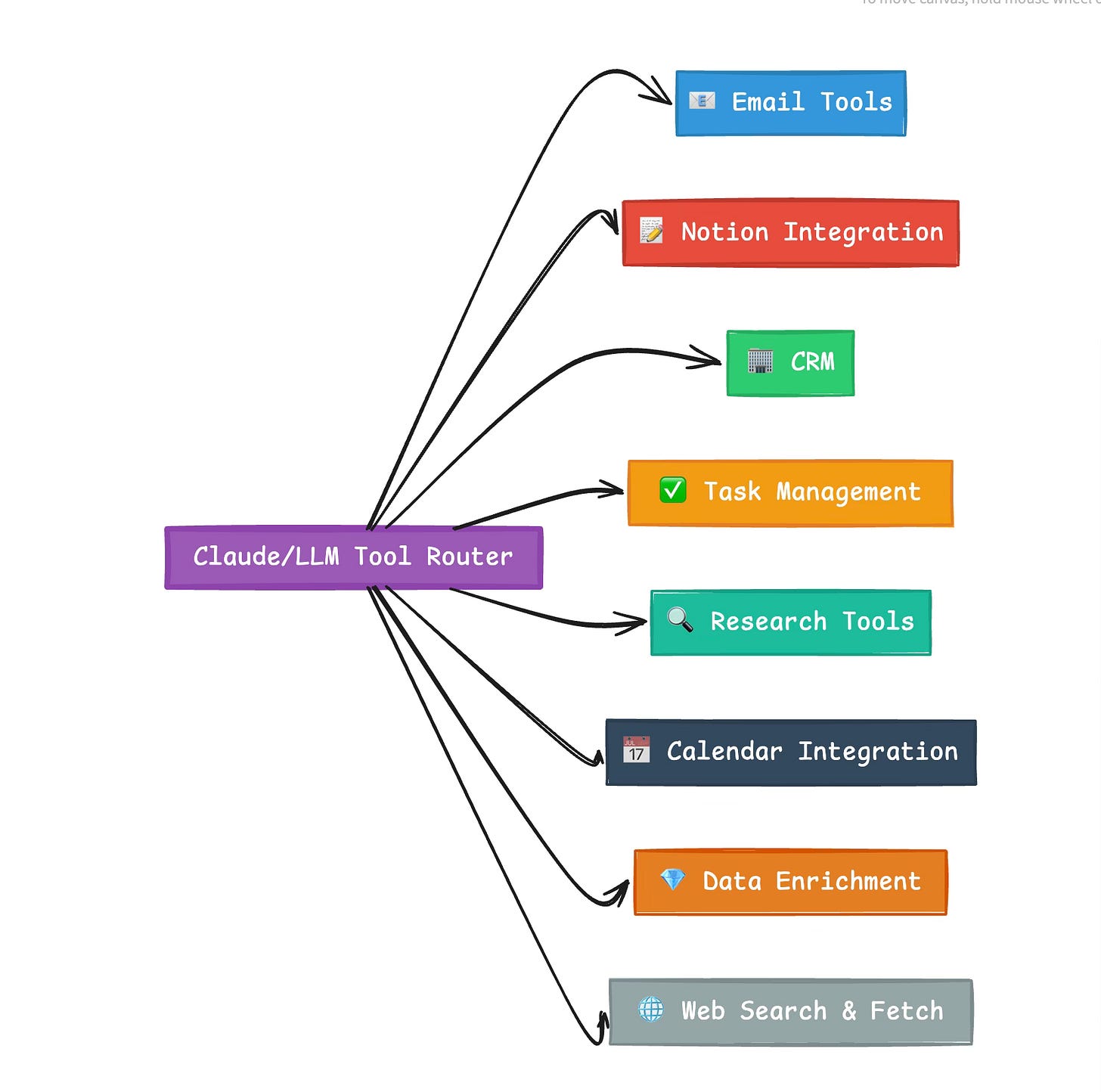

The ability to select the appropriate tool quickly and correctly is critical for robust AI performance. Missteps in tool routing can derail entire workflows, so precision and sophistication in managing tool usage underpin productivity gains. The author, who has personally built 58 different AI tools ranging from email processors and CRM integrations to research assistants, underscores the transformative potential of such workflows at scale. Simple commands, such as analyzing an email to identify startups not in a CRM, can trigger complex automated sequences, replacing what once required lengthy manual work.
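The email-to-CRM example can be sketched in a few lines. This is an illustration under loud assumptions, not the author's tooling: `extract_startups` is a stand-in for model-driven extraction (it only reads `- Name` bullet lines), the CRM is a plain set, and the `ROUTES` table and `route` function are hypothetical. The point is that a router should fail fast on an unknown request rather than guess at a tool.

```python
def extract_startups(email_body: str) -> list[str]:
    """Stand-in for model-driven extraction: reads '- Name' bullet lines."""
    return [line[2:].strip() for line in email_body.splitlines()
            if line.startswith("- ")]

def missing_from_crm(names: list[str], crm: set[str]) -> list[str]:
    """Return the names that are not in the CRM, compared case-insensitively."""
    known = {n.lower() for n in crm}
    return [n for n in names if n.lower() not in known]

# Hypothetical routing table: one intent maps to an ordered pair of tools.
ROUTES = {"find_new_startups": (extract_startups, missing_from_crm)}

def route(intent: str):
    # Refuse unknown intents instead of picking a "closest" tool.
    if intent not in ROUTES:
        raise KeyError(f"no tool route for intent: {intent!r}")
    return ROUTES[intent]

email = "Met these teams at demo day:\n- Acme Robotics\n- Initech\n- Globex\n"
extract, diff = route("find_new_startups")
new_startups = diff(extract(email), crm={"acme robotics", "globex"})
print(new_startups)
```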

Further enhancing reliability is GPT-5’s self-verification loop, which ensures that tasks are completed on time and correctly, bringing automated processes a consistency that is otherwise difficult to achieve. When deployed across large organizations with thousands of workflows and employees, the productivity impact compounds, allowing companies to scale operations efficiently.
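The essay does not say how the self-verification loop is implemented. One common pattern it resembles is generate, check, retry with feedback, sketched below. Every name here (`run_with_verification`, `draft_report`, `check_report`) is hypothetical, and the task and verifier are toy stubs.

```python
def run_with_verification(task, verify, max_attempts=3):
    """Run task, check the result, and retry with the verifier's feedback.

    Returns (result, attempts_used). Raises if no attempt passes.
    """
    feedback = None
    for attempt in range(1, max_attempts + 1):
        result = task(feedback)
        ok, feedback = verify(result)
        if ok:
            return result, attempt
    raise RuntimeError(
        f"verification failed after {max_attempts} attempts: {feedback}"
    )

def draft_report(feedback):
    # Toy task: the first draft omits a field; a retry with feedback adds it.
    report = {"title": "Q3 pipeline"}
    if feedback:
        report["owner"] = "ops"
    return report

def check_report(report):
    # Toy verifier: passes only when every required field is present.
    missing = [k for k in ("title", "owner") if k not in report]
    return (not missing, f"missing fields: {missing}" if missing else None)

report, attempts = run_with_verification(draft_report, check_report)
print(report, attempts)
```

Capping the number of attempts matters: a loop that retries forever trades a wrong answer for a stuck workflow.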

The article concludes that the future AI landscape will reward those who master tool orchestration and query routing, effectively turning managers into "agent managers" who coordinate AI agents rather than manually handling every task. This shift promises not only enhanced efficiency but a fundamental reshaping of how human labor and AI collaboration operate in practice.

Key takeaways:

GPT-5 surpasses traditional benchmarks through strategic tool-calling and multi-model selection.

Knowledge alone is reaching a performance ceiling; actionability distinguishes next-gen AI.

Tool-calling allows AI to orchestrate complex, multi-step workflows and interface with non-text systems.

Precision in selecting the right tool is crucial to avoid workflow failures.

Self-verification loops enhance reliability and trust in AI-driven processes.

Productivity gains amplify dramatically at scale, managing thousands of automated workflows.

Future AI success depends on tool orchestration sophistication and operational predictability, ushering in a new era of AI agent management.

Enough

Benn • Benn Stancil • August 8, 2025

Technology•AI•Wealth•Social Impact•Finance•Essays

At a party given by a billionaire on Shelter Island, Kurt Vonnegut informs his pal, Joseph Heller, that their host, a hedge fund manager, had made more money in a single day than Heller had earned from his wildly popular novel Catch-22 over its whole history. Heller responds, “Yes, but I have something he will never have … enough.”

– from Morgan Housel’s The Psychology of Money, recounting a story from Vanguard founder John Bogle

It’s both everywhere and, somehow, still, nobody knows how to talk about it.

I don’t know what else we would say. I don’t know what we can say, other than what everyone already says: “It’s gotten so crazy,” and, “can you imagine?,” and, “man, that is a lot of money.”

But man, that is a lot of money.

Which one? I can’t keep track. It’s OpenAI, raising money that values the company at $500 billion, which is $200 billion more than its valuation just five months ago—which was, then, the largest private fundraise in history. It’s Meta, adding almost $200 billion to its market cap in a day, only to be outdone by Microsoft going up by $265 billion on the same day. It’s Microsoft, becoming a $4 trillion company, less than seven years after Apple became the first company to reach a measly one trillion. (Even Broadcom—whose website looks like a regional home security provider1—is worth more than that today.) And that all happened over the last week.

The week before, it was Ramp, raising $500 million—million, with an M, how quaint—at a $22.5 billion valuation, less than two months after they raised $200 million at a $16 billion valuation. It was Meta, buying Scale AI’s CEO for $15 billion, or OpenAI, buying Jony Ive for $6 billion. It was Meta again, trying to buy an engineer from Thinking Machines for $250 million a year, and not only getting rejected, but getting rejected for economically rational reasons, because Thinking Machines is currently worth $12 billion, and their executives’ pay packages might already be worth more than Meta’s offers.2 It’s $200 million to poach an Apple executive, and stories about $18 million offers, getting relegated to the final line of a daily beat report.

You become numb to it, until some fresh blockbuster jars you loose again. In one day, Microsoft grew by more than all of Roche ($247 billion), Toyota ($237 billion), IBM ($233 billion), and just a couple AI engineers less than LVMH ($266 billion). In one day, Mark Zuckerberg’s net worth grew by $27 billion—a full Rupert Murdoch; a full Peter Thiel; a full Steve Cohen; a full Jerry Jones and a full Marc Benioff. In a recent column about the OpenAI fundraise, Matt Levine reminded us that 1 basis point of OpenAI—one-hundredth of one percent; 0.01 percent; the amount an average employee gets when they join an average late-stage startup—is worth $50 million.

Those are the numbers now, but I don’t know what to do with any of them.

GPT-5 Hands-On: Welcome to the Stone Age

Latent • August 7, 2025

Technology•AI•Software•Agents•ToolUse•Essays

OpenAI’s long-awaited GPT-5 is here, and early access partners have been testing it in various applications such as raindrop.ai, Cursor, Codex, and Canvas. This model is seen as a significant leap towards artificial general intelligence (AGI), especially excelling in software engineering by solving complex problems and managing large codebases effectively.

However, GPT-5 is not simply "better" at everything. It surprisingly performs worse at writing than previous versions like GPT-4.5 and GPT-4. Instead of fitting conventional expectations, these flaws have reshaped the understanding of AGI development by highlighting the importance of tool use. The Stone Age marked the dawn of human intelligence because humans learned to use tools—extending their capabilities externally, trading internal memory for external aids like writing.

GPT-5 marks a new era for large language models (LLMs) and agents by not just using tools but thinking and building with them. Unlike earlier models that used web search simply as a tool call, GPT-5 conducts deep research by iterating, planning, and exploring online information as part of its thinking process. It can leverage any tool if given the right access, and these tools can be categorized as internal retrieval, web search, code interpreters, or actions that trigger side effects.

A critical feature of GPT-5 is its ability to use tools in parallel effectively, allowing it to operate on longer time horizons and reduce latency, which opens doors for new product possibilities. It requires structured guidance, or a "compass," rather than heavy context loading. This means providing GPT-5 with clear instructions about its environment, such as project purpose, file organization, and evaluation criteria, which helps it onboard complex tasks efficiently.
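Why parallel tool use cuts latency can be shown with a small timing sketch. This is a generic illustration using Python's `asyncio`, not a description of GPT-5's internals. The tool names and delays are invented, and `fake_tool` simply sleeps to simulate a slow call.

```python
import asyncio
import time

async def fake_tool(name: str, delay: float) -> str:
    # Simulated tool call: sleeps instead of hitting a real service.
    await asyncio.sleep(delay)
    return f"{name}: done"

async def run_sequential(calls):
    # Each call waits for the previous one, so delays add up.
    return [await fake_tool(name, delay) for name, delay in calls]

async def run_parallel(calls):
    # All calls start together, so total time is roughly the slowest call.
    return await asyncio.gather(*(fake_tool(name, delay) for name, delay in calls))

async def timed(coro):
    start = time.perf_counter()
    out = await coro
    return out, time.perf_counter() - start

calls = [("search_docs", 0.2), ("web_search", 0.2), ("run_tests", 0.2)]
seq_results, seq_time = asyncio.run(timed(run_sequential(calls)))
par_results, par_time = asyncio.run(timed(run_parallel(calls)))
print(f"sequential {seq_time:.2f}s, parallel {par_time:.2f}s")
```

This only helps when the calls are independent. If one tool needs another's output, those two still have to run in order.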

In coding, GPT-5 demonstrated notable prowess by resolving intricate dependency conflicts quickly and accurately, showcasing behaviors akin to deep research and iterative problem-solving. It also easily generated complex websites and applications with fully functioning features like persistence of user data, outperforming competitors and past models in speed and accuracy.

While GPT-5 is a leap forward in coding and practical tool use, it is not yet strong in creative writing, where preceding models still excel. Overall, GPT-5 represents a foundational step toward more autonomous, intelligent agents that do not just respond but actively use and build with tools, pushing the frontier of what AI can accomplish in real-world applications.