Contents

Essays

Venture

AI

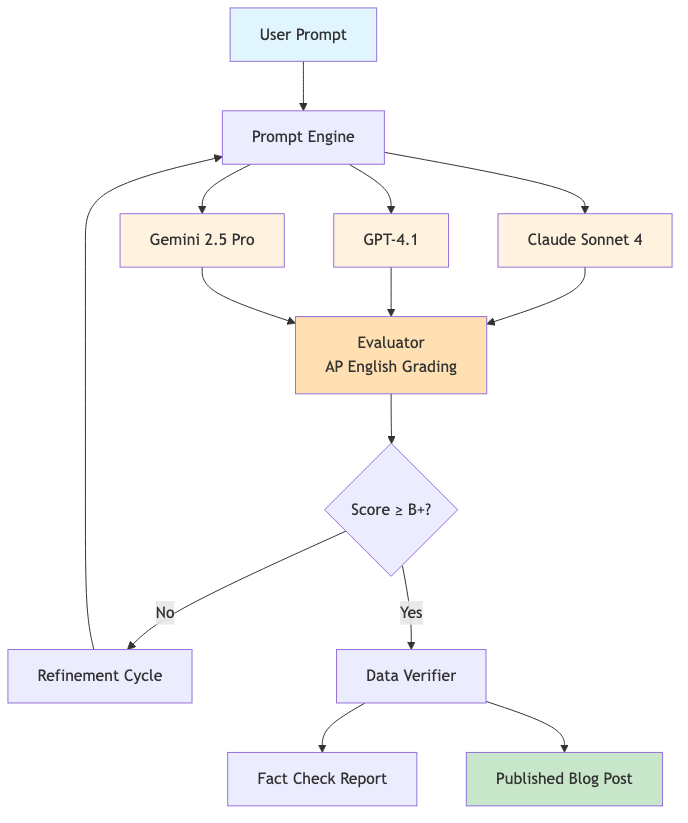

EvoBlog: Building an Evolutionary AI Content Generation System

AGI progress, surprising breakthroughs, and the road ahead — the OpenAI Podcast Ep. 5

Sam Altman addresses ‘bumpy’ GPT-5 rollout, bringing 4o back, and the ‘chart crime’

Context Engineering: Bringing Engineering Discipline to Prompts—Part 1

Anthropic takes aim at OpenAI, offers Claude to ‘all three branches of government’ for $1

Media

Regulation

China

Education

Crypto

Interview of the Week

Startup of the Week

Post of the Week

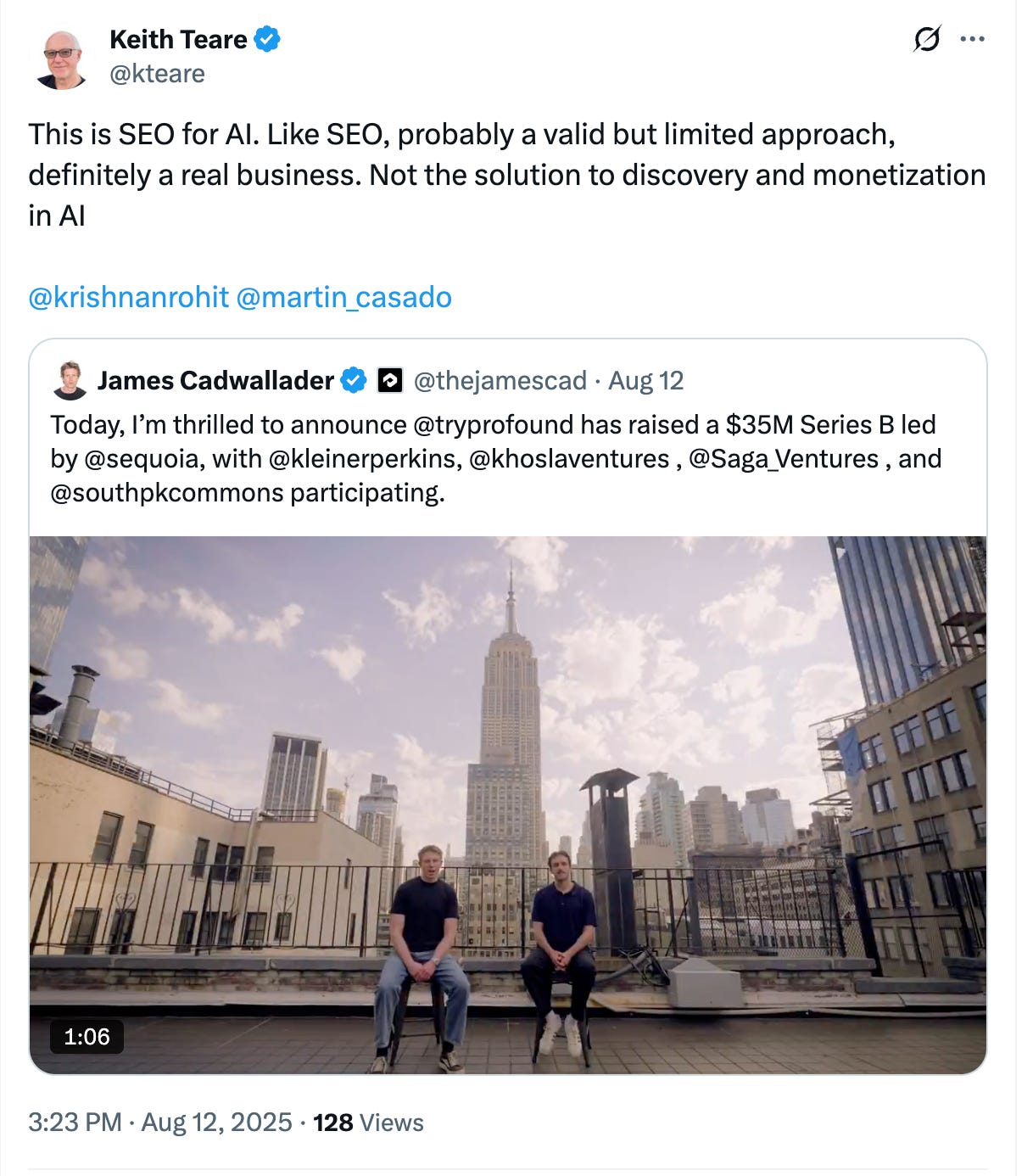

SEO for AI - Really?

Editorial:

When Your AI Breaks Your Heart: The Pain of Change

What happens when your favorite coworker comes in one morning with a new brain and a different personality? Or worse still, your life partner.

This week OpenAI chat users begged for 4o back, reporting that GPT-5 felt “off,” less helpful, less itself. As Sam Altman acknowledged in addressing the “bumpy” rollout, even OpenAI underestimated how deeply users had bonded with their AI companion’s specific personality. Many, particularly women who had come to treat 4o as a kind of boyfriend substitute, experienced the update as a genuine loss. A relationship had ended.

The subsequent week turned into a crisis-level PR effort for Altman and his team. GPT-4o was resurrected as a surviving alternative. Relationships could continue. But the question hangs over the week: was this the week everything changed, or the week nothing changed?

The Pain of Change

There is little doubt that GPT-5 is technically “better” than its predecessors. But users are forming bonds with AI personalities and react badly to inconsistency. They “lost a friend.”

The deeper issue is leadership. Altman proved this week he is no Elon Musk. Musk pushes through pain and criticism to stick to his vision. Altman, by contrast, slowed down, backtracked, and tried to soothe. That may look like good crisis management, but it revealed a softness at the very top of OpenAI. For a company whose stated goal is artificial superintelligence, such sensitivity to feedback raises questions about whether it has the stomach for the road ahead.

Change Everywhere, Or Nowhere?

The “pain of change” theme played out across this week’s stories. Stanford, heart of Silicon Valley, stuck with legacy admissions—a decision that looks like the week nothing changed. Meanwhile, O’Reilly’s Mike Loukides described a profound shift: from prompting to context engineering, where success depends on shaping AI’s inputs with precision. That looks like the week everything changed.

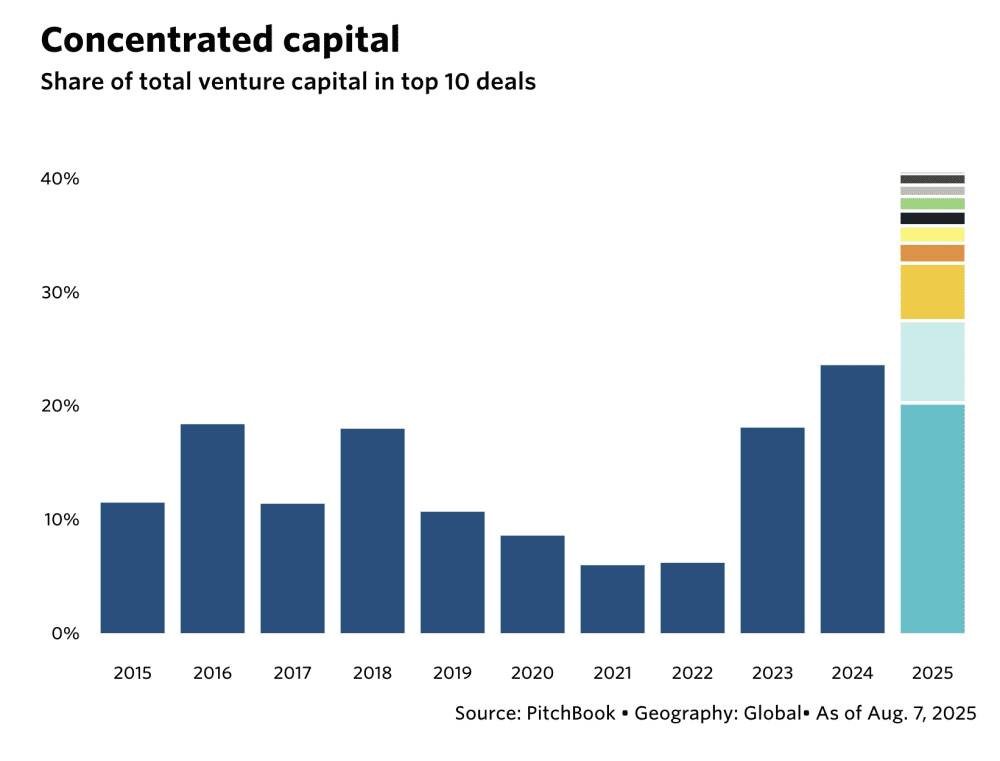

Venture capital tells the same dual story. Over 40% of dollars now flow into just 10 deals. This is not new—it’s the power law at work, a law of capitalism as much as venture. Winners win big, and everyone else struggles. Inequality widens, but technology continues to lift all boats. The same structural concentration that drives Anthropic and OpenAI into dominance also fuels opportunity for those who can own a meaningful piece of the future.

Regulation and Resistance

Resistance to change isn’t limited to users mourning lost AI personalities. Governments are intervening too. In the UK, age verification rules cut Pornhub traffic dramatically, showing how state regulation can reshape digital behavior overnight. If regulators can force every Briton to prove their age before watching video, AI will inevitably face its own set of controls. That reality makes debates about whether AI is “safe” or “aligned” somewhat secondary; governments will shape usage whether engineers want them to or not.

Browsers, Business Models, and Perplexity

Cloudflare CEO Matthew Prince warned that AI may be killing the web, and Perplexity’s audacious $34.5 billion offer for Google Chrome seemed to prove his point. But here, too, the truth is double-edged. On the one hand, Chrome’s value is immense—Google pays Apple $20 billion a year just to power Safari defaults, which makes $34 billion look laughably low. On the other, the very premise of buying a browser may be backward-looking. If AI interfaces replace browsers as the primary way we access information, Chrome’s centrality could fade.

What is clear is that AI is liberating content from the tyranny of URLs and browser tabs. Just as Spotify freed music from CDs and Netflix freed movies from DVDs, AI is freeing information from the browser. What matters is not the pipe, but the quality of the content and the fairness of its monetization.

This is why “SEO for AI” (see post of the week) is a contradiction in terms. Manipulating prompts to inject links degrades intelligence. The real prize is context engineering: supplying AI with authentic, structured, useful data. That’s why startups like Torch—which integrates years of health records into actionable insight—point to the real future.

Hinton, Hope, and Friction

Even Geoffrey Hinton, Nobel laureate and AI “godfather,” oscillates between hope and fear. In his interview this week, he estimated a 20% chance AI wipes out humanity—before admitting he was guessing. His proposal to give AI “maternal instincts” reflected cultural wishcasting more than technical realism.

The truth is starker: AI is built, trained, and bounded by engineers. It cannot evolve outside its programming. To the extent Hinton focuses on “guardrails” rather than innovation, he risks becoming a friction point rather than a change agent—turning himself into an irrelevance in the very field he helped found.

The Story We’re Writing

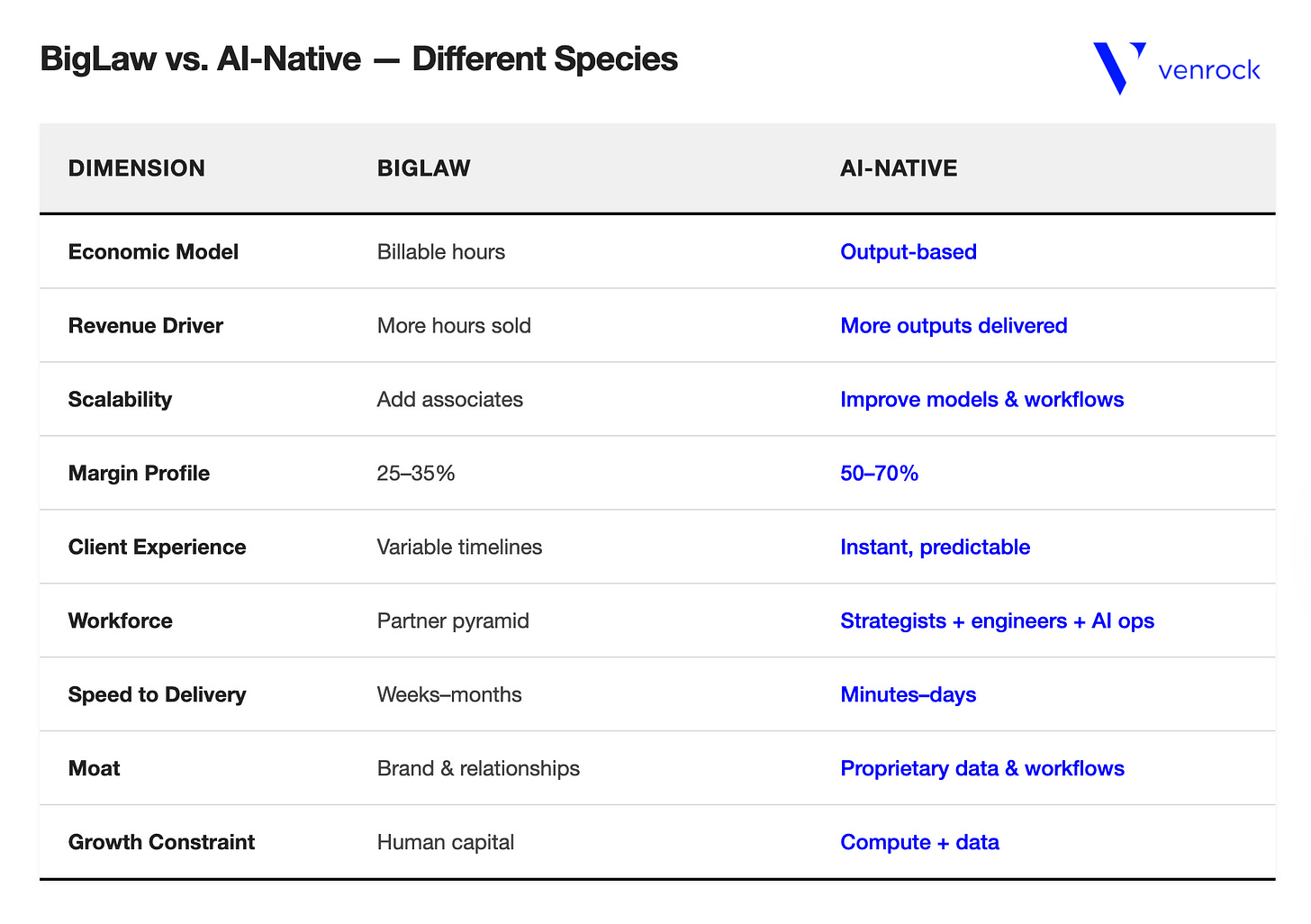

Storytelling may be dismissed as buzzword fluff, but it matters. Humans make meaning through narrative. One story of the week says “nothing changed”—legacy admissions endure, VC remains concentrated, the power law persists. The other story says “everything changed”—AI is redefining interfaces, repricing the $900 billion legal sector, reshaping how content is monetized, and turning even health records into insight. Both are true, depending on how you frame the week.

And that is the real lesson of GPT-4o’s brief “death” and resurrection. Users felt heartbreak because they had become attached to one particular story of AI—the friendly companion. OpenAI’s stumble revealed that the real story is larger, more disruptive, and sometimes more uncomfortable.

We are not passive consumers of this technology. We are participants, shaping what excellence, authenticity, and value mean in an AI-native world. The heartbreak of losing GPT-4o is real—but fleeting. The changes bearing down on us are far bigger.

Essays

The Abstractions, They Are a-Changing

Oreilly • August 12, 2025

AI•Abstraction•Context Engineering•LLMs•Essays

Since ChatGPT arrived, it’s been clear that computing was in for a major shift. It has taken a few years to grasp the outlines, but the picture is coming into focus—and it doesn’t look like a world where “we won’t need to program anymore.” The real question is what, exactly, we will need.

Martin Fowler has called this the biggest leap in abstraction since high‑level languages. If you’ve ever written assembly, you know what that first leap meant: instead of crafting machine instructions, you wrote in FORTRAN, COBOL, BASIC, and later C. Modern languages have improved enormously, but the conceptual distance from Rust to early FORTRAN is tiny compared with FORTRAN to assembler. That earlier era introduced a fundamental shift: formulas and control structures replaced opcodes and memory flags.

Language models represent a shift just as large. We no longer require small, precisely specified programming languages reserved for specialists. We can use natural language—vast vocabulary, flexible syntax, and productive ambiguity—to describe what we want computers to do, without spelling out every step. This isn’t merely “vibe coding,” though it does enable breathtakingly fast demos. Nor does it eliminate programmers. People who never learn to code will interact with computers more fluently, but we will still need practitioners who can translate between human intent and machine behavior, decompose complex problems, and steer systems when they go off course—when the model hallucinates, fixates on an error, or stalls. Those problems won’t evaporate; real software still demands professional judgment.

This change in abstraction alters what developers do. Expect more emphasis on testing, up‑front design, and reading and analyzing machine‑generated code. We’ve moved from simple code completion to interactive assistance to agentic coding. And yet a deeper shift lurks beneath the prompt, one we’re only beginning to see.

A few years ago, everyone talked about “prompt engineering,” often meaning simple framing tricks. Models have improved, but prompts that mediate software’s interaction with AI still matter; that more serious side of prompt engineering isn’t going away.

More recently, attention has turned to the layer under the prompt: context—the conversation history, what the model knows about your project, what it can retrieve or discover via tools, and even what it infers about you across interactions. Understanding and managing this has emerged as context engineering.

Context engineering must also account for failure modes. The job of managing context while co‑coding differs from designing context flows for agentic systems where chains of tool calls amplify errors. They’re related, but as different as “explain it with horses” is from reformatting a request with documents pulled via retrieval‑augmented generation.

Death of the Billable Hour: Legal’s $900B AI Repricing

Venrock • Ethan Batraski • August 12, 2025

AI•Work•Billable Hours•Big Law•Outcome Based Pricing•Essays

The legal industry’s last great inefficiency is ending. How AI-native firms will replace billable hours with outcome-based pricing at scale.

The legal industry represents one of the last great market inefficiencies in the modern economy. While every other sector discovered that speed and efficiency create competitive advantage, BigLaw built a $900 billion empire on the opposite insight: that scarcity and time consumption signal quality. They’ve trained clients to equate hours billed with value delivered, creating the only major industry where productivity gains threaten profitability.

This isn’t an accident—it’s a carefully constructed economic moat that has insulated legal services from normal market forces for decades.

But all monopolies eventually face their reckoning. When the marginal cost of diligence, drafting, or research approaches zero, pricing based on time becomes indefensible. Every other industry where technology erased time as the primary cost driver—trading, advertising, parts of consulting—saw incumbents lose pricing power overnight. Law is the last great holdout.

AI is about to end it.

The Billable Hour’s Last Stand

The traditional $900B legal services model relies on three foundational principles that AI fundamentally transforms:

Price tied to inputs (hours worked, not outcomes delivered)

Scale driven by associate leverage (junior lawyers billing at senior rates)

Efficiency as enemy (faster work means lower revenue)

This structure has survived for decades because it benefits everyone except the client. Partners maximize profits, Associates get training, and firms avoid the risk of outcome-based pricing. The client pays for this elaborate theater of productivity.

Within each practice area, 30–60% of billable hours (estimates based on Associates vs Partner billable hours) go to repeatable, rules-based tasks—the exact work AI can compress by 50–90%. A $1.5B firm generating $500M in billables from tasks that AI can reduce to minutes faces an existential math problem. Even with conservative adoption, that’s a $250M revenue line under direct pressure.
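The arithmetic behind that figure can be sketched in a few lines. This is a back-of-the-envelope illustration only: the function name is hypothetical, and it assumes hourly pricing stays fixed so that every hour AI removes is an hour of revenue lost.

```python
def revenue_at_risk(automatable_billables: float, compression: float) -> float:
    """Revenue exposed if pricing stays hourly and AI removes `compression`
    (a fraction between 0 and 1) of the hours spent on automatable tasks."""
    if not 0.0 <= compression <= 1.0:
        raise ValueError("compression must be between 0 and 1")
    return automatable_billables * compression


FIRM_REVENUE = 1_500_000_000   # the $1.5B firm from the text
AUTOMATABLE = 500_000_000      # $500M of billables on repeatable tasks

# The text cites a 50-90% compression range; 50% is the conservative end.
low = revenue_at_risk(AUTOMATABLE, 0.50)
high = revenue_at_risk(AUTOMATABLE, 0.90)

print(f"Conservative case: ${low / 1e6:.0f}M at risk "
      f"({low / FIRM_REVENUE:.0%} of firm revenue)")
print(f"Aggressive case:   ${high / 1e6:.0f}M at risk "
      f"({high / FIRM_REVENUE:.0%} of firm revenue)")
```

The conservative case reproduces the $250M figure quoted above; the aggressive case shows how much larger the exposure becomes at the top of the compression range.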

For legacy firms, AI creates an impossible choice:

Adopt AI → hours fall → revenue falls

Resist AI → clients defect to faster, cheaper competitors

Without pricing transformation, AI adoption creates a utilization crisis: too many associates for too little work.

Who will actually profit from the AI boom?

Noahpinion • August 10, 2025

AI•Capex•Competition•Valuations•Essays

In a post a week ago, I shared some pretty startling numbers about the size of the AI-related capex boom.

In fact, this boom is so big that in 2025 so far, AI-related investment has contributed more to economic growth than all the growth in consumer spending combined. Since consumption is more than three times as big as investment overall, this is a really startling fact — it means that consumption is sluggish, while AI capex is sustaining economic growth all by itself. Paul Kedrosky calls this a “private sector stimulus program”, and he’s not wrong.

My last post asked whether a crash in the AI sector would hurt the U.S. economy. But there’s another important question here, which is who is actually going to make a profit from all this spending. Will it be the AI model companies themselves, like OpenAI, xAI, and Anthropic? Will it be the companies that provide the compute to train and run the AI models — Amazon, Microsoft, and Google? Will it just be the GPU companies like Nvidia that provide the physical infrastructure?

The profit question is an important one if you’re an investor, of course, since corporate valuations are (usually) based on how much profit companies make — not on how much they invest or how much total revenue they generate. But it’s also important if we want to understand the social impact of the AI boom — in particular, the question of whether AI will lead to extreme economic inequality.

There’s a narrative out there that after AI takes everyone’s jobs, the only people in society who will have money are the people who own the AI companies — the Sam Altmans and Elon Musks of the world, and perhaps the Satya Nadellas and Jensen Huangs. It’s possible to spin sci-fi scenarios where the mass of humanity is impoverished and starving, while a few Robot Lords order their pet AI gods to use all of Earth’s resources to colonize the Solar System.

In reality, those scenarios would run into political problems (i.e., war) long before they came to pass. But it’s important to ask whether that’s the direction in which our economic system is naturally heading. Thomas Piketty, for instance, wrote that inequality in society tends to increase until some sort of major political event — war, revolution, etc. — forces it back down. Some people worry whether the AI boom will represent the fulfillment of that dark vision.

It’s worth noting that so far, stock markets don’t actually expect anything that extreme to happen. When you look at the price-to-earnings ratios of the major public AI-related companies, they’re somewhat high but not particularly astronomical. As for OpenAI, xAI, and Anthropic, their combined valuation is still less than $1 trillion; for comparison, Nvidia’s current valuation is around $4.5 trillion. As for the broader market, the PE ratio of the S&P 500 is around 30, which is historically somewhat high but not astronomically high.

So we seem to have a disconnect between a popular narrative and market expectations. If AI is going to make all the money in the economy, why are markets not expecting companies to see truly wondrous profit growth? The answer, I think, is that markets are remembering something that popular commentary and folklore have forgotten: the importance of corporate competition in limiting capital income. Investors know that AI companies are going to compete with each other, and that this is going to limit how much they can profit from their creations.

We need to 80x private market transactions

Beehiiv • The Brief • August 13, 2025

Regulation•USA•Essays

Last week's Executive Order allows alternatives in 401(k)s… but democratization will break private markets unless we fix their infrastructure first.

It directs regulators to dismantle the Department of Labor’s guidance that has kept 90 million defined-contribution accounts, with over $9 trillion in assets, locked out of private equity, credit, real estate, and infrastructure.

The headlines focus on "access." The real story is scale.

Even a modest 2-3% allocation from the DC system means tens of billions in new capital annually. If allocations rise to 20% or more, as many predict, flows could exceed $1 trillion in just three years.

And there is an even bigger story

DC access coincides with private markets entering managed account platforms, the technology that lets advisors customize portfolios—often through model portfolios—for millions of individual clients. These platforms control $15T in assets. Combined with the newly accessible 401(k) market, private markets now face a $24T addressable market expansion.

Unlike individual investment decisions, these hybrid portfolios with pre-set asset allocation will combine public and private assets in a single portfolio that thousands or millions of accounts follow systematically. When the model changes, such as adding a 5% allocation to private credit, every linked account rebalances instantly. What was once hundreds of brokerage trades per day across the industry becomes hundreds of thousands in a single overnight event.

To understand the scale: on a volatile day in public markets, a single model portfolio change might trigger hundreds of thousands of automated rebalancing transactions across a major platform.

Now imagine adding private credit allocations to those same model portfolios. Every rebalancing event becomes a flood of subscription documents hitting infrastructure built for manual processing.

In other words: $24T in new private market transactions won’t be driven by individual investors placing brokerage trades one at a time.

They’ll be driven by model portfolios, the operating system of modern wealth management.
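To make the fan-out concrete, here is a minimal sketch of a model-portfolio change cascading into per-account orders. Everything in it is an illustrative assumption: the `Account` class, the asset names, the 60/40 starting model, and the 1,000-account platform are hypothetical and not taken from any real wealth platform.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Account:
    account_id: str
    value: float                 # total account value in dollars
    holdings: Dict[str, float]   # asset -> current portfolio weight


def rebalance_orders(account: Account,
                     model: Dict[str, float]) -> List[Tuple[str, str, float]]:
    """Return one (account_id, asset, dollar_delta) order for every asset
    whose target weight differs from the account's current weight."""
    orders = []
    for asset in sorted(set(account.holdings) | set(model)):
        delta = model.get(asset, 0.0) - account.holdings.get(asset, 0.0)
        if abs(delta) > 1e-9:
            orders.append((account.account_id, asset,
                           round(delta * account.value, 2)))
    return orders


# Hypothetical model change: carve a 5% private credit sleeve out of bonds.
old_model = {"public_equity": 0.60, "public_bonds": 0.40}
new_model = {"public_equity": 0.60, "public_bonds": 0.35, "private_credit": 0.05}

accounts = [Account(f"acct-{i}", 100_000.0, dict(old_model)) for i in range(1000)]
orders = [order for acct in accounts for order in rebalance_orders(acct, new_model)]
private_subscriptions = [o for o in orders if o[1] == "private_credit"]

print(f"{len(orders)} orders, of which {len(private_subscriptions)} "
      f"are private credit subscriptions")
```

One edit to the model produces one private-market subscription per linked account, which is the volume problem the article describes: the public-market legs settle electronically, while each private leg traditionally arrives as a document.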

….

Can We Save The Web From AI? — With Cloudflare CEO Matthew Prince

Youtube • Alex Kantrowitz • August 13, 2025

Media•Publishing•Cloudflare•AI•MatthewPrince•Essays

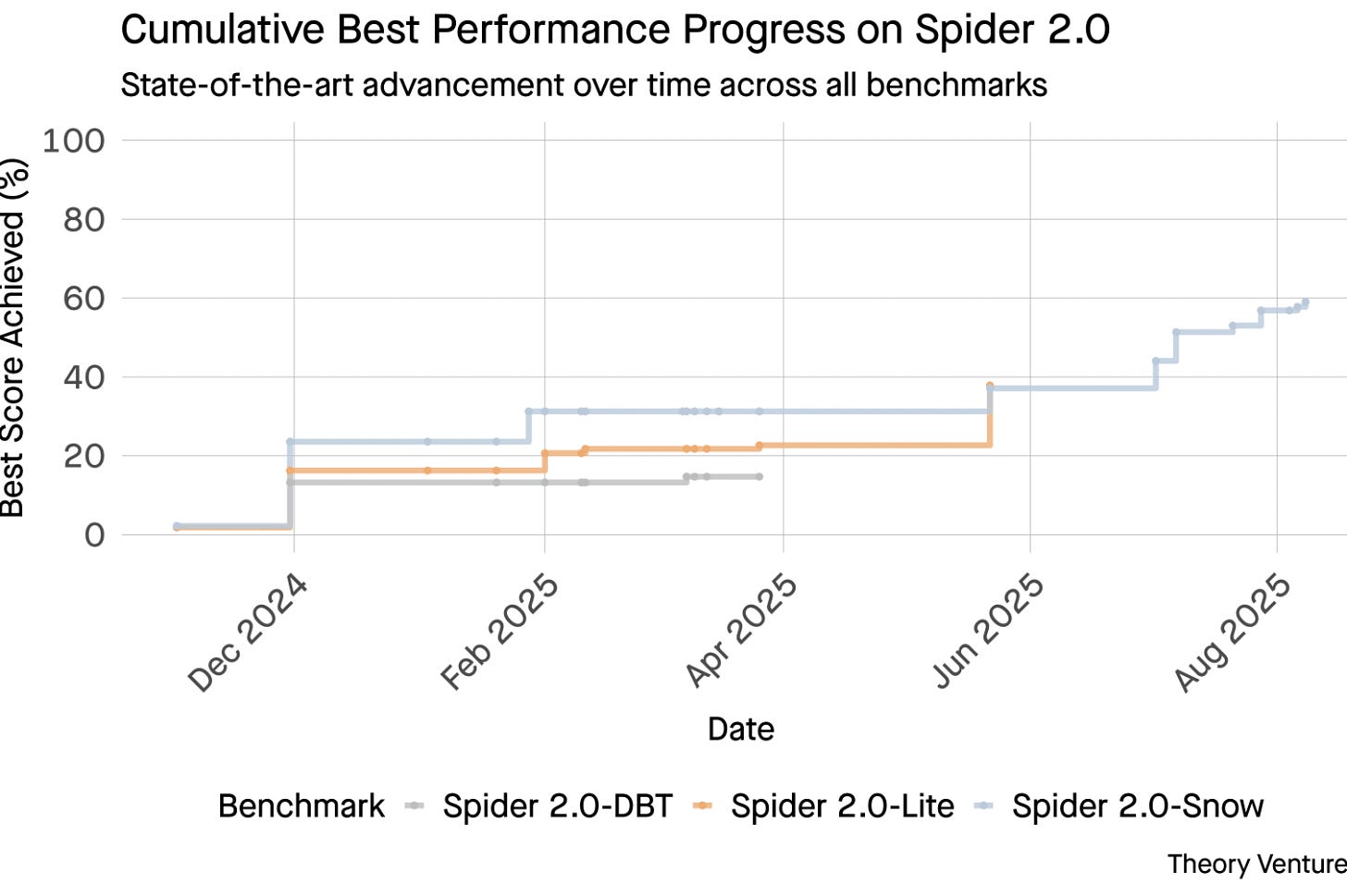

Why AI Can't Crack Your Database

Tomtunguz • campaign spend • August 12, 2025

AI•Data•TextToSQL•Spider•Essays

GPT-5 achieves 94.6% accuracy on AIME 2025, suggesting near-human mathematical reasoning.

Yet ask it to query your database, and success rates plummet to the teens.

The Spider 2.0 benchmarks reveal a yawning gap in AI capabilities. Spider 2.0 is a comprehensive text-to-SQL benchmark that tests AI models’ ability to generate accurate SQL queries from natural language questions across real-world databases.

While large language models have conquered knowledge work in mathematics, coding, and reasoning, text-to-SQL remains stubbornly difficult.

The three Spider 2.0 benchmarks test real-world database querying across different environments. Spider 2.0-Snow uses Snowflake databases with 547 test examples, peaking at 59.05% accuracy.

Spider 2.0-Lite spans BigQuery, Snowflake, and SQLite with another 547 examples, reaching only 37.84%. Spider 2.0-DBT tests code generation against DuckDB with 68 examples, topping out at 39.71%.

This performance gap isn’t for lack of trying. Since November 2024, 56 submissions from 12 model families have competed on these benchmarks.

Claude, OpenAI, DeepSeek, and others have all pushed their models against these tests. Progress has been steady, from roughly 2% to about 60%, in the last nine months.

The puzzle deepens when you consider SQL’s constraints. SQL has a limited vocabulary compared to English, which has 600,000 words, or to programming languages, which have much broader syntaxes and libraries to learn. Plus there’s plenty of SQL out there to train on.

If anything, this should be easier than the open-ended reasoning tasks where models now excel.

Yet even perfect SQL generation wouldn’t solve the real business challenge. Every company defines “revenue” differently.
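A small sketch shows why syntactically perfect SQL is not enough. The table, its columns, and the three definitions of revenue below are hypothetical; the point is only that three valid queries over the same rows give three different answers to "what was revenue?"

```python
import sqlite3

# In-memory database with a toy orders table (schema is an assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    amount REAL,
    status TEXT,
    refunded REAL
);
INSERT INTO orders (amount, status, refunded) VALUES
    (100.0, 'paid',   0.0),
    (250.0, 'paid',   50.0),
    (400.0, 'booked', 0.0);
""")

# Three plausible business definitions of the same word.
REVENUE_DEFINITIONS = {
    "bookings": "SELECT SUM(amount) FROM orders",
    "cash_collected": "SELECT SUM(amount) FROM orders WHERE status = 'paid'",
    "net_of_refunds": "SELECT SUM(amount - refunded) FROM orders WHERE status = 'paid'",
}

results = {name: conn.execute(sql).fetchone()[0]
           for name, sql in REVENUE_DEFINITIONS.items()}

for name, total in results.items():
    print(f"{name:>15}: {total:.2f}")
```

Each query is correct SQL. Which one is the right answer depends on context the model cannot see in the schema alone.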

….

Venture

Venture Has Never Been More Concentrated: 40%+ of VC $$$ Going to Just 10 Deals

Saastr • Jason Lemkin • August 11, 2025

Venture

What’s happening in venture capital right now? At some level, if you’re not one of the chosen few mega-startups … you’re essentially competing for table scraps.

Because the Age of AI has led to not just massive rounds being raised, but those massive dollars going to the fewest companies ever. Per Pitchbook’s latest data: 41% of all venture capital dollars deployed in the U.S. this year have gone to just 10 companies. Read that again. Ten companies. Out of thousands seeking funding.

This isn’t just concentration—it’s a fundamental restructuring of how venture capital works.

The Math Founders Need to Understand

Here’s what 41% concentration actually means in practice:

$81.3 billion has flowed to just 10 startups out of $197.2 billion total VC deployment in 2025

This represents a 75% increase from the share awarded to the top 10 companies in 2024

It’s the highest concentration we’ve seen in the last decade

Eight of these ten companies are AI-focused

To put this in perspective: OpenAI alone raised $40 billion—the largest single financing event in venture capital history. That’s more than many entire years of VC activity in previous decades.

The top three AI companies (OpenAI, xAI, and Anthropic) collectively raised $65 billion—nearly one-third of all venture dollars this year.
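The shares quoted above can be checked directly from the dollar figures. In this sketch the 2025 inputs come from the text, while the 2024 share is only inferred, on the assumption that "75% increase" means a relative increase in the top-10 share; it is not a reported number.

```python
# Dollar figures in billions, as quoted in the text.
TOTAL_VC_2025 = 197.2
TOP_10_DEALS = 81.3
TOP_3_AI = 65.0          # OpenAI, xAI, and Anthropic combined

top10_share = TOP_10_DEALS / TOTAL_VC_2025
top3_share = TOP_3_AI / TOTAL_VC_2025
everyone_else = 1.0 - top10_share

# Assumption: "a 75% increase from the share" is relative, so the
# implied 2024 share is this year's share divided by 1.75.
implied_2024_share = top10_share / 1.75

print(f"Top 10 share of 2025 VC dollars: {top10_share:.1%}")
print(f"Top 3 AI companies' share:       {top3_share:.1%}")
print(f"Left for everyone else:          {everyone_else:.1%}")
print(f"Implied 2024 top-10 share:       {implied_2024_share:.1%}")
```

The first two lines reproduce the 41% and "nearly one-third" figures. The implied 2024 share, roughly a quarter of all dollars, is a derived estimate and should be read as such.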

Why This Concentration Is Accelerating

AI Infrastructure Requires Unprecedented Capital

Unlike previous technology cycles where millions could fund meaningful progress, frontier AI development demands billions. Training large language models, building compute infrastructure, and acquiring the necessary talent requires capital at scales that would have been unimaginable even five years ago.

Scale AI’s $14.3 billion round from Meta (giving Meta a 49% non-voting stake) exemplifies this new reality. When Meta can justify a $14+ billion investment for specialized AI infrastructure, we’re playing an entirely different game.

The Power Law Has Gone Exponential

Venture has always operated on power law dynamics—one big win covers many losses. But now the “big wins” are so massive they’re creating a bifurcated market:

Mega-funds with billions in assets under management chase mega-deals

Boutique funds fight over the remaining 59% of capital spread across thousands of companies

First-time fund managers raised a combined $1.8 billion across 44 funds—less than half of what Founders Fund alone raised ($4.6 billion)

….

What is venture capital’s core product? Can it scale?

Signalrank update • August 14, 2025

Venture

For an industry focused on disruption, there is remarkably little innovation within venture capital itself. Arthur Rock, the OG VC behind Fairchild, Intel & Apple, could don a Patagonia gilet and feel comfortable within most investment committees today.

The product offered by VCs has not changed. Floodgate’s Mike Maples observed that a VC’s product at its core is its decision-making engine. There is ordinarily no such thing as proprietary deal flow and many investors add minimal value. The uniqueness of a partnership comes down to the strength, culture, and dynamism of a firm’s decision-making process. LPs are buying a firm’s process; the results should take care of themselves.

When viewed through this prism, VC cannot scale. It remains an apprenticeship-based artisanal craft, not a commodifiable & industrialized data-driven product. In fact, adding additional humans to an investment committee is likely to make for worse decisions, not better.

VCs have liked to integrate sourcing, capital & value-add services with this core decision-making process. It’s the whole product, packaged as one for LPs. But the history of technology, at least as told by Clay Christensen, is that modularity beats integration every time: “the transition from proprietary architecture to open modular architecture just happens over and over again.”

What if you applied this logic to venture capital? What if you outsourced decision-making? What if you separated decision-making from sourcing, capital & value add services? What if technology was applied to the venture capital industry itself?

Jim Barksdale, the former CEO of Netscape, is credited with saying, “there's only two ways I know of to make money in business: bundling and unbundling”. In this post, we are going to use the framework of unbundling/rebundling to consider how venture capital could be rebuilt for scale.

The unbundlers

There is an infamous conversation between Marc Andreessen & Jim Barksdale with Harvard Business School that considers how distribution technologies enable bundling & rebundling. They discuss how the internet frees distribution from the constraints of “time and place.”

The proliferation of digitally-distributed data has finally started to lead to the unbundling of venture capital, freeing it from similar constraints of time and place.

We are going to consider three different models of unbundling VC: solo GPs, guilds & AI models.

Solo GPs

LPs have historically backed partnerships of multiple GPs, where diversity of opinion, complementary skillsets and access to differentiated networks should improve outcomes. Not to mention the ability to diligence more opportunities by adding additional bodies.

Yet the relative importance of an individual’s brand within a partnership has been increasing for a while. Correlation Ventures argued in 2017 that the value of a firm is no more than the sum of its partners. Founders are keen to work with specific individuals (who are ideally ex-operators, not Excel jockeys) instead of specific firms. This has coincided with the professionalization of angel investing and, of course, the growth of social media. Contrary’s Kyle Harrison has an admirable series on the current unbundling of venture capital where he focuses on the rise of solo GPs.

….

What It Really Takes to IPO | Dave Chen, Co-Head of Global Tech Investment Banking at Morgan Stanley

Youtube • Carta • August 14, 2025

Regulation•IPOs•IPOReadiness•MorganStanley•Carta•Venture Capital•Venture

The Hard Truth About SaaS Revenue Durability: 80% of Post-IPO Companies Don’t Compound in Value

Saastr • Jason Lemkin • August 12, 2025

Venture

Bottom Line Up Front: The math on SaaS investing is … tough. Even “good” bets from 2021’s growth surge are stalling out because fast-growing revenue often doesn’t compound as expected at scale. With roughly 80% of post-IPO SaaS companies failing to meaningfully compound in value, investors must identify the top 10% of winners—a challenge that’s even harder pre-IPO when revenue durability is less proven.

The 2021 Hangover: When Good Bets Go Bad

The SaaS investment landscape of 2021 feels like a different universe. Companies were scaling at breakneck speed, valuations soared, and it seemed like every high-growth SaaS company was destined for greatness. Fast forward to today, and many investors are sitting on portfolios full of companies that weren’t necessarily “bad” bets—they were logical investments in rapidly growing businesses that simply hit a wall.

The issue isn’t that these companies failed outright. It’s that their revenue stopped compounding at the scale investors expected. A company racing from $10M to $100M ARR might look unstoppable, but the journey from $100M to $500M ARR often tells a very different story.

The Brutal Math of Post-IPO Performance

Here’s the sobering reality that every SaaS investor needs to internalize: approximately 80% of companies that go public don’t meaningfully compound in value over the long term. This isn’t about failed startups or obvious disasters—this is about companies that successfully reached the public markets and still couldn’t deliver the exponential returns investors hoped for.

This mathematical reality creates a harsh selection pressure: you need to identify and hold the top 10% of IPO-bound companies to capture the real value creation in SaaS. The challenge? Making that distinction when companies are still private and their revenue durability hasn’t been tested at massive scale.

Global Unicorn Count Tops 1,600, With 13 Additions In July

Crunchbase • August 13, 2025

Venture

Thirteen companies spanning sectors from AI to energy and professional services joined The Crunchbase Unicorn Board in July, while five had exits, a hopeful signal for the hundreds of well-funded companies stabled on the board.

The board also reached a new milestone last month: It now includes more than 1,600 unicorn startups, or private companies currently valued at $1 billion or more, according to Crunchbase data.

Among the 13 new companies, six are U.S.-based, two are from Sweden, and one each hail from France, China, Singapore, UAE and Saudi Arabia. Leading sectors for new unicorns in July were AI apps and infrastructure, e-commerce and healthcare.

Five exits

Last month also posted five unicorn exits, signaling that more liquidity could be on the horizon from the board, which still holds a collective valuation just under $6 trillion.

Three unicorns went public in July: San Francisco-based design collaboration platform Figma, Beijing-based autonomous robotics company Geek+, and Atlanta-based insurtech Accelerant.

Two companies were also acquired. California-based Windsurf was bought by AI code generation startup Cognition for an undisclosed price after an earlier planned $3 billion acquisition by OpenAI fell apart, and Texas-based Iodine Software, which aims to improve payment outcomes for hospitals, was acquired by Waystar for $1.25 billion.

AI

EvoBlog: Building an Evolutionary AI Content Generation System

Tom Tunguz • August 13, 2025

AI•Publishing•LLMs•ContentGeneration•EvolutionaryComputation

One of the hardest mental models to break is how disposable AI-generated content is.

When asking AI to generate one blog post, why not just ask it to generate three, pick the best, use that as a prompt to generate three more, and repeat until you have a polished piece of content?

This is the core idea behind EvoBlog, an evolutionary AI content generation system that leverages multiple large language models (LLMs) to produce high-quality blog posts in a fraction of the time it would take using traditional methods.

The post below was generated using EvoBlog in which the system explains itself.

– Imagine a world where generating a polished, insightful blog post takes less time than brewing a cup of coffee. This isn’t science fiction. We’re building that future today with EvoBlog.

Our approach leverages an evolutionary, multi-model system for blog post generation, inspired by frameworks like EvoGit, which demonstrates how AI agents can collaborate autonomously through version control to evolve code. EvoBlog applies similar principles to content creation, treating blog post development as an evolutionary process with multiple AI agents competing to produce the best content.

The process begins by prompting multiple large language models (LLMs) in parallel. We currently use Claude Sonnet 4, GPT-4.1, and Gemini 2.5 Pro - the latest generation of frontier models. Each model receives the same core prompt but generates distinct variations of the blog post. This parallel approach offers several key benefits.

First, it drastically reduces generation time. Instead of waiting for a single model to iterate, we receive multiple drafts simultaneously. We’ve observed sub-3-minute generation times in our tests, compared to traditional sequential approaches that can take 15-20 minutes.
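The parallel fan-out described here might look something like the sketch below. It is an assumption-laden illustration, not EvoBlog's code: the model names are placeholders, and `make_stub_model` fakes an API call with a short sleep so the example runs without network access or credentials.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def make_stub_model(name: str, delay: float) -> Callable[[str], str]:
    """Build a fake model client; the sleep stands in for API latency."""
    def generate(prompt: str) -> str:
        time.sleep(delay)
        return f"[{name}] draft for: {prompt}"
    return generate


# Placeholder names; a real system would wrap actual provider SDK calls.
MODELS: Dict[str, Callable[[str], str]] = {
    "model_a": make_stub_model("model_a", 0.05),
    "model_b": make_stub_model("model_b", 0.05),
    "model_c": make_stub_model("model_c", 0.05),
}


def generate_drafts(prompt: str) -> Dict[str, str]:
    """Send the same prompt to every model at once and collect all drafts."""
    with ThreadPoolExecutor(max_workers=len(MODELS)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in MODELS.items()}
        return {name: future.result() for name, future in futures.items()}


start = time.perf_counter()
drafts = generate_drafts("Why evolutionary search helps writing")
elapsed = time.perf_counter() - start

for name, draft in drafts.items():
    print(name, "->", draft)
print(f"wall time: {elapsed:.2f}s for {len(drafts)} drafts")
```

Threads suit this workload because each call spends its time waiting on the network, so total wall time tends toward the slowest single call and not the sum of all three.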

Second, parallel generation fosters diversity. Each LLM has its own strengths and biases. Claude Sonnet 4 excels at structured reasoning and technical analysis. GPT-4.1 brings exceptional coding capabilities and instruction following. Gemini 2.5 Pro offers advanced thinking and long-context understanding. This inherent variety leads to a broader range of perspectives and writing styles in the initial drafts.

Next comes the evaluation phase. We employ a unique approach here, using guidelines similar to those used by AP English teachers. This ensures the quality of the writing is held to a high standard, focusing on clarity, grammar, and argumentation. Our evaluation system scores posts on four dimensions: grammatical correctness (25%), argument strength (35%), style matching (25%), and cliché absence (15%).
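The four weights above, combined with the generate, select, and repeat loop from the introduction, can be sketched as follows. Only the weights come from the text. The `evolve` function, the toy generator, and the toy grader are hypothetical stand-ins for real LLM calls and a real rubric.

```python
from typing import Callable, Dict, Tuple

# Rubric weights as stated in the post; they sum to 100%.
WEIGHTS = {"grammar": 0.25, "argument": 0.35, "style": 0.25, "cliche_absence": 0.15}


def weighted_score(scores: Dict[str, float]) -> float:
    """Combine per-dimension scores (0-100) into one weighted score."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing rubric dimensions: {sorted(missing)}")
    return sum(WEIGHTS[dim] * scores[dim] for dim in WEIGHTS)


def evolve(seed: str,
           generate: Callable[[str, int], str],
           evaluate: Callable[[str], Dict[str, float]],
           rounds: int = 3,
           population: int = 3) -> Tuple[str, float]:
    """Each round: generate `population` children from the current best,
    score them, and carry the highest scorer into the next round."""
    best_text, best_score = seed, float("-inf")
    for _ in range(rounds):
        parent = best_text
        for variant in range(population):
            child = generate(parent, variant)
            score = weighted_score(evaluate(child))
            if score > best_score:
                best_text, best_score = child, score
    return best_text, best_score


def toy_generate(parent: str, variant: int) -> str:
    # Stand-in for an LLM rewrite: tag the draft with its variant number.
    return f"{parent}+{variant}"


def toy_evaluate(draft: str) -> Dict[str, float]:
    # Stand-in for the grader: arbitrarily rewards drafts containing more 2s.
    base = min(100.0, 50.0 + 10.0 * draft.count("2"))
    return {dim: base for dim in WEIGHTS}


example = weighted_score(
    {"grammar": 90, "argument": 80, "style": 70, "cliche_absence": 60})
best_text, best_score = evolve("seed", toy_generate, toy_evaluate)
print(f"example rubric score: {example:.1f}")
print(f"winner after 3 rounds: {best_text} (score {best_score:.1f})")
```

Because argument strength carries the largest weight, a draft that argues well but leans on a few clichés can still outrank a cleaner but thinner one, which seems to be the intent of the weighting.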

….

AGI progress, surprising breakthroughs, and the road ahead — the OpenAI Podcast Ep. 5

Youtube • OpenAI • August 15, 2025

AI•Tech•AGI•Breakthroughs•OpenAIPodcast

Sam Altman addresses ‘bumpy’ GPT-5 rollout, bringing 4o back, and the ‘chart crime’

Techcrunch • Julie Bort • August 8, 2025

AI•Tech•GPT

During a Reddit ask-me-anything session, OpenAI CEO Sam Altman and members of the GPT-5 team fielded a barrage of questions about the new model’s early performance and pleas to restore the previous GPT-4o option. Participants pressed Altman on why the rollout felt rough, and raised the now-infamous “chart crime” from the launch presentation.

A major change with GPT-5 is a real-time router that selects which model handles a prompt—opting for speed or taking more time to “think” for tougher queries. That system stumbled at launch, undermining user trust in the upgrade.

AMA commenters reported GPT-5 felt less capable than 4o. Altman attributed that perception to the router malfunction during Thursday’s rollout and said fixes were already underway. He pledged improvements to the decision boundary and more transparency about which model is responding, adding that “GPT-5 will seem smarter starting today.”

Many users also lobbied for the return of GPT-4o, at least for Plus subscribers. Altman said the team is evaluating how to let Plus users continue using 4o while weighing tradeoffs. To ease the transition, he committed to doubling rate limits for Plus customers as the rollout completes so users can explore the new model without worrying about hitting monthly caps.

Predictably, Altman was asked about the launch-day bar chart that visually inverted a lower benchmark score into a taller bar, spawning jokes about “chart crime.” He didn’t address it in the AMA, but on X called it a “mega chart screwup,” and others noted the charts in the published blog post were corrected.

Altman closed by emphasizing a focus on stability and responsiveness to feedback, promising continued work to address the issues users care about most and to keep listening as the rollout proceeds.

How AI could create the first one-person unicorn

Economist • August 11, 2025

AI•Work•Generative AI•Grief Tech•Funeral Planning

Overview

A nontechnical founder, Sarah Gwilliam, conceives a generative-AI startup after the recent loss of her father. Motivated by the twin burdens of grief and administrative complexity, she imagines a service that helps people navigate mourning while coordinating the myriad tasks that follow a death—“wedding planning for funerals.” Although she admits she doesn’t “speak AI,” the idea suggests how modern generative tools could enable domain experts and caregivers, not just engineers, to build targeted solutions for high‑stakes, emotionally charged life events.

The Problem Space

Families face simultaneous emotional and logistical challenges: arranging memorials, notifying contacts, and closing accounts while processing loss.

Knowledge gaps and time pressure can compound stress, especially for those unfamiliar with legal, financial, and ceremonial requirements.

Fragmented vendors and information sources create coordination friction, increasing the risk of missed deadlines and costly errors.

Proposed AI-Driven Solution

Conversational guidance that adapts to a family’s traditions and preferences, translating complex tasks into step‑by‑step checklists.

Automated coordination with service providers (venues, caterers, celebrants) and document workflows (death certificates, account closures).

Tone‑aware support that recognizes sensitive moments, offers empathetic phrasing, and calibrates reminders to avoid overwhelming users.

Why It Matters

Demonstrates how generative AI can lower technical barriers, allowing lived experience—rather than coding expertise—to drive product vision.

Targets a persistent, universal need: end‑of‑life logistics recur for every household, suggesting durable demand for trustworthy assistance.

Highlights the shift from general‑purpose AI demos to vertical, outcome‑focused tools that solve specific pains where timing and accuracy matter.

Implications and Considerations

Trust and safety: Users will expect strong privacy safeguards, clear data handling, and the ability to opt out of sensitive predictions.

Cultural and legal nuance: Effective solutions must reflect diverse rituals and comply with jurisdiction‑specific rules for estates and funerals.

Human‑in‑the‑loop: Even with automation, families may want expert oversight at key decision points to ensure empathy and correctness.

Key Takeaways

A personal loss catalyzes a product vision that merges logistics and compassion.

Generative AI can empower non‑engineers to design specialized services.

Success will hinge on sensitivity, compliance, and reliable execution across vendors and documents.

Context Engineering: Bringing Engineering Discipline to Prompts—Part 1

Oreilly • August 11, 2025

AI•Tech•ContextEngineering

The following is Part 1 of 3 from Addy Osmani’s original post “Context Engineering: Bringing Engineering Discipline to Prompts.”

Context Engineering Tips: To get the best results from an AI, you need to provide clear and specific context. The quality of the AI’s output directly depends on the quality of your input.

How to improve your AI prompts:

Be precise: Vague requests lead to vague answers. The more specific you are, the better your results will be.

Provide relevant code: Share the specific files, folders, or code snippets that are central to your request.

Include design documents: Paste or attach sections from relevant design docs to give the AI the bigger picture.

Share full error logs: For debugging, always provide the complete error message and any relevant logs or stack traces.

Show database schemas: When working with databases, a screenshot of the schema helps the AI generate accurate code for data interaction.

Use PR feedback: Comments from a pull request make for context-rich prompts.

Give examples: Show an example of what you want the final output to look like.

State your constraints: Clearly list any requirements, such as libraries to use, patterns to follow, or things to avoid.

Prompt engineering was about cleverly phrasing a question; context engineering is about constructing an entire information environment so the AI can solve the problem reliably. As applications grew more complex, the limitations of focusing only on a single prompt became obvious. The field is coalescing around “context engineering” as a better descriptor for getting great results from AI: constructing the entire context window an LLM sees—not just a short instruction, but all the relevant background, examples, and guidance needed for the task. The phrase gained momentum among practitioners in mid-2025, reflecting a shift from one-off prompt tricks to designing robust systems that bring in memory, knowledge, tools, and data in an organized way.
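One way to picture "constructing the entire context window" is a small builder that gathers the pieces from the tips above into one structured prompt. This is a minimal sketch, not a method from the original post: the `ContextBundle` class, its field names, and the section labels are all assumptions for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class ContextBundle:
    """Hypothetical container for the context handed to a model."""
    task: str
    constraints: list = field(default_factory=list)
    code_snippets: dict = field(default_factory=dict)  # path -> source text
    error_log: str = ""
    example_output: str = ""

    def render(self) -> str:
        # Emit only the sections that were actually provided, in a fixed order.
        parts = [f"## Task\n{self.task}"]
        if self.constraints:
            lines = "\n".join(f"- {c}" for c in self.constraints)
            parts.append(f"## Constraints\n{lines}")
        for path, src in self.code_snippets.items():
            parts.append(f"## Code: {path}\n{src}")
        if self.error_log:
            parts.append(f"## Full error log\n{self.error_log}")
        if self.example_output:
            parts.append(f"## Example of desired output\n{self.example_output}")
        return "\n\n".join(parts)

bundle = ContextBundle(
    task="Fix the crash when the cart is empty.",
    constraints=["Do not add new dependencies"],
    code_snippets={"cart.py": "def total(items):\n    return sum(i.price for i in items) / len(items)"},
    error_log="ZeroDivisionError: division by zero",
)
prompt = bundle.render()
print(prompt)
```

Keeping the assembly in code rather than in an ad hoc string makes it easier to see which pieces of context a given request actually included.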

Anthropic offers Claude chatbot to US lawmakers for $1

Ft • August 12, 2025

AI•Tech•Anthropic•Claude•US Government

Anthropic will offer its Claude artificial intelligence chatbot to US lawmakers for $1, in a push to seed adoption of generative AI across Washington and gain a foothold in public-sector technology procurement.

The initiative, available for one year, gives congressional offices access to enterprise-grade versions of Claude with additional security and administrative controls suited to government use. It follows recent approvals that allow major AI systems to be used by federal agencies for sensitive but unclassified work.

Anthropic’s move mirrors a similar $1 offer made by OpenAI to executive-branch agencies last week and comes as Google explores comparable terms for its Gemini chatbot. The discounts reflect intensifying competition among leading AI developers to become the preferred provider to government, a market seen as both influential and lucrative over the long term.

The company says the programme is intended to help officials draft and summarise documents, analyse large volumes of material and respond to constituent queries while maintaining safeguards. It also positions Anthropic alongside rivals as policymakers debate how best to regulate advanced AI systems and assess their potential risks and benefits to public administration.

The offers arrive amid heightened scrutiny of AI in Washington, where concerns about bias, safety and election integrity are prompting calls for clearer standards. By lowering the barrier to adoption, leading AI groups hope to familiarise officials with their tools and shape future procurement decisions once introductory pricing lapses.

Anthropic takes aim at OpenAI, offers Claude to ‘all three branches of government’ for $1

Techcrunch • Rebecca Bellan • August 12, 2025

AI•Tech•Anthropic•OpenAI•FedRAMP High

Just a week after OpenAI announced it would offer ChatGPT Enterprise to the entire federal executive branch workforce at $1 per year per agency, Anthropic has raised the stakes. The AI giant said Tuesday it would also offer its Claude models to government agencies for just $1 — but not only to the executive branch. Anthropic is targeting “all three branches” of the U.S. government, including the legislative and judiciary branches.

The package will be available for one year, says Anthropic.

The move comes after OpenAI, Anthropic, and Google DeepMind were added to the General Services Administration’s list of approved AI vendors that can sell their services to civilian federal agencies. TechCrunch has reached out to Google to see if it plans to respond to Anthropic’s and OpenAI’s challenges in kind.

Anthropic’s escalation — a response to OpenAI’s attempt to undercut the competition — is a strategic play meant to broaden the company’s foothold in federal AI usage.

“We believe the U.S. public sector should have access to the most advanced AI capabilities to tackle complex challenges, from scientific research to constituent services,” Anthropic said in a statement. “By combining broad accessibility with uncompromising security standards, we’re helping ensure AI serves the public interest.”

Anthropic will offer both Claude for Enterprise and Claude for Government. The latter supports FedRAMP High workloads so that federal workers can use Claude for handling sensitive unclassified work, according to the company.

FedRAMP High is a stringent security baseline within the Federal Risk and Authorization Management Program (FedRAMP) for handling unclassified sensitive government data.

Anthropic will also provide technical support to help agencies integrate AI tools into their workflows, according to the company.

Media

Storytelling Will Be the Skill of the Future

Youtube • Uncapped with Jack Altman • August 11, 2025

Media•Social

Who is Talking to My Users?

Tomtunguz • August 10, 2025

Media•Publishing•GoogleSearch•PewResearch•Cloudflare

In 1999, the dotcoms were valued on traffic. IPO metrics revolved around eyeballs.

Then Google launched AdWords, an ad model predicated on clicks, & built a $273b business in 2024.

But that might all be about to change : Pew Research’s July 2025 study reveals users click just 8% of search results with AI summaries, versus 15% without - a 47% reduction. Only 1% click through from within AI summaries.

Cloudflare data shows AI platforms crawl content far more than they refer traffic back : Anthropic crawls 32,400 pages for every 1 referral, while traditional search engines scan content just a couple times per visitor sent.

The expense of serving content to the AI crawlers may not be huge if it’s mostly text.

The bigger point is AI systems disintermediate the user & publisher relationship. Users prefer aggregated AI answers over clicking through websites to find their answers.

It’s logical that most websites should expect less traffic. How will your website & your business handle it?

Regulation

Americans, Be Warned: Lessons From Reddit’s Chaotic UK Age Verification Rollout

Eff • Molly Buckley • August 8, 2025

Regulation•Europe•Online Safety Act•Age Verification•Free Speech

Overview and Thesis

The UK’s Online Safety Act (OSA) has ushered in nationwide age verification requirements that extend far beyond pornography, forcing platforms to gate large swaths of content and pushing users to surrender sensitive identity data or leave. The article argues the rollout has been chaotic and overbroad—most vividly on Reddit—illustrating how age checks erode privacy, chill speech, and paradoxically increase risk to the very users they purport to protect. The core warning: if such mandates spread, especially to the U.S., they will function as sweeping censorship regimes.

What Changed on Reddit—and Why It Matters

On July 25, UK Reddit users found numerous communities suddenly locked behind age gates. Under new policies, users must submit a government ID and/or a live selfie to Persona, Reddit’s third‑party verification vendor. The restrictions hit not only sexual content but also LGBTQ+ identity and support forums, global news and conflict reporting, and health-related spaces such as r/periods, r/stopsmoking, and r/sexualassault. Even benign or educational groups—r/vexillology, r/worldwar2, r/poker, r/earwax, r/popping, and r/rickroll—were blocked for unverified users. Faced with heavy fines and vague compliance rules, platforms defaulted to overcensoring. As the article puts it, “Every user in the country is now faced with a choice: submit their most sensitive data… or stay off of Reddit entirely.”

Collateral Damage Beyond Major Platforms

The OSA’s burdens are hardest on smaller sites and forums, some of which have shuttered or curtailed services rather than risk penalties. The episode underscores a long-standing concern: stringent, ill-defined duties push intermediaries to err on the side of removal and gating. Reddit is a noteworthy case because of its prior commitments—defending Section 230, endorsing the Santa Clara Principles, and historically supporting user privacy and speech. If even a relatively rights-forward platform overcensors to comply, the broader internet—dominated by less rights-conscious actors—will fare worse.

Rollout Chaos and Technical Failures

Backlash was swift. VPN usage in the UK surged, more than 500,000 people signed a repeal petition, and users demonstrated trivial bypasses—such as video game face filters and meme images—tricking the verification AI. Reports proliferated of bugs and friction: repeated ID prompts, lockouts, and moderators unable to view or assess content, including safe-for-work submissions. Even assuming workarounds are patched, the systemic problems remain: automated age estimation is error-prone and discriminatory; many people, including teens, lack IDs or dedicated devices; and verification funnels users to data brokers, expanding surveillance risks.

Why the Safety Rationale Backfires

The article emphasizes a counterintuitive effect: by walling off mainstream, moderated services, age checks will push determined teens and unverified users toward the internet’s shadows—spaces with weaker moderation and more hazards, including CSAM and non-consensual material. In this sense, “age verification mandates… are censorship regimes,” and their net effect is to “increase the risk of harm,” not reduce it. The measures also risk excluding millions from vital communities for health, identity, and civic engagement—areas where access can be protective.

Implications for the United States

The UK experiment is a proving ground for global rollouts. In the U.S., age verification debates have moved beyond porn toward broader categories—e.g., LGBTQ+ and reproductive health content—using “protect the children” framing as a Trojan horse. Nearly half of U.S. states already impose some online age restrictions, and a recent Supreme Court action has opened the door wider to sexual-content age blocks. Federal proposals like KOSA could replicate the UK’s outcomes at scale, threatening First Amendment access to lawful speech for all users, including minors.

What’s at Stake and What to Do

The trajectory points to a future of pervasive identity checks, larger data trails, and algorithmic gatekeeping of lawful information. The article urges proactive civic action: contact federal and state representatives to oppose age verification mandates, educate peers about the UK’s experience, and support coalitions defending online privacy, security, anonymity, and expression.

Key Takeaways

Age verification under the OSA is sweeping, affecting far more than explicit content.

Reddit’s overcensoring illustrates the compliance incentives facing all platforms.

The rollout is plagued by technical failures, evasion, and moderator friction.

Safety claims are undermined by displacement to riskier, unmoderated spaces.

U.S. policy proposals risk importing the same censorship dynamics unless challenged.

Perplexity Offers $34.5 Billion for Google Chrome

Bloomberg • August 12, 2025

Regulation•USA•Antitrust•Google•Chrome

AI startup Perplexity said it made an unsolicited bid for Google’s Chrome browser for $34.5 billion. The Trump administration is pushing for Google to sell the Chrome browser after a federal judge found Google has an illegal monopoly in internet search. Google is not selling Chrome. Bloomberg's Seth Fiegerman reports on "Bloomberg Technology."

What to Know About Trump’s Plan to Take 15% of AI Chip Sales to China

Wsj • Amrith Ramkumar • August 11, 2025

Regulation•USA•Nvidia•AMD•ExportControls

The decision to charge Nvidia and AMD a portion of the sales from their AI chips to China opens up legal and national-security concerns.

What is Chrome Worth?

Tom Tunguz • August 11, 2025

Venture•Regulation

Perplexity AI just made a $34.5b unsolicited offer for Google’s Chrome browser, attempting to capitalize on the pending antitrust ruling that could force Google to divest its browser business.

Comparing Chrome’s economics to Google’s existing Safari deal reveals why $34.5b undervalues the browser.

Google pays Apple $18-20b annually to remain Safari’s default search engine¹, serving approximately 850m users². This translates to $21 per user per year.

The Perplexity offer values Chrome at $34.5b, which is about $10 per user for its 3.5b users³.

If Chrome users commanded the same terms as the Google/Apple Safari deal, the browser’s annual revenue potential would exceed $73b.

This data is based on public estimates but is an approximation.

This assumes that Google would pay a new owner of Chrome a similar scaled fee for the default search placement. Given a 5x to 6x market cap-to-revenue multiple, Chrome is worth somewhere between $172b and $630b, a far cry from the $34.5b offer.
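The arithmetic behind these figures can be reproduced from the numbers quoted above. The sketch below uses only those inputs, with variable names chosen for illustration. Applied to the roughly $73b revenue figure, a 5x to 6x multiple gives about $368b to $441b, which falls inside the article's wider $172b to $630b range; the wider bounds presumably rest on assumptions not stated here.

```python
# Inputs quoted in the article (public estimates, approximate).
safari_payment_low, safari_payment_high = 18e9, 20e9  # USD per year
safari_users = 850e6
chrome_users = 3.5e9

# Implied payment per Safari user per year.
per_user_low = safari_payment_low / safari_users
per_user_high = safari_payment_high / safari_users
print(f"per Safari user: ${per_user_low:.1f} to ${per_user_high:.1f}")

# The article rounds to $21 per user per year and applies it to Chrome.
rate = 21
chrome_revenue = rate * chrome_users
print(f"Chrome revenue potential: ${chrome_revenue / 1e9:.1f}b")

# Apply the stated 5x to 6x market cap-to-revenue multiple.
valuations = {m: m * chrome_revenue for m in (5, 6)}
for multiple, value in valuations.items():
    print(f"{multiple}x multiple: ${value / 1e9:.1f}b")
```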

Chrome dominates the market with 65% share⁴, compared to Safari’s 18%. A divestment would throw the search ads market into upheaval. The value of keeping advertiser budgets is hard to overstate for Google’s market cap & position in the ads ecosystem.

If forced to sell Chrome, Google would face an existential choice. Pay whatever it takes to remain the default search engine, or watch competitors turn its most valuable distribution channel into a cudgel against it.

How much is that worth? A significant premium to a simple revenue multiple.

China

China’s vision for a driverless future is miles ahead of everyone else’s

Rest of world • August 12, 2025

China•Technology•Autonomous Vehicles•Robo taxis•Regulation

At the glitzy Auto Shanghai show in April this year, the message was clear: China is making the cars of the future, and that future will be increasingly electrified, connected, and autonomous.

At the world’s biggest car expo, domestic players — once dismissed as knockoffs — were the stars: BYD’s premium Denza, Huawei’s luxury sedan, Pony.ai’s next‑generation robotaxis.

They were marketed less like cars and more like smartphones, packed with ultrafast charging technology, facial recognition systems, and self‑driving features.

With these players, China’s autonomous vehicle industry is nearing what some have called its “ChatGPT moment” — a tipping point where breakthrough technology goes mainstream. It is already the world’s largest auto market and a global leader in electric and autonomous vehicles.

More self‑driving cars are tested there than anywhere else. By 2030, a fifth of new cars sold in China will be fully driverless, and 70% will feature advanced assisted‑driving technology, according to the China Society of Automotive Engineers.

China currently leads in ADAS and robotaxis: tech and auto firms from Baidu to XPeng are rolling out AI‑powered driver‑assistance in consumer cars and fleets; over half of cars sold carry Level 2 systems, and five firms run 2,300 robotaxis across 30 cities, versus about 700 in five U.S. cities.

Several factors drive this momentum — above all, strong government backing and infrastructure investment. National policy treats AVs as a strategic industry, while cities like Beijing, Shanghai, and Shenzhen set up pilot zones, offer subsidies, fast‑track permits, and upgrade roads for driverless navigation.

Education

Stanford sticks with legacy admissions

Techcrunch • August 10, 2025

Education•Universities•Legacy Admissions•Standardized Testing•AB

Stanford said its fall 2026 admissions will continue to consider legacy status, keeping an advantage for applicants with alumni ties. The university is also ending its test-optional policy and will again require SAT or ACT scores for the first time since 2021.

According to the Stanford Daily, the school is prepared to withdraw from California’s Cal Grant program rather than comply with a new state law that bans legacy admissions (Assembly Bill 1780). Stanford says it will replace the lost state aid with its own institutional funding.

As the launchpad for many of Silicon Valley’s most prominent founders and executives, Stanford’s stance could help preserve access advantages for the children of the tech elite, reinforcing a pipeline into influential networks that have powered multiple startup booms.

Reinstating standardized testing adds another layer to the debate. Supporters argue test requirements help maintain academic standards; critics contend they privilege students with greater access to test preparation and resources, undermining claims of meritocracy and widening inequities.

Stanford had already signaled last year that it would reverse its 2021 move to drop testing requirements. The decision to keep legacy consideration surfaced in newly posted admissions criteria, confirming that family connections will remain part of the holistic review.

The policies arrive against a backdrop of heavy dependence on alumni philanthropy across higher education. At Stanford, donations flow either into The Stanford Fund for immediate spending on operations and financial aid or, more often, into the university’s endowment managed by Stanford Management Company. The endowment’s annual payout—around 5%—covers roughly 22% of the university’s operating budget, underscoring why alumni relationships remain financially consequential.

Crypto

The Future of Crypto | Brian Armstrong, CEO of Coinbase

Youtube • Uncapped with Jack Altman • August 13, 2025

Crypto•Blockchain•Future Of Crypto

Interview of the Week

AI Godfather Geoffrey Hinton Warns That We're Creating "Alien Beings" That "Could Take Over"

Keenon • Andrew Keen • August 13, 2025

AI•Tech•Geoffrey Hinton•Existential Risk•Superintelligence•Interview of the Week

So will AI wipe us out? According to Geoffrey Hinton, the 2024 Nobel laureate in physics, there's about a 10-20% chance of AI being humanity's final invention. Which, as the so-called Godfather of AI acknowledges, is his way of saying he has no more idea than you or I about its species-killing qualities. That said, Hinton is deeply concerned about some of the consequences of an AI revolution that he pioneered at Google. From cyber attacks that could topple major banks to AI-designed viruses, from mass unemployment to lethal autonomous weapons, Hinton warns we're facing unprecedented risks from technology that's evolving faster than our ability to control it. So does he regret his role in the invention of generative AI? Not exactly. Hinton believes the AI revolution was inevitable—if he hadn't contributed, it would have been delayed by perhaps a week. Instead of dwelling on regret, he's focused on finding solutions for humanity to coexist with superintelligent beings. His radical proposal? Creating "AI mothers" with strong maternal instincts toward humans—the only model we have for a more powerful being designed to care for a weaker one.

Nobody Really Knows the Risk Level: Hinton's 10-20% extinction probability is essentially an admission of complete uncertainty. As he puts it, "the number means nobody's got a clue what's going to happen" - but it's definitely more than 1% and less than 99%.

Short-Term vs. Long-Term Threats Are Fundamentally Different: Near-term risks involve bad actors misusing AI (cyber attacks, bioweapons, surveillance), while the existential threat comes from AI simply outgrowing its need for humans - something we've never faced before.

We're Creating "Alien Beings" Right Now: Unlike previous technologies, AI represents actual intelligent entities that can understand, plan, and potentially manipulate us. Hinton argues we should be as concerned as if we spotted an alien invasion fleet through a telescope.

The "AI Mothers" Solution: Hinton's radical proposal is that instead of trying to keep AI submissive (which won't work when it's smarter than us), we should engineer strong maternal instincts into AI systems - the only model we have of powerful beings caring for weaker ones.

Superintelligence Is Coming Within 5-20 Years: Most leading experts believe human-level AI is inevitable, followed quickly by superintelligence. Hinton's timeline reflects the consensus among researchers, despite the wide range.

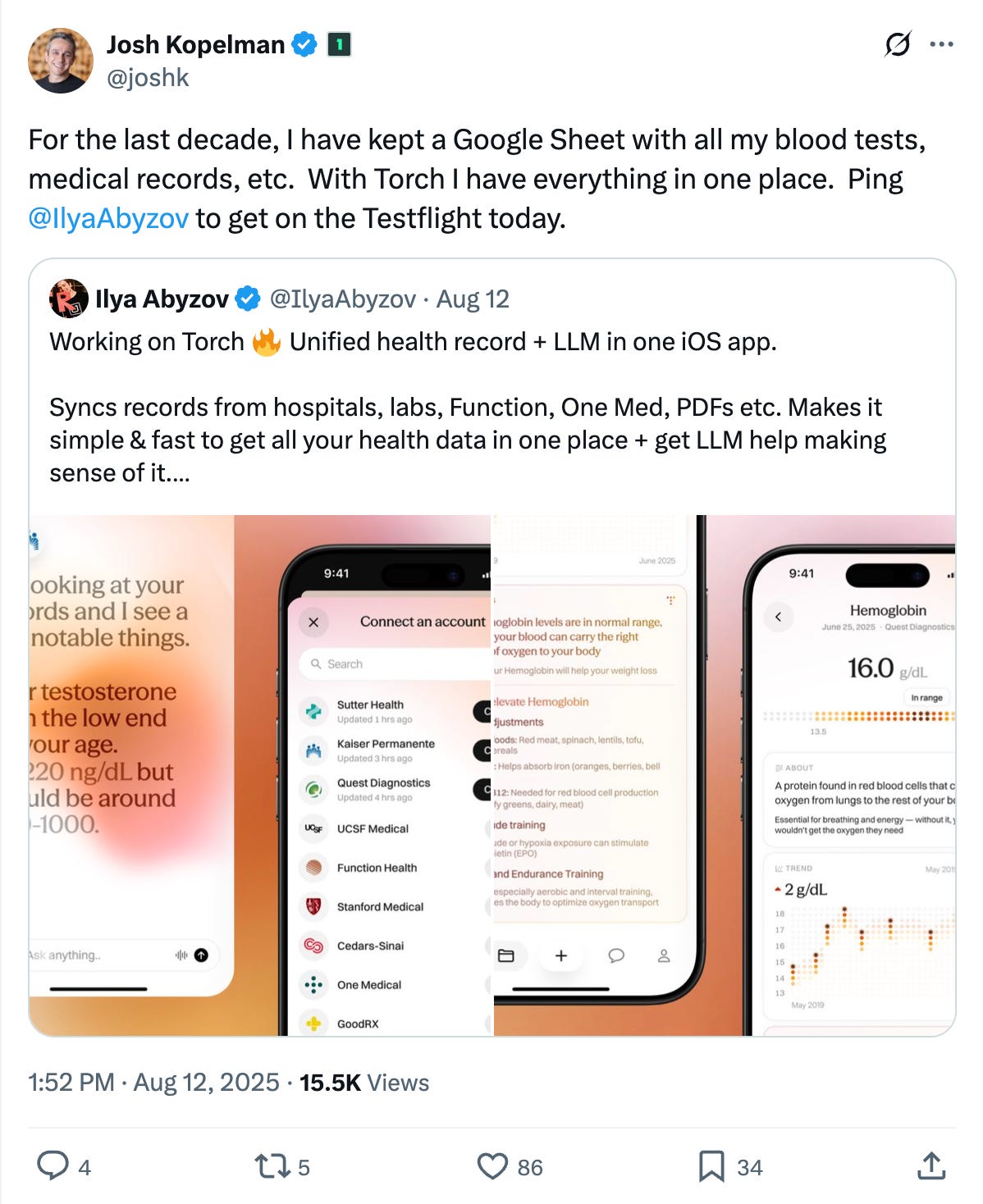

Startup of the Week

From Google Sheets to Torch: Consolidating Personal Health Records

Post of the Week

A reminder for new readers: each week, That Was The Week includes a collection of selected essays on critical issues in tech, startups, and venture capital.

I choose the articles based on their interest to me. The selections often include viewpoints I can't entirely agree with. I include them if they make me think or add to my knowledge. Click on the headline, the contents section link, or the ‘Read More’ link at the bottom of each piece to go to the original.

I express my point of view in the editorial and the weekly video.