Hi from deep July. I just returned from two weeks in Tuscany and thought some of you might be missing That Was The Week due to our July Hiatus. So, just for you, here are the things that would have appeared in the past 3 weeks had we been publishing. Plus the bonus of a NotebookLM podcast.

Best

Keith

Contents

Venture Capital

AI

Vibe Coding is the Future. But “Roll Your Own?” That’s More Complicated.

How Anthropic Rocketed to $4B ARR — And Why Your B2B Playbook May Already Be Obsolete

xAI's Grok 4: The tension of frontier performance with a side of Elon favoritism

NotebookLM adds featured notebooks from The Economist, The Atlantic and others

AI filmmaker Kavan released a trailer of "Untold - The Immortal Blades Saga", an

🧠David Friedberg explains the great hope of Artificial Superintelligence

When AI Agents Knock, Will Your Data Platform Answer? – Venrock’s investment in Collate.

OpenAI to take cut of ChatGPT shopping sales in hunt for revenues

Cognition Buys Windsurf, Nvidia Can Sell to China, Grok 4 and Kimi

Trump AI Czar David Sacks Defends Reversal of China Chip Curbs

OpenAI Just Released ChatGPT Agent, Its Most Powerful Agent Yet

It’s Time to Take Anthropic and OpenAI’s Wild Revenue Projections Seriously

Tokenization

Essays

GeoPolitics

Attention Economy

Legislation

Self Driving Cars

IPO

Automotive

Browser Wars

Startup of the Week

Education

Regulation

Venture Capital

SpaceX heads to $400bn valuation in share sale

FT • July 8, 2025

Business•Aerospace•SpaceX•Starlink•Valuation•Venture Capital

SpaceX, the aerospace company founded by Elon Musk, is preparing for a $1 billion share sale that would value the company at $400 billion. This valuation marks a significant increase from the $210 billion valuation in mid-2024 and the $350 billion valuation in December 2024. The current share sale, priced at $212 per share, includes a tender offer for employee shares, allowing employees to sell their holdings to a select group of investors. SpaceX plans to purchase some shares as part of the transaction, similar to the $500 million worth of employee shares bought back in December. Despite political risks stemming from Musk's support of former President Donald Trump, investor confidence remains high, reflecting the company's strong position in the aerospace industry.

Founded in 2002 with $100 million from Musk's PayPal proceeds, SpaceX aims to revolutionize space travel with reusable rockets and envisions enabling human colonization of Mars. The company's rapid growth is driven by its Starlink satellite internet service, which has deployed around 7,000 satellites and serves approximately 5 million subscribers across 114 countries. Starlink is projected to generate $6.6 billion in hardware and subscription revenue in 2024, contributing significantly to SpaceX's valuation. Additionally, SpaceX's Starship program continues to advance, with recent test flights demonstrating progress toward developing a next-generation reusable rocket system.

At $400 billion, SpaceX would rank among the 20 largest companies in the S&P 500 if it were publicly listed, ahead of major corporations like Bank of America and Procter & Gamble. This valuation underscores the company's dominant position in the space industry and its potential for future growth. While the company did not immediately respond to requests for comment, the share sale reflects ongoing investor confidence in SpaceX's vision and achievements.

VC Dollars Are Up, Yes. But VC Rounds? They Are At a 7+ Year Low

SaaStr • July 7, 2025

Business•VentureCapital•FundingTrends•InvestmentStrategies•Venture Capital

Recent data from Carta reveals a significant decline in the number of venture capital (VC) funding rounds, reaching a seven-year low, despite an increase in total VC dollars raised. This trend indicates a shift in the venture capital landscape, with fewer but larger investments being made.

Early-stage VC rounds per day fell from 9.1 in Q1 2018 to 7.4 in Q2 2025, a level 53% below the 2021 peak. Growth-stage rounds fell by 53%, and late-stage rounds decreased by 33% during the same period. This reduction suggests a more selective investment approach, with VCs concentrating on fewer, larger deals.
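The early-stage figures above rest on two different baselines (Q1 2018 and the 2021 peak), which is easy to miss. Here is a minimal Python sketch of the arithmetic, using only the numbers quoted in this summary. The function name is illustrative, and the implied peak assumes the 53% decline is measured on the same early-stage rounds-per-day series, which the summary does not state explicitly.

```python
def percent_change(old, new):
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100.0

# Figures quoted above: early-stage rounds per day.
q1_2018 = 9.1
q2_2025 = 7.4

change_since_2018 = percent_change(q1_2018, q2_2025)

# Assumption: the 53% decline is measured on the same series, so the
# 2021 peak can be backed out from the Q2 2025 level.
decline_from_peak = 0.53
implied_2021_peak = q2_2025 / (1.0 - decline_from_peak)

print(f"Change since Q1 2018: {change_since_2018:.1f}%")
print(f"Implied 2021 peak: {implied_2021_peak:.1f} rounds per day")
```

Under these assumptions, the decline since Q1 2018 is about 19%, while the implied 2021 peak is roughly 15.7 rounds per day.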

The decline in the number of funding rounds has led to longer fundraising cycles, as startups face increased competition for available capital. Additionally, the funding funnel has narrowed, resulting in fewer companies entering the venture-backed ecosystem, which may impact growth and late-stage activity in the future.

Despite the decrease in the number of rounds, the total dollar volume has remained robust, driven by mega-rounds and record fund sizes. However, this concentration of capital means that while some startups secure substantial funding, many others find it more challenging to raise capital.

In summary, the venture capital market is experiencing a structural shift towards fewer, larger investments, making it more competitive for startups to secure funding. Entrepreneurs should be prepared for longer fundraising processes and consider alternative funding strategies to navigate this evolving landscape.

Revolut in talks to raise new funding at $65bn valuation

FT • July 9, 2025

Business•Fintech•Revolut•Funding•Expansion•Venture Capital

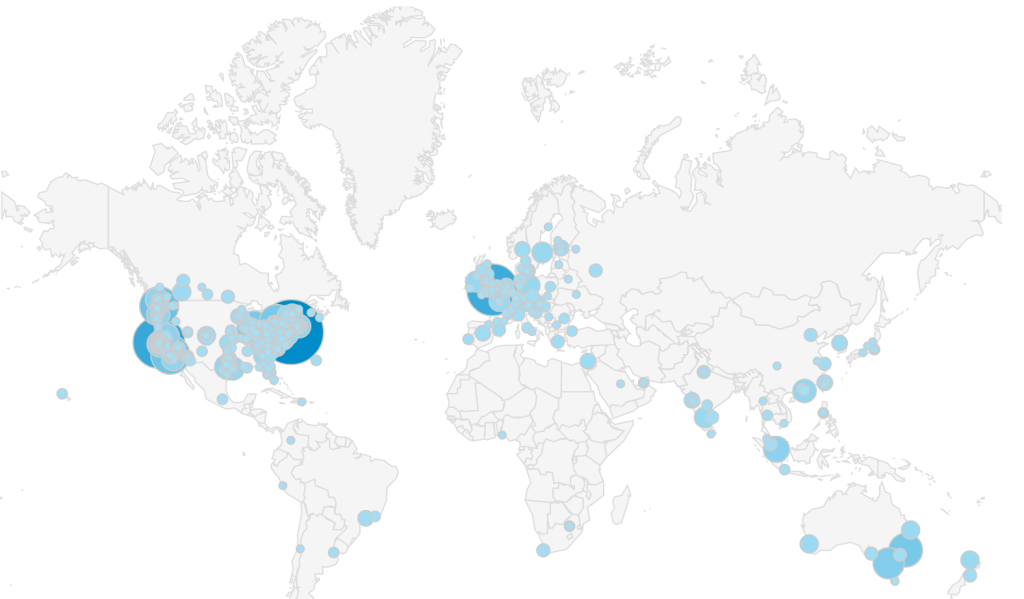

Revolut, Europe's most valuable start-up, is in discussions to raise approximately $1 billion in a new funding round that would value the company at $65 billion. This fundraising effort, involving newly issued shares and the sale of some existing stock, is aimed at supporting the company's global expansion. US investment firm Greenoaks is expected to lead the round, while Mubadala, Abu Dhabi's sovereign wealth fund and a previous investor, is also in discussions to participate. The valuation is a blended figure, with a higher valuation for new capital and a lower one for existing share sales.

The fintech, which gained a UK banking licence in 2024 after a prolonged regulatory process, plans to bolster its presence in the US market by leveraging its user-friendly app. Despite regulatory challenges, including unresolved licensing for credit services in the UK, Revolut has posted strong financial results, with pre-tax profits doubling to £1 billion and revenues increasing to £3.1 billion in 2024, largely driven by cryptocurrency trading. Its customer base has reached 50 million globally, though the company continues to face difficulties attracting users to deposit their wages into Revolut accounts, affecting potential fee income. However, Revolut maintains that holding primary accounts is not central to its strategy.

A Crisis Moment for Seed VC

NextView • July 16, 2025

Technology•Startup•SeedFunding•VentureCapital•AI•Venture Capital

The article "A Crisis Moment for Seed VC" explores a pivotal challenge facing seed-stage venture capital, driven by four interconnected forces reshaping the industry landscape. These forces threaten the traditional model and dynamics of seed investing, suggesting the need for adaptation and strategic reevaluation.

Industry Maturation

The seed venture capital space is undergoing significant maturation as the startup ecosystem evolves. With time, early-stage investing has become more competitive and sophisticated, leading to a more crowded market. This maturation means that returns have started aligning with a more consistent landscape, reducing the ease of outsized gains previously seen in this stage. As the industry matures, early-stage funds are facing pressure to deliver performance comparable to later-stage and growth funds, despite inherently higher risk and uncertainty.

The Two Unstoppable Forces

The article identifies two major forces that seed VC must navigate: the increasing specialization and scale of technology companies, and the macroeconomic environment that influences capital allocation. The rise of dominant platforms and ecosystems concentrates value and power in fewer winners, pushing seed investors to be more discerning and precise in their bets. Simultaneously, broader economic conditions such as tightening capital markets and shifts in interest rates impact fund availability and valuations. These forces create an environment where only the most strategic and well-positioned investors will thrive.

Power Law as Consensus

A fundamental aspect highlighted is the centrality of the "power law" in venture outcomes—the notion that a small number of investments generate the vast majority of returns. This consensus shapes investor behavior and fund strategy. However, the increasing maturity of the seed market means that the power law dynamic is evolving, with amplified competition for the few potential breakout successes. Investors are challenged to identify and back these rare high-potential startups early in their journey, requiring deeper diligence and differentiated networks.

The AI Platform Shift

One of the most transformative trends disrupting seed VC is the rapid emergence of AI as a foundational platform. AI technologies are not only creating new categories of startups but also impacting how startups operate, enabling faster innovation cycles and broader market opportunities. This AI platform shift demands new expertise and investment theses, pushing seed investors to incorporate AI understanding into their evaluation and support processes. It also intensifies competition as scale AI companies dominate attention and capital distribution, influencing where seed capital flows.

Implications and Strategic Considerations

Together, these factors signify a critical juncture for seed VC, where industry participants must rethink their approaches. Traditional seed strategies might no longer suffice; specialization, AI expertise, and adaptable fund structures become crucial. Moreover, investors should prepare for a potentially more consolidated landscape where fewer, larger winners emerge from seed rounds. Recognizing power law dynamics and the AI platform's influence may allow VCs to better position themselves for sustained success despite growing headwinds.

In conclusion, the article underscores an existential moment for seed venture capital driven by industry maturation, macroeconomic dynamics, evolving power law realities, and the AI revolution. Successful seed investors will be those who can anticipate these trends, innovate their investment models, and maintain agility in a rapidly shifting ecosystem.

A media future to believe in

Post • Chris Best • July 17, 2025

Technology•Software•CreatorsEconomy•Funding•MediaInnovation•Venture Capital

Today, we’re announcing $100 million in Series C funding, led by investors at BOND and The Chernin Group (TCG), with participation from Andreessen Horowitz, Rich Paul, CEO and founder of Klutch Sports Group, and Jens Grede, CEO and co-founder of SKIMS. BOND’s Mood Rowghani will join our board. We’re thrilled to partner with these investors, who bring a wealth of experience across tech, media, and culture, as we put this capital to work serving creators and their communities.

We’re living through a time of rapid technological change, one that’s reshaping how we communicate, create, and live. Every leap forward brings both promise and peril. The tools we hoped would uplift and enrich us have too often degraded or dehumanized us instead. Now, as powerful new technologies emerge daily, they arrive freighted with both hope and anxiety. The challenges ahead are real.

But this time of flux also holds tremendous opportunity. A growing number of people are navigating the chaos by choosing independence. Audiences are investing their attention and money in what they value, not just what addicts them. Creators are building livelihoods based on trust, quality, and creative freedom. They know the future belongs to those who build it.

At Substack, we believe the heroes of culture are the ones who shape it. Technology should serve them, not the other way around. That’s why we’re building tools and a network to protect their independence, amplify their voices, and foster deep and direct relationships. These are the people who will lead us to a better culture, and a future we can believe in.

This funding is our chance to get behind them. We’ll invest in better tools, broader reach, and deeper support for the writers and creators driving Substack’s ecosystem. Already, hundreds of millions of dollars flow from audiences to creators there every year. Millions use the app weekly, and pay for the work they discover. But this is just the beginning.

The model is working—across writing, audio, video, and communities—and this funding lets us go further. We’re doubling down on the Substack app, which is designed to help audiences reclaim their attention and connect with the creators they care about. We aim to prove that a media app can be fun and rewarding without melting your brain. An escape from the doomscroll, and a place to take back your mind.

We’re also building tools that give superpowers to anyone who has something important to say. Creators face enough challenges without juggling logistics and expenses. Substack should feel like a studio in your pocket—we take care of everything except the hard part: the creative work itself.

Most importantly, we’re building an economic engine to power this entire cultural ecosystem. Our model is simple: creators make money by serving their communities, and Substack succeeds only when they do. Audiences vote with attention and money for the culture they want, acting as collaborators in shaping a media ecosystem rooted in intention and connection. And everyone is part of a network that rewards trust, not manipulation. Substack is growing fast around the world, and we’re accelerating our work to bring the platform to new markets, so more people everywhere can support the creators they care about.

Independence shouldn’t mean going it alone. We’re building technologies that work for you, not against you—helping you carve out your own space on the internet, where you set the rules. It’s a system that rewards integrity, curiosity, and courage.

None of this happens without you. To everyone publishing on, subscribing to, or just exploring Substack: thank you. We’re honored to play a part, and excited for what this funding will unlock. The future of media belongs to you, and it can’t come soon enough.

Only 11% of Unicorn Exits Are IPOs Now (Down from 83%)

SaaStr • July 9, 2025

Business•Startups•UnicornExits•IPOs•VentureCapital•Venture Capital

Recent research by Professor Ilya Strebulaev at Stanford University's Graduate School of Business reveals a significant shift in how unicorns—startups valued at over $1 billion—exit the market. The data, compiled by Stanford's Venture Capital Initiative, indicates that the proportion of unicorn exits via initial public offerings (IPOs) has dropped dramatically from 83% in 2010 to just 11% in 2024. This trend suggests a fundamental restructuring of the exit landscape with lasting implications for SaaS founders.

The decline in IPOs is attributed to several key factors:

Abundant Private Funding: The availability of substantial late-stage private funding has reduced the pressure on companies to go public for growth capital.

Increased Compliance Costs: The financial and regulatory burdens associated with going public have escalated, making IPOs less attractive.

Emergence of Secondary Markets: Secondary markets now offer liquidity options for employees and early investors without the need for a public offering.

Rise of Strategic Acquisitions: Large corporations are increasingly acquiring unicorns, providing an alternative exit strategy.

The volatility in IPO exits from 2021 to 2024 underscores this shift:

2021: 39% of exits were IPOs.

2022: Only 8% of exits were IPOs.

2023: A brief recovery to 24% IPO exits.

2024: A return to 11% IPO exits.

This pattern suggests that the era of IPOs as the default exit strategy for unicorns may have ended, even before the market downturn.

Secondary markets have played a pivotal role in this transformation. They provide liquidity to stakeholders without necessitating a public offering, allowing companies to remain private longer and reducing the reliance on IPOs. This shift has significant implications for equity compensation strategies, as employees may no longer expect IPOs for liquidity.

Additionally, contractual clauses in venture capital agreements can impede IPOs, especially when valuations decline. Clauses such as down-round protection, liquidation preferences, and board consent requirements can make public offerings economically unfeasible. Founders are advised to thoroughly understand these provisions to navigate potential exit constraints effectively.

The data indicates that the preference for IPOs among unicorns is no longer the norm. Companies now have more alternatives, including strategic acquisitions and secondary market options, which offer different advantages and challenges. For SaaS founders, this evolving landscape necessitates a reevaluation of exit strategies, emphasizing the importance of flexibility and preparedness for various scenarios.

The trend is not your friend

SignalRank Update • July 21, 2025

Business•VentureCapital•InvestmentStrategy•EmergingTrends•Venture Capital

In 2010, Mark Suster of Upfront Ventures advised venture capitalists (VCs) to focus on "lines"—multiple data points over time indicating a trajectory—rather than "dots," which are isolated data points. This approach encourages early engagement with founders to assess progress and build relationships. However, this advice is increasingly overlooked in today's competitive VC landscape, where rapid investment processes are common.

The venture ecosystem's interconnectedness has led to the rapid rise and fall of "hot" themes, often without substantial value creation. For instance, the "____ for X" model, such as "Uber for X," has become prevalent, with numerous companies adopting this template. This trend reflects a mimetic approach to venture capital, where investors chase popular themes rather than seeking unique opportunities.

The most successful investors focus on identifying exceptional founders capable of building category-defining companies. They evaluate founders on an individual basis, independent of prevailing themes, and resist the urge to follow the crowd. This strategy involves building networks and relationships in unconventional areas, allowing investors to discover opportunities before they become mainstream.

Data supports this approach. For example, the defense sector gained prominence only after Anduril's Series C funding, and AI investments surged following OpenAI's release of ChatGPT. These instances demonstrate how the best investors can identify and capitalize on emerging trends before they gain widespread attention.

In summary, while following trends may seem appealing, the most successful investors prioritize building relationships and identifying unique opportunities, focusing on the long-term trajectory of founders and companies.

It’s Not Just You. Lead VCs Are Taking More of Each Round

SaaStr • July 5, 2025

Business•Startups•VentureCapital•Fundraising•Venture Capital

If you've been fundraising lately and noticed your lead investor wants to take a bigger chunk of your round than expected, you're not imagining things. New data from Carta analyzing 17,896 primary priced rounds from Q1 2021 to Q2 2025 shows a clear trend: lead investors are systematically taking larger portions of the rounds they lead.

Here's what's happening across every funding stage:

Seed rounds: Lead investor participation jumped from 52% in 2021 to 61% in 2025. That's nearly a 10-percentage-point increase in just four years.

Series A: More stable but still climbing from 54% to 59% over the same period.

Series B: Despite some volatility, trending upward from 50% to 51%.

Lead VCs aren't just leading rounds anymore—they're dominating them. Sharper elbows, more ownership.

What This Means for Founders

Your round composition is changing. Where you might have had 4-5 investors splitting a round a few years ago, you're increasingly looking at 2-3 investors with your lead taking the lion's share.

Syndicate dynamics are shifting. With leads taking bigger bites, there's less room for other investors. This can make it harder to bring in strategic investors, additional value-add partners, or maintain optionality for future rounds.

Power concentration is real. When one investor controls 60%+ of your round, they wield significantly more influence over your company's direction, board composition, and future fundraising decisions.

Why This Is Happening

Several factors are driving this trend:

Flight to quality. In uncertain markets, top-tier VCs are being more selective and going deeper on fewer deals rather than spreading capital thin.

Larger fund sizes. Many VCs have raised bigger funds and need to deploy more capital per deal to achieve meaningful ownership percentages.

Competitive dynamics. To win competitive deals, leads are offering to take larger allocations to give founders more certainty and simplify the fundraising process.

Risk management. By taking bigger positions, VCs can exert more control and better protect their investments in volatile market conditions.

The Seed Stage Story

The most dramatic shift is happening at seed stage. Lead investors have gone from taking $1.6M of a $3.0M round in 2021 to $2.3M of a $3.8M round in 2025.

This isn't just about round sizes growing—it's about leads claiming an ever-larger slice of the pie. Even when round sizes stay flat, lead allocations keep growing.
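The dollar figures in this section can be turned into shares with a quick calculation. This is a rough Python sketch using only the seed-stage numbers quoted above; the function name is illustrative. The resulting percentages need not match the 52% and 61% cited earlier exactly, since those may be computed differently in Carta's data (for example, as medians across rounds).

```python
def lead_share(lead_amount_musd, round_size_musd):
    """Lead investor's share of a round, as a percentage."""
    return 100.0 * lead_amount_musd / round_size_musd

# Seed-stage figures quoted above, in millions of dollars.
share_2021 = lead_share(1.6, 3.0)
share_2025 = lead_share(2.3, 3.8)

# Growth of the whole round versus growth of the lead's cheque.
round_growth = (3.8 / 3.0 - 1.0) * 100.0
lead_growth = (2.3 / 1.6 - 1.0) * 100.0

print(f"Lead share 2021: {share_2021:.1f}%")
print(f"Lead share 2025: {share_2025:.1f}%")
print(f"Round size growth: {round_growth:.1f}%")
print(f"Lead cheque growth: {lead_growth:.1f}%")
```

On these numbers the lead's share rises from about 53% to about 61%, because the lead's cheque grew by roughly 44% while the round itself grew by roughly 27%.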

What Founders Should Do

Plan your syndicate early. If you want multiple investors in your round, start conversations early and be explicit about allocation expectations upfront.

Understand the trade-offs. A lead taking 60% of your round isn't inherently bad—it can mean faster decisions, cleaner terms, and stronger support. But it does mean fewer voices around your table.

Negotiate thoughtfully. Don't just focus on valuation. The composition of your round matters for governance, future fundraising, and strategic flexibility.

Keep optionality in mind. Consider how round composition affects your ability to bring in strategic investors or sector specialists in follow-on rounds.

The Bottom Line

This trend toward lead concentration isn't necessarily good or bad—it's just the new reality of venture fundraising. The most successful founders are those who understand this shift and plan accordingly.

NFDG: The $1.1B VC Fund That 4X’d in Two Years—Then Got Acquired by Meta

SaaStr • July 6, 2025

Business•VentureCapital•ArtificialIntelligence•StartupInvesting•Venture Capital

In the rapidly evolving landscape of venture capital, few stories are as remarkable as that of NFDG—a $1.1 billion fund that achieved a stunning 4x return in just two years (at least on paper)—only to see its founders recruited by Meta in one of the most unusual acqui-hire arrangements in Silicon Valley history.

The deal was done in less than a week—and the NFDG website is already down:

June 29: Gross leaves Safe Superintelligence, which he co-founded and is NFDG’s crown jewel investment

This week: Zuckerberg announces Friedman joining Meta

July 4: Friedman confirms on X he’s started at Meta

July 5: News breaks about the tender offer

Today: NFDG website is down

NFDG was founded by two of Silicon Valley’s most respected figures: Nat Friedman, former CEO of GitHub, and Daniel Gross, formerly a partner at Y Combinator. The duo launched their venture fund in 2023, raising an impressive $1.1 billion in their debut fund—a testament to their combined reputation and track record in the tech industry.

Friedman brought deep experience in developer tools and enterprise software from his time leading GitHub through its acquisition by Microsoft and subsequent growth. Gross contributed his expertise in early-stage investing and startup acceleration from his tenure at Y Combinator, where he helped identify and nurture some of the accelerator’s most successful companies.

From the outset, NFDG positioned itself as an AI-focused venture fund, anticipating the massive wave of innovation that would sweep through the artificial intelligence sector. This strategic focus proved prescient as the fund launched just as the generative AI boom was beginning to reshape entire industries.

The fund’s most spectacular success story is undoubtedly Safe Superintelligence, co-founded by Daniel Gross himself alongside Ilya Sutskever (former OpenAI co-founder and chief scientist). The company’s valuation trajectory tells a remarkable story:

Previous round: $5 billion valuation

Current valuation: $30 billion (as of 2024)

Growth multiple: 6x increase

NFDG’s role: Early investor through Gross’s direct involvement

This investment exemplifies NFDG’s unique approach, combining financial backing with direct operational involvement to drive exceptional growth.

The rapid success of NFDG and its strategic investments highlight a broader trend in Silicon Valley, where the lines between venture capital and operational roles are increasingly blurred. The allure of directly shaping the future of transformative technologies, particularly in the AI sector, is drawing top talent away from traditional fund management roles. This shift underscores a profound change in how industry leaders perceive the balance between financial returns and the opportunity to influence technological innovation at its core.

Accel, General Catalyst Topped Increasingly Busy Active Investor Ranks In Q2

Crunchbase • July 11, 2025

Business•VentureCapital•InvestmentTrends•ArtificialIntelligence•SpaceTechnology•Venture Capital

In the second quarter of 2025, venture capital activity saw a significant uptick, with nine of the ten most active investors increasing their deal counts compared to the first quarter. Notably, Accel and General Catalyst emerged as leaders in this surge, each taking prominent roles in multiple high-profile funding rounds.

Accel led 20 rounds during this period, including substantial investments such as a $500 million financing for generative AI company Perplexity and a $260 million Series C for spacetech startup True Anomaly. Similarly, General Catalyst was at the forefront, leading 16 rounds, with its most significant being a $1 billion financing for AI writing assistant Grammarly. These substantial commitments underscore the firms' confidence in sectors like artificial intelligence and space technology.

While Accel and General Catalyst were the most active in terms of deal count, the largest individual investments were led by other firms. Meta made headlines with a $14.3 billion investment in Scale AI, marking a strategic and financial partnership that also saw Scale AI's founder, Alexandr Wang, join Meta. Following Meta, Founders Fund and Andreessen Horowitz were among the top spenders, with Founders Fund leading a $2.5 billion round for defense tech company Anduril, and Andreessen Horowitz leading a $2 billion seed financing for Thinking Machines Lab.

In terms of deal volume, Y Combinator stood out by participating in 45 post-seed financings, the highest among investors in Q2. This included significant rounds for companies like HR platform Rippling and AI robotics startup Gecko Robotics. General Catalyst and Accel also maintained high activity levels, participating in 38 and 31 deals, respectively.

The seed-stage investment landscape was similarly active, with Y Combinator leading with 50 seed investments, followed by Antler with 33. It's important to note that reporting practices in seed-stage investments can vary, as accelerators often report investments in batches, leading to fluctuations in deal counts.

Overall, the second quarter of 2025 demonstrated a robust venture capital environment, characterized by increased deal activity and substantial individual investments. This trend reflects growing confidence among investors in sectors such as artificial intelligence, defense technology, and space exploration.

AI

Vibe Coding is the Future. But “Roll Your Own?” That’s More Complicated.

SaaStr • Jason Lemkin • July 12, 2025

Technology•Software•VibeCoding•SaaS•AI

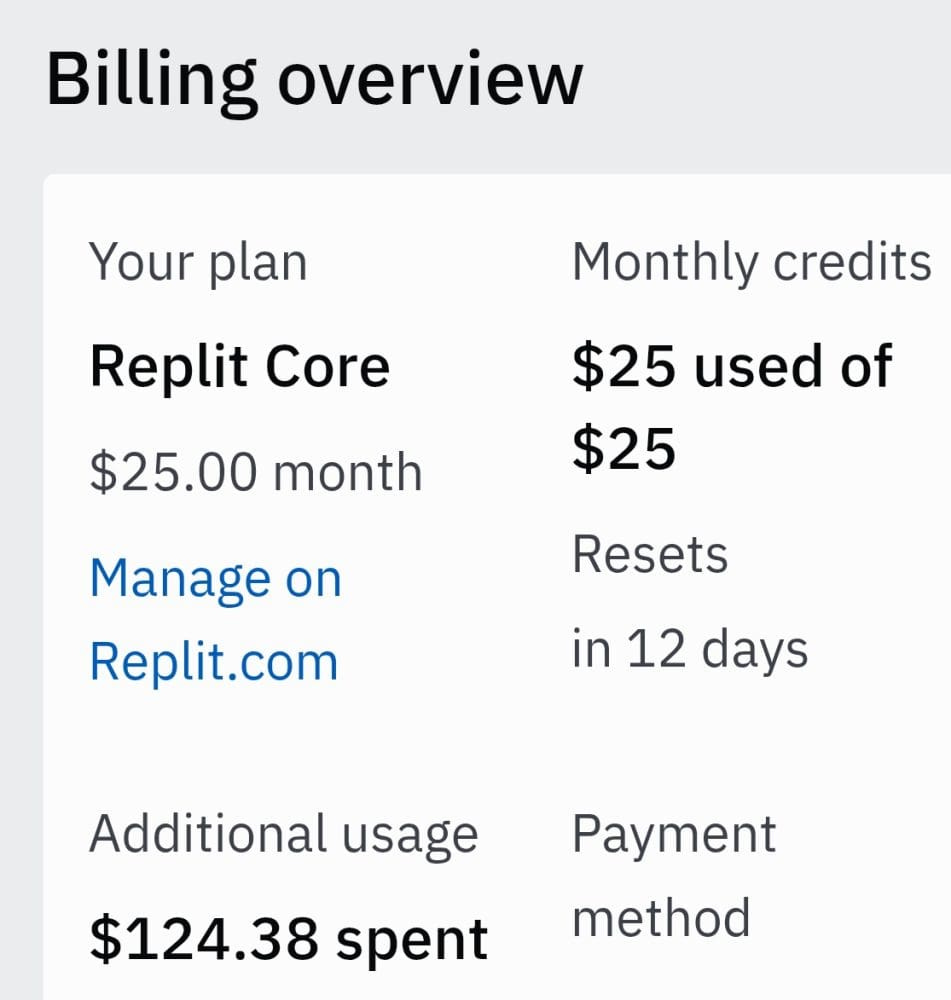

I spent the other day deep in vibe coding on Replit for the first time, and I built a prototype in just a few hours that was pretty, pretty cool.

Getting into commercial-grade, enterprise-grade shape is different, though.

But to start it’s amazing:

You can build an “app” just by, well, imagining it in a prompt

Replit QA’s it itself (super cool), at least partially with some help from you

and … then you push it to production — all in one seamless flow.

That moment when you click “Deploy” and your creation goes live? Pure dopamine hit.

First, I built a lightweight Cluely clone just for fun (emphasis on lightweight — it’s pretty rough around the edges, but the learning was the point). It was easy to build and … sort of worked:

Then I kicked off my second project, this time for real. To build a real, enterprise-grade product folks would actually pay for. I’m maybe 5%-10% of the way there, 2.5 days and $200 of Replit credits in.

And whatever I build needs to be novel. It needs to be something you can’t get elsewhere. Because as cool as vibe coding is, I won’t be able to build something better than Notion or Slack, let alone something with crazy compute like an Opus Clip or Higgsfield. All of which are … dirt cheap.

Vibe Coding Also Only Takes You So Far With Complicated Workflows, Enterprise Use Cases, Etc. For Now.

Over time, apps like Adobe Sign and DocuSign that were simple-ish at first have become incredibly deep workflow engines with 1000s and 1000s of workflows, maybe more. Vibe coding that is probably close to impossible. It might get you prototyped, it might get you going, it might help you learn. But I think that’s as far as most will get today 100% vibe coding.

Who has the time to rebuild those 1000s of intricate workflows? Make them actually secure? Enterprise-grade? Handle every edge case that real users will inevitably find?

I can vibe code a few. But not 1000s.

The learning: if it’s already built, I’d much rather spend $20-$200 a month for an app that already exists and is bulletproof.

If it’s already built. My time is worth more than $20/month.

In fact, I’ve already spent $200 in the past 72 hours building my next app on Replit, and I’m only 10% done. This time I’m trying to build something commercial grade.

SaaS in the Vibe Coding Age in Fact Almost Seems Cheap Again. Great, Cost-Effective SaaS at least.

Many on X claim they could vibe code tons of their stack themselves now. “Why pay for Slack when we can build our own chat app?” “Why use Notion when we can create our own knowledge base?”

But vibe coding actually proves the opposite point. Yes, you can build at least some portion of almost any workflow + database app now. At least a simpler variant. The end-to-end vibe coding tools really are incredible.

But should you?

Notion is $0-$20 a month. And it’s really good. I can guarantee it will be 100% better than anything almost anyone can vibe code.

The New Calculus

For now, let’s be clear. There is no way non-engineers vibe coding apps in a few hours or even a week will replace major SaaS apps. No way. Hopefully it will put some pressure on legacy CRMs to stop nickel-and-diming customers, but even there, I have my doubts.

But if nothing else, vibe coding apps is unleashing new apps at an unprecedented pace. And they will all keep getting better and better, faster and faster. Especially for niche vertical apps that just don’t exist in the market, they may already be there. And for true developers that use them mainly to prototype, vibe coding is already epic.

For now, probably:

Vibe coding is perfect for:

Rapid prototyping (testing ideas)

Custom internal tools (helping teams work better)

Net-new workflow + database solutions that don’t exist yet, especially very niche tools

Learning and experimentation

The Real Insight

“Time is the new currency. Building is fun but buying saves sanity.”

This isn’t about replacing core business functions. For apps that need to work flawlessly at 3 AM when your biggest customer calls? Still buying.

The democratization of coding doesn’t make established SaaS obsolete — it makes us appreciate just how much complexity those companies have solved for us. “All we see is icebergs.”

Vibe coding is the future of creation. But the future of operations for now at least is still powered by companies that spent years getting the details right.

SaaS is becoming stronger, not weaker, thanks to vibe coding. When anyone can build the basics, the value of getting the advanced stuff right becomes even more apparent.

That $200/month Salesforce seat? Still a bargain. Not cheap. But a bargain.

The Vibe App Revolution is Real. Where It Goes Is The Only Murky Part.

If you need proof that AI code creation is absolutely on fire: Replit went from $10M to $100M ARR in just the first 6 months of this year alone.

10x growth in less than half a year.

When you see numbers like that, you realize we’re not just talking about a new tool or trend. We’re witnessing a fundamental shift in how software gets built. The barriers to creation have collapsed, and the market is responding accordingly.

The vibe coding revolution is here. The question isn’t whether it will change software production, it already has. The questions are just exactly where it will go for true production-grade, commercial apps. This year, and after.

For now, I’m going to keep vibe coding. And I’m also going to keep happily paying $20 a month for the best B2B apps. That I could never vibe code myself for $240 ($20 x 12). Not really. Nor could you.

How Anthropic Rocketed to $4B ARR — And Why Your B2B Playbook May Already Be Obsolete

Saastr • Jason Lemkin • July 12, 2025

Technology•AI•EnterpriseAI•SaaS•GrowthStrategy

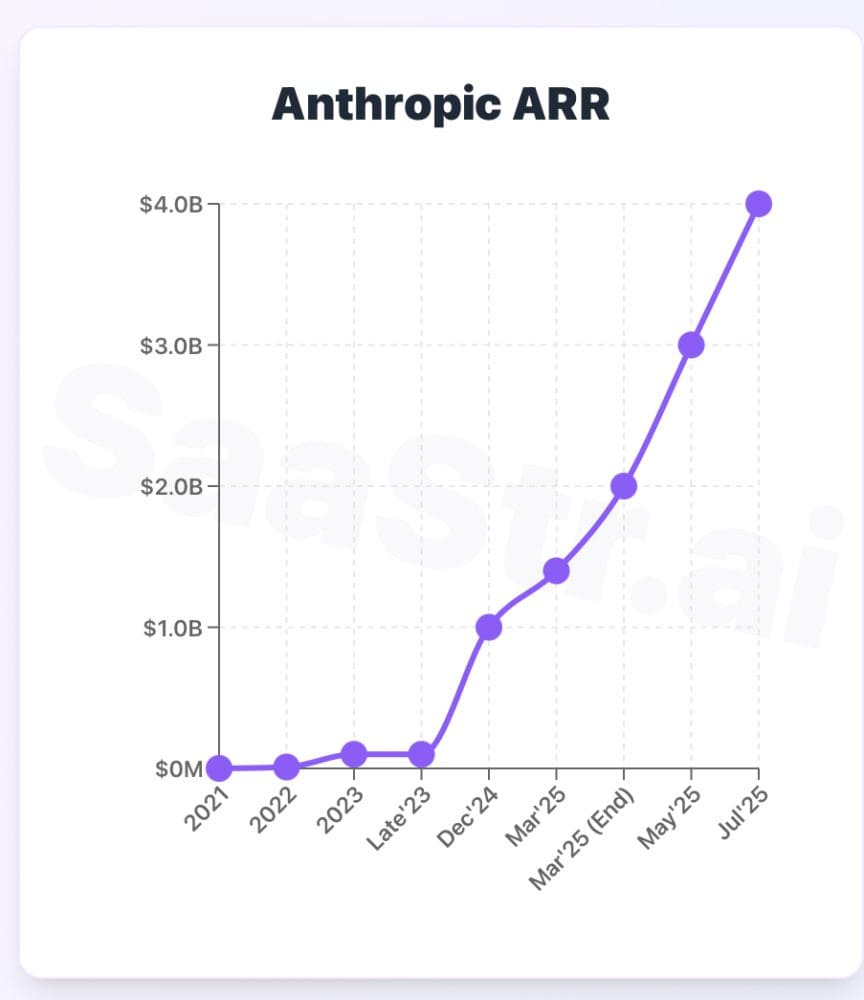

When Anthropic hit a reported $4 billion in annual revenue at the end of 1H’25, it marked more than just another AI milestone. It validated a completely new category of B2B growth that’s operating by fundamentally different rules than anything we’ve seen before.

Let’s break down the numbers that should make every SaaS founder rethink their growth assumptions:

The Growth Trajectory That Breaks Every SaaS Model

Anthropic’s Revenue Timeline:

2022: $10M (founding year revenue)

2023: $100M (10x growth)

Dec 2024: $1B ARR (10x growth again)

July 2025: $4B ARR (300% growth in 7 months)

That’s 100x growth in three years. To put this in perspective, it took Snowflake—one of the fastest SaaS companies in history—six quarters to go from $1B to $2B ARR. Anthropic did $1B to $4B in seven months.
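The multiples in this timeline are easy to check. A minimal sketch in Python, using only the figures listed above (the function and variable names are illustrative, not from the article):

```python
# Revenue milestones as listed in the timeline above, in millions of dollars.
milestones = [
    ("2022", 10),
    ("2023", 100),
    ("Dec 2024", 1_000),
    ("July 2025", 4_000),
]

def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start

def growth_percent(start: float, end: float) -> float:
    """Return percentage growth from `start` to `end`."""
    return (end - start) / start * 100

# Multiples between consecutive milestones.
for (label_a, rev_a), (label_b, rev_b) in zip(milestones, milestones[1:]):
    print(f"{label_a} -> {label_b}: {growth_multiple(rev_a, rev_b):.0f}x "
          f"({growth_percent(rev_a, rev_b):.0f}% growth)")
```

On these figures, 2022 to Dec 2024 is the 100x span, and the $1B to $4B step is a 4x multiple, which is the same thing as 300% growth.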

The Enterprise-First Strategy That Worked

While OpenAI captured headlines with consumer ChatGPT adoption, Anthropic quietly built an enterprise juggernaut. Here’s how they did it:

API-First Revenue Model

Unlike the subscription-heavy models of traditional SaaS, 70-75% of Anthropic’s revenue comes from API calls through pay-per-token pricing. This creates several advantages:

Immediate scalability: No lengthy enterprise sales cycles

Usage-based pricing: Revenue scales directly with customer success

Lower customer acquisition costs: Developers can start using APIs instantly

Key Pricing: Claude Sonnet 4 is priced at $3 per million input tokens and $15 per million output tokens. When customers are processing complex code generation or multi-file operations, single sessions can consume 5,000-20,000 tokens.
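To make pay-per-token pricing concrete, here is a rough sketch of the arithmetic. It uses only the input price quoted above and treats the whole session as input tokens, which is a simplifying assumption since the article does not give an input/output split. The function name is hypothetical.

```python
def token_cost_usd(tokens: int, price_per_million_usd: float) -> float:
    """Cost of `tokens` tokens at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million_usd

INPUT_PRICE = 3.0  # dollars per million input tokens, as quoted above

# Session sizes quoted above, treated as all-input for simplicity (an assumption).
for session_tokens in (5_000, 20_000):
    cost = token_cost_usd(session_tokens, INPUT_PRICE)
    print(f"{session_tokens:>6} tokens -> ${cost:.3f}")
```

Individual sessions cost cents at most on this estimate, so revenue at this scale has to come from very large volumes of sessions rather than from any single one.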

Code Generation as the Primary Growth Driver

While everyone talks about general AI adoption, Anthropic identified code generation as the killer use case. Here’s why this matters:

Token intensity: Code generation consumes 10-50x more tokens than typical chat

Enterprise necessity: Companies can’t avoid automating development workflows

Stickiness: Once integrated into developer workflows, switching costs are massive

Major customers like Sourcegraph, GitLab, Replit, and Bridgewater Associates leverage Claude’s 200,000-token context window for complex coding tasks and financial analysis.

Channel Partnership Strategy

Rather than building massive direct sales teams, Anthropic distributes primarily through:

AWS Bedrock: Leveraging Amazon’s enterprise relationships

Google Vertex AI: Tapping into Google Cloud’s customer base

Direct API access: For developer-first adoption

This reduces sales costs while accelerating enterprise adoption through existing trusted relationships.

The SaaS Metrics That Don’t Apply

Traditional SaaS metrics break down when analyzing AI infrastructure companies:

CAC/LTV Becomes Irrelevant

When developers can start using your API with a credit card and scale to millions in usage, traditional customer acquisition cost calculations don’t work. Anthropic’s “customers” can go from $0 to $100K+ monthly usage without ever talking to a salesperson.

Churn vs. Expansion Revenue

In token-based models, expansion revenue isn’t about selling more seats—it’s about customers consuming more tokens as they build larger applications. One customer’s successful product launch can 10x their token usage overnight.

Gross Margins at Scale

AI infrastructure operates with different margin profiles than traditional SaaS. While Anthropic likely operates at 40-60% gross margins today (vs. 80%+ for typical SaaS), the absolute dollar margins are massive given the revenue scale.
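As a quick illustration of the "absolute dollar margins" point, the sketch below applies the article's estimated 40-60% margin range to the reported $4B run rate. Both inputs are the article's estimates, not confirmed financials, and the function name is illustrative.

```python
def gross_profit_usd(revenue_usd: float, gross_margin: float) -> float:
    """Gross profit implied by a revenue figure and a gross margin (0 to 1)."""
    return revenue_usd * gross_margin

ARR = 4e9  # the reported $4B annualized run rate from the article

for margin in (0.40, 0.60):
    profit_b = gross_profit_usd(ARR, margin) / 1e9
    print(f"{margin:.0%} margin -> ${profit_b:.1f}B annualized gross profit")
```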

xAI's Grok 4: The tension of frontier performance with a side of Elon favoritism

Interconnects • Nathan Lambert • July 12, 2025

Technology•AI•FrontierModels•ModelPerformance•AIAdoption

Elon Musk’s xAI launched Grok 4 on Wednesday, the 9th, with the fanfare of leading benchmarks and 10X RL compute for reasoning, but even with that it is unlikely to substantively disrupt the current user bases of the frontier model market. On top of stellar scores, Grok 4 comes with severe brand risk, a lack of differentiation, and mixed vibe tests, highlighting how catching up in benchmarks is one thing, but finding a use for expensive frontier models isn’t automatic — it is the singular challenge as model performance becomes commoditized.

In this post we detail everything about Grok 4, including:

Performance overview and a survey of early vibe checks,

Testing Grok 4 Heavy and how xAI’s approach to parallel compute compares to o3 pro,

xAI’s lack of differentiated products, and

MechaHitler and culture risk.

At its core this is a very impressive model, but it is also the frontier model plagued with the most serious behavioral risks and cultural concerns in the AI industry since ChatGPT’s release.

Grok 4 is the leading publicly available model on a wide variety of frontier model benchmarks. It was trained with large scale reinforcement learning on verifiable rewards with tool-integrated reasoning.

Swyx at Smol AI and Latent.Space summarized the performance perfectly:

Rumored to be 2.4T params (the second released >2T model after 4 Opus?), it hits new high water marks on HLE, GPQA (leading to a new AAQI) HMMT, Connections, LCB, Vending-Bench, AIME, Chest Agent Bench, and ARC-AGI, and Grok 4 Heavy, available at a new $300/month tier, is their equivalent of O3 pro (with some reliability issues). What else is there to say about it apart from go try it out?

A few others include it being top overall by ArtificialAnalysis and dethroning Gemini 2.5 Pro on long context. It also launches with an API version (a first for xAI).

This is an extremely impressive list, and something we don’t see regularly in AI model releases as the landscape has been more competitive. The sorts of models with this “wiping the floor” on benchmarks are only the likes of o1, o3 (which was just an announcement, not released), and Gemini 2.5 Pro. Benchmark progress is in many ways going faster than ever — the previous major step like these models was arguably GPT-4 itself.

In order to achieve this, xAI put up a slide saying that they increased the RL compute from Grok 3 reasoning by 10X to create this model.

This plot is not something that should be taken as precise. Even if they did use the exact same pretraining compute as Grok 3 and scaled up RL to exactly the same amount of compute, it is definitely the case that this is not representative of any “RL is saturating already” or other timeline comments. The benchmarks and speed of releases speak to this: RL is enabling a new type of rapid hillclimbing, and all of the leading labs are committing large personnel and compute resources to exploiting it.

We have no indications that we are near the top of the RL curve to balance out the GPT-4.5 release, which showed that “simple parameter scaling alone” (without RL) isn’t the short-term path forward.

The Humanity’s Last Exam plot, while showcasing overall peak performance, is also a beautiful example of scaling both training time (RL) and test-time (inference time scaling, CoT, parallel compute) with and without tools. This is the direction leading models are going.

The main question with this release was then — does the usefulness of the model in everyday queries match the on paper numbers of the model?

Immediately after the release there were a lot of reports of Grok 4 fumbling over its words. Soon after, the first crowdsourced leaderboards (Yupp in this case, a new LMArena competitor), showed Grok 4 as very middle of the pack — far lower than its benchmark scores would suggest.

My testing agrees with this. I didn’t find Grok 4 particularly nice to use like I did the original Claude 3.5 Sonnet or GPT 4.5, but its behavior with tools was immediately of interest to me. Grok 4 is a model that is very reminiscent of o3 in its search-heavy style — this is a milestone I’ve been specifically monitoring, and again confirms that major technical differentiation doesn’t last long across frontier model providers. Maybe making an o3 style model isn't so hard, but making one that has style and taste is.

It’s the sort of behavior where the model almost always searches, e.g. for the simple query below. Grok 4 uses search, so does o3, but Claude 4 and Gemini 2.5 do not.

At the same time, it doesn’t seem quite as extensive in its search as o3, but much of this could be down to UX and inference settings rather than the underlying model’s training. The reasoning is far more interpretable than that of OpenAI and some other providers, which helps in understanding how the model is using tools (e.g. the exact search queries).

Overall, the vibe tests indicate that Grok 4 is a bit benchmaxxed and overcooked, but this doesn’t mean it is not a major technical achievement. It makes adoption harder.

Along with the new model itself, xAI announced a new “Heavy” mode which “dynamically spawns multiple agents” to help solve problems. This new offering combined with the search-heavy behavior represented an important item to test explicitly.

In summary, Grok 4 Heavy behaves like a hybrid between Deep Research products and o1/3-Pro style models on open domains. This points to a new era of technical uncertainty as users and companies race to understand how the top models behave at inference. No longer is it enough to only serve long chains of thought at inference — Grok 4 Heavy shows substantial improvements across all of the reasoning benchmarks.

We don’t have enough information on Grok Heavy, o3-Pro, or Deep Research to know exactly which of these are close to each other. The operating assumptions in industry are that two types of parallel compute exist:

Multi-agent systems with an orchestrator model: In this case, which I interpret as being close to Claude Code with parallelism enabled or Deep Research, one central repository manages parallel search agents assigned subtasks.

Parallel, ranked generation: in this case, the same prompt is provided to multiple copies of the model and the best answer is selected by a verifier or reward model.

Both of these will be impactful for different domains, but the former is far closer to general agents that the industry is collectively striving for and anticipating.
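The two patterns above can be sketched in a few lines. This is a toy illustration only: `toy_model` is a hypothetical stand-in for a real model call, the scoring function stands in for a verifier or reward model, and nothing here reflects how xAI, OpenAI, or Anthropic actually implement their systems.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

def toy_model(prompt: str, seed: int) -> str:
    """Hypothetical stand-in for a model call; returns a canned answer."""
    return f"answer-{seed} to {prompt!r}"

def best_of_n(prompt: str, n: int, score: Callable[[str], float]) -> str:
    """Parallel, ranked generation: sample n candidates, keep the top-scored one."""
    with ThreadPoolExecutor() as pool:
        candidates = list(pool.map(lambda s: toy_model(prompt, s), range(n)))
    return max(candidates, key=score)

def orchestrate(task: str, subtasks: List[str]) -> str:
    """Orchestrator pattern: fan subtasks out to workers, then merge the results."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda st: toy_model(st, 0), subtasks))
    return f"{task}: " + " | ".join(results)

def toy_score(answer: str) -> float:
    """Toy verifier (an assumption): prefers the candidate with the highest seed."""
    return float(answer.split("-")[1].split()[0])

print(best_of_n("q", 4, toy_score))
print(orchestrate("report", ["find sources", "summarize"]))
```

The structural difference is in what gets parallelized: `best_of_n` sends the same prompt to every worker and discards all but one result, while `orchestrate` sends different subtasks and keeps every result.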

Here I like Grok 4 Heavy’s answer better than ChatGPT Deep Research. They have similar information, but Grok 4 Heavy is more concise.

In this case and overall, Grok 4 outperforms OpenAI Deep Research. Grok 4 simply got far more of the correct links and presented it in the requested form. Combined with the live information graph on X, there are multiple groups who would benefit from this substantially and immediately.

This example above is one of the first times a single request to an AI model has done a “wide” search over source materials. A factor that eventually will come into play here is both user price and effective margins. We don’t know the costs to serve any of these models.

All in, the performance of Grok 4 is very spiky. It has incredible performance on benchmarks and some of the tests done are the best an AI has ever been at some information retrieval tasks, but it falls on its face in some simple ways when compared to its peers like o3 or Claude 4 Opus.

Despite all of this success, xAI and Grok still face a major issue — making a slightly better model in performance that is comparable on price isn’t enough to unseat existing usage patterns. In order to make people switch from existing applications and workflows, the model needs to be way better. This sort of gap I have only experienced with the original Claude 3.5 Sonnet pulling me from ChatGPT (until better applications and ecosystem pulled me back to ChatGPT). The question is — how does xAI monetize this technical success?

Grok’s differentiation is still that it doesn’t have many of the industry standard guardrails. This is great from a consumer perspective, but presents challenges at the enterprise level (even if the lack of alignment was only a minor worry).

With the current offerings, the performance of ChatGPT at $20/month is similar to Super Grok at $300/month. Where is their market?

Claude Code with higher tiers of Anthropic plans is the most differentiated offering among the paid chatbots. For many people, Claude Code is the most fun and useful way to use a language model right now. This is a minority group, but at least one that is willing to pay. For coding, I don’t think Grok 4’s search behavior is as good (same reason I don’t recommend using o3 in something like Cursor), where Claude is still king.

The xAI team did say “Grok 4 coding model soon” before and during the livestream (easily found via Grok 4), so they understand this. Still, the timeliness of this model with product-market-fit matters far more than all the benchmarks, as seen by Claude 4’s lackluster benchmark release. Claude 4 has only become more popular since its release day — I don’t see Grok 4 being the same.

Another timely example of a model that’ll have immediate and practical real-world uptake is the new open-weight Kimi K2 model. Moonshot AI describes their new, mostly permissively licensed 1T total, 32B active MoE model “Open Agentic Intelligence.” This model rivals Claude 4 Sonnet and Opus on coding and reasoning benchmarks.

This makes an impact by being far and away the best open-weight model in this class. Similar to the DeepSeek releases, though not at the same magnitude, there will be a rush to deploy this model and build new products off the backbone of cheap inference from an optimized stack similar to the DeepSeek MoE architecture.

AI adoption and market share downstream of modeling success comes from differentiation in AI.

Grok 4 is the culmination of an ethos that will lead to more dangerous outcomes for AI with little upside on added performance. xAI, in the livestream, announced they have gotten extended security compliance tests commonly referred to as System and Organization Controls 2 (SOC 2) in order to sell into enterprises. This is a wholly useless endeavor when the underlying technology isn’t trustworthy for cultural reasons.

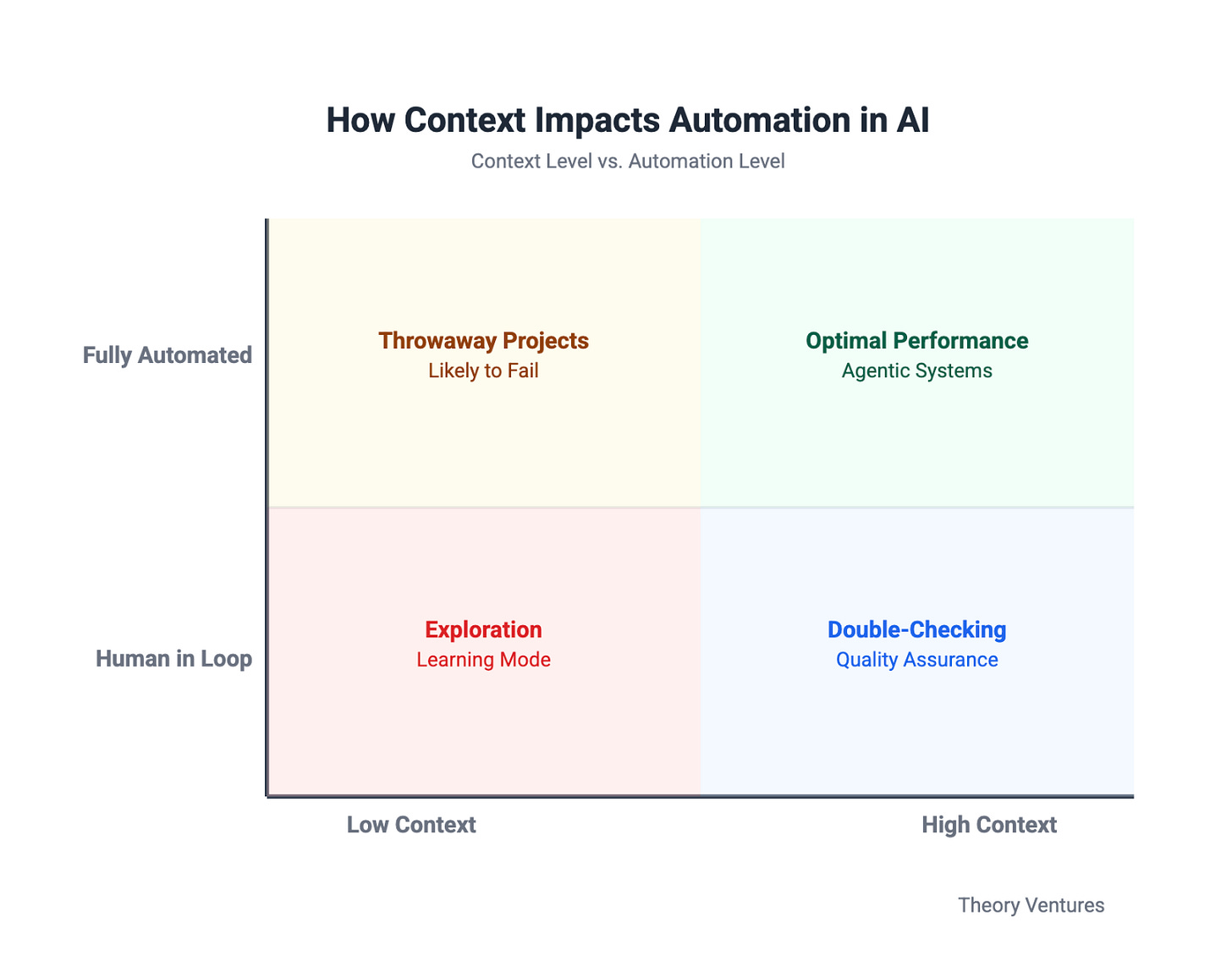

How To Navigate The AI Distribution Shift

Brianbalfour • Brian Balfour • June 29, 2025

Technology•AI•PlatformStrategy•DistributionShift•CompetitiveAdvantage

After I wrote The Next Great Distribution Shift, the most common thing people asked me was: “What do I do about it? How are you preparing?”

Fareed Mosavat and I tackled this in our recent episode of Unsolicited Feedback. The TL;DR: If we're right, you have months (not years) to get your platform strategy right. The gates are opening. And the innovation replication cycle has never been faster.

In this post (and episode), we take some steps to help you learn how to play the game before the game plays you.

Recap: The Next Great Distribution Shift

In The Next Great Distribution Shift, I laid out four points (skip this section if you’ve already read that article).

The AI Tech Shift Happened. The AI distribution Shift Is Just Beginning.

The AI technology shift has transformed product capabilities, business models, and competitive landscapes. Yet despite this technological revolution, we have not experienced its corresponding distribution shift. This isn't unusual. Every major platform transition follows the same pattern—technology first, distribution second.

Distribution shifts consistently lag technology shifts by a couple of years. We witnessed this delay during the emergence of social networks, search engines, and mobile platforms. The technology foundation establishes first, creating new possibilities and disrupting existing workflows. The distribution revolution follows, determining which companies capture and control the value created by these technological capabilities.

We Are Now Approaching The Inflection Point Where The AI Distribution Shift Will Emerge

The competitive conditions necessary for distribution shift emergence are now aligned. We have achieved market consensus that AI chat experiences represent a massive, transformative category while the ultimate winner remains unclear.

Multiple major players are actively competing for platform dominance: OpenAI with ChatGPT, Anthropic with Claude, Google with Gemini, and Meta with Llama, while Apple's strategic positioning remains uncertain. This competitive dynamic creates the exact environment that historically triggers distribution platform development.

Simultaneously, traditional distribution channels are experiencing significant degradation. SEO effectiveness has declined, app store discovery has become increasingly difficult, and paid advertising channels are delivering diminishing returns. This distribution scarcity creates market pressure for alternative channels, accelerating adoption of emerging platforms.

The convergence of competitive uncertainty and distribution scarcity establishes ideal conditions for platform emergence and ecosystem development.

The Distribution Shift Will Follow The Same 3 Step Cycle

Every successful distribution platform follows an identical progression that reflects structural market dynamics rather than platform-specific strategies.

Step 1: Moat Identification. Market consensus emerges around category importance while multiple players compete for dominance. The eventual winner identifies sustainable competitive advantages that differentiate from feature parity competition. In the AI landscape, this moat is shifting from model intelligence to context and memory accumulation: the platforms that can gather and leverage user context most effectively will achieve escape velocity.

Step 2: Opening The Gates. The leading platform creates an open ecosystem to accelerate moat development. This involves establishing value exchange mechanisms where third-party developers receive capabilities and organic distribution in exchange for extending platform functionality and contributing to competitive advantage accumulation. We are beginning to see early signals of this phase with integration announcements and platform development hiring.

Step 3: Closing The Gates. Once competitive position is secured, platforms optimize for monetization and control. Organic distribution becomes artificially constrained toward paid channels, successful third-party applications get absorbed into first-party features, and revenue sharing terms deteriorate significantly. This phase is inevitable once platforms achieve market dominance.

There Is No Opting Out

Platform participation represents a strategic prisoner's dilemma where individual rational decisions create collective competitive dynamics that force market-wide participation.

If competitors integrate with emerging platforms and gain competitive advantages through enhanced capabilities or distribution access, non-participating companies face systematic disadvantage. Market forces compel integration regardless of long-term platform control concerns.
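The prisoner's dilemma framing can be made concrete with a toy payoff table. The numbers below are purely hypothetical, chosen only to reproduce the structure described above: integrating is the best response whatever a rival does, yet both firms integrating leaves each worse off than if both had abstained.

```python
# Hypothetical payoffs (higher is better), keyed by (my_choice, rival_choice).
PAYOFF = {
    ("abstain", "abstain"): 3,      # nobody depends on the platform
    ("abstain", "integrate"): 1,    # rival gains distribution, I fall behind
    ("integrate", "abstain"): 4,    # I gain distribution, rival falls behind
    ("integrate", "integrate"): 2,  # parity again, but both now platform-dependent
}
CHOICES = ("abstain", "integrate")

def best_response(rival_choice: str) -> str:
    """My payoff-maximizing choice given what the rival does."""
    return max(CHOICES, key=lambda mine: PAYOFF[(mine, rival_choice)])

def dominant_strategy():
    """Return the choice that is a best response to every rival move, if any."""
    responses = {best_response(r) for r in CHOICES}
    return responses.pop() if len(responses) == 1 else None

print(dominant_strategy())
```

With these illustrative payoffs the dominant strategy is to integrate, which is why the article argues there is no opting out.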

This dynamic explains why established companies like HubSpot integrate with ChatGPT despite obvious risks of user relationship displacement. The competitive pressure of potential customer preference for integrated experiences outweighs platform dependency concerns.

The strategic choice is not whether to engage with emerging platforms, but how to optimize timing, resource allocation, and competitive positioning within an inevitable participation framework. Understanding cycle progression enables proactive strategy development rather than reactive competitive responses.

How Do You Play The Game?

As I said on Unsolicited Feedback, my intention is not to paint a picture that these platforms are “good” or “evil.” I’m passing no judgement. This cycle happens due to competitive and capitalistic incentives.

My personal mentality is that “it is what it is.” But it’s a game. They are playing you, so you need to know how to play them. Given that, the most common question I got on the original post was - So what do I do next?

What’s Your Betting Strategy?

While my personal prediction centers on ChatGPT achieving platform dominance, this outcome is not guaranteed. The current competitive environment mirrors historical distribution shifts where multiple viable candidates compete for market control before a winner emerges.

OpenAI with ChatGPT

Anthropic with Claude

Google with Gemini

Meta with Llama and Meta AI

Apple with ??? (it will come eventually)

This competitive dynamic directly replicates previous distribution shifts. Facebook competed against MySpace, Orkut, Hi5, and Friendster before achieving dominance. Google emerged victorious despite Yahoo's early distribution supremacy. The pattern demonstrates that initial market position does not guarantee long-term platform control. That means at this stage you are taking bets and you need a betting strategy.

DIVERSIFY OR YOLO?

The two most common reactions to this stage are:

I’ll be early, but diversify across platforms until a winner emerges.

I’ll just wait to dedicate any resources until it’s clear who the winner is.

These are rarely the right strategies. Waiting until the winner is clear means you are typically too late. The early arbitrage advantages are probably gone and you will be fighting an uphill battle.

Being early and diversifying might be possible for very large companies that have large experimental budgets to spread around. Startups don’t have this advantage. They have resource constraints and need to focus. You need to bet, and you need to bet right. Higher risk, higher reward. In other words, portfolio strategy does not apply to startups.

Fareed Mosavat gave a couple of great examples in the Unsolicited Feedback Episode:

"In the mobile shift, if you bet on just Apple, you could be a winner, like Instagram. If you bet on just Android, you could not. If you did Apple and Android, you would grow faster, it turned out, because both those platforms are dominant. But building for both took a lot of resources at the time. And building on Windows Phone was a huge waste of time."

He went on to give another example in the shift to Social:

“The same was true on the Facebook platform. Some people did ok just on MySpace in the early days, but all of those developers shifted to Facebook, right? And there were a million other networks and there was a lot of encouragement from investors, from thought leaders, et cetera, saying should build as much distribution across them. It turned out the winning move were those that just built on the Facebook platform."

The point Fareed is making is that every ounce of energy you spend building on a platform that is not the ultimate winner comes at a massive cost: it is energy not spent on the platform that does end up winning.

How o3 and Grok 4 Accidentally Vindicated Neurosymbolic AI

Garymarcus • July 13, 2025

Technology•AI•NeurosymbolicAI•DeepLearning•ArtificialIntelligence

Recent developments in o3 and Grok 4 have quietly confirmed the validity of neurosymbolic AI, an approach that integrates neural networks and symbolic reasoning, marking a significant shift in the AI landscape after decades of debate. Neurosymbolic AI asserts that the strengths of neural networks (learning from large-scale data) complement the strengths of symbolic AI (explicit representation and rigorous reasoning), forming a crucial hybrid for advancing artificial intelligence beyond the limitations of either approach alone.

Neurosymbolic AI has been historically overshadowed by the prevailing enthusiasm for pure deep learning, which champions neural networks scaled by massive data and compute power as the route to true AI. Early proponents of deep learning like Geoffrey Hinton and Ilya Sutskever argued that neural networks would eventually surmount their flaws with enough parameters and training, citing the vast complexity of the human brain compared to current models. Opposing this, Gary Marcus, an early and persistent advocate of neurosymbolic AI, argued that deep learning’s inability to understand causality and reason abstractly is inherent and not just a matter of scale. Marcus’s position has long been marginalized within an ecosystem where funding and scientific focus favored purely connectionist methods.

The article traces the roots of AI into two traditions: connectionist neural networks inspired loosely by brain structures, and symbolic AI grounded in formal logic and computational abstractions developed by figures like Alan Turing and John McCarthy. Neurosymbolic AI proposes harmonizing these by embedding symbolic reasoning capabilities within neural systems. Marcus has emphasized three indispensable symbolic elements: algebraic systems (explicit variables and operations), structured symbolic representations (compositional and systematic), and database-like distinctions (individuals vs. kinds) to avoid overgeneralization and hallucinations—persistent problems in neural-only models.

Despite resistance—especially from influential figures like Hinton, who dismissed symbolic integration as misguided—some notable progress using neurosymbolic principles emerged, notably from Google DeepMind’s successes (AlphaGo, AlphaFold) that blend symbolic algorithms with neural components. Still, the broader AI field persisted with the “scale is all you need” ideology, betting on ever-larger language models like GPT-3 and GPT-4. Yet, even with astronomical computational resources (Elon Musk claims Grok 4 employed 100 times the compute of Grok 2), recent benchmarks reveal diminishing returns on pure scaling. Performance plateaus and persistent errors in reasoning, hallucination, and misalignment underscore that simply "scaling up" neural networks falls short of the path to artificial general intelligence (AGI).

What has shifted is the subtle but crucial adoption of symbolic tools in state-of-the-art models. OpenAI’s “code interpreter,” which allows language models to call and execute symbolic Python code, exemplifies neurosymbolic AI in practice—despite industry reluctance to openly label it as such. These models leverage symbolic algorithms explicitly, improving accuracy in tasks requiring structured reasoning, such as solving the Tower of Hanoi puzzle or generating crossword grids—tasks where pure neural networks struggle and hallucinate. This fusion approach enriches the representational and logical capacity beyond what raw pattern-matching in neural nets can achieve.
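For a sense of what "calling symbolic code" means in practice, the Tower of Hanoi puzzle mentioned above has a short, exact recursive solution. The sketch below is the textbook algorithm, shown as the kind of program a model with a code-execution tool could write and run. It is not taken from any lab's actual tooling.

```python
from typing import List, Tuple

def hanoi(n: int, source: str = "A", target: str = "C",
          spare: str = "B") -> List[Tuple[str, str]]:
    """Return the list of (from_peg, to_peg) moves that solves n disks."""
    if n == 0:
        return []
    return (
        hanoi(n - 1, source, spare, target)    # clear the n-1 smaller disks
        + [(source, target)]                   # move the largest disk
        + hanoi(n - 1, spare, target, source)  # restack the smaller disks
    )

moves = hanoi(3)
print(len(moves), moves)
```

The recursion always yields exactly 2^n - 1 moves, with no chance of a hallucinated step. That guarantee is what an explicit algorithm offers over pattern-matching alone.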

Quantitative evidence from recent model launches, such as Grok 4, suggests that the addition of symbolic processing dramatically boosts performance on challenging benchmarks, affirming the decades-long argument for neurosymbolic methods. The diminishing returns from purely data-driven training and the clear gains from integrating symbolic computations illustrate the complementary strengths of these traditions. However, the journey to AGI remains far from complete. Challenges like symbol grounding, reliable spatial reasoning, and constructing dynamic cognitive models “on-the-fly” need deeper research and breakthroughs beyond the current bolt-on approaches.

Sociologically, the article reflects on how investment trends and scientific orthodoxy influenced AI’s trajectory, with the dominant “scaling” narrative favored because it provided a clear, monetizable roadmap for investors and companies. Admitting reliance on symbolic methods complicates this narrative and potentially dilutes the simplistic message that compute alone can solve AI’s challenges. Consequently, neurosymbolic AI’s full potential has been underexplored and underfunded, even as top labs quietly implement hybrid approaches in recent years.

In conclusion, the recent successes of models like o3 and Grok 4 unexpectedly validate the neurosymbolic paradigm long championed by Marcus. These advances underscore the necessity of embracing hybrid systems combining data-driven learning and symbolic reasoning to overcome fundamental AI limitations. The article suggests opening scientific and industrial minds to a broader spectrum of approaches beyond pure deep learning is vital for sustained progress toward AGI. Encouraging transparency, interdisciplinary collaboration, and wider acceptance of neurosymbolic AI may catalyze the next wave of AI innovation.

Key Takeaways:

AI historically split into connectionist (neural net) and symbolic traditions; neurosymbolic AI merges these strengths.

Pure deep learning faces inherent limits in reasoning, abstraction, and causality; symbolic systems provide crucial complementary abilities.

Influential figures opposed neurosymbolic AI, delaying progress and skewing funding toward pure scaling approaches.

Recent models like Grok 4 and OpenAI’s code interpreter feature symbolic reasoning tools integrated with neural networks, improving performance on complex reasoning tasks.

Benchmarks show diminishing returns from scaling compute alone, with symbolic integrations offering significant improvements.

Neurosymbolic AI is not a single method but a set of approaches combining neural nets and symbolic tools; more research needed on integration specifics and unresolved challenges.

Industrial and academic communities would benefit from greater openness to neurosymbolic approaches to foster breakthroughs toward true AGI.

Kimi K2 and when "DeepSeek Moments" become normal

Interconnects • July 14, 2025

Technology•AI•OpenSourceAI•ChineseAI•DeepSeek

The DeepSeek R1 release earlier this year was more of a prequel than a one-off fluke in the trajectory of AI. Last week, a Chinese startup named Moonshot AI dropped Kimi K2, an open model that is permissively licensed and competitive with leading frontier models in the U.S. If you're interested in the geopolitics of AI and the rapid dissemination of the technology, this is going to represent another "DeepSeek moment" where much of the Western world — even those who consider themselves up-to-date with happenings of AI — need to change their expectations for the coming years.

In summary, Kimi K2 shows us three things: HighFlyer, the organization that built DeepSeek, is far from a uniquely capable AI laboratory in China; China is continuing to approach (or has reached) the absolute frontier of modeling performance; and the West is falling even further behind on open models.

Kimi K2, described as an "Open-Source Agentic Model," is a sparse mixture of experts (MoE) model with 1 trillion total parameters (~1.5x DeepSeek V3/R1's 671 billion) and 32 billion active parameters (similar to DeepSeek V3/R1's 37 billion). It is a "non-thinking" model, which means it doesn't generate a long reasoning chain before answering, but it was still trained extensively with reinforcement learning. It posts leading performance numbers in coding and related agentic tasks, earning it many comparisons to Claude 3.5 Sonnet. It clearly outperforms DeepSeek V3 on a variety of benchmarks, including SWE-Bench, LiveCodeBench, AIME, and GPQA, and comes with a base model released as well. It is the new best-available open model by a clear margin.
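The size comparison above is easy to check by hand. The short sketch below only restates the parameter counts quoted in the text; the variable names are illustrative and nothing here comes from Moonshot or DeepSeek code.

```python
# Parameter counts quoted in the text, in billions.
KIMI_K2_TOTAL_B = 1000
KIMI_K2_ACTIVE_B = 32
DEEPSEEK_TOTAL_B = 671
DEEPSEEK_ACTIVE_B = 37

# How much larger K2 is in total parameters.
total_ratio = KIMI_K2_TOTAL_B / DEEPSEEK_TOTAL_B

# Share of each model's parameters that is active for a single token.
k2_active_share = KIMI_K2_ACTIVE_B / KIMI_K2_TOTAL_B
ds_active_share = DEEPSEEK_ACTIVE_B / DEEPSEEK_TOTAL_B

print(f"K2 total params vs DeepSeek V3/R1: {total_ratio:.2f}x")
print(f"K2 active share: {k2_active_share:.1%}")
print(f"DeepSeek V3/R1 active share: {ds_active_share:.1%}")
```

In other words, K2 is the sparser of the two: it holds more parameters in total but switches on a smaller fraction of them per token.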

These facts with the points above all have useful parallels for what comes next: Controlling who can train cutting edge models is extremely difficult. More organizations will join this list of OpenAI, Anthropic, Google, Meta, xAI, Qwen, DeepSeek, Moonshot AI, etc. Where there is a concentration of talent and sufficient compute, excellent models are very possible. This is easier to do somewhere such as China or Europe where there is existing talent, but is not restricted to these localities.

Kimi K2 was trained on 15.5 trillion tokens and has a very similar number of active parameters to DeepSeek V3/R1, which was trained on 14.8 trillion tokens. Better models are being trained without substantial increases in compute; these improvements are referred to as a mix of "algorithmic gains" or "efficiency gains" in training. Compute restrictions will certainly slow this pace of progress for Chinese companies, but they are clearly not a binary on/off bottleneck on training.
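To put a rough number on "without substantial increases in compute", here is a back-of-the-envelope sketch. It assumes the common rule of thumb that training costs about 6 FLOPs per active parameter per token. That rule is an assumption brought in for illustration, not something the article states, and it ignores attention cost, post-training, and failed runs.

```python
# Rule-of-thumb assumption (not from the article): FLOPs ~= 6 * params * tokens.
FLOPS_PER_PARAM_PER_TOKEN = 6

def training_flops(active_params, tokens):
    """Rough pre-training compute estimate using active parameters only."""
    return FLOPS_PER_PARAM_PER_TOKEN * active_params * tokens

# Figures quoted in the text.
k2_flops = training_flops(active_params=32e9, tokens=15.5e12)
deepseek_flops = training_flops(active_params=37e9, tokens=14.8e12)
ratio = k2_flops / deepseek_flops

print(f"Kimi K2 estimate:        {k2_flops:.2e} FLOPs")
print(f"DeepSeek V3/R1 estimate: {deepseek_flops:.2e} FLOPs")
print(f"K2 / DeepSeek:           {ratio:.2f}")
```

Under this crude estimate the two training runs land in the same ballpark, which is consistent with the point that the gains come from efficiency rather than a bigger compute budget.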

The gap between the leading open models from the Western research labs versus their Chinese counterparts is only increasing in magnitude. The best open model from an American company is, maybe, Llama-4-Maverick? Three Chinese organizations have released obviously more useful models with more permissive licenses: DeepSeek, Moonshot AI, and Qwen. A few others such as Tencent, Minimax, Z.ai/THUDM may have Llama-4 beat too but are a half step behind the leading Chinese models on some combination of license and performance.

This comes at the same time that new inference-heavy products are coming online that'll benefit from the potential of cheaper, lower margin hosting options on open models relative to API counterparts (which tend to have high profit margins).

Kimi K2 is set up for a much slower style "DeepSeek Moment" than the DeepSeek R1 model that came out in January of this year because it lacks two culturally salient factors. First, DeepSeek R1 was revelatory because it was the first model to expose the reasoning trace to users, causing massive adoption outside of the technical AI community. Second, the broader public is already aware that training leading AI models is actually very low cost once the technical expertise is built up (recall the DeepSeek V3 $5M training cost number), i.e. the final training run is cheap, so there should be a smaller reaction to similar cheap training cost numbers in the Kimi K2 report coming soon.

Still, as more noise is created around the K2 release (Moonshot releases a technical report soon), this could evolve very rapidly. We've already seen quick experiments spin up slotting it into the Claude Code application (because Kimi's API is Claude-compatible) and K2 topping many nice "vibe tests" or creativity benchmarks. There are also tons of fun technical details that I don't have time to go into, from using a relatively unproven optimizer, Muon, to scaling up the self-rewarding LLM-as-a-judge pipeline in post-training. A fun tidbit to show how much this matters relative to the noisy Grok 4 release last week is that Kimi K2 has already surpassed Grok 4 in API usage on the popular OpenRouter platform.

Later in the day on the 11th, following the K2 release, OpenAI CEO Sam Altman shared the following message regarding OpenAI's forthcoming open model: "we planned to launch our open-weight model next week. we are delaying it; we need time to run additional safety tests and review high-risk areas. we are not yet sure how long it will take us. while we trust the community will build great things with this model, once weights are out, they can’t be pulled back. this is new for us and we want to get it right. sorry to be the bearer of bad news; we are working super hard!"

Many read this as a reactive move by OpenAI to get out from the shadow of Kimi K2's wonderful release and another DeepSeek media cycle.

Even though someone at OpenAI shared that the rumor that Kimi caused the delay for their open model is very likely not true, this is what being on the back foot looks like. When you're on the back foot, narratives like this are impossible to control.

We need leaders at the closed AI laboratories in the U.S. to rethink some of the long-term dynamics they're battling with R&D adoption. We need to mobilize funding for great, open science projects in the U.S. and Europe. Until then, this is what losing looks like if you want The West to be the long-term foundation of AI research and development. Kimi K2 has shown us that one "DeepSeek Moment" wasn't enough for us to make the changes we need, and hopefully we don't need a third.

NotebookLM adds featured notebooks from The Economist, The Atlantic and others

Techcrunch • July 14, 2025

Technology•AI•Research•Notebooks•Google

Google is enhancing its AI-powered research and note-taking assistant, NotebookLM, by introducing a series of featured notebooks. These curated collections, developed in collaboration with various authors, publications, researchers, and nonprofits, aim to provide users with in-depth explorations across a wide range of topics, including health, travel, financial analysis, and more.

The initial lineup of featured notebooks includes:

Longevity advice from Eric Topol, bestselling author of "Super Agers: An Evidence-Based Approach to Longevity"

Expert analysis and predictions for the year 2025 as shared in The Economist's annual report, "The World Ahead"

An advice notebook based on Arthur C. Brooks' "How to Build A Life" columns in The Atlantic

A science fan's guide to visiting Yellowstone National Park, complete with geological explanations and biodiversity insights

An overview of long-term trends in human well-being published by the University of Oxford-affiliated project, Our World In Data

Science-backed parenting advice based on psychology professor Jacqueline Nesi's popular Substack newsletter, Techno Sapiens

The Complete Works of William Shakespeare, for students and scholars to explore

A notebook tracking the Q1 earnings reports from the top 50 public companies worldwide, for financial analysts and market watchers alike

These featured notebooks are designed to offer users working examples of how NotebookLM can be utilized to delve deeper into subjects of interest. Users can read the original source material, pose questions, explore topics, and receive answers that include citations. Additionally, they can listen to pre-generated Audio Overviews or browse the notebook's main themes using the app's Mind Maps feature.

This initiative builds upon the recently launched feature that allows users to publicly share their notebooks with others on the app. Since its debut last month, Google reports that more than 140,000 public notebooks have been shared. The company plans to expand its collection of featured notebooks in the coming months, including more collaborations with The Economist and The Atlantic.

The featured collection of notebooks is rolling out to NotebookLM on the desktop starting today.

🔮 Kimi K2 is the model that should worry Silicon Valley

Exponentialview • Azeem Azhar • July 15, 2025

Technology•AI•MachineLearning•OpenSource•Innovation

In October 1957, Sputnik 1 proved that the USSR could breach Earth’s gravity well and shattered Western assumptions of technological primacy. Four years later, Vostok 1 carried Yuri Gagarin on a single loop around the Earth, confirming that Sputnik was no fluke and that Moscow’s program was accelerating.

In today’s AI, DeepSeek plays the Sputnik role – as we called it in December 2024 – as an unexpectedly capable Chinese open-source model that demonstrated a serious technical breakthrough.

Now AI has its Vostok 1 moment. Chinese startup Moonshot’s Kimi K2 model is cheap, high-performing and open-source. For American AI companies, the frontier is no longer theirs alone.

In today’s analysis, we’ll get you up to speed on Kimi K2, including:

What Kimi K2 is and how it works – its architecture, optimizer and training process, and how it was developed inexpensively and reliably on export-controlled chips.

Why Kimi K2 matters strategically – how it shifts the centre of AI gravity, particularly on efficiency, and why it’s a wake-up call for US incumbents.

What comes next – the implications for open-source versus closed-source, AGI strategy, and China’s growing AI advantage.

What’s so special about Kimi K2?

First off, it’s not a Kardashian 😂. But it is engineered for mass attention. Only here, the mechanism is literal. Like DeepSeek, Kimi K2 uses a mixture-of-experts (MoE) architecture, a technique that lets it be both powerful and efficient. Instead of processing every input with the entire model (which is slow and costly), MoE allows the model to route each task to a small group of specialized “experts.” Think of it like calling in just the right specialists for a job, rather than using a full team every time.

K2 packs one trillion parameters, the largest for an open-source model to date. It routes most of that capacity through 384 experts, of which eight – roughly 32 billion parameters – activate for each query. Each expert hones a niche. This setup speeds the initial pass over text while regaining depth through selective expert activation to deliver top-tier performance at a fraction of the compute cost.
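The routing idea can be sketched in a few lines. This is a minimal illustration of top-k expert selection using the 384 and 8 figures from the text. The random router scores and the softmax over the selected experts are assumptions for the sake of the example; the article does not describe K2's actual gating function, and a real router is a learned layer.

```python
import math
import random

NUM_EXPERTS = 384  # from the text
TOP_K = 8          # from the text

def route(router_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Stand-in router scores for one token (hypothetical; normally produced by a learned layer).
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

chosen = route(logits)
print(f"{len(chosen)} of {NUM_EXPERTS} experts active for this token")
for index, weight in chosen:
    print(f"expert {index:3d} weight {weight:.3f}")
```

Only the chosen experts run for that token, and their outputs are blended using the weights, which is where the compute saving comes from.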

But Kimi K2 didn’t start from scratch. It built directly on DeepSeek’s open architecture.

One of the most beautiful curves in ML history

It’s a textbook case of the open innovation feedback loop, where each release seeds the next and shared designs accelerate the whole field. That loop let Kimi K2 focus on the next innovation: its approach to training.

Training a large language model is like adjusting millions of tiny knobs – each one a parameter that nudges the model toward fewer mistakes. The optimizer decides how large each adjustment should be. The industry standard, AdamW, updates each parameter based on recent trends and gently nudges it back toward zero. But at massive scale, this can go haywire. Loss spikes – sudden jumps in error – can derail training and force costly restarts.
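For readers who want to see the "recent trends" and "nudge back toward zero" parts concretely, here is a minimal single-parameter sketch of one AdamW step. The hyperparameter values are common defaults chosen for illustration, not settings from any model discussed here.

```python
import math

def adamw_step(p, g, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update for a single scalar parameter.

    p: parameter, g: gradient, m/v: running averages, t: step count (from 1).
    """
    # "Recent trends": running averages of the gradient and its square.
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    # Bias correction, which matters most during the first steps.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Decoupled weight decay: the gentle nudge back toward zero.
    p = p - lr * weight_decay * p
    # Adaptive step: large where gradients are consistent, small where they are noisy.
    p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
    return p, m, v

# Example: a few steps on the toy loss 0.5 * p**2, whose gradient is p.
p, m, v = 1.0, 0.0, 0.0
for t in range(1, 4):
    p, m, v = adamw_step(p, g=p, m=m, v=v, t=t, lr=0.1)
    print(f"step {t}: p = {p:.4f}")
```

Note that the step size depends on the ratio of the two running averages, not on the raw gradient, which is part of why behavior at very large scale can be hard to predict.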

Moonshot’s MuonClip optimizer introduces two innovations to improve the training and stability of AI systems.

First, it adds “second-order” insight, meaning it doesn’t just look at how the model is learning (via gradients), but also how those gradients themselves are changing. This helps the model make sharper, more stable updates during training to improve both speed and reliability.

Second, it adds a safety mechanism called QK-clipping to the attention mechanism. Normally, when the model calculates how words relate to each other (by multiplying ‘query’ and ‘key’ weights), those values can sometimes become too large and destabilize the system. QK-clipping caps those scores before they spiral out of control, acting like a circuit-breaker to keep the model focused and stable.
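One way to picture the circuit-breaker is sketched below. It assumes the cap is enforced by shrinking the query and key weight matrices whenever the largest score exceeds a threshold, here called tau. The threshold value, the even split of the shrink factor across both matrices, and the omission of the usual scaling by head dimension are all assumptions for illustration, not Moonshot's implementation.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def max_logit(x, w_q, w_k):
    """Largest query-key dot product over all token pairs."""
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    return max(sum(qi * ki for qi, ki in zip(q_row, k_row))
               for q_row in q for k_row in k)

def qk_clip(x, w_q, w_k, tau):
    """Rescale the query/key weights so the largest score does not exceed tau."""
    peak = max_logit(x, w_q, w_k)
    if peak <= tau:
        return w_q, w_k  # scores already within bounds, nothing to do
    # Scores are bilinear in the two matrices, so scaling each by sqrt(tau / peak)
    # scales every score by tau / peak.
    scale = math.sqrt(tau / peak)
    return ([[scale * w for w in row] for row in w_q],
            [[scale * w for w in row] for row in w_k])

# Toy example: two tokens, two dimensions, deliberately large weights.
x = [[3.0, 1.0], [1.0, 2.0]]
w_q = [[4.0, 0.0], [0.0, 4.0]]
w_k = [[5.0, 0.0], [0.0, 5.0]]

print("max score before:", max_logit(x, w_q, w_k))
w_q_clipped, w_k_clipped = qk_clip(x, w_q, w_k, tau=100.0)
print("max score after: ", max_logit(x, w_q_clipped, w_k_clipped))
```

Because the weights themselves are rescaled, the cap carries over to later steps instead of being reapplied to every score individually.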

The result is “[o]ne of the most beautiful loss curves in ML history,” as AI researcher Cedric Chee put it. Training runs longer and more reliably, at an unprecedented scale for open-source.

This would have unlocked massive compute savings. Research from earlier this year estimates that Muon optimizers are roughly twice as computationally efficient as AdamW. This would have been a major help with export controls. Moonshot likely had to train K2 on compliant A800 and H800 hardware instead of the flagship H100s. The training ran on more than 15.5 trillion tokens, roughly 50x GPT-3’s intake, without a single loss spike, catastrophic crash or reset. Given this, training was likely relatively inexpensive. It probably cost in the low tens of millions of dollars.

Beyond its architecture and optimizer, Kimi K2 was trained with agentic capabilities in mind. Moonshot built simulated domains filled with real and imaginary tools, then let competing agents solve tasks within them. An LLM judge scored the outcomes, retaining only the most effective examples. This taught K2 when to act, pause, or delegate. Even without a chain-of-thought layer, where the model generates intermediate reasoning steps before answering, the public Kimi-K2-Instruct checkpoint performs impressively on tool use, agentic, and STEM-focused benchmarks, matching or exceeding GPT-4.1 and Claude 4 Sonnet. On a quite different note, it also ranks as the best short-story writer.

Artificial Analysis notes that Kimi K2 is noticeably more verbose than other non-reasoning models like GPT-4o and GPT-4.1. In their classification it sits between reasoning and non-reasoning models. Its token usage is up to 30% lower than that of Claude 4 Sonnet and Opus in maximum-budget extended-thinking modes but nearly triple that of both models when reasoning is disabled.