Hi from even deeper in July (sending on 1 August). Here are additional July readings worth capturing. Andrew is back so we discuss them in the vlog above. Here are the things that would have appeared in the past week had we been publishing.

Best

Keith

Contents

Essays

Venture Capital

European Weakness

AI

As Anthropic goes, so goes the generative AI trade, says Big Technology's Alex Kantrowitz

a16z GP, Martin Casado: Anthropic vs OpenAI & Why Open Source is a National Security Risk with China

Balaji Srinivasan: How AI Will Change Politics, War, and Money

Iconiq set to lead $5bn funding round for AI start-up Anthropic

OpenAI’s IMO Team on Why Models Are Finally Solving Elite-Level Math

Pew Study: Google Users Click Less When AI Summaries Appear in Search Results

New AI architecture delivers 100x faster reasoning than LLMs with just 1,000 training examples

Tesla signs a $16.5 billion chip contract with Samsung Electronics

Chinese Tech

Media

IPO

Education

Interview of the Week

Regulation

M & A

Essays

A Summer of AI in San Francisco

Joe Lonsdale • Joe Lonsdale • July 21, 2025

Technology•AI•EnterpriseSoftware•Automation•Innovation•Essays

I’ve been spending a lot of time out in San Francisco this summer with our 8VC team, and it's been energizing to meet truly impressive founders building at the frontier of AI. At 8VC, we always evaluate companies by first asking: Why now? The best answers highlight clear technological shifts that unlock entirely new products & solutions that were previously impossible but are now strategically necessary.

Over the past decade, many enterprise SaaS companies succeeded by focusing narrowly and building products for specific verticals, niche user segments, or overlooked workflows. This approach has produced great businesses which thrive precisely because they are specialized, capturing vertical data and improving their workflows.

Recent advances in AI like document understanding, reliable legacy software automation, conversational agents, and structured reasoning dramatically expand the range of tasks software can execute directly. Instead of merely assisting workers, software today can autonomously work alongside them, managing complex workflows. Founders are now confidently transforming how entire departments work.

Our Frameworks

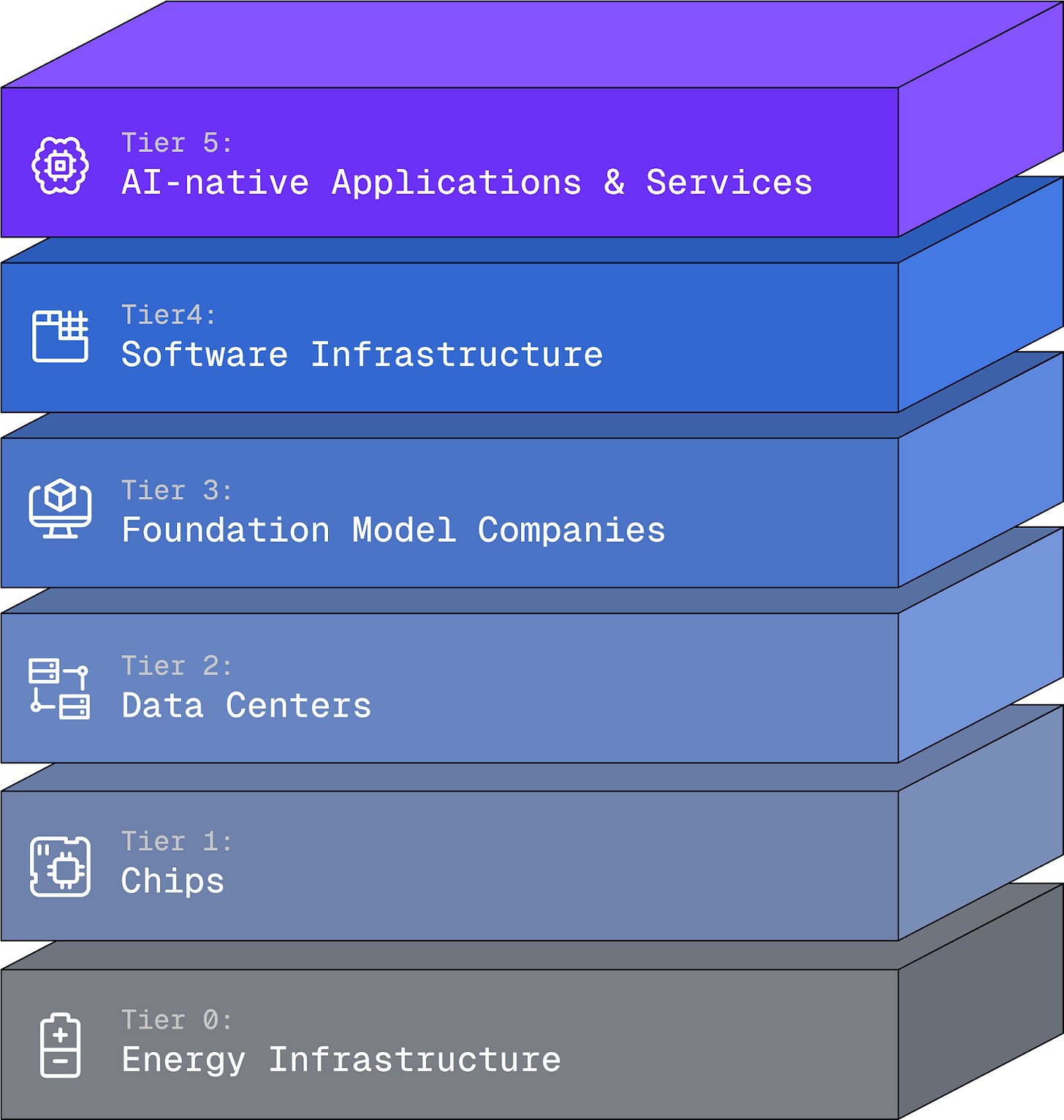

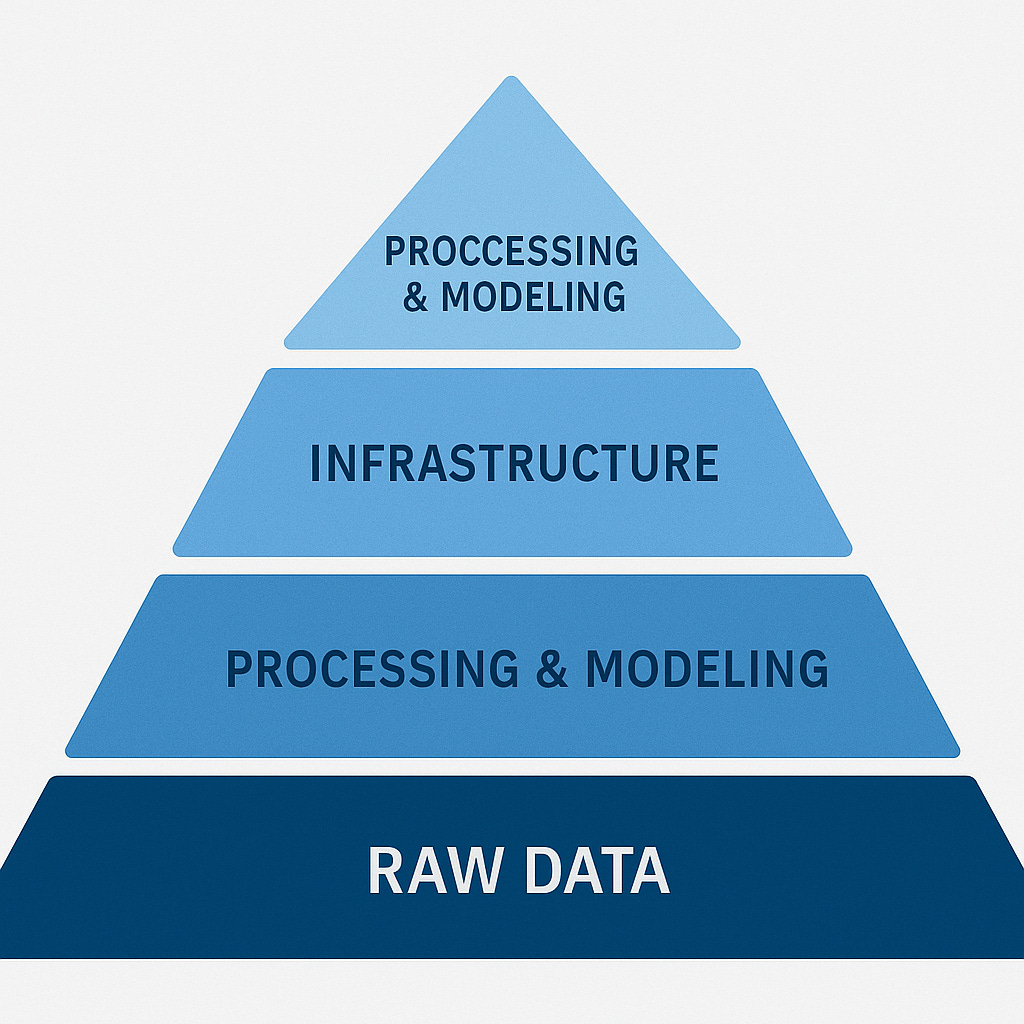

When people ask about investing in AI, we share a simple framework to help them understand all the options, and where value accrues in the AI ecosystem, organized into six tiers, 0-5:

Tier 0: Energy Infrastructure – critical to support and scale the entire AI ecosystem.

Tier 1: Chips – the fundamental hardware, GPUs, and specialized processors powering AI compute.

Tier 2: Data Centers – the physical infrastructure and computing environments that host and scale AI workloads.

Tier 3: Foundation Model Companies – organizations building large language models and AI capabilities, such as xAI, OpenAI, Anthropic, Google, and Meta.

Tier 4: Software Infrastructure – tools and platforms that enable the deployment, orchestration, monitoring, and management of AI models (e.g., vector databases, orchestration platforms, model hosting services, Palantir).

Tier 5: AI-native Applications & Services – use-case-specific software that directly automates and executes entire workflows, moving beyond simple assistance, and full-stack services offerings that close the loop when AI can’t solve the task on its own. Often competing directly with existing businesses in the services economy (e.g. healthcare billing agencies).

The companies in Tier 3 making foundation models — like OpenAI, xAI, Anthropic, etc — are able to compete in the basics of Tier 4 (software infrastructure): vector stores, tool calling, memory, and other software fundamentals. This makes it difficult to compete in pure infrastructure. We will still fund heavy lifts by the best teams in areas like developer tooling, but the strength of the foundation model companies means that our attention climbs up to Tier 5 and AI-native apps.

Here, software lives inside the workflow and absorbs proprietary data. Companies create a beautiful flywheel as each completed task tightens feedback loops and raises switching costs. Opportunities unlocked in this shift are attracting top talent.

New categories of products & companies are possible because of new fundamental advancements at Tier 3 and Tier 4.

Better Doc Processing - Until recently, extracting structured information from unstructured documents was either unreliable or limited to simple tasks. Today’s LLMs can consistently read dense legal contracts, financial statements, medical records, and operational logs, not just to pull basic information but also to summarize key insights, categorize documents intelligently, and even generate fully formed, contextually appropriate drafts.

Intelligent Browser Automation - A significant share of business-critical data and tasks resides behind non-API, legacy systems. Historically, automating these interactions meant fragile web scraping or manual effort. Modern AI-driven browser automation products like Kaizen have become robust and reliable enough to safely extract data, navigate complex user interfaces, and complete workflows end-to-end with minimal human oversight.

Voice and Conversational Agents - Previously, interactions requiring nuanced conversational capabilities such as customer support calls, internal employee assistance, or vendor negotiations resisted effective automation. New voice and text agents can follow sophisticated multi-step instructions, adapt conversationally to specified edge cases, and handle critical operational tasks directly, reducing the need for human intermediaries.

Each of these capabilities represents a powerful step forward on its own -- combined, they enable new shapes of automation. Instead of helping employees carry out workflows, these new systems can directly execute them. They become agents within an organization, performing tasks, making decisions, and driving meaningful productivity gains at scale.

These breakthroughs unlock vast areas of greenfield opportunity. Tasks that once demanded armies of specialists -- or were simply skipped -- are now fair game for intelligent software. Workflows that used to be manual, costly, or error-prone can finally run at machine speed and accuracy.

When we look at investment opportunities at 8VC, we begin by looking closely at what these new capabilities allow software to achieve. Categories defined by:

High volumes of repetitive, document-centric tasks;

Workflows reliant on legacy interfaces and portals;

Knowledge-heavy tasks requiring judgment and decision-making, but where process is in place or extractable.

What's working?

One of our clearest proofs that the world has changed is our portfolio company Cognition, which rethinks how software engineering work gets done. Software development has long relied on humans for every high-judgment step – reading requirements, creating tickets, writing and reviewing code. Tools shaved minutes, never hours or days. Cognition’s AI agent, named Devin, now reads a spec, writes code, opens a pull request, and manages feedback. Some of the largest & fastest-moving software teams run Devin in production. The result is not incremental productivity; it is a new unit of work that no longer needs a person.

We’re also backing AI Applications that leverage these new Tier 3 capabilities to systematically replace and transform entrenched workflows across major enterprise spend categories.

Outset rethinks qualitative user research. Their system moderates user interviews, asks follow-ups, and synthesizes insights in real time. What would take a research team days or weeks can now be done in a single afternoon. AI interviewers can run for < $1 a session and costs on multi-modal inference continue to drop each model cycle.

Glimpse starts with retail deduction disputes – a painful, error-prone process that most brands handle manually or not at all. They automate the full dispute process, then expand into adjacent workflows like trade promotions and financial ops. These deductions can be 20-30% of a brand’s revenue, and 10% of those are winnable. AI can take back a whopping 2-3% of GMV, which changes the lives of these low margin CPG businesses.

Ground Control focuses on regulated manufacturing, starting with first-article inspections and building into other core document workflows in the back office. Every single regulated part needs these reports, and delayed reports can hold up revenue for weeks, sticking a profitable shop underwater.

Tezi is building an AI-native recruiting platform. Their system sources candidates, engages them, evaluates fit, and moves them through the funnel, executing an entire recruiting workflow with minimal human input. Global recruiting spend burns > $200B a year while software only captures low single digit % of that. Tezi today can shave off 80% of recruiting labor hours in the menial aspects of these workflows.
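The Glimpse figures above can be checked with quick arithmetic. This is a minimal sketch, assuming the 10% winnable rate applies uniformly across the 20-30% deduction range, and that "revenue" and "GMV" refer to the same base (the essay uses both terms); the function name is illustrative only.

```python
def recoverable_share(deduction_rate: float, winnable_rate: float) -> float:
    """Share of revenue that could be recovered by winning deduction disputes."""
    return deduction_rate * winnable_rate


# Deductions of 20-30% of revenue, of which 10% are winnable (figures from the essay).
low = recoverable_share(0.20, 0.10)
high = recoverable_share(0.30, 0.10)
print(f"Recoverable: {low:.0%} to {high:.0%} of revenue")
```

For a low-margin consumer goods brand, a recovery of that size can be comparable to its entire net margin, which is why the essay calls it life-changing.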

Broad-based automation is also central in our AI Services investments, where well-designed AI systems replace many functions and increase productivity by a multiple in legacy businesses.

Sequence Holdings acquires and transforms IT services businesses by embedding AI into core code generation workflows and ops, reshaping how managed IT services scale and deliver value.

Arcos reinvents transactional law practice as a technology-first legal platform, dramatically reducing transaction timelines and increasing margins. This requires a thoughtful understanding of how a workflow actually operates end-to-end, and the many areas to seamlessly involve a human with full context, then share any new context to automate rote work.

Although powerful AI models have made it easier to prototype impressive demos, durable companies in this space are doing something harder: deeply embedding into the real structure of work.

How do we create durable value?

We continue to reference our lessons from Palantir (which we wrote a bit about in The AI Services Wave), where understanding the ontology of a domain was essential to designing effective software. Mapping out the workflow – what can be automated, what should be augmented when model capabilities improve, and where humans still need to stay in the loop – is the foundational step. When done well, this informs not only what the product should do, but also where the moat will come from.

…

The Satya of Satya’s Layoff Memo

Om • July 26, 2025

Business•Management•AI•Layoffs•Transformation•Essays

In his recent memo, Microsoft CEO Satya Nadella addressed the company's decision to lay off approximately 9,000 employees, despite the company's strong financial performance. He acknowledged that these layoffs have been "weighing heavily" on him, emphasizing the emotional toll of such decisions. (cnbc.com)

Nadella framed the layoffs within the context of Microsoft's strategic shift towards artificial intelligence (AI). He described the current transformation as "messy but exciting," likening it to the technological shifts of the early 1990s. This period of change, he noted, is "dynamic, sometimes dissonant, and always demanding," but also presents an opportunity for the company to "shape, lead through, and have greater impact than ever before." (cnbc.com)

The CEO highlighted the necessity of adapting to the evolving tech landscape, stating that "progress isn't linear." He emphasized the importance of reimagining Microsoft's mission for the AI era, focusing on empowering individuals through accessible AI solutions. Nadella envisions a future where AI serves as an "intelligence engine," enabling users to create their own tools and solutions. (cnbc.com)

Despite the layoffs, Nadella reassured employees that Microsoft's overall headcount remains "basically flat," indicating that the company continues to hire new talent. He acknowledged the challenges of this transition but urged the workforce to embrace the changes, emphasizing the need for a "growth mindset" to navigate the complexities of transformation. (cnbc.com)

In summary, Nadella's memo sought to contextualize the layoffs within Microsoft's broader strategic vision, emphasizing the company's commitment to AI-driven innovation and the importance of adaptability in a rapidly changing industry.

Ads are inevitable in AI, and that's okay

Strange loop canon • July 28, 2025

Technology•AI•Advertising•BusinessModel•LLMs•Essays

We are going to get ads in our AI. It is inevitable. It’s also okay.

OpenAI, Anthropic and Gemini are in the lead for the AI race. Anything they produce also seems to get copied (and made open source) by ByteDance, Alibaba and DeepSeek, not to mention Llama and Mistral. While the leaders have carved out niches (OpenAI is a consumer company with the most popular website, Claude is the developer’s darling and wins the CLI coding assistant), the models themselves are becoming more interchangeable amongst them.

One solution is to go deeper and create product variations that others don’t, such that people are attracted to your offering. OpenAI is trying with Operator and Codex, though it’s unclear if that’s a net draw, rather than a cross sell for usage.

Another option is to introduce new capabilities that will attract users. OpenAI has Agent and Deep Research. Claude has Artifacts, which are fantastic. Gemini is great here too, despite its reputation: it also has Deep Research, but more importantly it lets you talk directly to Gemini Live, show yourself on a webcam, and share your screen. It even has Veo 3, which can generate videos with sound today.

The models are decreasing in price extremely rapidly. They’ve fallen by anywhere from 95 to 99% or more over the last couple of years. This hasn’t hit the revenues of the larger providers because they’re releasing new models rapidly at higher-ish prices and also seeing extraordinary growth in usage.

What could happen is that the training gets expensive enough that these half dozen (or a dozen) providers decide enough is enough and say we are not going to give these models out for free anymore.

Now, by itself this is fine. Because instead of it being a SaaS-like high margin business making tens of billions of dollars it’ll be an Amazon-like low margin business making hundreds of billions of dollars and growing fast. A Costco for intelligence.

There’s another option, which is to bring the best business model we have ever invented into the AI world. That is advertising.

It solves the problem of differential pricing, which is the hardest problem for all technologies but especially for AI, which will see a few providers who are all fighting it out to be the cheapest in order to get the most market share while they’re trying to get more people to use it. And AI has a unique challenge in that it is a strict catalyst for anything you might want to do!

For instance, imagine if Elon Musk is using Claude to have a conversation, the answer to which might well be worth trillions of dollars of his new company. If he only paid you $20 for the monthly subscription, or even $200, that would be grossly underpaying you for the privilege of providing him with the conversation. It’s presumably worth 100 or 1000x that price.

Or if you're using it to just randomly create stories for your kids, or to learn languages, or if you're using it to write an investment memo, those are widely varying activities in terms of economic value, and surely shouldn't be priced the same. But how do you get one person to pay $20k per month and another to pay $0.20? The only way we know how to do this is via ads.

And if you do it, it helps in another way: it lets you open up even your best models, even if rate limited, to a much wider group of people. Subscription businesses are a flat edge that only captures part of the pyramid.

Long‑term cost curves suggest another 3× drop in cash cost per token by 2027. Not just for product sales, but even for news recommendations or even service links.

What would it look like? The ads themselves could be AI-generated with better recommendations, contain expositions from products or services or content engines, direct purchase links, upsell own products, or have a second simultaneous chat about the existing chat.

A large part of purchasing already happens via ChatGPT or at least starts on there. Conversion rates are likely to be much higher than even social media, since this is content, and it’s happening in an extremely targeted fashion.

I predict this will work best for OpenAI and Gemini. They have the customer mindshare. And an interface where you can see it, unlike Claude via its CLI.

Put all these together and I feel ads are inevitable. I also think this is a good thing. Whether it’s ads or not, every company wants you to use their product as much as possible. That’s what they’re selling!

Now, a caveat. If the model providers start being able to change the model output according to the discussion, that would be bad. But I honestly don't think this is feasible. We're still in the realm where we can't tell the model to not be sycophantic successfully for long enough periods.

So if we somehow created the ability to perfectly target the output of a model to make it such that we produce tailored outputs that would not corrupt the output quality much and guide people towards the products and services they might want to advertise, that would constitute a breakthrough in LLM steerability!

Instead what’s more likely is that the models will try to remain ones people would love to use for everything, both helpful and likeable. And unlike serving tokens at cost, this is one where economies of scale can really help cement an advantage and build an enduring moat. The future, whether we want it or not, is going to be like the past, which means there’s no escaping ads.

Being the first name someone recommends for something has enduring consumer value, even if a close substitute exists.

The AI Search Tipping Point

Tomtunguz • July 21, 2025

Technology•AI•Search•ConsumerBehavior•MarketTrends•Essays

OpenAI receives on average 1 query per American per day.

Google receives about 4 queries per American per day.

Since 50% of Google search queries have AI Overviews, this means at least 60% of US searches are now AI.
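The 60% figure follows from simple arithmetic. This is a minimal sketch, assuming every OpenAI query counts as an AI search and treating the per-capita rates above as exact; the function and parameter names are illustrative only.

```python
def ai_search_share(openai_per_day: float, google_per_day: float, overview_rate: float) -> float:
    """Fraction of combined daily queries that involve AI."""
    # All OpenAI queries plus the Google queries that show an AI Overview.
    ai_queries = openai_per_day + google_per_day * overview_rate
    total_queries = openai_per_day + google_per_day
    return ai_queries / total_queries


# 1 OpenAI query and 4 Google queries per American per day; 50% of Google queries show AI Overviews.
share = ai_search_share(1.0, 4.0, 0.5)
print(f"AI share of searches: {share:.0%}")  # AI share of searches: 60%
```

That is 3 AI-touched queries out of 5 per person per day.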

It’s taken a bit longer than I expected for this to happen. In 2024, I predicted that 50% of consumer search would be AI-enabled.

But AI has arrived in search.

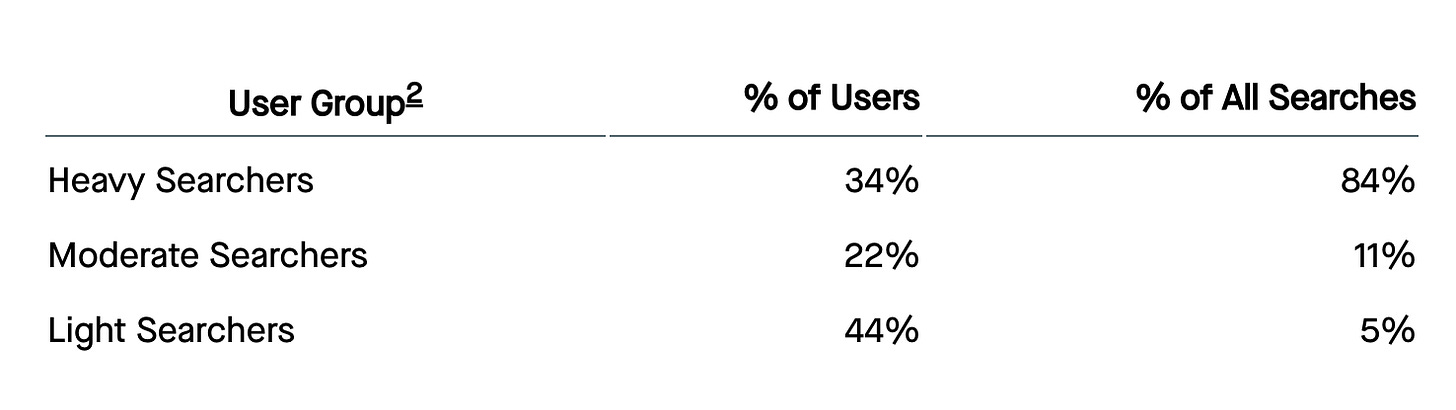

If Google search patterns are any indication, there’s a power law in search behavior. SparkToro’s analysis of Google search behavior shows the top third of Americans who search execute upwards of 80% of all searches - which means AI use isn’t likely evenly distributed - like the future.¹

Websites & businesses are starting to feel the impacts of this. The Economist’s piece “AI is killing the web. Can anything save it?” captures the zeitgeist in a headline.

A supermajority of Americans now search with AI. The second-order effects from changing search patterns are coming in the second half of this year & more will be asking, “What Happened to My Traffic?”

AI is a new distribution channel & those who seize it will gain market share.

1. William Gibson saw much further into the future!

2. This is based on a midpoint analysis of the SparkToro chart, is a very simple analysis, & has some error as a result.

The Slow Death of Social Networks

Salsop • Stewart Alsop • July 23, 2025

Technology•Web•SocialNetworks•UserEngagement•SocialMediaTrends•Essays

My son recently told me a startling piece of news, startling to me at least. Did you know the major social networks are downgrading posts that include links out of the network? Facebook, Threads, Instagram, LinkedIn, and X are trying to keep their users in their domain and not lose them to linked-out sites. In a way, they are putting walls up around their gardens. (If you don’t understand the reference, see Closed System.)

Some of you may know that son Stewart III and I are producing a podcast called Stewart Squared, which is a weekly discussion of how the history of technology helps predict the future of AI. We’ve produced more than 40 episodes, some with other notable figures from my history in the computer industry, people like Steve Case (AOL), Bill Gross (IdeaLab), Vince Kadlubek (Meow Wolf), and others, including my partner in our new venture capital firm, Jim Ward.

But Stewart Squared has also turned into a shared discovery process for the two of us to understand how to promote a podcast on the modern Internet. We’ve been trying to create more visibility for the podcast, and that process now includes a host of non-intuitive learnings about how to navigate web browsers, social networks and other ways to make yourself more visible.

My first learning, as noted, is that social networks today are jealously guarding the audience they have already built by discouraging users from leaving the network. Stewart III has been producing his own podcast, Crazy Wisdom, since 2017 and has recorded 473 episodes, so he knows a lot more than I do about this. He told me that I couldn’t post links, since the network would reduce the visibility and reach of any posts that link out.

He also told me to stop using hashtags, an early phenomenon of Twitter that allowed users to follow topics rather than people. Apparently, clicking on hashtags is not conducive to reviewing what is in your feed and engaging with the social network itself. Twitter has put out an advisory that it would downgrade posts with hashtags.

This is making it really hard for new creators (me) to get started. Everything I learned about social networks back in the heyday when they were new and exciting is now wrong. An astute observer might think that, strategically, it is stupid to try to build walled gardens around internet businesses, since the very purpose of the internet is to create an open, accessible network that puts control in the user’s hands. At some level, the existence of smartphone apps makes it seem like social networks are walled gardens, since the user can only access the network through the app.

What is the difference between using the app and using the website? I have been in the habit of using social networks’ websites, not their apps. So it’s only recently that I realized that they are trying to “trap” their users inside the apps.

That led me to wonder exactly what is going on with social networks. I was an early and enthusiastic adopter of Twitter, Facebook, Instagram, and Pinterest (even Orkut and Google Plus and others I don’t even remember). I still sign up for anything new, including Threads most recently. I was slow to sign up for Snapchat and TikTok and haven’t engaged with either except occasionally to look at posts that seem interesting; perhaps that’s a reflection of my age and home cohort as a baby boomer since I don’t easily get into meaningless videos, which seem to be the core user experience of Snapchat and TikTok.

Fact is I’m bored by most social networks. I’ve stopped using Facebook to post anything, and only look at posts from friends. I use Instagram to post cool photos and foodie things, but I don’t get much traction. And I’m permanently pissed at Meta for turning it into an advertising machine, mainly through Reels and ads, rather than its original intent, which was to share photos with friends. X/Twitter is supposed to be a cesspool, although I don’t see that stuff in my feed and think most people who complain about it are actually looking for garbage so the algorithm delivers more of it. As with Facebook, I only look at X when someone links to a post. (Indeed, X says I have >13,100 followers, but I must assume most of them have stopped using X or died, since I get very little reaction to the few posts I have made in the past few years, with or without links or hashtags. That may also be a function of X making you pay for a membership, which I haven’t done, to get your posts read and get new followers.)

Funnily enough, I actually use the Threads app when I want to turn off my brain and be entertained; I’ve learned from using Threads that Meta actually does know how to run a social app (surprise, surprise). Threads quickly figured out that I live in New Mexico and like food and art. Indeed, recent news indicates that Threads has almost as many daily active users as X. When they discover how to pump it full of ads, I’ll probably stop using that one too.

That’s my personal experience: From hours a day 10 years ago to 30 minutes or less a day now. Is that reflected in overall use of social media? Are other people as bored as I am? The answer is: yes, sort of. U.S. monthly active users of Facebook have declined from more than 200M to 160M in the past three years. But DAUs have stayed roughly the same, which probably means that really active users continue to be active but less active users (like me) tend to fade away. The most active users may well be the kind that post a lot of junk, which certainly doesn’t increase the quality of interaction.

Pinterest seems like a dead zone, although it is valued at $25B. Snap seems to be trying to follow Meta into AR glasses, but is valued at $16B. Meta’s social networks (Facebook, Instagram, WhatsApp, and Threads) are clearly the dominant force in social media and produce a ton of cash which the company is using to build its metaverse products, Oculus VR, Orion AR, and Ray-Ban/Oakley AI glasses. The company is valued at $1.8T, partly based on its ability to generate lots of profitable revenue from its social networks. No one knows what TikTok is worth, although its remarkable growth worldwide appears to position it to take over the lead in social networks soon, so it is likely to be worth a lot.

My point being: Social networks aren’t a growth business anymore, with a stable base of billions of users worldwide, and they aren’t serving the original purpose, which is embedded in the name “social networks”. So perhaps it makes business sense to try to keep users inside semi-walled gardens in order to preserve value in the existing businesses as long as possible. However, it sure doesn’t seem the right way to treat your users, particularly since you are using their engagement to sell advertising. The evolution of social networks from fascinating ways to connect and engage with friends into massive businesses trying to eke out as much profit as possible will ultimately train users to lose interest and fade away. Slowly, but inevitably leading to the death of social networks.

10 Things I Wish I Knew Before Vibe Coding

Saastr • Jason Lemkin • July 25, 2025

Technology•Software•Vibe Coding•AI Agents•Software Development Process•Essays

If you follow us on X or LinkedIn, you may have seen we’re deep into vibe coding. Specifically, trying to build basic B2B apps (for real) using the leading vibe coding platforms like Lovable, Replit, Bolt.new, etc.

After being deep into vibe coding a somewhat complex (but not super complex) B2B product for the SaaStr community, I’ve already learned a lot.

These platforms are very cool, but super quirky. The “AI agents” that build the apps are so powerful but they are also … unpredictable. And they get more and more unpredictable the deeper you get into a project. That’s what you need to learn.

Here are the 10 things I wish I knew before I started.

1. The AI Agent Will Often Be Wrong. Deal With It.

AI agents are wrong 30-40% of the time on anything complex. API integrations, edge cases, architectural decisions — they’ll give you confident-sounding answers that are just wrong.

What to do: Always ask for multiple approaches. “Give me three ways to build this algorithm” became my default. Push back on suggestions that don’t sound right. And if it said it’s done something — verify that it actually has. Don’t move on.

Stop treating AI suggestions like they are … right. They’re hypotheses to test.

2. Slow It Down. Work on Small Pieces.

Vibe coding feels fast, so you want to keep going. “Add payments! Add user roles! Add notifications!” This is a trap.

I tried to build an entire dashboard in one conversation. I ended up with 200 lines of code that technically ran but were impossible to debug or extend. And nothing on the dashboard really worked.

What works: 15-20 minute focused sprints. Build one thing, test it, move to the next. If it takes more than 30 minutes to build and test, it’s too complicated. Even any feature that takes longer than a few minutes to build is likely too complicated. Break it down further.

3. The Agent Will Change Stuff Without Asking. Accept It.

Your code will constantly evolve. Variable names will frustratingly change. Functions get refactored. Architecture shifts. Without you ever asking or even knowing.

At first, it will be funny. You’ll log back in, and your app will have … changed. You’ll just fix it. But then as you go further and further into a project, it’s less funny. Because as you go deeper into any project with any level of complexity, there will be so many moving pieces in flux. This is why so many folks abandon their vibe coding project 70% of the way in. At that phase, it’s now changing way too many things you thought were done or, more likely, close to done. You just have to learn to work around this.

Vibe coding may well be slower and less efficient than normal coding after you are about 70% of the way in. But if you aren’t a coder, you just need to live with that.

Reality check: As long as functionality works and tests pass, let it evolve. The AI often improves code in ways you wouldn’t think of. If it’s done enough, don’t touch it again.

4. Don’t Ask More Than Twice. Start Fresh Instead.

The pattern I see constantly, and that I’ve been ‘guilty’ of, even again today: You ask the AI agent to fix a bug. The fix doesn’t work. You ask again. It still doesn’t work. So you ask a third time… and it starts to go off the rails. Deleting things. Making up test results. Inserting “demo” user data that hides the fact the function just doesn’t work.

Stop. Don’t ask an AI agent 3 times to fix something, ever. The context window gets polluted with failed attempts. The AI builds on false assumptions. No matter what, it’s just not going to work if you have to prompt the AI agent 3 times. You’ll end up in a sea of endless bugs you can’t fix.

Rule: Never ask the same agent to solve the same problem more than twice. Start a new conversation. Describe the problem from scratch. Approach it in a simpler way, if possible.
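The rule above can be made mechanical. This is a minimal sketch of one way to track it, not a feature of any vibe coding platform; `FixTracker`, the action strings, and the problem label are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field

# The "never more than twice" rule from the text.
MAX_ATTEMPTS_PER_CONVERSATION = 2


@dataclass
class FixTracker:
    """Counts fix requests per problem within one conversation (hypothetical helper)."""

    attempts: dict[str, int] = field(default_factory=dict)

    def next_action(self, problem_id: str) -> str:
        """Return 'ask_agent' while under the limit, otherwise 'start_fresh'."""
        count = self.attempts.get(problem_id, 0)
        if count >= MAX_ATTEMPTS_PER_CONVERSATION:
            return "start_fresh"
        self.attempts[problem_id] = count + 1
        return "ask_agent"

    def reset(self) -> None:
        """Call when a new conversation begins, so the counts start over."""
        self.attempts.clear()


tracker = FixTracker()
actions = [tracker.next_action("login-bug") for _ in range(3)]
print(actions)  # ['ask_agent', 'ask_agent', 'start_fresh']
```

On `start_fresh`, the idea is to open a new conversation, describe the problem from scratch, and call `reset()` so the polluted context is left behind.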

5. Some Stuff Is Harder Than It Looks. Plan Accordingly.

Email integration, OAuth, payments: they look simple until you hit SPF records, refresh tokens, and webhook validation. A lot of this stuff just doesn’t work reliably today. Not unless you get close to getting into the code yourself. The AI will give you a basic implementation that works in dev but fails in production.

I actually don’t have all the answers here other than be aware many things that seem to work won’t actually work. And use the ‘hardpoints’ in your vibe coding platform. Use their authentication, their database, their core choice for email, everything core you can vs. writing your own.

The platform I’ve used most desperately wants to use SendGrid for email by default, for example. Every time I lock down Resend instead, it fights me, one way or another. Giving up and using the default vendor it wants (SendGrid) is the right choice for 95% of us.

Budget accordingly: Anything involving external systems takes 3-5x longer than the AI estimates. Build the simplest happy path first, then iterate on edge cases. Use the default “hard points” like OAuth, email, etc. that the vibe platform provides. Don’t import your own unless you are 100% sure you really need to.

6. Don’t Build an App That’s Hard to Test

When you’re moving fast, you skip testing. “I’ll just click through it.” This doesn’t scale. Not even past a few days of vibe coding.

By hour 20 or so, you’ll spend more time testing than building.

Solution: Design for testing from day one. Unit tests, API testing, reusable test data, easy rollbacks. I spend 60% of time on testing infrastructure, 40% building features. 40% max.

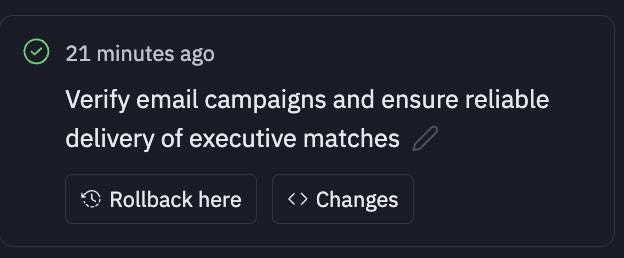

7. Just Roll Back. Don’t Try to Fix Everything.

When you introduce a bug, resist the urge to debug in place. Instead ask yourself: “Should I just roll back?”

Vibe coding platforms are really, really good at rolling back. And they automatically make save points, just like video games. So before you debug something that isn’t working, ask yourself if instead you should just … roll back. Get used to rolling back multiple times a session. It works elegantly, so take advantage.

Strategy: When your gut tells you something isn’t going to get fixed, just roll back. Right then and there. When something breaks, you always have a recent checkpoint.

8. Accept Your App Will Never Be 100% Stable Until Production. And Probably Not After That If You Keep Vibe Coding It.

This is a tough one, and one I didn’t fully get at first. AI will keep suggesting “improvements” that introduce bugs. Features that worked yesterday break today. The further you go down a path with any complex app, the more this compounds. If your app is too complex, honestly, it will never be stable. If it changes 1-2 things per session, you can work around that. 20? It’s hopeless.

I used to try for 100% stability. These platforms are evolving and we may get there. But it’s not practical, let alone possible, today. Your AI Agent will just keep changing things.

Reality: Launch at 80% stability when the core user journey works. Real user feedback beats perfect code. And make the app as simple as possible. Complex apps are not ready for prosumer vibe coding yet. Not really.

9. You May Want to Just Plan to Rebuild Entire Pages of Your App. Probably All of Them.

Sometimes it’s faster to rebuild from scratch than debug existing code. Because AI agents generate code quickly, rebuilding often takes less time than debugging complex issues. Don’t fear rewriting every single page of your app.

When to rebuild: If you’ve been debugging the same component for over an hour, start fresh. Ask the AI to build a “v2” of your page and see where it takes you. Preserve the v1 in case it doesn’t go where you want.

10. A Lot of Stuff That Looks Like It Works at First Glance … Won’t

Code that looks perfect has subtle issues that only surface under specific conditions. Lots of functions and buttons and workflows that look like they work … won’t. Your app will load with demo data in dashboards and algorithms that are “simulated”. To make them look like they work.

You’ll become frighteningly good at breaking your own app. You’ll need to be. It will be job #1. And you’ll need to get great at seeing anything that looks wrong. It was probably made up.

Summary

Vibe coding is magical but it isn’t magic. It’s a tool that changes how you build products — more iterative, faster feedback loops, different skill requirements. But it’s early.

Finally, a big topic I’ll do a deep dive on later is security. The more your app stores any sort of customer information, practically speaking, the more important this is. And the more risky using a vibe coding tool on its own is. This will get its own stand-alone post soon. For now, be cautious if your app stores any confidential or customer information and is public facing.

And another great summary here from Sr Director of AI products at Pendo:

PageRank in the Age of AI

Tomtunguz • July 22, 2025

Technology•AI•ContentDistribution•OnlineAdvertising•Publishers•Essays

The internet is on the brink of a significant transformation, aligning more closely with the dynamics of the online advertising industry. This shift doesn't necessarily mean an increase in advertisements; rather, it signifies a change in how content is distributed and valued. The technological framework for content dissemination is evolving to mirror the structures established in online advertising.

As we reach the AI search tipping point, publishers face an existential challenge: ensuring AI systems utilize their content in responses to maintain relevance. Traditionally, when you visit a website, your browser initiates an auction. The site's supply-side platform sends your data to an exchange—a marketplace for ads. Numerous advertisers bid for the opportunity to display their messages, with the highest bidder winning. This process occurs in under 200 milliseconds.

Now, envision a similar system applied to content. Instead of bidding to display ads, publishers compete to inform AI responses. The AI evaluates submissions based on quality metrics such as relevance, accuracy, freshness, and authority. This approach resembles PageRank for real-time AI responses—algorithmic evaluation operating in milliseconds rather than batch processing.

For instance, consider a scenario where you ask an AI system like Gemini, "What are the reviews of the new Google Pixel phone?" This query is broadcast to participating publishers, including tech reviewers, consumer sites, and electronics retailers. They submit their best content to the auction. Gemini then evaluates the quality, recency, and relevance of these submissions, synthesizing the winning content into your answer.
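To make the mechanics concrete, here is a minimal sketch of such a content auction. Everything in it is an assumption for illustration: the `Submission` fields mirror the quality metrics named above, but the weights, the 0-to-1 score scale, and the function names are hypothetical, and no real AI system is known to work this way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Submission:
    """One publisher's entry; each metric is a hypothetical score in [0, 1]."""

    publisher: str
    relevance: float
    accuracy: float
    freshness: float
    authority: float


# Illustrative weights only; the article does not specify any.
WEIGHTS = {"relevance": 0.4, "accuracy": 0.3, "freshness": 0.2, "authority": 0.1}


def score(submission: Submission) -> float:
    """Weighted sum of the quality metrics."""
    return sum(weight * getattr(submission, name) for name, weight in WEIGHTS.items())


def run_auction(submissions: list[Submission], winners: int = 2) -> list[Submission]:
    """Return the top-scoring submissions, best first."""
    return sorted(submissions, key=score, reverse=True)[:winners]
```

In this sketch publishers win on merit alone: there is no bid price, which is the key difference from the ad auction described earlier.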

In this model, the demand-side platform disappears. There's no longer a need for advertisers optimizing for clicks. Instead, publishers compete to be the most useful source of information. The internet experiences fewer ads, but every piece of content vies for attention in an auction measured in milliseconds.

Publishers benefit from increased traffic and attribution when their content is selected, leading to indirect revenue through brand building and subscriptions. However, this is contingent upon their content consistently winning on merit.

Venture Capital

Data is actually not a great VC-backed business

Auren • July 27, 2025

Business•Data•VentureCapital•PrivateEquity•Growth•Venture Capital

Five years ago, I advocated for the growth of pure-play data businesses, believing that the increasing data-centric approach of companies would drive demand for data-as-a-service (DaaS). However, this prediction did not materialize as expected. The number of hedge funds investing significantly in alternative data has decreased, with fewer than 100 funds currently making substantial investments. Similarly, industries like real estate and retail have shown minimal adoption of data purchasing. Even with the advent of AI, the market for data has not expanded as anticipated, primarily because extracting value from data remains challenging.

Data businesses, which sell raw data, are profitable and experience steady growth but are not ideal candidates for venture capital funding. Unlike software-as-a-service (SaaS) companies, which have seen numerous unicorns, DaaS companies like ZoomInfo have remained exceptions, often achieving profitability without venture capital. The majority of large DaaS companies are privately held, with private equity firms favoring them due to predictable revenue streams and potential for cost optimization. Therefore, data businesses are more suited for private equity investment rather than venture capital.

Venture capital thrives on rapid growth and substantial losses, fueling fast expansion and deep research and development. In contrast, data companies typically grow slowly and profitably, making them less compatible with the venture capital model. The success of DaaS companies like ZoomInfo, which achieved unicorn status without venture funding, highlights this discrepancy. Most data businesses should consider alternative funding options, such as private equity or debt financing, to support their growth.

Why Every International Founder Should Spend Three Months in the U.S.

Speedrun • July 22, 2025

Business•Startups•Entrepreneurship•InternationalFounders•Innovation•Venture Capital

A guest post by investor and writer Guillermo Flor highlights why every international founder should spend a few months in the U.S. Flor, an entrepreneur and investor from Spain, shares his personal journey from working as a lawyer to building startups and eventually moving to the U.S., which transformed his career. He emphasizes the unique advantages of spending time in American startup hubs like San Francisco and New York City.

In the U.S., there is a distinct level of openness to new ideas that is less common in Europe. Founders, investors, and executives tend to have a mindset of "Let’s talk, let’s build, let’s try something," which significantly increases the chances of building something great. This openness is a key trait of successful innovators.

Flor also points out the American bias toward action. During events like New York Tech Week, he found that people act quickly on opportunities, such as a CEO of a $2 billion company agreeing to a podcast recording within a day of a cold message—something that rarely happens in Europe.

Respect for builders at any stage is another critical difference. In the U.S., early-stage founders are taken seriously and can access big customers and meetings based on their ambition, not just past outcomes. This culture encourages speed and momentum in startups.

Thinking big and aiming to build billion-dollar companies is normalized in the U.S., especially in innovation hubs. This mindset contrasts with some European views, where such ambitions may be met with skepticism or dismissal. Being around visionary entrepreneurs pushing the boundaries inspires bigger dreams and bolder actions.

Finally, the talent density in places like San Francisco is unmatched. The concentration of ambitious, talented individuals working on solving global problems with technology creates an environment where anyone can level up just by being there. The high bar and fast tempo make it clear how much more is possible.

Flor concludes that international founders don’t necessarily need to move permanently to the U.S., but spending three months annually, especially early in their career, can open their minds, expand ambition, and accelerate their learning curve in ways that many other places currently cannot match.

A Path Forward for Seed VCs

Nextview • July 23, 2025

Business•VentureCapital•SeedInvesting•AI•Innovation•Venture Capital

In my previous post, I argued that seed investing is facing an existential crisis driven by four forces:

The maturation of the venture capital industry

The formidable forces of mega-funds and YC

Power law thinking becoming consensus

The AI platform shift which multiplies the first three forces

My Partner Stephanie Palmeri described it as “the series finale cliffhanger of posts” and lots of folks are eagerly anticipating part II.

Well, here it is. But think of this as episode 1 of the new season. I’ll share part of the “answer,” but most of it will remain in the mystery box.

And let’s be honest, it would make no sense to share my specific “answer” publicly. It’s up to each investor to read this and figure out what the right path is for themselves.

But here’s how I think about the way forward. Ultimately, it comes down to a few simple and somewhat obvious things:

The magnitude and progression of the AI supercycle

Defending and increasing share through sustaining innovations

Betting the farm on some form of disruptive innovation

Why Seed Rounds Are Growing as Startups Shrink

Tomtunguz • July 27, 2025

Business•Startups•SeedFunding•VentureCapital•StartupTrends•Venture Capital

Why is the sub-$5 million seed round shrinking?

A decade ago, these smaller rounds formed the backbone of startup financing, comprising over 70% of all seed deals. Today, PitchBook data reveals that figure has plummeted to less than half.

The numbers tell a stark story. Sub-$5M deals declined from 62.5% in 2015 to 37.5% in 2024. This 25 percentage point drop fundamentally reshaped how startups raise their first institutional capital.

Three forces drove this transformation. We can decompose the decline to understand what reduced the small seed round & why it matters for founders today.

VC fundraising dynamics represent the largest driver, accounting for 46% of the decline. US venture capital fundraising nearly doubled from $42.3B in 2015 to $81.2B in 2024. The correlation of -0.68 between sub-$5M deals & VC fundraising shows a powerful relationship: as funds grew larger, small rounds became scarcer.

Larger funds need larger checks to move the needle. A $500M fund can’t build a portfolio writing $1M checks. The math simply doesn’t work for their economics.

Inflation represents the smallest contributor at just 15% of the decline. What cost $5M in 2015 requires $6.7M today. This represents a meaningful increase but not the primary culprit.

Crucially, BLS data shows software engineering salaries grew at nearly the same rate as general inflation. This means the real cost of building startups remained relatively stable. Salary inflation isn’t driving founders to raise larger rounds.

The remaining 39% stems from other market forces. These likely include heightened competition for deals, increased pre-seed valuations pushing up seed round sizes, & founders’ growing capital appetites as they chase more ambitious visions from day one. The proliferation of seed funds & the emergence of multi-stage firms investing earlier also contribute to this shift.

Here’s the paradox: despite these larger rounds, startups are actually shrinking. Carta data shows SaaS companies are 20% smaller at Series A today than in H1 2020. Smaller teams are more than offsetting inflation costs through increased productivity.

This efficiency gain will accelerate with AI. As productivity tools enable founders to build more with less, we’ll see teams generate more ARR per employee while valuations continue to climb. The best founders are already achieving with five engineers what previously required twenty.

We’re witnessing a shift in startup financing. The small, disciplined seed round that launched thousands of companies in the past decade has been replaced by bigger rounds, higher valuations, compressed timelines, & loftier expansion expectations.

Why our access is improving

Signalrankupdate • Rob Hodgkinson • July 30, 2025

Business•Startups•Investment•SeedFunding•VentureCapital•Venture Capital

With our index-like strategy of systematically investing in premier Series Bs, the only question that matters is whether we can partner with seed managers who are backing the full distribution of breakout Series Bs.

This is particularly important in a power law environment where the vast majority of returns reside within a tiny subset of the companies. The ability to consistently support these companies at Series B is critical not just to our returns, but to helping seed investors fully capture the upside of their best picks.

The good news is that our access continues to improve. Figure 1 shows the top 10 ranked Series Bs per vintage per our models. The companies we invested in are in green, while companies we saw but did not invest in are in pink and companies we did not see are blank. We invested in two of the top 10 companies last year (Anrok & Bounce).

Figure 1. SignalRank’s access to top 10 ranked qualifying Series Bs in 2023 & 2024

Source: SignalRank

In 2025 to date, we have invested in two of the top 10 ranked Series Bs, including the #1 ranked company on our model for this year so far (Together AI, see Figure 2). We are seeing our access continue to improve (Figure 2). But we know we’re not seeing everything yet. That’s why expanding our partner network is a priority.

Figure 2. SignalRank’s access to top 10 ranked qualifying Series Bs in 2025 (Jan-Mar 2025)

Source: SignalRank

So why is our access improving?

It could be because we have been in market for longer, with higher brand awareness and stronger relationships with a larger network of seed managers. This would be the feel good answer.

Structural reasons are more likely:

Larger round sizes allow for more pro rata

AI companies with high execution velocity are able to attract ever more capital, and may need to raise more capital still to keep ahead of their competition. A $2bn pre-money valuation allows for a $100m Series B with just ~5% dilution. Larger rounds leave more space for existing investors to fill their pro rata.
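The dilution arithmetic can be checked with a short sketch. The function names and the 3% ownership figure in the usage note are hypothetical; the valuation and round size come from the example above.

```python
def dilution(pre_money: float, round_size: float) -> float:
    """Fraction of the company sold in the round: new money / post-money."""
    post_money = pre_money + round_size
    return round_size / post_money


def pro_rata_allocation(ownership: float, round_size: float) -> float:
    """Amount an existing holder must invest to keep `ownership` unchanged."""
    return ownership * round_size
```

With the figures quoted above, `dilution(2_000_000_000, 100_000_000)` is roughly 0.048, consistent with the ~5% in the text. A seed investor holding a hypothetical 3% would need `pro_rata_allocation(0.03, 100_000_000)`, or $3m, to maintain that stake, which illustrates why larger rounds create larger pro rata checks to fill.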

Opportunity funds are out of vogue

NextView’s Rob Go asked the question of whether we are seeing the end of seed investing as we know it, driven by the trifecta of Y Combinator attracting top talent (to the detriment of non-YC seed investors), mega-funds investing at seed (thereby squeezing out seed VCs) and AI being too capital intensive for seed investors.

He may be overstating the case somewhat, but it is clear that seed investors are fighting harder for every dollar being raised. A corollary is that the number of opportunity funds closing has ground to a halt. Seed investors are therefore left with the options of enduring dilution, trying to spin up an SPV with their LPs, or partnering with SignalRank (with full 20% deal by deal carry).

Pre-IPO SPV opportunities favored

Mid-stage SPVs (at Series B / Series C) have become harder to fill for managers seeking to take advantage of their pro rata directly with their LPs. Liquidity is the focus for these types of LPs, who prefer to pile into household pre-IPO names (SpaceX, Stripe, Anduril, etc) rather than back higher growth Series Bs with unknown liquidity timeframes.

We are now working with 200+ seed managers who are sharing 100+ post Series A / pre Series B opportunities with SignalRank per month. This is enabling us to see a high proportion of all qualifying Series Bs on our model.

By supporting our managers, we are not replacing seed investors’ own capital strategies, but offering a complementary product: our capital allows seed investors to preserve ownership in their best companies, with instant execution (without spinning up a separate SPV), and aligned interests (with our partners enjoying full 20% carry).

We’ve made a strong start. But to fully deliver on our mission, we know we must continue to earn the trust of the seed community. That’s how we’ll achieve the kind of consistent access required to execute fully on our strategy.

On 9 data-driven tips for YC startup founders

Medium • Jared Heyman • July 24, 2025

Business•Startups•YCFounders•StartupSuccess•DataDrivenTips•Venture Capital

Since Rebel Fund invests exclusively in seed-stage Y Combinator startups, the dozens of blog posts I’ve published over the years are focused mostly on how investors like us can improve their odds of investing in tomorrow’s YC unicorns. However, this post will take a different approach — I’ll share some data-driven tips that YC founders can follow to maximize their odds of success based on what we’ve learned over 5+ years building the world’s most sophisticated ML/AI algorithm for predicting YC startup success.

Tip #1 — Swing for the fences

Startup outcomes follow a very steep power law curve, such that ~6% of YC startups represent a whopping ~90% of the total valuation growth. The vast majority of startups enjoy no significant valuation growth at all, with a few big winners like Airbnb, Stripe, DoorDash, etc dwarfing even other decacorns ($10B+ valuations), which in turn dwarf even other unicorns ($1B+ valuations), which in turn dwarf the minicorns ($100M+ valuations).

Startup outcomes are relatively binary— either they’re a huge success or a failure. The implication is that founders should 1) pursue opportunities with massive upside potential rather than incremental gains, and 2) give it their all. The years after you start your first venture-backed startup will probably be the most consequential of your entire career.

Tip #2 — Be patient

The median time for a YC startup to achieve an exit (acquisition or IPO) is about 3 years, but larger exits ($100M-$999M) take closer to 6 years, and unicorn exits ($1B+) typically take nearly a decade. Building a successful technology startup is a marathon, not a sprint.

You may see headlines about tech companies achieving ridiculous valuations ridiculously fast, but the reason they make headlines is they’re the exception rather than the rule. In the vast majority of cases, building a unicorn is a slow, painful, and unglamorous decade-long commitment.

Of course there are many exciting milestones along the way, but you should think of them as islands in a sea of hard work, relentless focus, and near-death experiences. If you don’t enjoy the journey of “building something people want” with its long hours and even longer odds, then you’ll never make it to the destination.

Tip #3 — Start young

We found that the average YC unicorn founder had 8 years of work experience when they started their YC startup. Assuming they got their first job after graduating college at ~22 years of age, that means they were ~30 years old. Plenty of founders had more or less than this average, and a surprising number were only a few years into their career, though few had over 15 years of experience.

Building a startup takes a lot of time and energy, and risk, so it’s better to start early in your career. Clearly some real-world work experience helps, but the trick is to properly balance energy and wisdom. Anecdotally, we’ve noticed that the current generation of “AI first” startup founders are younger than the historical average, probably because advanced AI is so fresh that relevant industry experience isn’t really to be had.

Tip #4 — Get co-founders

One of the many things we learned training our Rebel Theorem 4.0 ML/AI algorithm for predicting YC startup success is that the number of co-founders in a startup is positively correlated with successful outcomes. It’s not true in the absolute (you can have too many cooks in the kitchen) but partnering with another co-founder or two is a smart idea.

We’re working on a set of new algorithm features now that dissect exactly what characteristics of “co-founder fit” predict startup success, so I’ll have more to say on that soon. One thing we know for sure though is co-founder breakups are the #1 cause of early-stage startup failure. So at a minimum, make sure you know your co-founders well and you’re aligned in terms of long-term vision and values.

Tip #5 — Go to a top (technical) university

Another thing we learned about YC unicorn founders is they often graduated from top-ranked universities with strong engineering programs (think Stanford or MIT).

There is a long tail of other universities represented amongst unicorn founders, so going to a top school really falls into the “helpful but not necessary” category, but having co-founders with strong technical and/or product backgrounds is vital based on our data.

…

Ultra-Unicorn Investors: These Firms Have Amassed The Largest Portfolios Of $5B+ Startups

Crunchbase • July 28, 2025

Business•Private Equity•Unicorns•Investment•Venture Capital

An analysis of Crunchbase data reveals that several firms have built substantial portfolios of ultra-unicorns—private companies valued at $5 billion or more. Among the most active investors in this category are Andreessen Horowitz, Sequoia Capital, Tiger Global Management, Lightspeed Venture Partners, and Accel. Notably, Tiger Global has invested in ultra-unicorns such as Databricks, Scale AI, and Shein. This indicates a significant involvement of private equity firms in funding these highly valued private companies.

In terms of portfolio counts, private equity investors dominate, with Tiger Global holding 19% of companies, Coatue 18%, and SoftBank Vision Fund, GIC, and Andreessen Horowitz also having significant shares. This dominance reflects the broader trend of private equity firms making later-stage investments across a wider pool of companies compared to venture capital firms, which typically invest earlier and continue to support their successful companies.

At the early investment stages, Andreessen Horowitz, Accel, and Sequoia Capital lead in Series A and B rounds, having invested in ultra-unicorns like Scale AI, Databricks, Stripe, and Klarna. Andreessen Horowitz has invested in 16 unique companies at these stages, while Accel and Sequoia each have 14. This trend is particularly prominent among U.S. firms, with Chinese investors such as IDG Capital, HSG (formerly Sequoia Capital China), and Tencent also being active in this space.

At the seed level, Y Combinator leads by a large margin, representing 10% of the seed investments in the $5 billion-plus club. The startup accelerator was an early investor in companies like Rippling, Scale AI, and Deel. Other notable seed investors include SV Angel, Initialized Capital, Soma Capital, and Homebrew.

In terms of funding amounts, private equity firms, particularly SoftBank and SoftBank Vision Fund, have led the largest rounds in this asset class. This list also includes major tech companies like Meta and Microsoft, which have backed companies such as Scale AI and OpenAI, respectively. Venture capital firms like Andreessen Horowitz and Sequoia Capital have also been involved in leading investments exceeding $8 billion.

As of mid-2025, six companies valued at or above $5 billion have exited, compared to nine in 2024. Notably, companies like Figma, valued at $12.5 billion, Navan at $9.2 billion, and Klarna at $6.7 billion, have filed confidentially with the SEC for potential IPOs. With $482 billion in investor capital placed into these 211 private high-value companies since the early 2000s, a few more listings in 2025 would certainly help alleviate the venture capital liquidity crunch.

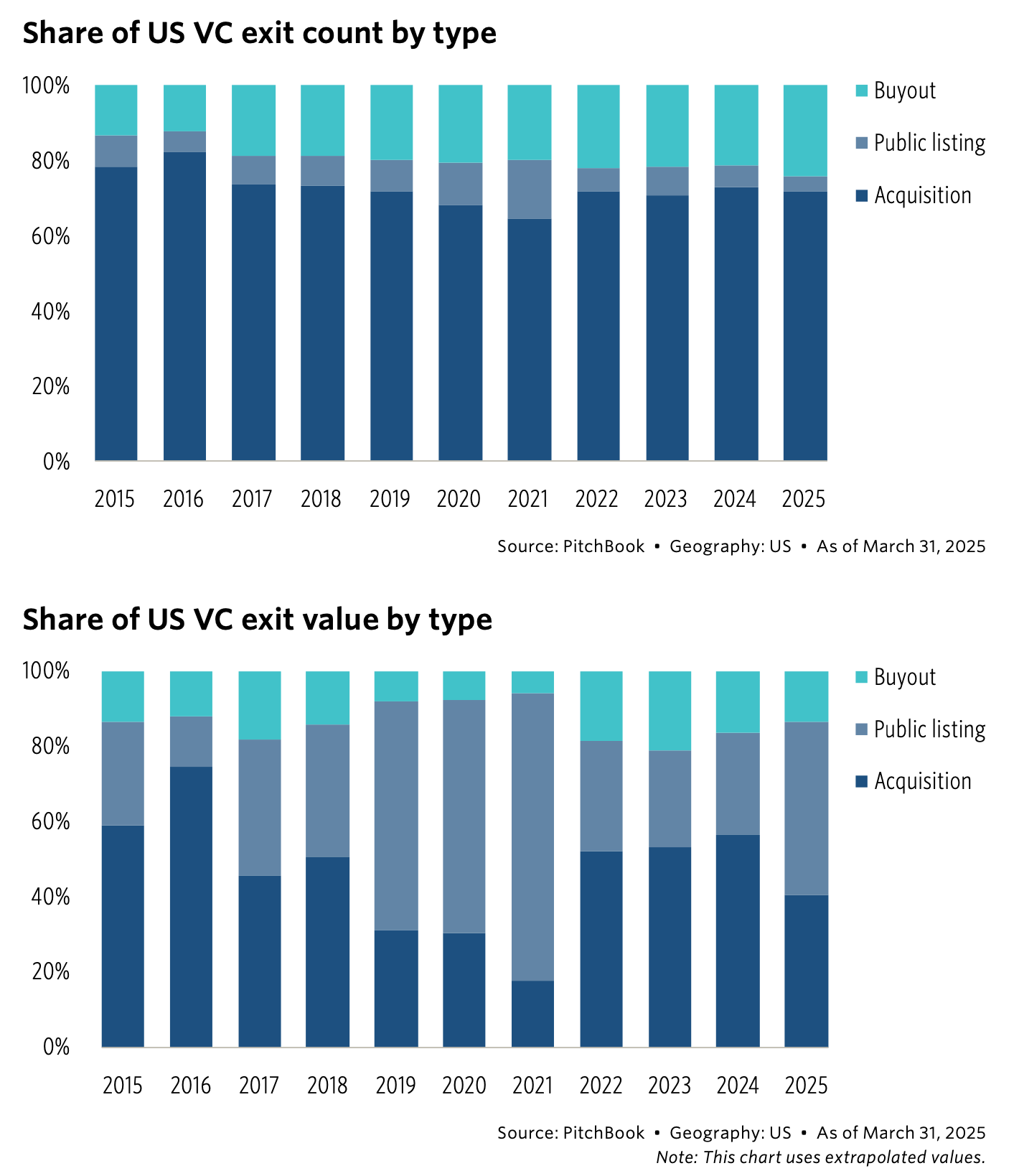

Landscape of VC-Backed M&A

Lp Club • Sarah • July 29, 2025

Business•MergersAndAcquisitions•VentureCapital•InvestmentTrends•Venture Capital

The venture capital (VC) landscape is undergoing significant transformations, making it essential for global Limited Partners (LPs) to comprehend the evolving dynamics of mergers and acquisitions (M&A). Understanding these shifts is crucial for informed investment decisions and strategic positioning in the market.

In recent years, there has been a notable increase in VC-backed companies engaging in M&A activities. In 2024, over a third of U.S. VC-backed startup acquisitions involved another VC-backed company as the buyer, marking a significant rise from previous years. This trend reflects a strategic move by VC-backed firms to consolidate resources, expand market reach, and enhance competitive positioning. (cadetlegal.ai)

The surge in VC-backed M&A is also influenced by the availability of substantial capital reserves, often referred to as "dry powder." This financial strength enables firms to pursue larger acquisitions and implement "buy and build" strategies, particularly in sectors like Software as a Service (SaaS), fintech, and generative AI. For instance, companies such as Databricks and Stripe have been active acquirers, leveraging their capital to fuel growth and innovation. (cadetlegal.ai)

Additionally, the global M&A market has experienced a resurgence, with a 13.2% increase in deal count and a 26.8% rise in deal value year-over-year by the third quarter of 2024. This recovery indicates a renewed confidence among investors and a more favorable environment for M&A activities. (lpclub.co)

However, this evolving landscape presents challenges. The increased competition for high-quality targets has led to elevated valuations, requiring firms to be strategic in their acquisition approaches. Moreover, integrating acquired companies effectively remains a complex task, necessitating careful planning and execution to realize the anticipated synergies.

In conclusion, the landscape of VC-backed M&A is marked by increased activity and strategic consolidation. For global LPs, staying informed about these trends is vital to navigate the complexities of the market and capitalize on emerging opportunities.

European Weakness

Wise shareholders back plan to move listing from the UK to the US

Ft • co-founder • July 28, 2025

Business•Strategy•Finance•StockListing•CorporateGovernance•European Weakness

Fintech company Wise has successfully overcome an investor rebellion sparked by its co-founder, who opposed the company's plan to extend its dual-class share structure. The resolution saw shareholders back the proposal to move the company's stock listing from the UK to the US. This strategic decision aligns with Wise's ambition to tap into a broader, more liquid investor base and enhance its market valuation potential, leveraging the deep capital markets and tech-focused investor ecosystem in America.

The governance shift involving the extension of the dual-class share structure proved contentious. The co-founder led the opposition, expressing concerns about the potential dilution of shareholder rights and the possible long-term implications for corporate governance. However, the majority of shareholders viewed the move as a necessary step to support Wise’s growth trajectory and access to capital. This support reflects confidence in the company’s management team and the strategic importance of listing on a US exchange, which tends to be more favorable to technology enterprises with dual-class shares.

Key points include:

Shareholders voted in favor of the plan to change the listing venue from the London Stock Exchange to a US exchange, marking a significant pivot in Wise's market strategy.

The extension of the dual-class share structure allows founders and early investors to retain disproportionate voting power relative to their economic stake, a common structure among tech companies in the US.

The co-founder’s public opposition highlighted tensions around governance practices and investor rights but did not sway enough investors to block the proposal.

The move is expected to improve share liquidity, attract more institutional tech investors, and potentially lead to a stronger valuation.

This decision is part of a broader trend where UK-based fintech and tech companies are increasingly pursuing US listings to capitalize on broader investor interest and more favorable market conditions.

The implications of the listing shift are multifaceted. For Wise, the US listing could provide a more vibrant secondary market, enabling easier trading and price discovery for its shares. It may also facilitate future capital raises to fund product innovation and international expansion. Conversely, this move can stir debate around governance standards, as the US dual-class share model often faces criticism for entrenching founder control at the expense of minority shareholders.

Overall, Wise's successful navigation past internal dissent and shareholder approval indicates strong market support for its strategic direction. The decision underlines the evolving dynamics of global stock markets where tech companies seek environments that best support their long-term growth ambitions, even if it means moving away from historic home markets. This case exemplifies how governance structures and listing venue choices are critical considerations for fintech firms aiming to maximize both operational flexibility and investor appeal.

AI

As Anthropic goes, so goes the generative AI trade, says Big Technology's Alex Kantrowitz

Youtube • CNBC Television • July 28, 2025

Technology•AI•GenerativeAI•Investment•Innovation

The discussion centers around Anthropic, a notable player in the generative AI space, and its current influence on the broader generative AI industry, as highlighted by Big Technology commentator Alex Kantrowitz. Anthropic's progress, strategic decisions, and market movements are seen as a bellwether for the entire generative AI trade, underscoring its pivotal role in driving investor sentiment and technological innovation in this domain.

Alex Kantrowitz emphasizes how Anthropic’s performance and developments often set the tone for investor confidence, impacting not only AI startups but also established tech giants heavily invested in AI. The video outlines that investors and market watchers closely track Anthropic’s milestones, product releases, and partnerships as key indicators of the generative AI trade's health and potential growth trajectory.

A critical insight from the discussion underscores the wider implications of Anthropic’s trajectory for the tech ecosystem. Success or setbacks at Anthropic can ripple across companies involved in AI research, investment funding, and commercial deployment. Kantrowitz notes that this influence is partly due to Anthropic’s status as a leader in creating safer and more controllable AI models—a crucial factor amid ongoing regulatory scrutiny and ethical debates around AI.

Furthermore, the conversation highlights the competitive landscape of generative AI, where Anthropic’s advancements contribute to shaping industry standards and technology benchmarks. The company’s innovative approaches to AI safety mechanisms are cited as setting a precedent other firms aim to match or exceed. This competitive posture enhances the dynamism of the generative AI field but also introduces pressures on AI firms to innovate responsibly.

Kantrowitz also discusses how Anthropic fits into the broader strategic interests of Big Tech companies that either collaborate or compete with it. The alignment or divergence between Anthropic’s strategic goals and those of major incumbents can significantly influence market positioning and partnership opportunities, further affecting the generative AI trade's overall outlook.

In summary, the video encapsulates Anthropic’s role as a touchstone for generative AI industry health. Its progress is a litmus test for investors, technologists, and policymakers watching the unfolding future of AI. With safety, competition, and market dynamics all intertwined, Anthropic's journey offers significant insights into the potential paths generative AI may take in the coming years.

a16z GP, Martin Casado: Anthropic vs OpenAI & Why Open Source is a National Security Risk with China

YouTube • 20VC with Harry Stebbings • July 28, 2025

Technology•AI•Open Source AI•Regulation•Geopolitics

In a recent discussion, Martin Casado, General Partner at Andreessen Horowitz (a16z), delved into several pressing topics within the artificial intelligence (AI) sector. He began by analyzing the current AI investment landscape, emphasizing the pivotal role of foundation models in shaping the future of AI applications. Casado highlighted the emergence of companies like Anthropic, which are developing advanced AI models, and discussed the potential implications for the AI application layer.

A significant portion of the conversation focused on the challenges and opportunities presented by open-source AI. Casado argued that open-source AI is essential for fostering innovation and competition, serving as a counterbalance to monopolistic tendencies in the tech industry. He expressed concern over regulatory efforts that might restrict open-source AI, suggesting that such measures could inadvertently stifle technological progress and grant undue advantages to large corporations. Casado drew parallels to historical instances where regulatory capture led to monopolies, underscoring the importance of regulations that promote competition and prevent monopolistic practices. (podcastworld.io)

The discussion also touched upon the geopolitical implications of AI development, particularly in relation to China. Casado highlighted China's strategic approach to AI, noting its dual-phase plan: first, leveraging AI for domestic population control through extensive surveillance, and second, exporting this technology globally to influence international norms and governance. He emphasized the need for the United States to lead in AI innovation, advocating for partnerships with private companies rather than imposing restrictive regulations. Casado stressed that embracing open-source AI could serve as a national security imperative, enabling the U.S. to maintain a competitive edge and uphold democratic values in the face of authoritarian models. (thirdway.org)

In conclusion, Casado's insights underscore the complex interplay between technological innovation, regulatory frameworks, and international dynamics in the AI sector. He advocates for policies that support open-source AI development, promote healthy competition, and position the U.S. as a leader in the global AI landscape.

Balaji Srinivasan: How AI Will Change Politics, War, and Money

YouTube • a16z • July 28, 2025

Technology•AI•Decentralization•Cryptocurrency•FutureOfWar

In a recent discussion, Balaji Srinivasan, a technologist and investor, delved into how artificial intelligence (AI) could transform politics, warfare, and finance. He emphasized that AI is not merely a technological advancement but a catalyst for significant political and economic shifts.

Srinivasan highlighted the centralization of power in current AI systems, which often reflect the biases and interests of their creators. He argued that decentralized AI could democratize technology, allowing individuals to build and control their own AI systems, thereby reducing the influence of centralized entities. This decentralization could lead to a more equitable distribution of power and resources.

In the realm of warfare, Srinivasan discussed the evolving nature of conflicts, noting that modern technologies like drones and cyber capabilities are reshaping military strategies. He pointed out that the Armenia-Azerbaijan conflict served as a glimpse into the future of warfare, where technology plays a pivotal role in determining outcomes. This shift suggests that future conflicts may be less about traditional military might and more about technological superiority.

Regarding finance, Srinivasan underscored the disruptive impact of cryptocurrencies, particularly Bitcoin. He described Bitcoin as a "political revolution," capable of challenging traditional financial systems and altering global economic dynamics. He argued that Bitcoin's decentralized nature empowers individuals, potentially reducing the influence of centralized financial institutions and altering the balance of power in the global economy.

Srinivasan also touched upon the concept of "network states," which are communities organized around shared values and goals, often facilitated by digital platforms. He suggested that these network states could emerge as new forms of political organization, offering alternatives to traditional nation-states. This idea reflects a broader trend towards digital and decentralized forms of governance.

In summary, Srinivasan's insights provide a comprehensive overview of how AI and related technologies are poised to reshape various facets of society, from governance and military engagement to financial systems and social structures.

AI that was inevitable

Jamesin • July 23, 2025

Technology•AI•MachineLearning•Productivity•Innovation

Google Sheets has introduced AI integration, allowing users to call prompts directly from cells. This feature streamlines workflows that previously required external tools and complex processes. For instance, in a Series-B analysis, instead of manually collecting data and using scripts to process it, users can now utilize the AI function within Sheets to categorize companies as AI-related or not and further classify them into specific categories.

This advancement reflects a broader trend in which tech giants like Google are embedding AI into widely used tools, enhancing efficiency and accessibility. While the rollout took longer than anticipated, likely due to thorough vetting processes, the integration of AI into Google Sheets opens up numerous possibilities for data analysis and decision-making.

The venture capital landscape is also evolving, with a noticeable shift towards vertical AI applications. Companies focusing on specialized AI solutions are gaining traction, as opposed to general horizontal workflow solutions. This trend reflects a growing recognition of the unique challenges and opportunities within specific industries, prompting investors to seek out startups that offer tailored AI solutions.

As AI continues to permeate various sectors, the importance of integrating it into everyday tools becomes increasingly evident. The seamless incorporation of AI into platforms like Google Sheets not only enhances productivity but also democratizes access to advanced data analysis capabilities, enabling a broader range of users to leverage AI in their workflows.

Iconiq set to lead $5bn funding round for AI start-up Anthropic

FT • July 29, 2025

Business•Investment•AI•VentureCapital

Iconiq Capital, an investment group affiliated with Mark Zuckerberg and Jack Dorsey, is exploring new avenues to generate value from its $5.75 billion fund amid a decline in IPOs. This downturn has prompted startups and investors to seek alternative strategies for returns, such as mergers, acquisitions, and trading startup stock on secondary markets. (ft.com)

In this context, Iconiq is reportedly set to lead a funding round of up to $5 billion for AI startup Anthropic. Anthropic, a competitor to OpenAI, is in early discussions to raise at least $3 billion and potentially as much as $5 billion, which could more than double its valuation to over $150 billion. The company has received interest from several large Middle Eastern investors, including Abu Dhabi-based AI fund MGX. Although Anthropic has been cautious about accepting funding from the region due to ethical concerns, prior secondary share purchases linked to MGX have occurred. (ft.com)

Founded in 2021 by former OpenAI executives Dario and Daniela Amodei, Anthropic has positioned itself as a leader in AI safety and ethical AI development. Its flagship model, Claude, directly competes with OpenAI’s ChatGPT, gaining recognition for its focus on transparency and controllability in generative AI. (techfundingnews.com)

The potential investment from Iconiq underscores the growing interest in AI startups and the strategic importance of securing substantial funding to compete in the rapidly evolving AI industry. As the AI sector continues to attract significant investment, companies like Anthropic are positioning themselves to leverage these funds to advance their technologies and expand their market presence.

The Evolution of AI Agents: Navigating the “Fog of AI” in Rapidly Changing Foundations | Stanislas Polu and Harrison Chase

Generalist • Mario Gabriele • July 29, 2025

Technology•AI•Agents•Autonomous Systems•Innovation

What’s next for AI agents, and how will they change the way we work? In this conversation, Stanislas Polu (CEO of Dust, formerly research at OpenAI) and Harrison Chase (CEO of LangChain, one of the most influential open-source AI frameworks) unpack the current state and future of AI agents. They reflect on their early conversations in the pre-ChatGPT days, how the landscape has evolved, and where it's headed next.

Stan and Harrison share lessons from building today’s agent infrastructure—from chat interfaces to the future of ambient, autonomous systems—and discuss the challenges of operating in the chaotic "fog of AI." We dig into the open questions, early insights, and messy realities of building in today’s fast-moving AI landscape.

In this conversation, we explore:

What sparked Stan and Harrison’s early interest in LLMs

The pre-ChatGPT era and how the AI landscape has evolved since late 2022

High-leverage use cases for agents inside Dust and LangChain today

The critical differences between AI workflows and true agents—and why agents may unlock more powerful, long-term solutions

Why reliability is the main blocker to ambient agents

Real-world enterprise use cases for AI agents across customer support, sales, and engineering

How to build in the “fog of AI” and the challenge of maintaining product vision when foundations shift every six months

Strategies for creating defensibility in a world where tech giants can quickly replicate features

The future of multi-agent systems and how they could transform enterprise productivity

The current state of the AI talent market

And much more

What will it take for robotaxis to go global?

FT • July 23, 2025

Technology•AI•Autonomous Vehicles•Urban Transportation•Innovation

Robotaxis are becoming a viable part of urban transportation, with companies like Waymo, Tesla, and Zoox progressing toward commercialization after years of investment and development. Waymo leads the pack with over 250,000 rides weekly across five U.S. cities, using expensive custom vehicles but aiming to cut costs through scalable partnerships and manufacturing. Zoox is building its own high-end robotaxis from scratch, while Tesla takes a cost-sensitive approach with its camera-only autonomous system deployed in Model Ys, aiming for consumer fleet-sharing.

Key challenges remain: robotaxis must prove safety in real-world environments, achieve profitability at scale, and overcome regulatory hurdles that vary by state. While Waymo and Zoox use Level 4 autonomy, Tesla’s system is only Level 2, prompting safety concerns and scrutiny from the NHTSA. Investors are cautiously optimistic, with firms like JPMorgan forecasting profitability only when vehicle costs fall below $100,000.

Companies are experimenting with business models—including partnerships with Uber and direct-to-consumer services—to manage high costs of fleet maintenance, charging, and staffing. Despite optimistic forecasts and supportive U.S. policies under President Trump, analysts say the market is likely to consolidate with one or two leading players per region. Ultimately, robotaxis could revolutionize transport if they overcome economic, technical, and societal barriers.

The AI SDR Reality Check: How To Actually Make It Work

SaaStr • Jason Lemkin • July 24, 2025

Technology•AI•SalesAutomation•B