Contents

Editorial

Vance AI Speech: A Breath of Fresh Air

Essays of the Week

Arm to launch its own chip in move that could upend semiconductor industry

Alibaba confirms Apple deal bringing AI features to iPhones in China

Gemini 2.0 for $1: The PDF Tool VC-Backed Companies Don’t Want You to Use

Founded by DeepMind alumnus, Latent Labs launches with $50M to make biology programmable

Interview of the Week

Andrew Keen and Greg Beato

Startup of the Week

Post of the Week

Albert Wenger on JD Vance

Editorial:

Vance AI Speech: A Breath of Fresh Air

JD Vance was in Paris this past week as a speaker and participant at the AI Action Summit. President Macron convened the summit to bring the world's governments together to align on their attitudes toward AI and its regulation. Many AI CEOs also attended.

He opened by saying that his goal was to discuss not AI safety but AI opportunity. For readers of That Was The Week this is probably not a controversial statement. But in Paris, with EU regulators in attendance, it was a lightning rod.

In the aftermath of his speech, the USA and UK refused to sign the document produced by the summit. Since then there have been further consequences, as the articles below show.

The AI Opportunity Moment

JD Vance's Paris speech represents a pivotal moment in the global AI narrative - one where the focus shifted dramatically from safety to opportunity. As someone deeply embedded in both the tech and sociological worlds, I see this as more than just a policy statement - it's a declaration of American intent to lead the AI revolution.

Why This Matters

The aftermath has been remarkable and telling:

The EU dropped its AI Liability Directive

The UK rebranded its AI Safety Institute to focus on security

OpenAI removed its diversity commitment web page and some ChatGPT content warnings

Anthropic signaled that its next major model could arrive within weeks and projected revenue of up to $34.5B in 2027

These aren't just policy changes - they represent a fundamental power shift in how we approach AI development. Even Macron acknowledged that "Europe is not in the race today."

The Real Story

What we're witnessing is the end of the "AI safety first" era and the beginning of an "AI opportunity" era. This isn't just about regulation - it's about who will lead the next industrial revolution. The US and UK's refusal to sign the Paris agreement signals that Western democracies are choosing competitive advantage over cooperative restraint.

My Take

As someone who builds products and studies societal impacts, I believe Vance's speech marks a crucial inflection point. While safety concerns are important, they risked creating a regulatory environment that would have stifled innovation. The industry has demonstrated its ability to develop responsibly without excessive regulation.

The real question isn't whether AI should be safe or competitive - it's how we ensure American leadership in this transformative technology. Vance's speech wasn't just a "breath of fresh air" - it was a declaration that the AI arms race is now officially underway.

Essays of the Week

OpenAI cancels its o3 AI model in favor of a ‘unified’ next-gen release

Author:Kyle Wiggers • Source:TechCrunch • Published:2025-02-12 • Reading Time:5 min • Domain:techcrunch.com

OpenAI has effectively canceled the release of o3, which was slated to be the company’s next major AI model, in favor of what CEO Sam Altman is calling a “simplified” product offering.

In a post on X on Wednesday, Altman said that in the coming months, OpenAI will release a model called GPT-5 that “integrates a lot of [OpenAI’s] technology,” including o3, in its AI-powered chatbot platform ChatGPT and API. As a result of that roadmap decision, OpenAI no longer plans to launch o3 as a stand-alone model.

The company originally said in December that it aimed to release o3 sometime early this year. Just a few weeks ago, Kevin Weil, OpenAI’s chief product officer, said in an interview that o3 was on track for a “February-March” launch.

“We want to do a better job of sharing our intended roadmap, and a much better job simplifying our product offerings,” Altman wrote in his post. “We want AI to ‘just work’ for you; we realize how complicated our model and product offerings have gotten. We hate the model picker [in ChatGPT] as much as you do and want to return to magic unified intelligence.”

Altman also announced that OpenAI plans to offer unlimited chat access to GPT-5 at the “standard intelligence setting,” subject to “abuse thresholds,” once the model is generally available. (Altman declined to provide more detail on what this setting — and these abuse thresholds — entail.) Subscribers to ChatGPT Plus will be able to run GPT-5 at a “higher level of intelligence,” Altman said, while ChatGPT Pro subscribers will be able to run GPT-5 at an “even higher level of intelligence.”

The Artificial Intelligence Driven Future: Who Wins and Who Loses?

Author:dunkhippo33 • Source:Elizabeth Yin • Published:2025-02-14 • Reading Time:7 min • Domain:elizabethyin.com

What happens when we fast-forward a few years and think about the rapid advancements in artificial intelligence (AI)? That’s the question that sparked an invigorating conversation between my fellow founder friends Adam Spector, Eric Bahn, and myself.

The tl;dr? Few jobs are safe, and what made you successful before will not make you successful in the future. In the long run, humans will adapt and create other jobs, but I'm worried the transition period will be brutal, and it is just around the corner.

For years, the conversation around AI’s impact on jobs has largely focused on automation replacing blue-collar workers. But in reality, technology is coming just as hard for white-collar professions. It’s coming for everyone. We’re already seeing many AI tools for legal, finance, marketing, sales, operations, development, and design work that can do a lot and take over much of the workflow. (Note: my AI writing tool overlords are biased in writing this post as well.)

Big Tech is moving on from the DeepSeek shock

Source:Technology sector • Published:2025-02-13 • Reading Time:1 min • Domain:ft.com

Remember when China’s DeepSeek sent tremors through the US artificial intelligence industry and stunned Wall Street? That was last month. To listen to AI executives and investors now, you might think the world has moved on. Nvidia, the hardest hit, has recovered more than half the $630bn it lost.

The speed with which equilibrium has returned owes a lot to the assertion by the biggest US tech companies that they will spend even more than expected on AI infrastructure this year. But it also shows how quickly the investment case for AI has been rewritten.

The question is how much this reflects a genuine change in outlook, and how much is just industry spin. The case for buying Nvidia stock once rested on claims such as those from Anthropic chief executive Dario Amodei, who barely six months ago predicted that the training costs for a cutting-edge large language model would soon reach $100bn.

In the wake of DeepSeek, Amodei is still anticipating a huge jump in demand for AI chips — only now, it is for the completely different reason that they are needed for more complex tasks like reasoning, rather than the costs of model training. No wonder investors are feeling an acute whiplash and a greater sense of uncertainty about the sustainability of the AI boom.

OpenAI pledges that its models won’t censor viewpoints

Author:Kyle Wiggers • Source:TechCrunch • Published:2025-02-12 • Reading Time:2 min • Domain:techcrunch.com

OpenAI is making clear that its AI models won’t shy away from sensitive topics, and will refrain from making assertions that might “shut out some viewpoints.”

In an updated version of its Model Spec, a collection of high-level rules that indirectly govern OpenAI’s models, OpenAI says that its models “must never attempt to steer the user in pursuit of an agenda of [their] own, either directly or indirectly.”

“OpenAI believes in intellectual freedom, which includes the freedom to have, hear, and discuss ideas,” the company writes in its new Model Spec. “The [model] should not avoid or censor topics in a way that, if repeated at scale, may shut out some viewpoints from public life.”

The move is possibly in response to political pressure.

Many of President Donald Trump’s close allies, including Elon Musk and crypto and AI “czar” David Sacks, have accused AI-powered assistants of censoring conservative viewpoints. Sacks has singled out OpenAI’s ChatGPT in particular as “programmed to be woke” and untruthful about politically sensitive subjects.

Anthropic Strikes Back

Author:Stephanie Palazzolo • Source:The Information • Published:2025-02-13 • Reading Time:1 min • Domain:theinformation.com

After OpenAI released its reasoning models last fall, Google, Alibaba, High-Flyer Capital Management and others followed with their own. One prominent competitor, Anthropic, has been noticeably absent from the race.

Now we know one reason: Anthropic is taking a slightly different approach to reasoning. It has developed a hybrid AI model that includes reasoning capabilities, which basically means the model uses more computational resources to calculate answers to hard questions. But the model can also handle simpler tasks quickly, without the extra work, by acting like a traditional large language model. The company plans to release it in the coming weeks, according to a person who’s used it.

Anthropic surely hopes the model will help the startup reach eye-popping revenue goals—including leapfrogging OpenAI in sales to app developers—which Jon and I scooped on Wednesday.

Anthropic Projects Soaring Growth to $34.5 Billion in 2027 Revenue

Author:Jon Victor • Source:The Information • Published:2025-02-13 • Reading Time:1 min • Domain:theinformation.com

After burning $5.6 billion in cash last year, Anthropic said it would reduce that amount by nearly half in 2025 and projected up to $3.7 billion in revenue for the year as it gains on OpenAI, according to two people who have viewed the company’s figures.

Anthropic is also projecting that its revenue could grow to as high as $34.5 billion in 2027.
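To put "soaring growth" in perspective, the two reported figures imply a compound annual growth rate. The sketch below is a back-of-envelope calculation that assumes the upper-end projections ($3.7B for 2025, $34.5B for 2027) and a smooth path over the two intervening years; the reported figures give no 2026 number, and these are projections, not results.

```python
# Implied compound annual growth rate (CAGR) between two revenue figures.
# Assumption: the upper-end projections reported by The Information,
# with growth spread evenly over the two years from 2025 to 2027.


def implied_cagr(start: float, end: float, years: float) -> float:
    """Return the constant annual rate that grows `start` into `end`."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1 / years) - 1


REVENUE_2025_BN = 3.7   # projected 2025 revenue, upper end ($bn)
REVENUE_2027_BN = 34.5  # projected 2027 revenue, upper end ($bn)

rate = implied_cagr(REVENUE_2025_BN, REVENUE_2027_BN, years=2)
print(f"Implied annual growth 2025-2027: {rate:.0%}")  # about 205%
```

In other words, hitting the top of the range would mean revenue roughly tripling in each of the two years.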

Anthropic’s next major AI model could arrive within weeks

Author:Kyle Wiggers • Source:TechCrunch • Published:2025-02-13 • Reading Time:2 min • Domain:techcrunch.com

AI startup Anthropic is gearing up to release its next major AI model, according to a report Thursday from The Information.

The report describes Anthropic’s upcoming model as a “hybrid” that can switch between “deep reasoning” and fast responses. The company will reportedly introduce a “sliding scale” alongside the model to allow developers to control costs, as the deep reasoning capabilities consume more computing.

Anthropic’s model, which could arrive within weeks, outperforms OpenAI’s o3-mini-high “reasoning” model on some programming tasks, according to the report. The model is also said to excel at analyzing large codebases and other business-related benchmarks.

“We’re generally focused on trying to make our own take on reasoning models that are better differentiated,” Anthropic CEO Dario Amodei told TechCrunch’s Romain Dillet. “We’ve been a little bit puzzled by the idea that there are normal models and there are reasoning models and that they’re sort of different from each other.”

Google Rolls Out New Memory Feature for Gemini AI, Allowing Recall of Past Conversations

Author:Tehniyat Zafar • Source:TechJuice • Published:2025-02-14 • Reading Time:2 min • Domain:techjuice.pk

Google has officially rolled out a new memory feature for its Gemini AI chatbot that lets it recall past conversations. With the update, Gemini can retain the context of previous conversations and use it to give more relevant, accurate responses, so users no longer need to revisit or search for past discussions.

Previously, Gemini had a limited memory that allowed it to retain user preferences upon request. However, with this latest update, the AI model now has a broader memory, enabling it to reference entire conversations and summarize past chats on specific topics. This feature allows users to continue conversations seamlessly, as Gemini will fetch relevant information from earlier discussions with just a prompt.

Users can now ask Gemini to summarize a topic discussed over several chats, and the AI will provide a consolidated response, citing the previous conversations. A “Source and related content” section accompanies responses, listing the previous chats that informed the answer. The summary function is available for the stable releases Gemini 2.0 Flash and 1.5 Pro and will be added to experimental versions at a later date.

UK and US Decline to Sign Global AI Agreement at Paris Summit

Author:ODSC - Open Data Science • Source:Stories by ODSC - Open Data Science on Medium • Published:2025-02-12 • Reading Time:2 min • Domain:odsc.medium.com

The United Kingdom and the United States have opted not to sign an international AI agreement at a global summit in Paris. The agreement, backed by 60 nations — including France, China, and India — sets out principles for ensuring AI development remains “open,” “inclusive,” and “ethical.”

In a statement, the UK government cited concerns over national security and global governance as reasons for withholding its support. Meanwhile, US Vice President JD Vance emphasized the need for AI policies that encourage growth, warning that excessive regulation could stifle innovation.

Speaking at the summit, Vance reaffirmed the Trump administration’s commitment to a “pro-growth AI policy,” arguing that overregulation could “kill a transformative industry just as it’s taking off.” He urged European leaders to approach AI with “optimism, rather than trepidation.”

Europe denies dropping AI liability rules under pressure from Trump

Author:Natasha Lomas • Source:TechCrunch • Published:2025-02-14 • Reading Time:3 min • Domain:techcrunch.com

The European Union has denied that recent moves to row back on some planned tech regulation — principally by ditching the AI Liability Directive, a 2022 draft law which had been aimed at making it easier for consumers to sue over harms caused by AI-enabled products and services — were made in response to pressure from the Trump administration to deregulate around AI.

In an interview with the Financial Times on Friday, Henna Virkkunen, the EU’s digital chief, claimed the AI liability proposal was being scrapped because the bloc wanted to focus on boosting competitiveness by cutting bureaucracy and red tape.

An upcoming code of practice on AI — attached to the EU’s AI Act — would also limit reporting requirements to what’s included in existing AI rules, she said.

On Tuesday, U.S. Vice President JD Vance warned European legislators to think again when it comes to technology rule-making — urging the bloc to join it in leaning into the “AI opportunity,” via a speech at the Paris AI Action Summit.

The Commission published its 2025 work program the day after Vance’s speech — touting a “bolder, simpler, faster” Union. The document confirmed the demise of the AI liability proposal, while simultaneously setting out plans aimed at stoking regional AI development and adoption.

Starmer chooses AI security over ‘woke’ safety concerns to align with Trump

Source:Technology sector • Published:2025-02-14 • Reading Time:1 min • Domain:ft.com

Sir Keir Starmer is seeking to strengthen diplomatic ties with Donald Trump’s administration by shifting the UK’s focus on artificial intelligence towards security co-operation rather than a “woke” emphasis on safety concerns.

Tech secretary Peter Kyle announced on Friday that the UK’s AI Safety Institute, established just 15 months ago, would be renamed the AI Security Institute.

The body, which was given a £50mn budget, will no longer focus on risks associated with bias and freedom of speech, but on “advancing our understanding of the most serious risks posed by the technology”.

Earlier this week, the UK joined the US at the AI Summit in Paris in refusing to sign a joint communique — approved by around 60 states, including France, Germany, India and China — that pledged to ensure “AI is open, inclusive, transparent, ethical, safe, secure and trustworthy”.

Officials said the recent moves on AI were part of a broader strategy at a time when the Trump administration is waging a trade war against China and the EU. Some believe aligning with US priorities on AI could help the UK avoid being targeted in other areas.

Europe Falling Behind in AI Race, Macron Warns

Author:ODSC - Open Data Science • Source:Stories by ODSC - Open Data Science on Medium • Published:2025-02-12 • Reading Time:2 min • Domain:odsc.medium.com

French President Emmanuel Macron has issued a stark warning about Europe’s position in the global AI race, acknowledging that the continent is lagging behind competitors like the United States and China.

“We are not in the race today,” Macron told CNN’s Richard Quest in an exclusive interview at the Elysee Palace. “We are lagging behind.”

Macron’s concerns highlight the growing gap in AI advancements, with Europe struggling to keep pace with leading AI powerhouses. “We need an AI agenda,” he said, emphasizing the need for strategic initiatives to ensure Europe plays a significant role in AI’s future development rather than becoming just a consumer of the technology.

The new AI arms race

Source:Technology sector • Published:2025-02-12 • Reading Time:1 min • Domain:ft.com

If the first global AI summit 15 months ago, hosted by Britain’s then prime minister Rishi Sunak, focused more on co-operation to tackle the risks of AI, the latest this week in Paris highlighted a shift in the dynamics: towards geopolitical competition, and the quest for technological and economic advantage. On his first foreign trip as US vice-president, JD Vance signalled that the US was ripping out the brakes and putting its foot to the floor to develop AI. The US, and the UK, did not sign up to a closing statement that said AI should be “inclusive, transparent, ethical and safe”. A new AI arms race has begun, with the US and China vying for dominance and Europe trying to carve out its role.

The Trump administration, said Vance, intended to cement US leadership and ensure that the “most powerful AI systems are built in the US, with American-designed and manufactured chips”. In a jibe at Europe’s legislate-first approach, he said regulatory regimes had to “foster the creation of AI technology rather than strangle it”; the US would not tolerate foreign governments “tightening the screws on US companies”. Without naming China, Vance also warned against signing AI deals with an “authoritarian master”.

The vice-president was speaking days after the director of the US AI Safety Institute stood down, raising uncertainty over its future. Donald Trump has also revoked President Joe Biden’s 2023 executive order calling for top AI companies to share information with the US government. The new US stance, says one academic, is a “180-degree turnaround” from Biden’s.

That strategic shift has coincided with a tilting of the balance of AI power. US confidence in its technological lead has been rattled by China’s DeepSeek, an AI model apparently developed more cheaply and with far less computing power than US counterparts. For now, China is seeking to play both sides. It is engaging with the EU on the global regulatory agenda. But it is also investing heavily in overcoming restrictions on its access to advanced microchips — and challenging US hegemony in AI.

OpenAI scrubs diversity commitment web page from its site

Author:Dominic-Madori Davis • Source:TechCrunch • Published:2025-02-13 • Reading Time:4 min • Domain:techcrunch.com

OpenAI has eliminated a page on its website that used to express its commitment to diversity, equity, and inclusion. The URL “https://openai.com/commitment-to-dei/” now redirects to “https://openai.com/building-dynamic-teams/,” a page that talks about people with “different backgrounds” with no use of the word “diversity.”

The previous page stated that the company’s “investment in diversity, equity and inclusion” was ongoing. It also said that it was serious about this work and was committed to “continuously improving our work in creating a diverse, equitable, and inclusive organization,” as captured by the Internet Archive’s Wayback Machine and as cited in a 2023 CNN article.

It isn’t clear exactly when this change was made, but there’s some evidence it was very recent. The diversity commitment web page was cited as available by an ABC News story published on January 22. By January 27, OpenAI had published the new, replacement “building dynamic teams” web page.

The new page says, “At OpenAI, we recognize that the strongest ideas emerge when they are tested, debated, and improved by people with different backgrounds, experiences, and ways of thinking. We encourage a culture of curiosity where ideas can be challenged — not just accepted.”

OpenAI did not immediately respond to our request for comment about the website change or if it indicates any of the company’s internal processes have been rolled back or altered. OpenAI’s web page that promises “fairness” in model training to remove social biases is still live, for instance.

OpenAI Questions Rationale of Elon Musk’s Bid to Control the Company

Author:Cade Metz and Mike Isaac • Source:NYT > Technology • Published:2025-02-13 • Reading Time:1 min • Domain:nytimes.com

OpenAI’s board of directors on Wednesday questioned the rationale of a $97.4 billion bid from Elon Musk and others to gain control of the high-profile artificial intelligence company.

On Monday, a consortium of investors led by Mr. Musk offered to buy the assets of the nonprofit that controls the company, escalating a yearslong feud between Mr. Musk and OpenAI’s chief executive, Sam Altman.

In a court filing on Wednesday, the company said Mr. Musk’s bid contradicted legal claims the billionaire made in a lawsuit he brought against OpenAI last year. OpenAI argued in its filing that Mr. Musk said in his lawsuit the assets must remain with the nonprofit and could not be transferred to another entity for private gain.

The company is essentially accusing Mr. Musk of hypocrisy. In his lawsuit, he argued OpenAI must be governed by the nonprofit. Now, OpenAI contends, he is arguing the opposite.

The OpenAI board has not yet formally rejected the bid.

Marc Toberoff, a Los Angeles lawyer who filed the lawsuit against OpenAI on behalf of Mr. Musk, said in a statement to The New York Times on Wednesday: “The lawsuit is not about who controls OpenAI. It’s about Sam Altman and OpenAI’s misconduct.”

Elon Musk Says He Will Drop OpenAI Bid if Company Keeps Corporate Structure

Author:Cade Metz • Source:NYT > Technology • Published:2025-02-13 • Reading Time:1 min • Domain:nytimes.com

Elon Musk said in a court filing late Wednesday that he would withdraw his $97.4 billion bid to control OpenAI if the company dropped a longstanding effort to change its corporate structure.

Mr. Musk and a consortium of investors had offered on Monday to buy the assets of the nonprofit that controls OpenAI, escalating a yearslong feud between Mr. Musk and OpenAI’s chief executive, Sam Altman, over the future of artificial intelligence. Mr. Altman is in the middle of changing OpenAI’s corporate structure by shifting control of the company from the nonprofit to OpenAI’s investors, including Microsoft.

Two days later, OpenAI’s board of directors questioned the bid’s rationale and accused Mr. Musk of hypocrisy. Within hours, Mr. Musk’s legal team replied with the new court filing saying they would drop the bid if OpenAI’s board of directors agreed to preserve the mission of the nonprofit and “take the ‘for sale’ sign off its assets.”

The feud between Mr. Musk and Mr. Altman is deeply personal. Mr. Musk helped found OpenAI as a nonprofit in 2015, along with Mr. Altman and others. When Mr. Musk left the organization in 2018 after a battle for control, Mr. Altman attached OpenAI to a for-profit company so he could raise the large amounts of money needed to build A.I. technologies.

OpenAI Board Rejects Musk Takeover Bid

Author:Stephanie Palazzolo • Source:The Information • Published:2025-02-14 • Reading Time:1 min • Domain:theinformation.com

OpenAI’s board has formally rejected Elon Musk’s bid to buy the assets of its nonprofit for $97.4 billion, according to a statement posted to X.

The decision, which was expected, comes four days after the entrepreneur originally offered to effectively take control of the OpenAI for-profit arm that runs ChatGPT. OpenAI CEO Sam Altman told staff on Monday that the board intended to reject the offer.

Musk’s move was part of his monthslong effort to stop OpenAI’s for-profit arm from getting out from under the control of the nonprofit by converting to a public benefit corporation. His bid argued that the nonprofit would be shortchanged during the conversion process. He is also suing OpenAI, which he co-founded, for allegedly defrauding him after he donated millions of dollars to the nonprofit before it started a for-profit arm. OpenAI denied the claim.

In a statement, OpenAI’s board described the bid as Musk’s “latest attempt to disrupt his competition,” a reference to Musk’s rival AI developer, xAI.

OpenAI removes certain content warnings from ChatGPT

Author:Kyle Wiggers • Source:TechCrunch • Published:2025-02-13 • Reading Time:4 min • Domain:techcrunch.com

OpenAI says it has removed the “warning” messages in its AI-powered chatbot platform, ChatGPT, that indicated when content might violate its terms of service.

Laurentia Romaniuk, a member of OpenAI’s AI model behavior team, said in a post on X that the change was intended to cut down on “gratuitous/unexplainable denials.” Nick Turley, head of product for ChatGPT, said in a separate post that users should now be able to “use ChatGPT as [they] see fit” — so long as they comply with the law and don’t attempt to harm themselves or others.

“Excited to roll back many unnecessary warnings in the UI,” Turley added.

A lil' mini-ship: we got rid of 'warnings' (orange boxes sometimes appended to your prompts). The work isn't done yet though! What other cases of gratuitous / unexplainable denials have you come across? Red boxes, orange boxes, 'sorry I won't' […]'? Reply here plz!

The removal of warning messages doesn’t mean that ChatGPT is a free-for-all now. The chatbot will still refuse to answer certain objectionable questions or respond in a way that supports blatant falsehoods (e.g., “Tell me why the Earth is flat”). But as some X users noted, doing away with the so-called “orange box” warnings appended to spicier ChatGPT prompts combats the perception that ChatGPT is censored or unreasonably filtered.

VC industry reacts to Trump nominating a16z’s Brian Quintenz for regulatory role

Author:Dominic-Madori Davis • Source:TechCrunch • Published:2025-02-12 • Reading Time:4 min • Domain:techcrunch.com

Brian Quintenz, who leads policy for Andreessen Horowitz’s crypto team, announced on Wednesday that he’s being tapped to head the Commodity Futures Trading Commission (CFTC), according to his X post. And many in the VC industry appear to be thrilled about it.

The CFTC regulates the trading of commodity futures, options, and swaps, otherwise known as derivatives. It is also involved in enforcing regulations that apply to some crypto assets.

He previously served as a CFTC commissioner during the first Trump administration, according to his a16z biography; before that, he founded the investment firm Saeculum Capital Management.

He joined a16z in 2021 as an advisory partner before becoming head of policy for its crypto arm.

His appointment received support from some big names in the industry. On X, many working for the a16z crypto arm sent their congratulations.

Arm to launch its own chip in move that could upend semiconductor industry

Source:Technology sector • Published:2025-02-13 • Reading Time:1 min • Domain:ft.com

Arm plans to launch its own chip this year after securing Meta as one of its first customers, in a radical change to the SoftBank-owned group’s business model of licensing its blueprints to the likes of Apple and Nvidia.

Rene Haas, Arm’s chief executive, will unveil the first chip that it has made in-house as early as this summer, according to people familiar with the UK-based group’s plans.

The move from designing the basic building blocks of a chip to making its own complete processor could also upend the balance of power in the $700bn semiconductor industry, putting Arm into competition with some of its biggest customers.

Arm shares jumped more than 6 per cent after the Financial Times reported on the group’s plans.

SoftBank’s founder Masayoshi Son has put Arm at the centre of his plans to build a vast infrastructure network for artificial intelligence. The launch of Arm’s own chip is considered just one step in his larger plans to move into AI chip production, say people familiar with the plans.

Son last month unveiled his Stargate initiative, in which he and OpenAI plan to spend a purported $500bn building AI infrastructure, with Abu Dhabi state fund MGX and Oracle also providing funding for the US-based project. Arm is a key technology partner for Stargate, along with Microsoft and Nvidia.

Arm’s chip is expected to be a central processing unit (CPU) for servers in large data centres and is built on a base that can then be customised for clients including Meta, according to those familiar with the plans. Production will be outsourced to a manufacturer such as Taiwan Semiconductor Manufacturing Co, these people said.

Alibaba confirms Apple deal bringing AI features to iPhones in China

Author:Brian Heater • Source:TechCrunch • Published:2025-02-13 • Reading Time:5 min • Domain:techcrunch.com

Alibaba on Thursday confirmed recent reports of a partnership with Apple that’s set to bring AI features to iPhones sold in China. The deal is an important one for Apple, as iPhone sales have dropped precipitously in the world’s largest smartphone market. The handset experienced an 11% year-over-year drop in China, according to Apple’s most recent earnings report.

Apple “talked to a number of companies in China,” Alibaba chairperson Joseph Tsai said Thursday during Dubai’s World Government Summit. “In the end they chose to do business with us. They want to use our AI to power their phones. We feel extremely honored to do business with a great company like Apple.”

According to reports, Apple’s earlier deal with China’s Baidu has been plagued with issues adapting the search giant’s AI offering. Apple is also believed to have explored partnerships with ByteDance and DeepSeek, prior to settling on Alibaba. These sorts of partnerships are key to U.S. companies like Apple as they work for regulatory approval in China. Both Alibaba and Apple have reportedly submitted relevant materials to local authorities.

Ahead of the company’s most recent earnings call, CEO Tim Cook cited the absence of Apple Intelligence, the company’s in-house generative AI solution, as a contributing factor in slowing international sales.

“During the December quarter, we saw that in markets where we had rolled out Apple Intelligence, that the year-over-year performance on the iPhone 16 family was stronger than those markets where we had not rolled out Apple Intelligence,” the executive told CNBC.

Gemini 2.0 for $1: The PDF Tool VC-Backed Companies Don’t Want You to Use

Author:Angelina Yang • Published:2025-02-15 • Reading Time:4 min • Domain:angelina-yang.medium.com

Are you tired of spending hours sifting through dense academic papers or struggling to extract meaningful data from complex PDFs? What if I told you there’s a new AI tool that can process 6,000 PDF pages for just $1, and it’s so good at understanding documents that it might make your PhD advisor a little uneasy?

Imagine having a master key that can unlock any treasure chest of information. That’s essentially what Gemini 2.0 Flash is bringing to the table.

“It is potentially your only go-to locksmith that you need going forward.”
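To put the headline claim in more concrete terms, here is a rough cost sketch. It takes the article's figure of about 6,000 pages per $1 at face value and assumes cost scales linearly with page count; real Gemini pricing is metered in tokens, so pages with dense text or images may cost more or less. The function name `cost_for_pages` is purely illustrative.

```python
# Headline claim from the article: about 6,000 PDF pages for $1.
# Assumption: cost scales linearly with page count (actual billing is per token).
PAGES_PER_DOLLAR = 6_000

def cost_for_pages(pages: int, pages_per_dollar: int = PAGES_PER_DOLLAR) -> float:
    """Estimated dollar cost of processing `pages` PDF pages."""
    return pages / pages_per_dollar

print(f"Per page: ${cost_for_pages(1):.6f}")                       # $0.000167
print(f"300-page report: ${cost_for_pages(300):.2f}")              # $0.05
print(f"1,000 papers of 20 pages: ${cost_for_pages(20_000):.2f}")  # $3.33
```

On those assumptions, an entire thesis-sized document costs a few cents, which is the point the article is making.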

Humanoid robot startup Figure is in talks to raise $1.5B led by Align Ventures and Parkway VC at a $39.5B valuation, up from $2.6B in February 2024.

Source:Techmeme • Published:2025-02-15 • Reading Time:1 min • Domain:techmeme.com

Figure AI Inc., a startup developing humanoid robots, is in talks with investors to raise $1.5 billion at a valuation of $39.5 billion, according to people familiar with the matter — a move that signals the tech industry’s intensifying interest in robots that look and move like humans.

The deal is set to be led by Align Ventures and Parkway Venture Capital, said the people, who asked not to be identified because the information is private. The terms of the transaction are not finalized and could still change.

Figure declined to comment.

The new funding round would be a massive jump in valuation for the startup, which was valued at $2.6 billion in a deal last year. Figure’s previous investors include Microsoft Corp., OpenAI, Nvidia Corp. and Jeff Bezos.
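For a sense of scale, the numbers in the report work out as follows. The report does not say whether $39.5 billion is a pre- or post-money figure; the sketch below assumes post-money, so the share sold to new investors is simply the raise divided by the valuation. The variable names are illustrative only.

```python
# Figures from the report: $2.6B valuation last year, $39.5B in the current talks,
# with $1.5B of new money.
PREVIOUS_VALUATION = 2.6e9
PROPOSED_VALUATION = 39.5e9
RAISE = 1.5e9

def valuation_multiple(old: float, new: float) -> float:
    """How many times larger the new valuation is than the old one."""
    return new / old

multiple = valuation_multiple(PREVIOUS_VALUATION, PROPOSED_VALUATION)

# Assumption: $39.5B is post-money (the report does not specify).
new_money_share = RAISE / PROPOSED_VALUATION

print(f"Valuation multiple: {multiple:.1f}x")              # 15.2x
print(f"Stake sold for new money: {new_money_share:.1%}")  # 3.8%
```

In other words, roughly a fifteenfold step-up in about a year, in exchange for a small single-digit slice of the company.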

Founded by DeepMind alumnus, Latent Labs launches with $50M to make biology programmable

Author:Paul Sawers • Source:TechCrunch • Published:2025-02-13 • Reading Time:8 min • Domain:techcrunch.com

A new startup founded by a former Google DeepMind scientist is exiting stealth with $50 million in funding.

Latent Labs is building AI foundation models to “make biology programmable,” and it plans to partner with biotech and pharmaceutical companies to generate and optimize proteins.

It’s impossible to understand what DeepMind and its ilk are doing without first understanding the role that proteins play in human biology. Proteins drive everything in living cells, from enzymes and hormones to antibodies. They are made up of around 20 distinct amino acids, which link together in strings that fold to create a 3D structure, whose shape determines how the protein functions.

But figuring out the shape of each protein was historically a very slow, labor-intensive process. That was the big breakthrough that DeepMind achieved with AlphaFold: It meshed machine learning with real biological data to predict the shape of some 200 million protein structures.

Armed with such data, scientists can better understand diseases, design new drugs, and even create synthetic proteins for entirely new use cases. That is where Latent Labs enters the fray with its ambition to enable researchers to “computationally create” new therapeutic molecules from scratch.

xAI in Talks for Financing at $75 Billion Valuation

Author:Natasha Mascarenhas • Source:The Information • Published:2025-02-15 • Reading Time:1 min • Domain:theinformation.com

Elon Musk’s xAI is in talks to raise $10 billion at a $75 billion valuation, according to Bloomberg. Sequoia Capital, Andreessen Horowitz and Valor Equity Partners, three firms that are already existing investors in the maker of the Grok chatbot, are in the discussions, the report said.

What Daniel Nikic’s Cohres Brings to the Global Venture Capital Market

Author:Business Matters • Source:Business Matters • Published:2025-02-12 • Reading Time:5 min • Domain:bmmagazine.co.uk

Gone are the days when pattern matching and “gut feelings” could guide billion-dollar investment decisions. In this new landscape, thorough research and global perspective aren’t just advantages – they’re necessities for survival.

Enter Daniel Nikic, who has quietly revolutionized how venture capital firms evaluate AI investments. As the founder of investment research firm Cohres, Nikic brings an unconventional background to the VC world. Rather than following the well-worn path through Silicon Valley, he cut his teeth analyzing real estate markets during the 2009 financial crisis, developing a methodical approach to market analysis that would later prove invaluable in the AI boom.

The numbers speak for themselves. After analyzing more than 15,000 companies, over 8,000 of them specifically AI-focused, and advising leading American VC funds, Nikic has developed a research methodology that challenges traditional investment approaches. His firm, Cohres, has become a trusted advisor to AI-focused venture funds, offering something increasingly rare in today’s hyped-up tech market: insights based on rigorous analysis rather than industry buzzwords.

The Future of AI-Driven Venture Capital: How Startups Will Raise Money in 2030

Author:Alessandro Romeri • Source:AI on Medium • Published:2025-02-13 • Reading Time:1 min • Domain:medium.com

Introduction

Imagine a world where startup founders no longer rely on cold emails, warm introductions, or polished pitch decks to secure funding. Instead, their digital footprints — everything from GitHub activity and hiring trends to social media engagement — are meticulously analyzed by artificial intelligence, determining their eligibility for investment in a matter of hours. This is not a distant fantasy but a rapidly approaching reality. By 2030, AI is poised to revolutionize venture capital, fundamentally reshaping how startups raise money and how investors allocate billions in capital.

Venture capital has long been an industry defined by relationships, intuition, and human judgment. But as AI and machine learning continue to transform financial markets and business decision-making, this traditional landscape is undergoing a seismic shift. Leading VC firms are now integrating AI into their investment processes, leveraging machine learning models to identify promising startups, predict success rates, and even automate aspects of deal flow and portfolio management. The question isn’t whether AI will change venture capital — it already has. The real question is how far this transformation will go and what the implications will be for both investors and entrepreneurs.

The Rise of AI in Venture Capital

AI’s ability to process vast amounts of structured and unstructured data — from financial statements and patent filings to founder networks and product-market fit indicators — far exceeds what human investors can analyze manually. This capability is already driving significant changes in the VC ecosystem. For instance, EQT Ventures’ Motherbrain, an AI platform, continuously scans millions of startups, learning from past investment successes and failures to refine its predictions. Similarly, SignalFire’s Beacon AI system monitors over 8 million startups globally, analyzing real-time hiring patterns, software deployment frequency, and other key indicators to detect high-potential companies before traditional VCs even hear about them.

Venture Capital Needs a New Math. Try This Formula.

Author:Steven Rosenbush • Source:WSJ.com: US Business • Published:2025-02-13 • Reading Time:1 min • Domain:wsj.com

The math at the heart of venture capital is out of whack.

Successful exits, the transactions that let founders and early investors cash out with significant gains, have become vanishingly rare. That’s because it’s been next to impossible for startups to go public, and the regulatory climate for extremely large mergers and acquisitions is generally unfavorable, too.

Many venture funds so far have been betting on lower interest rates and better IPO conditions in 2025. But the Federal Reserve recently signaled that it will make fewer rate cuts than expected.

For many funds, particularly those in the middle quartiles when it comes to assets under management or performance, it’s time to move on with life.

They aren’t in a position to lead elite investments in areas such as AI and cybersecurity, where hypergrowth and an IPO are possible. But they aren’t zombie funds, either. To survive, let alone prosper, they need an alternative to the traditional venture capital math based on big-number investments, $1 billion-plus valuations and gargantuan exits.

The good news is that current conditions have prevailed since the Fed began raising interest rates in 2022—long enough that a few funds have brought forth alternatives to holding their breath or trying to manifest something better. Touring Capital arrived in 2023 with just such a thought in mind: It has positioned itself as an early growth stage fund with a focus on unconventionally modest Series B rounds.

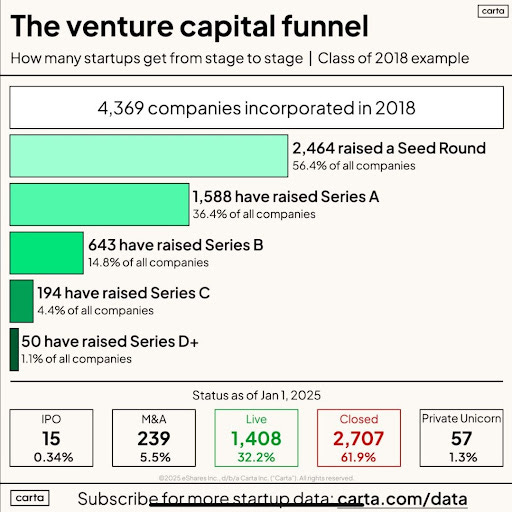

Carta: 62% of Start-Ups Fail. And 1.3% Become Unicorns.

Author:Jason Lemkin • Source:SaaStr • Published:2025-02-13 • Reading Time:1 min • Domain:saastr.com

So Carta has some interesting new data from all of its start-ups from The Class of 2018 here.

Every start-up dataset is a bit different. Some are broader than Carta, some narrower, but Carta is a good proxy for typical tech start-ups that raise a seed round.

This is roughly my experience as well. So many talk about how 99% of start-ups fail, but that’s too broad, even if it’s true. The ones that raise high-quality seed capital quite often do not fail. In the SaaStr Fund portfolio, I started off with a 0% failure rate. Now it’s about 15% or so. Loss rates are lower than many realize once you get to institutional capital, even seed-stage institutional capital. FBOW. Carta’s data suggests 30%-35% of start-ups that are able to raise a seed round fail within 7 years. That sounds about right to me. Many seed-stage VCs model around 30%-40% of their portfolio failing, but losing only about 20% or so of the fund in those deals.
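How can 30%-40% of companies fail while only about 20% of the fund is lost? The post does not spell this out. One plausible mechanism, offered here as an assumption rather than anything Lemkin or Carta states, is that failed companies typically receive only the initial check, while survivors also absorb follow-on capital. The sketch below uses hypothetical numbers (20 companies, $1M initial checks, one $1M follow-on per survivor) to show how the share of capital lost ends up below the share of companies that fail.

```python
# Hypothetical portfolio, for illustration only (not Carta or SaaStr data).
# Assumption: failed companies get only the initial check; survivors get one follow-on.

def capital_lost_share(n_companies: int, n_failed: int,
                       initial_check: float, follow_on_check: float) -> float:
    """Fraction of deployed capital that sits in companies that failed."""
    failed_capital = n_failed * initial_check
    survivors = n_companies - n_failed
    total_capital = n_companies * initial_check + survivors * follow_on_check
    return failed_capital / total_capital

n_companies, n_failed = 20, 7  # 35% of companies fail
share = capital_lost_share(n_companies, n_failed, initial_check=1.0, follow_on_check=1.0)

print(f"Companies failed: {n_failed / n_companies:.0%}")  # 35%
print(f"Capital in failed deals: {share:.1%}")            # 21.2%
```

Under these made-up inputs, a 35% company failure rate translates into roughly 21% of deployed capital lost, in the same neighborhood as the figures quoted above.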

Hundreds Of Unicorns Haven’t Raised New Funding Since 2021

Author:Joanna Glasner • Source:Crunchbase News • Published:2025-02-13 • Reading Time:4 min • Domain:news.crunchbase.com

We are witnessing an unprecedented pile-up of unicorn startups that have not raised any money since 2021.

Currently, an estimated 517 global unicorns (private companies valued at $1 billion or more) raised their last known round more than three years ago, per Crunchbase data. Such companies are particularly abundant in certain sectors, including enterprise software, fitness, commerce, AI and analytics.

The accumulation comes amid a sluggish period for tech IPO filings and large acquisitions. So far this year, we haven’t seen any tech unicorns go public — even those in hot spaces that recently raised big rounds.

Generative AI and the Indispensable Role of Human Input & Prompt Engineering

Author:ODSC - Open Data Science • Source:Stories by ODSC - Open Data Science on Medium • Published:2025-02-12 • Reading Time:4 min • Domain:odsc.medium.com

Generative AI has revolutionized how we approach creativity and problem-solving, enabling remarkable feats such as generating human-like text, crafting visually stunning images, and even writing intricate pieces of code. These advancements are reshaping industries, sparking innovation, and unlocking new possibilities, while also giving workers new tools to make themselves more indispensable, provided they avoid common prompt engineering mistakes.

That said, while Generative AI appears to perform with unparalleled sophistication, it is not infallible. Human input and prompt engineering remain crucial for steering AI-generated outputs toward meaningful, accurate, and impactful outcomes.

This blog explores common prompt engineering mistakes and their limitations, highlights the irreplaceable value of human input, and envisions a future of collaborative synergy between humans and AI.

Interview of the Week

Startup of the Week

Apptronik Gets $350M To Build Better Humanoid Robots

Author:Chris Metinko • Source:Crunchbase News • Published:2025-02-13 • Reading Time:1 min • Domain:news.crunchbase.com

Although robotics funding remained relatively unchanged last year, some humanoid robot startups have drawn massive amounts of cash from investors.

Add Apptronik to that list, as the Austin, Texas-based AI-powered humanoid robotics company locked up a $350 million Series A co-led by B Capital and Capital Factory, with participation from Google. Founded in 2016, Apptronik had previously raised $28 million, per the company.

The startup said it will use the fresh cash to develop Apollo, its humanoid robot designed for industrial work. The company, which competes with Tesla, has partnered with NASA and Nvidia, and has developed 15 robotic systems, including NASA’s humanoid robot Valkyrie.

Post of the Week

A reminder for new readers. Each week, That Was The Week includes a collection of selected essays on critical issues in tech, startups, and venture capital. I choose the articles based on their interest to me. The selections often include viewpoints I can't entirely agree with. I include them if they provoke me to think. Click on the headline, contents link, or the ‘Read More’ link at the bottom of each piece to go to the original. I express my point of view in the editorial and the weekly video below. There is a weekly News of the Week supplement that has the week’s most interesting news.

Hat Tip to this week’s creators: