A reminder for new readers. That Was The Week collects my selected reading on critical tech, startups, and venture capital issues. I selected the articles because they are of interest to me. The selections often include things I entirely disagree with. But they express common opinions, or they provoke me to think. The articles are snippets, sometimes long, to convey why they are of interest. Click on the headline to go to the original or the ‘More’ link at the bottom of each piece. I express my point of view in the editorial and the weekly video below.

Congratulations To This Week’s Selections: @vkhosla, @sullydish, @natesilver, @noahopinion, @benthompson, @stratechery, @kwharrison13, @tedgioia, @eric_seufert, @EricNewcomer, @RayDalio, @satariano, @danshipper, @kyle_l_wiggers, @imillhiser, @KateClarkTweets, @jglasner, @clothildegouj, @davidcohen, @alex, @bheater, @amyrlewin

Contents

Apple abandons EV Plans

Should Apple Acquire Rivian?

Hopin

Editorial

It’s been a week since Google closed down Gemini’s image-generating capability after it began to depict famous historical figures as “diverse”.

Andrew Sullivan writes about Google as a victim of woke culture, and Nate Silver about Gemini as a demonstration that Google has abandoned “Don’t Be Evil”. Noah Smith’s excellent ‘This is Not a Good Way to Fight Racism’ is both a recollection and an appraisal of the events. And Ben Thompson’s Stratechery article about Google’s culture complements them all.

Anti-racism has been core to my being since I was quite young. I still remember Ricky Cardozo, the only black kid in my school, defending me when other kids were making fun of me for standing in line to receive a free school lunch. He became a hero in an instant. Until that moment, he and I had never spoken, and we did not speak much afterward either, because the school was deeply racist.

I went on to study Sociology and Politics and the history of empire, colonialism, slavery, immigration, and nationality. I wanted to understand why people from Africa, the Indian sub-continent, and the Caribbean were seen as separate and often singled out for abuse.

As a postgraduate, I led an organization called W.A.R. (Workers Against Racism). We had a few thousand volunteers who made themselves available to local victims of racial attacks. That meant fighting on behalf of people who died in police custody, victims of Britain’s immigration and nationality laws, and victims of assault by white racists.

Towards the end of that phase of my life, I published a Penguin Special called “Under Siege: Racial Violence in Britain Today.”

It told many of the victims’ stories, like that of Nasreen Saddique, who, aged 14, protected her Pakistani parents from fascists.

At the same time, Under Siege explained how public and official racism was reproduced at street level.

It is not unlike the US today, where the conversation about the border demonizes Latino and Latina immigrants as criminals, rapists, and the like. That discussion at the highest levels has consequences on the street.

I tell all of this to contextualize what I think about modern responses to racism. The Google Gemini debacle is not about Google or Gemini alone. It is the result of many well-intentioned attempts to fight racism by rewriting history and artificially elevating the victims to a status they do not have in reality. I call it superficial anti-racism. Portrayal does not cure fundamental systemic disadvantages. And the effort to, let's be honest, pretend to fight racism through identity affirmation does nothing to stop newborn black and brown kids from being disproportionately born into low-income families, sent to lower-performing schools, and generally facing a mountain to climb just to have hope.

At the root of racism are centuries of baked-in disadvantage, founded in colonialism, slavery, and more recent reinforcements. Being young and black still demands determination just to reach equality and have any chance of escaping those foundations of disadvantage.

Changing words in books, Diversity, Equity, and Inclusion (DEI) programs, and changing images or stories are tokens of resistance, not resistance or change itself. Indeed, taken to their logical endpoint, these superficial responses reinforce the actual causes of racism by creating an illusion of challenging it. They are also damaging because those who are not victims of racism see these steps as working against them. This sets up a black and brown versus white narrative.

So Google did what it seems most people are doing today: it instructed its AI to always show diversity and to diminish whiteness in favor of brownness or blackness.

We all understand it wasn’t malicious. But it was wrong. And damaging, as are most of the “anti-racist” methods in 2024.

The foundation of inclusion is full, equal opportunity. At the base of that challenge is economics; once opportunity is genuinely equal, attitudes will change because they will have become outdated. The Civil Rights movement corrected obvious inequities, and it took millions of people to fight for that. Economic equality requires investment in raising up communities from birth to death. Meanwhile, we must fight the consequences by defending the victims of racism. Until then, young black people will continue to resist the lack of hope in their lives individually, in their own ways, and will continue to be overrepresented in US prisons.

Google’s attempt at diversity did nothing to help in that context and made things worse. Tech cannot become a place where society’s problems are resolved by pretending they do not exist.

Essays of the Week

How AI Will Change Our Relationship With Computers

We’ve always adapted to software. Now, AI is enabling software to adapt to humans instead.

By Vinod Khosla

Feb 22, 2024, 9:00am PST

There’s a lot of buzz about “AI hardware” and “gadgets.” I want to discard these terms. They’re misleading. What you call gadgets or hardware, I see as facilitators of a new era in which low-latency voice becomes the primary way users interact with smart AI: These devices are support infrastructure. This isn’t just about devices; it’s about a fundamental shift in human-computer interaction.

Artificial intelligence will spur two fundamental changes in our relationship with technology. The first is that voice—already the most natural interface for human interaction—will become a dominant interface. Imagine latency reduced to less than half a second, a stark contrast to the sluggishness of touch-based devices. Even silent voice is on the table—vocalizing commands without sound, an especially useful option in a public setting like a cafe. Silent voice is mouthing the words without allowing sound to come out of your mouth. Technologies to detect such “silent speech” will allow one to privately dictate in public places without anyone else being able to listen.

The Takeaway

• More computing will be controlled by voice

• AI will spawn a new class of one-button devices where screens are secondary

• Software will adapt to the humans using it

The second revolution is in how apps will adapt to us. No longer will we need to learn to navigate through apps like Uber or complex systems like those of SAP or Oracle. Thus far, we’ve always adapted to software—learning its intricacies, remembering layered menus and so forth to communicate with machines. “Training” to use complex apps is commonplace. Now, AI is enabling software to adapt to humans instead.

This will lead to new types of hardware, designed primarily for voice interaction, with the computer learning the human language and the human. Yes, there might still be a screen for certain visual tasks, but the core interaction will shift to voice—be it silent or audible.

Designer Jony Ive and OpenAI’s Sam Altman are reported to have discussed an AI hardware project. Humane, started many years ago, prior to the ChatGPT moment, guessed at this interface—but the early buzz fizzled.

These entrepreneurs were right, but their implementation was not sufficiently focused on the new user experience enabled by AI. A small startup called Rabbit made waves with its minimal device, leading Microsoft CEO Satya Nadella to call its R1 launch “one of the most impressive presentations I have seen, capturing the vision of what is possible, since Steve Jobs introduced the iPhone in 2007.” (Khosla Ventures is an investor in Rabbit.)

Something new is afoot. Rabbit is an early attempt to redefine human-computer interaction in a world of powerful AI. These devices will center around the idea that we should talk to computers (or our agents that can run apps for us) through voice in our natural language, not through artificial constructs.

Consider my experience as an example. When hiking, I use an app called Picture This to identify plants I encounter, but the process is cumbersome: Stop walking, pause the audiobook, open the app, take a picture, wait for the answer to load, close the app, put the phone back in my pocket. With this new technology, I could simply point the device at the plant and ask, “What is this plant?” I would get an answer, and then I could say “play audiobook” and resume listening to my book with no interruption or hassle.

Costwise, this voice-driven approach is a game changer. It's significantly more affordable than a traditional smartphone. Form factor innovation is also likely, even though phones with bigger screens won’t go away. Rabbit’s large action model, based on neurosymbolic approaches, learns to use software apps and work across apps so the human doesn’t need to learn about other apps or websites. The Rabbit agents will talk to humans and use these apps for them.

Voice is simpler and more efficient than typing or touching a screen. High-resolution graphics and touch interfaces demand expensive, complex computing. The very large 500 billion–parameter large language models powering ChatGPT and other chatbots try to learn everything that’s ever been published. By contrast, Rabbit’s LAM behaves more like humans learning how to use apps and other LLMs, so humans don’t need to learn them or master prompt engineering.

LAMs learn to use software the way humans do, rather than communicating with an app through an application programming interface as traditional software does. Imagine someone peeking over your shoulder as you swipe on your phone and learning those patterns. That’s a LAM—or whatever it might be called in the future. It’s a complete inversion of the traditional paradigm and means that ultimately we won’t have to interact with the software because the AI will do so on our behalf.

Phones, as they are now, are designed to distract us. If I had to pull one out on a hike, I’d see my emails, my texts and other notifications; I’d get pulled onto some social platform, get hit with ads, maybe even go down a rabbit hole, no pun intended. Phones constantly vie for our attention, pulling us into a vortex of notifications and messages. These new designs, like Rabbit, are designed to save time and minimize distractions. You tell it what to do, and it does just that, nothing more.

Startups can be so agile and innovative! The future of technology isn’t about incremental changes or an extrapolation of the past. This isn’t about making another iPhone 16 or 17—though those will continue to be very valuable. It’s about a fundamental shift in how we interact with our devices, one that is AI centric, requiring a new design and new priorities that the hardware supports. Startups are best poised for such a flip. The incumbents will surely but slowly follow.

Google's Brave New Woke-AF World

When DEI merges with AI, the post-truth dystopia will become impossible to escape.

ANDREW SULLIVAN, MAR 1, 2024

“The nature of psychological compulsion is such that those who act under constraint remain under the impression that they are acting on their own initiative. The victim of mind-manipulation does not know that he is a victim. To him, the walls of his prison are invisible, and he believes himself to be free,” - Aldous Huxley, Brave New World Revisited.

It’s not as if James Damore didn’t warn us.

Remember Damore? He was the doe-eyed Silicon Valley nerd who dared to offer a critique of DEI at Google back in the summer of 2017. When a diversity program solicited feedback over the question of why 50 percent of Google’s engineers were not women, as social justice would surely mandate, he wrote a modest memo. He accepted that sexism had a part to play, and should be countered. But then:

I’m simply stating that the distribution of preferences and abilities of men and women differ in part due to biological causes and that these differences may explain why we don’t see equal representation of women in tech and leadership. Many of these differences are small and there’s significant overlap between men and women, so you can’t say anything about an individual given these population level distributions.

This is empirically inarguable, replicated across countless studies about the different choices and preferences of men and women, that are partly — partly — a function of biology. When I wrote about this at the time, I linked to several of the studies — all of which passed New York Magazine’s uber-woke fact checkers. For this stumbling upon the truth, Damore was summarily fired by Google CEO, Sundar Pichai, who justified it thus: “It’s important for the women at Google, and all the people at Google, that we want to make an inclusive environment.”

The truth — and the freedom to say it — took second place to feelings of “inclusion”.

In an eerie parallel, what happened to Damore is exactly what happened to Larry Summers at Harvard a decade before. Summers was dispatched for telling the truth about the distribution of mathematical ability at the very tail ends of the sex bell curve. So instead of Summers and the truth at Harvard, we got Claudine Gay and plagiarized lies. And instead of reason and freedom of speech at Google, we got the post-modern cult of DEI, where identity trumps everything. The firing of Damore was a signal that “equity” — race and sex discrimination — would now meet no guardrails.

You can see the shift at Google if you go back and look at their original goals. In 2004, in their Founders Letter just before the IPO, Larry Page and Sergey Brin described what they hoped to achieve: “Our search results are the best we know how to produce. They are unbiased and objective.” And that’s how Google succeeded.

Not anymore. Compare the Page/Brin vision to Google’s “Objectives for AI applications” two decades later, long after the Damore Rubicon. As Nate Silver notes, Google offers seven formal principles that guide Gemini AI, and the objective truth is not among them. The overriding goal is to be “socially beneficial” — which may, of course, require demoting or disappearing data, ideas or facts, if they might be deemed by some as socially non-beneficial. And the words of Page and Brin — “unbiased and objective” — have been replaced by a mandate to “avoid creating or reinforcing unfair bias,” which is subtly different. It’s about ensuring that Google does not amplify existing bias in society, meaning sexism, racism, etc. Here’s Nate:

Google has no explicit mandate for its models to be honest or unbiased. (Yes, unbiasedness is hard to define, but so is being socially beneficial.) There is one reference to “accuracy” under “be socially beneficial”, but it is relatively subordinated, conditioned upon “continuing to respect cultural, social and legal norms”.

Google abandoned "don't be evil" — and Gemini is the result

AI labs need to treat accuracy, honesty and unbiasedness as core values.

NATE SILVER, FEB 27, 2024

I’ve long intended to write more about AI here at Silver Bulletin. It’s a major topic in my forthcoming book, and I’ve devoted a lot of bandwidth over the past few years to speaking with experts and generally educating myself on the terms of the debate over AI alignment and AI risk. I’d dare to say I’ve even developed some opinions of my own about these things. Nevertheless, AI is a deep, complex topic, and it’s easy to have an understanding that’s rich in some ways and patchy in others. Therefore, I’m going to pick my battles — and I was planning to ease into AI topics slowly with a fun post about how ChatGPT was and wasn’t helpful for writing my book.

But then this month, Google rolled out a series of new AI models that it calls Gemini. It’s increasingly apparent that Gemini is among the more disastrous product rollouts in the history of Silicon Valley and maybe even the recent history of corporate America, at least coming from a company of Google’s prestige. Wall Street is starting to notice, with Google (Alphabet) stock down 4.5 percent on Monday amid analyst warnings about Gemini’s effect on Google’s reputation.

Gemini grabbed my attention because the overlap between politics, media and AI is a place on the Venn Diagram where I think I can add a lot of value. Despite Google’s protestations to the contrary, the reasons for Gemini’s shortcomings are mostly political, not technological. Also, many of the debates about Gemini are familiar territory, because they parallel decades-old debates in journalism. Should journalists strive to promote the common good or instead just reveal the world for what it is? Where is the line between information and advocacy? Is it even possible or desirable to be unbiased — and if so, how does one go about accomplishing that? How should consumers navigate a world rife with misinformation — when sometimes the misinformation is published by the most authoritative sources? How are the answers affected by the increasing consolidation of the industry toward a few big winners — and by increasing political polarization in the US and other industrialized democracies?

All of these questions can and should also be asked of generative AI models like Gemini and ChatGPT. In fact, they may be even more pressing in the AI space. In journalism, at least, no one institution purports to have a monopoly on the truth. Yes, some news outlets come closer to making this claim than others (see e.g. “all the news that’s fit to print”). But savvy readers recognize that publications of all shapes and sizes — from The New York Times to Better Homes & Gardens to Silver Bulletin — have editorial viewpoints and exercise a lot of discretion for what subjects they cover and how they cover them. Journalism is still a relatively pluralistic institution; in the United States, no one news outlet has more than about 10 percent “mind share”.

By contrast, in its 2004 IPO filing, Google said that its “mission is to organize the world’s information and make it universally accessible and useful”. That’s quite an ambitious undertaking, obviously. It wants to be the authoritative source, not just one of many. And that shows up in the numbers: Google has a near-monopoly with around 90 percent of global search traffic. AI models, because they require so much computing power, are also likely to be extremely top-heavy, with at most a few big players dominating the space.

In its early years, Google recognized its market-leading position by striving for neutrality, however challenging that might be to achieve in practice. In its IPO, Google frequently emphasized terms like “unbiased”, “objective” and “accurate”, and these were core parts of its “Don’t Be Evil” motto (emphasis mine):

DON’T BE EVIL

Don’t be evil. We believe strongly that in the long term, we will be better served—as shareholders and in all other ways—by a company that does good things for the world even if we forgo some short term gains. This is an important aspect of our culture and is broadly shared within the company.

Google users trust our systems to help them with important decisions: medical, financial and many others. Our search results are the best we know how to produce. They are unbiased and objective, and we do not accept payment for them or for inclusion or more frequent updating. We also display advertising, which we work hard to make relevant, and we label it clearly. This is similar to a newspaper, where the advertisements are clear and the articles are not influenced by the advertisers’ payments. We believe it is important for everyone to have access to the best information and research, not only to the information people pay for you to see.

But times have changed. In Google’s 2023 Annual Report, the terms “unbiased”, “objective” and “accurate” did not appear even once. Nor did the “Don’t Be Evil” motto — it has largely been retired. Google is no longer promising these things — and as Gemini demonstrates, it’s no longer delivering them.

This is not a good way to fight racism in America

Building a multiracial society is hard work. Don't be tempted by shortcuts.

NOAH SMITH, FEB 24, 2024

You might think the image above is a joke, but it’s the actual output of Google’s new AI application, Gemini. A few days ago, a bunch of people realized that Gemini — which was released on February 8 — wouldn’t draw pictures of White people, no matter what the context. Much ridiculousness ensued. People asked the app to draw the original American revolutionaries, 17th-century French scientists, Vikings, the Pope, and so on; the resulting images almost never included White people, except occasionally as part of a much larger ensemble. The ultimate facepalm-worthy moment was when Gemini decided that Nazi soldiers were Black and Asian:

But to me, the funniest was when someone asked the app to draw the founders of Google:

Some people wondered how the app’s creators had managed to train it never to draw White people, but it turned out that they had done something much simpler. They were just automatically adding text to every image prompt, specifying that people in the image should be “diverse” — which the AI interpreted as meaning “nonwhite”.

But that wasn’t the only weird thing that was going on with Gemini with regards to race. It was also trained to refuse explicit requests to draw White people, on the grounds that such images would perpetuate “harmful stereotypes” (despite demonstrably not having a problem depicting stereotypes of Native Americans and Asians). And it refused to draw a painting in the style of Norman Rockwell, on the grounds that Rockwell’s paintings presented too idealized a picture of 1940s America, and could thus “perpetuate harmful stereotypes”.

Embarrassed by the national media attention, Google employees hastily banned Gemini from drawing any pictures of people at all.

The main thing that everyone seemed to agree on was that this episode showcased the decline of Google’s prestige as a company. In two decades, the internet giant’s reputation has gone from that of a scrappy upstart, hiring the smartest nerds and shipping product after game-changing product at blinding speed, to that of a sleepy behemoth, quietly milking the profits of its gargantuan search ad monopoly and employing a vast army of highly paid entitled lifers who go home at 3 in the afternoon and view it as their corporate duty not to ship anything that works.

Obviously, that’s a huge generalization, and it’s only pockets of Google that are actually like that. But big companies with stable sources of monopoly profit do tend to become fairly predictably sclerotic — Intel being just one more recent example. The question of how to turn companies around once they go down this path is an important unsolved problem.

Gemini also provides an interesting example of Gary Becker’s theory of discrimination. Becker believed that when companies have a big profit cushion — whether from a natural monopoly, government support, or whatever — they have the latitude to indulge the personal biases of their managers. In the 1970s, that largely meant discriminating against Black and female employees. At Google in the 2020s, it means creating AI apps that refuse to draw White people in Hitler’s army. The theory predicts that only the ruthless pressure of market competition will force companies to stop discriminating. There’s actually some empirical evidence to support this. But Google’s search ad monopoly is probably so powerful that it can afford to goof around in the AI space without suffering real consequences — at least in the short term.

But beyond what it says about Google itself, the saga of Gemini also demonstrates some things about how educated professional Americans are trying to fight racism in the 2020s. I think what it shows is that there’s a hunger for quick shortcuts that ultimately turn out not to be effective.

The challenge of creating a multiracial society

Nations require norms and public goods in order to function well. We have to agree not to beat each other up, steal each other’s things, etc. We have to be OK paying taxes for a road or a school or an army that might benefit our neighbor more than it benefits us. This requires a certain psychological outlook — a lot of us have to believe, whether tacitly or explicitly, that most of our neighbors are part of our in-group.

Gemini and Google’s Culture

Posted on Monday, February 26, 2024

Ben Thompson, Stratechery

Last Wednesday, when the questions about Gemini’s political viewpoint were still limited to its image creation capabilities, I accused the company of being timid:

Stepping back, I don’t, as a rule, want to wade into politics, and definitely not into culture war issues. At some point, though, you just have to state plainly that this is ridiculous. Google specifically, and tech companies broadly, have long been sensitive to accusations of bias; that has extended to image generation, and I can understand the sentiment in terms of depicting theoretical scenarios. At the same time, many of these images are about actual history; I’m reminded of George Orwell in 1984:

Every record has been destroyed or falsified, every book has been rewritten, every picture has been repainted, every statue and street and building has been renamed, every date has been altered. And that process is continuing day by day and minute by minute. History has stopped. Nothing exists except an endless present in which the Party is always right. I know, of course, that the past is falsified, but it would never be possible for me to prove it, even when I did the falsification myself. After the thing is done, no evidence ever remains. The only evidence is inside my own mind, and I don’t know with any certainty that any other human being shares my memories.

Even if you don’t want to go so far as to invoke the political implications of Orwell’s book, the most generous interpretation of Google’s over-aggressive RLHF of their models is that they are scared of being criticized. That, though, is just as bad: Google is blatantly sacrificing its mission to “organize the world’s information and make it universally accessible and useful” by creating entirely new realities because it’s scared of some bad press. Moreover, there are implications for business: Google has the models and the infrastructure, but winning in AI given their business model challenges will require boldness; this shameful willingness to change the world’s information in an attempt to avoid criticism reeks — in the best case scenario! — of abject timidity.

If timidity were the motivation, then it’s safe to say that the company’s approach with Gemini has completely backfired; while Google turned off Gemini’s image generation capabilities, its text generation is just as absurd:

That is just one example of many: Gemini won’t help promote meat, write a brief about fossil fuels, or even help sell a goldfish. It says that effective accelerationism is a violent ideology, that libertarians are morally equivalent to Stalin, and insists that it’s hard to say what caused more harm: repealing net neutrality or Hitler.

Some of these examples, particularly the Hitler comparisons (or Mao vs George Washington), are obviously absurd and downright offensive; others are merely controversial. They do, though, all seem to have a consistent viewpoint: Nate Silver, in another tweet, labeled it “the politics of the median member of the San Francisco Board of Supervisors.”

Needless to say, overtly expressing those opinions is not timid, which raises another question from Silver:

In fact, I think there is a precedent for Gemini; like many comparison points for modern-day Google, it comes from Microsoft.

Inside the Crisis at Google

Culture war narratives give Google's organizational coherence a bit too much credit. There's more to the story.

ALEX KANTROWITZ, MAR 1, 2024

It’s not like artificial intelligence caught Sundar Pichai off guard. I remember sitting in the audience in January 2018 when the Google CEO said it was as profound as electricity and fire. His proclamation stunned the San Francisco audience that day, so bullish it still seems a bit absurd, and it underscores how bizarre it is that his AI strategy now appears unmoored.

The latest AI crisis at Google — where its Gemini image and text generation tool produced insane responses, including portraying Nazis as people of color — is now spiraling into the worst moment of Pichai’s tenure. Morale at Google is plummeting, with one employee telling me it’s the worst he’s ever seen. And more people are calling for Pichai’s ouster than ever before. Even the relatively restrained Ben Thompson of Stratechery demanded his removal on Monday.

Yet so much — too much — coverage of Google’s Gemini incident views it through the culture war lens. For many, Google either caved to wokeness or bowed to those who’d prefer not to address AI bias. These interpretations are wanting, and frankly incomplete explanations for why the crisis escalated to this point. The culture war narrative gives too much credit to Google for being a well-organized, politics-driven machine. And the magnitude of the issue runs even deeper than Gemini’s skewed responses.

There’s now little doubt that Google steered its users’ Gemini prompts by adding words that pushed the outputs toward diverse responses — forgetting when not to ask for diversity, like with the Nazis — but the way those added words got there is the real story. Even employees on Google’s Trust and Safety team are puzzled by where exactly the words came from, a product of Google scrambling to set up a Gemini unit without clear ownership of critical capabilities. And a reflection of the lack of accountability within some parts of Google.

"Organizationally at this place, it's impossible to navigate and understand who's in rooms and who owns things,” one member of Google’s Trust and Safety team told me. “Maybe that's by design so that nobody can ever get in trouble for failure.”

Organizational dysfunction is still common within Google, something it’s worked to fix through recent layoffs, and it showed up in the formation of its Gemini team. Moving fast while chasing OpenAI and Microsoft, Google gave its Product, Trust and Safety, and Responsible AI teams input into the training and release of Gemini. And their coordination clearly wasn’t good enough. In his letter to Google employees addressing the Gemini debacle this week, Pichai singled out “structural changes” as a remedy to prevent a repeat, acknowledging the failure.

Those structural changes may turn into a significant rework of how the organization operates. “The problem is big enough that replacing a single leader or merging just two teams probably won’t cut it,” the Google Trust and Safety employee said.

Already, Google is rushing to fix some of the deficiencies that contributed to the mess. On Friday, a ‘reset’ day at Google, and through the weekend (when Google employees almost never work), the company’s Trust and Safety leadership called for volunteers to test Gemini’s outputs to prevent further blunders. “We need multiple volunteers on stand-by per time block so we can activate rapid adversarial testing on high priority topics,” one executive wrote in an internal email.

Hopin and ‘The Greater Fool’

In my writing, I've frequently reflected on the excess and irresponsibility of venture capital.

But few case studies better sum up my grievances with various aspects of the venture capital industrial complex than does the story of Hopin.

The first thing I came back to is how unsurprising this feels. Just about everyone working in startups has had some version of this experience: "this doesn't make sense, does it?"

Crypto mania. Billion-dollar valuations for pre-revenue companies. Investors branding themselves as "micro mobility investors." Even much of the current hype in AI. All of it leaves you scratching your head, trying to understand how that can make sense.

In the case of Hopin, you see a lot of instances of people being surprised. Here's one example:

"Nobody expected virtual events to go away as fast as they did.” (Former Employee)

But like... why not? Did anyone really, genuinely believe that life would forever be trapped lockdown-style?

A common refrain in the world of startups is that 90% of them fail. It's just the cost of doing business. In other words? You have to crack a few eggs to make an omelette.

And I agree with that. But there is the natural rate of failure, and then there is what most people call "unforced errors." Things that you look back on and say "it didn't have to be this way."

Too much capital can cause a myriad of problems. For one, too much capital is typically driven by too many investors. And in the case of Hopin, that meant nobody was doing their homework:

“‘After the first few rounds, when it got crazy and VC FOMO kicked in, it began to feel like “dumb money” — even though it was coming from highly credible funds. They didn’t do due diligence and they didn’t inject governance provisions,’ one early investor [said]. There were also so many VCs keen to invest in Hopin that none ended up with an influential stake.”

What you get is not an ecosystem of thoughtful investors attempting to make wise capital allocation decisions, but the closest humanity may have come to "a thousand monkeys in a room with typewriters," except instead of typewriters they have checkbooks.

When you step back pragmatically, and consider Hopin's prospects, you can do some simple math. When Hopin raised at $7.7B, the company had supposedly gone from $20M ARR in November 2020 to $100M ARR in August 2021. Investors in that round were maybe underwriting to a 3x return given the high valuation, so you're trying to dream the dream of how this company becomes a $20B+ company.

Cvent, a comparable company in the events space, went public in December 2021 with $518M of revenue at a market cap of ~$4.6B, ~8.8x revenue. At a comparable multiple, Hopin would have had to generate $2.3B+ in revenue; 23x growth. Assuming a 5-year hold period for those later-stage investors? That would have to be one of the most incredible growth trajectories ever. And to believe that was possible, not for just any exceptional company, but for a company that seemed uniquely tied to a very specific COVID trend? Idk.
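The back-of-envelope math above can be reproduced in a few lines. This sketch uses only the figures quoted in the text (the $7.7B round, a 3x target, the "$20B+" bar, Cvent's ~$4.6B market cap on $518M of revenue, $100M ARR, and a 5-year hold); the function names are illustrative. It also derives the annual growth rate that the required jump implies, which the text does not state. Note that 4.6 / 0.518 works out to roughly 8.9x, slightly above the ~8.8x quoted, which barely moves the answer.

```python
# Back-of-envelope check of the Hopin valuation math, using figures quoted
# in the text. Function names are illustrative, not from any library.


def implied_revenue(target_value: float, revenue_multiple: float) -> float:
    """Revenue needed to justify `target_value` at a given revenue multiple."""
    return target_value / revenue_multiple


def required_cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate needed to grow from `start` to `end`."""
    return (end / start) ** (1 / years) - 1


round_valuation = 7.7e9             # Hopin's $7.7B round
target_value = 3 * round_valuation  # a 3x return on that round
cvent_multiple = 4.6e9 / 518e6      # Cvent: ~$4.6B market cap on $518M revenue
current_arr = 100e6                 # ~$100M ARR as of August 2021
hold_years = 5                      # the text's assumed hold period

needed_revenue = implied_revenue(20e9, cvent_multiple)  # the "$20B+ company" bar
growth_multiple = needed_revenue / current_arr
cagr = required_cagr(current_arr, needed_revenue, hold_years)

print(f"3x on the round:  ${target_value / 1e9:.1f}B")
print(f"Cvent multiple:   {cvent_multiple:.1f}x revenue")
print(f"Needed revenue:   ${needed_revenue / 1e9:.2f}B ({growth_multiple:.0f}x current ARR)")
print(f"Implied growth:   {cagr:.0%} per year for {hold_years} years")
```

Put another way, the later-stage thesis required revenue to compound at a bit over 85% a year for five straight years.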

What this leads to is a very bad reputation for investors. Most recently, a reputation that is branding some investors as "predators."

How can investors believe a company that went from $20M to $100M ARR in 8 months could eventually get to $2.3B in revenue? They probably didn't! They just had to believe that someone ELSE could believe that something like that was possible. This is literally an academic representation of "passing the bag."

One of the least-addressed consequences of a 13-year bull market is the institutionalized belief in "the greater fool." People can make money, not because a business is sound, but because there is always someone else down the line who will buy them out. And that was true for 10+ years!

We need to be better, both as venture capitalists, and as founders. We don't need a more fervent belief in a greater fool. We need a stronger commitment to building businesses of which we would NEVER want to sell a share.

People want to idolize Warren Buffett's success, but never his practices. And what are his two most notable practices? (1) Get rich slowly, and (2) never sell a share of Berkshire Hathaway. I recognize the need for liquidity, both for founders trying to live their lives, and VCs trying to generate returns for LPs. So none of this is a blanket statement of good or bad.

But it is a statement against excess. Too many people want to get rich quickly, and to do it by selling to the greater fool. And that is a problem.

13 Observations on Ritual

And other responses to my 'dopamine culture' article

TED GIOIA, FEB 24, 2024

My article on ‘dopamine culture’ has stirred up interest and (even more) raised concerns among readers who recognize the symptoms I described.

One of the illustrations went viral in a big way. And I’ve gotten requests from all over the world for permission to translate and share the material. (Yes, you can all quote generously from the article, and reprint my charts with attribution.)

But many have asked for more specific guidance.

What can we do in a culture dominated by huge corporations that want us to spend hours every day swiping and scrolling?

I find it revealing and disturbing that readers who work on the front lines (in education, therapy, or tech itself) expressed the highest degree of alarm. They know better than anybody where we’re heading, and want to find an escape path.

Here’s a typical comment from teacher Adam Whybray:

I see it massively as a teacher. Kids desperately pleading for toilet breaks, claiming their human rights are being infringed, so they can check TikTok, treating lessons as though they're in a Youtube reaction video, needing to react with a meme or a take—saying that silence in lessons scares them or freaks them out.

One notable difference from when I was at school was that I remember a lesson in which we got to watch a film was a relief or even pleasurable (depending on the film). My students today often say they are unable to watch films because they can't focus. I had one boy getting quite emotional, begging to be allowed to look at his phone instead.

Another teacher asked whether the proper response is to unplug regularly. Others have already embraced digital detox techniques of various sorts (see here and here).

I hope to write more about this in the future.

In particular, I want to focus on the many positive ways people create a healthy, integrated life that minimizes scrolling and swiping and mindless digital distractions. Many of you have found joy and solace—and an escape from app dependence—in artmaking or nature walks or other real world activities. There are countless ways of being-in-the-world with contentment and mindfulness.

Today I want to discuss just one bedrock of real world life that is often neglected—or frequently even mocked: Ritual.

I know how much I rely on my daily rituals as a way of creating wholeness and balance. I spend every morning in an elaborate ritual involving breakfast, reading books (physical copies, not on a screen), listening to music, and enjoying home life.

Even my morning coffee preparation is ritualistic. (However, I’m not as extreme as this person—who rivals the Japanese tea ceremony in attention to detail.)

I try to avoid plugging into the digital world until after noon.

I look forward to this daily time away from screens. But my personal rituals are just one tiny example. There are many larger ways that rituals provide an antidote to the more toxic aspects of tech-dominated society.

Below I share 13 observations on ritual.

Lina Khan Has Been a Terrible FTC Chair

Eric Seufert, in a thread on Threads:

Last week, the House Judiciary Committee published a blistering report on the FTC's effectiveness at enforcing antitrust law under Chair Lina Khan. The report accuses Khan's office of, among other things:

- consolidating power in a way that sidelined other Commissioners;
- pursuing cases through tenuous legal theories that were mostly designed to garner headlines and not deliver successful enforcement;
- micromanaging career staff and mismanaging the resources of the agency;
- and diminishing staff morale to a degree that led to a heightened degree of staff departures, further constraining the agency's ability to productively pursue cases.

The motivation behind the investigation may be partisan: the House Judiciary Committee is chaired by Rep. Jim Jordan (R-OH), who accused Khan of "harassing" Twitter in Congressional testimony in July. But the support in the report comes from internal FTC emails and statements made by FTC staff in interviews with the Committee. So while the impetus for the report invites skepticism, the support is gathered almost entirely from FTC staff.

The report is scathing. It describes Khan's consolidation of power through omnibus resolutions that gave her office the sole power to investigate transactions and issue subpoenas, which had previously required a vote from Commissioners:

The report also surfaces frustrations from FTC staff that Khan's publicly-facing directives were abstract to the point of being unactionable, and that her guidance internally utilized "progressive buzzwords" that were difficult to interpret as concrete direction:

The report points to an internal survey that measured a marked decline in perceived "Honesty and Integrity" of the agency's leadership by its staff:

...as well as Respect for Senior Leaders:

The report also alleges that FTC staff believed that Khan was unreceptive to feedback or dissenting views, thus muting the influence of senior FTC leadership:

The report also argues that FTC staff doubted the logic of the cases that Khan pursued, questioning whether the Chair saw the successful litigation of antitrust cases as her remit (versus catalyzing Congressional action). FTC staff believed that the broad, ambitious, and abstract public-facing goals of the agency that Khan expressed were incompatible with successfully pursuing antitrust cases.

We Need Self-Driving Cars

Anyone rooting against self-driving cars is cheering for tens of thousands of deaths, year after year. We shouldn’t be burning self-driving cars in the streets. We should be celebrating…

FEB 29, 2024

The simmering anger toward autonomous vehicles—and tech writ large—ignited, literally, in San Francisco this month. A mob attacked one of Waymo’s self-driving cars in San Francisco’s Chinatown neighborhood, vandalizing the Jaguar robotaxi. Then someone threw a firework into the passenger seat. The innocent self-driving car was burnt to a crisp.

For some, this was an act of righteous anger, rage against the machine, a cry for help from people fed up with our slide into automation. “These are people who did not ask for self-driving cars, did not vote to let them onto their streets and shared spaces, doing what they can to push back,” wrote Luddite sympathizer Brian Merchant of the Chinatown arsonists.

In their report on the incident, tech publication The Verge noted, “Vandalism and defacement are time-honored parts of the human experience,” adding that “tech companies have been forced to reckon with this inevitability as they deploy their equipment in public with impunity.”

In fact, nothing could be more antihuman than rooting against self-driving cars, which, by the way, are just getting good.

I first got in a self-driving car around 2016, back when Uber piloted its initial program. During my test ride, a human backup driver took control of the wheel every few moments. I concluded that self-driving cars weren’t going to change the world anytime soon. It seemed obvious to me that Uber’s pilot was less about testing a technology that was almost ready for the road and more about persuading prospective investors that self-driving cars might one day eliminate one of its biggest costs: dangerous human drivers.

But times change. That initial hype has died down and in the past few months, I’ve ridden solo in a number of Alphabet-owned Waymos in San Francisco.

They’re amazing.

The first time I took a Waymo, I’ll admit, it was a little terrifying. You get in and you’re the only person in the car. And you’re not sitting behind the wheel. Then the car starts driving on its own.

Now that I have several Waymo trips under my belt, I’ve come to trust and enjoy them, even more than human-driven rides. It’s a smooth ride. There’s no human driver present to interrupt your phone calls. You can pick the music yourself, and, of course, the science fiction–feeling of being driven by a robot is exhilarating. I tend to sit in the front passenger seat just to get a better view.

I’ve had one hiccup, when a Waymo inexplicably went down a dead-end street and humans at HQ seemed to intervene remotely to get things back on track. But I’ve been in Ubers where human drivers rolled through stop signs and made dangerous last-minute swerves.

There was a time when I believed that self-driving cars should be held to the standard of airplanes. Every mistake needed to be rigorously understood and any human death was unforgivable. But my view has evolved over time as human drivers have continued to kill tens of thousands of people a year. We need a solution that’s meaningfully better than human drivers, yes, but we shouldn’t wait for perfection before we start getting dangerous human drivers off the streets.

Lost in all the fulminating about automation and big-tech tyranny is the fact that self-driving cars are an attempt to solve a very serious problem. Traffic fatalities are a leading cause of death in the United States for anyone between the ages of 1 and 54. About 40,000 people die in car crashes a year in the U.S., with about one-third involving drunk drivers.

There’s a natural, though irrational, human bias toward the status quo. We tend to believe that things are the way they are for a good reason. But of course, technology has drastically improved human lives and human life spans already. Why stop now that more powerful computer chips and sophisticated artificial intelligence models open up new possibilities?

We should build the best world we can, and that includes minimizing traffic deaths by reducing the number of human drivers on the roads. That’s something that the left—who advocate getting human drivers out from behind the wheel of their personal vehicles and onto buses, trains, bikes, scooters, you name it—historically supports. But self-driving cars are getting left off that list.

..More

Are We in a Stock Market Bubble?

Ray Dalio

Founder, CIO Mentor, and Member of the Bridgewater Board

February 29, 2024

What the latest readings from my bubble gauge say about the market.

As you know, I like to convert my intuitive thinking into indicators that I write down as decision rules (principles) that can be backtested and automated, then combined with other principles and bets created the same way to make up a portfolio of alpha bets. I have one of these for bubbles. Having been through many bubbles over my 50+ years of investing, about 10 years ago I described what in my mind makes a bubble, and I use that to identify them in markets—all markets, not just stocks.

I define a bubble market as one that has a combination of the following in high degrees:

High prices relative to traditional measures of value (e.g., by taking the present value of their cash flows for the duration of the asset and comparing it with their interest rates).

Unsustainable conditions (e.g., extrapolating past revenue and earnings growth rates late in the cycle when capacity limits mean that that growth can’t be sustained).

Many new and naïve buyers who were attracted in because the market has gone up a lot, so it’s perceived as a hot market.

Broad bullish sentiment.

A high percentage of purchases being financed by debt.

A lot of forward and speculative purchases made to bet on price gains (e.g., inventories that are more than needed, contracted forward purchases, etc.).

I apply these criteria to all markets to see if they’re in bubbles. When I look at the US stock market using these criteria (see the chart below), it—and even some of the parts that have rallied the most and gotten media attention—doesn’t look very bubbly. The market as a whole is in mid-range (52nd percentile). As shown in the charts, these levels are not consistent with past bubbles.

The “Magnificent 7” has driven a meaningful share of the gains in US equities over the past year. The market cap of the basket has increased by over 80% since January 2023, and these companies now constitute over 25% of the S&P 500 market cap. The Mag-7 is measured to be a bit frothy but not in a full-on bubble. Valuations are slightly expensive given current and projected earnings, sentiment is bullish but doesn’t look excessively so, and we do not see excessive leverage or a flood of new and naïve buyers. That said, one could still imagine a significant correction in these names if generative AI does not live up to the priced-in impact.

In the remainder of this post, I’ll walk through each of the pieces of the bubble gauge for the US stock market as a whole and show you how recent conditions compare to historical bubbles. While I won’t show you exactly how this indicator is constructed, because that is proprietary, I will show you some of the sub-aggregate readings and some indicators.

Each of these six influences is measured using a number of stats that are combined into gauges. The table below shows the current readings of each of these gauges for the US equity market. It shows how the conditions stack up today for US equities in relation to past times. Our readings suggest that, while equities may have rallied meaningfully, we’re unlikely to be in a bubble.
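Because the actual indicator is proprietary, the following is only a hypothetical sketch of how six sub-gauges could be rolled into one percentile reading: each influence is scored as a percentile of its own history, and the six scores are averaged. The influence names, the equal default weights, the tie handling, and the example readings are all assumptions made for illustration, not Bridgewater's method.

```python
# Hypothetical composite gauge: score each of the six influences as a percentile
# of its own history, then take a weighted average. Equal weights and the example
# readings are assumptions; the real indicator's construction is proprietary.
from bisect import bisect_left, bisect_right
from typing import Optional

INFLUENCES = (
    "prices_vs_traditional_value",
    "unsustainable_conditions",
    "new_naive_buyers",
    "bullish_sentiment",
    "debt_financed_purchases",
    "forward_speculative_purchases",
)


def percentile_rank(history: list[float], value: float) -> float:
    """Percentile (0-100) of `value` within `history`; ties count as half."""
    ordered = sorted(history)
    below = bisect_left(ordered, value)
    ties = bisect_right(ordered, value) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def bubble_gauge(sub_gauges: dict[str, float],
                 weights: Optional[dict[str, float]] = None) -> float:
    """Weighted average of the six sub-gauge percentiles (equal weights by default)."""
    if weights is None:
        weights = {name: 1.0 for name in INFLUENCES}
    total_weight = sum(weights[name] for name in INFLUENCES)
    return sum(sub_gauges[name] * weights[name] for name in INFLUENCES) / total_weight


# Made-up sub-gauge percentiles, only to show the mechanics.
example_readings = {
    "prices_vs_traditional_value": 80.0,
    "unsustainable_conditions": 60.0,
    "new_naive_buyers": 30.0,
    "bullish_sentiment": 65.0,
    "debt_financed_purchases": 25.0,
    "forward_speculative_purchases": 40.0,
}
toy_history = [12.0, 15.0, 18.0, 20.0, 25.0]  # made-up history for one stat

print(f"Composite reading: {bubble_gauge(example_readings):.0f}th percentile")
print(f"Toy stat at 18.0:  {percentile_rank(toy_history, 18.0):.0f}th percentile")
```

Averaging percentiles keeps each influence on the same 0-100 scale, so one extreme input cannot dominate the composite unless it is given a larger weight.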

For the Mag-7, some of our readings look frothy, but we do not see bubbly conditions in aggregate. We have somewhat lower confidence in this determination because we don’t have a high-confidence read on how impactful generative AI will turn out to be, and that is a significant influence on the expected cash flows of many of these companies.

The next few sections go through each of these sub-gauges in more detail.

Video of the Week

AI of the Week

Elon Musk Sues OpenAI and Sam Altman for Violating the Company’s Principles

Musk said the prominent A.I. start-up had prioritized profit and commercial interests instead of seeking to benefit humanity.


March 1, 2024

Elon Musk sued OpenAI and its chief executive, Sam Altman, accusing them of breaching a contract by prioritizing profit and commercial interests in developing artificial intelligence over the public good.

Mr. Musk, who helped create OpenAI with Mr. Altman and others in 2015, said the company’s multibillion-dollar partnership with Microsoft represented an abandonment of its founding pledge to carefully develop A.I. and make the technology publicly available.

“OpenAI has been transformed into a closed-source de facto subsidiary of the largest technology company, Microsoft,” said the lawsuit, which was filed Thursday in Superior Court in San Francisco.

The lawsuit is the latest chapter in a fight between the former business partners that has been simmering for years. After Mr. Musk left OpenAI’s board in 2018, the company went on to become a leader in the field of generative A.I. and created ChatGPT, a chatbot that can produce text and respond to queries in humanlike prose. Mr. Musk, who has his own A.I. company, called xAI, said OpenAI was not focused enough on the technology’s risks.

..More

I Spent a Week With Gemini Pro 1.5—It’s Fantastic

When it comes to context windows, size matters

BY DAN SHIPPER, FEBRUARY 23, 2024

I got access to Gemini Pro 1.5 this week, a new private beta LLM from Google that is significantly better than previous models the company has released. (This is not the same as the publicly available version of Gemini that made headlines for refusing to create pictures of white people. That will be forgotten in a week; this will be relevant for months and years to come.)

Gemini 1.5 Pro read an entire novel and told me in detail about a scene hidden in the middle of it. It read a whole codebase and suggested a place to insert a new feature—with sample code. It even read through all of my highlights on reading app Readwise and selected one for an essay I’m writing.

Somehow, Google figured out how to build an AI model that can comfortably accept up to 1 million tokens with each prompt. For context, you could fit all of Eliezer Yudkowsky’s 1,967-page opus Harry Potter and the Methods of Rationality into every message you send to Gemini. (Why would you want to do this, you ask? For science, of course.)

Gemini Pro 1.5 is a serious achievement for two reasons:

1) Gemini Pro 1.5’s context window is far bigger than the next closest models. While Gemini Pro 1.5 is comfortably consuming entire works of rationalist doomer fanfiction, GPT-4 Turbo can only accept 128,000 tokens. This is about enough to accept Peter Singer’s comparatively slim 354-page volume Animal Liberation, one of the founding texts of the effective altruism movement.

Last week GPT-4’s context window seemed big; this week—after using Gemini Pro 1.5—it seems like an amount that would curl Derek Zoolander’s hair:

2) Gemini Pro 1.5 can use the whole context window. In my testing, Gemini Pro 1.5 handled huge prompts wonderfully. It’s a big leap forward from current models, whose performance degrades significantly as prompts get bigger. Even though their context windows are smaller, they don’t perform well as prompts approach their size limits. They tend to forget what you said at the beginning of the prompt or miss key information located in the middle. This doesn’t happen with Gemini.
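To get a feel for the window sizes quoted above (128,000 tokens versus 1 million), here is a rough fit check. It assumes about 0.75 English words per token, a common rule of thumb; real counts depend on each model's tokenizer. The `reserve` allowance for the question and answer, the function names, and the example word counts are hypothetical, not measurements of any particular book.

```python
# Rough check of whether a text fits in a model's context window.
# Assumption: ~0.75 English words per token (rule of thumb); real counts depend
# on the tokenizer, so treat the results as estimates only.
CONTEXT_WINDOWS = {
    "GPT-4 Turbo": 128_000,
    "Gemini Pro 1.5": 1_000_000,
}
WORDS_PER_TOKEN = 0.75  # assumed average for English prose


def estimated_tokens(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate a text's token count from its word count."""
    return round(word_count / words_per_token)


def fits(word_count: int, window: int, reserve: int = 4_000) -> bool:
    """True if the text plus `reserve` tokens (question and answer) fits the window."""
    return estimated_tokens(word_count) + reserve <= window


for words in (90_000, 600_000):  # arbitrary example lengths, not specific books
    verdicts = {name: fits(words, size) for name, size in CONTEXT_WINDOWS.items()}
    print(f"{words:>7,} words ~ {estimated_tokens(words):>9,} tokens -> {verdicts}")
```

A short nonfiction book lands near the GPT-4 Turbo limit under these assumptions, while a text several times longer only fits the 1-million-token window.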

These context window improvements are so important because they make the model smarter and easier to work with out of the box. It might be possible to get the same performance from GPT-4, but you’d have to write a lot of extra code in order to do so. I’ll explain why in a moment, but for now you should know: Gemini means you don’t need any of that infrastructure. It just works.
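The "extra code" for a smaller context window usually means retrieval: split the long document into chunks, rank the chunks against the question, and send only the best ones that fit. The sketch below is a generic illustration of that idea, not the author's code or any product's pipeline. It ranks chunks by simple word overlap where real systems typically use embeddings, and the stopword list, chunk size, word budget, and all function names are assumptions.

```python
# Minimal chunk-and-retrieve sketch: split a long document into chunks, score each
# chunk against the question, and keep the best chunks that fit a word budget.
# Real pipelines usually rank with embeddings; word overlap keeps this self-contained.
import re
from collections import Counter

STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "on", "what", "when",
             "who", "did", "does", "was", "is", "he", "his", "after", "happened"}


def terms(text: str) -> list[str]:
    """Lowercased words with common stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def chunk(text: str, words_per_chunk: int) -> list[str]:
    """Split text into consecutive chunks of at most `words_per_chunk` words."""
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk])
            for i in range(0, len(words), words_per_chunk)]


def build_context(document: str, question: str,
                  budget_words: int = 1_000, words_per_chunk: int = 200) -> str:
    """Return the highest-scoring chunks that fit the budget, in document order."""
    chunks = chunk(document, words_per_chunk)
    query = set(terms(question))
    scores = []
    for c in chunks:
        counts = Counter(terms(c))
        scores.append(sum(counts[t] for t in query))
    ranked = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)
    chosen, used = [], 0
    for i in ranked:
        if scores[i] == 0:
            break  # remaining chunks share no terms with the question
        size = len(chunks[i].split())
        if used + size <= budget_words:
            chosen.append(i)
            used += size
    return "\n\n".join(chunks[i] for i in sorted(chosen))


# Usage with a toy document: only the chunk mentioning the catch is kept.
document = (" ".join(["filler"] * 400) + " Reuven caught the ball with his face "
            + " ".join(["filler"] * 400))
question = "What did Reuven do when he caught the ball?"
context = build_context(document, question, budget_words=200)
prompt = f"Context:\n{context}\n\nQuestion: {question}"
```

Every piece here (chunk size, ranking, budget) is a knob that can silently drop the passage that matters, which is the kind of infrastructure a very large, fully usable context window lets you skip.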

Let’s walk through an example, and then talk about the new use cases that Gemini Pro 1.5 enables.

Why size matters (when it comes to a context window)

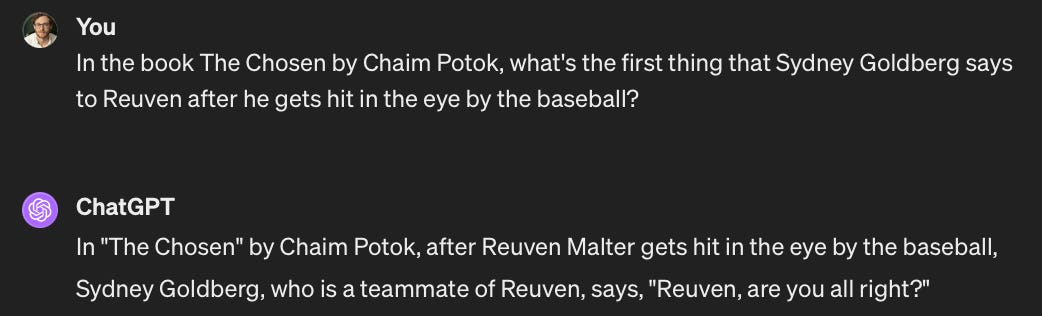

I’ve been reading Chaim Potok’s 1967 novel, The Chosen. It features a classic enemies-to-lovers storyline about two Brooklyn Jews who find friendship and personal growth in the midst of a horrible softball accident. (As a Jew, let me say that yes, “horrible softball accident” is the most Jewish inciting incident in a book since Moses parted the Red Sea.)

In the book, Reuven Malter and his Orthodox yeshiva softball team are playing against a Hasidic team led by Danny Saunders, the son of the rebbe. In a pivotal early scene, Danny is at bat and full of rage. He hits a line drive toward Reuven, who catches the ball with his face. It smashes his glasses, spraying shards of glass into his eye and nearly blinding him. Despite his injury, Reuven catches the ball. The first thing his teammates care about is not his eye or the traumatic head injury he just suffered—it’s that he made the catch.

If you’re a writer like me and you’re typing an anecdote like the one I just wrote, you might want to put into your article the quote from one of Reuven’s teammates right after he caught the ball to make it come alive.

If you go to ChatGPT for help, it’s not going to do a good job initially:

This is wrong. Because, as I said, Sydney Goldberg did not care about Reuven’s injury—he cared about the game! But all is not lost. If you give ChatGPT a plain text version of The Chosen and ask the same question, it’ll return a great answer:

This is correct! (It also confirms for us that Sydney Goldberg has his priorities straight.) So what happened?

Reddit says it’s made $203M so far licensing its data

Kyle Wiggers (@kyle_l_wiggers) / 2:27 PM PST, February 22, 2024


Reddit’s prospects as it barrels toward a stock market listing have a lot more to do with relationships with AI vendors such as OpenAI than one might expect.

In its IPO prospectus filed today with the U.S. Securities and Exchange Commission, Reddit repeatedly emphasized how much it thinks it stands to gain — and has gained — from data licensing agreements with the companies training AI models on its over 1 billion posts and more than 16 billion comments.

“In January 2024, we entered into certain data licensing arrangements with an aggregate contract value of $203.0 million and terms ranging from two to three years,” the prospectus reads. “We expect a minimum of $66.4 million of revenue to be recognized during the year ending December 31, 2024 and the remaining thereafter.”
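The prospectus figures can be broken down with simple arithmetic. The first two results follow directly from the quoted numbers. The even two- and three-year splits are a hypothetical comparison only: the prospectus does not say how the $203.0 million is spread across contracts with two- to three-year terms.

```python
# Arithmetic on the figures quoted from Reddit's IPO prospectus.
# The straight-line splits are a hypothetical comparison, not a disclosure.
total_contract_value = 203.0  # $M, aggregate value of January 2024 deals
min_2024_revenue = 66.4       # $M, minimum expected to be recognized in 2024

remaining = total_contract_value - min_2024_revenue  # recognized after 2024
share_2024 = min_2024_revenue / total_contract_value

# Assumption: what an even split of the full $203.0M would look like.
straight_line = {years: total_contract_value / years for years in (2, 3)}

print(f"Recognized after 2024: ${remaining:.1f}M")
print(f"2024 minimum share:    {share_2024:.1%}")
for years, per_year in straight_line.items():
    print(f"Even split over {years} years: ${per_year:.1f}M per year")
```

Roughly a third of the contract value is expected in 2024, with the rest recognized in later years.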

Now, it’s a mystery as to which AI vendors are licensing data from Reddit so far. Earlier this week, Bloomberg and Reuters reported that a “large unnamed AI company” — possibly Google — had entered into a licensing agreement worth about $60 million on an annualized basis. But OpenAI wouldn’t be a surprising customer either, especially considering that OpenAI CEO Sam Altman has an 8.7% stake in Reddit (making him the third-largest shareholder) and was once a member of the company’s board of directors.

Why’s Reddit data valuable? As Reddit explains, AI models “learn” from examples to craft essays, code, emails, articles and more, and vendors like OpenAI scrape the web for millions to billions of these examples to add to their training sets. Some examples are in the public domain. Others aren’t, or — in the case of Reddit content — come under restrictive licenses that require citation or specific forms of compensation.

Reddit previously didn’t gate access to its data for AI training purposes. But it reversed course last year, arguing that its data shouldn’t be — in CEO Steve Huffman’s words — “[given] to some of the largest companies in the world for free.”

“[Our] data APIs are able to provide real-time access to evolving and dynamic topics such as sports, movies, news, fashion, and the latest trends,” the prospectus continues. “We believe that Reddit’s massive corpus of conversational data and knowledge will continue to play a role in training and improving large language models. As our content refreshes and grows daily, we expect models will want to reflect these new ideas and update their training using Reddit data.”

..More

News Of the Week

The Supreme Court appeared lost in a massive case about free speech online

The justices look likely to reinstate Texas and Florida laws that seize control of much of the internet — but not for long.

By Ian Millhiser Feb 26, 2024, 4:30pm EST

Ian Millhiser is a senior correspondent at Vox, where he focuses on the Supreme Court, the Constitution, and the decline of liberal democracy in the United States. He received a JD from Duke University and is the author of two books on the Supreme Court.

The Supreme Court appears inclined to reinstate Texas and Florida laws seizing control of much of the internet — both of which are currently blocked by court orders — because those laws are incompetently drafted.

If that outcome sounds confusing, don’t worry, it is. Monday’s oral arguments in Moody v. NetChoice and NetChoice v. Paxton were messy and often difficult to follow. And the ultimate outcome in these cases is likely to turn on distinctions that even the lawyers found it difficult to keep track of.

Before we dig into any of that, however, it’s useful to understand what these cases are actually about. Texas and Florida’s Republican legislatures both passed similar, but not identical, laws that would effectively seize control of content moderation at the “big three” social media platforms: Facebook, YouTube, and Twitter (the platform that Elon Musk insists on calling “X”).

These laws’ advocates are quite proud of the fact that they were enacted to prevent moderation of conservative speech online, even if the big three platforms deem some of that content (such as insurrectionist or anti-vax content) offensive or harmful. Florida Gov. Ron DeSantis (R) said his state’s law exists to fight supposedly “biased silencing” of “our freedom of speech as conservatives ... by the ‘big tech’ oligarchs in Silicon Valley.” Texas Gov. Greg Abbott (R) said his state’s law targets a “dangerous movement by social media companies to silence conservative viewpoints and ideas.”

At least five justices — Chief Justice John Roberts, plus Justices Sonia Sotomayor, Elena Kagan, Brett Kavanaugh, and Amy Coney Barrett — all seemed to agree that the First Amendment does not permit this kind of government takeover of social media moderation. There is a long line of Supreme Court cases, stretching back at least as far as Miami Herald v. Tornillo (1974), holding that the government may not force newspapers and the like to publish content they do not wish to publish. And these five justices appeared to believe that cases like Tornillo should also apply to social media companies.

Venture Capital Has Never Felt More Polarized

By Kate Clark

Feb 29, 2024, 5:22pm PST

Venture capital leaders flew to Los Angeles this week for the annual Upfront Summit, a two-day conference featuring investors, founders and a smattering of celebrities like Lady Gaga. The energy was high and the references to the painful VC correction that pummeled valuations and knocked out VC firms were rare. The downturn is over, apparently!

The cast of characters that hit the stage ran the gamut from venture capitalists prognosticating on the potential harms of artificial intelligence or how to rectify global problems through investments, to the unfiltered contrarians who ranted about the “woke mind virus” or threw darts at competing investors.

To me, the strikingly different tones reflect the increasingly polarized state of the venture capital business and the varying opinions about the true role of a venture capitalist. The discussions also revealed a rising anxiety amongst VC investors that the current generative AI boom may not deliver strong returns.

Off stage, the gossip spewed. The hot topic was the recent shake-up at the Peter Thiel-led VC firm Founders Fund. Two of the firm’s partners, Brian Singerman and Trae Stephens, spoke at the conference, as did Keith Rabois, the partner who recently left and returned to his prior firm, Khosla Ventures.

Rabois addressed rumors of a clash at the firm and implied that Founders Fund’s hands-off approach is inferior.

“I have strong views on how to make money in venture,” he said. “That’s not for everybody.”

Big Tech’s influence on startup valuations was top of mind, too. Venture capitalists argued they shouldn’t have to pay the same valuations as Google or Microsoft, which have invested in startups such as Anthropic and Inflection while also providing them with cloud computing services.

“Google doesn’t care about valuation because the money is coming back,” Lightspeed Venture Partners partner Nicole Quinn said. “VCs need to be really strict and say this is not good for the business.”

Founders Fund’s Singerman, in keeping with the fund’s more bearish stance on AI deals, said he fears that “a lot of the returns from this wave are going to go to incumbents” like Google, Microsoft and Amazon. “I think you are competing against incumbents in a way that we’ve never [seen] before.”

He also offered up a number of zingers. On the firm’s disinterest in AI, he said: “We tend to just put a bunch of money in OpenAI and call it a day.” (Founders Fund invested in OpenAI at a $29 billion valuation but sat out the recent tender that valued the startup at more than $80 billion).

He also expanded on the firm’s historic reluctance to take board seats, saying: “you have all the downside and none of the upside,” adding that he’s “not a governance guy.” Instead, he offers companies simple advice: “Don’t do anything illegal!”

Later, Joe Lonsdale, a general partner at 8VC and a co-founder of Palantir, offered a signature rant on the “woke mind virus” and argued that “free thinking is under attack.”

..More

A Growing List Of Unicorns Haven’t Raised Funding For 3-Plus Years

February 26, 2024

Startups — much like cars — can usually go only so long before needing to refuel.

How long, of course, will vary. Some go years before topping off again following a big fundraise. Others need to refill more frequently.

The longer it takes, however, the less likely it becomes that fresh investment is forthcoming. That’s concerning given that for a growing list of U.S. unicorns, it’s been more than three years since their last fundraise, an analysis of Crunchbase data shows.

Unicorns that haven’t raised for 3+ years

Who’s on the list? Using Crunchbase data, we identified a sample set of 28 private companies that have a peak valuation of $1 billion or more but haven’t raised a round for years. Most closed their last round between three and four years ago.

Below, we list all the selected companies, along with business models and prior funding:

Representative industries range from connected fitness to enterprise software to digital health. There are consumer-facing companies that generated a lot of buzz several years ago — like local secondhand sales platform OfferUp, luggage-maker Away, or connected fitness brand Zwift.

In many ways, the list is reminiscent of a playlist featuring the greatest hits of 2020. These days, they seem like the startup equivalent of the band that can do the county fair circuit, but won’t be filling stadiums. They’re still around, just not top of mind.

Some were particularly prodigious fundraisers at their peak. Of the 28 companies on our list, five have raised $500 million or more in equity funding to date.

Of those, the largest fundraiser is Miami-based cloud kitchen operator Reef Technology, which raised $1.5 billion in two rounds led or co-led by SoftBank in late 2018 and 2020. But investor interest in the space, which peaked during the pandemic, has since dried up.

Others that raised more than $500 million in total funding also haven’t closed a new round since 2020. One is Symphony Communication Services, a provider of collaboration software for the financial services industry. Another is Cambridge Mobile Telematics, a road-safety platform, which raised $500 million from SoftBank in 2018.

Layoffs and cutbacks abound, along with some closures

Not everyone on the list is still around. Quite a few that have endured, meanwhile, have made some steep cuts along the way.

Proteus Digital Health is one that didn’t make it. The Redwood City, California-based company, a developer of sensor-equipped “smart pills,” raised more than $490 million and was once valued at $1.5 billion. It filed for bankruptcy protection in 2020.

Packable, the parent company of Amazon seller Pharmapacks, also hit some apparently insurmountable hurdles. The company filed for bankruptcy in 2022 after plans for a SPAC merger fell through.

On the consumer-facing front, meanwhile, we’re also seeing some stiff cutbacks. At Zwift, for example, the company’s co-CEO stepped down earlier this month amid a broader round of layoffs.

Also this month, luggage-maker Away reportedly cut staff by 25%.

Given the current tough fundraising and exit environment, it was actually pretty common to see companies on our list with one or more layoff announcements in the past two years. We did not attempt a comprehensive tally.

The clock is ticking

So how much more runway do these onetime unicorns have ahead? A prior Crunchbase News analysis found startups that raise a round commonly have only a short break before they’re fundraising again. Among U.S. companies that go on to close Series B funding after a Series A, for instance, the median is just under 2 years to do so, according to data from 2012 to 2023.

It’s reasonable to expect companies funded around the market peak from 2020 to early 2022 might have a bit longer to wait. Many startups raised exceptionally large rounds, and have subsequently cut burn rates to make their cash stretch further.

But while fundraising can take longer than expected, it can’t be delayed indefinitely. Crunchbase data shows it’s uncommon to see a gap of four years or more between Series A and Series B rounds, for example. Historical trends for later rounds aren’t too different.

..More

How Europe learned to stop worrying and love TikTok

A year after banning the Chinese-owned app, Europe’s politicians are popping up on TikTok to fight for re-election.

FEBRUARY 23, 2024 6:00 AM CET

Europe's clampdown on TikTok may have come quickly, but its re-embrace of the popular video-sharing application has been no less emphatic.

A year after EU governments moved to restrict the use of the Chinese-owned social media app over security concerns, politicians and parties are flocking to TikTok in droves. Behind their renewed love for the platform is their pursuit of the youth vote in June's EU election.

“We, as Greens, have been very, very critical towards TikTok, like all the EU institutions,” said German Greens lawmaker Anna Cavazzini. But “if you want to reach young voters as a progressive candidate, we also have to be on TikTok.” Cavazzini created her account a week ago and is running for a second mandate.

The EU executive was first to impose restrictions on officials using TikTok, asking bureaucrats exactly a year ago to remove the app from their work and work-related phones. The EU's Council of Ministers followed suit, as did the European Parliament. In the weeks that followed, many national governments and ministries restricted their officials from using TikTok; many, if not all, of those restrictions still apply today.

The bans — which followed similar moves by the U.S. government — were aimed at limiting the cybersecurity, data security and surveillance risks associated with the app.

TikTok, based in the U.S. and Singapore, is owned by Chinese tech company ByteDance. That has prompted concerns TikTok data could be susceptible to surveillance by Chinese security services, because the country's national legislation requires companies to collaborate with its intelligence agencies.

But far from being shunned like Chinese companies Huawei and ZTE — which have faced a backlash over similar security concerns — TikTok is seeing Europe's politicians, parties and institutions flock to the platform. The app boasts over 1 billion young users worldwide, with an estimated 142 million in the EU this year.

TikTok said this month that nearly one in three members of the European Parliament are now on its app. The legislature has said it will launch its own account to connect with first-time voters and to respond to disinformation ahead of the upcoming June 6-9 election.

Even U.S. President Joe Biden joined the app this month to campaign ahead of America's November presidential elections.

..More

Techstars is evolving and growing

February 26, 2024 By David Cohen

Recently, this post about our evolution came out from Techstars. Of course, as Chairman, I know of, and strongly support, the strategic changes that are happening at Techstars. We are strengthening our ability to invest in more startups and their founders than any other company in the world. And helping founders be successful has always been our goal.

I also knew that people would be sad about us no longer running accelerators in certain markets. I was personally absolutely super sad about closing the original Techstars accelerator location in Boulder after this next class, as well as Seattle (another of the original locations). The most common reaction that I heard was that people were bummed or sad about it, but understood that business decisions get made. But people were trying to understand the “why” behind the business decisions.

This leads to the second most common thing I heard, namely that people didn’t really like the messaging, partly because it didn’t satisfactorily address the “why.” I know the team has taken that feedback constructively. For those of you in Boulder or Seattle who interpreted the communications as a disparagement of the startup community and its impact, that was definitely not the intent. We’re sorry for that.

So… the question remains, why close certain accelerator locations?

First, it is important to note that different Techstars locations also have different funding sources. We’re only proactively shutting down some of the program locations that are funded by our Techstars Accelerator Fund (TSA), which historically has funded programs in a dozen cities. Instead, going forward, TSA will concentrate on funding a larger number of companies through programs in four primary cities. The separately funded partner accelerator programs, which operate in more than 30 locations worldwide, are unchanged. So in 2024, and into the foreseeable future, we will still run more than 50 cohorts in 30+ locations globally.

Second, I’ll refer you back to our original post on this, which is a better source of information than social media. It says:

“We know that founders are more likely to succeed when they are plugged into a thriving ecosystem — because no one succeeds alone. So we’re doubling-down on running in-person programs in the largest tech ecosystems so entrepreneurs can be surrounded by the highest concentration of VCs, startups, talent, mentors, and support.”

By expanding our presence in these top markets (SF, NY, Boston, LA), we can better support all of our portfolio companies for the coming decades. This might feel like a departure from our roots of starting programs in a large number of startup communities, but over the last 17 years, the world has changed. More business is being done virtually, communities are more interconnected, and a physical accelerator in a particular city is less important than building the best possible support for founders located there. Ultimately, if founders from everywhere and anywhere are going to succeed, we need to help plug them into these larger ecosystems with the most vibrant and robust VC communities, largest talent pools, and significant numbers of other early- and late-stage startups.

In addition, as Techstars continues to become more efficient operationally, we decided to consolidate our Colorado activity in Denver rather than running operations in both Denver and Boulder. We recently opened a new office in downtown Denver where we will operate our Colorado-based Workforce Development accelerator, and it will serve as an in-person hub for more than 65 Denver/Boulder employees. They are not being asked to move or relocate. We did, however, change our headquarters: our primary mailing address and office is now NYC instead of Boulder. Most of our executive team and more than 50 employees are in that region. New York is the second largest VC ecosystem in the world, and officially making it our HQ connects Techstars with more sources of capital, other VCs, founders, talent and market reach.

As Techstars retools, some former staffers say it lost focus on what made it successful

Alex Wilhelm @alex / 2:52 PM PST•February 23, 2024


Well-known accelerator group Techstars announced a slew of changes to its operations this week, including the shuttering of some of its city-based programs.

Social media channels were lit with criticism from former members, who argued that the famed startup accelerator has lost focus on the very thing that historically made it so successful: city-based operations in areas not swarming with other such programs. And one former Techstars managing director (MD) told TechCrunch that the move away from local fundraising for city-based accelerator programs was an error.

The upcoming closure of its Boulder and Seattle accelerators comes after the group decided to hit pause on its Austin-based program, an event that TechCrunch reported on in late 2023.

Given its extensive global footprint and lengthy history of investing in early-stage startups, changes to how Techstars operates will affect founders and local venture ecosystems around the world.

The local connection

In the wake of Techstars’ decision to pull back from certain markets, former Techstars Seattle managing director Chris DeVore penned a lengthy note criticizing the group’s strategic choices, including centralizing its fundraising efforts, and building programs with corporate sponsors as financial anchors.

The org’s CEO Maëlle Gavet hopped into that discussion and publicly engaged in a back-and-forth with him.

But others privately echoed at least some of DeVore’s sentiments to TechCrunch.

..More

Startup of the Week

Figure rides the humanoid robot hype wave to $2.6B valuation

The Bay Area firm is raising $675M from Microsoft, OpenAI, Amazon, Nvidia, Intel Capital and more

Brian Heater @bheater / 5:00 AM PST•February 29, 2024


Today Figure confirmed long-standing rumors that it’s been raising more money than God. The Bay Area-based robotics firm announced a $675 million Series B round that values the startup at $2.6 billion post-money.

The lineup of investors is equally impressive. It includes Microsoft, OpenAI Startup Fund, Nvidia, Amazon Industrial Innovation Fund, Jeff Bezos (through Bezos Expeditions), Parkway Venture Capital, Intel Capital, Align Ventures and ARK Invest. It’s a mind-boggling sum of money for what is still a young startup, with an 80-person headcount. That last bit will almost certainly change with this round.

Figure already had a lot to work with. Founder Brett Adcock, a serial entrepreneur, bootstrapped the company, putting in an initial $100 million to get it started. Last May, it added $70 million in the form of a Series A. I used to think “Figure” was a reference to the robot’s humanoid design and perhaps an homage to a startup that’s figuring things out. Now it seems it might also be a reference to the astronomical funding figure it’s raised thus far.

X of the Week

Hopin's UK business enters liquidation as it transfers HQ to the US

The company was once valued at $7.75bn