A reminder for new readers. That Was The Week includes a collection of my selected readings on critical issues in tech, startups, and venture capital. I selected the articles because they are of interest to me. The selections often include things I entirely disagree with. But they express common opinions, or they provoke me to think. The articles are sometimes long snippets to convey why they are of interest. Click on the headline, contents link or the ‘More’ link at the bottom of each piece to go to the original. I express my point of view in the editorial and the weekly video below.

Congratulations to this week’s chosen creators: @sama, @openai, @om, @krishnanrohit, @peternixey, @eringriffith, @AndreRetterath, @ry_paddy, @cutler_max, @Kantrowitz, @PranavDixit, @ttunguz, @geneteare, @sarahfielding_, @carlfranzen

Contents

Math Problems with ChatGPT 4o

News Of the Week

Startup of the Week

X of the Week

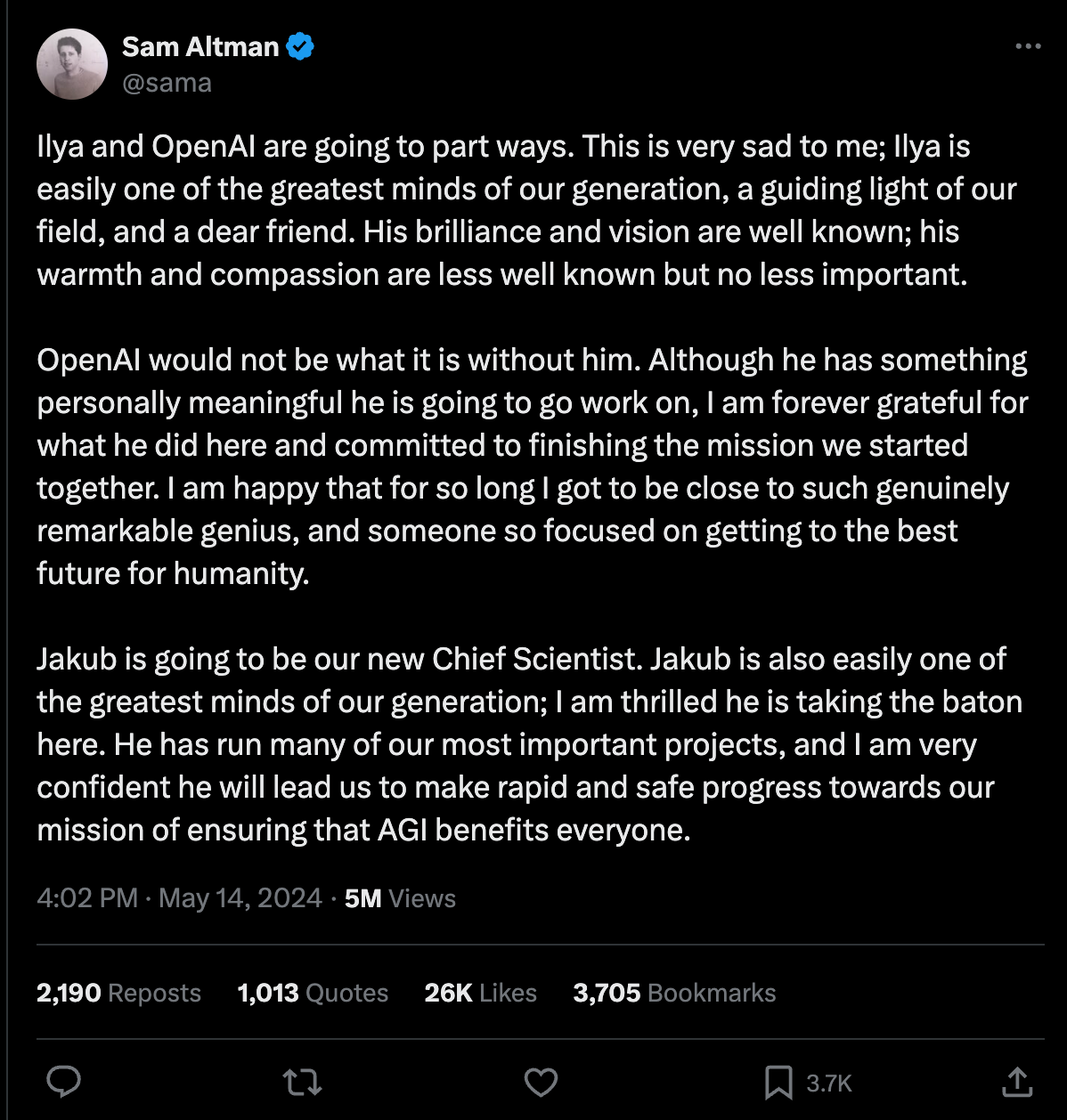

Sam Altman on Ilya leaving OpenAI

Editorial

OpenAI and Google announced the next iteration of their AI offerings this week. As @Om Malik explains in one of this week’s Essays of the Week, OpenAI won this high-stakes battle.

Make no mistake — the reason OpenAI is achieving all this success (and hype) is because they have a product that for now is stellar. Nonetheless, OpenAI has created excitement that reminds me of the emergence of Palm, and later social networks. They stoked the imagination, and possibilities. Of course!

Om is right. Sam Altman did his own post later in the day of the announcements:

First, a key part of our mission is to put very capable AI tools in the hands of people for free (or at a great price). I am very proud that we’ve made the best model in the world available for free in ChatGPT, without ads or anything like that.

Free to consumers, or 8 billion earthlings, is possible due to the revenues OpenAI can make from business users. It represents a very big step forward. The company also released a desktop app, initially on the Mac, that can interact with other apps.

But for me, the best way to think about what was delivered, aside from free, is summed up in this week’s title—Eyes, Ears, Hands, and Mouth. OpenAI has enabled every smartphone camera on the planet to become the AI's eyes and ears. Both still images and video can be used as inputs to a conversation. Of course, the microphone, too. This week’s video of the week shows this for teaching a student how to solve a math problem. The mouth reference acknowledges that we can now speak to ChatGPT in a human-like way, including cross-talking and interruptions. And, of course, we can still type using our hands.

This changes the problem of giving AI data—images, video, sound, and speech can all become data for input and learning.

They also gave ChatGPT a memory, so it can remember things across sessions. That makes the list of what is now possible much longer.

Rohit Krishnan writes about what comes next in his essay:

The true change will come once we can enable large numbers of them to work together. And we’re getting glimpses of how they can do this across all modalities that are important to use. Whether that’s writing code or seeing something or listening to something or writing or reading something or a mixture of all of these.

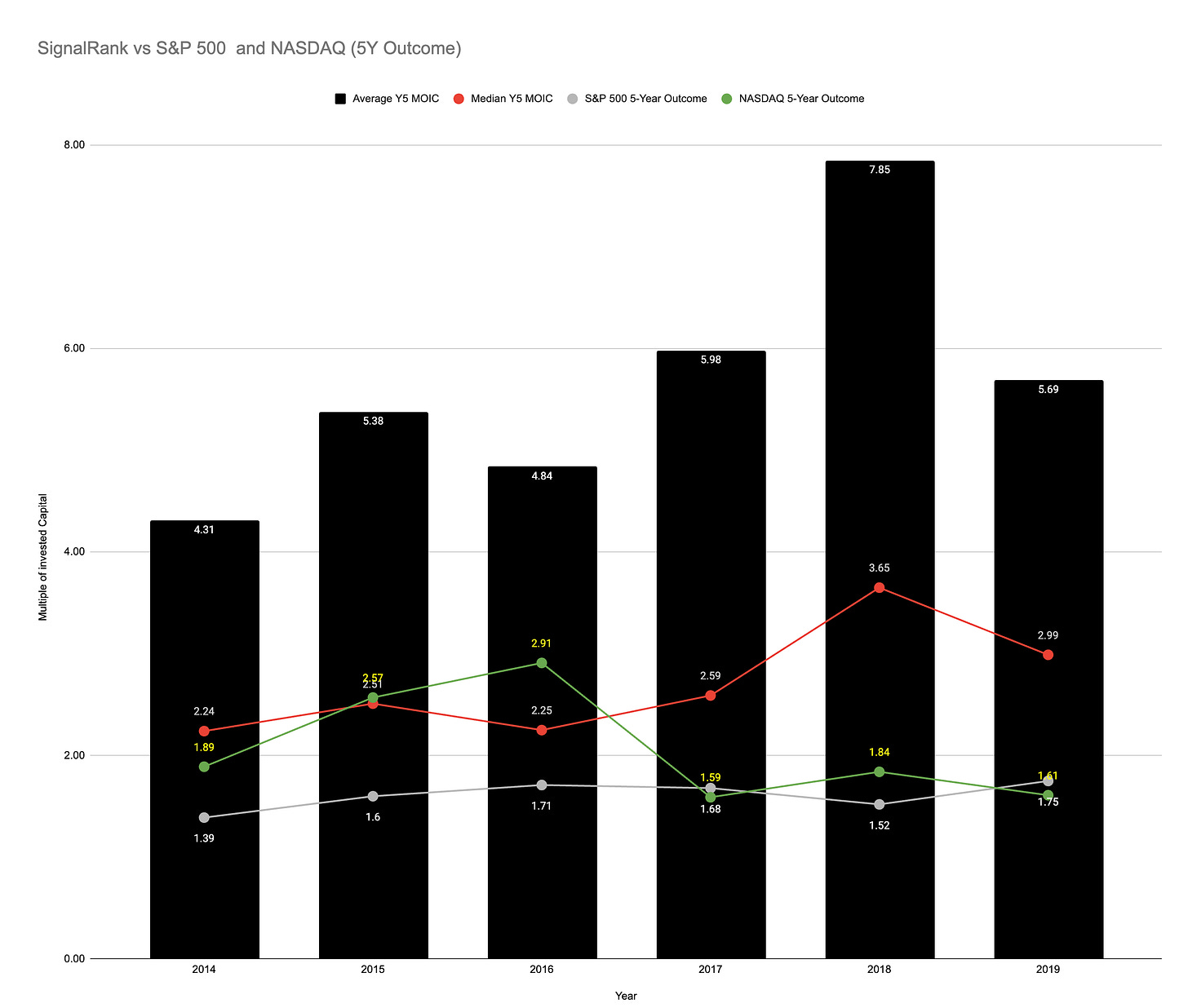

He is talking about AI to AI interactions that can produce even better and faster outcomes. I did this myself earlier in the week. I was asking ChatGPT to create a chart showing the performance of the SignalRank Index against the S&P 500 and the NASDAQ over the 2014-2019 period.

ChatGPT did not have the NASDAQ data, so I asked Claude.ai for it. Once I had it I went back to ChatGPT and it completed the work. Here’s the chart:

It seems clear that almost any problem that can be described, shown, or listened to can now be given to ChatGPT and answered.

Eyes, ears, hands, and mouths are all part of our intelligent robotic future, too. The building blocks for rapid productivity advancement are being put into place.

Marc Andreessen and Ben Horowitz discuss the implications for manufacturing in their podcast this week.

This was a very important week.

Essays of the Week

GPT-4o

By Sam Altman

There are two things from our announcement today I wanted to highlight.

First, a key part of our mission is to put very capable AI tools in the hands of people for free (or at a great price). I am very proud that we’ve made the best model in the world available for free in ChatGPT, without ads or anything like that.

Our initial conception when we started OpenAI was that we’d create AI and use it to create all sorts of benefits for the world. Instead, it now looks like we’ll create AI and then other people will use it to create all sorts of amazing things that we all benefit from.

We are a business and will find plenty of things to charge for, and that will help us provide free, outstanding AI service to (hopefully) billions of people.

Second, the new voice (and video) mode is the best computer interface I’ve ever used. It feels like AI from the movies; and it’s still a bit surprising to me that it’s real. Getting to human-level response times and expressiveness turns out to be a big change.

The original ChatGPT showed a hint of what was possible with language interfaces; this new thing feels viscerally different. It is fast, smart, fun, natural, and helpful.

Talking to a computer has never felt really natural for me; now it does. As we add (optional) personalization, access to your information, the ability to take actions on your behalf, and more, I can really see an exciting future where we are able to use computers to do much more than ever before.

Finally, huge thanks to the team that poured so much work into making this happen!

MAY 16, 2024 | On Technology | Om Malik

How OpenAI Stole Google’s Thunder

I have been thinking about the OpenAI and Google I/O announcements — and how they represent two polar opposite strategies, and thus diametrically opposed perceptions of two entities that are in a small, elite club that is creating a direction for a set of technologies known as “artificial intelligence.”

Let’s start with OpenAI’s announcement just a day before Google I/O:

It introduced GPT-4o, which it describes as a large multimodal model that accepts image and text inputs and produces text outputs. By accepting both image and text inputs, the model opens up a whole new range of possibilities for AI applications.

It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

How does this happen? Previously you used Voice Mode to talk to ChatGPT. This had latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4) on average. Voice Mode was a three-step process: (a model) transcribes audio to text, (using GPT-3.5 or GPT-4) takes in text, and outputs text, and a third simple model converts that text back to audio. Now, OpenAI says, “a single new model works end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network.”

It is faster and 50% cheaper.
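
To put those latency figures side by side, here is a small Python sketch that computes the speedup implied by the averages quoted above. The names are illustrative only and the numbers are simply the ones in the excerpt; on those figures the new model responds roughly 9x faster than the GPT-3.5 Voice Mode and roughly 17x faster than the GPT-4 one.

```python
# Average latencies quoted in the excerpt above, in seconds.
OLD_VOICE_MODE = {"GPT-3.5": 2.8, "GPT-4": 5.4}
GPT_4O_AVERAGE = 0.320  # 320 milliseconds


def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times faster the new latency is than the old one."""
    return old_seconds / new_seconds


for model, latency in OLD_VOICE_MODE.items():
    ratio = speedup(latency, GPT_4O_AVERAGE)
    print(f"{model} Voice Mode -> GPT-4o: about {ratio:.1f}x faster")
```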

What does it mean? Think of a voice assistant that not only understands you and your questions, but also has the capability to have an empathetic two-way conversation without it feeling too uncanny, or feeling stilted like, say, Siri or Alexa. It also has solved the problem faced by “real-time translation.”

AI embraces its product arc

fuzzy processors are entering mass production

ROHIT KRISHNAN, MAY 14, 2024

How do you compete in providing intelligence?

This week was a masterclass in helping figure this out. OpenAI announced a new model, GPT-4o, which is better and faster than its flagship GPT-4-Turbo. It’s also natively multimodal, meaning it can read and write text, images, audio and video natively.

(The “o” in 4o stands for “omnimodal” which is a fancier way to say multimodal, I think?). It can:

- see what’s going on around it and understands it

- have full conversations with no latency

- you can interrupt it and have a natural conversation

- sounds like Scarlett Johansson

- live translation and conversation across multiple languages

OpenAI’s demo was clearly timed. The next day Google had their I/O conference, and demonstrated many of the same capabilities, and then some.

- 2 million token context window

- spatial reasoning (it could remember where the person had left their glasses)

- a fully multimodal model too, which can see and read and listen to you

- they have Gemini Nano and Flash, small models which can work on-device

- live spam testing

- integrated into their products - from search to google cloud to android to messages and more

Sam talked about Universal Basic Compute, a way to provide their flagship model for free to all users with minimal usage cap. They delivered on that with GPT-4o.

This was my prediction for OpenAI, and between them and Google, this is exactly what we got.

Google was already giving Gemini 1.5 Pro away for a limited time, as is Meta, and they’re integrating it into all their offerings. We’ve entered the Universal Basic Compute era in some ways at least.

It also means a whole new war has started in the AI domain; this is the year of productisation.

The bull case

The bull case is to look at GPT-4o as the first salvo in creating a fully multimodal model that understands the world. Why is this important? Because until now most advanced models were good in one or two domains (text models for text, video for video, image for image, sometimes crossing boundaries).

And it’s smart! It’s the same model as the “im-also-a-good-gpt2-chatbot” that quietly debuted on lmsys last week.

OpenAI’s Spring release will end up being far more significant than most of us might suspect

Just like any employee, getting good results from AI means providing good instructions. The more specific and detailed your instructions the more specific and detailed your results.

But as anyone who’s ever had a boss knows, people aren’t great at specific and detailed instructions. In fact we often don't even know what we want, never mind how to communicate it.

The real-world patch for this is a meeting. You and your boss sit down and talk until you think you know what they want. Or at least what they said they wanted.

Ideally your boss would take the time to email you a nice clear brief. They’d get things straight in their head, write them down and save you a meeting.

But writing briefs takes time and effort. And things that take time and effort tend to lead us to things that don’t. Like meetings. Or Instagram.

Getting good results from AI means typing a lot of words.

Which requires effort. And hands.

Which we don't have while driving our cars or out on our morning run.

But we do have our voice.

OpenAI already has a voice assistant. And it's impressive, light years ahead of Siri and Alexa. But also very awkward.

If I leave even the briefest of pauses it interrupts me to talk. But I can't interrupt in return (other than by pressing a button). All of which makes it feel very uncomfortable.

But it's still special. And I find myself wanting it to do more. I want it to read out my email and then say: “pop the invoice Steve sent me into Xero and tell him I'll pay him on Tuesday”. That feels like a natural extension.

But I can't. It doesn’t yet have access to my email or Xero. And even if it did the interaction would be too awkward to merit the battle.

But Monday’s demo felt very different. The conversation was smooth. The AI didn’t interrupt Mira and Mira could interrupt the AI. It felt like a human. Like the foundation for a new class of interaction.

In 2013 Apple quietly laid a similar foundation. The fingerprint sensor.

In those days everyone locked their phone using a 4-digit PIN. A PIN that was so easy to steal that the polite thing was to look away as someone was typing it.

But for the iPhone to fulfil its destiny, for it to be used for frictionless purchases, stock-trading, banking and health, it needed to be much more secure. It needed more than a 4-digit PIN. And with Apple’s biometrics, it got it.

Without the infrastructure of stronger authentication, most of that class of apps couldn’t have been built. Stronger authentication unlocked them.

I think that voice will prove to be a similar unlock for AI. In making machine-voice feel natural, OpenAI have:

- increased the quality you can expect from AI (by decreasing the friction of briefing it)

- increased the frequency you’ll use AI (by extending the places you can do so)

I think it’s brilliant, transformative, and as always, just a little bit scary.

Tensions Rise in Silicon Valley Over Sales of Start-Up Stocks

The market for shares of hot start-ups like SpaceX and Stripe is projected to reach a record $64 billion this year.

By Erin Griffith, Reporting from San Francisco, May 6, 2024

Sohail Prasad, an entrepreneur, launched a fund in March called the Destiny Tech100. The fund owns shares in hot tech start-ups like the payments firm Stripe, the rocket maker SpaceX and the artificial intelligence company OpenAI.

Few people get the chance to invest in these privately held companies since their shares are not openly traded. Mr. Prasad’s intention with Destiny was to let the rest of the world get a piece of them through his fund.

But soon after Destiny debuted, two tech start-ups — Stripe and Plaid, a banking service — said the fund did not legally own their shares. A competitor criticized Destiny as “too good to be true.” Robinhood, the stock trading app, stopped letting investors buy into the fund, saying it had been added to its app by mistake.

Mr. Prasad was not surprised by the uproar. It was a sign of “a true cultural movement in which DXYZ is at the forefront,” he said, referring to Destiny by its ticker symbol.

Tensions over the shadowy and often enigmatic market of private company stocks have reached a boiling point, just as the buying and selling of such shares has grown bigger than ever. At its center is an age-old debate: Should everyone have access to the riches and risks of investing in Silicon Valley start-ups?

The market for private company stocks, also known as the secondary market, is on track to hit a record $64 billion this year, up 40 percent from last year, according to Sacra, a research firm focused on private investments. A decade ago, the private company stock market was roughly $16 billion, according to Industry Ventures, a firm focused on secondary transactions.
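
As a quick sanity check on those figures, the Python sketch below derives what they imply: last year's market size from the 40 percent growth claim, and the compound annual growth rate over the decade. It assumes "a decade ago" means exactly ten years and takes the quoted numbers at face value; the function names are illustrative. On those assumptions, last year's market was about $45.7 billion and the ten-year growth rate works out to roughly 15 percent a year.

```python
THIS_YEAR_BILLIONS = 64.0    # projected record for this year (Sacra)
GROWTH_VS_LAST_YEAR = 0.40   # "up 40 percent from last year"
DECADE_AGO_BILLIONS = 16.0   # Industry Ventures estimate
YEARS = 10                   # assumption: "a decade ago" = 10 years


def implied_prior_value(current: float, growth: float) -> float:
    """Back out the earlier value from the current value and a growth rate."""
    return current / (1 + growth)


def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


last_year = implied_prior_value(THIS_YEAR_BILLIONS, GROWTH_VS_LAST_YEAR)
cagr = compound_annual_growth(DECADE_AGO_BILLIONS, THIS_YEAR_BILLIONS, YEARS)

print(f"Implied market size last year: ${last_year:.1f}B")
print(f"Growth multiple over the decade: {THIS_YEAR_BILLIONS / DECADE_AGO_BILLIONS:.0f}x")
print(f"Implied compound annual growth: {cagr:.1%}")
```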

As the appetite for private company shares has soared, so have the headaches. If a company is publicly traded, like Apple or Amazon, anyone can easily buy and sell its stock. But privately owned tech start-ups like Stripe typically have a small circle of owners, such as their founders and employees, as well as the wealthy individuals and venture capital firms that provided financing for the companies to grow. The companies’ stocks do not usually change hands.

Now, as these start-ups mature and don’t appear to be in a rush to go public, a wider range of investors are becoming eager to own their stock. New online marketplaces that match sellers of start-up stock with interested buyers have sprung up.

Most Used Startup Databases & How to Find the Best Provider

Startup Database Benchmarking 2024

ANDRE RETTERATH, MAY 16, 2024

According to 50% of DDVCs, the biggest pain point in the process of becoming more data-driven is finding the right tools. To overcome this hurdle, we’ve been building and testing “VC Tool Finder” in closed beta and plan to publicly launch it soon.

The second biggest pain point in becoming more data-driven, as stated by 46% of respondents, is finding the right data sources. To tackle this headache, we published a comprehensive database benchmarking study in 2020 where we compared original startup data with its representation across the most prominent commercial databases including CBInsights, Crunchbase, Dealroom, and Pitchbook.

We looked at various dimensions such as coverage and accuracy across startups included, founders and their academic degrees, funding rounds, round sizes, valuations, and a lot more. The “winners” were VentureSource, Pitchbook, and Crunchbase.

Since then, a lot has happened. CBInsights acquired VentureSource, Crunchbase raised $50M, and most importantly, LLMs and GenAI levelled the playing field for the collection and processing of unstructured data.

In light of these dynamics and the absence of other reliable benchmarking studies, Tom Oechel from TU Munich and I updated my previous study by including new database providers and extending our sample of original data from July 2019 to March 2024. This allowed us to compare sample 1 (1998 until July 2019) with sample 2 (August 2019 until March 2024) and gain unique insights about trends across coverage and accuracy of these providers.

I presented the results at the Data-Driven VC Summit last week and received so much positive feedback and follow-up questions that I decided to share a brief summary with key insights below. If you’d like to learn more, get the full 44-page study with all tables and analyses here.

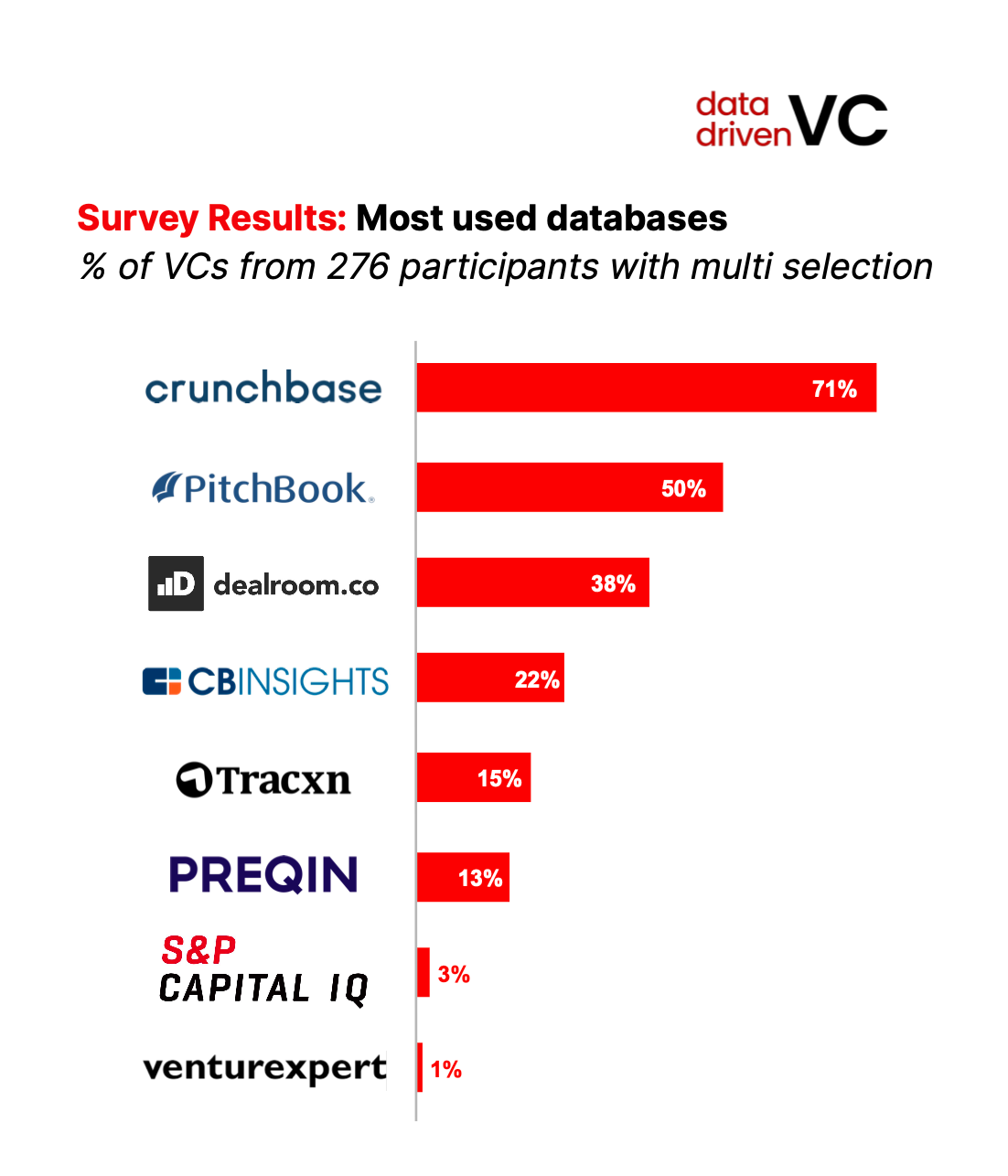

What Are the Most Used Databases?

As part of the Data-Driven VC Landscape 2024 survey, we asked 276 VCs which startup database they use. Below are the results with multi-selection.

It’s no surprise that Crunchbase, which came out top 3 in our 2020 study and is priced among the cheapest in the market (even with API), has the highest penetration today. Pitchbook, which also came out top 3 in our previous study but is priced the highest, ranks second. More surprisingly, Dealroom has the third highest penetration. Wondering why? Well, let’s dive in. Our new study provides the answer.

Video of the Week

AI of the Week

Her

OpenAI's latest release, and how Google compares

PATRICK RYAN AND MAX CUTLER - MAY 17, 2024

“I’m afraid I can’t do that, Dave”

Once again, OpenAI has stolen the spotlight.

GPT-4o represents a leap forward in AI’s ability to reason, especially with audio and visual inputs. It can talk pretty well too, and it’s starting to feel uncannily human (creepy AF of course). The speech pattern and response times are >10x faster than previous versions, and almost indistinguishable from a real person.

Two more videos were making the rounds on Twitter.

One showed the model walking a boy through some geometry homework and the other doing a real-time translation between Italian and English.

Impressive stuff showcasing precision, versatility and humour in equal measure. They also demonstrate the threat OpenAI may represent to the thousands of startups building “wrappers” on top of ChatGPT - perhaps it will be able to just do everything, without a need for point solutions to specific problems (a question Ben Evans raised recently).

In terms of technical improvements, in past builds, OpenAI used three different models for its voice feature:

- one to transcribe audio into readable text;

- the core GPT-3.5/4 to digest the text and prepare a response, and;

- then a third model to convert the text back to an audio output.

GPT-4o bins this chained process.

It is one end-to-end model; everything is processed by the same neural network.
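
The difference between the chained process and a single model can be sketched in a few lines of Python. This is a toy illustration only: every function below is a hypothetical stub, not an OpenAI API, and the `tone` field simply stands in for everything in speech that is not text. The point it shows is structural: once the first stage reduces audio to a transcript, later stages cannot recover what was dropped.

```python
from dataclasses import dataclass


@dataclass
class Audio:
    words: str
    tone: str  # stand-in for non-text signal: tone, background noise, etc.


def transcribe(audio: Audio) -> str:
    """Stage 1 (stub): speech-to-text keeps the words and drops the tone."""
    return audio.words


def text_model(prompt: str) -> str:
    """Stage 2 (stub): a text-only model sees nothing but the transcript."""
    return f"reply to '{prompt}'"


def synthesize(text: str) -> Audio:
    """Stage 3 (stub): text-to-speech has no tone to work from, so it uses a default."""
    return Audio(words=text, tone="neutral")


def chained_voice_mode(audio: Audio) -> Audio:
    """The older three-model pipeline: audio -> text -> text -> audio."""
    return synthesize(text_model(transcribe(audio)))


def end_to_end_model(audio: Audio) -> Audio:
    """A single model (stub) that receives the full input, tone included."""
    return Audio(words=f"reply to '{audio.words}'", tone=audio.tone)


question = Audio(words="can you help with my homework", tone="anxious")
print(chained_voice_mode(question).tone)  # tone was lost at stage 1
print(end_to_end_model(question).tone)    # tone is still available
```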

It is now much better at grasping context: tone of the speaker, background noise, etc. and outputs a more textured response, complete with touches of something that seems like emotion (almost but in a creepy way where you know it isn’t).

OpenAI Wants To Get Big Fast, And Four More Takeaways From a Wild Week in AI News

Ignore the flirty bot, OpenAI’s big strategic play became clearer this week.

ALEX KANTROWITZ, MAY 17, 2024

In a season of big AI news, few weeks have felt more significant than this one. OpenAI introduced its new GPT-4o model, Google unveiled a deeper AI vision, and Apple dropped more hints ahead of a massive AI-themed WWDC event.

At Big Technology, we also hosted our first public event with Box CEO Aaron Levie, well-timed with the AI news. Our live podcast sold out — filling 130 seats, with scores more on the waitlist — and meeting so many of you was a thrill. You can see some photos below.

Amid the noise, here are the five core takeaways from the week, including some commentary from Levie on crucial developments the headlines seemed to miss:

OpenAI Wants to Get Big Fast

OpenAI cut the cost of using its newest GPT-4 model by 50% and made it twice as fast as the previous version. By doing so, it’s practically daring companies considering building with its technology to give it a chance, hoping to get big fast. ROI spreadsheets are likely getting reworked as we speak.

“We're nowhere near the point where a lowering of cost doesn't disproportionately impact what you can now build,” Levie said.

OpenAI’s strategy to get its technology in the hands of as many developers as possible — to build as many use cases as possible — is more important than the bot’s flirty disposition, and perhaps even new features like its translation capabilities (sorry). If OpenAI can become the dominant AI provider by delivering quality intelligence at bargain prices, it could maintain its lead for some time. That is, as long as the cost of this technology doesn’t drop near zero.

Free ChatGPT-4o Is a Risky Bet With Upside

OpenAI is also betting that ChatGPT will get big fast, upgrading its free version by two generations to GPT-4o. That might disincentivize some users from paying $20 per month for ChatGPT Plus. But ChatGPT needs the jolt more than OpenAI needs the money.

ChatGPT’s growth has flatlined, and OpenAI’s latest announcement revived interest in the product. Sticking free ChatGPT users with an inferior bot would squander the buzz, so OpenAI is making ChatGPT-4o available to everyone for free. Hardcore users will likely remain on the paid version, looking for higher rate limits and other perks. But even if OpenAI loses a few Plus subscribers, the tradeoff should be worth it.

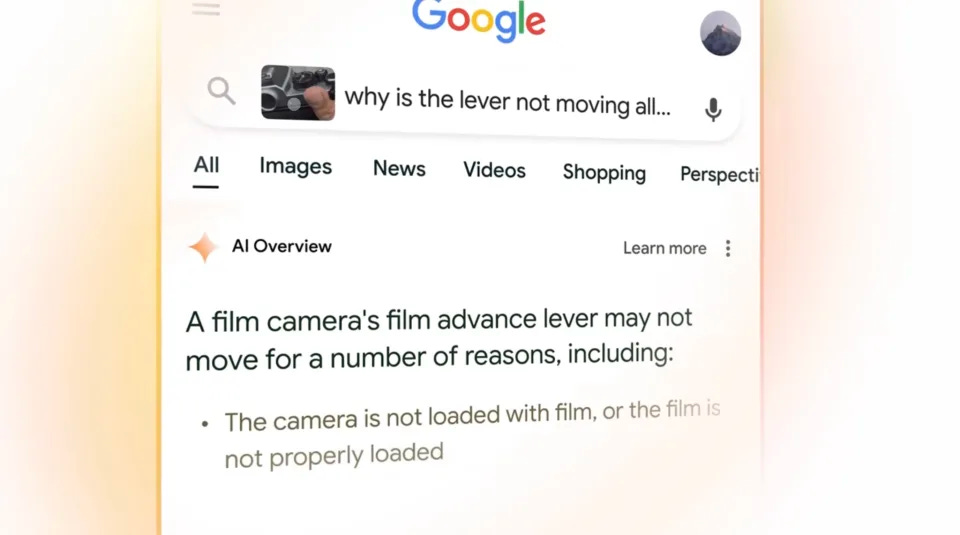

Google Search will now show AI-generated answers to millions by default

AI Overviews are now available in the US and will roll out to more than a billion people by the year's end.

Pranav Dixit, Senior Editor

Google is shaking up Search. On Tuesday, the company announced big new AI-powered changes to the world’s dominant search engine at I/O, Google’s annual conference for developers. With the new features, Google is positioning Search as more than a way to simply find websites. Instead, the company wants people to use its search engine to directly get answers and help them with planning events and brainstorming ideas.

“[With] generative AI, Search can do more than you ever imagined,” wrote Liz Reid, vice president and head of Google Search, in a blog post. “So you can ask whatever’s on your mind or whatever you need to get done — from researching to planning to brainstorming — and Google will take care of the legwork.”

Google’s changes to Search, the primary way that the company makes money, are a response to the explosion of generative AI ever since OpenAI’s ChatGPT released at the end of 2022. Since then, a handful of AI-powered apps and services including ChatGPT, Anthropic, Perplexity, and Microsoft’s Bing, which is powered by OpenAI’s GPT-4, have challenged Google’s flagship service by directly providing answers to questions instead of simply presenting people a list of links. This is the gap that Google is racing to bridge with its new features in Search.

Starting today, Google will show complete AI-generated answers in response to most search queries at the top of the results page in the US. Google first unveiled the feature a year ago at Google I/O in 2023, but so far, anyone who wanted to use the feature had to sign up for it as part of the company’s Search Labs platform that lets people try out upcoming features ahead of their general release. Google is now making AI Overviews available to hundreds of millions of Americans, and says that it expects it to be available in more countries to over a billion people by the end of the year. Reid wrote that people who opted to try the feature through Search Labs have used it “billions of times” so far, and said that any links included as part of the AI-generated answers get more clicks than if the page had appeared as a traditional web listing, something that publishers have been concerned about. “As we expand this experience, we’ll continue to focus on sending valuable traffic to publishers and creators,” Reid wrote.

In addition to AI Overviews, searching for certain queries around dining and recipes, and later with movies, music, books, hotels, shopping and more in English in the US will show a new search page where results are organized using AI. “[When] you’re looking for ideas, Search will use generative AI to brainstorm with you and create an AI-organized results page that makes it easy to explore,” Reid said in the blog post.

If you opt in to Search Labs, you’ll be able to access even more features powered by generative AI in Google Search. You’ll be able to get AI Overview to simplify the language or break down a complex topic in more detail. Here’s an example of a query asking Google to explain, for instance, the connection between lightning and thunder.

AI Spending Patterns : It's Not What You Think

By Tomasz Tunguz, Theory Ventures

Ramp published its quarterly spending trends & revealed how businesses are spending on AI. There are many great data points that underscore the growth in AI but there are important nuances in the patterns.

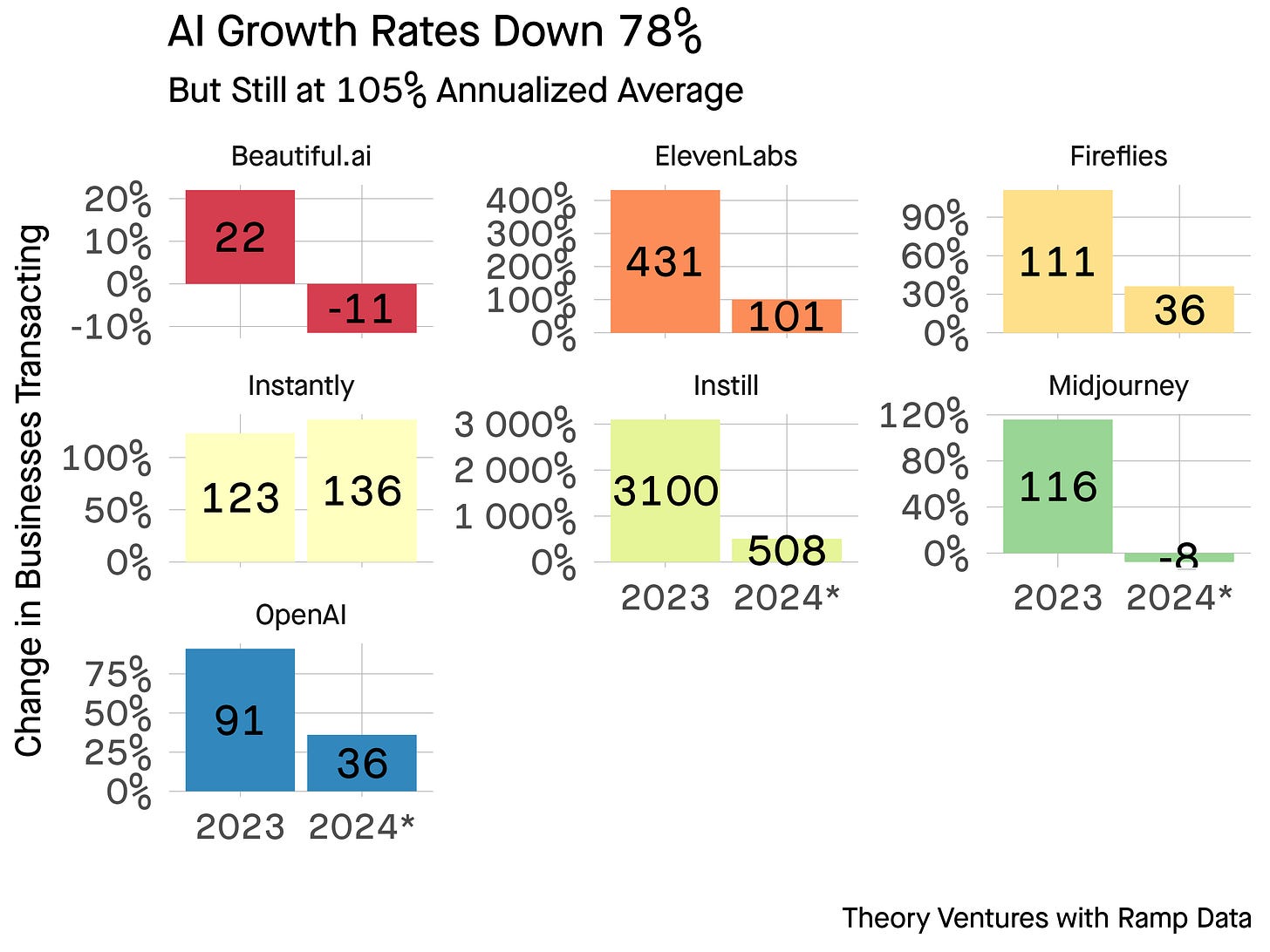

First, AI growth rates across the most popular vendors have fallen 78% annually. On average, these AI businesses are growing customer counts 105% ; the median is 38%.

However, this isn’t a uniform trend. Some companies have seen contraction in customer counts. Both the companies with negative account retention still see relatively minor account churn : 15-25% account churn at these price points is common.

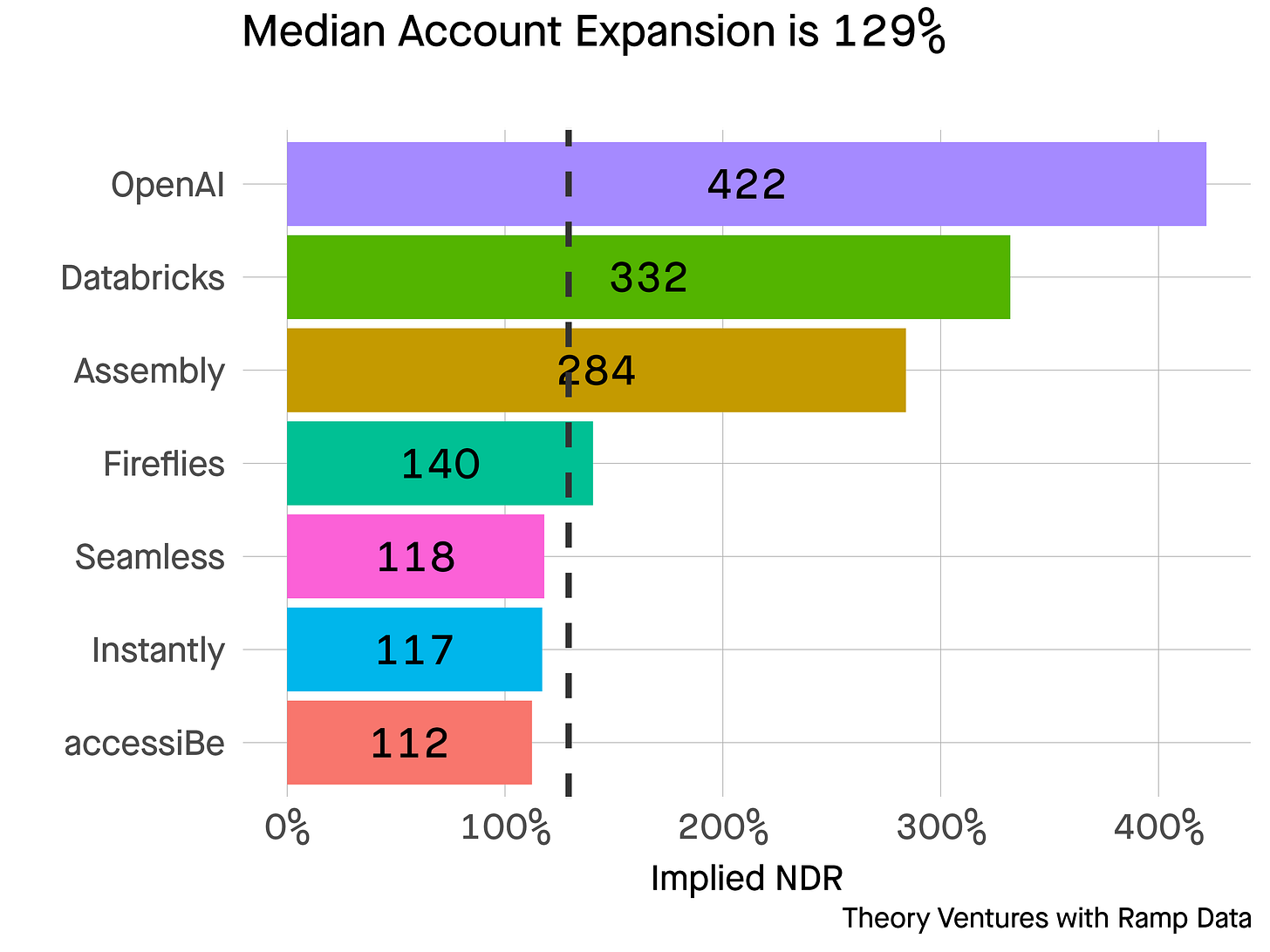

Second, the account expansion remains relatively strong. The top quartile software companies are around 125% today & the companies above are very similar with a median of 129% net dollar retention (NDR).

These data points suggest that AI infused software companies’ metrics will asymptote to overall software metrics in time. NDR is almost equal. Growth rates remain very strong, but perhaps are seeing some attenuation.
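
For readers less familiar with the metric, net dollar retention compares the recurring revenue from a fixed cohort of customers at the end of a period with the revenue from that same cohort at the start, counting expansion, contraction, and churn but excluding new customers. The Python sketch below uses made-up numbers (they are not from the Ramp report) chosen so the result matches the 129% median mentioned above.

```python
def net_dollar_retention(
    starting_revenue: float,
    expansion: float,
    contraction: float,
    churned: float,
) -> float:
    """NDR for one cohort: ending revenue from existing customers / starting revenue."""
    ending_revenue = starting_revenue + expansion - contraction - churned
    return ending_revenue / starting_revenue


# Hypothetical cohort, for illustration only.
ndr = net_dollar_retention(
    starting_revenue=100_000,  # recurring revenue a year ago
    expansion=40_000,          # upsells and extra seats from the same customers
    contraction=4_000,         # downgrades
    churned=7_000,             # revenue from customers who left
)
print(f"NDR: {ndr:.0%}")
```

An NDR above 100% means the existing customer base grows in revenue terms even before any new customers are added.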

In some categories, revenue growth is more challenged which highlights a challenge in very fast growing markets : quality of revenue.

The underlying technology is shifting rapidly - the announcements from Google & OpenAI this week underscore the point; buyer preferences are evolving rapidly as they start to understand the relevant applications of the technology, the costs of deployment & the value gained from different applications ; vendor pricing dynamics also play a role. OpenAI reduced prices by 50% this week driving deflation in the market.

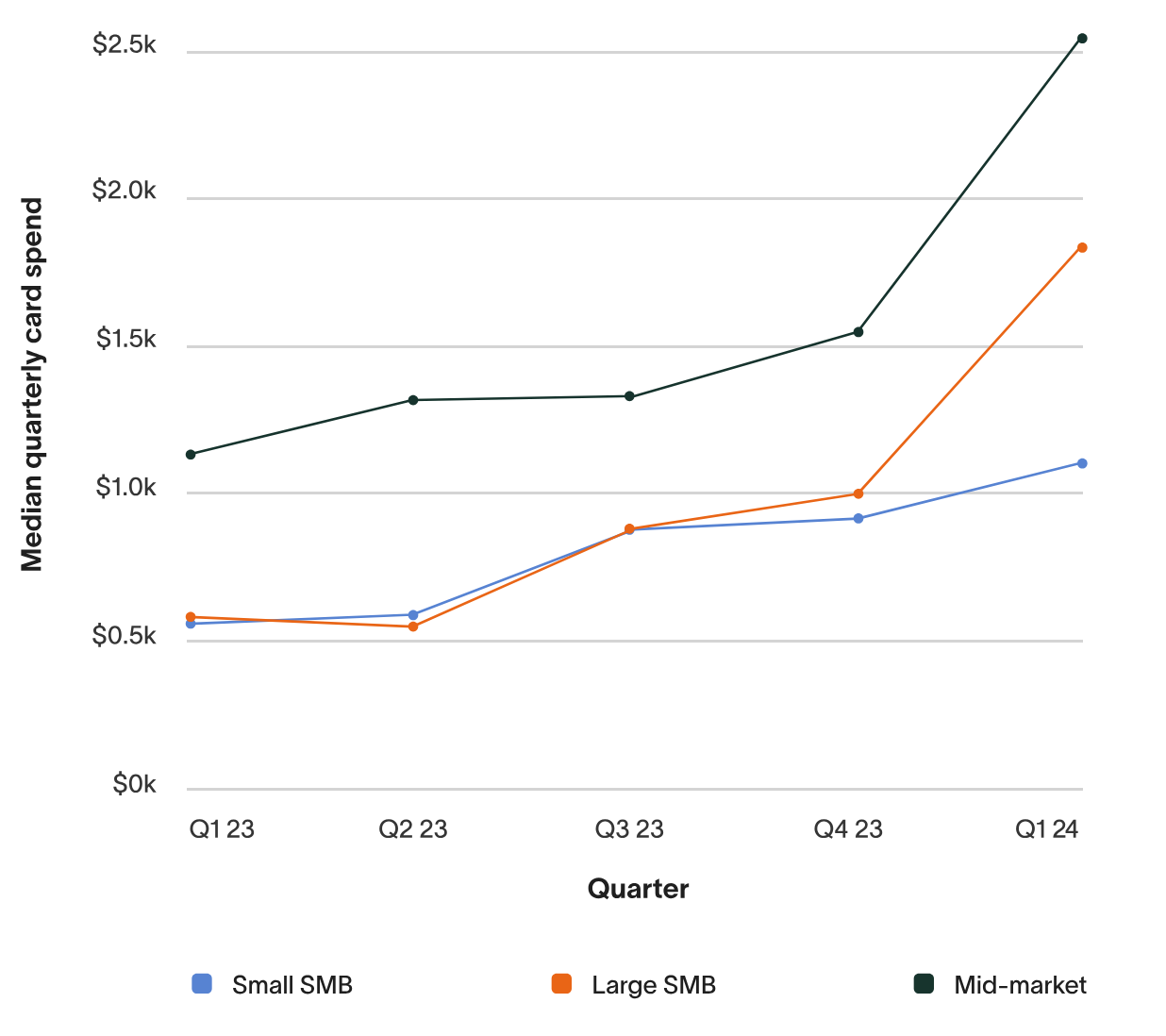

The additional data within the report underscores AI’s growth in the last year.

“Card transaction volume for AI software services jumped 293% year-over-year compared to an increase of just 6% in overall software transaction volume during the same period.”

This growth is true across company size.

News Of the Week

AI, Web3 And E-Commerce Led For New Unicorns In April 2024

Eleven companies joined The Crunchbase Unicorn Board in April 2024 — a big jump up from the six companies that joined in April 2023 but still a fraction of the 40 added to the board in April 2022.

The AI, Web3 and e-commerce sectors each added two companies to the board, Crunchbase data shows.

Of the 11 new unicorns created last month, six are U.S.-based, with one each from Germany, France, Brazil, Cayman Islands and Australia.

The largest round among the new unicorns was raised by Xaira Therapeutics, which announced an initial funding of $1 billion as it launched out of stealth.

Two of the new April unicorn companies were valued at a billion in their first year: Xaira Therapeutics and Cognition.

Two unicorn companies also exited the board at valuations above their last private value. Cybersecurity company Rubrik and cash reward company Ibotta both went public in April on the New York Stock Exchange.

And four companies already listed as unicorns increased valuation from prior rounds. Island, a secure browser company, doubled its valuation from its previous funding round six months ago. And FloQast, Rippling and Ramp each raised up rounds from prior fundings.

Let’s look at the new unicorns in April by sector.

AI

Cognition, maker of Devin, an AI software engineering teammate, raised a $175 million Series A funding led by Founders Fund. The less than 1-year-old San Francisco company was valued at $2 billion.

San Francisco-based Perplexity AI raised a $63 million funding led by Daniel Gross, former lead of AI at Y Combinator. The 1-year-old company was valued at $1 billion.

E-commerce

Berlin-based Autodoc, an online auto parts dealer, raised a minority funding led by Apollo. The 15-year-old company was valued at $2.5 billion.

Social video commerce app Flip raised a $144 million Series C led by Streamlined Ventures. The 4-year-old company based in Los Angeles was valued at $1.2 billion.

Web3

Blockchain platform Berachain, a 2-year-old company based in the Cayman Islands, raised a $100 million Series B. The funding, led by Framework Ventures and Brevan Howard Digital, valued the company at $1.5 billion.

New York-based Monad, a 1-year-old company, raised a $225 million Series A funding. The company is a layer 1 blockchain that can process Ethereum transactions faster. The funding was led by crypto investor Paradigm and valued the company at $1 billion.

Biden administration quadruples import tariff for Chinese EVs

It comes as part of tariff increases on $18 billion worth of imports from China.

Sarah Fielding, Tue, May 14, 2024, 6:00 AM PDT

The United States is taking additional measures to quash China’s influence on its economy. The White House has announced a tremendous increase in tariffs on $18 billion worth of Chinese imports, including semiconductors, steel, aluminum and EVs. The latter’s tariff is set to increase fourfold, from 25 percent to 100 percent—a move that the White House claims “will protect American manufacturers.” The announcement further reported that China’s EV exports grew 70 percent between 2022 and 2023.

Other tariff increases, such as the jumps from 25 percent to 50 percent for semiconductors and solar cells, are also significant. Then there are batteries, which are getting a tariff raise from 7.5 percent to 25 percent. Medical products are also a part of this hike, with tariffs on needles and syringes increasing from zero percent to 50 percent.
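
The tariff changes listed above are easier to compare side by side. The Python sketch below tabulates the quoted rates and then works through one simplified example. The $30,000 import value is hypothetical, and the calculation assumes the tariff is applied directly to the import value with no other fees, which is a simplification.

```python
# (old rate, new rate) in percent, as quoted in the article above.
TARIFFS = {
    "EVs": (25.0, 100.0),
    "semiconductors": (25.0, 50.0),
    "solar cells": (25.0, 50.0),
    "batteries": (7.5, 25.0),
    "needles and syringes": (0.0, 50.0),
}


def landed_cost(import_value: float, tariff_percent: float) -> float:
    """Import value plus tariff, ignoring shipping, taxes, and other fees."""
    return import_value * (1 + tariff_percent / 100)


for product, (old, new) in TARIFFS.items():
    # A multiple is undefined when the old rate was zero.
    multiple = f"{new / old:.1f}x" if old else "n/a (was zero)"
    print(f"{product}: {old}% -> {new}% (+{new - old} points, {multiple})")

# Hypothetical $30,000 EV, chosen only for illustration.
EXAMPLE_VALUE = 30_000
before = landed_cost(EXAMPLE_VALUE, 25.0)
after = landed_cost(EXAMPLE_VALUE, 100.0)
print(f"Cost after tariff: ${before:,.0f} before the change, ${after:,.0f} after")
```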

Startup of the Week

ChatGPT now lets you import files directly from Google Drive, Microsoft OneDrive

May 16, 2024 3:22 PM

Credit: VentureBeat made with Midjourney V6

The news from OpenAI this week continues: today, the company announced it has updated its signature large language model (LLM) chatbot ChatGPT with the capability to import files directly from external cloud drives Google Drive and Microsoft OneDrive.

The capability is coming to paying ChatGPT Plus, Team, and Enterprise subscribers and will be available when using the new underlying GPT-4o model that OpenAI debuted on Monday, as well as older models.

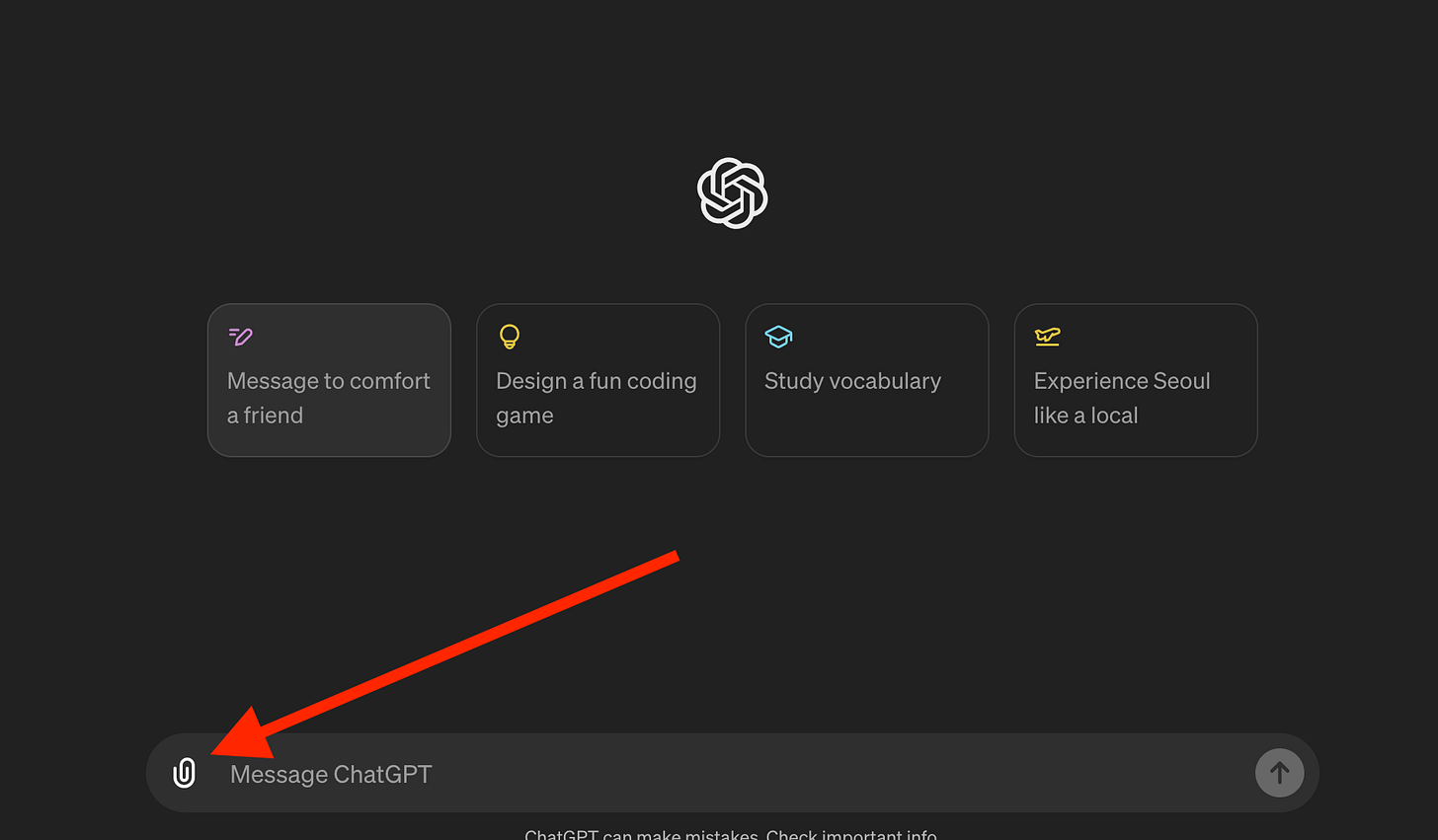

Users can find the new integration by clicking the tiny paper clip icon to the left of their prompt/text entry bar at the bottom of the ChatGPT default interface on desktop.

OpenAI posted an animated video (republished below) showing how the workflow goes once you do this, both in its blog post announcing the update and on its X account.

You’ll need to grant access from your Microsoft OneDrive or Google Drive accounts to use the feature, but once you do, you can select a range of file types, including spreadsheets, presentations, and documents, to import directly into ChatGPT.