A reminder for new readers. That Was The Week collects the best writing on critical issues in tech, startups, and venture capital. I selected the articles because they are of interest to me. The selections often include things I entirely disagree with. But they express common opinions, or they provoke me to think. The articles are snippets or the original. Click on the headline (in the contents or on each article) to go to the original or click the ‘More’ link at the bottom of each piece. I express my point of view in the editorial and the weekly video below.

Hats Off To This Week’s Contributors: @RyanMorrisonJer, @geneteare, @mgsiegler, @spyglass_feed, @saulausterlitz, @ClareMalone, @benedictevans, @mikeloukides, @ErikNaso, @kateclarktweets, @finkd, @mattbirchler, @imillhiser, @jaygoldberg, @ron_miller, @btaylor, @sierraplatform, @eladgil

Contents

AI and Everything Else - Benedict Evans from Slush

Elad Gil on AI

Editorial: And The Oscar Goes to Sora

OpenAI teased its new video creation model - Sora - this week.

In doing so it released a technical report and several examples of prompts and outputs.

Careful not to overstate the end game, the company said:

We explore large-scale training of generative models on video data. Specifically, we train text-conditional diffusion models jointly on videos and images of variable durations, resolutions and aspect ratios. We leverage a transformer architecture that operates on spacetime patches of video and image latent codes. Our largest model, Sora, is capable of generating a minute of high fidelity video. Our results suggest that scaling video generation models is a promising path towards building general purpose simulators of the physical world.

All of the videos are incredible, albeit only a minute or less each. My favorite is the Dogs in Snow video:

Although the ‘Closeup Man in Glasses’ is also wonderful.

I mention this because the speed at which AI is addressing new fields is - in my opinion - mind-boggling. Skills that take humans decades to perfect are being learned in months and are capable of scaling to infinite outputs using words, code, images, video, and sound.

It will take the advancement of robotics to tie these capabilities to physical work, but that seems assured to happen.

When engineering, farming, transport, or production meet AI, human needs can be addressed directly.

Sora winning an Oscar for cinematography, or for a production generated from a script or a book, seems far-fetched. But it wasn’t so long ago that a tech company winning one would have been laughable, and now we have Netflix, Amazon Prime, and Apple TV Plus regularly being nominated or winning awards.

Production will increasingly be able to leverage AI.

Some will say this is undermining human skills, but I think the opposite. It will release human skills. Take the prompt that produced the Dogs in Snow video:

Prompt:

A litter of golden retriever puppies playing in the snow. Their heads pop out of the snow, covered in.

I can imagine that idea and write it down. But my skills would not allow me to produce it. Sora opens my imagination and enables me to act on it. I guess that many humans have creative ideas that they are unable to execute... up to now. Sora, DALL·E, and ChatGPT all focus on releasing human potential.

Google released its Gemini 1.5 model this week (less than a month after releasing Gemini Ultra 1.0). Tom’s Guide has a summary and analysis by Ryan Morrison:

Gemini Pro 1.5 has a staggering 10 million token context length. That is the amount of content it can store in its memory for a single chat or response.

This is enough for hours of video or multiple books within a single conversation, and Google says it can find any piece of information within that window with a high level of accuracy.

Jeff Dean, Google DeepMind Chief Scientist, wrote on X that the model also comes with advanced multimodal capabilities across code, text, image, audio and video.

He wrote that this means you can “interact in sophisticated ways with entire books, very long document collections, codebases of hundreds of thousands of lines across hundreds of files, full movies, entire podcast series, and more.”

In “needle-in-a-haystack” testing where they look for the needle in the vast amount of data stored in the context window, they were able to find specific pieces of information with 99.7% accuracy even with 10 million tokens of data.

All of this makes it easy to understand why Kate Clark at The Information penned a piece with the title: I Was Wrong. We Haven’t Reached Peak AI Frenzy

I will leave this week’s editorial with Ryan Morrison’s observation at the end of his article:

What we are seeing with these advanced multimodal models is the interaction of the digital and the real, where AI is gaining a deeper understanding of humanity and how WE see the world.

Essays of the Week

AI Leads New Unicorn Creation As Ranks Of $1B Startups Swells

February 13, 2024

Fewer startups became unicorns in 2023, but The Crunchbase Unicorn Board also became more crowded, as exits became even scarcer.

That means that 10 years after the term “unicorn” was coined to denote those private startups valued at $1 billion or more, there are over 1,500 current unicorn companies globally, collectively valued at more than $5 trillion based on their most recent valuations from funding deals.

All told, fewer than 100 companies joined the Unicorn Board in 2023, the lowest count in more than five years, an analysis of Crunchbase data shows.

Of the 95 companies that joined the board in 2023, AI was the leading sector, adding 20 new unicorns alone. Other leading unicorn sectors in 2023 included fintech (with 14 companies), cleantech and energy (12 each), and semiconductors (nine).

Based on an analysis of Crunchbase data, 41 companies joined the Unicorn Board from the U.S. and 24 from China in 2023. Other countries were in the single digits for new unicorns: Germany had four new companies, while India and the U.K. each had three.

New records nonetheless

Despite the slower pace of new unicorns, the Crunchbase board of current private unicorns has reached new milestones as fewer companies exited the board in 2023.

The total number of global unicorns on our board reached 1,500 at the start of 2024, which takes into account the exclusion of those that have exited via an M&A or IPO transaction. Altogether, these private unicorn companies have raised north of $900 billion from investors.

This year also marks a decade since investor Aileen Lee of Cowboy Ventures coined the term unicorn for private companies valued at a billion dollars or more.

In a new report looking at the unicorn landscape 10 years later, Lee said she believes the unicorn phenomenon is not going away, despite a sharp downturn in venture funding in recent years. She expects more than 1,000 new companies in the U.S. alone will join the ranks in the next decade.

Unicorn exits

In 2023, 10 unicorn companies exited the board via an IPO, far fewer than in recent years. That contrasts with 20 companies in 2022 and 113 in 2021.

However, M&A was more active in 2023. Sixteen unicorn companies were acquired in 2023 — up from 2022 when 11 companies were acquired and slightly down from 2021 with 21 companies exiting via an acquisition.

December numbers

Eight new companies joined The Crunchbase Unicorn Board in December 2023. The highest monthly count last year for new unicorns was 10 and the lowest was two.

Of the new unicorns, three are artificial intelligence companies. Other sectors that minted unicorns in December include fintech, cybersecurity, food and beverage, and health care.

The new unicorn companies minted in December 2023 were:

Behold: The Sports Streaming Bundle

It just makes sense. Sports was the last thing holding together the cable TV bundle. Now it will be the start of the streaming bundle.

That's my 5-minute reaction to the truly huge news that Disney, Warner, and Fox are launching a new sports streaming service, combining their various sports rights into one package. Well, presumably. The details are still quite thin at this point. Clearly, several entities were racing to this story, with both WSJ and Bloomberg claiming "scoops" by publishing paragraph-long stories with only the high-level facts. I'm linking to Variety above, which at least has a few more details, including (canned) quotes from Bob Iger, Lachlan Murdoch, and David Zaslav.

Fox Corp., Warner Bros. Discovery and Disney are set to launch a new streaming joint venture that will make all of their sports programming available under a single broadband roof, a move that will put content from ESPN, TNT and Fox Sports on a new standalone app and, in the process, likely shake up the world of TV sports.

The three media giants are slated to launch the new service in the fall. Subscribers would get access to linear sports networks including ESPN, ESPN2, ESPNU, SECN, ACCN, ESPNEWS, ABC, Fox, FS1, FS2, BTN, TNT, TBS, truTV and ESPN+, as well as hundreds of hours from the NFL, NBA, MLB and NHL and many top college divisions. Pricing will be announced at a later date.

Each company would own one third of the new outlet and license their sports content to it on a non-exclusive basis. The service would have a new brand and an independent management team.

Yes, this is essentially running the Hulu playbook of old, but only for sports content. No, that ultimately didn't end well, but Hulu had a decent enough run before egos got involved. Here, the egos are once again being (at least temporarily) set aside to do something obvious: make money. Sports is the one bit of content that most people watch in one form or another, live no less (hence why it was keeping the cable bundle together). And increasingly, with the rise of streaming, it was becoming impossible to figure out what game was on, where. You could get access to most games online now, but it might require buying four or five different services. And again, then finding which one the game you wanted was actually on.

40 Years Ago, This Ad Changed the Super Bowl Forever

An oral history of Apple’s groundbreaking “1984” spot, which helped to establish the Super Bowl as TV’s biggest commercial showcase.

By Saul Austerlitz

Published Feb. 9, 2024. Updated Feb. 10, 2024.

Four decades ago, the Super Bowl became the Super Bowl.

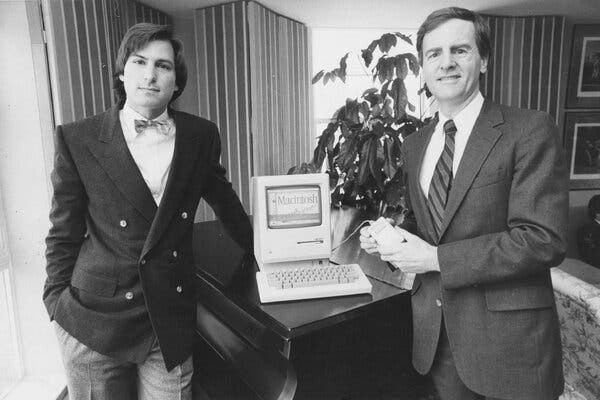

It wasn’t because of anything that happened in the game itself: On Jan. 22, 1984, the Los Angeles Raiders defeated Washington 38-9 in Super Bowl XVIII, a contest that was mostly over before halftime. But during the broadcast on CBS, a 60-second commercial loosely inspired by a famous George Orwell novel shook up the advertising and the technology sectors without ever showing the product it promoted. Conceived by the Chiat/Day ad agency and directed by Ridley Scott, then fresh off making the seminal science-fiction noir “Blade Runner,” the Apple commercial “1984,” which was intended to introduce the new Macintosh computer, would become one of the most acclaimed commercials ever made. It also helped to kick off — pun partially intended — the Super Bowl tradition of the big game serving as an annual showcase for gilt-edged ads from Fortune 500 companies. It all began with the Apple co-founder Steve Jobs’s desire to take the battle with the company’s rivals to a splashy television broadcast he knew nothing about.

In recent interviews, several of the people involved in creating the “1984” spot — Scott; John Sculley, then chief executive of Apple; Steve Hayden, a writer of the ad for Chiat/Day; Fred Goldberg, the Apple account manager for Chiat/Day; and Anya Rajah, the actor who famously threw the sledgehammer — looked back on how the commercial came together, its inspiration and the internal objections that almost kept it from airing. These are edited excerpts from the conversations.

JOHN SCULLEY On Oct. 19, 1983, we’re all sitting around in Steve [Jobs’s] building, the Mac building, and the cover of Businessweek says, “The Winner is … IBM.” We were pretty deflated because this was the introduction of the IBM PCjr, and we hadn’t even introduced the Macintosh yet.

STEVE HAYDEN Jobs said, “I want something that will stop the world in its tracks.” Our media director, Hank Antosz, said, “Well, there’s only one place that can do that — the Super Bowl.” And Steve Jobs said, “What’s the Super Bowl?” [Antosz] said, “Well, it’s a huge football game that attracts one of the largest audiences of the year.” And [Jobs] said, “I’ve never seen a Super Bowl. I don’t think I know anybody who’s seen a Super Bowl.”

FRED GOLDBERG The original idea was actually done in 1982. We presented an ad [with] a headline, which was “Why 1984 Won’t Be Like ‘1984,’” to Steve Jobs, and he didn’t think the Apple III was worthy of that claim.

Is the Media Prepared for an Extinction-Level Event?

Ads are scarce, search and social traffic is dying, and readers are burned out. The future will require fundamentally rethinking the press’s relationship to its audience.

By Clare Malone

February 10, 2024

My first job in media was as an assistant at The American Prospect, a small political magazine in Washington, D.C., that offered a promising foothold in journalism. I helped with the print order, mailed checks to writers—after receiving lots of e-mails asking, politely, Where is my money?—and ran the intern program. This last responsibility allowed me a small joy: every couple of weeks, a respected journalist would come into the office for a brown-bag lunch in our conference room, giving our most recent group of twentysomethings a chance to ask for practical advice about “making it.” One man told us to embrace a kind of youthful workaholism, before we became encumbered by kids and families. An investigative reporter implored us to file our taxes and to keep our personal lives in order—never give the rich and powerful a way to undercut your journalism. But perhaps the most memorable piece of advice was from a late-career writer who didn’t mince words. You want to make it in journalism, he said? Marry rich. We laughed. He didn’t.

I’ve thought a lot about that advice in the past year. A report that tracked layoffs in the industry in 2023 recorded twenty-six hundred and eighty-one in broadcast, print, and digital news media. NBC News, Vox Media, Vice News, Business Insider, Spotify, theSkimm, FiveThirtyEight, The Athletic, and Condé Nast—the publisher of The New Yorker—all made significant layoffs. BuzzFeed News closed, as did Gawker. The Washington Post, which lost about a hundred million dollars last year, offered buyouts to two hundred and forty employees. In just the first month of 2024, Condé Nast laid off a significant number of Pitchfork’s staff and folded the outlet into GQ; the Los Angeles Times laid off at least a hundred and fifteen workers (their union called it “the big one”); Time cut fifteen per cent of its union-represented editorial staff; the Wall Street Journal slashed positions at its D.C. bureau; and Sports Illustrated, which had been weathering a scandal for publishing A.I.-generated stories, laid off much of its staff as well. One journalist recently cancelled a networking phone call with me, writing, “I’ve decided to officially take my career in a different direction.” There wasn’t much I could say to counter that conclusion; it was perfectly logical.

“Publishers, brace yourselves—it’s going to be a wild ride,” Matthew Goldstein, a media consultant, wrote in a January newsletter. “I see a potential extinction-level event in the future.” Some of the forces cited by Goldstein were already well known: consumers are burned out by the news, and social-media sites have moved away from promoting news articles. But Goldstein also pointed to Google’s rollout of A.I.-integrated search, which answers user queries within the Google interface, rather than referring them to outside Web sites, as a major factor in this coming extinction. According to a recent Wall Street Journal analysis, Google generates close to forty per cent of traffic across digital media. Brands with strong home-page traffic will likely be less affected, Goldstein wrote—places like Yahoo, the Wall Street Journal, the New York Times, the Daily Mail, CNN, the Washington Post, and Fox News. But Web sites that aren’t as frequently typed into browsers need to “contemplate drastic measures, possibly halving their brand portfolios.”

What will emerge in the wake of mass extinction, Brian Morrissey, another media analyst, recently wrote in his newsletter, “The Rebooting,” is “a different industry, leaner and diminished, often serving as a front operation to other businesses,” such as events, e-commerce, and sponsored content. In fact, he told me, what we are witnessing is nothing less than the end of the mass-media era. “This is a delayed reaction to the commercial Internet itself,” he said. “I don’t know if anything could have been done differently.”

Video of the Week

AI and Everything Else - Benedict Evans from Slush

AI of the Week

The OpenAI Endgame

Thoughts about the outcome of the NYT versus OpenAI copyright lawsuit

February 13, 2024

Since the New York Times sued OpenAI for infringing its copyrights by using Times content for training, everyone involved with AI has been wondering about the consequences. How will this lawsuit play out? And, more importantly, how will the outcome affect the way we train and use large language models?

There are two components to this suit. First, it was possible to get ChatGPT to reproduce some Times articles very close to verbatim. That’s fairly clearly copyright infringement, though there are still important questions that could influence the outcome of the case. Reproducing the New York Times clearly isn’t the intent of ChatGPT, and OpenAI appears to have modified ChatGPT’s guardrails to make generating infringing content more difficult, though probably not impossible. Is this enough to limit any damages? It’s not clear that anybody has used ChatGPT to avoid paying for a NYT subscription. Second, the examples in a case like this are always cherry-picked. While the Times can clearly show that OpenAI can reproduce some articles, can it reproduce any article from the Times’ archive? Could I get ChatGPT to produce an article from page 37 of the September 18, 1947 issue? Or, for that matter, an article from the Chicago Tribune or the Boston Globe? Is the entire corpus available (I doubt it), or just certain random articles? I don’t know, and given that OpenAI has modified GPT to reduce the possibility of infringement, it’s almost certainly too late to do that experiment. The courts will have to decide whether inadvertent, inconsequential, or unpredictable reproduction meets the legal definition of copyright infringement.

The more important claim is that training a model on copyrighted content is infringement, whether or not the model is capable of reproducing that training data in its output. An inept and clumsy version of this claim was made by Sarah Silverman and others in a suit that was dismissed. The Authors Guild has its own version of this lawsuit, and it is working on a licensing model that would allow its members to opt in to a single licensing agreement. The outcome of this case could have many side-effects, since it essentially would allow publishers to charge not just for the texts they produce, but for how those texts are used.

It is difficult to predict what the outcome will be, though easy enough to guess. Here’s mine. OpenAI will settle with the New York Times out of court, and we won’t get a ruling. This settlement will have important consequences: it will set a de-facto price on training data. And that price will no doubt be high. Perhaps not as high as the Times would like (there are rumors that OpenAI has offered something in the range of $1 million to $5 million), but high enough to deter OpenAI’s competitors.

$1M is not, in and of itself, a terribly high price, and the Times reportedly thinks that it’s way too low; but realize that OpenAI will have to pay a similar amount to almost every major newspaper publisher worldwide in addition to organizations like the Authors Guild, technical journal publishers, magazine publishers, and many other content owners. The total bill is likely to be close to $1 billion, if not more, and as models need to be updated, at least some of it will be a recurring cost. I suspect that OpenAI would have difficulty going higher, even given Microsoft’s investments—and, whatever else you may think of this strategy—OpenAI has to think about the total cost. I doubt that they are close to profitable; they appear to be running on an Uber-like business plan, in which they spend heavily to buy the market without regard for running a sustainable business. But even with that business model, billion-dollar expenses have to raise the eyebrows of partners like Microsoft.

The Times, on the other hand, appears to be making a common mistake: overvaluing its data. Yes, it has a large archive—but what is the value of old news? Furthermore, in almost any application but especially in AI, the value of data isn’t the data itself; it’s the correlations between different datasets. The Times doesn’t own those correlations any more than I own the correlations between my browsing data and Tim O’Reilly’s. But those correlations are precisely what’s valuable to OpenAI and others building data-driven products.

OpenAI Sora - The most realistic AI-generated video to date

OpenAI Sora is an AI text-to-video model that produces video so realistic it is hard to tell it is AI-generated. It’s very lifelike but not real. I think we have just hit the beginning of some truly powerful AI-generated video that could change the game for stock footage and more. Below are three examples of the most realistic AI prompt-generated videos I have seen.

Prompt:

A stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage. She wears a black leather jacket, a long red dress, and black boots, and carries a black purse. She wears sunglasses and red lipstick. She walks confidently and casually. The street is damp and reflective, creating a mirror effect of the colorful lights. Many pedestrians walk about.

Prompt:

Drone view of waves crashing against the rugged cliffs along Big Sur’s garay point beach. The crashing blue waters create white-tipped waves, while the golden light of the setting sun illuminates the rocky shore. A small island with a lighthouse sits in the distance, and green shrubbery covers the cliff’s edge. The steep drop from the road down to the beach is a dramatic feat, with the cliff’s edges jutting out over the sea. This is a view that captures the raw beauty of the coast and the rugged landscape of the Pacific Coast Highway.

Prompt:

Animated scene features a close-up of a short fluffy monster kneeling beside a melting red candle. The art style is 3D and realistic, with a focus on lighting and texture. The mood of the painting is one of wonder and curiosity, as the monster gazes at the flame with wide eyes and open mouth. Its pose and expression convey a sense of innocence and playfulness, as if it is exploring the world around it for the first time. The use of warm colors and dramatic lighting further enhances the cozy atmosphere of the image.

Sora can generate videos up to a minute long while maintaining visual quality and adherence to the user’s prompt. OpenAI states that it is teaching AI to understand and simulate the physical world in motion, with the goal of training models that help people solve problems that require real-world interaction.

I Was Wrong. We Haven’t Reached Peak AI Frenzy.

By Kate Clark

Feb 15, 2024, 4:16pm PST

After Sam Altman’s sudden firing last year, I argued the chaos that followed his short-lived ouster would inject a healthy dose of caution into venture investments in artificial intelligence companies. I figured we’d finally reached the peak of the AI venture capital frenzy when a threatened employee exodus from OpenAI risked sending the value of the $86 billion AI juggernaut almost to zero.

There was plenty of other proof that the hype for generative AI was fading. Investors were openly saying they planned to be a lot tougher on valuation negotiations and would ask startups harder questions about governance. Some companies had begun to consider selling themselves due to the high costs of developing AI software. And an early darling of the AI boom, AI-powered writing tool Jasper, had become the butt of jokes when it slashed internal revenue projections and cut its internal valuation after having won a $1.5 billion valuation in 2022.

I forgot that everyone in Silicon Valley suffers from short-term memory loss. After a week sipping boxed water with venture capitalists from South Park to Sand Hill Road, I’m convinced I called the end of the AI frenzy far too soon.

In fact, I expect this year will deliver more cash into the hands of U.S. AI startups than last year, when those companies raised a total of $63 billion, according to PitchBook data.

Altman’s fundraising ambitions will surely boost the total. A recent report from The Wall Street Journal said Altman plans to raise trillions of dollars to develop the AI chips needed to create artificial general intelligence, software that can reason the way humans do. Even if that number is actually much smaller, talk of such goals lifts the ceiling for other startup founders, who are likely to think even bigger and to be more aggressive in their fundraising.

Investor appetite for AI companies is still growing, too. These investors claimed last fall that they were done with the FOMO-inspired deals, but they’re pushing checks on the top AI companies now harder than ever.

News of the Week

I tried Vision Pro. Here's my take

The Quest 3 is better than you might expect

Posted by Matt Birchler

13 Feb 2024

Alex Heath for The Verge: Zuckerberg says Quest 3 is “the better product” vs. Apple’s Vision Pro

He says the Quest has a better “immersive” content library than Apple, which is technically true for now, though he admits that the Vision Pro is a better entertainment device. And then there’s the fact that the Quest 3 is, as Zuck says, “like seven times less expensive.”

I currently own both headsets and while I’m very excited about the potential in the Vision Pro, I actually find it hard to fully disagree with Zuck on this one. I think a lot of people who have only used the Vision Pro would be surprised how well the Quest 3 does some things in comparison.

For example, the pass-through mode is definitely not quite as good as the Vision Pro’s, but it’s closer than you might expect. And while people are rightly impressed with how well the Vision Pro has windows locked in 3D space, honestly the Quest 3 is just as good at this in my experience. When it comes to comfort, I do think the Vision Pro is easier to wear for longer periods, but I find it more finicky to get in just the right spot in front of my eyes, while the Quest 3 seems to have a larger sweet spot. And let’s not even talk about the field of view, which is way wider on the Quest to the point of being unnoticeable basically all the time. I kinda think field of view will be similar to phone bezels in that you get used to what you have and anything more seems huge — you can get used to the Vision Pro’s narrower field of view, but once you’re used to wider, it’s hard to not notice when going back.

The Vision Pro has some hardware features that help it rise above (the massively higher resolution screen jumps to mind), but I’m just saying that if you’re looking for everything to be 7x better to match the price difference, I don’t think that’s there.

Beyond this, the products are quite different, though. As Zuckerberg says, the Quest 3 is more focused on fully immersive VR experiences, and while the Vision Pro has a little of that right now, it’s not really doing the same things. And when it comes to gaming it’s not even close. The Quest 3 has a large library of games available and that expands to almost every VR game ever made with Steam Link.

On the other hand, the Vision Pro is much more of a “computer” than the Quest ever was. If you can do it on a Mac or an iPad, you can probably already do it on the Vision Pro. And I’m not talking about finding some weird alternate version of your task manager or web browser that doesn’t sync with anything else in your life, I’m talking about the apps you already know and love. This is huge and it’s Apple leveraging its ecosystem to make sure you can seamlessly move from Mac to iPhone to iPad to Vision Pro. And if you can’t install something from the App Store, the web browser is just as capable as Safari on the iPad. If all else fails, you can always just bring your full Mac into your space as well. I will say the Quest 3 can do this and has the advantage of working with Windows as well, but if you have a Mac, it’s much, much better.

This is more words than I expected to write about a CEO saying his product is better than the competition’s (shocker), but I do think that Zuck’s statement is less insane than some may think it to be.

The Supreme Court will decide if the government can seize control of YouTube and Twitter

We’re about to find out if the Supreme Court still believes in capitalism.

By Ian Millhiser Feb 15, 2024, 7:00am EST

Ian Millhiser is a senior correspondent at Vox, where he focuses on the Supreme Court, the Constitution, and the decline of liberal democracy in the United States. He received a JD from Duke University and is the author of two books on the Supreme Court.

In mid-2021, about a year before he began his longstanding feud with the biggest employer in his state, Florida’s Republican Gov. Ron DeSantis signed legislation attempting to seize control of content moderation at major social media platforms such as YouTube, Facebook, or Twitter (now called X by Elon Musk). A few months later, Texas Gov. Greg Abbott, also a Republican, signed similar legislation in his state.

Both laws are almost comically unconstitutional — the First Amendment does not permit the government to order media companies to publish content they do not wish to publish — and neither law is currently in effect. A federal appeals court halted the key provisions of Florida’s law in 2022, and the Supreme Court temporarily blocked Texas’s law shortly thereafter (though the justices, somewhat ominously, split 5-4 in this later case).

Nevertheless, the justices have not yet weighed in on whether these two unconstitutional laws must be permanently blocked, and that question is now before the Court in a pair of cases known as Moody v. NetChoice and NetChoice v. Paxton.

The stakes in both cases are quite high, and the Supreme Court’s decision is likely to reveal where each one of the Republican justices falls on the GOP’s internal conflict between old-school free market capitalists and a newer generation that is eager to pick cultural fights with business.

Arm Results Set The World On Fire

February 13, 2024 · by D/D Advisors · in Analyst Decoder Ring

Arm reported its second set of earnings as a (once again) public company last week. These numbers were particularly strong, well above consensus for both the current and guided quarters. Arm stock rallied strongly on the results, up ~30% for the week. These numbers were important as they go a long way to establishing the company’s credibility with the Street in a way their prior results did not.

That being said, we saw things we both liked and disliked in their numbers. Here are our highlights of those:

Positive: Growing Value Capture. One of our chief concerns with the company since IPO has been the low value they capture per licensed chip shipped – roughly $0.11 per chip at the IPO. That figure continued to inch higher in the latest results, but critically they pointed out that their royalty rate doubles with the latest version of their IP (v9). This does not mean that all of their royalty rates are going to double any time soon, but it does point very much in the right direction. Critically, they noted this rate increase applies to architectural licenses as well.

Negative: The Model is Complex. Judging from the number of questions management fielded on the call about this rate increase, no one really knows how to model Arm. The company has a lot of moving parts in its revenue mix, and they have limits to their ability to communicate some very important parts of their model. We think that at some point the company would be well served by providing some clearer guideposts on how to build these models, or they risk the Street always playing catch-up with a wide swing of expectations each quarter.

Positive: Premium Plan Conversion. The company said three companies converted from their AFA plan to the ATA model. We will not get into the details of those here, but these can best be thought of in software terms, with customers on low-priced subscription plans converting to Premium subscription plans. This is a good trend, and management expressed a high degree of confidence that it will continue. They have spent a few years putting these programs in place and seem to have thought them through. This matters particularly because these programs are well suited for smaller, earlier-stage companies. The old Arm struggled to attract new customers in large part because of the high upfront costs of Arm licenses. Programs like AFA and ATA could go a long way to redressing those past wrongs.

Negative: China remains a black box. Arm China is of course a constant source of speculation. In the latest quarter it looks like a large portion of growth came from China, which does not exactly square with other data coming from China right now. It is still unclear to us how much of Arm’s revenues from China’s handset companies gets booked through Arm China as a related party transaction and how much is direct. Investors are confused too. There is no easy solution to this problem. Digging too hard into Arm China’s numbers is unlikely to make anyone happy with the answers, but hopefully over time it all settles down.

Positive: Growing Complexity of Compute. Management repeatedly mentioned this factor, noting that this leads to more chips and more Arm cores shipping in the marketplace. Some of this is tied to AI, but we think the story is broader than that. It is going to be tempting to see much of Arm’s growth as riding the AI wave, but this does not fully capture the situation. The AI story is largely about GPUs, which are not particularly heavy with Arm cores. But those GPUs still need some CPU attach, and AI accelerators can sometimes be good Arm targets.

Negative: Diversification. Arm remains heavily dependent on smartphones, and we suspect the return to inventory stocking by handset makers is playing a big role in their guidance. When asked about segmentation of their results, the company declined to update the model provided during the IPO. We hope to see some diversification here when they do update their figures later in the year.

Overall, the company did a good job in the quarter. They still have some kinks to work out with their communication to the Street, but this was a good second step as a public company.

Startup of the Week

Bret Taylor’s new AI company aims to help customers get answers and complete tasks automatically

Ron Miller @ron_miller / 6:36 AM PST • February 13, 2024

We’ve been hearing about former Salesforce co-CEO Bret Taylor’s latest gig since he announced he was leaving the CRM giant in November 2022. Last February we heard he was launching an AI startup built with former Google employee Clay Bavor. Today, the two emerged with a new conversational AI company called Sierra with some bold claims about what it can do.

At its heart, the new company is a customer service bot. That’s not actually all that earth-shattering, but the company claims that it’s much more than that, with its software going beyond being an extension of a FAQ page and actually taking actions on behalf of the customer.

“Sierra agents can do so much more than just answer questions. They take action using your systems, from upgrading a subscription in your customer database to managing the complexities of a furniture delivery in your order management system. Agents can reason, problem solve and make decisions,” the company claimed in a blog post.

Having worked with large enterprise customers at Salesforce, Taylor certainly understands that issues like hallucinations, where a large language model sometimes makes up an answer when it lacks the information to answer accurately, are a serious problem. That’s especially true for large companies, whose brand reputation is at stake. The company claims that it is solving hallucination issues.

At the same time, it’s connecting to other enterprise systems to undertake tasks on behalf of the customer without humans being involved. These are both big, audacious claims and will be challenging to pull off.