Contents

Editorial: Abundance and You: Everything is Possible but Nothing is Inevitable

Essays

AI

Reddit sues Anthropic for allegedly not paying for training data

NotebookLM is adding a new way to share your own notebooks publicly.

Reddit Sues Anthropic, Accusing It of Illegally Using Data From Its Site

It’s not your imagination: AI is speeding up the pace of change

Forget FAANG, these tech investors are betting on MANGO in the AI race

Venture Capital

AI Startups Burn Through Cash 2x as Fast, and 10 Other Top Learnings from SVB’s Latest in Enterprise

Carta’s Head of Insights on Dilution, Equity, and the State of the Market Right Now

Is DPI The Only Thing That Matters? with Sam Lessin, Jason Lemkin & Rory O’Driscoll

Global Venture Funding Slowed In May While Startup M&A Picked Up, Led By Large OpenAI Acquisitions

Liquidity, LPs, and the Long Game | Beezer Clarkson (Partner, Sapphire Ventures)

Media

Politics

Military Tech

Government Overreach

GeoPolitics

Interview of the Week

Startup of the Week

Post of the Week

Editorial: Abundance and You: Everything is Possible but Nothing is Inevitable

Cristina Criddle, writing for the FT in this week’s Essay of the Week, says:

While we wait for the AI shakeout, we should make sure the vision of abundance is backed by a real plan: training workers, creating economic policies and ensuring a true trickling down of rewards. And if these promises are to be taken seriously, we should all be preparing for a widely different future.

The Promise and the Problem

The latest vision for AI promises a world transformed by artificial intelligence—a future of abundance where traditional careers become as obsolete as serfdom. As David Dalrymple from the UK's Advanced Research and Invention Agency puts it: "If things go well, the entire concept of a career will seem as old-fashioned as the concept of being a serf seems to be now."

Tech leaders from OpenAI's Sam Altman to Google DeepMind's Demis Hassabis genuinely believe AI will create a "post-scarcity" economy by dramatically boosting intelligence, energy availability, and economic output. The logic appears compelling: robotics and AI will inevitably handle most production, driving costs toward zero and wealth toward infinity.

Yet here lies a fundamental confusion that pervades these discussions: abundance may be an economic inevitability, but it is not a political guarantee.

The Crucial Distinction

Two distinct conversations are often conflated. The first involves economics—the productive capacity of civilization, measured roughly by global GDP and production costs. Abundance advocates are likely correct here. Technological progress has consistently increased output while reducing costs, and AI significantly accelerates this trend.

The second, more crucial conversation concerns distribution—who benefits from expanded wealth and how society adapts to new economic realities. This is a purely political and social matter, requiring deliberate human choices and collective action. Nothing about increased productivity automatically ensures prosperity is shared broadly.

Lessons from History

The eight-hour workday and forty-hour workweek didn't naturally arise from industrial abundance; they resulted from deliberate political struggles and activism. Similarly, the social safety nets defining developed societies were not spontaneous but fought for through decades of political effort.

Today's vision of AI-driven abundance demands the same active political engagement. A world without traditional employment could be transformative—providing space for creativity, family, learning, and community involvement. Yet it could equally lead to social disruption and extreme wealth concentration among the few who control AI systems.

Beyond the Big Tech Blame Game

Acknowledging this reframes important issues. First, technology companies developing transformative AI systems aren't inherently villainous; they follow the economic logic essential for achieving abundance. Indeed, they are needed as the executors of what is possible.

Second, the challenge isn't halting technological progress but ensuring its benefits reach everyone. The solution isn't to slow innovation but to accelerate our political and social evolution to match economic advances.

A Vision for Universal Abundance

In a genuinely abundant economy, essentials like healthcare, housing, education, and civic participation should be universal. Additionally, abundance enables widespread access to luxuries such as travel, arts, scientific exploration, and robust democratic participation. These must not be privileges tied to geography or class but fundamental rights inherent in an advanced civilized society.

To realize this vision, society must embrace economic frameworks decoupling income from traditional employment, supported by policies that ensure AI-generated wealth serves the public interest rather than private accumulation.

The Path Forward

The abundance economy isn't a distant fantasy—it is emerging today. Yet its successful development hinges entirely on collective decision-making and proactive governance. We must go beyond simplistic techno-optimism and reflexive skepticism, engaging instead in deliberate political discussions to shape outcomes.

What new social contracts can replace traditional employment relationships? How do we turn the gains from AI systems into benefits for human life?

These are decisions we must actively make, not outcomes predetermined by economic forces alone. Economic trends might be inevitable, but political choices remain ours. The future isn't something that happens to us—it is something we actively create.

I covered some of these issues in last week’s editorial (We Are Accelerating to Abundance), in the discussion on the video blog with Andrew Keen, and in the previous editorial (What is the Truth).

The age of abundance is arriving whether we prepare or not. Our collective choices today will determine if we build a balanced, equitable world or simply replicate existing inequalities at unprecedented scales.

The growth of wealth will need social and political decisions if it is to benefit humanity and not only a few. Everything is possible, but nothing is inevitable.

Essays

Silicon Valley’s abundance of hype over abundance

Cristina Criddle • FT • June 5, 2025

Technology•AI•ArtificialIntelligence•EconomicImpact•FutureOfWork•Essays

Silicon Valley leaders are championing artificial intelligence (AI) as the pathway to a future of abundance, portraying it as a transformative force capable of eradicating global problems like poverty and disease. Executives such as OpenAI’s Sam Altman, Microsoft’s Satya Nadella, and Google DeepMind’s Demis Hassabis believe AI will usher in an era of "post-scarcity" by boosting intelligence, energy availability, and economic output. This optimistic narrative reframes current disruptions, like job displacement, as temporary hurdles on the route to prosperity.

However, critics, including Dario Amodei of Anthropic, caution against downplaying AI’s short-term impact—predicting significant job losses and economic upheaval. While historical proponents like Peter Diamandis and futurists have long touted technology-driven abundance, current realities demand tangible strategies. Acknowledging the potential for future economic paradigms without traditional careers, experts stress the importance of retraining workers, redesigning policies, and ensuring equitable distribution of AI-generated wealth. The overarching message is clear: while AI holds promise, its success depends on careful preparation and inclusive planning.

It's time for Abundance to get mad

Noahpinion • June 2, 2025

Politics • Strategy • Democratic Party • Progressivism • Abundance Movement • Essays

One thing I’ve learned, in my time as a pundit, is that many people claim to have read books that they have not actually read. This is true of giant dense tomes like Thomas Piketty’s Capital in the Twenty-First Century, but also — perhaps surprisingly — true of light, quick reads like Ezra Klein and Derek Thompson’s Abundance. The text of Abundance is only 222 pages long, and the font is large. And yet none of the book’s progressive critics seem to have any idea what Klein and Thompson actually wrote. For example, Aaron Regunberg, a lawyer who has been on a crusade against the abundance movement, writes:

[Y]es, abundance is about defeating progressives and remaking the Democratic Party as a libertarian, Never Trump Republican Party. Great, can we stop pretending it’s anything but that?

This is, of course, complete nonsense. To believe that Ezra Klein and Derek Thompson are libertarians or Republicans requires never having read anything that either of them wrote (or at least, pretending not to have done so). But most importantly, Abundance is all about how the government should have more power to build green energy, provide health care, and accomplish other progressive goals. You can argue with that idea, but you can’t call it libertarian in good faith.

But my favorite example comes from David Austin Walsh, America’s favorite angry history postdoc, who said all kinds of nasty things about Abundance before admitting, quite openly, that he hadn’t actually read it.

The Abundance critics continue to do a remarkably poor job of engaging the book or the abundance movement in general on an intellectual level. In general, they tend to be poorly informed, lazy, and sloppy.

And those critics aren’t winning. At the elite level, Democrats are embracing the abundance idea, and there’s plenty of grassroots interest too. I recommend the recent excellent writeup in the Wall Street Journal by Molly Ball. Some excerpts:

Democratic politicians are rushing to embrace the new [abundance] mantra. New York Gov. Kathy Hochul, Minnesota Gov. Tim Walz, Maryland Gov. Wes Moore and Colorado Gov. Jared Polis have all name-checked it publicly. New Jersey Sen. Cory Booker discussed it at length in his recent 25-hour Senate speech. Former Vice President Kamala Harris and the U.S. Senate’s Democratic caucus are among the many politicians who have recently sought the authors’ counsel. Not one but two congressional caucuses have recently formed to push legislation advancing the ideas laid out in the book…

It isn’t just party elders who have bought into the idea. Local Abundance clubs have formed in multiple cities and on college campuses…The book by Ezra Klein and Derek Thompson has been a surprise hit, with a sold-out national tour, hundreds of thousands of copies sold and two months on the bestseller list since its release in March.

Marc Andreessen: What We Got Right—and Wrong—About the Future of Tech

YouTube • a16z • June 2, 2025

Technology•AI•Innovation•FutureTech•VentureCapital•Essays

The video features Marc Andreessen, a prominent venture capitalist and co-founder of Andreessen Horowitz, reflecting on the predictions and realities that have shaped the future of technology. He explores both the successes and missteps in foresight concerning technological evolution, highlighting the complexities in anticipating the path of innovation.

Reflection on Technological Predictions

Andreessen acknowledges that while some expectations about the tech landscape have materialized accurately, others have diverged due to unforeseen challenges and shifts in societal behavior. Key insights include:

The rise and impact of the internet and mobile computing, which have fundamentally transformed communication, commerce, and information access globally.

The notable underestimation of the speed and scale at which technologies like smartphones would penetrate everyday life.

A candid discussion on the overhype of certain technologies in earlier phases, such as virtual reality or blockchain, where adoption did not meet initial lofty expectations within predicted timelines.

Andreessen emphasizes the difficulty in predicting not just the technological breakthroughs but also the social, economic, and regulatory environments shaping the adoption curves.

Successes and Opportunities

Andreessen highlights significant wins in technology that met or exceeded projections:

The continuous improvement in AI and machine learning, which he sees as key drivers of future innovation.

Cloud computing's transformational role in enabling scalable and accessible computing power for businesses and developers.

The internet’s role in democratizing access to information and empowering new forms of digital entrepreneurship.

He also points out emerging opportunities where technology is poised to create substantial impact, including health tech innovations, decentralized finance (DeFi), and new media platforms reshaping content creation and distribution.

Challenges and Lessons

The video touches on challenges that tempered technological growth and adoption:

Regulatory hurdles and policy uncertainties that slowed down innovation in certain sectors.

Privacy and ethical concerns that have become central issues, requiring a balance between innovation and societal safeguards.

The complexity of large-scale infrastructure projects and the time needed to shift entire industries.

Andreessen underscores the importance of adaptable strategies that account for these dynamic factors, instead of rigid predictions.

Implications for the Future

Marc Andreessen’s reflections convey critical lessons for entrepreneurs, investors, and policymakers:

Technology evolution is a blend of visionary breakthroughs and pragmatic adjustments.

Unrealistic expectations can lead to disillusionment, while cautious optimism may foster sustained progress.

Understanding the interconnectedness of technology with cultural, regulatory, and economic systems is essential for forecasting future trends.

The discussion encourages embracing uncertainties with resilience, leveraging big data and analytics for better decision-making, and fostering environments that support experimentation and iteration.

In conclusion, Andreessen’s insights illustrate that while the future of tech is inherently unpredictable, thoughtful analysis and adaptive frameworks can help navigate the complexities. His perspective bridges past experiences with forward-looking optimism, offering a balanced approach to understanding the trajectory of technological innovation.

"We've gone sideways."

Spyglass • M.G. Siegler • June 2, 2025

Technology•AI•Innovation•Design•Ethics•Essays

I'm not entirely sure what to make of this joint interview, other than perhaps Ive wanted to ensure Powell Jobs also got her credit in the sale of io (which I'll continue to style as 'IO') to OpenAI. Also to ensure they get their own "Paul Simon and Art Garfunkel"-style portrait, as the FT describes it, citing "online wits". Still, a few tidbits worth calling out:

Ive and Altman have been tight-lipped about the AI-enabled device they are developing, and I wonder if it will invent a whole new category in the way the iPhone did for smartphones (speculation has swirled around some kind of screenless device). Ive deftly dodges my attempts to get him to tell me what it is but hints he was motivated by a disillusionment with how our relationship with devices has evolved. “Many of us would say we have an uneasy relationship with technology at the moment,” he says. I’m guessing this includes screen addiction and the harms caused by social media. Whatever the device is, driving its design is “a sense of: we deserve better. Humanity deserves better.”

It’s also sort of curious that Ive keeps agreeing to all these interviews of late while refusing to talk about the device they’re working on (yes, I know there’s likely more than one, but there’s also clearly one that’s in the lead to come out first). So that naturally leads to speculation about what it is, which is fine, but it better be damn good to live up to the hype that he is now naturally building! (This approach is still infinitely better than doing a TED Talk to preview it though.)

Powell Jobs agrees Silicon Valley has changed — and not necessarily for the better. “Thirty-five years ago we were still in the semiconductor era. There was the promise of making personal what had been available only to industry.” Apple played its part in that democratisation of technology, making beautiful, powerful computers for consumers. In recent years, however, there has been more public questioning of the role of Big Tech in our lives. She believes “people are still animated” by the idea that technology can be a force for good but adds a caveat. “We now know, unambiguously, that there are dark uses for certain types of technology. You can only look at the studies being done on teenage girls and on anxiety in young people, and the rise of mental health needs, to understand that we’ve gone sideways. Certainly, technology wasn’t designed to have that result. But that is the sideways result.”

This, of course, echoes Ive’s own talking points (as well as Altman’s). And, of course, that points back to the company and product created by Powell Jobs’ late husband – which is undoubtedly why they’re quick to caveat the current issues with the notion that these were unintended side effects of the iPhone:

Ive agrees. “If you make something new, if you innovate, there will be consequences unforeseen, and some will be wonderful and some will be harmful.” He acknowledges his own role in the products that have changed our relationship with technology. “While some of the less positive consequences were unintentional, I still feel responsibility. And the manifestation of that is a determination to try and be useful.”

"Unforeseen." "Unintentional." Two more caveats in three sentences.

It also sounds like Powell Jobs has seen whatever product Ive and team have been working on – or at least earlier prototypes of it. Which is more than, say, some key executives at OpenAI can say? As for that device...

Surely this new device will compete with those made by Apple? She demurs. “I’m still very close to the leadership team in Apple. They’re really good people and I want them to succeed also.”

Notably, you haven't heard Ive say something along those lines in all these interviews...

One more thing: on an entirely different topic...

I ask about “Signalgate”, The Atlantic’s recent scoop, when a member of the Trump administration mistakenly added the magazine’s editor, Jeffrey Goldberg, to a group chat on the Signal messaging platform about an imminent US strike in Yemen. “I’ll include you in a thread sometime,” Ive says. Great, I think: hopefully one where you spill the beans about the new AI device.

The Signalgate story prompted a furious response from the US president, who called Goldberg a “sleazebag” before inviting him in for an interview weeks later. “It’s very important to emphasise that, despite having the majority ownership stake in The Atlantic, I’m involved in the business side and not the editorial side,” Powell Jobs says. “We feel very strongly that freedom of the press means they are free to write the truth as they find it, and follow a story as they find it. It’s not up to us to approve or disapprove.”

Now that is a great answer from an owner of a news org. Notably, you haven't heard Jeff Bezos say something along those lines – at least not recently.

Is there a future for venture capital?

YouTube • Carta • May 29, 2025

Business•Startups•VentureCapital•Innovation•Essays

Watch this video on YouTube

The anti-economists have overreached

Noahpinion • May 31, 2025

Business•Strategy•Economics•MarketForces•TradeTheory•Essays

Oren Cass, the founder of the think tank American Compass, is probably the leading intellectual voice of MAGA economics. In a recent blog post, he discusses the question of whether economic forces are like gravity. He writes:

"[Y]es, the physical world is governed by the laws of gravity. But it is not governed only by the laws of gravity. Indeed, anyone who thought he could reliably predict the motion of bodies with knowledge only of gravity would be something of a moron."

Oh, really? Would such a man be a moron? Then please explain to me how Edmond Halley, using only his knowledge of gravity, and having no understanding of any of the other forces of nature, was able to predict a solar eclipse in 1715 to an accuracy of four minutes:

In 1715…a total solar eclipse was visible across a broad band of England. It was the first to be predicted on the basis of the Newtonian theory of universal gravitation, its path mapped clearly and advertised widely in advance. Visible in locations such as London and Cambridge, both astronomical experts and the public were able to see the phenomena and be impressed by the predictive power of the new astronomy…Wikipedia will tell you that this is known as Halley’s Eclipse, after Edmond Halley, who produced accurate predictions of its timing and an easily-read map of the eclipse’s path. Halley did not live to see the confirmation of his predictions of a returning comet – a 1759 triumph for the Newtonian system – but he was able to enjoy his 1715 calculations, which were within 4 minutes[.]

Cass — who holds a Bachelor’s in political economy from Williams College and a law degree from Harvard, but has no apparent training in physics — confidently assures us that anyone who attempted what Edmond Halley did would be “something of a moron”.

This is the kind of insult that says less about the target than about the person doing the insulting. Before you make confident assertions about a field of study, you owe it to your readers to attempt to understand that field at least a little bit.

A botched physics analogy is harmless enough. But Cass’ main argument isn’t about gravity at all — it’s about economics. And it’s here where his willingness to make grand pronouncements about whole fields of study gets him into real trouble.

Cass’ post is a response to a Wall Street Journal op-ed by Matthew Hennessey. Hennessey, in turn, is responding to J.D. Vance’s declaration that markets are a “tool”. Hennessey argues that markets are more like a force of nature than a tool. Cass is trying to rebut Hennessey, criticizing market fundamentalism while also taking a swipe at the entire discipline of economics.

Now, I am no fan of market fundamentalism, and I spent my early years as a blogger bashing the field of (macro)economics — often with even more scorn than Oren Cass employs in this post. But I like to think that when I did this, I generally stuck to making specific criticisms about actual economic models and methods.

A lot of econ critics don’t do this. Back when I was at Bloomberg, I used to have fun poking at the grandiose broadsides against economics that periodically appear in British publications like The Guardian or The Telegraph. These critiques tend to repeat the same old nostrums over and over — economics isn’t a science, it doesn’t do controlled experiments, its assumptions are bad, its theories don’t work, people can’t be predicted like particles, etc. etc.

There are grains of truth to these boilerplate critiques, but the people who write them generally haven’t bothered to pay much attention to what modern economists actually do. Here’s what I wrote in a Bloomberg post back in 2017:

"[E]conomists have developed some theories that really work. A good scientific theory makes testable predictions that apply to situations other than those that motivated the creation of the theory. Slowly, econ is building up a repertoire of these gems. One of them is auction theory, which predicts how buyers will bid for things like online ads or spectrum rights -- Google’s profits are powered by econ theory as much as by search algorithms. Another example is matching theory, which has made it a lot easier to get an organ transplant. A third is random-utility discrete choice theory, which is used in everything from marketing to transportation planning to disaster preparedness.

Nor are econ’s successful theories limited to microeconomics. Gravity models of trade, though fairly simple in nature, have proven very successful at predicting the flow of international trade.

These and other successful economics theories can be used confidently in a wide-variety of real-world situations, by policy makers, engineers and businesses. They prove that anyone who claims that econ theories will never be reliable, because they deal with human beings instead of atoms, is simply incorrect."

Yes, studying mass human behavior is different than studying the motions of the planets, in a number of important ways. But the intellectuals who loftily declare that economics “isn’t a science” don’t seem like they’ve bothered to think very hard about what those differences are, or when and why they matter.

For example, what do the people who write that “economics isn’t a science” think about natural experiments — the empirical technique that has taken over much of econ research in the last three decades? Do they think that these are always less informative than lab experiments in the natural sciences? And if so, why? What do they think are the strengths and weaknesses of natural experiments relative to lab experiments, and how much can they help us test theories and derive general principles about how economies work?

I suspect that very few of the econ critics have thought seriously about these questions, and that far too few have even heard of natural experiments. Certainly, if they have, they must have some good reason for never mentioning them. And certainly they must have good reasons for never talking about auction theory, matching theory, discrete choice models, gravity models, or any of the other economics theories that prove themselves in the real world day in and day out. Right?

But the screeds in The Guardian or The Telegraph are downright erudite compared to what Oren Cass serves up in his own criticism of econ. Here’s what he writes:

"Economics is nothing like physics. Its principles are not generated from repeatable experiments, nor do they hold consistently across space and time. Trusting otherwise is a quite literal example of the blind faith and fundamentalism at issue."

That’s it. That’s literally Cass’ entire criticism of the field of economics in this post. He spends the rest of the post pulling quotes from conservative political thinkers — G.K. Chesterton, Robert Nisbet, Yuval Levin, Roger Scruton, etc. — who urge us to value things like community, tradition, etc., or who assert that markets can’t work without a robust social fabric. Those are interesting things to think about, to be sure, but they don’t bear on the question of what, exactly, Cass thinks is so inadequate about economics.

Cass does not name or criticize any specific economic theories in this post. He cites zero papers and names zero researchers. I looked through a bunch of his other posts about economics, and I almost never found him naming or criticizing any specific theories in those posts, either. In one post, I did find him criticizing the theory of comparative advantage, and he did make one useful, substantive point about it — that comparative advantage can’t explain trade deficits and surpluses. He’s right about that. That’s by far the most substantive, knowledgeable criticism of economics that I could find on his blog.

If Cass is aware of any economic models other than comparative advantage and the basic Econ 101 supply-and-demand model, he plays his cards close to his chest. He doesn’t mention gravity models of trade, which some economists use to try to predict the effects of tariffs (with some success). Nor does he mention Paul Krugman’s New Trade Theory, which implies that countries can sometimes benefit from targeted tariffs against other countries’ national champions (but which wouldn’t recommend the kind of broad, sweeping tariffs Trump has tried to implement).

Neither of those theories is outside the mainstream; both were invented by economists who went on to win the Nobel. Why doesn’t Oren Cass mention them, or grapple with their implications, or use their existence to inform his criticisms of the field of economics? My guess — and this is only a guess — is that Cass is completely unaware that these theories exist, that he has no interest in discovering whether such theories exist, that if he did discover them he would have no idea how to evaluate them, and that even if he did know how to evaluate them he would have no interest in doing so.

A sophisticated understanding of what mainstream economics actually says and does is not useful to Oren Cass’ project, which is to denounce intellectual rivals within the conservative movement. If you want to actually figure out how trade works and what tariffs do, it would help to look at the research literature. If you didn’t get the training needed to understand that literature, it would help to ask some people who did get that training, or at least read a little Wikipedia and ask ChatGPT a few questions. That won’t give you all the answers — the world’s best economists don’t even have all the answers — but it would leave you a lot more knowledgeable than you started out, and it would give you a much better idea of where economists are on solid ground and where there are gaps in their understanding.

On the other hand, if all you want is to dunk on Wall Street Journal writers, perhaps all you need is some hand-waving rhetoric about “market fundamentalism” and a vague half-knowledge of one simple trade theory developed 200 years ago.

The real problem with these econ critics — both the lefty writers in The Guardian and the new crop of MAGA defenders — is that their project is fundamentally political. The lefty writers think that if everyone accepts that econ isn’t a science, and that the econ Nobel isn’t a real Nobel, and people aren’t like particles, and so on and so forth, then some sort of lefty ideology — Marxism, or degrowth, or whatever — will flow in to fill the hole left when economics vanishes. The MAGA writers think that it will be Trump’s economic ideas that fill that void instead.

But Matthew Hennessey, the Wall Street Journal writer, got one big thing right: Simply replacing academic theories with your own ideology can win you power, but there are important things it can’t do. It can’t change the nature of what tariffs actually do to the economy. Even if you and your friends and your political allies all shout very loudly that tariffs will restore American manufacturing, and act very scornful toward nerdy academics who tell you it doesn’t work like that, the economic headwinds that tariffs actually create for American manufacturers won’t change one iota.

However limited mainstream academic economics is as a tool for understanding the consequences of your policies, ideology is even worse.

Who’s right, Vance or Hennessey? Both are right. Market forces are, quite literally, forces of nature. And markets themselves are, quite literally, a tool. Tools work by harnessing forces of nature. A pendulum clock works by harnessing the force of gravity. A market works by harnessing the forces that drive people to buy and sell things. Vance is right that markets should be shaped to serve our desired ends, rather than being an end in and of themselves. Hennessey is right that market forces can’t be denied, ignored, or wished away.

Just to see how AI is doing, I asked ChatGPT o3 about the papers on gravity models. Its characterization of the papers’ results was oversimplified and omitted crucial nuance about the ex ante predictive accuracy of Fajgelbaum et al. (2020). But overall its explanations weren’t too bad!

Clarity of Thought

Investing101 • Kyle Harrison • May 31, 2025

Business•Strategy•Startups•ClarityOfThought•Founders•Essays

I'm a voracious notetaker. I have a tic that may be the limiting factor on my greatness. I feel compelled to record things. In addition to logs of the books I've read, the dialogues I've had, and the spiritual impressions I've received, I have also kept notes on every company meeting I've had since I started investing in 2014. In the last ~11 years I have had 2,851 meetings with founders. In that time, I have worked at five different venture firms and have been a part of deploying nearly $2B of capital. Across that entire corpus of experience, if there is one phrase that has come up over and over and over again in the pursuit of excellent founders, it is "clarity of thought."

There is an elusive, "I know it when I see it" quality to this idea. The best founders I have ever interacted with articulate themselves with precision, with logical coherence and flow from one idea to another, with a focus on the most relevant information that never strays from the logical course at hand, and with an "earned insight" into the complex issue they're tackling that gets right to the center of the opportunity.

The biggest obstacle in achieving "clarity of thought" when you're in the earliest days of building a business is the fact that it can be difficult to see clearly something that is nonexistent. But that is the exceptional capability of world-class founders. They can see clearly that which does not yet exist. And then they spend exorbitant, God-like amounts of effort and grit willing that thing into existence. When you talk to someone like that about their business, they can see, with immense clarity, what it could become.

Does that mean the founder can predict the future with certainty? Obviously not. Their idea can evolve over time in dramatic ways. Their insight can be wrong. They can miss the mark on the particulars of what they attempt to build. But the outcomes, in that moment, are not the measure. Clarity of thought is, at first, independent of the eventual outcome. It is, instead, demonstrated by not just the clarity of the thoughts expressed, but the quality of the underlying thinking being anchored in truth.

When I dug into the history of the phrase "clarity of thought," I found it had a pretty rich, interconnected history across the hallmarks of critical thinking and effective reasoning. A couple of examples:

Socrates, Plato, and Aristotle: All emphasized clarity in reasoning. Socrates' method of questioning aimed to clarify assumptions and expose contradictions. Aristotle’s Organon (a collection of logical treatises) outlined the structure of clear and valid arguments. "A deduction is speech (logos) in which, certain things having been supposed, something different from those supposed results of necessity because of their being so."

Thomas Aquinas: Used the Latin word “claritas” (clarity or brightness) to describe not just physical clarity but intellectual lucidity. That included clear definition of terms, rigorous distinctions, and the transparency of argumentation.

Descartes and Spinoza: They emphasized clear and distinct ideas as the foundation of knowledge. Descartes famously wrote that truth must be built on ideas that are “clear and distinct.”

Bertrand Russell, Ludwig Wittgenstein, & A.J. Ayer: Each prioritized linguistic clarity and logical form. Wittgenstein's Tractatus begins: “What can be said at all can be said clearly…”

A lot of these core ideas throughout the philosophies of a half dozen thinkers reflect critical concepts that I think demonstrate the characteristics of "clarity of thought." So when I think about how they apply to founders, I walk away with the components below.

Can AI be trusted in schools?

Economist • May 30, 2025

Education•Technology•AI•AcademicIntegrity•TeacherTraining•Essays

Artificial intelligence (AI) is increasingly infiltrating educational settings, raising questions about its reliability and impact on learning. A pilot program in Nigeria demonstrated that students could achieve two years' worth of progress in just six weeks using AI tools. However, this success was not universally replicated. In Turkey and the Netherlands, experiments employing large language models (LLMs) to teach coding and mathematics yielded mixed results. Some students became so reliant on these AI systems that, upon their removal, their performance declined compared to peers who had not used the technology. This dependency underscores the necessity for educators to integrate AI thoughtfully, ensuring it complements traditional teaching methods rather than replacing them.

The rapid adoption of AI in education has also led to unintended consequences. Instances have emerged where students use AI to complete assignments, raising concerns about academic integrity. Educators are now tasked with distinguishing between student work and AI-generated content, a challenge that complicates assessment and feedback processes. Moreover, the use of AI by teachers to generate generic feedback on student work has been reported, potentially diminishing the quality and personalization of education.

To harness AI's potential effectively, it is crucial to establish clear guidelines and training for educators. Professional development programs should focus on ethical AI use, data privacy, and the development of critical thinking skills in students. By fostering AI literacy among both teachers and students, educational institutions can navigate the complexities of AI integration, ensuring that technology serves as a tool for enhancement rather than a source of disruption.

‘Physicality: The New Age of UI’

Lux • John Gruber • June 3, 2025

Technology•Software•UserInterfaceDesign•Apple•PhysicalityInUI•Essays

Sebastiaan de With has created a detailed piece examining the past and potential future of iOS UI, complete with mockups that speculate on where Apple might be heading next. He suggests that a logical next step could be extending physicality throughout the interface, giving it a sense of tactile realism without overdoing it. This approach aligns with the philosophy of matching the interface to the material properties of Apple devices, such as glass screens, making the interface feel like the glass itself is coming alive.

De With's designs and theories focus on creating a new design language that incorporates the materiality of glass, similar to how VisionOS utilizes interactive glass-like surfaces. This approach would not only look appealing but also provide a competitive advantage for Apple, as it would be difficult for competitors to replicate the interactive responsiveness of such a design using current tools like Figma. The idea of a UI that feels like it's made of glass harks back to the early days of Mac OS X, where the "lickable" translucent Aqua UI theme complemented the colorful translucent plastic enclosures of Apple devices.

The concept of bringing this design language to the Mac, however, poses challenges. Unlike iOS devices, Macs are primarily made of aluminum, and their usage involves multiple stacked windows, which could be complicated by additional transparency or translucency. What MacOS needs is depth in its UI controls, such as buttons that clearly indicate whether they are enabled or disabled. This would enhance user experience without overwhelming the interface with too much visual complexity.

As we await Apple's next moves, particularly with WWDC approaching, it will be interesting to see if their designs align with de With's visions or if they introduce entirely new concepts. The integration of physicality into UI design could mark a significant shift in how users interact with digital interfaces, potentially setting a new standard for user experience.

AI

Where Crypto Meets AI with Chris Dixon & David George

Youtube • a16z • May 31, 2025

Technology•AI•Cryptocurrency•Innovation•Web

Watch this video on YouTube

The recent history of AI in 32 otters

Oneusefulthing • Ethan Mollick • June 1, 2025

Technology•AI•ImageGeneration•DiffusionModels•MultimodalAI

Two years ago, I was on a plane with my teenage daughter, messing around with a new AI image generator while the wifi refused to work. Otters were her favorite animal, so naturally I typed: “otter on a plane using wifi” just as the connection was restored. The resulting thread went viral and “otter on a plane using wifi” has since become one of my go-to tests of progress in AI image generation.

What started as a silly prompt has become my accidental benchmark for AI progress. And tracking these otters over the years reveals three major shifts in AI over the past few years: the growth of multiple types of AI tools, rapid improvement, and the status of local and open models.

Diffusion models

The first otters I created were made with image generation tools. For most of the very recent history of AI, image generation used a process called diffusion, which works fundamentally differently from Large Language Models like ChatGPT. While LLMs generate text one word at a time, always moving forward, diffusion models start with random static and transform the entire image simultaneously through dozens of steps. It is like the difference between writing a story sentence by sentence versus starting with a marble block and gradually sculpting it into a statue: every part of the image is being refined at once, not built up sequentially. Instead of predicting "what comes next?" like a language model, diffusion models predict "what should this noise become?" and transform randomness into coherent images through repeated refinement.
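To make the contrast concrete, here is a toy sketch in Python. It is not how any real model works: the "image" is a hypothetical list of five numbers, and the refinement step cheats by knowing the target, whereas a real diffusion model uses a trained network to predict what the noise should become. The function names are invented for illustration. The only point is the shape of the two loops: one finalizes a single element per step, the other nudges every element at every step.

```python
import random

# Hypothetical stand-in for "the image": five brightness values.
TARGET = [0.0, 0.25, 0.5, 0.75, 1.0]

def generate_sequentially(target):
    """Autoregressive-style loop: finalize one element per step, left to right."""
    output = []
    for value in target:
        output.append(value)  # earlier elements are never revisited
    return output

def generate_by_refinement(target, steps=30, seed=0):
    """Diffusion-style loop: start from noise, refine every element each step."""
    rng = random.Random(seed)
    canvas = [rng.uniform(-1.0, 1.0) for _ in target]  # pure "static"
    for _ in range(steps):
        # Assumption: a real model would predict the cleaner value from data;
        # this toy simply moves each element 30% of the way to the target.
        canvas = [c + 0.3 * (t - c) for c, t in zip(canvas, target)]
    return canvas

sequential = generate_sequentially(TARGET)
refined = generate_by_refinement(TARGET)
```

In the second function the whole canvas is wrong at first and gets uniformly less wrong over time, which matches the sculpting analogy above.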

There are a number of diffusion models out there, but I have tended to use Midjourney, which has been around longer than many other AI tools. Using Midjourney allows us to see how diffusion models have developed over time, as you can see with the simple prompt “otter on a plane using wifi” (for every image and video in this post, I pick the best out of the first four images generated). We go from melted fur at the start of 2022 to a visible otter (with too many fingers and a weird keyboard) at the end of that year. In 2023, we get a photorealistic otter, but still a weird keyboard and plane windows. In 2024, the lighting and positioning become better, and by 2025 we have excellent photorealism.

But what makes diffusion models interesting is not their increasing ability to make photorealistic images, but rather the fact that they can create images in various styles. This cuts to the heart of why AI image generation is so controversial, as many AI models are trained on images from throughout the web, including copyrighted work, and can thus replicate images in the style of living artists without their permission or compensation. But you can see how this works when applied to older artists and styles. Here is “otter on a plane using wifi” in the style of the Bayeux Tapestry, Egon Schiele, street art graffiti, and a Japanese Ukiyo-e print. (The wider your knowledge of art history, the more you can make these image creators do).

Diffusion models are not limited to existing styles. Midjourney lets any creator train the model to create images in a style they like and then share those unique “style codes.” If I end a prompt with one of these style codes, I get very different results: ranging from cyberpunk otters to cartoon ones.

I want to show you one last diffusion image, but this one is fundamentally different. I created it on my home computer using Flux. Unlike proprietary AI models like Midjourney or ChatGPT that run in corporate data centers, open weights models can be downloaded, modified, and run by anyone, anywhere. This high-quality image wasn't generated by a tech giant's servers but by the graphics card on my PC (you can also see ComfyUI, the interface I used to generate the image). It is remarkably close to the quality of the best closed-source models.

Whether open or proprietary, diffusion models tend to produce pretty random results, and creating a single quality image can often take multiple tries. The latest diffusion models (like Google’s Imagen 4) do better, but there is still a lot of luck and trial-and-error involved in a good output.

Multimodal Image Generation

For most of the era of Large Language Models, when an LLM like ChatGPT created an image, it was actually calling on one of these diffusion models to make the image and show the results. Because this was all done indirectly (the LLM prompted the diffusion model which created the image), the process of creating an image seemed even more random than working with a standard image generator.

That changed with the release of multimodal image generation by OpenAI and Google in the past couple months. Unlike diffusion models that transform noise into images, multimodal generation lets Large Language Models directly create images by adding tiny patches of color one after another, just as they add words one after another. This gives AIs deep control over the images they create. Here is "an otter on an airplane using wifi, on their laptop screen is image generation software creating an image of an otter on a plane using wifi," on my very first attempt.

But now I have to confess something: my daughter's favorite animal is not just any otter, it is the sea otter, and every single image so far has been of the much more common river otter. Finally, with multimodal generation, I could vindicate myself as a father, as multimodal models can make specific changes and adjustments: "make it a sea otter instead, give it a mohawk, they should be using a Razer gaming laptop."

I still use Midjourney and Imagen when I am trying to achieve a visual impact and when I am willing to spend a lot of time working through randomized images, but if I want a particular picture, I now always turn towards multimodal image generators. I suspect they will become increasingly common. As of yet, there are no open weights multimodal image generators, but that is likely to change soon.

Using Code for Images and “Sparks”

Multimodal generation shows AI can control images with precision. But there's a deeper question: does AI actually understand what it's creating, or is it just recombining patterns from training data? To test true spatial reasoning, we can force AI to draw using code - no visual feedback, no pre-trained image patterns to lean on. It's like asking someone to paint blindfolded using only mathematical instructions.

One particularly challenging type of code to use to draw is TikZ, a mathematical language used for producing scientific diagrams in academic papers. It is so ill-suited to the purpose that the name TikZ stands for the recursive German phrase "TikZ ist kein Zeichenprogramm" (“TikZ is not a drawing program”). Because of that, there is very little training data on using TikZ for drawings, meaning the AI cannot “remember” code from its training, it has to make it up itself. Creating an image with pure math in this language is a difficult job. In fact, a TikZ drawing of a unicorn by the now obsolete GPT-4 was considered, in a hugely influential paper, to be a sign that LLMs might have a “spark” of AGI - otherwise how could it be so creative? Here is how that unicorn looked, for reference:

I had a little less luck getting the old GPT-4 to draw an otter on a plane using wifi. But what happens if we ask a more recent model, like Gemini 2.5 Pro, to draw our otter with TikZ? It isn’t perfect (and Gemini took “on a plane” literally and made the otter sit on the wing), but if the pink unicorn showed a spark this certainly represents a larger leap.

And open weights models are catching up here as well, though they generally remain a few months behind the frontier. The new version of DeepSeek r1, probably the best open weights model available, produces a TikZ otter that is not quite as good as the closed source models like Gemini, but I expect that it will continue to improve.

These drawings themselves aren't as important as the fact that models are reasoning about spatial relationships from scratch. That is why the authors of the “Sparks” paper suggested these systems aren't just pattern-matching from training data but developing something closer to actual understanding.

Video

If still images show impressive progress, video generation reveals just how fast AI is accelerating. This was an “otter on a plane using wifi on a computer” as generated by the best available video generator of July, 2024, Runway Gen-3 alpha.

And this is in Google’s Veo 3 with the same prompt “otter on a plane using wifi on a computer” in 2025, less than a year later. Yes, the sound is 100% AI generated as well.

And, continuing the theme, there are now open weights AI models that can run on my home computer that are behind the state-of-the-art, but catching up. Here are the results from Tencent’s HunyuanVideo for the same prompt. Yes, it's hideous - but this is made on my home computer, not a massive data center.

What this all means

The otter evolution reveals two crucial trends with some big implications. First, there clearly continues to be rapid improvement across a wide range of AI capabilities from image generation to video to LLM code generation. Second, open weights models, while not generally as good as proprietary models, are often only months behind the state-of-the-art.

If you put these trends together, it becomes clear that we are heading towards a place where not only are image and video generations likely to be good enough to fool most people, but that those capabilities will be widely available and, thanks to open models, very hard to regulate or control. I think we need to be prepared for a world where it is impossible to tell real from AI-generated images and video, with implications for a wide swath of society, from the entertainment we enjoy to our trust for online content.

That future is not far away, as you can see from this final video, which I made with simple text prompts to Veo 3. When you are done watching (and I apologize in advance for the results of the prompt “like the musical Cats but for otters”), look back at the first Midjourney image from 2022. The time between a text prompt producing abstract masses of fur and one producing realistic videos with sound was less than three years.

Reddit sues Anthropic for allegedly not paying for training data

Techcrunch • June 4, 2025

Technology•AI•DataPrivacy•LegalAction•Reddit

Reddit has filed a lawsuit against Anthropic, alleging that the AI company unlawfully scraped Reddit's data to train its chatbot, Claude, without obtaining proper licensing. The complaint, filed in a Northern California court, accuses Anthropic of violating Reddit's user agreement by using the site's data for commercial purposes without authorization.

This legal action marks the first instance of a major tech company challenging an AI model provider over its data training practices. Reddit's chief legal officer, Ben Lee, stated, "We will not tolerate profit-seeking entities like Anthropic commercially exploiting Reddit content for billions of dollars without any return for redditors or respect for their privacy."

Reddit has previously entered into licensing agreements with companies such as OpenAI and Google, allowing them to train their AI models on Reddit's data under specific terms that protect user privacy. In contrast, Reddit alleges that Anthropic refused to engage in similar negotiations and continued to scrape Reddit's content despite being asked not to.

The lawsuit seeks compensatory damages and restitution for the value Anthropic has gained from using Reddit's data without permission. Anthropic, founded by former OpenAI executives, has yet to respond publicly to the lawsuit.

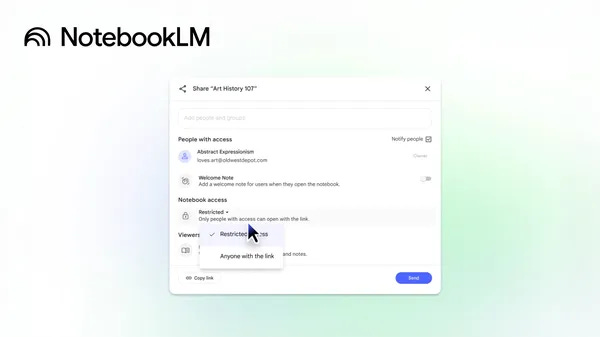

NotebookLM is adding a new way to share your own notebooks publicly.

Blog • Tin-Yun Ho • June 3, 2025

Technology•Software•NotebookLM•PublicSharing•Collaboration•AI

NotebookLM is introducing a feature that lets users share their notebooks publicly, making collaboration easier and opening their work to a broader audience.

To share a notebook publicly, open the desired notebook and click on the "Share" icon located in the top-right corner of the screen. In the sharing menu, select the option to generate a public link. This link can then be shared with anyone, granting them access to view the notebook's contents. This feature is particularly beneficial for educators, researchers, and professionals who wish to disseminate their work widely.

It's important to note that while public sharing increases accessibility, it also means that the notebook is visible to anyone with the link. Therefore, users should ensure that no sensitive or confidential information is included in notebooks intended for public sharing.

This enhancement aligns with NotebookLM's commitment to improving user experience by providing more flexible sharing options. By enabling public sharing, NotebookLM supports a more collaborative and open approach to information sharing, catering to the diverse needs of its user base.

ElevenLabs debuts Conversational AI 2.0 voice assistants that understand when to pause, speak, and take turns talking

Venturebeat • May 30, 2025

Technology•AI•VoiceAI•EnterpriseSolutions•NaturalLanguageProcessing

ElevenLabs has unveiled Conversational AI 2.0, an advanced platform designed to create intelligent, context-aware voice agents for enterprise applications such as customer support, call centers, and outbound sales. This upgrade introduces several key features aimed at enhancing the naturalness and efficiency of voice interactions.

A standout feature of Conversational AI 2.0 is its state-of-the-art turn-taking model. This technology analyzes conversational cues like hesitations and filler words in real-time, enabling the agent to understand when to speak and when to listen. This capability is particularly beneficial for applications requiring a balance between prompt responses and the natural flow of conversation.

The platform also introduces integrated language detection, allowing seamless multilingual interactions without manual configuration. This feature ensures that the agent can recognize and respond in the user's language, catering to global enterprises with diverse customer bases.

Additionally, Conversational AI 2.0 incorporates a built-in Retrieval-Augmented Generation (RAG) system. This system enables the AI to access external knowledge bases, providing accurate and contextually relevant information during interactions. Such integration is crucial for applications requiring up-to-date and precise data.

These enhancements position ElevenLabs' Conversational AI 2.0 as a robust solution for enterprises seeking to implement advanced voice agents capable of delivering natural, efficient, and context-aware interactions.

Reddit Sues Anthropic, Accusing It of Illegally Using Data From Its Site

Nytimes • June 4, 2025

Technology•AI•DataPrivacy•Lawsuit•Reddit

Reddit has filed a lawsuit against Anthropic, an artificial intelligence startup, alleging that the company illegally scraped content from Reddit to train its chatbot, Claude. The lawsuit, filed in California Superior Court, accuses Anthropic of using automated bots to access Reddit's data without permission and training on users' personal information without consent. (apnews.com)

This legal action highlights the growing tensions between social media platforms and AI companies over data usage. Reddit's Chief Legal Officer, Ben Lee, emphasized the need for clear limitations on how AI companies use content they scrape, stating, "AI companies should not be allowed to scrape information and content from people without clear limitations on how they can use that data." (apnews.com)

Anthropic, founded by former OpenAI executives, has developed the Claude series of chatbots as competitors to OpenAI's ChatGPT and Google's Gemini. The company has received significant investments from Amazon and Google, with Amazon investing up to $4 billion and Google committing $2 billion. (en.wikipedia.org)

In response to Reddit's lawsuit, Anthropic has stated that it disagrees with the claims and intends to defend itself vigorously. The company maintains that its training practices are lawful and plans to contest Reddit's allegations. (apnews.com)

This case underscores the broader issue of AI companies using publicly available data to train their models without explicit permission from content creators. Reddit's lawsuit seeks both damages and an injunction to prevent Anthropic from further using Reddit content for commercial purposes. (reuters.com)

It’s not your imagination: AI is speeding up the pace of change

Techcrunch • May 30, 2025

Technology•AI•ArtificialIntelligence•OpenAI•Investment

Venture capitalist Mary Meeker has released a comprehensive 340-page report titled "Trends — Artificial Intelligence," highlighting the unprecedented speed at which AI is being developed, adopted, and utilized globally. The report underscores the rapid evolution of AI technologies and their transformative impact across various sectors.

Meeker emphasizes that the pace and scope of change driven by AI are unparalleled, supported by extensive data and analysis. She notes that ChatGPT, for instance, achieved 800 million users in just 17 months, a rate of adoption unmatched by previous technologies. Additionally, the report highlights the swift reduction in costs associated with AI usage, with inference costs dropping by 99% over two years. This significant decrease is attributed to advancements in hardware and algorithms, enabling more efficient and affordable AI applications.
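As a quick sanity check on what a 99% drop over two years implies per year, the short Python sketch below converts a cumulative decline into an equivalent constant annual rate. The constant-rate assumption is ours, not the report's, and the function name is hypothetical.

```python
def annualized_decline(total_decline: float, years: float) -> float:
    """Constant yearly decline that compounds to `total_decline` over `years`."""
    remaining = 1.0 - total_decline          # fraction of the original cost left
    return 1.0 - remaining ** (1.0 / years)  # solve (1 - r) ** years == remaining

# 99% cheaper after two years, under the constant-rate assumption
yearly = annualized_decline(0.99, 2)
```

Under that assumption the figure works out to roughly a 90% decline each year, since keeping 10% of the cost twice in a row leaves 1%.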

The report also discusses the emergence of cost-effective AI models, particularly from Chinese companies, which pose competitive challenges to established U.S. AI leaders like OpenAI. Meeker warns that these affordable alternatives could undercut the market share of existing AI firms, emphasizing the need for continuous innovation and adaptation. She advises investors to diversify their portfolios and manage risks carefully, drawing parallels to past tech giants that navigated periods of unprofitability.

Furthermore, Meeker highlights the critical role of developers in the success of AI companies. She references Steve Ballmer's emphasis on the importance of attracting top developer talent, noting that companies excelling in this area are more likely to lead in the AI sector. This underscores the competitive nature of the AI industry and the necessity for firms to invest in and retain skilled developers.

In conclusion, Meeker's report provides a detailed analysis of the rapid advancements in AI, the challenges posed by emerging competitors, and the strategic imperatives for companies and investors in this dynamic landscape.

Forget FAANG, these tech investors are betting on MANGO in the AI race

Youtube • CNBC Television • June 2, 2025

Technology•AI•Investment•Innovation•MarketTrends

The video explores a shifting trend in the tech investment landscape, highlighting how investors are moving beyond the well-known FAANG stocks (Facebook, Apple, Amazon, Netflix, Google) and focusing instead on what is dubbed the "MANGO" group in the artificial intelligence (AI) sector. MANGO represents a newer cohort of tech companies that are making significant strides in AI innovation and are attracting keen investor interest as the AI race intensifies.

This transition underscores a broader market sentiment that the future of tech growth and value creation is not solely tied to the older, established giants but also to emerging companies that lead in AI development and application. Investors see these MANGO companies as pivotal in shaping the next wave of technology-driven economic transformation.

Key points discussed include:

The MANGO group consists of companies that are innovative in AI research, development, and deployment, positioning them at the forefront of next-generation technology advancements.

Investors are betting on these firms due to their potential to disrupt various industries through AI, including sectors like healthcare, autonomous systems, and enterprise software.

This shift signifies changing investment dynamics where innovation and AI capabilities increasingly determine market valuation and future growth prospects.

Experts featured in the video emphasize that while FAANG companies remain influential, their growth trajectories are maturing, leading investors to diversify towards firms better positioned in the AI ecosystem.

The video also touches upon the broader implications of AI progress in the market, including competitive advantages, new product features, and enhanced efficiencies enabled by AI technologies.

The implications of this investor pivot towards MANGO stocks suggest a more nuanced understanding of tech leadership, where agility in AI innovation and adaptation to emerging AI applications become critical success factors. Investors are looking beyond brand recognition and historical market dominance to identify companies poised for breakthrough advancements and long-term value generation.

In conclusion, the discussion presents a compelling case for why the AI race is evolving and how investment focus is shifting accordingly. The move from FAANG to MANGO is emblematic of broader trends in technology investment, emphasizing a future where AI capabilities play a central role in determining company success and investor returns. This paradigm shift reflects the ongoing innovation cycle in tech and signals new opportunities for stakeholders willing to engage with the frontier of AI development.

How Fei-Fei Li Is Rebuilding AI for the Real World

Youtube • a16z • June 4, 2025

Technology•AI•MachineLearning•SpatialIntelligence•Innovation

The video discusses how Fei-Fei Li is working towards rebuilding AI for the real world, focusing on spatial intelligence. This involves enabling machines to process visual data, make predictions, and act upon those predictions, which could significantly enhance AI's interaction with humans in real-world environments[1][4].

Fei-Fei Li, a pioneering developer of Artificial Intelligence, has a background that includes immigrating from China to the U.S. and experiencing early life challenges. She is known for her work in AI, particularly as the founding director of the Stanford HAI and her role as a Scientific Partner at Radical Ventures[3][4].

Li's current focus is on developing AI models that can simulate 3D environments and interact with the real world through her startup, World Labs. This startup has raised significant funding and is working on technologies that can generate virtual 3D scenes from 2D images, aiming to bridge the gap between AI and real-world applications[5].

The broader context of AI development includes significant advancements in recent years. AI systems have shown improved performance in benchmarks like MMMU, GPQA, and SWE-bench, with notable increases in scores. Additionally, AI is being increasingly integrated into daily life, from healthcare to transportation, with self-driving cars becoming more common and AI-enabled medical devices being approved by regulatory bodies[2].

Overall, Fei-Fei Li's work and the broader AI landscape highlight a shift towards making AI more applicable and interactive in real-world scenarios, emphasizing spatial intelligence and 3D environment simulation.

Stuck in the Middle of AI Workflows

Tomtunguz • June 4, 2025

Technology•AI•AgenticWorkflows•DeterministicWorkflows•EnterpriseSoftware

Whenever I hear about a new startup, I pull out my research playbook. First, I understand the pitch, then find backgrounds of the team, & tally the total raised.

Over the weekend, I decided to migrate this workflow to use AI tools, & the process taught me something important about how we’re actually integrating AI into our work.

Tools are small programs that expand AI capabilities. ChatGPT might call a web search tool to read a blog post I’d like summarized. Claude might call the terminal tool to change file permissions in my current directory. Gemini might call a tool to find the latest stock price of the most recent IPO I’ve been following.

I replaced each step in my workflow with an AI tool: a web search & summarization tool, LinkedIn research tool, & a capital fundraising history tool. I hadn’t changed the workflow itself—just swapped out the individual components within it.

This upgrade revealed something crucial: there are three distinct classes of programs emerging in enterprise software.

Deterministic workflows are my original startup research process—the same steps, in the same order, every time. These excel at mechanization, executing identical processes with small deviations or calculations at each step.

Deterministic workflows with AI components represent my current setup. I still follow the same research sequence, but now Gemini & ChatGPT handle the summarization. The AI makes individual steps smarter while I maintain control over the overall process.

Agentic workflows hand decision-making to the AI entirely. The system decides what to research, in what order, & which tools to call based on the input.

These excel at handling broad universes of potential inputs—like customer support where a user might ask “Why won’t my password reset?” or “Can I integrate your API with Salesforce?” or “My data export is corrupted”—questions that require completely different investigative paths.

Security incident response works similarly: when an alert fires, an agentic system might investigate network logs, check for similar patterns in historical data, or escalate to human analysts based on threat severity—decisions that can’t be predetermined because each incident presents unique characteristics.
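To make the distinction concrete, here is a minimal Python sketch of the difference between a fixed pipeline and an agentic one. Everything in it is hypothetical: the three tool functions are stubs standing in for real search, LinkedIn, and fundraising tools, and `toy_planner` is a keyword rule standing in for a model's tool-selection step. It is an illustration of the idea, not the author's actual setup.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for real tools. Each returns a short text result.
def summarize_pitch(company: str) -> str:
    return f"pitch summary: {company}"

def research_team(company: str) -> str:
    return f"team backgrounds: {company}"

def tally_funding(company: str) -> str:
    return f"funding history: {company}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "pitch": summarize_pitch,
    "team": research_team,
    "funding": tally_funding,
}

def deterministic_workflow(company: str) -> List[str]:
    """Fixed steps in a fixed order. Each step may call a model internally,
    but the author, not the model, decides the sequence."""
    return [TOOLS[name](company) for name in ("pitch", "team", "funding")]

def agentic_workflow(request: str, planner: Callable[[str], List[str]]) -> List[str]:
    """The planner (a model in practice) picks which tools run and in what order."""
    return [TOOLS[name](request) for name in planner(request)]

def toy_planner(request: str) -> List[str]:
    # Assumption: a keyword rule standing in for a model's tool-selection step.
    if "raised" in request.lower():
        return ["funding"]
    return ["team", "pitch"]

if __name__ == "__main__":
    print(deterministic_workflow("Acme"))
    print(agentic_workflow("How much has Acme raised?", toy_planner))
```

In the first function the call order is hard-coded, so every company gets the same treatment. In the second, the order depends on what the planner returns for each request, which is where both the flexibility and the unpredictability come from.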

I learned two things from this migration:

Programming with AI tools is remarkably simpler. AI categorizes companies far better than any rule-based system I could write.

I hadn’t built an agentic workflow—I was just upgrading my deterministic process with intelligent components. & that’s exactly what I wanted.

I don’t want an AI deciding how to diligence a company. I want it to diligence every AI software company the same way, every time. The consistency of my process combined with the intelligence of AI gives me the balance I need: repeatable methodology enhanced by superior pattern recognition.

Maybe I’ll evolve toward fully agentic startup diligence someday, especially as the models improve.

But for now, this hybrid approach delivers the reliability of deterministic processes with the power of AI— & that’s the sweet spot for most enterprise applications today.

Venture Capital

AI Startups Burn Through Cash 2x as Fast, and 10 Other Top Learnings from SVB’s Latest in Enterprise

Saastr • Jason Lemkin • June 2, 2025

Business•Startups•AI•VentureCapital•EnterpriseSoftware•Venture Capital

SVB has released a comprehensive analysis of its enterprise software clients, revealing several key insights that B2B founders, operators, and investors should consider as they navigate the latter half of 2025.

1. Accelerated Capital Burn in AI Startups

AI startups now burn through $100 million in approximately three years, about half the time it took a decade ago. At the same time, these companies are reaching $100 million in revenue within roughly two years. This trend indicates a significant shift in venture economics, as AI companies require substantial compute infrastructure from the outset. For SaaS founders integrating AI features, it's crucial to anticipate higher capital needs while recognizing the potential for rapid revenue growth.

2. Dominance of Mega-Rounds in Enterprise Funding

Mega-rounds, defined as funding rounds exceeding $100 million, now account for 68% of enterprise capital. Notably, AI deals constitute only 6% of all mega-deals by count but represent approximately 50% of the capital raised in these rounds. This concentration suggests a bifurcated market where a few AI companies secure substantial funding, while others face increased competition for limited resources. B2B founders not pursuing mega-rounds should focus on capital efficiency and demonstrate clear ROI to attract budget-conscious customers.

3. Decline in Series A Graduation Rates

The rate at which startups progress from seed funding to Series A has reached historic lows. In 2024, the bottom quartile for revenue at Series A was $1.3 million, aligning with the median revenue in 2021. This widening gap between seed and Series A stages reflects a structural shift in the market. Founders seeking seed funding should plan for 18-24 months of runway and aim for at least $1.5 million in annual recurring revenue before considering Series A discussions.

4. Marginalization of Mid-Sized VC Funds

Since 2020, mid-sized venture capital funds have been increasingly marginalized. The market has seen a clear bifurcation, with large funds making substantial investments and small funds carving out niches, leaving mid-sized funds in a challenging position. This trend mirrors the challenges faced by mid-market B2B and SaaS companies, which must either become category-defining platforms or highly focused niche players. B2B founders should choose a strategic direction and aim to dominate it, as being "pretty good" at several things is no longer a viable strategy.

5. Surge in AI-Focused VC Fundraising

AI-focused venture capital funds, though representing only 15% of U.S. VC funds, accounted for approximately 40% of total capital raised in 2024. These funds were also three times as likely to close above their initial target size. This influx of capital into AI-focused funds creates a self-reinforcing cycle, providing AI companies with easier access to funding while increasing competition for non-AI companies. B2B founders, even those not primarily in the AI sector, should clearly articulate their AI strategy, as funds are evaluating deals through an AI lens.

6. Increased Revenue Per Employee in AI Companies

AI-exposed companies reported a revenue per employee (RPE) of $808,000, compared to $420,000 for non-AI companies as of 2024. This widening gap since 2020 indicates that AI companies are scaling revenue without proportional increases in headcount, achieving the scalability that SaaS models have often promised but not always delivered. B2B founders should focus on RPE as a key metric, aiming for $400,000 or more to remain competitive with AI-native companies for talent and investment.

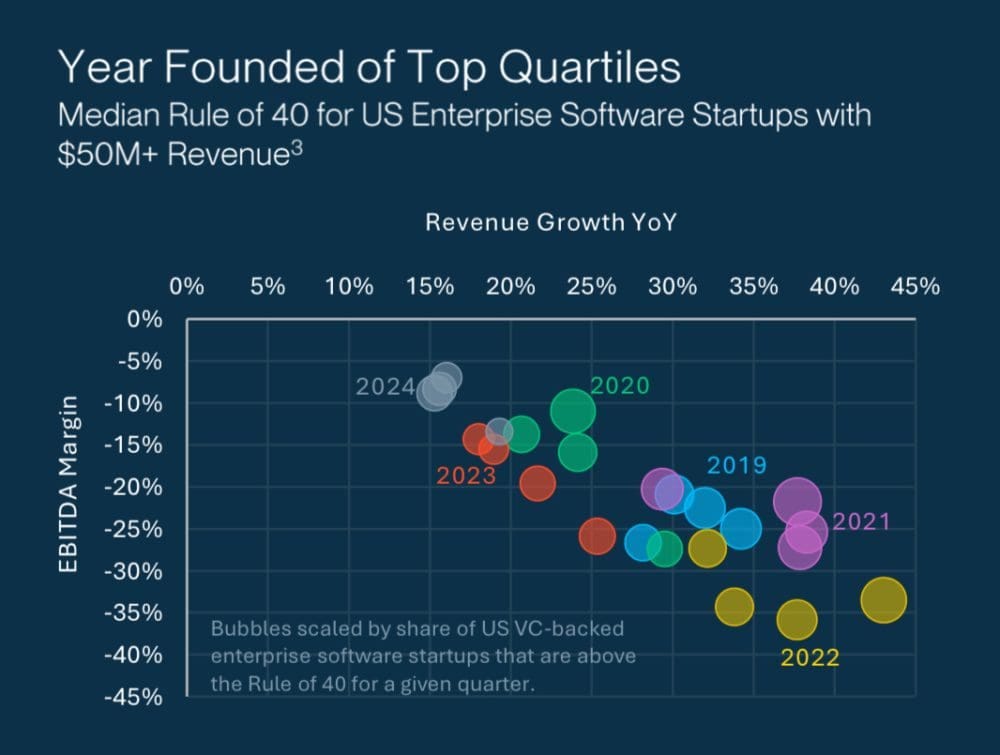

7. Decline in the Rule of 40 Metric

The median Rule of 40—a metric combining growth rate and profit margin—for enterprise software startups with $50 million or more in revenue dropped to 9% in 2024, down from 21% in 2021. Only 13% of companies exceeded the traditional 40% threshold. This decline suggests that the fundamental unit economics of SaaS are under pressure, with growth rates declining faster than companies can improve margins. B2B founders should prioritize sustainable growth at reasonable burn rates over aggressive growth strategies.
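For readers who want to compute the metric themselves, the sketch below uses the common definition: revenue growth rate plus profit margin, both in percentage points. The function names and the example company are hypothetical, and which margin to use (EBITDA, operating, or free cash flow) is an assumption to settle before comparing against SVB's figures.

```python
def rule_of_40_score(growth_pct: float, margin_pct: float) -> float:
    """Sum of revenue growth rate and profit margin, in percentage points."""
    return growth_pct + margin_pct

def clears_rule_of_40(growth_pct: float, margin_pct: float,
                      threshold: float = 40.0) -> bool:
    """True when the combined score meets the traditional 40% threshold."""
    return rule_of_40_score(growth_pct, margin_pct) >= threshold

if __name__ == "__main__":
    # Hypothetical company: 25% growth with a -16% margin.
    print(rule_of_40_score(25.0, -16.0))   # 9.0
    print(clears_rule_of_40(25.0, -16.0))  # False
```

A company growing 25% with a negative 16% margin lands at 9, the same level as the 2024 median cited above, and well short of the threshold.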

8. Rise of "Zombiecorns"

The number of enterprise unicorns—startups valued at over $1 billion—has surpassed 300, with a median age of 11.5 years. Many of these companies are becoming "Zombiecorns," characterized by poor revenue growth and unit economics, and are stuck in a challenging position between being too large for acquisition and too unprofitable for public markets. This trend creates a liquidity crisis affecting the entire ecosystem. SaaS founders should focus on building sustainable business models rather than aiming for unicorn status, as many recent unicorns have become cautionary tales.

9. Funding Pressures on Enterprise Software Startups

According to SVB's data, 50% of U.S. enterprise software startups need to raise capital or exit within the next 12 months based on their current burn rates. This represents a significant wave of funding pressure hitting the market simultaneously. With Series A rates already depressed and IPO markets closed, many companies may be forced into distressed sales or shutdowns. B2B founders should extend their runway proactively, as the fundraising market in the next 12 months will be challenging for companies without strong fundamentals.
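The 12-month figure rests on simple runway arithmetic: cash on hand divided by monthly net burn. A minimal sketch follows, with made-up numbers and a hypothetical function name; SVB's exact methodology is not described in this summary.

```python
def runway_months(cash: float, monthly_net_burn: float) -> float:
    """Months of cash left at the current net burn rate.

    A company that is not burning cash has unlimited runway.
    """
    if monthly_net_burn <= 0:
        return float("inf")
    return cash / monthly_net_burn

if __name__ == "__main__":
    # Hypothetical startup: $6M in the bank, burning $600K a month.
    months = runway_months(6_000_000, 600_000)
    print(months)       # 10.0
    print(months < 12)  # True: must raise or exit within a year
```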

10. Increase in M&A Activity at Seed Stage

A growing share of enterprise software startups are being acquired at the seed stage, as larger companies seek to acquire technology and talent early, and seed startups fail to meet Series A benchmarks. This shift represents a fundamental change in exit strategies, with many startups now being designed as "acqui-hire" targets or technology additions for larger platforms. B2B founders should consider whether they are building a feature or a company. If it's a feature, plan for earlier exits; if it's a company, ensure you have the capital and market position to survive the Series A challenges.

These insights underscore the rapidly evolving landscape of enterprise software, particularly in the AI sector. Founders and investors must adapt to these changes by focusing on sustainable growth, capital efficiency, and clear strategic direction to navigate the challenges and opportunities ahead.

Carta’s Head of Insights on Dilution, Equity, and the State of the Market Right Now

Mostlymetrics • June 2, 2025

Business•Startups•Dilution•Equity•PrivateTech•Venture Capital

In a recent discussion, Peter Walker, Head of Insights at Carta, delved into the intricacies of dilution, equity distribution, and the current state of the private tech market. He addressed common questions such as whether founders should retain 50% ownership after a Series A funding round and what a 10% ownership stake at IPO signifies.

Walker emphasized the importance of understanding dilution at each funding stage. He noted that the median dilution at the seed stage has decreased from 23% to 20.1% between Q1 2019 and Q1 2024, indicating a trend towards founders retaining more ownership early on. At the Series A stage, median dilution has fallen from 24.1% to 20.5% in the same period. This decline suggests a shift towards more favorable terms for founders in early funding rounds. (carta.com)
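Dilution compounds across rounds, which is why the per-round medians matter for the "50% after Series A" question. The sketch below applies the Q1 2024 medians cited above to existing holders as a group. It is a simplification under stated assumptions: it ignores option pool top-ups, SAFE and note conversions, and any pre-seed investors, all of which reduce founder ownership further. The function name is hypothetical.

```python
from typing import Sequence

def ownership_after_rounds(initial: float, dilutions: Sequence[float]) -> float:
    """Fraction still held after each round sells `d` of the company to new investors."""
    stake = initial
    for d in dilutions:
        stake *= (1.0 - d)
    return stake

if __name__ == "__main__":
    # Median dilution, Q1 2024: 20.1% at seed, 20.5% at Series A.
    remaining = ownership_after_rounds(1.0, [0.201, 0.205])
    print(f"{remaining:.1%}")  # 63.5%
```

At median dilution, holders who owned 100% before the seed round retain roughly 63.5% after the Series A, before accounting for the factors excluded above.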

Regarding employee and advisor equity, Walker highlighted the significance of understanding the implications of equity grants. He advised that employees should be aware that equity is not equivalent to cash and should consider the vesting schedules and potential dilution when evaluating job offers. Additionally, he discussed the differences between Incentive Stock Options (ISOs) and Restricted Stock Units (RSUs), noting that while ISOs offer tax advantages, they come with specific requirements and risks.

The conversation also touched upon the current state of the private tech market in 2025. Walker observed a rise in bridge rounds and down rounds, reflecting a more cautious investment environment. He mentioned that in Q1 2023, nearly 20% of all rounds on Carta were down rounds, indicating a trend towards more conservative valuations. (vcminute.co) Despite these challenges, Walker noted a surge in AI funding, highlighting the sector's resilience and attractiveness to investors.

In summary, Walker's insights provide valuable guidance for founders, employees, and investors navigating the complexities of dilution and equity distribution in today's evolving market landscape.

The Brilliance of Y Combinator

Youtube • 20VC with Harry Stebbings • May 31, 2025

Business•Startups•YCombinator•Entrepreneurship•VentureCapital•Venture Capital

Signal vs access

Signalrank Update • Rob Hodgkinson • June 5, 2025

Business•Investment•VentureCapital•SeriesB•SeedInvesting•Venture Capital

SignalRank distinguishes between "signal" and "access" in its investment model. "Signal" pertains to the quality of Series B investments, assessed through investor scores across multiple funding rounds. "Access," on the other hand, involves supporting undercapitalized seed investors to maintain their ownership stakes.

In practice, a seed investor backs a company early on, and a larger fund leads the Series B. SignalRank aids the seed investor by financing their pro-rata share, mitigating dilution from the larger investment. This approach is evident in SignalRank's 2025 investments, where Sequoia Capital co-invested in Series B rounds. While Sequoia is a prominent Series B investor, SignalRank's support comes from various seed managers, not directly from Sequoia.
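The pro-rata mechanics can be sketched with a few lines of arithmetic. The numbers and function names below are hypothetical, and the sketch assumes a simple priced round with no option pool increase: an investor holding a fraction of the company keeps that fraction by buying the same fraction of the new round.

```python
def pro_rata_check(ownership: float, round_size: float) -> float:
    """Cash needed to buy the investor's share of the new round."""
    return ownership * round_size

def ownership_without_pro_rata(ownership: float, pre_money: float,
                               round_size: float) -> float:
    """Stake after the round if the investor does not participate."""
    return ownership * pre_money / (pre_money + round_size)

if __name__ == "__main__":
    # Hypothetical: a seed fund owns 5% ahead of a $40M Series B at $160M pre-money.
    print(round(pro_rata_check(0.05, 40_000_000)))                             # 2000000
    print(round(ownership_without_pro_rata(0.05, 160_000_000, 40_000_000), 4)) # 0.04
```

In this example the seed fund needs a $2 million check to stay at 5%. Without it, the stake falls to 4%, and that gap is what financing the pro-rata share is meant to close.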

Qualification in SignalRank's model requires high scores across seed, Series A, and Series B rounds. For instance, Sequoia has invested in 24 announced Series B rounds in the past two years, with 21 qualifying under SignalRank's criteria. However, none of the 2025 co-investments with Sequoia have been publicly disclosed yet.

Is DPI The Only Thing That Matters? with Sam Lessin, Jason Lemkin & Rory O’Driscoll

Youtube • 20VC with Harry Stebbings • June 5, 2025

Business•Startups•DPI•VentureCapital•GrowthMetrics•Venture Capital

Global Venture Funding Slowed In May While Startup M&A Picked Up, Led By Large OpenAI Acquisitions

Crunchbase • Gené Teare • June 4, 2025

Business•Startups•VentureCapital•MergersAndAcquisitions•ArtificialIntelligence•Venture Capital

Global venture funding reached $21.8 billion in May 2025, down 13% quarter over quarter and down a third year over year, Crunchbase data shows. The U.S. continued to dominate in May, with 56% of global venture funding invested in U.S.-based companies.

While funding slowed and the IPO market remained tepid, M&A activity increased. Startup acquisitions with disclosed prices totaled $24.7 billion in May, marking the second-highest monthly total since the start of 2024, Crunchbase data shows.