Contents

Essay

2026

Media

Venture

AI boom transforming the venture capital, megacap investing landscape

Pat Grady & Alfred Lin on the Tactics of Great Venture Investing | Ep. 36

Are private valuations set for a correction? Henry Ward, CEO of Carta, on #capitalmarket trends

Jeff Bezos’s Project Prometheus Joins The Unicorn Board Alongside 18 Other Startups In November

Education

Regulation

AI

Disney CEO on $1 billion investment in OpenAI: ‘This is a good investment for the company’

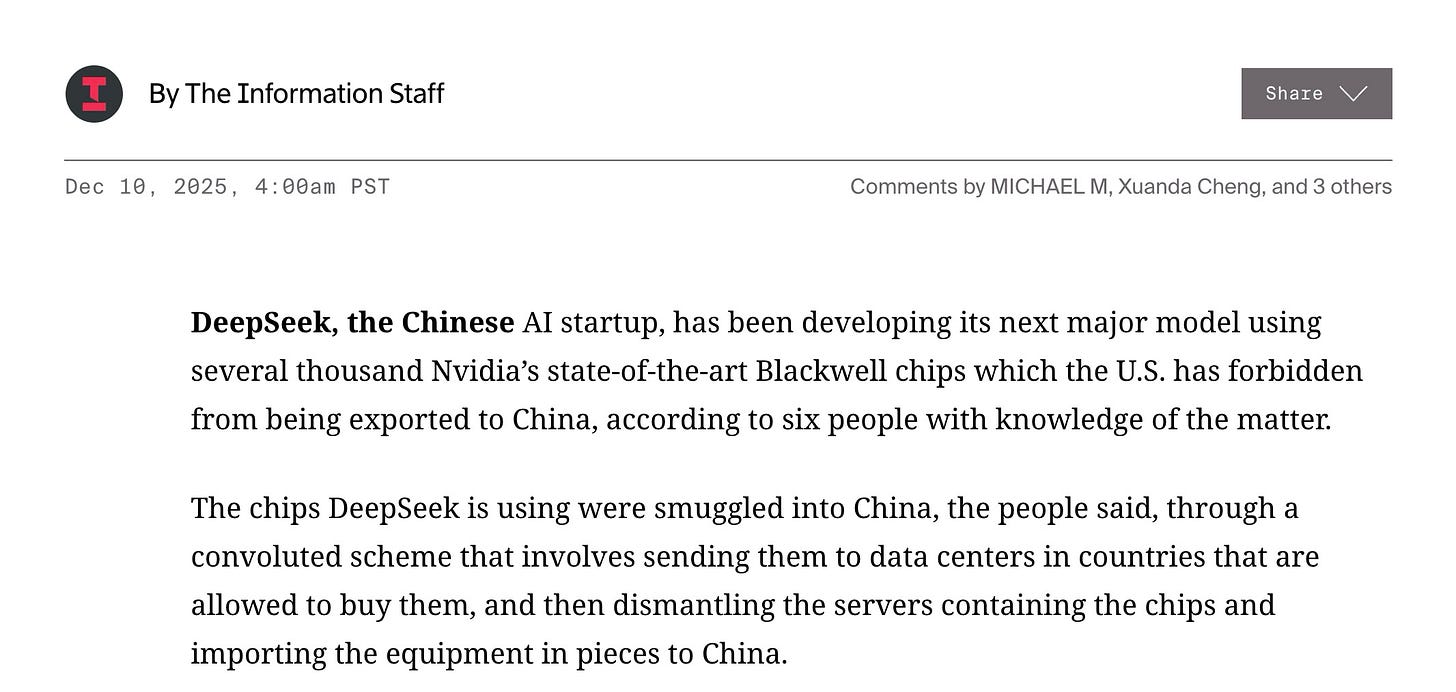

Nvidia Wins US Approval to Sell H200 Chips to China | Bloomberg Tech 12/9/2025

SoftBank and Nvidia reportedly in talks to fund Skild AI at $14B, nearly tripling its value

OpenAI Unveils More Advanced Model as Race With Google Heats Up

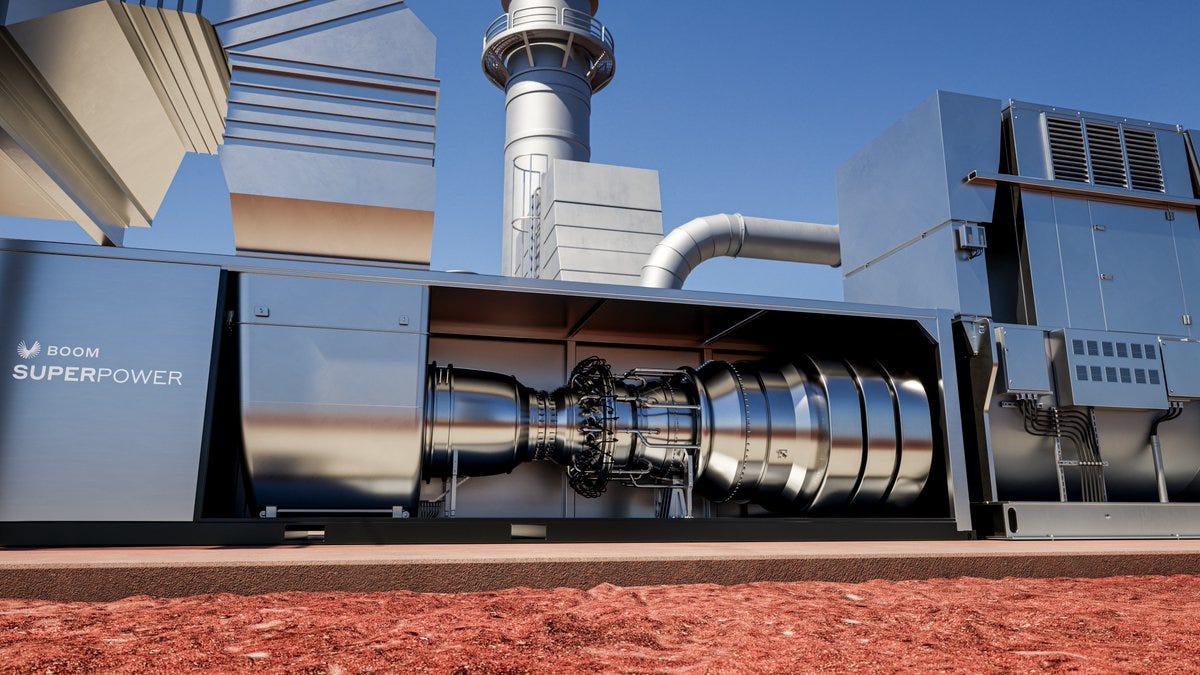

A new product, a new customer, a new financing! Introducing Superpower

Interactions API: A unified foundation for models and agents

LeCun’s Alternative Future: A Gentle Guide to World-Model AI [Guest]

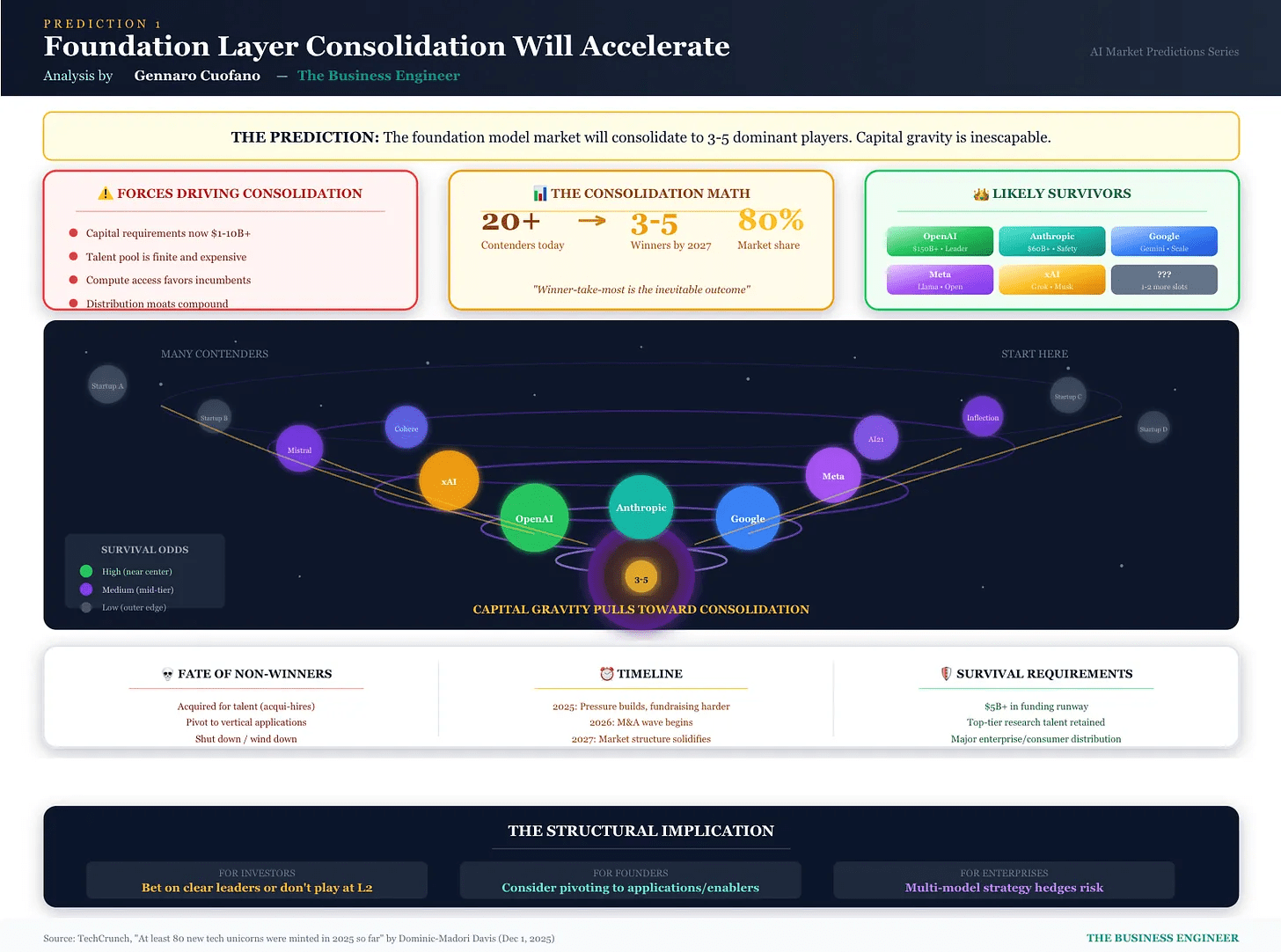

Foundation Model Consolidation Is No Longer a Forecast — It’s a Mechanical Outcome

The Rise of Neolabs: Where the Next AI Breakthroughs Will Come From & 11 AI Labs to follow

OpenAI says it’s turned off app suggestions that look like ads

China

Interview of the Week

Editorial:

Human¹⁰⁰ - Why This Week Proves AI Is Our Greatest Invention, Not Our Replacement

This week’s stories feel like a chaotic pile-up: Netflix swallowing Hollywood, Disney investing in OpenAI and licensing 200 characters for use by consumers, SpaceX eyeing a $1.5 trillion IPO, and Sam Altman declaring an enterprise ‘code red’ over lunch and then announcing GPT-5.2.

It’s easy to see this as a handful of tech giants and venture capitalists vacuuming up the last scraps of independence in media, capital, and intelligence. The prevailing narratives offer two bleak choices: the doomer view, which sees an alien, runaway technology that needs to be caged, or the diminishment view, which insists only ‘real’ humans can be creative and frames AI as a cheap, threatening imitation incapable of going beyond its training set of human-produced material.

Both are wrong, and this week’s material shows why. We are not witnessing the triumph of machines over humanity. We are witnessing Human¹⁰⁰: the amplification of human ambition, creativity, and capability to the power of 100. And this is due to a tool of our own making.

The connection between Ben Thompson’s ‘Hollywood End Game’ and the a16z ‘Big Ideas 2026’ is not about tech eating culture; it’s about human systems being rebuilt for 100x scale and precision.

Netflix isn’t killing Hollywood; it’s applying a human-engineered model (global data, direct relationships, algorithmic curation) to a century of human storytelling. The result? As Thompson notes, IP is being revalued, not destroyed. The ‘end game’ is a more efficient, global pipeline for the stories we create. The fantastic Scandinavian dramas I watch are only possible because of this.

Similarly, the 1.21-gigawatt order for the ‘Superpower’ turbine isn’t an AI monster demanding sacrifice; it’s humans building unprecedented energy infrastructure to power the next phase of human computation and innovation. AI is ours, not a thing in itself.

This brings us to the week’s most revealing tension: the AI Value Gap.

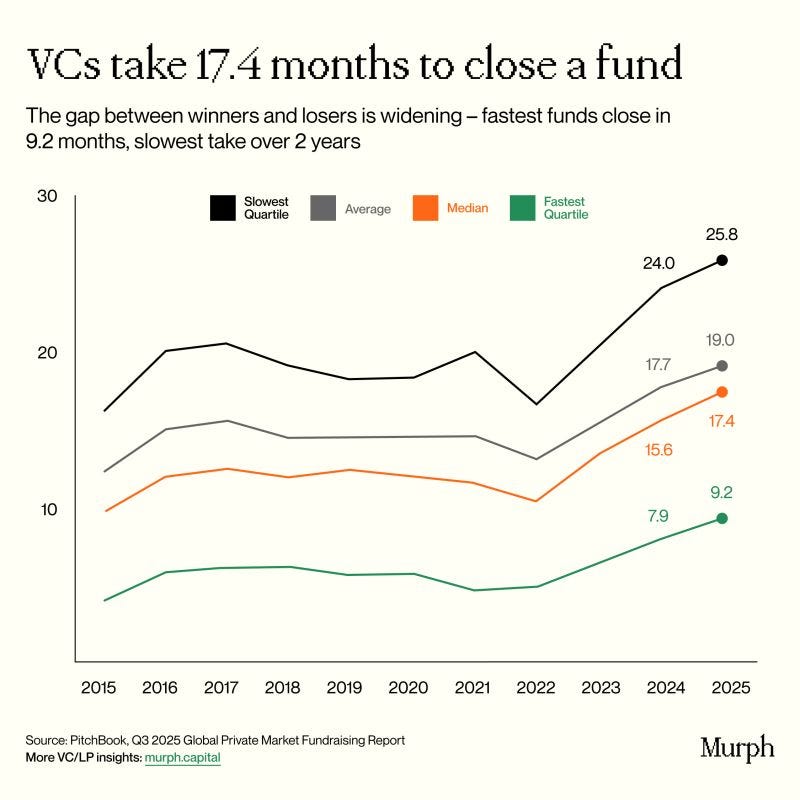

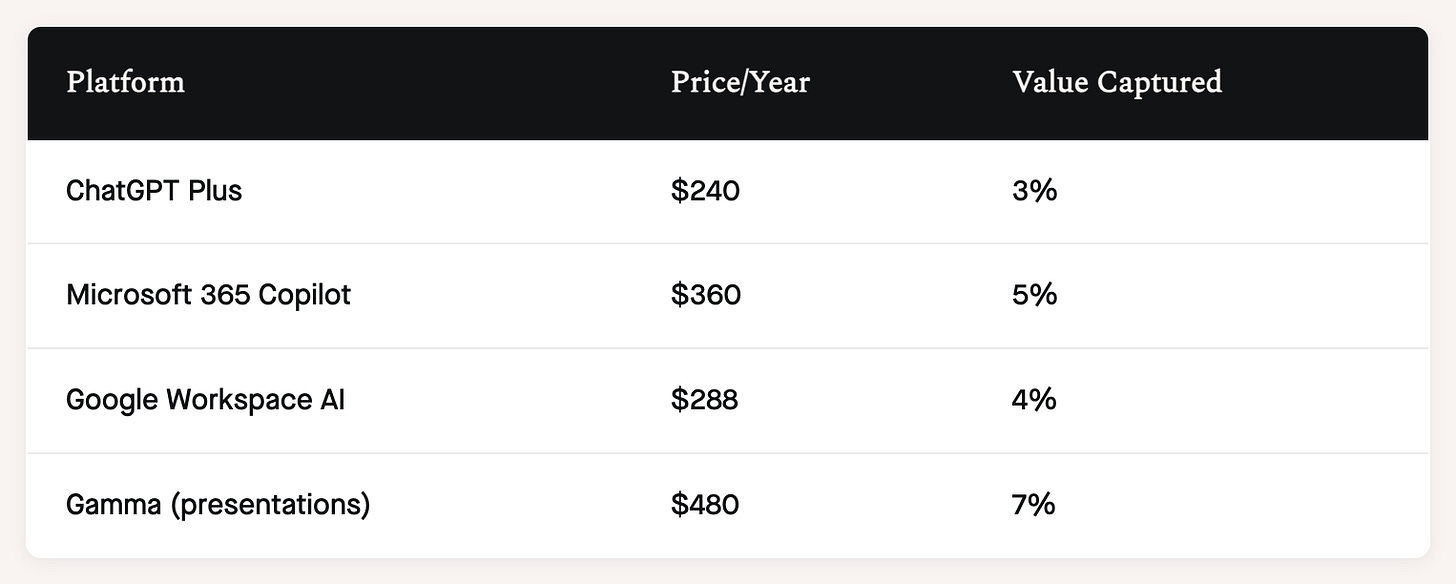

OpenAI’s own data shows AI saves the average knowledge worker 54 minutes a day—worth about $7,282 per seat annually in recovered productivity. Yet tools like ChatGPT Plus capture only 3% of that value.

This is early innings. The value gap exists because we’re still learning to price augmentation.
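The value-gap arithmetic can be made explicit. This is a minimal sketch that assumes ChatGPT Plus costs $20 a month (its US list price) and takes the $7,282 annual value per seat and the 54 minutes a day from the text. The 250 working days used to derive an implied hourly value is a hypothetical assumption, not a figure from OpenAI.

```python
# Sketch of the "AI value gap": how much of a seat's productivity value
# the subscription price captures.
# Assumptions: ChatGPT Plus at $20/month; $7,282/year value per seat (from the text).
MONTHLY_PRICE_USD = 20.0
ANNUAL_VALUE_PER_SEAT_USD = 7_282.0
MINUTES_SAVED_PER_DAY = 54
WORKING_DAYS_PER_YEAR = 250  # hypothetical assumption, not from the source


def capture_rate(annual_price: float, annual_value: float) -> float:
    """Fraction of the annual productivity value captured by the price."""
    return annual_price / annual_value


annual_price = MONTHLY_PRICE_USD * 12  # $240 per year
rate = capture_rate(annual_price, ANNUAL_VALUE_PER_SEAT_USD)

# Implied value of one saved hour if the savings accrue on working days only.
hours_saved = MINUTES_SAVED_PER_DAY / 60 * WORKING_DAYS_PER_YEAR
implied_hourly_value = ANNUAL_VALUE_PER_SEAT_USD / hours_saved

print(f"Annual price:            ${annual_price:,.0f}")
print(f"Share of value captured: {rate:.1%}")
print(f"Implied value per hour:  ${implied_hourly_value:,.2f}")
```

At $240 a year against $7,282 of value, the price captures about 3.3%, consistent with the roughly 3% cited above. Under the 250-day assumption, the $7,282 figure works out to roughly $32 per saved hour.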

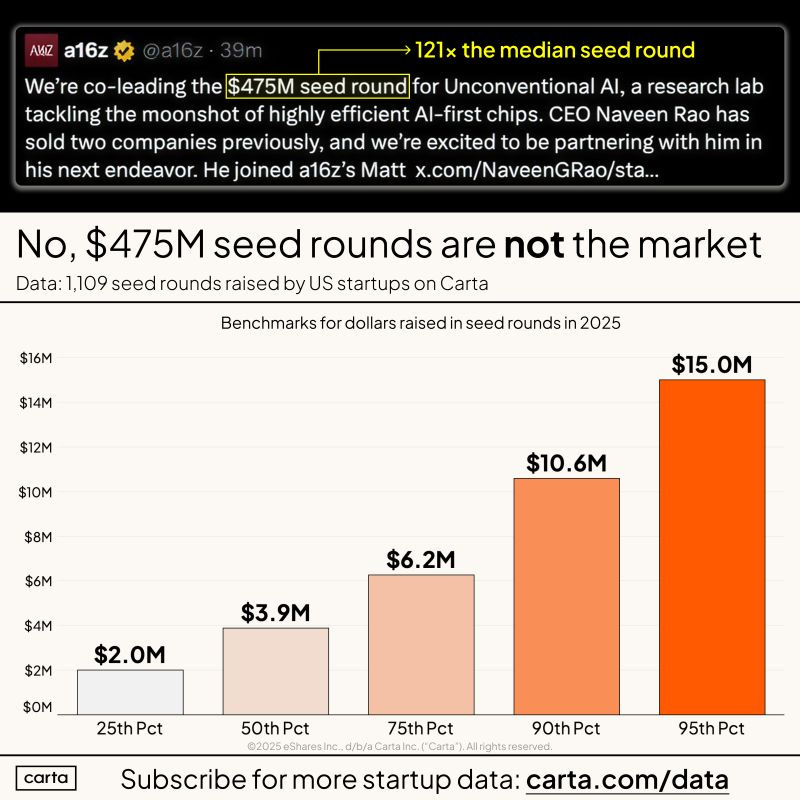

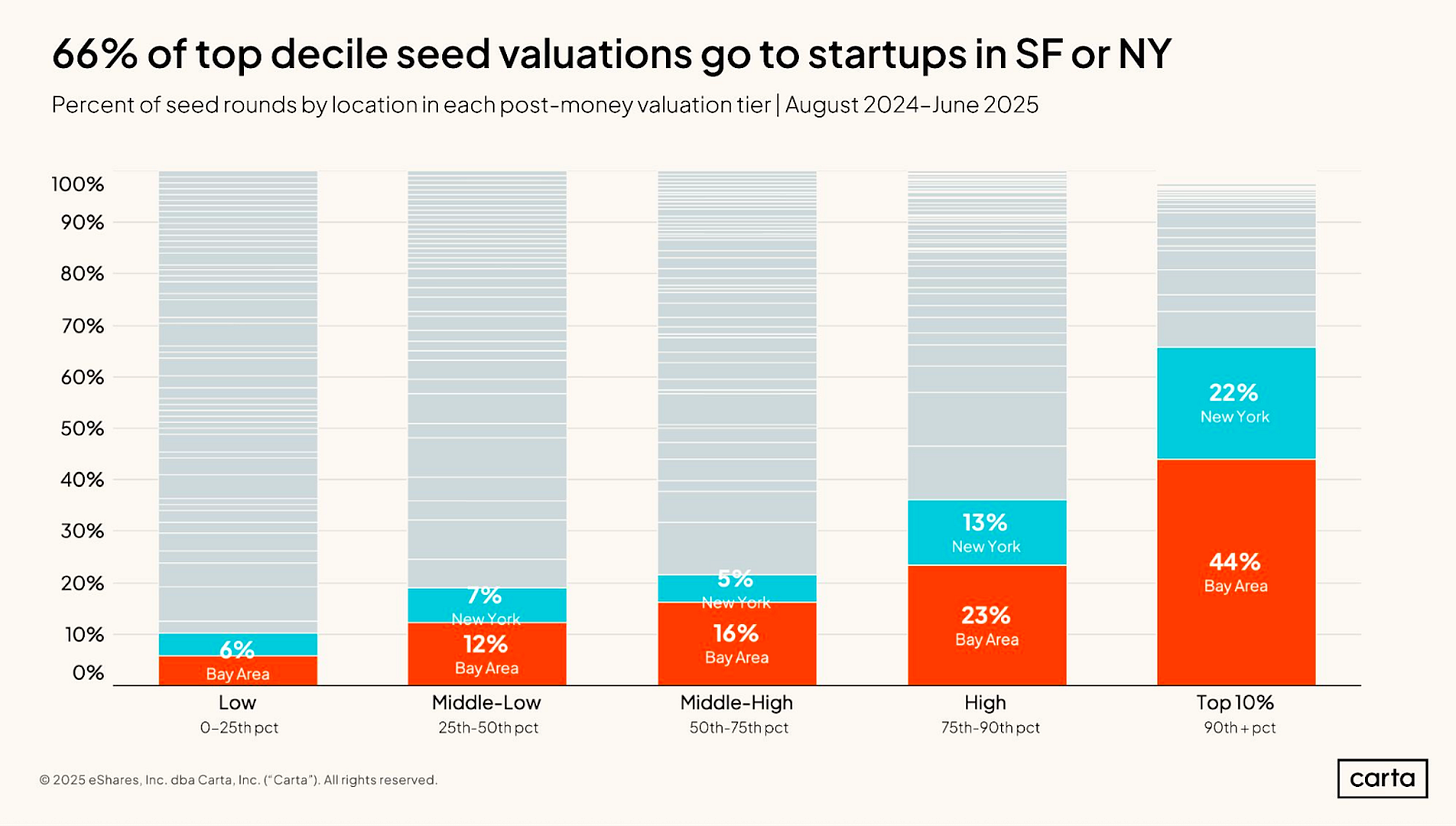

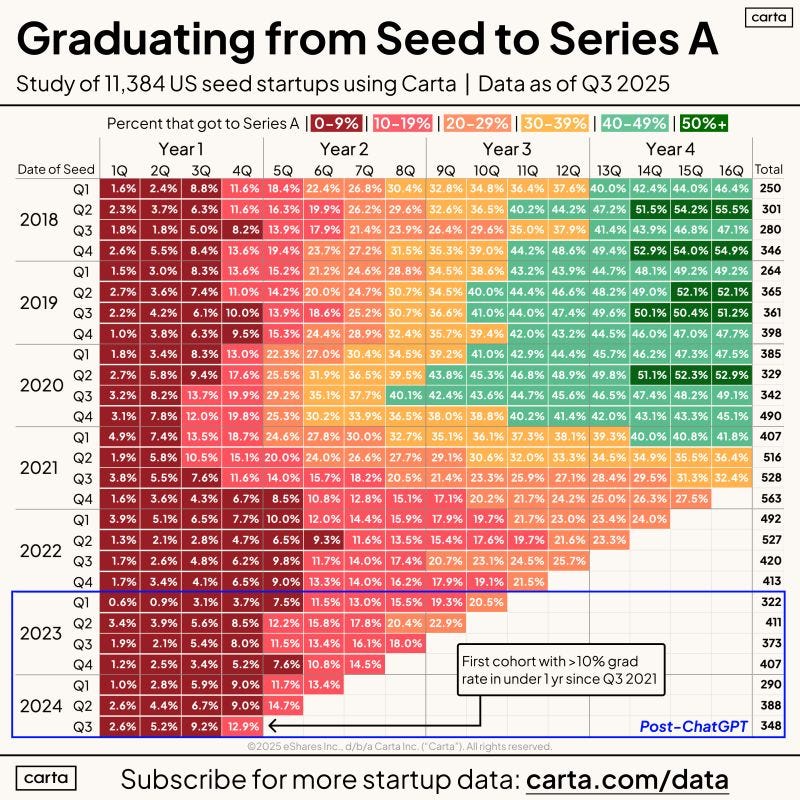

As the Carta seed data shows, founders are building vertical applications—in classrooms from Iceland to Alabama, in enterprise workflows, in robotic ‘brains’ like Skild AI—that embed AI as a force multiplier.

The value will accrue not to some silicon overlord, but to the humans and companies that learn to wield it. Disney’s $1 billion bet on OpenAI isn’t capitulation; it’s a human institution betting its legendary creativity can be amplified, not replaced.

The doomer and diminisher views miss the story entirely.

They see the foundation model consolidation, where capital intensity mechanically favors a few winners, as a loss of control. But look closer: the ‘Neolabs’ emerging, the open-weight models from China, the Nordic education partnerships. The frontier is expanding, not contracting. The ‘mechanical outcome’ is more platforms, not fewer minds, and better humans. Even more dramatic is the collective uplift of human potential made possible by our new toolset, and the sheer wealth that uplift will create.

So where does this leave us? The unresolved question isn’t ‘Can we control AI?’ but ‘Can we govern the abundance it creates?’

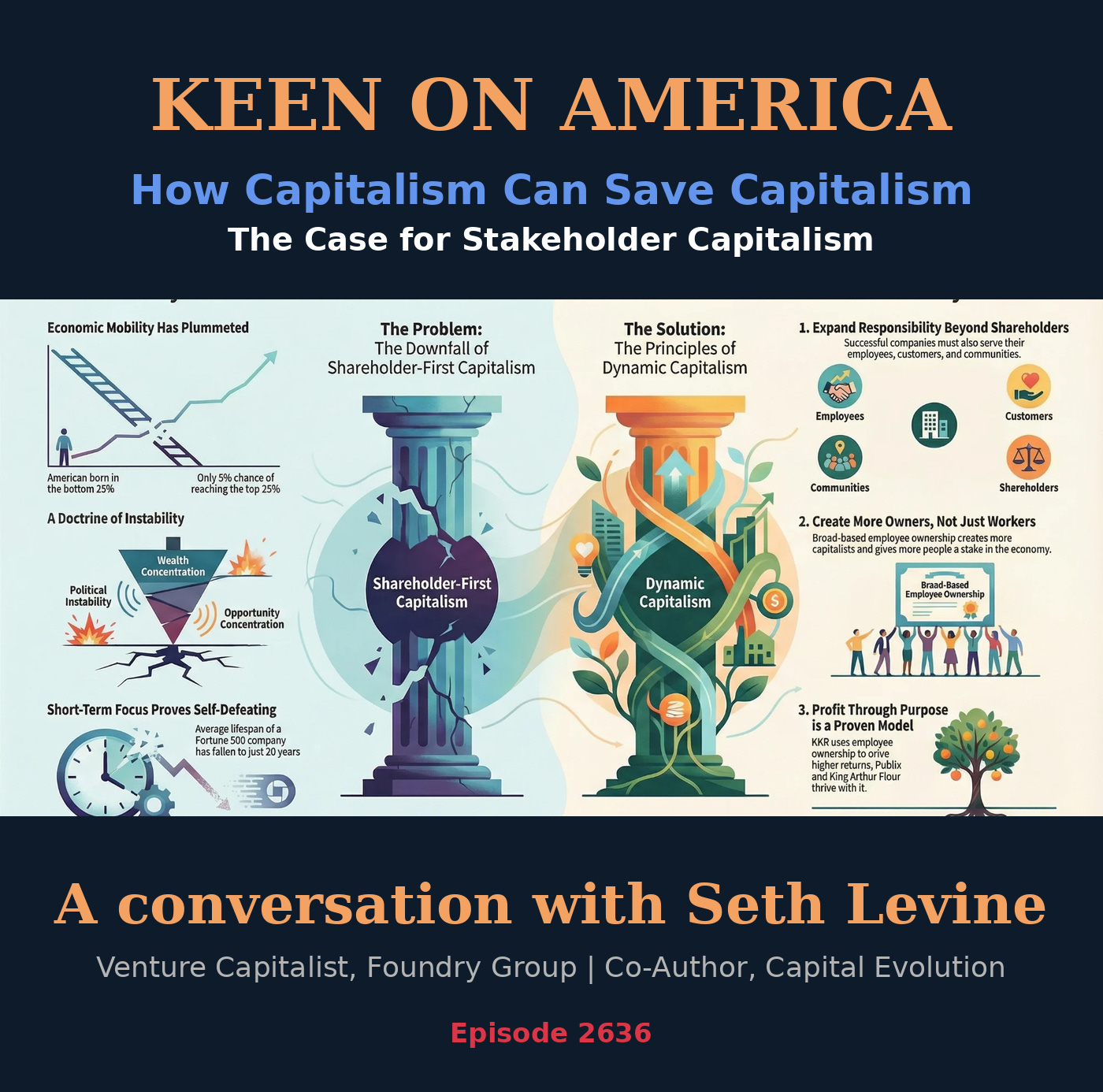

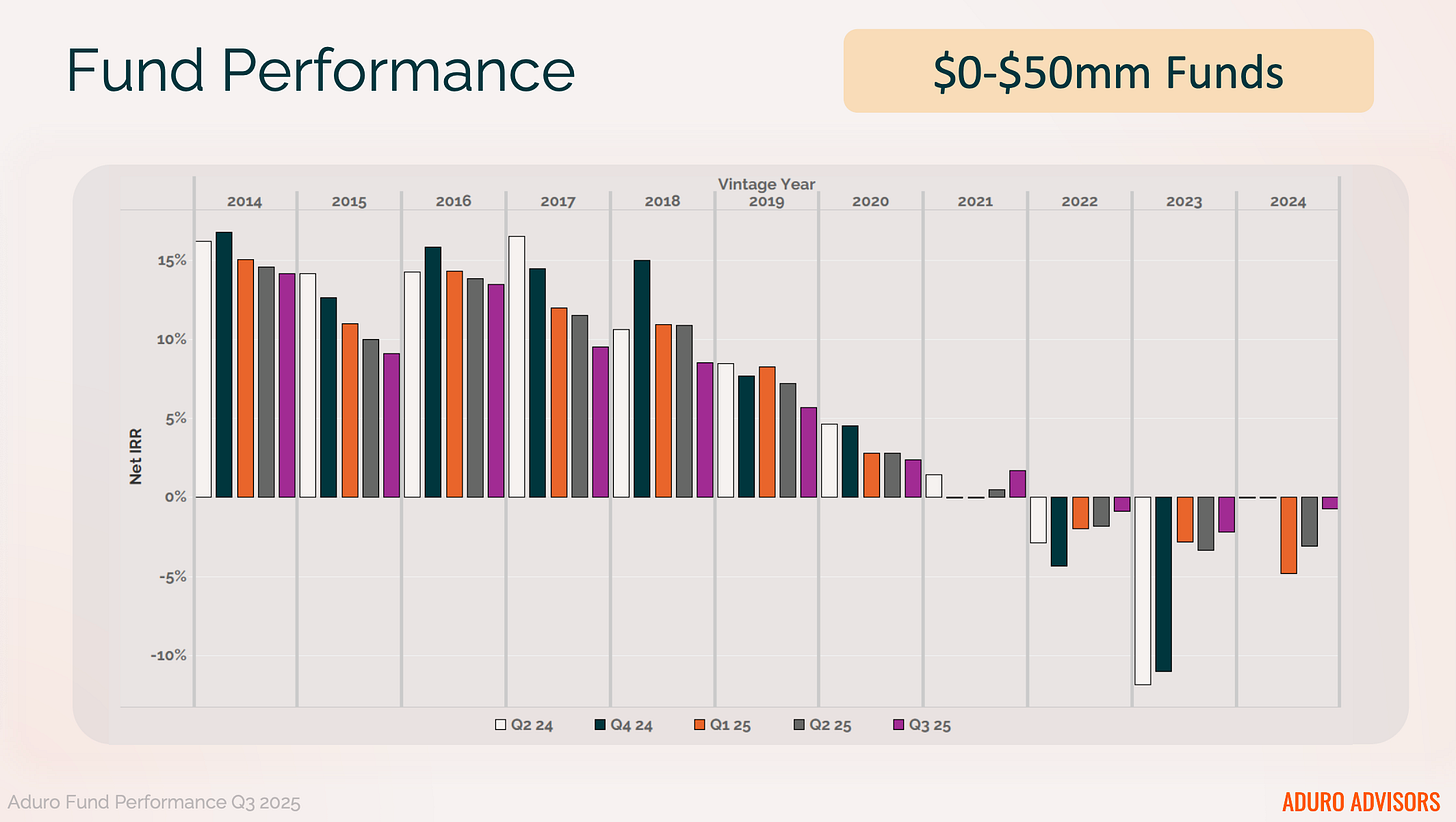

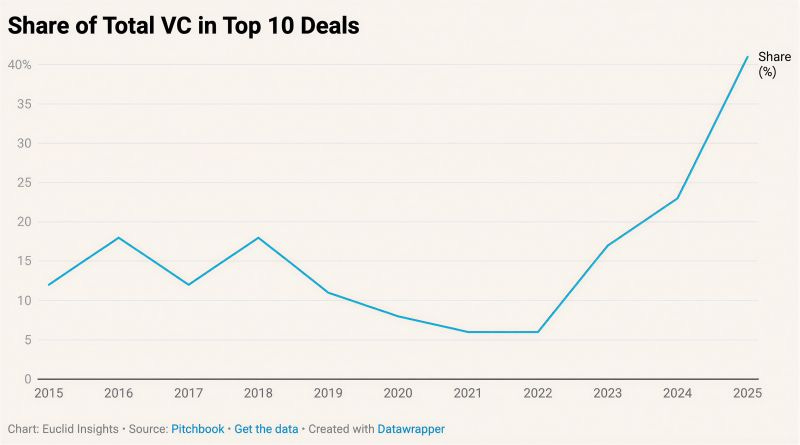

The concentration of VC capital into the top 10 deals, the potential valuation correction Henry Ward warns of, Trump’s ‘ONE RULE’ push for federal AI preemption: these are the real battles. They are fights over how to distribute the 100x gains of Human¹⁰⁰, not whether to prevent them. And the leaders of this wealth creation, Elon, Sam, and all the others, need to understand that they are building not personal wealth but human uplift.

Looking ahead, we should watch three things: whether the productivity value gap closes through new business models, whether open ecosystems can keep pace with consolidated capital, and whether our policy frameworks can be designed for acceleration and universal benefit, not fear.

The progress this week points to is not alien or dehumanizing. It is profoundly, exponentially human. Our task is not to slow it down or talk it small, but to ensure we’re all holding a piece of the amplifier. Human¹⁰⁰ needs to be the framing for an optimistic and determined view of the future.

Essay

America Must Prepare for the Future of War

Nytimes • December 8, 2025

GeoPolitics•Defence•US Military Reform•Future Of War•Cyber Warfare•Essay

Evolving Nature of Warfare

The central argument is that warfare has fundamentally shifted in form, speed and technological underpinnings, and that the existing U.S. military structure is not adequately designed for this new reality. Instead of traditional, large-scale, manpower-heavy conflicts, modern war is increasingly characterized by cyberoperations, autonomous and remotely piloted systems, space-based assets, information warfare and economic and infrastructure disruption. The piece contends that America risks strategic surprise and potential defeat if it continues to rely on legacy assumptions about how wars begin, unfold and are won. Reform is framed not as a marginal optimization but as an urgent redesign of how the United States organizes, equips, trains and commands its forces.

Key Features of the “Future of War”

War is becoming more networked and data-driven, with sensors, drones and satellites feeding real-time information into algorithmic decision-making systems.

Non-kinetic domains such as cyberspace, space, and the information environment play as large a role as land, sea, and air in shaping battlefield outcomes.

Adversaries can inflict major damage—on power grids, communications, financial systems or political stability—without crossing traditional thresholds of open armed attack.

Technology is lowering barriers to entry, enabling smaller states and even nonstate actors to deploy tools like drones, cyberweapons and precision-guided munitions that once required superpower-level resources.

These dynamics weaken the relevance of sheer troop numbers or traditional platform dominance (e.g., tanks, large surface ships) and elevate agility, resilience and technological integration as decisive factors.

Why U.S. Military Reform Is Necessary

The U.S. defense establishment still largely reflects Cold War and post–9/11 counterinsurgency paradigms, with budget priorities favoring big, expensive platforms and long procurement cycles.

Hierarchical command structures and bureaucratic acquisition processes slow down innovation, leaving the U.S. lagging behind the speed at which commercial technology evolves and adversaries adapt.

Training and doctrine remain oriented around conventional battles rather than distributed operations, contested information environments, and persistent cyber and space threats.

The editorial board argues that this mismatch between structure and threat environment creates vulnerabilities that adversaries like China, Russia, Iran or technologically capable nonstate actors can exploit.

Core Elements of Recommended Reform

Modernizing Capabilities

Shift resources from legacy systems to emerging technologies such as autonomous platforms, advanced cyberdefense and offense, AI-enabled analytics, resilient satellite constellations and counter-drone systems.

Invest in rapid, modular procurement that can integrate commercial innovations quickly rather than waiting for decade-long acquisition programs.

Reorganizing and Training for New Domains

Treat cyber, space and information warfare as core theaters of conflict, not supporting functions, with dedicated forces, doctrine and clear lines of authority.

Train service members to operate in highly contested, electronically degraded environments where GPS, communications and centralized command cannot be assumed.

Strengthening Civil-Military and Allied Integration

Coordinate more closely with private-sector technology firms that increasingly drive innovation in AI, cloud computing, communications and space systems.

Deepen cooperation with allies to share intelligence, integrate systems, and present a more coherent deterrent posture in critical regions.

Strategic and Political Implications

The argument carries several broader implications:

Deterrence now depends less on visible mass and more on credible, adaptive capabilities in unseen domains; adversaries must believe the U.S. can respond rapidly and asymmetrically to a wide range of provocations.

Democratic oversight and public understanding of war’s changing nature become more challenging as operations shift into opaque cyber and space arenas, raising questions about transparency, escalation risks and legal frameworks.

Budget debates will become sharper as policymakers confront trade-offs between maintaining existing forces and investing in new technologies and organizational changes that may be politically controversial but strategically necessary.

Conclusion and Call to Action

The piece concludes that the United States faces a choice between proactively reshaping its military for the emerging character of conflict or clinging to outdated structures that deliver a false sense of security. Reform is portrayed as urgent rather than optional: the future of war is already visible in ongoing conflicts and cyber incidents worldwide. By modernizing capabilities, reorganizing around new domains, and integrating technology and alliances more effectively, America can better deter adversaries, protect its infrastructure and values, and reduce the risk that the next major conflict catches it unprepared.

What’s Next After You Lose Someone’s Money

This is going to be big • Charlie O’Donnell • December 11, 2025

Essay•Venture

I recently got hit up for a backchannel reference on a founder I had backed. His company didn’t return anything to investors when it got sold, and I hadn’t heard from him after the sale—so I didn’t know about the new company.

It’s perfectly reasonable to feel a bit awkward after you’ve lost someone’s money, whether they’re an individual angel or a venture capital investor. The fact that it isn’t technically a VC’s own money doesn’t make it any less of a black eye within their firm, right?

The follow-up after a loss might not be a conversation you’re excited to have—but it’s the best thing you can do for your reputation and your growth. Here’s how to have that conversation so these loose ends don’t come back to bite you.

What do I mean by that? Well, it’s a bit awkward to have to respond to a reference check with, “I haven’t heard from them, so I don’t know anything about this new company.” That’s going to make the potential new investor wonder whether we parted on bad terms, or whether the founder has some reason to think I wouldn’t want to speak with them.

That’s the funny thing: most founders wouldn’t imagine I’d want to chat with them after they lost my fund’s money, but as long as they worked hard and did their best, why wouldn’t I? Every startup investor knows going in that the odds of success are low. Do founders really think VCs end up with a broken relationship with every founder who doesn’t deliver a big return, which is most of them?

When you’ve been in the trenches with a founder, watching them fight tooth and nail to make something of your investment, you gain a ton of respect for them, more than you could ever lose over a negative financial outcome. The idea that a VC would rather back a complete stranger than work with you again doesn’t square with how VCs invest. They asked their own investors to give them 30 or 40 shots on goal because they know the first one, two, three, or twenty might not work out.

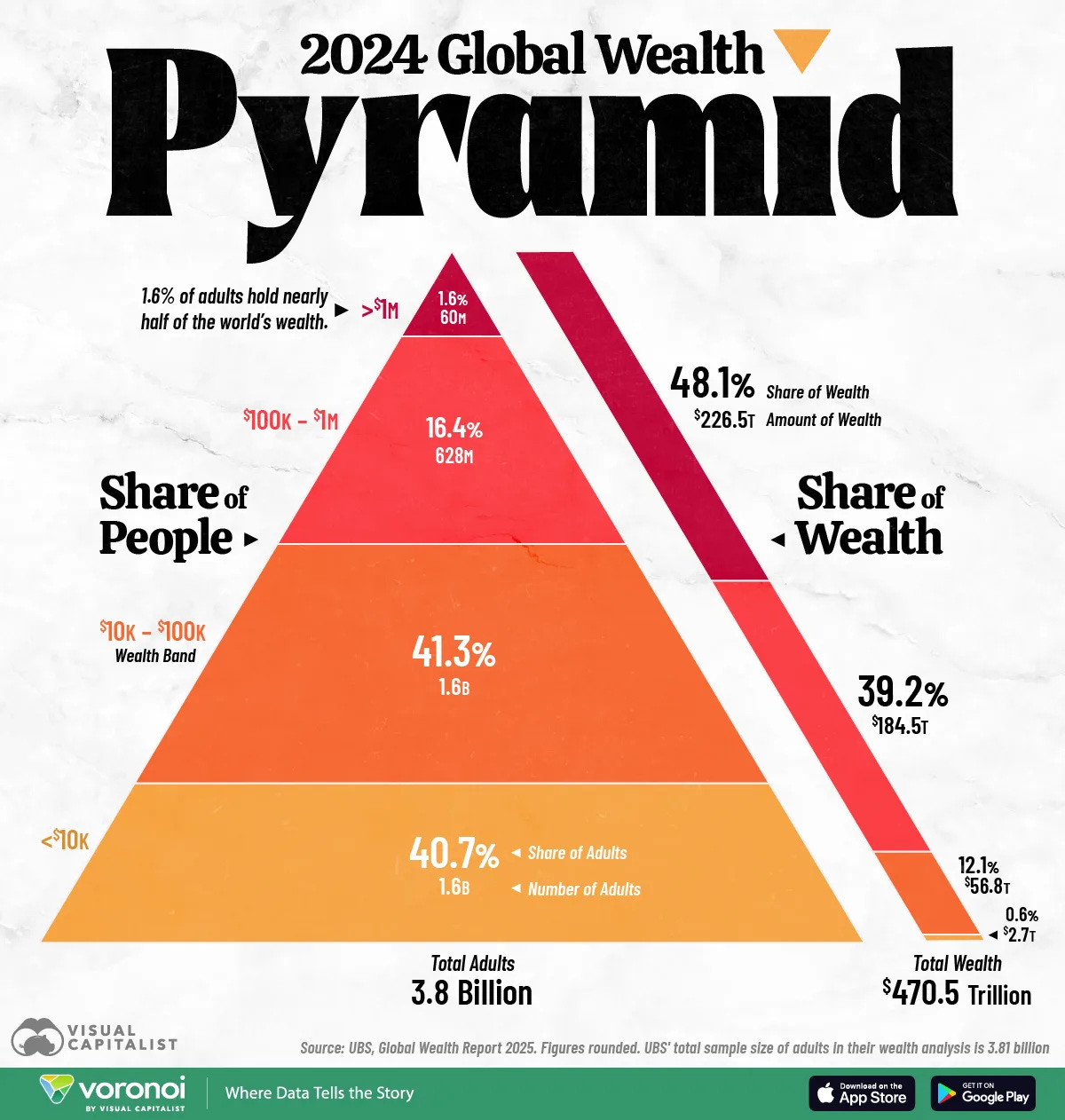

The Global Distribution of Wealth, Shown in One Pyramid

Visualcapitalist • December 9, 2025

Essay•GeoPolitics•Wealth Inequality•Global Wealth Distribution•UBS Global Wealth Report

Visualized: The Global Distribution of Wealth


Key Takeaways

Just 1.6% of adults worldwide hold nearly 48% of global wealth.

About 3.1 billion adults, or 82% of the world’s adult population, control just 12.7% of total wealth.

The bottom wealth tier, for those in the $0-$10k wealth bracket, represents 1.55 billion adults but only 0.6% of global wealth.

The world got richer in 2024, with global personal wealth growing by 4.6%. However, the distribution of that wealth remains uneven.

At the top of the global wealth pyramid sits a small elite holding nearly half of the world’s assets, while billions of people in lower tiers own only a sliver of global wealth.

This infographic uses data from UBS’ latest Global Wealth Report to break down the global wealth pyramid by number of people and the share and amount of wealth they hold.

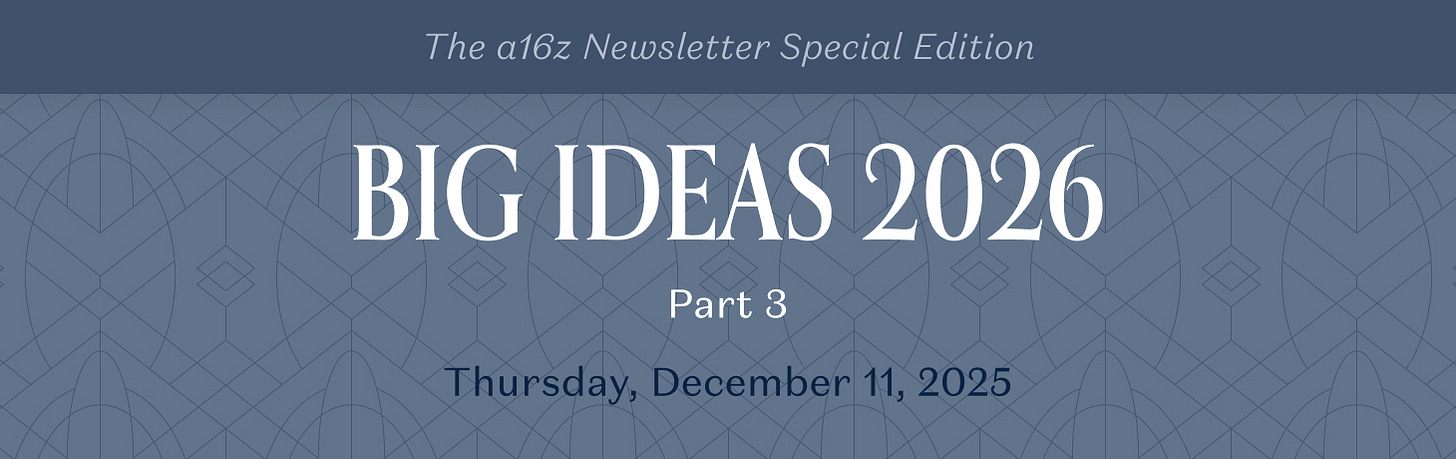

The Data on Wealth Distribution

UBS segments the world’s 3.8 billion adults into four wealth tiers, ranging from those with less than $10,000 to those with more than $1 million, who lie at the top of the global wealth pyramid.

The breakdown below shows how wealth is distributed globally among these four tiers of adults:

At the apex of the pyramid, 60 million adults, who make up just 1.6% of the global adult population, own $226 trillion, or nearly half of all household wealth worldwide.

Beneath the apex, the world’s upper-middle tier (those with $100k–$1M in net worth) includes 628 million adults who collectively hold $184 trillion, representing 39.2% of global wealth.

The largest cohort of adults sits in the middle-lower band: 1.57 billion adults with $10k–$100k, holding a combined $56.8 trillion. Despite accounting for 41% of the world’s adults, this cohort owns only 12% of global wealth.

At the base of the pyramid are 1.55 billion adults—40.7% of the population. Together, they hold $2.7 trillion, or 0.6% of global wealth.
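The tier figures can be cross-checked against the percentages quoted. This sketch derives its totals by summing the four rounded tiers (about 3.81 billion adults and $469.5 trillion) rather than taking totals from UBS directly, so tiny discrepancies reflect rounding in the published numbers.

```python
# Shares of adults and wealth per tier, using the UBS figures quoted in the text:
# (tier, adults in millions, wealth in trillions of USD)
TIERS = [
    ("over $1M", 60, 226.0),
    ("$100k-$1M", 628, 184.0),
    ("$10k-$100k", 1_570, 56.8),
    ("under $10k", 1_550, 2.7),
]


def tier_shares(tiers):
    """Return (tier, share of adults, share of wealth) for each tier.

    Totals are summed from the tiers themselves, so they carry the
    rounding of the published figures.
    """
    total_adults = sum(adults for _, adults, _ in tiers)
    total_wealth = sum(wealth for _, _, wealth in tiers)
    return [
        (name, adults / total_adults, wealth / total_wealth)
        for name, adults, wealth in tiers
    ]


shares = tier_shares(TIERS)
for name, adult_share, wealth_share in shares:
    print(f"{name:>11}: {adult_share:6.1%} of adults, {wealth_share:6.1%} of wealth")

# The bottom two tiers combined (the "82% of adults" in the key takeaways).
bottom_adults = sum(s[1] for s in shares[2:])
bottom_wealth = sum(s[2] for s in shares[2:])
print(f"Bottom two tiers: {bottom_adults:.0%} of adults, {bottom_wealth:.1%} of wealth")
```

The computed wealth shares (about 48.1%, 39.2%, 12.1%, and 0.6%) and the bottom-two-tier figures (about 82% of adults holding 12.7% of wealth) line up with the numbers in the text.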

Breaking Down the Top of the Wealth Pyramid

Of the 60 million adults at the top of the global wealth pyramid, 2,891 individuals are billionaires, collectively holding over $15.6 trillion in wealth.

Of these, just 15 individuals own more than $100 billion each, while another 16 fall in the $50 billion to $100 billion bracket. The remaining 2,860 billionaires each hold less than $50 billion.
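A quick arithmetic check on these figures: the three brackets should sum to 2,891, and dividing the combined $15.6 trillion by that count gives a floor on average billionaire wealth. Treating $15.6 trillion as a lower bound follows the text's "over"; comparing it with the $226 trillion held by the top tier is an illustrative addition, not a UBS statistic.

```python
# Consistency check on the billionaire breakdown quoted in the text.
TOTAL_BILLIONAIRES = 2_891
BRACKETS = {
    "over $100B": 15,
    "$50B-$100B": 16,
    "under $50B": 2_860,
}
COMBINED_WEALTH_USD = 15.6e12  # "over $15.6 trillion", so treat it as a lower bound
TOP_TIER_WEALTH_USD = 226e12   # wealth of the 60 million adults above $1M

# The three brackets should account for every billionaire.
bracket_total = sum(BRACKETS.values())
assert bracket_total == TOTAL_BILLIONAIRES

avg_wealth = COMBINED_WEALTH_USD / TOTAL_BILLIONAIRES
share_of_top_tier = COMBINED_WEALTH_USD / TOP_TIER_WEALTH_USD

print(f"Average wealth per billionaire: at least ${avg_wealth / 1e9:.1f}B")
print(f"Billionaires' share of top-tier wealth: at least {share_of_top_tier:.1%}")
```

The brackets do account for all 2,891 billionaires, who average at least about $5.4 billion each and hold at least about 6.9% of the top tier's wealth.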

Can We Stop Our Digital Selves From Becoming Who We Are?

Nytimes • December 7, 2025

Essay•Media•Attention Economy•Social Media•Digital Identity

How Attention Shapes the Self

The core argument is that what we choose to notice—online and offline—gradually builds who we are. The piece suggests that our “digital selves” are not separate masks but active forces that train our minds, emotions, and relationships. As we repeatedly attend to certain types of content, interactions, and platforms, we reinforce particular habits of thought and feeling, which then influence our offline identity. Rather than asking how to wall off a “real” self from a “digital” self, the article urges us to see attention as a limited, formative resource that must be directed with care.

The Mechanics of Digital Capture

Algorithms are designed to maximize engagement by learning what keeps us looking, not what makes us wise or fulfilled.

Over time, feeds learn our emotional triggers—anger, outrage, envy, or fear—and preferentially show us material that elicits them.

The more we respond to a certain kind of post (for example, political outrage or status comparison), the more the system shows us similar content, gradually narrowing our sense of what is normal or important.

This creates a feedback loop: our attention trains the algorithm, and the algorithm, in turn, trains our attention. The article stresses that this is not simply about “wasting time,” but about shaping our dispositions: whether we are patient or impulsive, generous or suspicious, curious or closed.
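The feedback loop described above can be illustrated with a deliberately simple toy model. Everything in it is hypothetical: three content categories with made-up engagement rates, and a ranker that adds the engagement each category earns (exposure times engagement rate) back into that category's weight. It is not how any real platform ranks content; it only shows why a ranker that rewards engagement drifts toward the most engaging category.

```python
"""Toy model of an engagement-optimized feed (hypothetical numbers throughout)."""

# Hypothetical probability that a user engages with each kind of post.
ENGAGEMENT_RATE = {"outrage": 0.30, "news": 0.10, "long_form": 0.05}


def simulate_feed(rounds: int, learning_rate: float = 1.0) -> list[dict[str, float]]:
    """Return the feed mix (share of impressions per category) for each round."""
    weights = {category: 1.0 for category in ENGAGEMENT_RATE}  # start with an even mix
    history = []
    for _ in range(rounds):
        total = sum(weights.values())
        history.append({c: w / total for c, w in weights.items()})
        for category in weights:
            # Engagement earned is proportional to exposure (the weight) times the
            # engagement rate; the ranker feeds that engagement back into the weight.
            weights[category] += learning_rate * weights[category] * ENGAGEMENT_RATE[category]
    return history


history = simulate_feed(rounds=11)
for label, mix in (("start", history[0]), ("after 10 rounds", history[-1])):
    print(label, {c: round(share, 2) for c, share in mix.items()})
```

With these made-up rates, outrage grows from a third of the feed to roughly three quarters after ten rounds, even though no one chose that outcome: the user's clicks trained the ranker, and the ranker then decided what the user saw.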

Digital Selves as Habit Machines

Each platform encourages a specific “micro‑self”: the witty poster, the hot‑take commentator, the aesthetic curator, the relentless networker.

Performing these roles repeatedly builds habits: we learn to think in tweet‑length sound bites, to scan experiences for their photo potential, or to judge news by how well it will perform socially.

These habits do not stay confined to the screen; they leak into everyday conversation, perception, and even memory, influencing how we interpret events and how we see other people.

The article argues that our digital personas are less like costumes and more like training regimens: what we rehearse, we become. Even if we feel detached or ironic about our online self, the repetition of behaviors still engrains patterns in us.

Responsibility and Structural Power

On one hand, individuals are urged to take responsibility for their attention: to notice what kinds of content leave them drained, anxious, or brittle, and to deliberately shift away from those patterns.

On the other hand, the piece emphasizes that this is not purely a matter of willpower: platform design, default settings, and opaque recommendation systems exert enormous influence.

Frictionless design—endless scroll, autoplay, persistent notifications—lowers the cost of surrendering attention and raises the cost of resisting.

Thus, the question “Can we stop our digital selves from becoming who we are?” becomes partly a political and regulatory question about what kinds of attention economies we permit, and partly an ethical question about what we practice daily.

Strategies for Reclaiming Attention

Deliberate constraints: time‑boxed use, device‑free spaces, or following fewer accounts to widen and slow the feed.

Reorientation: seeking content that deepens perspective—long‑form writing, diverse viewpoints, or creators who reward patience rather than outrage.

Reflective practices: noticing after a session how one feels—stressed, resentful, energized, inspired—and treating that as data about what to change.

The article frames these not as purity rituals but as ways of aligning our digital behaviors with the kinds of people we hope to become.

Implications for Identity and Society

Individually, the piece suggests that guarding attention is an act of self‑authorship: by deciding what deserves our sustained focus, we decide which parts of us grow. Socially, pockets of collective attention—subcultures, fandoms, political tribes—coalesce into shared realities that may be hard to bridge. If large groups spend most of their digital time in outrage‑optimized environments, institutions, public discourse, and trust itself are reshaped. The concluding message is not that we must abandon digital life, but that we must treat attention as the medium from which both our inner lives and our common world are made—and act accordingly.

Opinion | Is AI Making Us Dumb?

Wsj • Andy Kessler • December 7, 2025

Essay•Education•AI and Learning•Critical Thinking•Moral Panic

Central Argument

The piece argues that fears about artificial intelligence “making us dumb” are misplaced and function largely as a distraction from deeper, longstanding failures in the education system. Rather than seeing AI as a corrosive force on human intelligence, the article frames it as a tool—powerful, fallible, and value-neutral—that exposes gaps in how we teach people to think, write, and reason. Moral panic over new technology, the author suggests, has accompanied everything from calculators to the internet, and in each case the real issue has been whether schools adapt to teach higher-order skills instead of rote tasks that machines can now do better.

Technology Panic vs. Educational Reality

The article situates current anxiety about AI alongside historical reactions to earlier technologies: calculators supposedly eroding math skills, spellcheck weakening spelling, search engines undermining memory.

In each episode, predictions that the technology would “dumb down” society failed to materialize; instead, people shifted to using tools to offload routine work and focus on more complex problems.

The author contends that blaming AI for intellectual decline is easier than confronting uncomfortable evidence of poor educational outcomes, such as weak reading comprehension, limited numeracy, and superficial writing skills.

These failings predate AI and reflect systemic problems in curricula, teacher training, incentives, and cultural expectations around effort and rigor.

AI as a Mirror of Human Weaknesses

The article treats generative AI as a mirror that reflects both our strengths and our deficits.

When students lean on AI to write essays, it reveals that many were never taught to structure arguments, think critically, or revise thoughtfully in the first place.

Concerns that AI-generated text will flood the world with mediocrity highlight a prior reality: much human-produced writing is already formulaic and shallow.

Rather than calling AI inherently “dumbing,” the author suggests it reveals the low bar our institutions have long tolerated in reading, writing, and analytical skills.

What Education Should Do Differently

The article calls for education systems to shift decisively toward:

Teaching critical thinking, logic, and argumentation rather than memorization.

Emphasizing original thought, skepticism, and the ability to interrogate sources, including AI outputs.

Training students to treat AI as a starting point or assistant, not an authority or substitute for thought.

Assignments and assessments need to evolve: open-ended projects, oral defenses, in-class problem solving, and tasks that require personal insight or real-world application become more important if AI can handle boilerplate responses.

Teachers should explicitly integrate AI into pedagogy: show its errors, biases, and limitations, and have students critique and improve AI-generated content.

Autonomy, Incentives, and Personal Responsibility

The argument stresses that human agency remains central: people choose whether to outsource thinking or to use tools to think better.

AI can tempt users into intellectual laziness, but so can television, social media, or any convenience technology; the key is discipline, expectations, and incentives.

When grades, jobs, or reputations reward depth, originality, and correctness, individuals will be motivated to go beyond generic AI answers.

The author implies that cultivating self-discipline and intrinsic curiosity matters more than banning or fearing AI tools.

Implications and Broader Impact

The article concludes that AI is unlikely to “make us dumb” on its own; instead, it will widen the gap between:

Those who use it as a cognitive amplifier—fact-checking, brainstorming, modeling, and accelerating learning.

Those who treat it as a crutch and accept unexamined outputs.

This divergence amplifies the urgency of reforming education so that more people learn how to question, verify, and build on AI rather than substitute it for real understanding.

Ultimately, blaming AI serves as an excuse to avoid the harder work of fixing a failing school system and raising expectations for intellectual effort. The real danger is not the technology, but our willingness to settle for shallow thinking when far better is possible.

2026

Big Ideas 2026: Part 2

A16z • a16z New Media • December 10, 2025

Venture•2026

This article presents a collection of forward-looking predictions from venture capital investors, focusing on the transformative impact of artificial intelligence across the industrial and consumer application landscapes in the coming year. The central thesis is that AI is moving beyond digital automation to fundamentally reshape physical industries, redefine enterprise workflows, and create new consumer paradigms, marking a shift from software that “ate the world” to software that will “move it.”

American Dynamism: AI Rebuilds the Physical World

The contributors from the American Dynamism team envision a renaissance of the American industrial base, powered by AI-native and software-first approaches. David Ulevitch argues that the most important shift is the rise of companies that start with simulation, automated design, and AI-driven operations to build next-generation energy, manufacturing, logistics, and infrastructure. This is not about modernizing the past but constructing what comes next.

Key sub-themes within this industrial transformation include:

The Factory Mindset: Erin Price-Wright predicts that builders will apply a factory mindset, emphasizing scale, repeatability, and modular deployment of AI and autonomy, to complex sectors like nuclear energy, housing construction, and data center build-out.

Physical Observability: Zabie Elmgren foresees a revolution in “physical observability,” where billions of networked cameras and sensors create a real-time, AI-native fabric to monitor and manage cities and critical infrastructure, enabling advanced robotics and autonomy.

The Electro-Industrial Stack: Ryan McEntush introduces the concept of the “electro-industrial stack”—the integrated technologies from minerals to software that power electric machines. He warns that national leadership in the next industrial era depends on mastering this hardware foundation.

The Data Crusade: Will Bitsky identifies a coming “crusade for data” within critical industries. He posits that industrial companies possess a comparative advantage in generating valuable, process-oriented training data from their physical operations, which will become a new strategic asset.

Apps: AI Becomes Invisible and Integral

The Apps team shifts focus to how AI integration will evolve within software and services, moving from visible tools to embedded, proactive systems.

Business Model Reinforcement: David Haber emphasizes that the best AI startups will amplify their customers’ core economics, driving revenue rather than just cutting costs, by aligning deeply with customer incentives.

New Distribution and Interfaces: Anish Acharya highlights ChatGPT’s evolution into a major distribution channel and “AI app store” for consumer products, while Marc Andrusko predicts the “death of the prompt box,” with AI becoming proactive, invisible scaffolding within workflows.

Agentic Workflows and Enterprise Orchestration: Olivia Moore and Seema Amble detail the expansion of AI agents from single-task solutions to managers of entire multi-modal workflows and customer relationship cycles. Amble notes this will force large enterprises to create new roles like “AI workflow designers” and invest in “systems of coordination” to manage fleets of digital workers.

Industry-Specific Rebuilding: Angela Strange argues that AI will only transform sectors like banking and insurance when the underlying infrastructure is rebuilt to be AI-native, leading to merged categories and dramatically larger market winners.

Consumer Shift: Bryan Kim predicts a major pivot in consumer AI from “help me” (productivity) to “see me” (connectivity), using multimodal data to build products that foster stronger personal relationships and self-understanding.

The overarching conclusion is that 2026 will be a pivotal year where AI transitions from a promising technology to the foundational layer of both the physical economy and digital enterprise. Success will belong to those who build trust into physical observability, exploit new data and distribution channels, and have the courage to rebuild legacy systems from the ground up with AI as the core operating principle.

SpaceX Could Lead $2.9 Trillion in Private Valuation to Market

Bloomberg • Bailey Lipschultz • December 10, 2025

Venture•2026

A potential initial public offering (IPO) for SpaceX is viewed by market analysts as a watershed event that could unlock a wave of other highly valued private companies to follow suit. The article argues that SpaceX, as a “centicorn” valued at over $100 billion, could act as a catalyst, freeing up an estimated $2.9 trillion in private company valuation to eventually enter the public markets. This figure is based on an analysis of the 30 largest private, venture-backed companies in the United States.

The Centicorn Bottleneck and Market Impact

The current market has seen a significant backlog of large, mature private companies, often called “centicorns” or “decacorns,” that have delayed public listings. These delays have been driven by a combination of ample private capital, regulatory complexity, and volatile public market conditions. A successful SpaceX IPO would demonstrate that public investors are willing to support and value companies with complex, capital-intensive, long-term business models such as space exploration and satellite internet.

The sheer size and prominence of a SpaceX listing would likely improve overall market sentiment toward new issuances.

It would provide a recent, high-profile comparable valuation for other companies in adjacent sectors like aerospace, advanced manufacturing, and deep-tech.

The event could create a “proof of concept” for taking visionary, founder-led companies with transformative goals public.

A Shift in the IPO Landscape

The analysis suggests that the IPO market has been missing these flagship, growth-oriented technology companies, which has had a dampening effect on the entire sector. The successful debut of a company like SpaceX could reignite investor appetite for growth and innovation, shifting focus back from purely profitability-driven narratives. This would be particularly impactful for companies in sectors that require significant upfront investment before reaching sustained profitability.

Furthermore, the article notes that many late-stage private companies and their investors are awaiting a clear signal from the market. A strong performance by SpaceX could provide the confidence needed for other centicorns in fields such as artificial intelligence, financial technology, and biotechnology to accelerate their own IPO timelines. The influx of such companies would significantly broaden the investment opportunities available to public market participants.

Implications for Investors and the Economy

The unlocking of $2.9 trillion in private value would represent a major liquidity event for early investors, employees with equity, and the companies themselves. This capital could be recycled into new ventures, fueling further innovation. For public markets, it would mean access to a new generation of industry-defining companies that have matured outside of the traditional IPO cycle.

However, the article also implies that this potential wave is contingent on the specific success of the SpaceX offering. Any stumble could prolong the private market logjam. The central thesis is that the SpaceX IPO is not just another market listing; it is positioned as a potential key that unlocks the next phase of growth companies transitioning to public ownership, reshaping the landscape for investors and the economy at large.

Enterprise Will Be a Top OpenAI Priority In 2026, Sam Altman Tells Editors at NYC Lunch

Bigtechnology • Alex Kantrowitz • December 11, 2025

AI•Business•OpenAI•Enterprise•Competition•2026

OpenAI CEO Sam Altman told a room full of editors and news CEOs this week that OpenAI will prioritize selling AI to businesses in 2026.

Altman lunched at Rosemary’s Midtown with leaders from The Atlantic, The New Yorker, The New York Times, and other top national publications on Monday. The conversation, wide-ranging and at times unwieldy, featured a charming and disarming Altman speaking candidly about himself, his business, and plans for the coming year.

Altman’s plans for OpenAI’s enterprise push were the biggest revelation from the lunch. Under Altman, OpenAI has excelled at building consumer products, with ChatGPT approaching 900 million weekly users. But the company has faced fierce competition when selling its AI models to businesses, primarily from Anthropic, which is leading the enterprise AI market.

At the lunch, Altman made clear that selling to enterprises was a major OpenAI priority, and said the challenge the company needed to solve there was an application problem, not a training problem. He was straightforward about OpenAI’s need to build better products for enterprises and his intent to fast-track them.

For OpenAI, growing its enterprise business could be the surest way to scale revenue as it pursues one of history’s great infrastructure buildouts. Enterprise AI is the fastest-growing software category in history, expected to bring in $37.5 billion next year, according to Gartner, up from almost zero in 2022.

Should OpenAI make inroads in the category, it could more easily justify new funding rounds and better support its push to build $1.4 trillion of computing infrastructure in the coming years. Notably, OpenAI’s October agreement with Microsoft, which added some distance between the companies, gives it greater leeway to build for enterprises.

Altman also addressed the ‘Code Red’ within OpenAI following the emergence of Google’s Gemini as a competitor. Altman has said Google’s AI model surpassed OpenAI’s GPT models in some areas, but at the lunch he pushed back on the notion that the latest Gemini model was an existential threat to OpenAI. The company has been through multiple code reds in its history, Altman said, and this one would end soon.

Big Ideas 2026: Part 1

A16z • December 9, 2025

AI•Tech•Agentic Infrastructure•Personalization•World Models•2026

Overview: Big Problem Spaces for 2026 Builders

The piece outlines major problem spaces a16z partners expect founders to tackle in 2026 across infrastructure, growth, bio/health, and games/consumer. The unifying theme is AI moving from point tools to deeply embedded systems that reshape data infrastructure, security, creative work, enterprise software, healthcare, education, and interactive worlds. Many predictions hinge on agents—autonomous AI systems—shifting load patterns in infrastructure, redefining how we build products, and changing what counts as value or engagement.

Infrastructure: From Unstructured Chaos to Agent-Native Systems

Multimodal data entropy as core bottleneck: Enterprises are drowning in PDFs, screenshots, logs, emails, videos, and other “semi-structured sludge.” This unstructured universe holds ~80% of corporate knowledge, but its decay in freshness, structure, and truth throttles AI performance, causing RAG hallucinations, fragile agent workflows, and heavy human QA. Startups that continuously clean, structure, reconcile, validate, and govern multimodal data become “keys to the kingdom” for use cases like contract analysis, onboarding, claims, compliance, engineering search, and sales enablement.

AI-automated cybersecurity work: Cybersecurity has long faced millions of unfilled roles because skilled technicians are stuck in “soul-crushing” level 1 work reviewing floods of alerts and logs. AI-native tools that automate repetitive triage, correlation, and response break the vicious cycle where tools detect everything and humans review everything. This frees security teams to “chase down bad guys,” design systems, and address deep vulnerabilities rather than drown in low-level noise.

Agent-native infrastructure as table stakes: Legacy backends were built for predictable, human-speed, 1:1 action-response patterns. Agents trigger recursive, bursty “thundering herds” of thousands of sub-tasks and API calls that resemble DDoS attacks to old systems. 2026 infrastructure must shrink cold starts, collapse latency variance, and massively increase concurrency. The true bottleneck becomes coordination—routing, locking, state management, and policy enforcement across huge parallel execution—defining winners in the agent era.
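One common way backends absorb this kind of bursty fan-out is to cap how many sub-tasks may hit a downstream service at once, turning a “thundering herd” into a bounded queue. The sketch below illustrates that idea with an asyncio semaphore; it is a minimal illustration, not anything a16z describes, and `call_tool`, `run_fanout`, and the concurrency numbers are hypothetical stand-ins.

```python
import asyncio
import random


# Hypothetical stand-in for a downstream API an agent calls; not a real service.
async def call_tool(task_id: int, stats: dict) -> int:
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    try:
        # Simulate the variable latency of a backend call.
        await asyncio.sleep(random.uniform(0.001, 0.005))
        return task_id * 2
    finally:
        stats["in_flight"] -= 1


async def run_fanout(num_subtasks: int, max_concurrency: int) -> tuple[list[int], int]:
    """Fan out many agent sub-tasks while capping how many hit the backend at once."""
    limiter = asyncio.Semaphore(max_concurrency)
    stats = {"in_flight": 0, "peak": 0}

    async def guarded(task_id: int) -> int:
        # Each sub-task waits for a free slot, so a burst becomes a bounded queue.
        async with limiter:
            return await call_tool(task_id, stats)

    # gather returns results in the same order the sub-tasks were submitted.
    results = await asyncio.gather(*(guarded(i) for i in range(num_subtasks)))
    return list(results), stats["peak"]


if __name__ == "__main__":
    results, peak = asyncio.run(run_fanout(num_subtasks=1000, max_concurrency=50))
    print(len(results), peak)  # 1000 results; peak in-flight calls never exceeds 50
```

A semaphore only addresses raw concurrency; the routing, locking, and policy enforcement the piece calls the true bottleneck would sit on top of a limiter like this.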

Multimodal creative tools: Early products like Kling O1 and Runway Aleph hint at a future where creators can feed models videos, reference images, voices, and motion clips and ask for precise extensions, reshoots from new angles, or consistent characters across scenes. The big opportunity is marrying powerful multimodal models with interfaces that give director-level control, enabling everything from meme creators to Hollywood studios to rely on AI as a core creative medium.

AI-native data stack evolution: The “modern data stack” is consolidating into unified platforms (e.g., Fivetran/dbt, Databricks), but a truly AI-native architecture is still emerging. Key fronts include: integrating performant vector stores with traditional structured data; enabling agents to solve the “context problem” by finding the right semantic layers and business definitions; and transforming BI tools and spreadsheets as workflows become more automated and agentic.

Growth: Enterprise Software, Agents, and New KPIs

Systems of record lose primacy: AI that can read, write, and reason across operational data turns ITSM/CRM from passive databases into active, autonomous workflow engines. The strategic locus shifts from the database to the intelligent agent layer that anticipates, coordinates, and executes end-to-end processes; systems of record become commoditized persistence tiers.

Vertical AI enters “multiplayer mode”: Vertical AI has already driven $100M+ ARR in domains like healthcare, legal, and housing. After information retrieval and reasoning, 2026 brings multi-agent, multi-party workflows. Vertical software, steeped in domain-specific interfaces and regulations, orchestrates agents for buyers, sellers, tenants, advisors, vendors, and partners. This coordination—negotiating within constraints, routing to specialists, syncing changes, and learning from expert markups—unlocks higher task success rates and strong network effects.

Designing for agents, not humans: As agents become primary consumers of web and app content, traditional optimization (SEO, UX, visual hierarchy, hooks) gives way to machine legibility. Agents won’t miss the critical insight buried on page five; they’ll extract and interpret telemetry, CRM data, and logs, then post concise insights where humans operate (e.g., Slack). Content and software must be structured for retrieval, reasoning, and interoperability rather than just human reading.

The end of screen-time as a KPI: AI applications increasingly deliver value with minimal attention or interaction—e.g., DeepResearch queries, AI clinical note tools like Abridge, AI coding tools like Cursor, or AI-driven financial analysis. This breaks the 15-year paradigm where screen time or click volume signaled value. New pricing and ROI measurement must account for outcomes like doctor satisfaction, developer productivity, analyst wellbeing, and user happiness, rewarding vendors that explain impact simply and credibly.

Bio + Health: The Rise of “Healthy MAUs”

A new healthcare segment, “healthy MAUs,” emerges: people who are not acutely sick but want ongoing monitoring, insights, and preventive care. Traditional reimbursement models and insurance have favored treatment over prevention, leaving “healthy MAUs” underserved until they become high-cost patients.

With AI dramatically lowering care delivery costs, novel prevention-focused insurance products, and consumer willingness to pay subscriptions, startups and incumbents can serve this large, recurring, data-rich segment. Continuous engagement, proactive insights, and personalized plans become core to healthtech growth.

Speedrun: World Models, Personalization, and AI-Native Education

World models as a new storytelling medium: Tools like Marble and Genie 3 generate interactive 3D worlds from text prompts, foreshadowing a “generative Minecraft” where players co-create evolving universes via language (e.g., “create a paintbrush that turns anything pink”). These shared, programmable spaces enable new genres, digital economies, and training grounds for AI agents and robots, blurring lines between creator and player.

“The year of me” and hyper-personalization: In 2026, products across education, health, and media pivot from optimizing for the average consumer to optimizing for each individual. AI tutors adapt to each student’s pace and curiosity; personalized health stacks tailor routines to one’s biology; media remixes news and stories into feeds tuned to unique interests and tone. The next generation of giants will win by “finding the individual inside the average.”

The first AI-native university: Beyond incremental tools, an AI-native university is envisioned as an adaptive organism: courses, advising, research collaboration, and operations continuously reconfigure via data and AI. Schedules self-optimize; reading lists update nightly with new research; learning paths adapt in real time. Precedents like ASU’s OpenAI partnership and SUNY’s AI literacy requirements hint at this future. Faculty become architects of learning systems, and assessment shifts to grading how students use AI. Graduates emerge fluent in orchestrating and governing AI, fueling the broader AI-driven economy.

Critical Takeaways and Implications

AI and agents are forcing a re-architecture of core infrastructure (data, backends, security) for scale, context, and coordination.

Enterprise value is migrating from static systems of record and human attention metrics to autonomous execution layers and outcome-based ROI.

Healthcare and education are poised for structural change, with continuous, preventive, and personalized models at the center.

New creative and interactive mediums—multimodal tools, world models, generative worlds—will create both cultural shifts and new economic frontiers.

Founders who build for agents, personalization, and multiplayer coordination are best positioned to define the 2026–2030 landscape.

Big Ideas 2026: Part 3

A16z • a16z New Media • December 11, 2025

Crypto•Blockchain•Stablecoins•Tokenization•AI•2026

This article presents a collection of 17 forward-looking predictions from a16z crypto partners and guest contributors on the key trends and innovations expected to shape the cryptocurrency and blockchain space in 2026. The forecasts span a wide range of topics, including privacy, AI integration, stablecoins, tokenization, security, and the evolving regulatory landscape, painting a picture of a maturing industry moving beyond speculation toward foundational infrastructure for the internet.

Privacy as a Critical Moat and Network Effect

Ali Yahya argues that privacy will become the most important differentiator for blockchains, creating a powerful “privacy network effect.” He posits that while bridging public assets is trivial, bridging secrets between chains is difficult and leaks metadata. This creates significant lock-in, as users on a private chain are less likely to move and risk exposure. Consequently, a handful of privacy-focused chains could capture most of the crypto market value, as privacy is deemed essential for real-world financial applications.

The Intersection of AI, Crypto, and New Economic Models

Several predictions focus on the convergence of AI and crypto. Andy Hall foresees prediction markets becoming “bigger, broader, and smarter,” leveraging AI agents for trading and analysis and using crypto for decentralized governance and proof-of-human verification. Scott Kominers anticipates AI being used for substantive research, enabling a new “polymath” style that harnesses AI “hallucinations” within layered agent workflows, a process that will require crypto for model interoperability and compensation.

Furthermore, Liz Harkavy warns of an “invisible tax on the open web,” where AI agents extract value from content without supporting the ad-based revenue models that fund it. The solution proposed is a shift to real-time, usage-based compensation systems, potentially powered by blockchain micropayments. Sean Neville identifies a related bottleneck: the shift from “Know Your Customer” (KYC) to “Know Your Agent” (KYA). He notes that with non-human identities vastly outnumbering human ones in finance, cryptographically signed credentials will be essential for agents to transact reliably.
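To make “Know Your Agent” concrete, the sketch below binds an agent to the principal it acts for, with a spending cap and an expiry, and lets a counterparty check that the claims were not altered. It is an illustration under stated assumptions, not Neville’s proposal: it uses a symmetric HMAC tag from Python’s standard library as a stand-in for the public-key signatures a real credential scheme would more likely use, and `ISSUER_KEY`, `issue_credential`, and `verify_credential` are hypothetical names.

```python
import hashlib
import hmac
import json
import time

# Hypothetical shared secret held by a credential issuer. A production KYA scheme
# would more likely use public-key signatures so verifiers never hold this key.
ISSUER_KEY = b"demo-issuer-secret"


def _tag(claims: dict) -> str:
    # Canonical JSON (sorted keys) so issuer and verifier hash identical bytes.
    payload = json.dumps(claims, sort_keys=True).encode()
    return hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()


def issue_credential(agent_id: str, principal: str, spend_limit: int, ttl_s: int = 3600) -> dict:
    """Bind an agent to the person or firm it acts for, with a spending cap and expiry."""
    claims = {
        "agent_id": agent_id,
        "principal": principal,
        "spend_limit": spend_limit,
        "expires_at": int(time.time()) + ttl_s,
    }
    return {"claims": claims, "sig": _tag(claims)}


def verify_credential(cred: dict, amount: int) -> bool:
    """Accept a transaction only if the tag matches, the credential is live, and the amount fits."""
    if not hmac.compare_digest(_tag(cred["claims"]), cred["sig"]):
        return False  # claims were altered or tagged with a different key
    if cred["claims"]["expires_at"] < time.time():
        return False  # credential has expired
    return amount <= cred["claims"]["spend_limit"]
```

For example, a credential issued with `issue_credential("agent-7", "acme-corp", 500)` would pass `verify_credential(cred, 200)` but fail for an amount of 800, and any edit to its claims invalidates the tag.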

The Evolution of Finance: Stablecoins, Tokenization, and Wealth Management

The financial infrastructure of crypto is predicted to deepen. Guy Wuollet calls for more “crypto-native” thinking in tokenizing real-world assets (RWAs), favoring synthetic representations like perpetual futures over skeuomorphic tokenization. He also advocates for the onchain origination of debt assets rather than the tokenization of offchain loans. Jeremy Zhang and Sam Broner highlight the critical need for better stablecoin on/off ramps and their role in modernizing legacy banking systems. Stablecoins, which processed an estimated $46 trillion in volume—nearly 3x Visa’s volume—offer a way to innovate without replacing decades-old core banking software.

Maggie Hsu envisions “wealth management for all,” where tokenization and crypto rails enable personalized, actively managed portfolios for everyone, not just high-net-worth individuals. This includes easier access to tokenized private market assets and automated rebalancing across a tokenized portfolio spectrum.

Security, Decentralization, and Regulatory Clarity

On security, Daejun Park predicts a shift from “code is law” to “spec is law,” advocating for the use of AI-assisted tools to prove and enforce global invariants in DeFi protocols as a guardrail against exploits. Shane Mac argues for decentralized, quantum-resistant messaging protocols, stating that without decentralization, even unbreakable encryption can be switched off by a central authority.
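The “spec is law” idea rests on writing down global properties a protocol must never violate and checking them against every state transition. The sketch below shows the simplest runtime version of that on a toy deposit pool: after each random operation it re-checks that no balance is negative and that the pool’s reserves equal the sum of user balances. This is a swapped-in technique (randomized runtime invariant checking, not the formal proofs Park describes), and `ToyPool`, `check_invariants`, and `fuzz` are hypothetical.

```python
import random


class ToyPool:
    """Hypothetical minimal deposit pool, used only to illustrate invariant checks."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}
        self.reserves = 0  # tokens actually held by the pool

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balances[user] = self.balances.get(user, 0) + amount
        self.reserves += amount

    def withdraw(self, user: str, amount: int) -> None:
        if amount <= 0 or self.balances.get(user, 0) < amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[user] -= amount
        self.reserves -= amount


def check_invariants(pool: ToyPool) -> None:
    """The 'spec': global properties that must hold after every operation."""
    if any(b < 0 for b in pool.balances.values()):
        raise AssertionError("negative balance")
    if pool.reserves != sum(pool.balances.values()):
        raise AssertionError("reserves do not match total user balances")


def fuzz(pool: ToyPool, steps: int = 10_000, seed: int = 0) -> int:
    """Apply random operations, re-checking the spec after each; return ops accepted."""
    rng = random.Random(seed)
    users = ["alice", "bob", "carol"]
    accepted = 0
    for _ in range(steps):
        user, amount = rng.choice(users), rng.randint(-5, 100)
        op = pool.deposit if rng.random() < 0.5 else pool.withdraw
        try:
            op(user, amount)
            accepted += 1
        except ValueError:
            pass  # rejected operations must also leave the invariants intact
        check_invariants(pool)
    return accepted
```

Random testing can only find violations it happens to hit; the appeal of the tools described above is proving that no sequence of operations can break the spec.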

Finally, Miles Jennings points to potential U.S. crypto market structure legislation as a pivotal moment for 2026. He argues that clear regulation would eliminate the “legal contortions” founders have faced, allowing blockchain networks to finally operate as designed—open, autonomous, and decentralized—unleashing their full technical potential.

The State of AI: life in 2030

Ft • December 8, 2025

AI•Tech•Automation•Inequality•FutureOfWork•2026

Overview: A 2030 Shaped by AI Everywhere, but Not for Everyone

The article envisions daily life in 2030 as deeply infused with artificial intelligence, from transport and healthcare to education and entertainment. It argues that AI will be less a visible “wow” technology and more a pervasive infrastructure, comparable to electricity or the internet. At the same time, it stresses that this AI-rich world will be sharply unequal, creating clear divides between those who can access, understand and shape AI systems and those who are largely subject to them. The central tension is between unprecedented convenience and productivity on one side, and new forms of dependency, surveillance and economic stratification on the other.

Robots, Robotaxis and the Automation of Everyday Mobility

Autonomous vehicles and fleets of robotaxis are portrayed as routine in many major cities by 2030, reducing the need for private car ownership and changing urban design around pick-up hubs and logistics.

Small delivery robots, warehouse bots and domestic helpers manage a spectrum of physical tasks, from last‑mile deliveries to basic home chores, particularly for affluent households and aging populations.

The piece notes that while accidents and regulatory disputes continue, overall safety records of autonomous systems surpass those of human drivers, reinforcing political and commercial momentum.

Public transport is increasingly orchestrated by AI for dynamic routing and predictive maintenance, making mobility more efficient but also more data‑intensive and dependent on a handful of large technology providers.

Work, Productivity and the New Division of Labour

Generative AI tools capable of coding, drafting documents, and producing media are embedded in most professional software by 2030, acting as “first‑draft workers” across white‑collar industries.

Routine cognitive tasks in law, accounting, marketing and customer service are heavily automated, compressing traditional career ladders: junior roles shrink, while demand grows for a smaller number of high‑skill “AI supervisors” and domain experts.

Manual and care work are less transformed: robots assist but do not fully replace cleaners, construction workers or caregivers, leaving many of the lowest‑paid jobs intact rather than eliminated.

Productivity statistics rise, but wage gains and job security disproportionately accrue to people who can design, deploy or manage AI systems, widening professional inequality.

AI Haves and Have-Nots: Economic and Social Inequality

The article emphasizes that powerful foundation models and robotic platforms are controlled by a small set of corporations and governments, creating an “AI elite” with privileged access to compute, data and talent.

Wealthy individuals and advanced economies use AI as a force multiplier—optimizing investments, education, healthcare and security—while poorer communities and countries rely on cheaper, more constrained AI services.

This disparity manifests in everyday experiences: premium AI tutors, health triage systems and personalized financial advisors for the rich, versus generic, ad‑driven or surveillance‑heavy tools for the rest.

The author suggests that AI will amplify existing structural divides (income, education, infrastructure) rather than automatically bridging them, unless deliberate redistribution and regulation are implemented.

Governance, Surveillance and the Struggle for Control

Governments increasingly depend on AI for welfare administration, policing, border control and national security, making algorithmic decision-making central to state power.

The piece warns of “soft coercion” through scoring systems, predictive policing and automated eligibility checks that can be opaque and hard to challenge, especially for marginalized populations.

Corporate surveillance also intensifies: workplaces use AI to track productivity and behavior, while consumer platforms profile users in real time for dynamic pricing and targeted content.

Regulatory efforts exist—requirements for transparency, auditability and limits on high‑risk applications—but enforcement is uneven across jurisdictions, leading to a fragmented global landscape of AI rights and protections.

Culture, Human Agency and Everyday Life

AI‑generated media—music, video, games, news summaries—becomes the default for much casual consumption, raising questions about authenticity and the dilution of human-made culture.

Personalized “AI companions” and chatbots offer emotional support, entertainment and practical assistance, particularly to the elderly and socially isolated, blurring lines between tool and relationship.

Education leans heavily on adaptive tutoring systems that tailor content to each student, improving outcomes for some but risking over‑standardization and data‑driven labeling of children from an early age.

The article concludes that by 2030 the key issue will not be whether AI is powerful or pervasive (it will be), but who sets the terms of its deployment, who captures the gains, and how much meaningful agency ordinary people retain in AI‑mediated systems.

Bloomberg News Now: Spacex Seeks $1.5T IPO Valuation

Youtube • Bloomberg Podcasts • December 9, 2025

Venture•2026

The central focus of the content is a report that SpaceX, the aerospace manufacturer and space transportation company founded by Elon Musk, is seeking a staggering $1.5 trillion valuation for a potential initial public offering (IPO). This figure represents an unprecedented ambition for a private company and would instantly position SpaceX as one of the most valuable public companies in the world, rivaling or surpassing the market capitalizations of tech giants like Apple and Microsoft at various points in their history. The discussion frames this not just as a financial milestone but as a pivotal moment for the space industry and public markets.

The Scale of the Ambition

The proposed $1.5 trillion valuation is the key data point driving the analysis. To provide context:

It dwarfs the valuations of other major recent IPOs and would be among the largest public market debuts in history.

This valuation reflects immense investor confidence in SpaceX’s dual-track business model: its established, revenue-generating businesses (Falcon rocket launch services and Starlink satellite internet) and its long-term, high-risk/high-reward project to colonize Mars through the Starship program.

The discussion likely explores how this valuation is justified by SpaceX’s dominant market position in commercial launches and the potential future revenue from its Starlink satellite internet constellation, which aims to provide global broadband coverage.

Implications for Markets and the Space Sector

A SpaceX IPO at this valuation would have profound ripple effects. It would provide a massive liquidity event for early investors and employees, potentially creating a new wave of wealth. For the public markets, it would offer retail and institutional investors their first direct opportunity to own SpaceX, the company at the forefront of the commercial space race, which until now has been accessible mainly to venture capital, private equity, and other private investors. Furthermore, such a successful public listing could unlock significant capital for SpaceX to fund its capital-intensive Mars ambitions, accelerating development timelines. It would also set a new benchmark for valuations across the entire aerospace and “New Space” sector, potentially driving up investment in competitors and ancillary service providers.

Challenges and Considerations

Despite the headline figure, the analysis would also consider significant hurdles. Regulatory scrutiny from bodies like the SEC would be intense for a deal of this magnitude and complexity. Market conditions at the time of the offering would be critical; achieving a $1.5 trillion valuation requires sustained investor appetite and a compelling narrative that balances near-term profitability with visionary long-term goals. There are also inherent risks in SpaceX’s operations, from the technical challenges of Starship development to the competitive and regulatory landscape of global satellite internet.

In conclusion, the report of SpaceX targeting a $1.5 trillion IPO valuation signifies a watershed moment where the space economy transitions from a niche, government-dominated field to a central pillar of the global financial and technological landscape. The success or failure of such an offering would not only determine SpaceX’s financial future but also signal the market’s belief in the long-term commercial viability of interplanetary ambition.

Private equity may regret inviting in mom and dad

Ft • December 9, 2025

Venture•2026

The private equity industry’s recent push to attract retail investors, or “mom and dad” capital, is creating a new set of risks that the sector may not be fully prepared to handle. While opening funds to a broader investor base provides a fresh source of capital, it also invites greater regulatory scrutiny, increased litigation risk, and a fundamental shift in the relationship between fund managers and their investors. The courts, rather than the industry itself, may ultimately define the terms of this democratization, potentially imposing stricter standards of transparency and fiduciary duty.

The Drive for Retail Capital

For years, private equity has been the domain of large institutional investors like pension funds and endowments. However, as competition for capital intensifies, major firms are increasingly marketing funds and products to accredited retail investors. This strategic shift is driven by several factors:

The vast, untapped pool of wealth held by high-net-worth individuals.

A desire to diversify their investor base beyond traditional institutions.

The perception that retail capital may be more “sticky” and less sensitive to short-term performance fluctuations.

This move is often framed as a democratization of an asset class that has historically delivered superior returns, albeit with higher risk and illiquidity.

The Inevitable Rise of Litigation

The article argues that inviting less sophisticated investors into complex, opaque private equity structures is a recipe for legal disputes. Retail investors, unlike seasoned institutional limited partners (LPs), are more likely to sue when investments underperform or when fee structures and conflicts of interest are not clearly communicated. The courts are poised to become a central arena where the obligations of private equity general partners (GPs) to these new investors are tested and defined. A series of high-profile lawsuits could establish new precedents around disclosure requirements and the standard of care owed to retail participants, effectively regulating the industry through case law.

Implications for the Private Equity Model

This judicial oversight could force significant changes to the traditional private equity operating model. The industry’s characteristic secrecy and complex fee arrangements—often negotiated in detail by sophisticated institutional LPs—may not withstand scrutiny from judges and juries sympathetic to individual investors. Firms may be compelled to adopt greater transparency, simplify fee structures, and provide more frequent and detailed reporting. Furthermore, the threat of litigation could alter the risk calculus for fund managers, potentially making them more cautious in their strategies and operations.

Ultimately, the article suggests that private equity’s pursuit of retail money is a double-edged sword. While it solves a capital-gathering problem, it introduces a powerful new counterweight: the legal system acting on behalf of the individual investor. The industry may find that in seeking democratization, it has inadvertently empowered a force that will demand accountability and reshape its practices in ways it did not anticipate.

Journalism will become the center of gravity for YouTube’s next era

Niemanlab • Joon Lee • December 11, 2025

Media•Journalism•YouTube•DigitalMedia•CreatorEconomy•2026

For the past decade, the dominant ethos on YouTube has been entertainment, with creators actively distancing themselves from the trappings of traditional journalism. However, a significant cultural and strategic shift is underway, positioning journalism as the central pillar for the platform’s future growth, prestige, and cultural relevance. This evolution is being driven by a convergence of external pressure, creator maturation, and YouTube’s own ambitions to compete on the largest screens in the home.

The Catalyst: A Crisis of Civic Responsibility

The 2024 U.S. election served as a stark turning point, exposing the platform’s vulnerabilities. YouTube faced intense criticism as creator-driven podcasts and conversations, operating without editorial oversight or fact-checking, heavily shaped political narratives and public understanding. This moment highlighted that YouTube’s immense scale had outpaced its infrastructure for civic responsibility, forcing a reckoning with the need for more trustworthy content.

The Creator Evolution: From Entertainers to Institutions

A new class of top creators is already evolving into roles that resemble legacy media, outgrowing pure entertainment.

Marques Brownlee has become a definitive voice in consumer technology, filling a role once held by traditional critics.

Philip DeFranco’s show has matured from creator drama into a format closer to a nightly news broadcast.

Even MrBeast is now treated as a public institution with civic weight, sparking speculation that he could build a company to rival Disney.

Creators like Jon Youshaei and Colin and Samir effectively run trade publications for the creator economy itself.

As these creators are covered like celebrities and CEOs, they encounter the same need for legitimacy that traditional institutions have: they require journalism. Scaling to become a cultural force necessitates more care, structure, transparency, and ultimately, editorial standards and reporting.

YouTube’s Strategic Imperative: Trust Over Watch Time

YouTube’s competition with Netflix for dominance on the living room TV is a key driver of this shift. While Netflix relies on prestige programming for cultural authority, YouTube possesses scale and watch time but struggles with credibility on the big screen. The platform’s next era will be defined by building trust. Journalism is uniquely positioned to fill this gap—not as a primary revenue driver, but as a source of legitimacy. It signals that the platform helps users “make sense of the world,” transforming YouTube from an entertainment hub into a civic institution.

The Hybrid Future: A Two-Way Street

This transformation is a two-way street, demanding adaptation from both sides.

Creators moving toward journalism: Successful creators hitting a “ceiling” will need to adopt journalistic rigor, fact-checking, and editorial processes to maintain trust at scale.

Journalists moving toward creators: Journalists seeking relevance must master the intimate, human voice and relationship-building that YouTube demands. Credibility will be built through presence and emotional clarity as much as through traditional bylines. Reporters who thrive will be those who can translate complex ideas with both accuracy and a connective, accessible style.

The most successful early examples of this hybrid model come from journalists like Cleo Abram, Johnny Harris, Adam Cole, and Joss Fong, who have built independent, niche-focused enterprises on YouTube that often outperform their legacy media counterparts in reach and engagement.

The defining content of the late 2020s will be created by those who successfully fuse journalistic rigor with YouTube’s native language of intimacy and immediacy. This fusion will determine whether YouTube can sustainably compete with Netflix not just for entertainment minutes, but as a trusted institution that helps society understand itself.

Media

Netflix and the Hollywood End Game

Stratechery • Ben Thompson • December 8, 2025

Media•Film•Streaming•Netflix•Hollywood

The article presents a detailed analysis of the current state of the Hollywood entertainment industry, framing it as an “end game” driven by the strategic dominance of Netflix and the disruptive force of YouTube. It argues that the traditional studio model, built on controlling intellectual property (IP) and its theatrical release window, is being fundamentally dismantled. Netflix’s strategy is central to this shift, as it has successfully moved the industry’s center of gravity from theaters to the home, thereby devaluing the exclusive theatrical window that was once the studios’ primary leverage.

The Netflix Strategy: Owning the Customer Relationship

Netflix’s core advantage is its direct relationship with over 300 million global subscribers. This allows it to:

Amortize content costs globally: A show like Squid Game can be a massive financial success based on its ability to attract and retain subscribers worldwide, not on its domestic box office or syndication revenue.

Operate without the constraints of theatrical release schedules: Netflix can release content on its own timeline, optimizing for subscriber engagement rather than maximizing opening weekend box office.

Utilize data to inform content decisions: The company’s vast trove of viewing data provides insights into what resonates with audiences, reducing the reliance on the high-risk, high-reward “blockbuster” model.

The article posits that Netflix is now leveraging this position to reshape the value of intellectual property itself. By offering massive upfront payments to secure global rights in perpetuity, Netflix is making a calculated bet that it can increase the long-term value of IP through its platform better than the traditional studios can through cyclical theatrical releases, home video, and licensing windows.

The YouTube Disruption and the “Aggregator” Theory

Simultaneously, the piece highlights YouTube as the other dominant force, representing a different kind of threat. While Netflix competes for premium, scripted content, YouTube dominates attention for everything else: user-generated content, vlogs, and unscripted entertainment. The article applies the “Aggregator Theory,” which holds that platforms controlling demand (users and their attention) gain power over suppliers (content creators). Netflix is an aggregator for high-budget content, while YouTube is the ultimate aggregator for everything else. This dual pressure squeezes traditional media companies from both sides.

The End Game for Traditional Studios

For legacy Hollywood studios, the options are narrowing. The article outlines several strategic paths, each with significant challenges:

Building their own direct-to-consumer platforms: This is the path chosen by Disney, Warner Bros. Discovery, and others, but it requires massive investment with no guarantee of reaching Netflix’s scale. The author is skeptical, noting that “it is not clear that any of them have a sustainable business model.”

Becoming arms dealers to the aggregators: This involves licensing content to Netflix, Amazon, and Apple. While providing short-term revenue, this strategy cedes the customer relationship and may accelerate the decline of their own platforms.

Doubling down on theatrical exclusives: A focus on must-see theatrical events (e.g., superhero sequels, franchise films) is one remaining area of leverage. However, this is a high-risk, hit-driven business that is becoming increasingly narrow.

The overarching conclusion is that the entertainment landscape is consolidating around a few giant aggregators. Netflix is positioned to be the primary aggregator for premium, narrative content, confident that its global scale and data capabilities allow it to extract more value from IP than the system it helped destroy. The “end game” is a market where a handful of platforms control audience access, and traditional studios are forced into a subordinate role as suppliers or niche players.

Disney to Invest $1 Billion in OpenAI and License Characters for Use in ChatGPT, Sora

Wsj • Ben Fritz • December 11, 2025

Media•AI•Entertainment•Intellectual Property•Partnership

Disney has agreed to invest $1 billion in OpenAI and license its characters for use in the startup’s products, according to people familiar with the matter, a major bet by the entertainment giant that the technology will be a boon to its business rather than a threat.

The three-year deal will let users of OpenAI’s ChatGPT and its Sora video generator create content featuring characters from Disney’s vast library, including those from Marvel, Pixar and Star Wars, the people said. Disney will also get a seat on OpenAI’s board.

The agreement, which is expected to be announced soon, is a landmark moment for both companies and the entertainment industry. It represents a significant commitment by a traditional media company to generative AI, a technology that has been seen by many in Hollywood as an existential threat to jobs and creative control.

For OpenAI, the deal provides a massive injection of capital and a powerful partner with one of the world’s most valuable portfolios of intellectual property. It also gives the startup a high-profile endorsement as it faces increasing regulatory scrutiny and competition.

The partnership comes as Disney is locked in a separate, high-stakes legal battle with Google over alleged copyright infringement related to AI. Disney and other major media companies have accused Google of using their content to train AI models without permission. The Disney-OpenAI deal, by contrast, is a consensual licensing agreement that could set a precedent for how media companies monetize their content in the AI era.

Netflix’s WBD bid is an antitrust drama without a villain

Ft • December 9, 2025

Media•Broadcasting•Antitrust•Streaming•Mergers

The article examines the complex antitrust considerations surrounding Netflix’s potential bid for Warner Bros. Discovery (WBD), framing it as a regulatory drama without a clear-cut antagonist. It argues that while the deal would create a media behemoth, traditional antitrust frameworks struggle to define the competitive harm in the rapidly evolving streaming landscape. The central conflict is not between a monopolistic predator and the market, but between old regulatory definitions and new market realities.

The Core Antitrust Challenge: Defining the Market

A primary hurdle for regulators would be defining the “relevant market” in which the combined entity would operate. This legal concept is crucial for assessing market power and potential harm to competition.

Lawyers for the companies would likely argue for a broad market definition encompassing all forms of video entertainment, including traditional linear TV, other streaming services, social media video, and even gaming. This would minimize the combined entity’s perceived market share.

Opponents, such as rival studios or consumer groups, would push for a narrow definition focused solely on premium subscription video-on-demand (SVOD) services. This would make Netflix-WBD’s market share appear dominant and raise significant red flags.

The article suggests regulators are caught between these two poles. The old world of cable bundles and broadcast TV is fading, but the new digital ecosystem is fragmented and includes competitors like YouTube, TikTok, and Amazon Prime, which operate on different economic models (ad-supported, part of a broader retail subscription).

Shifting Power Dynamics in Entertainment

The analysis highlights that power in media has decisively shifted from distribution to content ownership and IP. Netflix’s interest in WBD is driven by the latter’s vast libraries (HBO, DC, the Warner Bros. film catalog) and production capabilities. A merger would be a defensive move to secure must-have content in an era of escalating costs and competition, rather than an offensive play to corner a distribution market.

Furthermore, the article points out that consumer choice in streaming is paradoxically both vast and constrained. While there are many services, the cost of subscribing to all major ones is becoming prohibitive, leading to “subscription fatigue.” This could allow a truly scaled player with the deepest content library to exert significant pricing power, which is a classic antitrust concern, even if the market is hard to define.

Regulatory Implications and the Lack of a “Villain”

The piece concludes that this potential deal exposes the inadequacy of current antitrust tools. The usual narrative of a “villain” stifling competition doesn’t neatly fit. Netflix is competing with tech giants with immense balance sheets (Apple, Amazon) and legacy media companies desperate to transition (Disney, Paramount). Blocking the deal could leave Netflix and WBD weaker against these larger rivals, while allowing it could reduce the number of major Hollywood studios and creative competitors.

The ultimate regulatory decision would hinge on whether authorities view the market through a traditional lens—where consolidation reduces competitor count—or a modern one, where competition comes from unpredictable quarters and scale is necessary for survival. The drama, therefore, lies in this philosophical and legal clash, with significant implications for the future structure of the global entertainment industry.

Netflix’s Swallowing of Warner Bros. Will Be the End of Hollywood

Nytimes • December 6, 2025

Media•Film•Netflix•WarnerBros•HollywoodConsolidation

Overview and Central Argument

The piece argues that a hypothetical acquisition of Warner Bros. by Netflix would mark a decisive, perhaps irreversible, break with the traditional Hollywood studio system. Past fears about “the end of Hollywood” have repeatedly surfaced with the advent of television, VHS, cable, DVDs, and streaming, but the article suggests this deal would be categorically different. Rather than merely disrupting how films and shows are distributed, it would erase the remaining institutional and cultural boundaries that separate Silicon Valley–style tech platforms from legacy studios, effectively turning Hollywood into a content division of a global tech company.

Why This Merger Is Uniquely Dangerous

The article emphasizes that Hollywood’s past crises involved new technologies but preserved a competitive ecosystem of distinct studios, talent agencies, and theater chains.

By contrast, letting Netflix absorb Warner Bros. would consolidate an enormous library (from classic films and DC superheroes to prestige TV) under a single, data-driven subscription platform.

This combination, the author suggests, would:

Greatly reduce bargaining power for writers, directors, actors, and independent producers.

Allow Netflix to dictate terms not just in streaming but across theatrical, TV, and licensing windows.

Set a precedent for further tech–studio mega-mergers, accelerating consolidation.

Impact on Creativity, Risk-Taking, and Culture

The article contends that Hollywood’s greatest achievements came from tension between commerce and artistry: studios needed hits but also relied on creative gambles that occasionally produced transformative cinema.

Netflix’s algorithm-centric model, when applied to Warner Bros.’ vast IP, would likely:

Prioritize predictable franchises, sequels, and “content” calibrated to churn and retention metrics over singular artistic visions.

Shorten the lifespan of films and series, as projects are judged quickly on engagement data rather than allowed to build word-of-mouth or cult status.

Reduce mid-budget, adult-oriented dramas and offbeat originals that don’t fit clear data patterns but historically defined much of Hollywood’s cultural influence.

The author warns that the result would be an entertainment landscape where cultural memory is shaped by what fits one company’s recommendation system, not by diverse creative experimentation.

Market Power, Labor, and Competition

The merger is framed as a power shift from a historically fragmented industry to a near-vertical platform:

Control over production, distribution, and discovery (via Netflix’s interface) would give the combined entity outsized leverage over labor, including guilds that only recently fought for protections in the streaming era.

Competitors—other studios, streamers, and theatrical exhibitors—would be pressured to respond with their own mega-mergers or risk marginalization.

The article suggests that regulatory scrutiny would be essential, not just in traditional antitrust terms (prices, consumer harm) but in broader cultural terms:

When one company commands global attention, it shapes which stories get told, which regions’ voices are amplified, and how democratic societies understand themselves.

Broader Cultural and Democratic Implications

Beyond business implications, the author argues that Hollywood has served as a global storytelling engine, exporting American narratives, ideals, and critiques of power.

Consolidating that function into a single corporate logic risks:

Narrowing the range of political, social, and historical perspectives that reach mass audiences.

Making controversial or challenging works more likely to be suppressed, quiet-released, or buried by an algorithm rather than overtly censored.

The fear is not just fewer movies, but a global culture increasingly mediated through the design choices and growth imperatives of one dominant platform, eroding the pluralism that historically characterized the film industry.

Conclusion and Call for Resistance

The article concludes that while Hollywood has repeatedly survived technological shocks, this merger would transform its underlying structure in a way that may be irreversible.

It calls for:

Strong regulatory intervention to block or heavily condition such a deal.

Collective resistance from creators, unions, and audiences who value a diverse, competitive cultural ecosystem.

If this acquisition proceeds, the author suggests, the phrase “the end of Hollywood” may no longer be hyperbole but a description of a new era in which Hollywood as an independent, multi-studio system effectively ceases to exist.

★ Meta Says Fuck That Metaverse Shit

Daring fireball • John Gruber • December 7, 2025

Media•Publishing•Meta•Metaverse•Branding

Mike Isaac, reporting for The New York Times, “Meta Weighs Cuts to Its Metaverse Unit” (gift link):