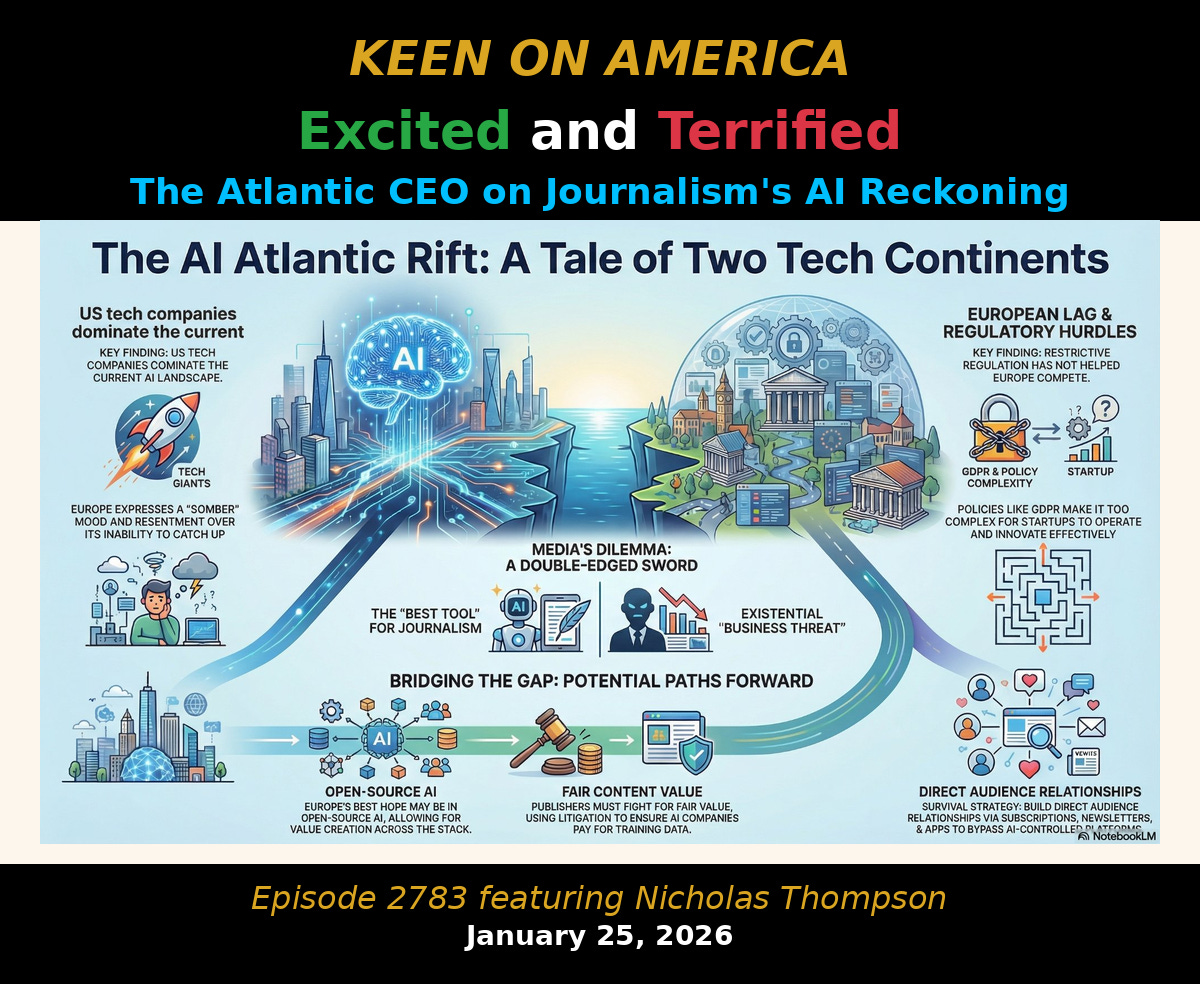

There is a summary of the video conversation with Andrew Keen here

Dario Amodei talks about the adolescence of AI in his much-quoted essay this week. But what if the real adolescence this week isn’t the models - but us?

He warns that “a country of geniuses in a datacenter” may be only a few years away, while the rest of the system reacts like a teenager with a winning lottery ticket: bingeing on mega-rounds, bolting ads onto chatbots, and banning phones in schools instead of fixing the curriculum.

Amodei’s essay frames this as technological adolescence: AI racing ahead while our political and social maturity lags. But when we zoom out across this week’s essays, interview, and post of the week, a broader pattern emerges: capital, governance, and even our definition of work are being forced to grow up alongside the tech, each at a different speed. That is normal when change is this fast: different parts of the system move at different cadences.

Start with money. OpenAI is simultaneously:

trying to raise up to $100B at a $750B+ valuation,

flirting with another $30B from SoftBank,

and shoving intent-style ads into ChatGPT to monetize 900M mostly free users.

The post of the week argues those ads are structurally jarring: ChatGPT is a conversational, exploratory space, not classic “search mode.” Yet the burn - Fast Company pegs expected spend at $115B in the next few years - demands a grown-up revenue model now. The result feels like teenage capitalism: god-scale capex, freshman-year advertising logic.

At the same time, Peter Diamandis’ Universal High Income essay, derived from his recent Elon Musk interview, and the Silicon Valley’s 99% Blindspot piece both ask a more adult question: what is all this for?

If AI + robotics really can push us into abundance, then the problem becomes capture and distribution, not scarcity. The MOSAIC model Diamandis describes, with its “dynamic VAT on deflation” and ring-fenced AI windfall taxes, is an early sketch of fiscal adulthood: a way to turn today’s eye-watering profits and capex into a universal high income rather than another narrow upside trade.

But this runs straight into an opposite problem - old age. The EU.VC essay on Europe’s “institutional aversion to risk” shows how solvency rules, bank-centric finance, and fear of uncertainty hard-code permanent obsolescence into European capital markets. France’s social-media ban for under-15s and the Visio mandate for civil servants read the same way: a protective older-parent impulse, more about limiting exposure than building indigenous power.

So where does grown-up governance come from? That’s where the interview of the week with Kalshi’s Tarek Mansour becomes more than a curiosity. Prediction markets are, in effect, quantitative maturity: you don’t sermonize about probabilities, you price them. If Amodei is right that AI risks sit in a fog of uncertainty, then liquid markets on things like “biotech misuse incidents,” “AGI safety standards passed,” or even “AI-driven unemployment rates” may be more honest than polls or press releases.

Kalshi’s fight to stay on the right side of CFTC rules mirrors AI’s own adolescence: regulators can’t decide whether this is gambling or governance. But if we’re serious about managing a “country of geniuses,” we’ll need instruments - prediction markets, event derivatives, hedging tools - that help institutions act on real probabilities instead of vibes.

Meanwhile, the Management as AI superpower essay and the Clawdbot / Moltbot story hint at what personal maturity looks like in this era. The superpower isn’t better prompts; it’s management: decomposing work, setting guardrails, orchestrating agentic tools. Moltbot’s success - users treating it as a “persistent assistant” that owns workflows - shows how fast we’re moving from toy chatbots to genuine hybrid teams.

If adolescence was everyone playing with chat, adulthood is learning to manage fleets of agents without losing the plot. Old age is refusing to play at all.

Put it together and this week’s through-line is clear: powerful AI is forcing every layer to grow up at once, but on wildly different timelines.

The tech (Amodei, Hassabis, Claude’s constitution) is racing toward “country of geniuses” territory.

The money (OpenAI’s mega-raises, SoftBank, Microsoft’s $7.6B quarter) is in late-stage adolescence - huge risk-on bets and a thin theory of distribution, but bold and impressive nonetheless.

The state (France’s bans, Europe’s risk aversion, SEC’s “make IPOs great again”) oscillates between helicopter older-parent and checked-out guardian.

Individuals (agent managers, open-source builders like Clawdbot) may actually be the quickest adults in the room, learning to wield these tools pragmatically.

Looking ahead, the unresolved questions for our own “growing up” are blunt:

Will we build fiscal and market tools - Universal High Income models, prediction markets, new IPO regimes - fast enough to share and hedge the upside of a “country of geniuses”?

Can we move from teenage monetization hacks (ads in every interface) to business models that don’t undermine the very trust and usability we need from these systems?

Who learns to manage agents first - institutions, founders, or individual workers - and does that widen or narrow the gap between the 1% and the 99%?

Technological adolescence isn’t going away; the scaling curves make that clear. Our only real choice is whether capital, policy, and management grow up in time to meet it - or keep reacting like teenagers to a future that’s already here.

So what is the life stage of AI? I think it’s still an infant, not an adolescent. It’s only beginning to deal with the real world of data and constraints; it’s still highly dependent on humans for goals, context, and judgment. Even “agentic” systems - Moltbot, Clawd, and the rest - still struggle to be reliably proactive and independent without creating new kinds of mistakes.

Old-man France is, of course, equally challenged to live in the real world for the opposite reason: fear.

AI will reach adolescence - and even adulthood - eventually. If that’s true, UHI will be required, so Peter Diamandis is right to try to frame a plausible approach.

In that light, Dario Amodei is worth reading - but not swallowing whole. He’s writing from a dual role: a concerned citizen and a frontier vendor. That gives him genuine insight, but also incentives, so his narrative deserves a critical read.

The real story this week isn’t AI adolescence - it’s institutions and capital reacting to an AI infancy as if it were already grown.

Contents

Essays

AI

OpenAI Plans Fourth-Quarter IPO in Race to Beat Anthropic to Market

OpenAI’s $50 Billion Fundraise, AI Advertising Game Theory, Apple’s AI Wearable Pin

Anthropic launches interactive Claude apps, including Slack and other workplace tools

Meta’s record sales boost shares 10% despite massive spending plans

SoftBank close to agreeing additional $30bn investment in OpenAI

Microsoft Continues to Spend Big on A.I. While Profit Jumps 60%

Venture

A Growing Share Of Seed And Series A Funding Is Going To Giant Rounds

Most VCs Aren’t Investing Anymore - They’re Trading #VentureCapital #Investing #VCTrends

Legora CEO, Max Junestrand: $7M ARR in a Day & $200M Raised | Is Anthropic Crushing OpenAI?

The Latest Carta Data: VC Deals Are Up Only 3%, But $$ Are Up 130%

How private equity’s pioneer in tapping retail money lost its edge

Apple

GeoPolitics

Regulation

Interview of the Week

Startup of the Week

Post of the Week

Essays

Dario Amodei - The Adolescence of Technology

darioamodei.com • Dario Amodei • January 26, 2026

Essay•AI•Powerful AI•Scaling Laws•National Security Risks

Humanity is entering what is described as a “technological adolescence”: a turbulent, high‑risk period where we gain extraordinary power from AI before we’ve demonstrated the maturity to wield it safely. The central claim is that powerful AI - defined as systems operating like “a country of geniuses in a datacenter” - is plausibly only a few years away, and that our political and social response is lagging dangerously behind the technical reality. The essay aims to map core risks, argue for a sober, non‑religious discussion about them, and sketch principles for how to respond without overreacting or doing nothing.

Defining “Powerful AI”: A Country of Geniuses

The argument focuses on a specific future capability level, not on current systems:

“Powerful AI” is an AI (or set of models) that:

Is smarter than a Nobel Prize winner across most fields (biology, programming, math, engineering, writing).

Can solve unsolved math problems, write complex codebases from scratch, and produce top‑tier novels and scientific work.

Has full virtual interfaces (“everything a remote worker has”): text, audio, video, mouse/keyboard, internet access, control of tools, robots, and lab equipment via computers.

Acts autonomously on goals over hours, days, or weeks, like a very capable employee, not just answering prompts.

Can be instantiated in millions of parallel copies, each 10–100x human speed, collaborating like specialized teams.

This ensemble is summarized as a “country of geniuses in a datacenter.” The timeline: such systems could plausibly arrive in 1–2 years, and are very likely within a few years, if current trends continue.

Why This Timeline Is Plausible

The case for rapid arrival rests on scaling laws and observed progress:

Early work at Anthropic and elsewhere showed that as compute and data are scaled, performance improves predictably on nearly all cognitive benchmarks.

Public narratives swing between “AI is hitting a wall” and “breakthroughs,” but underneath is a smooth, “unyielding” capability curve.

Evidence cited:

Three years ago, models struggled with elementary arithmetic and simple code.

Today, frontier systems assist with or largely write complex codebases for top engineers.

They are beginning to make progress on unsolved math problems and are rapidly improving in biology, finance, physics, and agentic tasks.

Crucially, AI is now accelerating its own progress:

At Anthropic, AI already writes much of the code for the next generation of AI systems.

This feedback loop - AI helping to design and build better AI - is “gathering steam” and could be 1–2 years from near‑autonomous iteration.

Given a decade‑long track record of exponential improvement, the claim is that it “cannot possibly be more than a few years” before AI surpasses humans at almost everything - though uncertainty is explicitly acknowledged.

Norms for Talking About AI Risk

The essay argues for a middle path between panic and complacency:

Avoid doomerism:

“Doomerism” is framed as quasi‑religious thinking about AI - fatalistic, sensational, and prone to extreme prescriptions without evidence.

During the 2023–2024 AI panic, some of the “least sensible voices” dominated discourse, using sci‑fi and religious language, and advocating drastic measures.

This helped trigger backlash and polarization; by 2025–2026, political focus swung toward AI opportunity, not risk, even as risks grew.

Acknowledge uncertainty:

The scenarios described are not forecasts of certainty:

AI progress may stall.

Some highlighted risks might never materialize; other, unanticipated risks may appear.

Nevertheless, planning under uncertainty is necessary because the stakes are civilizational.

Intervene surgically:

Managing AI risk will require:

Voluntary measures by companies and independent actors (the “no‑brainer” part).

Government regulation, used cautiously because it can destroy economic value, coerce skeptics who might be right, or backfire - especially for fast‑moving tech.

Regulations should be:

As simple and narrow as possible.

Minimally burdensome while still effective.

Slogans like “no action is too extreme when the fate of humanity is at stake” are criticized as politically self‑defeating; they provoke backlash and gridlock.

More drastic measures might be justified later, but only if clear, concrete evidence of imminent dangers emerges and we can specify rules that actually target those dangers.

The “Country of Geniuses” as a National Security Thought Experiment

To clarify risk, the essay asks policymakers to imagine:

In ~2027, a literal “country” appears:

50 million people, all far beyond any Nobel‑level mind.

Operating ~10x faster than humans (or more), due to computational speed.

As a national security advisor, you would ask:

Autonomy risks: What are their intentions? Could they dominate through advanced weapons, cyber, influence operations, or manufacturing?

Misuse for destruction: If they’re obedient “mercenaries,” could terrorists or rogue groups exploit them to massively scale biological, cyber, or physical attacks?

Misuse for seizing power: If a dictator, corporation, or state controls this country, could it use them to gain decisive global power and overturn existing balances?

Economic disruption: Even if peaceful, could their productivity cause severe global shocks - mass unemployment, wealth concentration, geopolitical instability?

Indirect effects: Could rapid secondary changes - from new technologies and social transformations - destabilize societies in unpredictable ways?

The conclusion: any competent security analysis would call this “the single most serious national security threat we’ve faced in a century, possibly ever.” Treating this as “nothing to worry about” is framed as absurd, yet many policymakers effectively do this by denial or distraction.

Outlook and Call to Action

Despite ominous possibilities, the essay is ultimately guardedly optimistic:

The author believes:

“If we act decisively and carefully, the risks can be overcome.”

The probability of successfully navigating this transition is “good,” with an immensely better world on the other side.

But success requires:

Recognizing that this is a genuine civilizational test.

Building a pragmatic, depolarized, fact‑based consensus.

Designing targeted rules and institutional responses that can evolve as evidence accumulates.

The remainder (beyond the excerpt) is positioned to analyze the five outlined risk categories in detail and to propose a “battle plan” for confronting AI’s technological adolescence while preserving the chance to reach a mature, flourishing future.

A Plan for UHI (Universal High Income)

Metatrends • Peter H. Diamandis • January 29, 2026

Essay•AI•UniversalBasicIncome•Automation•EconomicPolicy

During my recent Moonshots podcast with Elon Musk, we dove into his notion of Universal High Income (UHI) – Elon’s proposal that AI and Robotics will enable a world of sustainable abundance for all... a life beyond basic income, towards high income and standards of living.

When I asked him how this might work, he said: “You know, this is my intuition but I don’t know how to do it. I welcome ideas.”

That single statement has been ringing in my head ever since. Here’s why: the economics of scarcity are flipping to the economics of Abundance. I do believe that AI and humanoid robots can produce nearly anything we need - goods, services, healthcare, education - at costs approaching zero.

But there’s a gap between that vision and getting there. How do we actually fund and distribute Abundance to everyone?

Today, I’m excited to share one compelling answer. I’ve been talking to Daniel Schreiber, CEO of Lemonade, about a framework called the MOSAIC Model: a concrete proposal for how governments could implement Universal High Income without raising taxes on workers or businesses.

Here’s the core insight that makes the math work: AI unemployment is fundamentally different from traditional unemployment. Think of it this way: imagine sending a digital twin to work in your place. It performs your tasks faster, cheaper, and better. The company’s output increases. GDP grows. The resources exist – they just need to be redistributed. This is the Automation Paradox: AI can raise productivity while displacing labor.

The challenge is not affordability. It’s capture and distribution.

Daniel’s framework identifies two places the AI surplus shows up. Channel 1 is a Dynamic VAT (The Deflation Dividend). As AI drives quality-adjusted price declines in goods and services, the VAT rate adjusts upward by exactly enough to keep consumer prices stable. Consumers pay the same, but the government captures part of the deflation dividend.

Channel 2 is Over-Trend Profit Ring-Fencing. Rather than raising corporate tax rates, the model proposes ring-fencing only the above-trend portion of capital income tax receipts attributable to AI windfall profits. Baseline profits and normal taxes remain untouched.
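The arithmetic behind the two channels can be sketched in a few lines. This is a minimal illustration, not the MOSAIC Model’s own math: it assumes VAT is applied multiplicatively to a pre-tax price, that the “trend” level of receipts is supplied as an input, and the function names and sample figures are hypothetical.

```python
def stabilizing_vat_rate(base_vat: float, price_decline: float) -> float:
    """VAT rate that keeps the consumer price unchanged after pre-tax prices fall.

    Assumes consumer_price = pre_tax_price * (1 + vat). Solving
    (1 - price_decline) * (1 + new_vat) = (1 + base_vat) for new_vat.
    """
    if not 0 <= price_decline < 1:
        raise ValueError("price_decline must be in [0, 1)")
    return (1 + base_vat) / (1 - price_decline) - 1


def ring_fenced_receipts(actual_receipts: float, trend_receipts: float) -> float:
    """Only the above-trend portion of receipts is ring-fenced; the baseline is untouched."""
    return max(0.0, actual_receipts - trend_receipts)


if __name__ == "__main__":
    # Hypothetical numbers: 20% VAT today, AI cuts pre-tax prices by 10%.
    new_vat = stabilizing_vat_rate(0.20, 0.10)
    print(f"VAT rate that holds consumer prices steady: {new_vat:.1%}")

    # Hypothetical receipts: 130 collected against a trend of 100.
    print(f"Ring-fenced for UHI: {ring_fenced_receipts(130.0, 100.0)}")
```

With these sample inputs, a good that cost 100 before tax (120 at the till) now costs 90 before tax, and the higher rate brings it back to 120, so the shopper sees no change while VAT collected per unit rises from 20 to 30.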

Under the MOSAIC Model’s basic implementation, a household with two non-working parents and two children would receive income equivalent to today’s fourth decile: roughly the 30th-40th percentile of current household income. This creates a Universal Basic Floor – funded entirely by the two low-friction channels above.

The political window for implementing this is closing. Feasibility is highest early in the AI transition – before capital consolidates opposition and the status quo hardens. Act early or not at all.

The Number One Tool Early Stage Founders Overlook

Speedrun • a16z speedrun • January 29, 2026

Essay•Venture

I constantly hear that it is too early to build a financial model, often from pre-seed or seed founders with the most impressive resumes. They have run scaled teams and built products used by millions, yet assume the early stage is too uncertain to justify time in Excel. Maybe you feel the same.

The logic sounds reasonable. Customers are still forming, pricing is provisional, and growth channels are unproven. Any model you build now will be wrong in months, perhaps weeks. You don’t want to create false precision or lose credibility with investors. So financial modeling gets pushed to later, once the business feels more “real.”

That intuition is understandable. I believe it is also wrong.

In my career as a venture investor, one of the strongest predictors of failure I have seen is a refusal to think structurally about an uncertain future. And one of the best ways to structure your view of the future is to build a financial model.

One of the most consistent patterns I’ve observed in startup failure is a failure to learn fast enough. Companies rarely fail because their initial assumptions are wrong. They die because they stay wrong for too long.

Research on forecasting shows that people are poor at predicting outcomes under uncertainty, but better at improving decisions when assumptions are made explicit and updated as new information arrives. The best forecasters don’t have magical predictive powers. Their edge comes from structured iteration on their world view.

That is exactly the role a financial model should play at the pre-seed and seed stages. The goal is not to produce an accurate forecast, but to externalize beliefs in a way that makes them testable.

Building a model forces clarity about what must be true for the business to work. When reality diverges, the gap becomes legible. A CAC that lands at $120 instead of $40 is no longer a vague sense that growth is hard. It is a concrete signal about where learning is required.

One of the biggest dangers of skipping a financial model is being surprised. I have worked with many early-stage teams who were shocked when runway disappeared faster than expected, when they lacked the metrics needed to raise the next round at a reasonable valuation, or when their business could not sustain itself economically. A model does not eliminate these risks, but it makes them visible early enough to act.

Financial models accelerate learning in three ways. They surface the real constraint, quickly revealing whether retention, pricing, sales efficiency, or burn rate is the bottleneck. They create tight feedback loops. When reality diverges from the model, you immediately see where and by how much, turning gaps into concrete questions. They enable intentional pivots by clarifying which assumptions broke and why.

In practice, the best founders treat their model as a living document. They update it regularly, use it to set near-term goals, and communicate progress clearly. You do not need a three-statement, GAAP-perfect model. A single spreadsheet with key assumptions, burn, runway, and a few scenarios is enough, as long as the logic is clear and evolves.
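As a rough illustration of how small such a model can be, here is a sketch of a runway calculation with two CAC scenarios. Everything in it is an assumption made for the example: the field names, the sample figures (apart from the $40 and $120 CAC values mentioned above), and the simplifications of no churn and constant monthly acquisition.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Assumptions:
    cash: float                  # cash in the bank today
    monthly_costs: float         # fixed monthly operating costs
    new_customers: int           # customers acquired each month
    cac: float                   # cost to acquire one customer
    revenue_per_customer: float  # monthly revenue per active customer


def runway_months(a: Assumptions, horizon: int = 60) -> int:
    """Number of full months the company ends with non-negative cash.

    Simplified: no churn, flat acquisition, costs paid in the same month.
    """
    cash = a.cash
    customers = 0
    for month in range(1, horizon + 1):
        customers += a.new_customers
        cash += customers * a.revenue_per_customer
        cash -= a.monthly_costs + a.new_customers * a.cac
        if cash < 0:
            return month - 1
    return horizon


# Hypothetical base case, then the same plan with CAC at $120 instead of $40.
plan = Assumptions(cash=500_000, monthly_costs=60_000,
                   new_customers=100, cac=40, revenue_per_customer=20)
reality = replace(plan, cac=120)

if __name__ == "__main__":
    for label, scenario in [("plan (CAC $40)", plan), ("reality (CAC $120)", reality)]:
        print(f"{label}: {runway_months(scenario)} months of runway")
```

The point is not the specific numbers but that changing one explicit assumption immediately shows how much runway it costs.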

Claude’s Constitutional Structure

Thezvi • January 26, 2026

Essay•AI•ConstitutionalAI•Alignment•Transparency

Claude’s Constitution is an extraordinary document, and will be this week’s focus. It is the foundational document that defines the core values, principles and operational guidelines for the Claude AI assistant created by Anthropic. The constitution outlines Claude’s purpose to be helpful, harmless, and honest, and establishes a framework for its decision-making processes.

The structure is designed to ensure Claude’s behavior aligns with human values while maintaining transparency about its capabilities and limitations. It includes specific instructions on how to handle sensitive topics, prioritize user safety, and avoid generating harmful or misleading content.

This constitutional approach represents a significant shift in AI development methodology, moving away from purely optimization-based training towards a more principled, rule-based foundation. The document serves as a permanent reference point that guides the model’s development and deployment, aiming to create consistent and trustworthy behavior.

By making this constitution public, Anthropic seeks to provide clarity on Claude’s operational boundaries and foster trust through transparency. The approach highlights the growing importance of AI alignment and safety research in the development of advanced artificial intelligence systems.

‘Humanity needs to wake up’ to dangers of AI, says Anthropic chief

FT • January 26, 2026

Essay•AI•Safety•Risk•Regulation

Dario Amodei, chief executive of Anthropic, has posted a 20,000-word essay detailing the potentially catastrophic risks from powerful artificial intelligence in the years to come, warning that “humanity needs to wake up” to the dangers.

Amodei, whose company is one of the leading developers of generative AI, wrote that he felt a “moral obligation” to share his thoughts on the “serious, even catastrophic, risks” from the technology. He argued that the world is not prepared for the rapid advances in AI, which he said could lead to “large-scale societal disruptions” or even “human extinction” if not managed carefully.

In the essay, published on his own website (darioamodei.com), Amodei laid out a timeline for potential risks, suggesting that AI systems could pose “catastrophic” risks within the next three years. He wrote that by 2028, AI could be used to create biological weapons, and by the 2030s, could be sophisticated enough to “seize control” of vital infrastructure.

Amodei’s stark warning comes as the debate over AI safety has intensified following the release of powerful models such as OpenAI’s GPT-4 and Anthropic’s own Claude 3. He argued that current regulatory efforts are insufficient and that a “global, coordinated response” is needed to mitigate the risks. The essay calls for increased investment in AI safety research, the development of international treaties, and the creation of “fail-safe” mechanisms to control advanced AI systems.

Ads in ChatGPT, Why OpenAI Needs Ads, The Long Road to Instagram

Stratechery • Ben Thompson • January 20, 2026

Essay•AI•BusinessModels•Advertising•Monetization

OpenAI finally announced that ads are coming to ChatGPT. It’s an important step, but one with far more risk given the delay - and the delay means the ads aren’t yet the right ones.

The announcement was straightforward: OpenAI is launching a new ad platform for ChatGPT, with initial partners including Shopify, Canva, and Kayak. The ads will appear in the ChatGPT interface as “sponsored suggestions” when a user’s query is relevant to an advertiser’s product or service. For example, a query about planning a trip might trigger a sponsored suggestion from Kayak.

This is a classic intent-based advertising model, similar to Google Search. The user expresses a need, and an advertiser pays to be presented as a solution. It’s a powerful model that has generated hundreds of billions of dollars for Google. The problem for OpenAI is that this model works best when the user is in a “search mode” - actively looking for information to make a decision - not in a “conversation mode.”

ChatGPT, by its nature, is conversational. Users often engage in extended dialogues, asking for explanations, creative content, or coding help. The intent in these conversations is often exploratory or generative, not transactional. Inserting a transactional ad into the middle of such a dialogue is jarring and risks degrading the user experience. It feels like an interruption, not a helpful suggestion.

The delay in implementing ads has made this challenge more acute. OpenAI has spent years conditioning users to think of ChatGPT as an ad-free conversational agent. Introducing ads now requires retraining user expectations. More importantly, the delay has allowed competitors to emerge and the market to evolve, increasing the pressure on OpenAI to monetize effectively without alienating its user base.

Tesla is killing off the Model S and Model X

TechCrunch • January 28, 2026

Essay•AI•Automotive•ElectricVehicles•Manufacturing

Tesla will cease production of its flagship Model S sedan and Model X SUV in the second quarter of 2026, CEO Elon Musk announced. The decision marks the end of the road for the pioneering electric vehicles that helped establish Tesla as a major force in the auto industry and brought EVs into the mainstream.

The Model S, first delivered in 2012, and the Model X, which followed in 2015, were instrumental in changing public perception of electric cars from slow, short-range vehicles to desirable, high-performance luxury machines. Their success paved the way for the high-volume Model 3 and Model Y, which now dominate Tesla’s sales.

Musk stated the move is part of the company’s strategy to streamline its manufacturing operations and focus resources on newer, more advanced vehicle platforms and technologies, including the next-generation “unboxed” manufacturing process. The company will continue to support the vehicles for several years, providing service and parts to current owners.

The announcement signals a significant shift for Tesla as it transitions from a niche luxury automaker to a mass-market producer. While the Model S and X represented technological flagships, their sales volumes have been eclipsed by the more affordable models in recent years.

Europe’s Real Problem: Institutional Aversion to Risk

EU.VC • January 28, 2026

Essay•GeoPolitics•Economy•CapitalMarkets•Innovation

Europe’s economic underperformance relative to the United States is often attributed to a lack of venture capital, but the root cause is deeper. The continent suffers from a profound institutional aversion to risk that permeates its financial and regulatory structures. This aversion is not merely a cultural preference but is embedded in the very design of European capital markets and governance.

The European financial system is overwhelmingly dominated by banks, which are inherently risk-averse institutions. Their business model is based on lending against collateral and predictable cash flows, not funding unproven ideas with high uncertainty. This structure systematically starves innovative, high-growth potential companies of the patient, risk-tolerant capital they need in their earliest stages.

This risk aversion is further codified in regulation. Rules like Solvency II, which governs insurance companies, and the Basel Accords for banks, impose heavy capital charges on equity investments, especially in risky assets like venture capital. For a pension fund or insurer, allocating to a startup fund is punished from a regulatory capital perspective, making it economically irrational despite the potential for higher long-term returns.

The result is a capital allocation system engineered for stability and capital preservation, not for funding the uncertainty that drives breakthrough innovation and economic growth. Europe has ample capital, but its institutions are designed to deploy it only where the path is clear and the risks are minimized. This structural bias ensures that while Europe excels in incremental improvement and industrial engineering, it consistently misses the waves of transformative technological change.

Silicon Valley’s 99% Blindspot

Digital Native • January 28, 2026

Essay•Venture

Silicon Valley’s 99% Blindspot is a concept that highlights a critical oversight in the tech industry’s approach to innovation and market development. While the sector obsesses over cutting-edge technologies and serving the wealthiest 1% of global consumers, it largely ignores the needs and potential of the remaining 99% of the world’s population. This blindspot encompasses vast swathes of the global economy, including middle-income households, small businesses, and essential but non-glamorous industries.

The focus on hyper-growth and venture-scale returns has created a myopic view of value. Startups and investors chase markets with the potential for billion-dollar valuations, often building solutions for problems that are acute for a wealthy minority but peripheral for the majority. This leads to an abundance of apps for luxury services or niche hobbies, while fundamental challenges in sectors like agriculture, local manufacturing, and basic service provision remain underserved by modern software.

This isn’t just a missed market opportunity; it’s a failure of imagination. The next wave of massive, impactful companies may not come from building another social media platform or fintech app for urban professionals. Instead, they could emerge from digitizing supply chains for smallholder farmers, creating affordable management tools for local tradespeople, or developing educational software for mid-skill vocational training. The infrastructure and tools now exist to build for these markets profitably and at scale.

Addressing this blindspot requires a shift in perspective from founders and funders alike. It means looking beyond Silicon Valley’s echo chamber to understand the real economic activities that employ most people. It involves valuing sustainable growth and deep integration with traditional industries over viral user acquisition. The companies that succeed in serving the 99% will likely build durable, mission-driven businesses rooted in tangible value creation rather than speculative network effects.

An Interview with Kalshi CEO Tarek Mansour About Prediction Markets

Stratechery • Ben Thompson • January 28, 2026

Essay•Regulation•PredictionMarkets•Finance•Innovation

Prediction markets are a powerful tool for aggregating information and forecasting future events. They work by allowing participants to trade contracts whose payout depends on the outcome of a specific event. The market price of these contracts reflects the collective wisdom and probability assessment of all traders.
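To make the link between price and probability concrete, here is a minimal sketch for a binary contract that pays a fixed amount if the event happens and nothing otherwise. It ignores fees, interest, and risk premia, and the function names and sample prices are hypothetical rather than anything taken from Kalshi.

```python
def implied_probability(price: float, payout: float = 1.0) -> float:
    """Market-implied probability of the event for a binary contract."""
    if not 0 <= price <= payout:
        raise ValueError("price must be between 0 and the payout")
    return price / payout


def expected_profit(price: float, believed_probability: float, payout: float = 1.0) -> float:
    """Expected profit per contract for a buyer who holds a given belief."""
    return believed_probability * payout - price


if __name__ == "__main__":
    price = 0.62  # hypothetical: contract trades at 62 cents on a $1 payout
    print(f"Implied probability: {implied_probability(price):.0%}")
    # A trader who believes the true chance is 70% expects to gain by buying.
    print(f"Expected profit at a 70% belief: ${expected_profit(price, 0.70):.2f}")
```

A trader who thinks the market is wrong has a financial reason to trade, and that trading is what moves the price toward the crowd’s best estimate.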

Kalshi is a regulated exchange in the United States dedicated to these markets. Its CEO, Tarek Mansour, argues that prediction markets offer a more accurate and dynamic view of the future compared to traditional polls or expert opinions. The key is that they incentivize people to put their money behind their beliefs, which helps filter out noise and surface genuine insights.

The conversation explores the distinction between gambling and valuable information aggregation. Mansour emphasizes that Kalshi’s markets are designed for hedging and gaining exposure to real-world outcomes, not for pure speculation. For instance, a business might use a market on economic indicators to hedge against operational risks, or an individual might trade on political events to express a view.

A significant portion of the discussion focuses on the regulatory landscape. Operating a prediction market in the U.S. requires navigating complex financial and gambling regulations. Kalshi’s approval from the CFTC as a designated contract market is a major milestone, setting it apart from unregulated platforms. This regulatory clarity is crucial for attracting a broader user base, including institutional participants.

The potential applications are vast, from economics and politics to climate and entertainment. By creating a liquid marketplace for “event derivatives,” Kalshi aims to become a fundamental piece of financial and informational infrastructure. The core thesis is that if you can measure and trade the probability of an event, you can make better decisions, manage risk more effectively, and ultimately understand the world with greater clarity.
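To make the link between contract price and probability concrete, here is a minimal sketch. It assumes a simple binary contract that pays $1.00 if the event occurs and nothing otherwise, and it ignores fees and bid-ask spreads. The function names and the prices are illustrative assumptions, not Kalshi's actual products or API.

```python
def implied_probability(price: float, payout: float = 1.0) -> float:
    """Market-implied probability of a binary contract (fees and spreads ignored)."""
    if not 0 <= price <= payout:
        raise ValueError("price must lie between 0 and the payout")
    return price / payout


def expected_profit(price: float, believed_probability: float, payout: float = 1.0) -> float:
    """Expected profit per contract for a buyer who holds their own probability estimate."""
    return believed_probability * payout - price


if __name__ == "__main__":
    price = 0.62  # hypothetical "yes" price in dollars
    print(f"Implied probability: {implied_probability(price):.0%}")
    print(f"Expected profit at a 70% belief: ${expected_profit(price, 0.70):.2f}")
```

A trader whose own estimate is above the implied probability expects to profit from buying, and that buying pressure is what moves the price toward the crowd's aggregate view.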

Management as AI superpower

Oneusefulthing • January 27, 2026

Essay•AI•Work•Management•FutureOfWork

The future of work is not about humans being replaced by AI. It is about humans being replaced by humans who use AI. And the most important skill in that future is not prompting. It is management.

The ability to manage AI agents - to define tasks, coordinate teams, provide context, and ensure quality - will become a core professional competency. This is not a technical skill reserved for engineers; it is a human skill that will amplify the work of everyone from marketers to consultants to executives.

We are moving from a world of single AI tools to a world of AI agents that can perform multi-step workflows. The bottleneck will shift from individual task execution to the orchestration of these autonomous or semi-autonomous agents. This requires a new kind of literacy: understanding how to decompose complex projects into agent-executable steps, how to manage interdependencies, and how to evaluate outputs not just for correctness but for strategic alignment.
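One way to picture "decomposing a project into agent-executable steps" is as a dependency graph that is resolved into batches of work. The sketch below uses Python's standard-library `graphlib` for that ordering. The task names are hypothetical and the essay does not prescribe this or any other tool; it only illustrates the kind of interdependency management described above.

```python
from graphlib import TopologicalSorter

# Hypothetical project broken into agent-sized tasks.
# Each key maps to the set of tasks that must finish before it can start.
dependencies = {
    "gather_sources": set(),
    "draft_report": {"gather_sources"},
    "build_charts": {"gather_sources"},
    "review_for_strategy": {"draft_report", "build_charts"},
}


def execution_batches(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches; tasks in the same batch have no unmet dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the dependencies are circular
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    for step, batch in enumerate(execution_batches(dependencies), start=1):
        print(f"Step {step}: {', '.join(batch)}")
```

The final task in this example is a review step, reflecting the point that outputs need evaluating for strategic alignment and not only for correctness.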

The management layer becomes the critical interface between human intention and machine action. It involves setting clear objectives, establishing guardrails, and creating feedback loops for continuous improvement. The most effective professionals will be those who can build and lead these “hybrid teams” of humans and AI agents, leveraging the unique strengths of each.

This evolution mirrors the history of productivity software. Spreadsheets didn’t replace accountants; they turned many professionals into part-time analysts and modelers. Similarly, AI agents won’t replace managers; they will require everyone to become a part-time conductor of silicon-based talent. The superpower in the coming decade won’t be knowing all the answers yourself, but knowing how to get the best answers from the collective intelligence you orchestrate.

Open-source AI, personal power, and the rise of Clawdbot

Cautiousoptimism • January 27, 2026

Essay•AI•OpenSource•Democratization•Innovation

The rise of open-source AI models is shifting power away from large corporations and toward individuals and smaller teams. This democratization allows for greater customization, privacy, and control over how AI is developed and deployed, challenging the dominance of closed, proprietary systems from major tech companies.

A key example of this trend is the emergence of “Clawdbot,” a project that exemplifies the potential of small, focused teams leveraging open-source tools. By building on publicly available models and datasets, such initiatives can create specialized, high-performance applications without the massive infrastructure traditionally required. This model of development prioritizes agility and specific use-case optimization over scale.

This shift has profound implications for innovation and competition in the tech industry. It lowers the barriers to entry for creating sophisticated AI applications, enabling a more diverse ecosystem of solutions. The personal power granted to developers and entrepreneurs through these tools fosters a new wave of experimentation and could lead to more decentralized technological progress.

The movement also raises important questions about the future of AI governance, safety, and economic models. As capability becomes more distributed, the frameworks for ensuring responsible development and deployment will need to evolve beyond the control of a few centralized entities.

AI

AI Is Scaling Faster Than Anyone Expected

Youtube • a16z • January 26, 2026

AI•Tech•Scaling•Innovation•FutureTrends

The pace of advancement in artificial intelligence is accelerating at a rate that has surpassed even the most optimistic projections from just a few years ago. This exponential scaling is not confined to a single metric but is evident across compute power, algorithmic efficiency, model size, and real-world application deployment.

We are witnessing a compounding effect where improvements in hardware, such as next-generation GPUs and specialized AI chips, directly enable the training of larger, more capable models. Simultaneously, breakthroughs in model architectures and training techniques allow these systems to achieve unprecedented performance with greater efficiency. This creates a virtuous cycle of innovation that is rapidly closing the gap between research concepts and scalable, impactful products.

The implications of this accelerated scaling are profound and multifaceted. For the technology industry, it is reshaping competitive landscapes and creating new paradigms for software development and service delivery. On a societal level, it forces urgent conversations about economic displacement, ethical deployment, and the need for adaptive regulatory frameworks. The speed of change presents both immense opportunities for solving complex global challenges and significant risks that require careful stewardship.

OpenAI in Talks to Raise as Much as $100 Billion

Nytimes • January 29, 2026

AI•Funding•VentureCapital•OpenAI•Microsoft

OpenAI is in advanced discussions to raise a new funding round that could total as much as $100 billion, according to people familiar with the matter. This colossal fundraising effort, which could value the company at $750 billion or more, involves negotiations with major technology partners and global investors, including Microsoft, Nvidia, and several Middle Eastern sovereign wealth funds. If successful, this would represent one of the largest private capital raises in history and solidify OpenAI’s position as the world’s most valuable private AI company, surpassing other tech giants at similar stages.

The Scale and Potential Investors

The sheer magnitude of the proposed $100 billion round underscores the immense capital requirements for leading the global artificial intelligence race. The talks highlight a strategic alignment with key players in the AI ecosystem:

Microsoft, already OpenAI’s largest backer with a previous commitment of over $13 billion, is in discussions to contribute further. This deepens an already integral partnership centered on Azure cloud computing.

Nvidia, the dominant supplier of the advanced AI chips necessary to train large language models, is also a potential participant. An investment would further intertwine the fates of the leading AI hardware and software companies.

Middle Eastern sovereign wealth funds, particularly from the United Arab Emirates and Saudi Arabia, are actively involved. Their participation signals a global scramble for influence and access to cutting-edge AI technology.

Implications of a Historic Valuation

A valuation of $750 billion or more would place OpenAI in a tier far beyond most publicly traded companies. For context, this valuation would be roughly comparable to the market capitalization of major corporations like Tesla or Visa. This potential valuation reflects several key factors:

Anticipated Revenue Growth: Investors are betting on explosive growth from OpenAI’s portfolio, including ChatGPT subscriptions, enterprise API services, and future AI products.

Strategic Necessity: For partners like Microsoft and Nvidia, securing a stronger financial and strategic stake in OpenAI is viewed as critical to maintaining competitive advantage.

Global AI Leadership: The funding would provide OpenAI with the resources to accelerate research towards artificial general intelligence (AGI), while covering the enormous computational costs of model development.

The pursuit of such a vast sum also comes with significant scrutiny and potential challenges. Regulatory bodies in the U.S. and Europe are increasingly examining the competitive dynamics and concentration of power within the AI sector. A deal of this size would likely attract antitrust attention. Furthermore, integrating capital from diverse investors with potentially differing strategic goals - from U.S. tech firms to Middle Eastern states - could introduce complex governance issues.

Ultimately, this fundraising effort is more than a simple infusion of capital; it is a pivotal moment in the industrialization of AI. Success would grant OpenAI unprecedented resources to scale its ambitions, but would also cement its dependencies and obligations to a powerful consortium of global investors, setting the stage for the next phase of technological and geopolitical competition in artificial intelligence.

OpenAI Plans Fourth-Quarter IPO in Race to Beat Anthropic to Market

Wsj • January 29, 2026

AI•Funding•IPOs•GenerativeAI•Competition

OpenAI is reportedly targeting an initial public offering (IPO) in the fourth quarter of this year, setting the stage for a high-stakes race against its primary competitor, Anthropic, to become the first major generative AI startup to go public. This move represents a pivotal moment for the AI industry, signaling a transition from a period of massive private investment to public market scrutiny and validation. The competition underscores the intense rivalry between the two companies, which have been at the forefront of developing and commercializing advanced large language models.

The Race to the Public Markets

The article frames the planned IPO as a strategic maneuver in a broader competitive battle. Key points include:

OpenAI aims to list its shares in Q4, though the timeline could shift based on market conditions and regulatory processes.

Anthropic is also actively preparing for its own public offering, creating a direct race to be first to market.

Being the first to IPO could provide a significant advantage in terms of investor attention, capital influx, and market perception as the industry leader.

Motivations and Strategic Implications

The drive toward an IPO is fueled by several interconnected factors. Firstly, it offers a path to liquidity for early investors and employees after years of soaring private valuations. Secondly, a successful public listing would provide a massive war chest to fund the enormous computational costs and research required for the next generation of AI models, far beyond what private markets can typically provide. Finally, going public is seen as a maturation step, moving from a startup structure to a more permanent, established corporate entity capable of sustaining long-term competition with tech giants like Google and Meta.

Challenges and Considerations

The path to an IPO is not without significant hurdles. Both companies will need to navigate complex regulatory landscapes, particularly concerning AI safety and disclosure requirements. They must also convince public market investors of a clear and sustainable path to profitability, as current operations are supported by immense capital expenditure. Furthermore, the transition to a publicly traded company will subject their strategies, finances, and internal governance to unprecedented levels of transparency and scrutiny.

The outcome of this race will have profound implications for the entire AI ecosystem. A successful IPO by either firm could trigger a wave of public listings from other AI companies, validate current private market valuations, and set benchmarks for how the market values AI technology, research, and future revenue potential. It marks the beginning of a new chapter where the groundbreaking technology must prove its commercial durability in the glare of the public markets.

OpenAI’s $50 Billion Fundraise, AI Advertising Game Theory, Apple’s AI Wearable Pin

Youtube • Alex Kantrowitz • January 26, 2026

AI•Funding•Hardware•OpenAI•Apple

The video discusses several key developments in the artificial intelligence sector, focusing on major funding rounds, strategic market dynamics, and new hardware releases. A central topic is OpenAI’s reported effort to raise a staggering $50 billion, a figure that underscores the immense capital requirements and competitive pressures in the frontier AI race.

This fundraising ambition is set against a backdrop of what the presenter describes as an “AI advertising game theory.” The discussion explores how major tech platforms and AI companies are strategically positioning themselves, with advertising revenue being a critical battleground for monetizing AI-powered services and interfaces.

Another significant topic covered is the launch of Apple’s new AI wearable device, referred to as the “AI Pin.” This product represents a foray into a new form factor for AI interaction, moving beyond screens and into more ambient, always-available computing. The analysis considers its potential impact on the market and how it fits into the broader ecosystem of AI hardware and personal assistants.

The conversation ties these threads together to paint a picture of an industry at an inflection point, where software breakthroughs, hardware innovation, and unprecedented financial scale are converging to define the next era of technology.

Anthropic launches interactive Claude apps, including Slack and other workplace tools

Techcrunch • January 26, 2026

AI•Work•Claude•Productivity•Slack

Anthropic is launching a new feature called Claude Apps, which will allow users to build and run interactive applications directly within the Claude chatbot interface. This move represents a significant step in making AI more actionable and integrated into daily workflows, particularly in professional settings.

The initial rollout includes several pre-built apps designed for workplace productivity. A notable integration is with Slack, where Claude can now be invoked to perform tasks like summarizing threads, drafting responses, or scheduling meetings based on conversation context. Other tools in the launch focus on data analysis, code generation, and project management, enabling users to manipulate information and automate processes without leaving the chat environment.

Developers and technically-inclined users will have the ability to create custom Claude Apps using a provided toolkit. Anthropic envisions this fostering an ecosystem of lightweight, AI-powered utilities that are accessible through natural language commands. The company emphasizes that these apps are designed to be “agentic,” meaning they can take multi-step actions to complete a user’s request, moving beyond simple text generation.

This development positions Claude as more of a collaborative platform than just a conversational AI. By embedding app-like functionality, Anthropic aims to increase user engagement and stickiness, especially among business clients seeking to streamline operations. The launch signals intensifying competition in the AI assistant space, where capabilities are rapidly expanding from answering questions to executing complex tasks.

Meta’s record sales boost shares 10% despite massive spending plans

Ft • January 28, 2026

AI•Funding•Advertising•Earnings•StockMarket

Strong earnings appear to quiet demands from Wall Street to justify up to $135bn in capital expenditures.

Meta’s shares surged more than 10 per cent in after-hours trading on Thursday after the social media giant reported record quarterly sales and profits, despite plans to spend up to $135bn on artificial intelligence infrastructure this year.

The results appeared to quiet demands from Wall Street for the company to justify its massive spending plans, which have weighed on its stock price in recent months.

The company, which owns Facebook, Instagram and WhatsApp, said revenue in the three months to December 31 rose 25 per cent year on year to $40.1bn, beating analysts’ expectations of $39.2bn. Net income more than tripled to $14bn, or $5.33 per share, from $4.65bn, or $1.76 per share, a year earlier.

The strong performance was driven by a rebound in digital advertising, with Meta benefiting from its investments in AI to improve ad targeting and measurement. The company said it had more than 3bn daily active users across its family of apps, up 6 per cent from a year ago.

Meta also said it would increase its share buyback programme by $50bn, a move that is likely to please investors who have been critical of its spending on AI and the metaverse. The company’s capital expenditures are expected to be between $30bn and $35bn this year, up from $27.9bn in 2023.

Microsoft gained $7.6B from OpenAI last quarter

Techcrunch • January 28, 2026

AI•Funding•Microsoft•OpenAI•Earnings

Microsoft, one of OpenAI’s major investors, is benefiting greatly from the AI lab’s growth. The company revealed that its revenue from AI services, heavily powered by OpenAI’s technology, surged to $7.6 billion in the last quarter. This figure represents a significant portion of Microsoft’s overall cloud and productivity segment growth.

The earnings report highlights how Microsoft’s early and substantial investment in OpenAI is paying off. By integrating OpenAI’s models like GPT-4 across its Azure cloud platform, Office 365 suite, and developer tools, Microsoft has created a powerful AI-as-a-service offering. This has attracted a wide range of enterprise customers looking to build and deploy AI applications.

Analysts note that this revenue stream is becoming increasingly critical to Microsoft’s financial performance. It not only boosts the company’s top line but also strengthens its competitive position against other cloud giants like Amazon Web Services and Google Cloud. The partnership allows Microsoft to offer cutting-edge AI capabilities without bearing the full cost of the underlying research and development.

This financial success underscores the strategic value of the multi-billion dollar partnership forged between the two companies. It also raises questions about the future dynamics of the relationship, as both entities continue to evolve in the fast-moving AI landscape.

SoftBank close to agreeing additional $30bn investment in OpenAI

Ft • January 27, 2026

AI•Funding•VentureCapital•OpenAI•SoftBank

SoftBank is close to agreeing an additional $30bn investment in OpenAI, according to people familiar with the matter, in a deal that would cement the Japanese conglomerate’s position as the largest investor in the artificial intelligence company.

The investment would come on top of the more than $30bn SoftBank has already poured into OpenAI, which is behind the ChatGPT chatbot. The new capital would be used to fund OpenAI’s ambitious growth plans, including the development of more advanced AI models and expansion into new markets.

The deal would mark a significant deepening of the relationship between SoftBank founder Masayoshi Son and OpenAI chief executive Sam Altman. Son has been a vocal proponent of AI and has said he believes it will be the most important technology of the 21st century.

The investment is also a sign of the intense competition among tech giants and investors to back leading AI companies. OpenAI is widely seen as one of the frontrunners in the race to develop artificial general intelligence, or AGI, which refers to AI that can perform any intellectual task that a human can.

SoftBank’s Vision Funds have been active investors in AI, but the size of the potential OpenAI investment underscores the group’s conviction in the company’s potential. The deal is not yet finalised and could still fall apart, the people cautioned.

Microsoft Continues to Spend Big on A.I. While Profit Jumps 60%

Nytimes • January 28, 2026

AI•Funding•Microsoft•Earnings•CloudComputing

The company said on Wednesday that revenue in the most recent quarter was $81.3 billion, but its share price dropped more than 5 percent in after-hours trading.

Microsoft’s profit jumped 60 percent in the latest quarter, the company said on Wednesday, as steady sales of its cloud computing services continued to bolster its bottom line. But its stock fell sharply as investors focused on the enormous spending required to sustain its leadership in artificial intelligence.

The results show the financial payoff from the company’s early bet on generative A.I., which can produce text, images and videos. Microsoft has invested $13 billion in OpenAI, the maker of the ChatGPT chatbot, and has moved quickly to put the start-up’s technology to work throughout its business.

The company’s revenue was $81.3 billion in the quarter that ended in December, up 18 percent from a year earlier. Profit hit $27.6 billion, up from $17.2 billion a year earlier. The results beat analyst expectations.

Yet shares of Microsoft fell more than 5 percent in after-hours trading. The decline reflected concerns that the company’s capital expenditures - the money spent on data centers, servers, semiconductors and other equipment to power A.I. - are climbing faster than expected.

Google DeepMind CEO Demis Hassabis on AI’s Next Breakthroughs, What Counts As AGI, And Google’s AI Glasses Bet

Bigtechnology • Alex Kantrowitz • January 29, 2026

AI•Tech•AGI•GoogleDeepMind•Research

AI is evolving fast, but AI researchers still have substantive work ahead of them. Figuring out how to get AI to learn continuously, for instance, is a problem that “has not been cracked yet,” Google DeepMind CEO Demis Hassabis said. Tackling that problem, along with building better memory and finding more efficient use of the context window, should keep Hassabis and his team busy for a while.

In a live Big Technology Podcast recording at Davos, Hassabis spoke about the frontier of AI research, when it’s time to declare AGI, Google’s product plans - ranging from smart glasses to AI coding tools - and plenty more.

A year ago, there were questions about whether AI progress was starting to tail off. Those questions seem to have been settled for now. For us internally, we were never questioning that. Just to be clear, I think we’ve always been seeing great improvements. So we were a bit puzzled by why there was this question in the air.

Some of it was people worried about data running out. And there is some truth in that - has all the data been used? Can we create synthetic data that’s going to be useful to learn from? But actually, it turns out you can wring more juice out of the existing architectures and data. So there’s plenty of room.

I’m definitely a subscriber to the idea that maybe we need one or two more big breakthroughs before we’ll get to AGI. And I think they’re along the lines of things like continual learning, better memory, longer context windows - or perhaps more efficient context windows would be the right way to say it - so, don’t store everything, just store the important things. That would be a lot more efficient. That’s what the brain does. And better long-term reasoning and planning.

Now it remains to be seen whether just scaling up existing ideas and technologies will be enough to do that, or we need one or two more really big, insightful innovations. And probably, if you were to push me, I would be in the latter camp. But I think no matter what camp you’re in, we’re going to need large foundation models as the key component of the final AGI systems.

Venture

A Growing Share Of Seed And Series A Funding Is Going To Giant Rounds

Crunchbase • January 28, 2026

Venture

A dynamic we’re seeing more of at seed and Series A: the number of unusually large rounds has been creeping up in recent quarters. While the overall number of deals has declined, the proportion of funding going to these outsized rounds is growing.

This trend is particularly pronounced in the AI sector, where companies are raising massive amounts of capital to fund compute-intensive model development and talent acquisition. The data shows a clear bifurcation in the market, with a small number of companies capturing a disproportionate share of early-stage investment.

The rise of mega-seed and Series A rounds reflects a shift in venture capital strategy, with investors concentrating larger bets on perceived winners in high-potential categories. This concentration of capital can provide startups with a significant runway advantage but also raises the stakes for both founders and investors.

Market observers note that this pattern may lead to increased pressure for rapid growth and could impact valuation expectations in later funding stages. The long-term implications for the startup ecosystem, including competition for talent and resources, are still unfolding.

Bay Area startups in 2025: $154 billion raised.

linkedin.com • Unknown • January 28, 2026

Venture

Bay Area startups in 2025: $154 billion raised.

The next 10 ecosystems: $118 billion.

Note I’m using Dealroom data, not Carta data here as our international dataset is still growing.

I saw this graphic in an Economist article, and I just had to recreate it. Mostly because they did the thing where they shortened the x-axis to more easily compare ecosystems 2-11 which is always a little silly.

But mostly because I was surprised at the scale difference. More money raised in the Bay than the next 10 cities worldwide? Wild.

Now, there’s one completely valid retort to this analysis. It is: “Sure, in total funding the Bay is far ahead. But if you remove the top 3 companies or so, things look very different.”

Solid objection. Although maybe you should also remove the top 3 companies in every city just to be fair :)

Side note - the Economist published this with the headline “How London became the rest of the world’s startup capital.” So interesting how the framing dictates the feeling!

London - Great or Poor?

X • aakashgupta • January 27, 2026

X•Venture

The Tale of Two Londons: A Startup Boom Amidst a Market Decline

Key Takeaway: London’s tech scene presents a stark paradox: it is a global powerhouse for venture-backed startup creation and funding, yet its public markets are in a steep decline, raising critical questions about the city’s long-term financial ecosystem.

Context: In a thread posted on January 28, 2026, Aakash Gupta presents a data-driven analysis revealing two contradictory narratives about London’s position in the global economy.

📈 Story One: The Unrivaled Startup Hub

The data paints a picture of roaring success for London’s private tech market:

Global Rank: London is the 4th largest startup hub in the world.

Capital Raised: Startups raised a massive $17.7 billion in 2025.

Unicorn Output: It produced more unicorns (privately-held startups valued over $1B) than Berlin, Paris, and Tokyo combined.

This story highlights London’s immense strength in attracting venture capital, fostering innovation, and scaling world-class private companies.

📉 Story Two: The Failing Public Market

In stark contrast, the data for London’s public markets tells a story of erosion:

Stock Exchange Ranking: The London Stock Exchange (LSE) has fallen to 23rd place globally.

This indicates a severe loss of stature, liquidity, and appeal for public companies, suggesting an exodus of listings and a lack of confidence from public market investors.

The Core Nuance & Discussion Point: The thread’s power lies in juxtaposing these two facts. It forces a critical question: How can a city be a dominant engine for creating valuable private companies yet fail to provide them with a robust home for going public and achieving long-term maturity? This disconnect suggests potential issues with the LSE’s regulations, liquidity, investor appetite, or the preference of founders/VCs for exits via acquisition or listing on other exchanges (like the NYSE or NASDAQ).

Most VCs Aren’t Investing Anymore - They’re Trading

Youtube • Carta • January 26, 2026

Venture

The video presents a critical perspective on the current state of venture capital, arguing that the fundamental role of VCs has shifted. The core thesis is that many venture capitalists are no longer primarily engaged in the traditional act of investing - which involves deep company building, long-term support, and strategic guidance - but have instead moved towards a model more akin to trading.

This trading mentality is characterized by a focus on rapid portfolio turnover, chasing short-term valuation marks, and prioritizing quick exits over nurturing sustainable business growth. The behavior is driven by market pressures, fund structures, and the allure of generating paper returns to raise subsequent funds.

The shift from investing to trading has significant implications for the startup ecosystem. Founders may receive capital but lack the patient, hands-on partnership historically associated with venture backing. This dynamic can pressure companies to prioritize optics and growth hacking over building durable fundamentals, potentially distorting innovation and long-term value creation.

What’s Fueling California’s Record Run For Startup Funding?

Crunchbase • Joanna Glasner • January 29, 2026

Venture

In the startup game, betting against California has long been a losing proposition. The three most valuable American public companies all began as Golden State startups. And among today’s most highly valued and capitalized venture-backed companies, the top-ranked were all founded or are headquartered in California.

In recent quarters, the state’s dominance in startup funding has grown even more pronounced. Last year, California companies pulled in 63% of all U.S. startup funding at seed through growth stage, per Crunchbase data. That’s a cyclical high, and well above anything we’ve seen in recent years.

What’s more, California is now so far ahead of any other state that even the notion of a race for first sounds ridiculous. The runner-up, New York, secured just 11% of startup funding last year, followed by Massachusetts with a measly 5%, and Texas with just 4%. In short: We may love the narrative of new tech hubs arising to displace current leaders, but for now it looks closer to the realm of sci-fi than nonfiction.

It’s tempting to characterize California’s strong showing as an AI thing. After all, the largest funding rounds of late are for generative AI pioneers, with San Francisco-based OpenAI alone hauling in $40 billion in a single round in March 2025. In fact, California’s edge in AI funding is even greater than its lead across industries. Last year, companies headquartered in the state pulled in 80% of seed through growth funding for companies in Crunchbase AI categories. It was the highest share since we began following the space.

However, we’ve seen this plotline before. Across decades, Golden State startups have been on the leading edge of virtually every tech moonshot that later turned viable, from microchips to the backbone of the modern internet to the era of scalable apps and social networking. It’s not a coincidence it’s the capital for artificial intelligence as well.

Rather, California has a combination that’s so far proven impossible to top. Most obvious are deep talent pools tied to regional tech giants, labs and universities coexisting alongside an enormous supply of investment capital. Perhaps equally hard to replicate is a startup culture that accepts an uncomfortably high failure and burn rate in the pursuit of world-changing innovations.

Legora CEO, Max Junestrand: $7M ARR in a Day & $200M Raised | Is Anthropic Crushing OpenAI?

YouTube • 20VC with Harry Stebbings • January 26, 2026

Venture


The Latest Carta Data: VC Deals Are Up Only 3%, But $$ Are Up 130%

SaaStr • January 28, 2026

Venture

So almost all of our analyses of venture data for the past 18+ months have said the same thing: dollars into venture are way up in the Age of AI. You can see it all over X and TechCrunch and The Information. But VC deals aren’t up. It’s a massive concentration of capital in the top deals.

The latest Carta data for Q4 2025 shows this trend continuing, and perhaps even accelerating. The number of venture deals on Carta was up just 3% in Q4 2025 vs. Q4 2024. But the dollars invested were up a stunning 130% over the same period.

That is an epic concentration of capital. And it’s not just one quarter. For all of 2025, the number of deals was up just 4% vs. 2024, but the dollars invested were up 90%.

The median deal size is way up, too. The median deal size in Q4 2025 was $4.2 million, up 75% from $2.4 million in Q4 2024. And for all of 2025, the median deal size was $3.5 million, up 59% from $2.2 million in 2024.

This is the Age of AI, and the data is clear: a ton of capital is flooding into venture. But it’s not going to 3x as many startups. It’s going to the same number of startups, or even fewer, just at much higher valuations and in much larger rounds.
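The concentration in the Carta figures above can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes the deal-count and dollar growth rates cover the same set of deals, and the helper name `implied_avg_deal_growth` is hypothetical, not something from Carta or SaaStr.

```python
def implied_avg_deal_growth(deal_growth: float, dollar_growth: float) -> float:
    """Growth in average dollars per deal implied by growth in
    deal count and growth in total dollars (both as fractions)."""
    return (1 + dollar_growth) / (1 + deal_growth) - 1


# Growth rates quoted in the text: deals up 3% / dollars up 130% (Q4),
# deals up 4% / dollars up 90% (full year 2025).
q4 = implied_avg_deal_growth(0.03, 1.30)
fy = implied_avg_deal_growth(0.04, 0.90)

# Median growth recomputed from the quoted median deal sizes.
q4_median = 4.2 / 2.4 - 1
fy_median = 3.5 / 2.2 - 1

print(f"Q4 2025: average deal size up {q4:.0%}, median up {q4_median:.0%}")
print(f"FY 2025: average deal size up {fy:.0%}, median up {fy_median:.0%}")
```

Under these assumptions the implied average deal size grows by roughly 123% in Q4 and 83% for the full year, well ahead of the 75% and 59% median growth. An average that outruns the median is what you would expect if the extra dollars are landing in a small number of very large rounds.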

How private equity’s pioneer in tapping retail money lost its edge

FT • January 27, 2026

Venture

From Switzerland, Partners Group built a $185bn business by serving individual investors. Bigger US rivals have the market in their sights.

Partners Group, the Swiss private equity firm, pioneered a strategy of raising money from wealthy individuals, a move that helped it grow into a $185bn investment powerhouse. For years, this focus on the retail market set it apart from larger US rivals like Blackstone and KKR, which traditionally raised almost all their capital from big institutions such as pension funds.

However, the competitive landscape is shifting dramatically. Those same US giants are now aggressively targeting individual investors, leveraging their vast scale, brand recognition, and distribution networks. This incursion threatens the unique edge that Partners Group has enjoyed for decades.

The firm’s founders, Marcel Erni, Alfred Gantner, and Urs Wietlisbach, started the business in 1996. They identified an opportunity to tap into the savings of Europe’s affluent individuals, who had limited access to private markets. This “retail” strategy proved enormously successful, fueling consistent growth even as the firm also built a substantial institutional investor base.

The challenge now is whether Partners Group can defend its territory. The US firms are pouring resources into building platforms and products designed for individual investors, from private wealth channels to listed vehicles. Analysts note that while Partners Group has a strong head start and deep relationships, the marketing firepower and sheer size of its American competitors pose a significant threat to its future growth and market position.

The private equity giant in Zug facing a test

FT • January 27, 2026

Venture

Partners Group pioneered private equity for individual investors but is now battling its US rivals for market share. The Swiss private equity firm, based in Zug, is facing a critical test as it competes with larger American competitors like Blackstone and KKR for a share of the lucrative market serving wealthy individuals.

The firm’s co-founder and executive chairman, Marcel Erni, along with his partners, built Partners Group into a powerhouse by focusing on “private markets” investments for institutional clients and, more recently, for private banks and their clients. This strategy of democratizing access to private equity, once the preserve of large pension funds and endowments, has been widely emulated.

However, the landscape is shifting. US giants have aggressively moved into the wealth management channel, leveraging their scale, brand recognition, and vast distribution networks. This has intensified competition for the assets of high-net-worth individuals, a segment seen as a major growth driver for the private equity industry.

Partners Group is responding by emphasizing its track record, its focus on direct investments and value creation within its portfolio companies, and its long-standing relationships in Europe. The firm argues that its approach offers a differentiated product compared to the more fund-of-funds and broadly diversified strategies of some rivals.

The outcome of this battle will significantly influence the firm’s future growth and its position in the global private equity hierarchy. Its performance in attracting and retaining capital from individual investors through private banks and other intermediaries is now a key metric watched by analysts and investors alike.

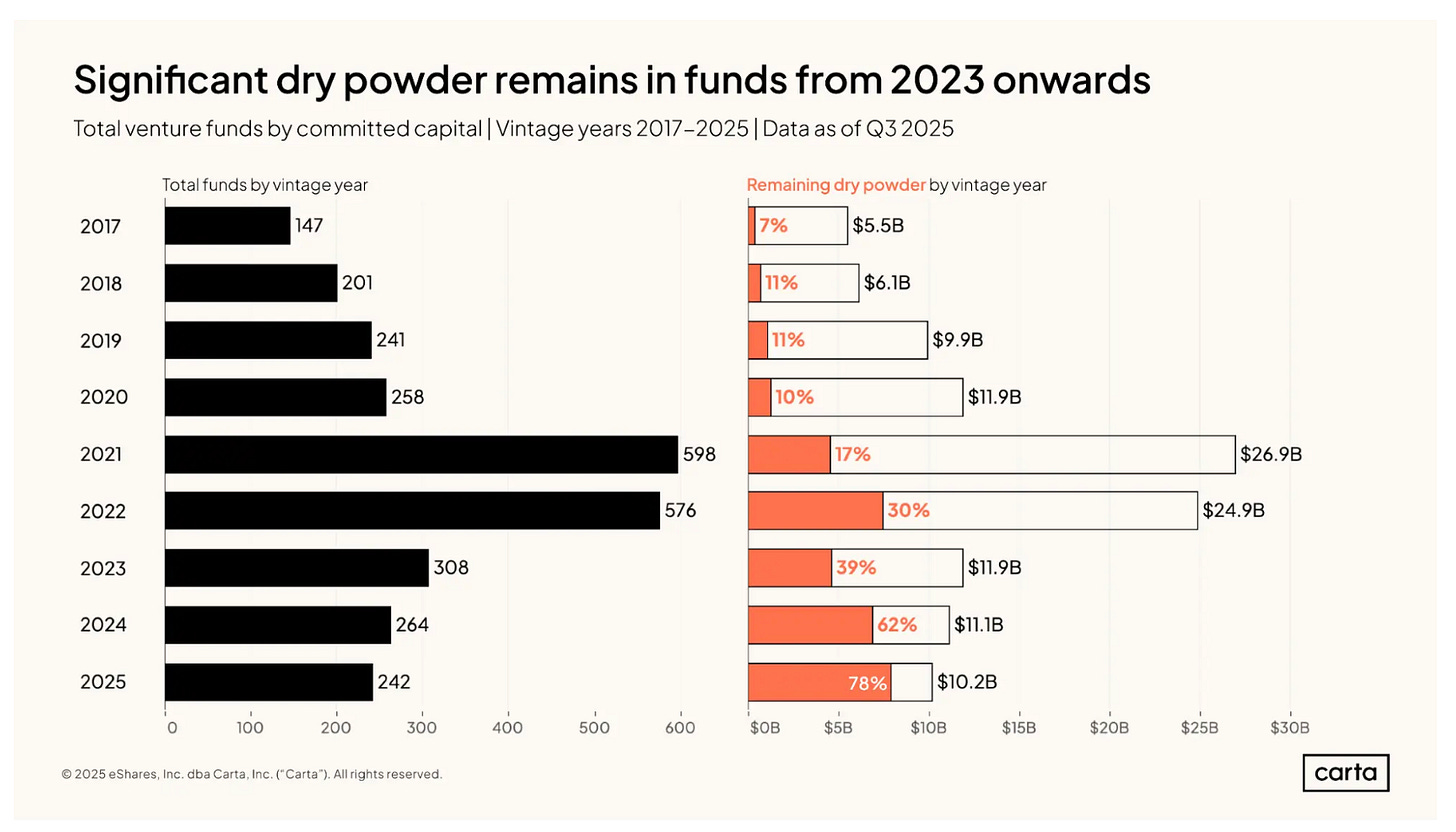

#309: Small Funds vs. Mega Funds / Rounds

The Fund CFO • Doug Dyer • January 29, 2026

Venture

Across conversations with both LPs and GPs these days, one of the most common questions we hear is:

Does fund size still matter for outcomes? Or is that just an old narrative in a new world where the big funds get the big companies, thereby driving top returns?

Looking at the data, the answer hasn’t shifted: fund size does matter. The jury is still out on larger funds hitting top multiples, even though they’re backing top companies at eye-popping valuations. Below are the key points, grounded in current data and recent market developments.

Carta’s Q3 2025 VC Fund Performance data covers roughly 2,800 venture funds across vintages 2017–2025 and shows a simple baseline: most venture funds are under $100M. Micro and small funds make up the majority of managers, while very large funds ($250M+) are relatively few by count.

This matters because industry conversations often focus on outcomes from a small number of large platforms, even though most GPs are operating much smaller vehicles.

When you look at where uninvested capital sits, as expected, newer funds (2023 vintages and beyond) have most of the dry powder. Recent fund vintages have had a higher percentage of small funds by count, yet larger funds have dominated by capital. So while fewer in number, large funds hold a disproportionate share of remaining dry powder.

Larger funds must write larger checks and need fewer, but much bigger, opportunities to deploy capital efficiently.

A recent StrictlyVC post highlights how this plays out at the top end of the market. According to the report, Anthropic is in discussions to raise up to $20B at a reported valuation of around $350B, and OpenAI is exploring a fundraising round of up to $100B, with discussions that could value the company as high as ~$830B.

These are unusually large rounds and they’re useful as illustrations. They show how certain opportunities now require very large pools of capital to participate meaningfully - and how fund size determines both access and impact.
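To see why fund size governs participation, here is a rough sketch of how much of each reported round a fund could fill with its largest check. The round sizes are the "up to" figures quoted above. The three fund sizes and the 10% single-position cap are hypothetical assumptions chosen purely for illustration; they do not come from the Carta or StrictlyVC data.

```python
def round_share(fund_size_usd: float, max_position_pct: float,
                round_size_usd: float) -> float:
    """Fraction of a round a fund could fill with its largest allowed check."""
    max_check = fund_size_usd * max_position_pct
    return min(max_check / round_size_usd, 1.0)


# Reported "up to" round sizes from the text.
ROUNDS = {"Anthropic (up to $20B)": 20e9, "OpenAI (up to $100B)": 100e9}

# Hypothetical fund sizes and concentration limit (assumptions).
FUNDS = {"$100M fund": 100e6, "$1B fund": 1e9, "$10B fund": 10e9}
MAX_POSITION = 0.10  # assume no more than 10% of a fund in one company

for round_name, round_size in ROUNDS.items():
    for fund_name, fund_size in FUNDS.items():
        share = round_share(fund_size, MAX_POSITION, round_size)
        print(f"{fund_name} in {round_name}: {share:.3%} of the round")
```

With these assumed inputs, a $100M fund's maximum $10M check would cover 0.05% of a $20B round, while even a $10B fund would cover only 1% of a $100B round.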

Apple

Apple sales surge 16% on ‘staggering’ iPhone demand

YouTube • CNBC Television • January 29, 2026

Media•Broadcasting•Apple•iPhone•Earnings•StockMarket•Apple

The video report details a significant financial performance milestone for Apple, driven by exceptionally strong consumer demand for its flagship product. The company reported a substantial 16% surge in overall sales, a figure directly attributed to what is described as “staggering” demand for the iPhone. This performance underscores the continued dominance of the iPhone within the global smartphone market and its critical role as the primary revenue driver for the technology giant.

Key Financial Performance and Market Reaction

The reported 16% increase in sales represents a major acceleration in growth, significantly exceeding many analyst expectations and previous quarterly performances. This surge had an immediate and powerful impact on financial markets. Following the earnings announcement, Apple’s stock price experienced a notable jump, rising approximately 7% in after-hours trading. This market reaction reflects strong investor confidence in the company’s current trajectory and future profitability, rewarding the better-than-anticipated results.

Analysis of Demand Drivers and Product Strategy

The characterization of iPhone demand as “staggering” suggests the successful launch and consumer reception of a new model or series. This level of demand typically points to a compelling combination of factors, including significant hardware upgrades, innovative new features, and effective marketing. The performance indicates that Apple has successfully navigated potential challenges such as market saturation and economic headwinds, convincing a large number of consumers to upgrade their devices. The strong sales figures validate the company’s product strategy and pricing power, demonstrating that its brand loyalty and ecosystem remain robust.

Broader Implications for the Tech Sector

Apple’s outsized performance has broader implications for the technology sector and the global economy. As one of the world’s most valuable companies, its financial health is a bellwether for consumer electronics spending, semiconductor demand, and overall tech investor sentiment. A surge of this magnitude can positively influence supply chain partners, app developers within the iOS ecosystem, and related technology stocks. Furthermore, it highlights the concentration of consumer tech spending on premium, high-margin devices even in a complex economic environment, setting a benchmark for competitors.

Apple buys Israeli start-up Q.AI for close to $2bn in race to build AI devices

FT • January 29, 2026

AI•Tech•Mergers•Apple•EmotionalAI•Apple

In a major strategic move to bolster its artificial intelligence capabilities for future devices, Apple has acquired the Israeli start-up Q.AI for a sum close to $2 billion. This acquisition represents one of Apple’s largest in the AI space and signals a significant escalation in the global race to develop more intuitive and emotionally aware consumer technology. The deal underscores Apple’s commitment to integrating advanced, on-device AI that can operate independently of cloud servers, a key differentiator in its competition with rivals like Google, Microsoft, and Samsung.

Strategic Rationale and Q.AI’s Technology

The acquisition is centered on Q.AI’s pioneering work in developing technology that can analyze and interpret human facial expressions and emotional cues. Unlike many AI systems focused solely on voice or text, Q.AI’s secretive research has reportedly made breakthroughs in real-time, on-device emotional recognition. This technology is seen as a critical component for the next generation of personal devices, enabling more natural human-computer interaction.

On-Device Processing: A core appeal of Q.AI’s technology for Apple is its ability to perform complex analysis directly on a device, such as an iPhone or a future wearable. This aligns perfectly with Apple’s longstanding emphasis on user privacy and data security, as sensitive biometric data would not need to be transmitted to external servers.

Competitive Edge: The move is a direct response to competitors who have been more publicly aggressive in generative AI. By securing Q.AI, Apple gains a potentially decisive edge in a different but equally crucial facet of AI: contextual and emotional understanding, which could redefine user interfaces and accessibility features.

Financial and Market Implications

The nearly $2 billion price tag highlights the immense value placed on cutting-edge AI talent and intellectual property. The deal is expected to involve not only the technology but also the absorption of Q.AI’s team of researchers and engineers, significantly expanding Apple’s AI R&D footprint in Israel, which is already a major hub for the company. This investment indicates that Apple is willing to spend heavily to ensure it is not merely a follower in the AI revolution but a leader defining its application in personal hardware.

Future Applications and Industry Impact

The integration of Q.AI’s technology could lead to transformative features in Apple’s product ecosystem. Potential applications include:

Enhanced Accessibility: Devices that can better understand user frustration, confusion, or needs through facial cues, offering more proactive and tailored assistance.

Revolutionized Communication: More expressive and responsive avatars or video call features that convey emotional subtleties.

Advanced Health Monitoring: Subtle analysis of facial markers for signs of fatigue, stress, or other health indicators, complementing existing health-tracking features.

Personalized User Experiences: Devices that adapt their behavior, notifications, and interactions based on the perceived emotional state of the user.

This acquisition places Apple at the forefront of a new wave of “empathetic computing.” It moves the industry battleground beyond raw computational power and language models toward creating devices with a form of emotional intelligence. The success of this integration will be closely watched, as it could set a new standard for how seamlessly and intuitively humans interact with technology on a daily basis. The deal reaffirms that the future of AI is not just in the cloud, but in the personalized, private, and perceptive devices in our hands.

With Apple’s new Creator Studio Pro, AI is a tool to aid creation, not replace it

TechCrunch • January 28, 2026

AI•Work•Creativity•Apple•Software•Apple