A reminder for new readers. That Was The Week collects my selected reading on critical issues in tech, startups, and venture capital. I select the articles because they are of interest to me. The selections often include things I entirely disagree with. But they express common opinions, or they provoke me to think. The articles are snippets, sometimes long to convey why they are of interest. Click on the headline to go to the original or the ‘More’ link at the bottom of each piece. I express my point of view in the editorial and the weekly video below.

Hats Off To This Week’s Contributors: @ItsUrBoyEvan, @andrewchen, @tedgioia, @profgalloway, @eladgil, @bgurley, @altcap, @DrJimFan, @PeterJ_Walker, @sarahpereztc, @jonathanvanian.bsky.social, @blunderchief, @ChrisHarveyEsq, @Apple, @packyM

Contents

Editorial: Don’t Stop Thinking About Tomorrow

Packy McCormick on Why Venture Capital is the Best Asset Class - Venture Capital & Free Lunch

Editorial: Don’t Stop Thinking About Tomorrow

“Yesterday’s Gone” is the next part of the song, repeated twice. That’s about right, based on this week’s developments.

Last week, we saw that OpenAI’s Sora could produce video. This week, we learned that it can determine sounds to accompany the video. I know that because Dr. Jim Fan (Research Manager and Lead of Embodied AI at NVIDIA’s GEAR Group, creating foundation models for agents, robotics, and gaming) posted examples on X. Here is one.

His full post, or rather two of his posts joined together, is below in the reading.

Combine that with the other essays, and a picture of a bifurcation in tech trends begins to be obvious.

@ItsUrBoyEvan talks about the overarching impact of AI and vision-based spatial computing (the Vision Pro in particular) not needing a killer app because the canvas itself is the innovation, and everything will move to them.

The killer app, when it’s defined as a single app that drives new hardware adoption, is kinda, maybe, bullshit. In my research, there doesn’t appear to be a persistent pattern of this phenomenon. I’ve learned, instead, that whether a device will sell is just as much a question of hardware and developer ecosystem as that of killer application.

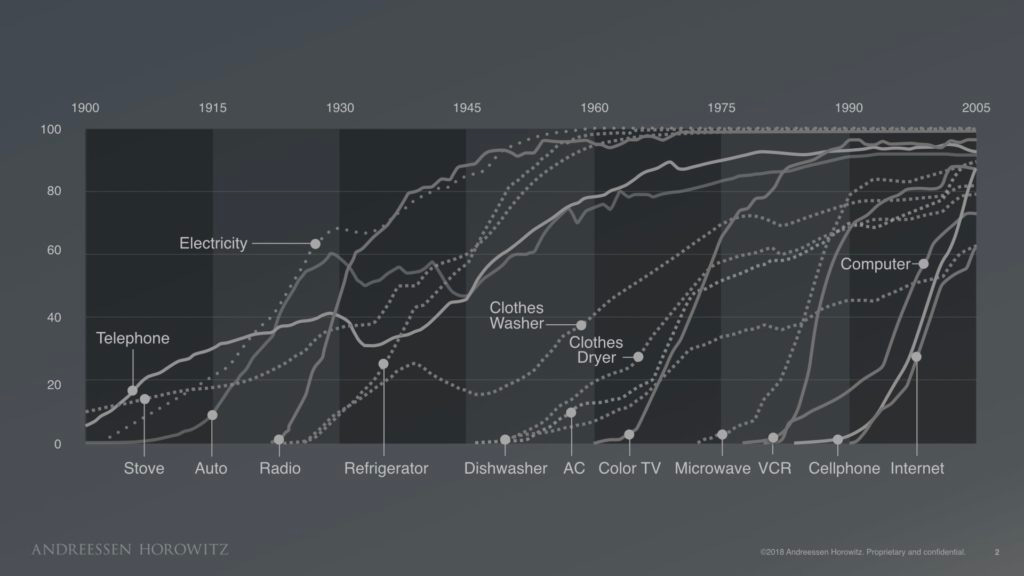

@andrewchen from A16Z has a short essay on X about the end of the mobile S curve and the beginning of the AI one.

Today, founders find themselves at a crossroads. Those who have hard-earned secrets in mobile apps will still find opportunities to use those secrets, but need to be mindful of the stage of S-curve they reside. They will have to be even more clever, do even more what’s new, in order to break out. And for those who stand at the beginning of the AI revolution, it’s easy to get started. But it’s hard to ride the chaos and stay on top. And even harder when the novelty inevitably stops, as only the best products will win.

The idea that both share is that the beginning of new things has far fewer requirements to participate in than the later stage, which contrasts with @tedgioia’s essay on the state of culture.

Gioia notes that we are collectively moving to behaviors requiring less attention, and he characterizes this as post-entertainment or dopamine culture. His view:

Even the dumbest entertainment looks like Shakespeare compared to dopamine culture. You don’t need Hamlet, a photo of a hamburger will suffice. Or a video of somebody twerking, or a pet looking goofy.

Of course, he is right about that phenomenon, but I would pick at it and point out that great culture coexists with it. I will go to Berkeley Rep this weekend to see a play; my TikTok use does not preempt it. But more importantly, mainstream media and entertainment now have a very high bar to get attention, and late S-curve challenges will likely result in higher-quality content to attract us.

@ProfGalloway compounds Gioia’s point with a “Dopa” essay about the gambling that was presented alongside the Super Bowl on TV. He dives deep into the rise of gambling in live sport (and it won’t stop there).

Gambling has moved from something you do in isolation — at a geographically remote casino, using an illegal bookie, or socially in the context of a special event — to something available 24/7 on your phone. That has predictably accelerated the gambling business’s growth and brought billions into the sector. Just as banking’s move to smartphones morphed a tech-bro incel panic room into a bank run, our frictionless proximity to wagering is having effects we haven’t fully witnessed … yet

These essays lay out two alternate universes. One is where you awake excited about your day in the morning, and another is where you wake up afraid to read about what comes next.

Both things are happening at the same time. But history is cruel to “end of s curve” ecosystems and very kind to “start of s curve” trends. That’s why “Don’t Stop Thinking About Tomorrow, Yesterday’s Gone” summarizes my conclusions after this week’s developments. Robotics seems quite close now, also.

Essays of the Week

AI and the Vision Pro Don’t Need a Killer App

Software that drives hardware adoption is rarer than you think

FEBRUARY 22, 2024

If you’ve spent enough time in startups, you’ve likely heard of the concept of the killer app: an application so good that people will buy new, unproven hardware just for the chance to use the software. It is a foundational idea for technology investors and operators that drives a whole host of investing and building activity. Everyone is on the hunt for the one use case so magical, so powerful that it makes buying the device worth it.

People are hunting with particular fervor right now as two simultaneous technology revolutions—AI and the Apple Vision Pro—are underway. If a killer app emerges, it’ll determine who gains power in these platform shifts. And because our world is defined by tech companies, it will also determine who is going to have power in our society in the next decade.

But here’s the weird thing: The killer app, when it’s defined as a single app that drives new hardware adoption, is kinda, maybe, bullshit. In my research, there doesn’t appear to be a persistent pattern of this phenomenon. I’ve learned, instead, that whether a device will sell is just as much a question of hardware and developer ecosystem as that of killer application.

If we want to build, invest in, and analyze the winner of the next computing paradigm, we’ll have to reframe our thinking around something bigger than the killer app—something I call the killer utility theory. But to know where we need to go, we need to know where to start.

Where did the killer app come from?

Analysts have been wrestling with the killer app for nearly 40 years. In 1988, PC Week magazine coined the term “killer application” when it said, “Everybody has only one killer application. The secretary has a word processor. The manager has a spreadsheet.” One year later, writer John C. Dvorak proposed in PC Magazine that you couldn’t have a killer app without a fundamental improvement in the hardware: “All great new applications and their offspring derive from advances in the hardware technology of microcomputers, and nothing else. If there is no true advance in hardware technology, then no new applications emerge.”

This idea—in which the killer app is tightly coupled with new hardware capabilities—has been true in many instances:

VisiCalc was a spreadsheet program that was compatible with the Apple II. When it was released in 1979, it was a smashing success, with customers spending $400 in 2022 dollars for the software and the equivalent of $8,000–$40,000 on the Apple II. Imagine software so good you would spend 10x on the device just to use it.

Bloomberg Terminals and Bloomberg Professional Services are specialized computers and data services for finance professionals. A license costs $30,000, and the division covering these products pulls in around $10 billion a year.

Gaming examples abound, ranging from Halo driving Microsoft Xbox sales to The Breath of the Wild moving units of the Nintendo Switch. Every generation of consoles will have something called a “system seller,” where the game is so good that people will spend money to access it.

So this type of killer app—software that drives hardware adoption—does exist, and it has emerged frequently enough to be labeled a consistent phenomenon. However, if you are going to bet your career or capital on this idea, it is important to understand its weaknesses. There is one crucial device that violates this rule—the most important consumer electronic device ever invented. How do we explain the iPhone?

How does Apple violate the killer app theory?

When Steve Jobs launched the iPhone in 2007, he pitched it as “the best iPod we’ve ever made.” He also argued (incorrectly) that “the killer app is the phone.” Internet connectivity, the heart of the iPhone’s long-term success, was only mentioned about 30 minutes into the speech! And when it first came to market, it didn't even allow third-party apps. Jobs was sure that Apple could make better software than outside developers could. (Wrong.)

Even when he was finally convinced that the App Store should exist, it launched one year later with only 500 apps. By comparison, the Apple Vision Pro already has 1,000-plus native apps, just a few weeks after its release.

To figure out why people bought the iPhone, I read through old Reddit posts, blogs, and user forums. I tried to find an instance of someone identifying the iPhone’s killer app at launch. The answers were wide-ranging, from gaming apps to productivity use cases to even a flashlight app, but there wasn’t one consensus pick.

..More

The mobile S-curve ends, and the AI S-curve begins

There’s never been a bigger contrast between mobile and AI — it’s the end of one technology curve, and the start of the other. It’s been 15 years since the App Store was launched, while the generative AI revolution started merely 18 months ago. Mobile is now dominated by a duopoly of two giants, administering a collection of <100 apps that never seem to leave the charts (and a long tail that doesn’t matter much). This duopoly’s only true opponents are world governments. The brand new AI ecosystem, on the other hand, is in a state of utter chaos with new startups, technologies, and papers launched every week. One generative AI startup looks to be in the lead one week, and a few weeks later, their entire approach is in crisis — just look at AI video, recently! New approaches like open source, new types of hardware, regulation, and much more threaten to upend the stack rank every few quarters — isn’t that exciting!?

Every startup is building on top of an existing S-curve. Perhaps you’re building in mobile, which is near the tail end of the curve. Or perhaps you’re working on spatial computing, web3, AI, or something near the beginning. This will color your product approach, how you take your startup to market, how investors think about funding in the sector, and so on.

THE "IT WORKS" FEATURE

When startups are early to an S-curve, as we’re seeing with AI right now, the act of building a product is often hard, but the growth can be easy. In the early days of the web, developers did not have the benefit of open source, cloud computing, high-level programming languages, and so on. The sheer act of building something like eBay (something that we would now consider relatively simple) was a miracle in itself. The most important feature that a website needed to have was the “It Actually Works” feature. That is, the technology was difficult enough and the developer base small enough that delivering an actual working product was enough to attract users without much marketing or growth. You might know that today's AI landscape is perhaps not that different. You’ve now seen new startups posting a demo video or some test output and immediately there are thousands of users on their waitlist ready to try their product. In the age of social media, I think this has been accelerated for AI products that generate visual output — whether video, photos, 3D assets or otherwise — because it’s so easily shareable.

In the early days of mobile, it was often said that apps competed with boredom. Apps competed with waiting in line, sitting on the toilet, and all the other boring bits of time when we’d rather be doing something else. But 15 years later, a new app has to compete with the most engaging experiences ever built — whether that’s an infinite stream of short videos, or an endless scroll of beautiful travel photos, or something else. As mobile hits the plateau of its S-curve, it’s not enough to simply “work.” In fact, mobile apps have to be very, very different from anything that has come before them to have a chance of success. Early S-curve products can fast follow and exercise Steve Jobs’s famous quote “good artists copy, great artists steal.” Late S-curve products have to contend with significantly higher user expectations of what constitutes a minimum viable product. Late S-curve startups are better off trying to create new categories, rather than fast-following, because at least new product categories might invent a small minimum viable product.

THE NOVELTY EFFECT

Novelty fuels early S-curve products. This desire for novelty means that whenever there are major new features or upgrades to underlying AI models, you see a rush of new users without much marketing effort. While all of this novelty drives high-level growth numbers, I will also argue that in the early phase of an S-curve, there is both high growth and high churn. During this phase, growth is easy because of word-of-mouth from highly enthusiastic early adopters. However, I would warn you that eventually low retention will catch up to even the fastest growing products as novelty effects start to die down. Novelty effects kick in because, well, humans are kind of dopamine fiends. When we see an amazing AI-generated image for the first time, it’s like WOWWOWOW — followed by sharing, commenting, and forwarding to friends. But show people a cool AI photo a few dozen times, and we get used to it. We need even more to be entertained. Thus, sharing goes down, and so does overall engagement. Every product on an S-curve is in a rapid ascent up the middle of the S, but as the plateau starts to hit, the novelty effects start to unwind. At some point, retention will be king, and only the most retentive products will survive.
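To make the "high growth and high churn" point concrete, here is a minimal sketch of how a novelty spike plays out under two retention rates. Everything in it is an assumption for illustration: the monthly signup figures, the flat retention rates, and the function name `simulate_users` are hypothetical and do not come from the essay.

```python
def simulate_users(new_users_per_month, monthly_retention):
    """Track active users when a fixed share of last month's users stays."""
    active = 0.0
    history = []
    for signups in new_users_per_month:
        # Carry over the retained share of existing users, then add new signups.
        active = active * monthly_retention + signups
        history.append(round(active))
    return history


# Hypothetical signups: a novelty-driven spike that fades over twelve months.
signups = [1000, 4000, 8000, 6000, 3000, 1500, 800, 400, 200, 100, 50, 25]

leaky = simulate_users(signups, monthly_retention=0.5)   # half the users leave each month
sticky = simulate_users(signups, monthly_retention=0.9)  # one in ten leaves each month

print("leaky :", leaky)
print("sticky:", sticky)
```

With identical signups, both products look alike during the spike, but once the novelty fades the low-retention product sheds most of its peak audience while the high-retention one keeps the majority of it. That is roughly the sense in which retention ends up being king.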

Investors focus on the early part of the S-curve because the first few years should usher in big upticks simply by being in the market. It’s easier to deliver an “it works” feature than to compete in red oceans of established products, and founders can get new users and plenty of growth, while trading off the fact it might be a bit harder to build a v1. This is why venture capital money often pours into a new sector like AI, causing many startups to follow the money and pivot into the category. Is this opportunistic? Yes. Is it smart? Probably also yes. The best markets often have tons of competition, are hot and very dynamic, and this is often seen as a good thing. If you’re in a market by yourself with no competition, then perhaps it’s not that great of a market after all?

THE LATE S-CURVE

The skills needed to succeed in a late S-curve market, in contrast, are very different. And they translate to a different set of dynamics that investors will evaluate.

..More

The State of the Culture, 2024

Or a glimpse into post-entertainment society (it's not pretty)

TED GIOIA, FEB 18, 2024

The President delivers a ‘State of the Union’ Speech every year, but that’s a snooze. Just look at your worthy representatives struggling to keep their eyes open. That’s because they’ve heard it all before.

We have too. Not much changes in politics. Certainly not the candidates.

There’s more variety at my local gas station, where at least I get to choose from three types of fuel and five flavors of Big Gulp.

So forget about politics. All the action now is happening in mainstream culture—which is changing at warp speed.

That’s why we need a “State of the Culture” speech instead. My address last year was quoted and cited, and was absolutely true back then—but it’s already as obsolete as the ChatGPT-1 help desk at the Bored Ape Yacht Club.

In fact, 2024 may be the most fast-paced—and dangerous—time ever for the creative economy. And that will be true, no matter what happens in November.

So let’s plunge in.

I want to tell you why entertainment is dead. And what’s coming to take its place.

If the culture was like politics, you would get just two choices. They might look like this.

Many creative people think these are the only options—both for them and their audience. Either they give the audience what it wants (the entertainer’s job) or else they put demands on the public (that’s where art begins).

But they’re dead wrong.

Maybe it’s smarter to view the creative economy like a food chain. If you’re an artist—or are striving to become one—your reality often feels like this.

Until recently, the entertainment industry has been on a growth tear—so much so, that anything artsy or indie or alternative got squeezed as collateral damage.

But even this disturbing picture isn’t disturbing enough. That’s because it misses the single biggest change happening right now.

We’re witnessing the birth of a post-entertainment culture. And it won’t help the arts. In fact, it won’t help society at all.

Even that big whale is in trouble. Entertainment companies are struggling in ways nobody anticipated just a few years ago.

Consider the movie business:

Disney is in a state of crisis—where everything is shrinking (except the CEO’s paycheck).

Paramount just laid off 800 employees—and wants to find a new owner.

Universal is now releasing movies to streaming after just 3 weeks in theaters.

Warner Bros actually makes more money canceling films than releasing them.

The TV business also hit a wall in 2023. After years of steady growth, the number of scripted series has started shrinking.

Music may be in the worst state of them all. Just consider Sony’s huge move a few days ago—investing in Michael Jackson’s song catalog at a valuation of $1.2 billion. No label would invest even a fraction of that amount in launching new artists.

In 2024, musicians are actually worth more old than young, dead than alive.

This raises the obvious question. How can demand for new entertainment shrink? What can possibly replace it?

But something will replace it. It’s already starting to happen.

Here’s a better model of the cultural food chain in the year 2024.

Dopa Bowl

Scott Galloway @profgalloway

Published on February 16, 2024

Other than AI, gambling may be the fastest-growing $10-billion-plus industry in the U.S. A record 43 million Americans (1 in 6 people over the age of 18) bet on this year’s Super Bowl, wagering a total of $23 billion, a 35% jump from last year’s total. Next month, twice that many could bet on the NCAA men’s basketball tournament.

The media loves to catastrophize about AI, but, relative to the upside, the risks may be greater with gambling. There’s little chance gambling will make health care and education more accessible. And while the potential downsides of AI make for better clickbait, the risks of gambling are known and serious. However, the externalities feel less urgent. Why? Because the costs are (mostly) levied on young men and (mostly) tallied in isolation. Our nation has decided that problems facing almost every special interest group are, correctly, deemed issues that warrant study, empathy, and investment. But the issues affecting young men are viewed as a function of their lack of character that could be solved if they just “got their act together” or “were more in touch with their feelings.”

Leaving Las Vegas

In 2018 the Supreme Court struck down the federal ban on sports gambling, and 38 states have now legalized some form of it. It’s now an industry with annual revenue of $7.5 billion. Other online forms of gambling, operating in a legal gray area, are also growing quickly. But this hasn’t led to an upsurge in local business activity. Who has swallowed all this revenue? One guess: the tech industry. If you’ve watched any televised sports in the past few years, you’ve probably caught Kevin Hart, Jamie Foxx, and Charles Barkley hawking online gambling apps. They’re earning their money. DraftKings and BetMGM have seen their revenue increase 5x and 11x since 2020. FanDuel’s Irish parent, Flutter, listed on the NYSE last month, where it garnered a $37 billion valuation — more than Kia or Kroger.

Gambling has moved from something you do in isolation — at a geographically remote casino, using an illegal bookie, or socially in the context of a special event — to something available 24/7 on your phone. That has predictably accelerated the gambling business’s growth and brought billions into the sector. Just as banking’s move to smartphones morphed a tech-bro incel panic room into a bank run, our frictionless proximity to wagering is having effects we haven’t fully witnessed … yet.

The Las Vegas of … Las Vegas (Wall Street)

In truth, online gambling is larger than these figures suggest. Because there’s another industry we don’t list as “gambling” that we should. Online stock trading was a $10.7 billion industry in 2023. A meaningful portion of that is people managing long-term investments and providing growth capital for companies. However, the bulk of revenue generation comes from churn/trading. Customers use these apps to get in and out of stocks and buy elaborate, high-leverage derivative instruments. Online stock trading app Robinhood built its business on a stack of illegal and suspect business practices, paying fine after fine on the way to its IPO. Studies show that 97% of day traders lose money, but the house wins 100% of the time: Robinhood “crushed earnings and revenue estimates” for Q4, reporting $471 million in revenue on February 13, a 24% year-over-year increase. In the press release touting the results, the company said it was “building features for active traders.” There is upside here: Robinhood has introduced a new cohort to the markets, a good thing, and the best regulation is life lessons. But let’s call this what it is, a gambling app.

Things I Don't Know About AI

The more I learn about AI markets, the less I think I know. I list questions and some thoughts.

FEB 21, 2024

In most markets, the more time passes the clearer things become. In generative AI (“AI”), it has been the opposite. The more time passes, the less I think I actually understand.

For each level of the AI stack, I have open questions. I list these out below to stimulate dialog and feedback.

LLM Questions

There are in some sense two types of LLMs - frontier models - at the cutting edge of performance (think GPT-4 vs other models until recently), and everything else. In 2021 I wrote that I thought the frontier models market would collapse over time into an oligopoly market due to the scale of capital needed. In parallel, non-frontier models would be more commodity / pricing driven and have a stronger open source presence (note this was pre-Llama and pre-Mistral launches).

Things seem to be evolving towards the above:

Frontier LLMs are likely to be an oligopoly market. Current contenders include closed source models like OpenAI, Google, Anthropic, and perhaps Grok/X.ai, and Llama (Meta) and Mistral on the open source side. This list may of course change in the coming year or two. Frontier models keep getting more and more expensive to train, while commodity models drop in price each year as performance goes up (for example, it is probably ~5X cheaper to train GPT-3.5 equivalent now than 2 years ago)

As model scale has gotten larger, funding increasingly has been primarily coming from the cloud providers / big tech. For example, Microsoft invested $10B+ in OpenAI, while Anthropic raised $7B between Amazon and Google. NVIDIA is also a big investor in foundation model companies of many types. The venture funding for these companies in contrast is a tiny drop in the ocean in comparison. As frontier model training booms in cost, the emerging funders are largely concentrated amongst big tech companies (typically with strong incentives to fund the area for their own revenue - ie cloud providers or NVIDIA), or nation states wanting to back local champions (see eg UAE and Falcon). This is impacting the market and driving selection of potential winners early.

It is important to note that the scale of investments being made by these cloud providers is dwarfed by actual cloud revenue. For example, Azure from Microsoft generates $25B in revenue a quarter. The ~$10B OpenAI investment by Microsoft is roughly 6 weeks of Azure revenue. AI is having a big impact on Azure revenue recently. Indeed Azure grew 6 percentage points in Q2 2024 from AI - which would put it at an annualized increase of $5-6B (or 50% of its investment in OpenAI! Per year!). Obviously revenue is not net income but this is striking nonetheless, and suggests the big clouds have an economic reason to fund more large scale models over time.

In parallel, Meta has done outstanding work with Llama models and recently announced a $20B compute budget, in part to fund massive model training. I posited 18 months ago that an open source sponsor for AI models should emerge, but assumed it would be Amazon or NVIDIA with a lower chance of it being Meta. (Zuckerberg & Yann LeCun have been visionary here).
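The back-of-envelope math in that paragraph can be checked in a few lines. This is only a rough sketch using the figures quoted above. It assumes a 13-week quarter and, as a simplification, applies the 6 percentage points of AI-driven growth to the current $25B quarterly figure rather than to the prior-year base, so it lands at the top of the $5-6B range. The variable names are illustrative.

```python
# Figures quoted in the text above.
azure_quarterly_revenue = 25e9   # "$25B in revenue a quarter"
openai_investment = 10e9         # "~$10B OpenAI investment"
ai_growth_share = 0.06           # "6 percentage points ... from AI"

# Assumption: 13 weeks per quarter.
weeks_per_quarter = 13
weekly_revenue = azure_quarterly_revenue / weeks_per_quarter
weeks_of_revenue = openai_investment / weekly_revenue

# Assumption: the 6 points are applied to the current quarterly run rate.
annualized_ai_revenue = azure_quarterly_revenue * ai_growth_share * 4
share_of_investment = annualized_ai_revenue / openai_investment

print(f"Investment equals about {weeks_of_revenue:.1f} weeks of Azure revenue")
print(f"AI-driven revenue: about ${annualized_ai_revenue / 1e9:.1f}B per year")
print(f"That is about {share_of_investment:.0%} of the investment, per year")
```

Under these assumptions the investment works out to a little over five weeks of Azure revenue, and the AI-driven increase to somewhat more than half of the investment per year, which is in line with the rounded figures in the text.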

Questions on LLMs:

Are cloud providers king-making a handful of players at the frontier and locking in the oligopoly market via the sheer scale of compute/capital they provide? When do cloud providers stop funding new LLM foundation companies versus continuing to fund existing? Cloud providers are easily the biggest funders of foundation models, not venture capitalists. Given they are constrained in M&A due to FTC actions, and the revenue that comes from cloud usage, it is rational for them to do so. This may lead / has led to some distortion of market dynamics. How does this impact the long term economics and market structure for LLMs? Does this mean we will see the end of new frontier LLM companies soon due to a lack of enough capital and talent for new entrants? Or do they keep funding large models hoping some will convert on their clouds to revenue?

Do OSS models flip some of the economics in AI from foundation models to clouds? Does Meta continue to fund OS models? If so, does e.g. Llama-N catch up to the very frontier? A fully open source model performing at the very frontier of AI has the potential to flip a subportion of the economic share of AI infra from LLMs towards cloud and inference providers, and to shift revenue away from the other LLM foundation model companies. Again, this is likely an oligopoly market with no singular winner (barring AGI), but has implications on how to think about the relative importance of cloud and infrastructure companies in this market (and of course both can be very important!).

One of the most brilliant things in the Llama2 terms of use is the open commercial use of the license if you have fewer than 700 million users[1]. This obviously prevents some large competitors from using their models. But it also means if you are a big cloud provider you need to pay a license to Meta for Llama, which Microsoft has already done. This creates an interesting long term way for Meta to control (& monetize) Llama despite being open source.

How do we think about speed and price vs performance for models? One could imagine extremely slow incredibly performant models may be quite valuable if compared to normal human speed to do things. The latest largest Gemini models seem to be heading in this direction with large 1 million+ token context windows a la Magic, which announced a 5 million token window in June 2023. Large context windows and depth of understanding can really change how we think about AI uses and engineering. On the other side of the spectrum, Mistral has shown the value of small, fast and cheap to inference performant models. The 2x2 below suggests a potential segmentation of where models will matter most.

How do architectures for foundation models evolve? Do agentic models with different architectures subsume some of the future potential of LLMs? When do other forms of memory and reasoning come into play?

Do governments back (or direct their purchasing to) regional AI champions? Will national governments differentially spend on local models a la Boeing vs Airbus in aerospace? Do governments want to support models that reflect their local values, languages, etc? Besides cloud providers and global big tech (think also e.g. Alibaba, Rakuten etc) the other big sources of potential capital are countries. There are now great model companies in Europe (e.g. Mistral), Japan, India, UAE, China and other countries. If so, there may be a few multi-billion AI foundation model regional companies created just off of government revenue.

What happens in China? One could anticipate Chinese LLMs to be backed by Tencent, Alibaba, Xiaomi, ByteDance and others investing in big ways into local LLM companies. China’s government has long used regulatory and literal firewalls to prevent competition from non-Chinese companies and to build local, government supported and censored champions. One interesting thing to note is the trend of Chinese OSS models. Qwen from Alibaba for example has moved higher on the broader LMSYS leaderboards.

What happens with X.ai? Seems like a wild card.

How good does Google get? Google has the compute, scale, and talent to make amazing things and is organized and moving fast. Google was always the world’s first AI-first company. Seems like a wild card.

Video of the Week

Bill Gurley & Brad Gerstner | NVDA, Chips, AI Compute Build Out, AI Impact on Big Tech

Open Source bi-weekly convo w/ Bill Gurley and Brad Gerstner on all things tech, markets, investing & capitalism. This week, they discuss earnings, inflation, interest rates, the impact of AI on Big Tech, NVDA, chips, fabs, Altman’s $7T to meet AI Compute needs, & more. Enjoy another episode of Bg2.

AI of the Week

NVIDIA Launches RTX, Personalized GPT Models

Interested in creating your own GPT LLM model based on your own files? Imagine one that learns from your docs, videos, notes, and other pieces of data! Well, NVIDIA is ready to make this a reality with Chat with RTX.

Leveraging TensorRT-LLM, RTX acceleration, and retrieval-augmented generation, users are able to personalize a chatbot that answers their questions and fulfills creative demands. Currently, Chat with RTX is a free tech demo from NVIDIA. In all, the app is tailored to your specific needs and runs directly on your RTX-powered Windows PC.

Now, what do you need to run Chat with RTX on your own system? Well, the system requirements are modest. According to the announcement, users need an RTX 2080 Ti or better graphics card, 32GB of RAM, and 10GB of free disk space.

As you can see, not too bad and pretty much in reach for most users. So once set up, feed Chat with RTX your own text, code, or image files to train your unique LLM. The more data you provide, the sharper its responses become.

Now wait for a moment, and imagine a chatbot that seamlessly translates languages, crafts poems on demand, or even answers your technical questions with expert-level insight based on the data you feed it.

Chat with RTX makes this a reality for users who are adventurous enough to take on the challenge. This technology leverages “retrieval-augmented generation,” allowing your LLM to access and process your personal data alongside vast online information sources.
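For readers who have not met retrieval-augmented generation before, the idea can be sketched in a few lines: find the personal documents most relevant to a question, then hand them to the model as context. The toy below is not NVIDIA's implementation. It swaps learned embeddings for plain word counts, stops at building the prompt rather than calling an LLM, and all names (`tokenize`, `retrieve`, `build_prompt`, the sample notes) are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents,
                    key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved documents to the question as context."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical personal notes standing in for "your docs".
notes = [
    "The quarterly report is due on the first Friday of April.",
    "My favorite pasta recipe uses garlic, olive oil, and chili flakes.",
    "The garage door code was changed after the move.",
]

prompt = build_prompt("When is the quarterly report due?", notes)
print(prompt)
```

The prompt that comes out contains only the note about the quarterly report, which is what a language model would then read before answering. A real system would typically replace the word-count similarity with embedding search over a vector index.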

While still in its demo phase, Chat with RTX opens exciting possibilities for developers and curious minds alike. Imagine creating personalized assistants for specific tasks, crafting chatbots for businesses, or simply having a unique AI companion at your fingertips. The potential is vast.

..More

Sora is a data-driven physics engine.

Senior Research Scientist & Lead of AI Agents. Creating foundation models for Agents, Robotics, Gaming. @Stanford Ph.D. @OpenAI 's first intern.

If you think OpenAI Sora is a creative toy like DALLE, ... think again.

Sora is a data-driven physics engine. It is a simulation of many worlds, real or fantastical.

The simulator learns intricate rendering, "intuitive" physics, long-horizon reasoning, and semantic grounding, all by some denoising and gradient maths. I won't be surprised if Sora is trained on lots of synthetic data using Unreal Engine 5. It has to be!

Let's break down the following video. Prompt: "Photorealistic closeup video of two pirate ships battling each other as they sail inside a cup of coffee."

- The simulator instantiates two exquisite 3D assets: pirate ships with different decorations. Sora has to solve text-to-3D implicitly in its latent space.

- The 3D objects are consistently animated as they sail and avoid each other's paths.

- Fluid dynamics of the coffee, even the foams that form around the ships. Fluid simulation is an entire sub-field of computer graphics, which traditionally requires very complex algorithms and equations.

- Photorealism, almost like rendering with raytracing.

- The simulator takes into account the small size of the cup compared to oceans, and applies tilt-shift photography to give a "minuscule" vibe.

- The semantics of the scene does not exist in the real world, but the engine still implements the correct physical rules that we expect.

Next up: add more modalities and conditioning, then we have a full data-driven UE that will replace all the hand-engineered graphics pipelines.

Sora now gets synthetic audio

It's prompted by text, but the right conditioning should be on both text and video pixels. Learning an accurate video->audio mapping would also require modeling some *implicit* physics in the latent space.

Here's what an end2end transformer needs to figure out to simulate sound waves correctly:

1. Identify each object's category, materials, and spatial locations.

2. Identify the higher-order interactions between objects: is a stick hitting a wooden, metal, or drum surface? At what speed?

3. Identify the environment: restaurants? Space station? Yellowstone? Japanese shrine?

4. Retrieve the typical sound patterns of objects and surroundings from the model's internal memory.

5. Run "soft", learned physical rules to piece together and adjust parameters of the sound patterns, or even synthesize completely new ones on the fly. Kind of like "Procedural Audio" in game engines.

6. If the scene is busy, the model needs to overlay multiple sound tracks based on their spatial locations.

None of the above is an explicit module! All will be learned by gradient descent through massive amounts of (video, audio) pairs, which are naturally time-aligned in most internet videos. Attention layers will implement these algorithms in their weights to satisfy the diffusion objective.

We don't have such a high-quality AI audio engine yet, but here's a very inspiring paper called "The Sound of Pixels" from 5 years ago: http://sound-of-pixels.csail.mit.edu

News Of the Week

Founders - how much of your company will you sell in a SAFE round?

Peter Walker

Head of Insights @ Carta | Data Storyteller

As the world has shifted to using the post-money SAFE as the standard way to fundraise at early startups, we can now get a good sense of how much of the company equity is going out the door.

Data comes from over 2,000 US companies raising on post-money SAFEs in 2023. Only including post-money as pre-money SAFEs are dependent on future events.

Key point - dilution rises as round size increases!

Here's the breakdown:

𝗥𝗮𝗶𝘀𝗲 𝗼𝗳 𝘂𝗻𝗱𝗲𝗿 $𝟮𝟱𝟬𝗞

• Founders sell about 1% of the company in such raises

• Up to 3% or so on the higher end

$𝟮𝟱𝟬𝗞-$𝟰𝟵𝟵𝗞

• About 5% of the company is sold (say a $300K raise on a $6M cap)

• 7% on the high end, 3% on the low end

$𝟱𝟬𝟬𝗞-$𝟵𝟵𝟵𝗞

• Median of 8% sold

• 5%-11% as the range

$𝟭𝗠-$𝟮.𝟰𝗠

• Median of 14% sold

$𝟮.𝟱𝗠-$𝟰.𝟵𝗠

• Median of 18% sold

$𝟱𝗠+

• Median of 19% sold

The dilution entailed in the larger rounds is a major reason why folks consider those "seed" rounds. Typically founders sell 20% in a priced seed, which aligns very closely with the $2.5M+ rounds on SAFEs.

Now many founders will raise multiple "rounds" on SAFEs, if a round is defined by SAFEs signed at different valuation caps.

If you raise a friends & family of $200K (congrats on your amazing friends / fam) and then another $600K or so from a pre-seed fund, that's already 10% of your company sold. So jumping through a priced seed and then a Series A, this founding team is approaching 50% dilution after the A round.

Fundraising in this manner is always a balancing act between dilution on the one hand and capital needs on the other. Hopefully this gives founders a bit more of a baseline to rightsize their own raises!
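The arithmetic behind these percentages is simple enough to sketch. With a post-money SAFE, the share sold is the amount invested divided by the post-money valuation cap, and SAFEs signed at different caps add up. The snippet below is an illustration only: the function names and the two caps in the example are hypothetical, chosen so the total lands on the roughly 10% mentioned in the post, and it assumes every SAFE converts at its cap (no discount, no option pool).

```python
def safe_ownership(investment: float, post_money_cap: float) -> float:
    """Fraction of the company sold by one post-money SAFE converting at its cap."""
    if investment <= 0 or post_money_cap <= 0:
        raise ValueError("investment and cap must be positive")
    if investment > post_money_cap:
        raise ValueError("investment cannot exceed the post-money cap")
    return investment / post_money_cap


def total_safe_dilution(safes: list[tuple[float, float]]) -> float:
    """Sum the ownership sold across several (investment, post_money_cap) SAFEs."""
    return sum(safe_ownership(amount, cap) for amount, cap in safes)


# Hypothetical stack: $200K from friends and family on a $5M cap,
# then $600K from a pre-seed fund on a $10M cap.
example_safes = [(200_000, 5_000_000), (600_000, 10_000_000)]
print(f"Total sold on SAFEs: {total_safe_dilution(example_safes):.1%}")
```

Changing the caps in `example_safes` is a quick way to see how much a lower cap costs for the same dollars raised.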

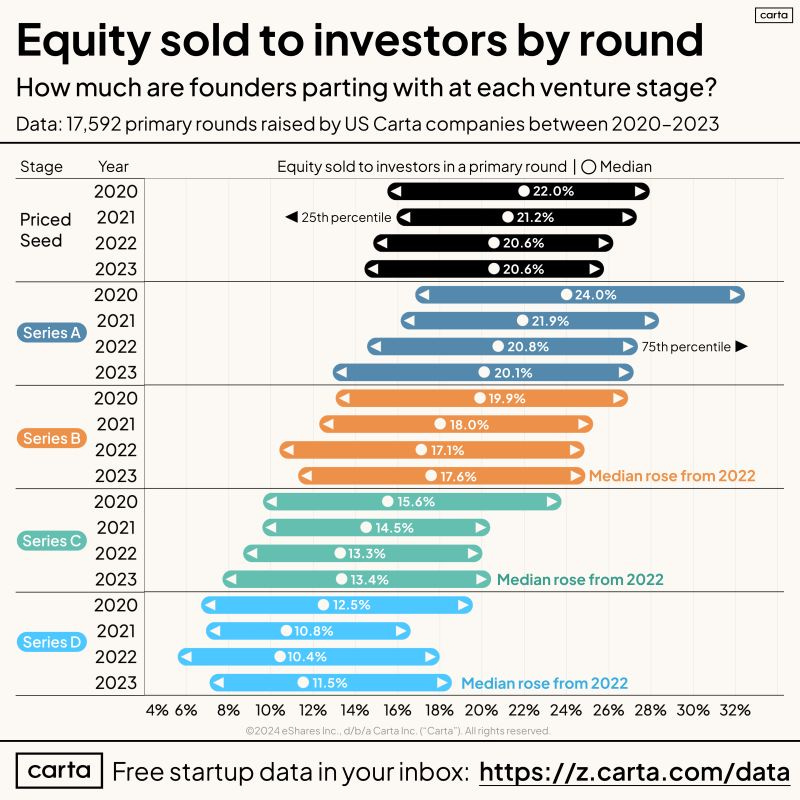

Real dilution by venture round - data from 17,000 primary rounds since 2020.

Peter Walker

Head of Insights @ Carta | Data Storyteller

The primary round note is important, as there are lots of bridge rounds, extensions, and all sorts of creative financing going on.

But in the standard venture alphabet round, here's how much founders are selling to investors:

𝗣𝗿𝗶𝗰𝗲𝗱 𝗦𝗲𝗲𝗱

• 20.6% median in 2023

• Nudging down from 2020, but flat from 2022

𝗦𝗲𝗿𝗶𝗲𝘀 𝗔

• 20.1% median in 2023

• Consistently notched downward over the past 4 years

𝗦𝗲𝗿𝗶𝗲𝘀 𝗕

• 17.6% median in 2023

• Bumped up from 17.1% in 2022

𝗦𝗲𝗿𝗶𝗲𝘀 𝗖

• 13.4% median in 2023

• Essentially flat from 2022

𝗦𝗲𝗿𝗶𝗲𝘀 𝗗

• 11.5% median in 2023

• Sizable rise from the 10.4% median in 2022

If you're looking for how much founders are selling through SAFEs and Convertible Notes, we covered that yesterday in a separate graphic. You can add in ~18% for a major SAFE round (over $2M) and 8-10% for a more minor SAFE round.

So - that's a lot of pie being handed out! Not to mention the employee option pool, which starts around 10% and grows to 20% or so by the time a Series D is in play.

Important to note that no company really takes the median dilution in every round. Each fundraising journey is peculiar - founders may sell 30% in a Series A, 5% in a Series B, and everywhere in between. No "right" way to do this.

But these benchmarks make the dead equity point very salient. If you've gone through a founder divorce at seed-stage, and that person left with 15-20% of the company fully vested, it makes the future slices of pie difficult for venture funds to get excited about.
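To see how these slices stack up, here is a rough sketch that compounds the 2023 medians quoted above. It is an illustration under simplifying assumptions: a company is assumed to sell exactly the median in each round (which, as the post notes, no company really does), and SAFEs and the option pool are ignored. The function name `retained_after` is made up for this example.

```python
from math import prod

# Median dilution per priced round in 2023, as quoted in the post above.
MEDIAN_DILUTION_2023 = {
    "Seed": 0.206,
    "Series A": 0.201,
    "Series B": 0.176,
    "Series C": 0.134,
    "Series D": 0.115,
}


def retained_after(rounds: list[str]) -> float:
    """Fraction of the company the pre-round holders keep after the given rounds.

    Each round sells a percentage of the post-round company, so the
    retained share compounds as the product of (1 - dilution).
    """
    return prod(1.0 - MEDIAN_DILUTION_2023[name] for name in rounds)


through_a = retained_after(["Seed", "Series A"])
through_d = retained_after(list(MEDIAN_DILUTION_2023))
print(f"Retained after Series A: {through_a:.1%}")
print(f"Retained after Series D: {through_d:.1%}")
```

On these medians, existing holders keep about 63% after the Series A and about 40% after the Series D, before counting any SAFEs or option pool.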

Apple reveals new details about Spotify’s business as possible EU fine nears

Sarah Perez @sarahpereztc / 2:30 PM PST•February 22, 2024


With the European Commission set to rule on Spotify’s complaint focused on competition in the streaming music market, there are hints that the ruling will not be in Apple’s favor. This week, the Financial Times reported the EC will issue its first-ever fine against the tech giant for allegedly breaking EU law over competition in the streaming music market. The fine is expected to be around €500 million (about $539 million USD), the report noted.

Instead of chalking up the fine as the cost of doing business, as a company that made history as the first to be valued at $3 trillion surely could, the tech giant is taking the fight to the public.

In a statement shared with media today, Apple argued against the idea that Spotify has been harmed by any anticompetitive practices on its part. (The statement was not issued by a single spokesperson, but rather comes from Apple itself.) It reads:

We’re happy to support the success of all developers — including Spotify, which is the largest music streaming app in the world. Spotify pays Apple nothing for the services that have helped them build, update, and share their app with Apple users in 160 countries spanning the globe. Fundamentally, their complaint is about trying to get limitless access to all of Apple’s tools without paying anything for the value Apple provides.

Apple also pointed out that Spotify has a 56% share of the market, compared with 20% for Amazon Music and 11% for Apple Music, per MIDiA’s 2022 report on the subscription music market.

..More

Nvidia Q4 Results: More Acceleration

February 22, 2024 · by D/D Advisors · in AI, Analyst Decoder Ring. ·

A quick note today. Nvidia reported its latest results. We have worked in semis a long time, and we remember in the distant past, say four years ago, when only a few hundred people cared much about Nvidia’s results (outside of its employees). Now it sometimes seems like the entire fate of Western Civilization depends on their results, such is the general frenzy around AI. At least, the results were very good.

The company reported revenue and EPS ahead of expectations, $22 billion on consensus of $20 billion and $5.16 on $4.63, respectively. The company also guided to revenue of $24 billion, ahead of expectations of $22 billion. These are solid results, albeit not quite as awe-inspiring as the company’s last three sets of earnings. To put this in context, Nvidia’s total revenue in 4Q of last year was $6 billion, growing to $22 billion in the latest quarter.

We are not going to dig into all their commentary and numbers, but a few things stood out for us. Most notable was the company’s commentary that 40% of their data center revenue is from products being used for inference workloads. AI inference is the most hotly contested sub-segment of the market now, one upon which most of Nvidia’s competitors have built their hopes. The inference build out is still in early days, so there is no guarantee that Nvidia can maintain this level. That being said, there is no reason to assume it cannot go up even further.

We also think it is important to look at Nvidia’s results in the context of broader changes in the market for data center processors. Nvidia now accounts for 75% of that market, up from 73% last quarter and 10% in 2019. That market has grown from $6 billion to $25 billion in just over 4 years. So they are making massive gains in a market that is growing at a 36% compound annual growth rate (CAGR) over that period. Looked at another way, if we exclude Nvidia, the market has only grown at a 3% rate over that period, a time which included major hyperscaler build outs. Over that period, Nvidia’s data center revenue grew at a 114% CAGR; it has been more than doubling every year for almost five years.
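The growth rates in that paragraph can be roughly reproduced from the figures it quotes. The sketch below uses the standard CAGR formula, (end / start) ** (1 / years) - 1. The one input the article does not give exactly is the length of the period ("just over 4 years"), so `YEARS = 4.5` is an assumption, as are the variable names; the dollar figures and market shares come from the text.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


YEARS = 4.5  # assumption: the article only says "just over 4 years"

# Data center processor market, in billions of dollars (from the article).
market_2019, market_now = 6.0, 25.0

# Nvidia's slice: 10% share in 2019, 75% share now (from the article).
nvidia_2019 = 0.10 * market_2019
nvidia_now = 0.75 * market_now

market_cagr = cagr(market_2019, market_now, YEARS)
nvidia_cagr = cagr(nvidia_2019, nvidia_now, YEARS)
ex_nvidia_cagr = cagr(market_2019 - nvidia_2019, market_now - nvidia_now, YEARS)

print(f"Whole market: {market_cagr:.0%}")
print(f"Nvidia only:  {nvidia_cagr:.0%}")
print(f"Ex-Nvidia:    {ex_nvidia_cagr:.0%}")
```

With a 4.5-year window this gives roughly 37% for the market, 115% for Nvidia, and 3% for everyone else, close to the article's 36%, 114%, and 3%. The small gaps presumably come from rounding and the exact period used.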

With growth like that, for a company of this size, it is easy to see how they have attracted so much interest. Of course, there are two downsides to this.

..More

Reddit files to list IPO on NYSE under the ticker RDDT

PUBLISHED THU, FEB 22 2024 3:25 PM EST

KEY POINTS

Reddit on Thursday filed to go public.

Its market debut will mark the first major tech initial public offering of the year and the first social media IPO since Pinterest went public in 2019.

The social media company, founded in 2005 by technology entrepreneurs Alexis Ohanian and Steve Huffman, has raised about $1.3 billion in funding and has a post valuation of $10 billion, according to deal-tracking service PitchBook.


Social media company Reddit filed its IPO prospectus with the Securities and Exchange Commission on Thursday after a yearslong run-up. The company plans to trade on the New York Stock Exchange under the ticker symbol “RDDT.”

Its market debut, expected in March, will be the first major tech initial public offering of the year. It’s the first social media IPO since Pinterest went public in 2019.

Reddit said it had $804 million in annual sales for 2023, up 20% from the $666.7 million it brought in the previous year, according to the filing. The social networking company’s core business is reliant on online advertising sales stemming from its website and mobile app.

The company, founded in 2005 by technology entrepreneurs Alexis Ohanian and Steve Huffman, said it has incurred net losses since its inception. It reported a net loss of $90.8 million for the year ended Dec. 31, 2023, compared with a net loss of $158.6 million the year prior.

Reddit is one of the most-visited websites in the U.S., according to analytics firm Semrush, but it has struggled to build an online advertising business comparable to those of tech giants such as Facebook parent Meta and Google parent Alphabet.

Reddit has more than 100,000 communities, 73 million average daily active uniques, or DAUq, and 267 million average weekly active uniques, according to the filing. As of the fourth quarter of 2023, Reddit’s U.S. average revenue per user, or ARPU, was $5.51, down from $5.92 the previous year. The company’s global ARPU was $3.42, a 2% year-over-year decline from $3.49.

Reddit said that by 2027 it estimates the “total addressable market globally from advertising, excluding China and Russia, to be $1.4 trillion.” Reddit said the current addressable advertising market is $1.0 trillion, sans China and Russia.

..More

Microsoft and Intel strike a custom chip deal that could be worth billions


With the Microsoft deal, Intel notches a big partnership as it seeks to regain its former position at the top of chip manufacturing.

By Wes Davis, a weekend editor who covers the latest in tech and entertainment. He has written news, reviews, and more as a tech journalist since 2020.

Feb 21, 2024, 5:26 PM PST

Intel will be producing custom chips, designed by Microsoft for Microsoft, as part of a deal that Intel says is worth more than $15 billion. Intel announced the partnership during its Intel Foundry event today. Although neither company specified what the chips would be used for, Bloomberg noted today that Microsoft has been planning in-house designs for both processors and AI accelerators.

“We are in the midst of a very exciting platform shift that will fundamentally transform productivity for every individual organization and the entire industry,” said Microsoft CEO Satya Nadella in the official press release.

The chips will use Intel’s 18A process, which has been a big part of its road map since the company brought CEO Pat Gelsinger back to turn things around. The company is counting on its chip foundry services to put it back on top of the chipmaking world, and it seems that Microsoft will be the first major customer for this project.

Leaning on producing others’ designs is a playbook that’s worked well for competitor Taiwan Semiconductor Manufacturing Company (TSMC), which has lucrative partnerships with companies like Apple, Qualcomm, and AMD. Gelsinger told VentureBeat today that the company’s foundry is a big part of its strategy.

..More

VC Funds Regulatory Playbook

The Carta Policy Team Just Dropped the 'VC Regulatory Playbook' for Emerging Fund Managers. Let’s Review It.

FEB 20, 2024

Key Takeaways: We have written a lot about VC fund regulations here on Law of VC, probably more than any other Substack. Today, our attention turns to the Carta VC Regulatory Playbook. My initial thoughts are that this is the best practical guide on VC fund regulations. It distills the complex nature of SEC rules down to simple checklists and a good framework for emerging fund managers. Let’s review it in more detail.

On February 7th, the Carta Policy Team posted the VC regulatory playbook:

What is It?

The VC Regulatory Playbook is a 23-page guidebook that covers these topics:

Regulation of the ‘fundraising process’

Regulation of ‘private funds’

Regulation of the ‘fund manager’

ERA Compliance Checklist

Additional Regulatory Considerations

Back in Law of VC #20 - a Simple Framework, we learned that there are three principal laws that govern 80%+ of venture fund law:

This is the same regulatory framework that underlies Carta’s VC Regulatory Playbook:

LPs: Regulation of the Fundraising Process

Funds: Regulation of Private Funds

GPs: Regulation of the Fund Manager

FAQs: Framing it in this way can help us understand some of the nuances and the common legal issues that emerging fund managers face today. For example—

Q: Can I advertise my fund’s offering?

Yes—see Rules 506(b) and (c):

Rule 506(b): no general solicitation or advertising is allowed;

Rule 506(c): you can advertise but only if you take “reasonable steps to verify” your LPs are accredited investors.

Q: How many investors can legally invest in my venture fund?

Q: Do I need to file a Form ADV?

<$25M: ⚠️ If your total assets under management (AUM) are less than $25 million, you may not be eligible or even permitted to file a Form ADV with the SEC—see Regulation of the Fund Manager section, below.

Let’s dive into the most important parts of the guide:

I. Regulation of the Fundraising Process

Regulation D: The Key Takeaways

The Last Money In Substack laid out a very good high-level summary of the primary exemptions under Regulation D:

You can think about the differences [between Rule 506(b) and Rule 506(c)] in the ability to leverage the public or not. Under 506(c), you can advertise your fund on a large billboard or even a Super Bowl TV ad, while 506(b) is more restrictive, like a private club with a members-only invite.

Rule 506(b)—Member’s Only

Rule 506(b) is the traditional way to raise a fund. Most funds use this exemption because it offers two key advantages: (i) private placements provide a cleaner regulatory pathway, including the option to rely on Section 4(a)(2), and (ii) the rules are more lenient if you’re willing to forgo public advertising.

Pro Tip: While it may be possible to add up to 35 non-accredited LPs in 506(b) fund offerings, GPs almost always avoid it due to the uncertainty, disclosure obligations & costs.

Rule 506(c)—Influencer’s Wanted

Consider Rule 506(c) as the modern/influencer way to raise a fund. For example, most AngelList rolling funds are 506(c) funds. These funds tend to be more social media focused—Balaji (@balajis) and Jason Calacanis (@jason) are each publicly raising $50-100+ million venture capital funds under Rule 506(c).

Turner Novak launched Banana Fund 2 (a $20M VC fund) using Rule 506(c):

Form D

Funds relying on Rule 506 are required to file a Form D with the SEC within 15 days after “first closing,” that is, the date upon which any LP is “irrevocably contractually committed” to invest in the fund, which is generally the date both the GP and LP sign their counterparts to the limited partnership agreement (LPA) and fund documents.

Startup of the Week

Apple Sports

Wednesday, 21 February 2024

“We created Apple Sports to give sports fans what they want — an app that delivers incredibly fast access to scores and stats,” said Eddy Cue, Apple’s senior vice president of Services. “Apple Sports is available for free in the App Store, and makes it easy for users to stay up to date with their favorite teams and leagues.”

Observations:

Apple Sports is indeed incredibly fast to load and update. Nearly instantaneous. You might think, “So what, it’s just loading scores and stats, of course it’s fast”, but the truth is ad tech, combined with poor programming, has made most sports apps slow to load. Most apps, period, really. Just being very fast to load ought not be a hugely differentiating factor in 2024, but it is. (ESPN’s app, for example, is incredibly slow to show anything useful after launching.)

Apple isn’t listing several major sports leagues — including MLB, WNBA, and the king of all leagues, the NFL — but that’s simply because they’re not in season. They’re only listing leagues currently playing. MLB, WNBA, and NFL will be included once they start playing.

Apple is including betting odds in game listings by default, with data from DraftKings. If you don’t want to see gambling odds, you need to turn them off in Settings → Sports. I like DraftKings, and have an account there, but I generally find that their odds are outliers and fluctuate more from the consensus odds. FanDuel and BetMGM are both more in line with the consensus, at least for the NFL. (I have no idea if either FanDuel or BetMGM offer odds as an API service for an app like Sports, though.) Anyway, I’m just glad the odds are there.

Live activities for your lock screen are available, but Sports doesn’t — yet — offer any Home Screen widgets.

Just like Apple’s new Journal app, Sports is iPhone-only. There’s no compatible version for Mac, Vision, TV, or Watch. The difference from Journal is that Journal is built into iOS 17 (17.2 and later), but Sports is a download from the App Store, not built into the OS (yet?), and can be installed on an iPad. But on iPad, it just runs in an iPhone layout. Does Apple think this Sports app is only relevant on iPhone (and perhaps, eventually, Apple Watch), or is this just the platform they targeted first and it’ll be available as a proper iPad and Vision app eventually? (I’m thinking it might never be a Mac app. Once Sports offers Home Screen widgets, you’ll be able to get those widgets on your Mac desktop via the feature that lets you put iPhone app widgets on your Mac.)

I generally have a good sense of why Apple does things the way it does, but it’s not clear to me at all why Journal, say, is now built into iOS 17 but Sports is only available from the App Store. I sort of think Sports will be included by default in iOS 18, but maybe I’m missing something here.

Sports syncs your favorite teams (and leagues?) between the Apple TV and Apple News apps, so if you’ve already set favorite teams in either of those apps, Sports already knows them. Sports also integrates with the TV app for “watch now,” not just for sports that Apple itself broadcasts (like MLS soccer and Friday Night Baseball), but for any live sporting events available through any available streaming apps. That’s a killer feature. (ESPN, unsurprisingly, only has “where to watch” links for games broadcast on ESPN or ABC.)

The app this most sherlocks for me is Sports Alerts. I’ve been a big fan of Sports Alerts for years, and they’ve been great about adopting new features like Lock Screen Live Activities very quickly. But Apple Sports looks far better and offers far more clarity; Sports Alerts looks like what it is: a cross-platform app with an Android look-alike companion. A truly iOS-native live sports scores/stats app ought to be able to blow anything cross-platform out of the water, and Apple Sports seems to be that. Yahoo Sports has been sitting in my App Library, mostly unused, for years — I’ll probably delete it now.

The design language of Apple Sports is new. I wouldn’t say Sports looks much like Journal, but they’re similar insofar as they both are using a new, very simple, very focused UI design language. Sports is closest aesthetically, perhaps, to Apple Weather. But Sports shares with Journal a sort of fundamental “Here’s a scrollable feed of events, and there’s a menu at the top right of the list” gestalt. Sports’s simple layout and design is such that you don’t need to drill down or hunt for what you want. You get three main utterly self-explanatory tabs at the top (Yesterday, Today, Upcoming), and within each tab is a list of sporting events. Tap any event to open a card for that event with all the details, and from that card view, you can either swipe side-to-side to switch between different events, or swipe down to dismiss the card and go back to the main list. It’s so simple and intuitive that it doesn’t seem designed at all, but that’s the sort of design that takes the most work and most iteration.

One question I’ve already seen asked is why make this a standalone app? Why not build it into the TV app or News app?

..More

X of the Week

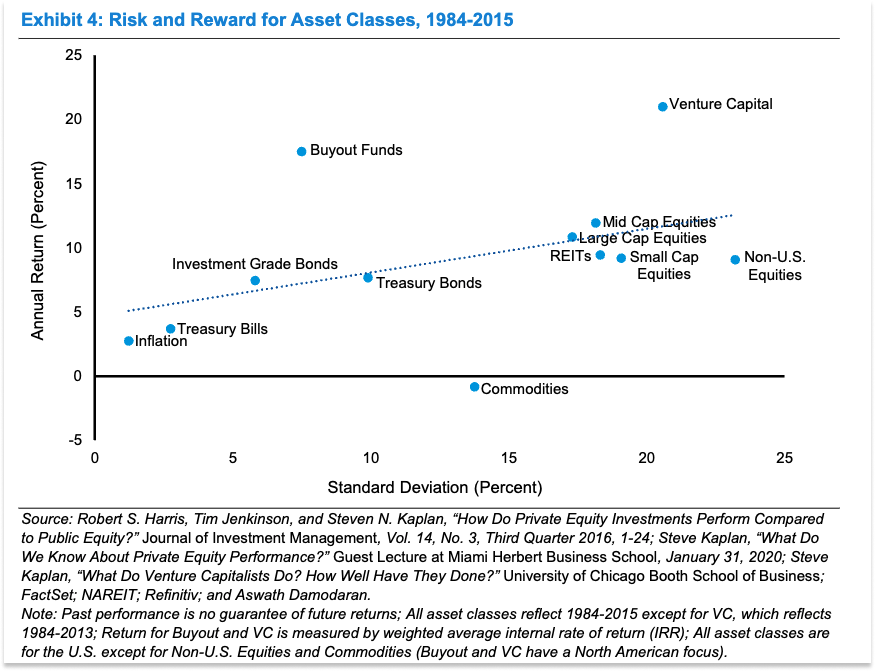

Venture Capital & Free Lunch

Why Venture Capital is the Best Asset Class

FEB 20, 2024

Venture capital gets a lot of shit, but I’m here to tell you that venture capital rocks.

In fact, it’s the best asset class there is.

I can hear the other asset classes yelling at me. Certainly, some have a case:

Public equities are the largest

US Treasuries are the safest

Real estate is the only one you can live in

Private equity is an asset class.

But there is no more beautiful asset class than venture capital.

Venture capital is a free lunch machine.

I’ll admit that venture capital isn’t perfect. It’s risky, illiquid, and highly variable. The best venture funds perform amazingly well; the worst ones are horrendous.

Even the best venture funds are wrong much more often than they’re right. Peter Lynch said of public markets investing, “In this business if you’re good, you’re right six times out of ten.” In venture capital, if you’re good, you’re right maybe three times out of ten, possibly twice, probably once, but when you’re right, you’re really right.

Therein lies the beauty.

No asset class’ constituents fail at a higher rate than venture capital’s, yet venture capital’s returns match or exceed all the others’.

Think of the dumbest fucking investment your least favorite venture capitalist has made. FTX, WeWork, Quibi, Juicero, Theranos, pick your poison. Choose all of them, if you’d like. Sprinkle in some Clubhouse, the 9,000th dating app, the 78,000th social network, the 19th best foundation model company, the 10,000th PFP NFT project.

All of those screaming zeroes are included in these returns:

The data isn’t super clean. Depending on the time horizon and whether you’re talking risk-adjusted returns, venture capital may or may not be the best performing asset class. And investing in venture capital requires locking your money up for a decade, unlike stocks or bonds which you can go sell right now.

But the fact that venture capital is even in the running, and wins on some time horizons, means that the world gets all of that innovation – from failures and winners alike – for free.

There is no such thing as a free lunch, except, perhaps, when it comes to venture capital.
