Contents

Editorial: Media: Forever Blowing Bubbles: While the Next Enlightenment is Being Built

Essays

Bubble?

Chipmaker TSMC reports nearly 40% surge in its net profit, thanks to AI

From Sports to AI, America Is Awash in Speculative Fever. Washington Is Egging It On.

Atlassian CEO, Mike Cannon-Brookes on Why Everything is Overvalued & Are We in an AI Bubble

OpenAI makes five-year business plan to meet $1tn spending pledges

BlackRock, GIP and Nvidia in $40bn data centre takeover to power AI growth

Venture

Why I No Longer Care About Startup Valuation When I Invest (Except for These Four Reasons)

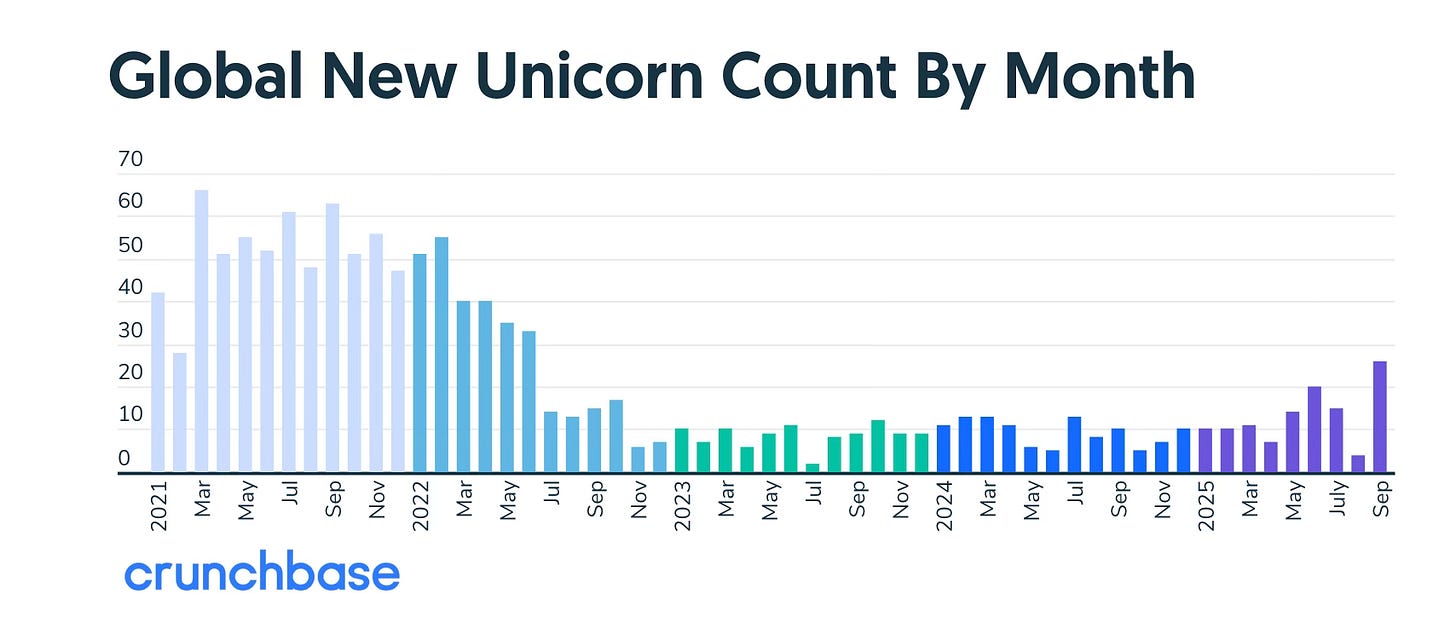

Highest Count Of New Unicorns Join Crunchbase Board In Over 3 Years As Exits Also Gain Steam

Goldman Sachs is acquiring Industry Ventures for up to $965M as alternative VC exits surge

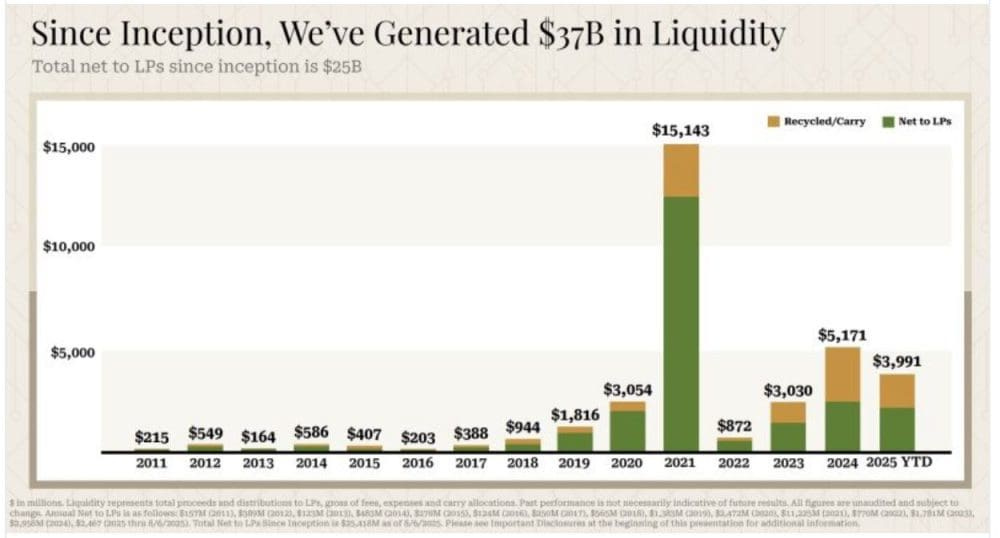

How A16Z’s Returns Show Just What an Epic Year and Epic Time 2021 Was. Will 2028 Be Next?

AI

Sex could become the next big business opportunity for AI companies

Generative AI in the Real World: Context Engineering with Drew Breunig

AI Is Juicing the Economy. Is It Making American Workers More Productive?

Airtable Hires A CTO From OpenAI And Buys An AI ‘Superagent’ Startup

You can make ChatGPT your personal shopper and deal hunter. Here’s how.

Media

Crypto

Apple

Tokenization

GeoPolitics

Regulation

Interview of the Week

Post of the Week

Media: Forever Blowing Bubbles

West Ham United of the English Premier League have an anthem sung by fans at the start of a game:

I’m forever blowing bubbles

Pretty bubbles in the air

They fly so high

They reach the sky

And like my dreams they fade and die!

Fortune’s always hiding

I’ve looked everywhere

I’m forever blowing bubbles

Pretty bubbles in the air!

The so-called “AI bubble” isn’t a bubble at all, but the media is singing the bubble song.

What it really is: a new productivity revolution in its earliest stages, in which infrastructure is being built for the future. The bubble narrative misses a key fact of history: the entirety of modern society was built by speculation meeting scientific breakthroughs. Speculation is the only historical method of funding major innovation. That is also true in art, where spending on something yet to be born typifies creative effort.

The term “bubble” betrays a failure to distinguish between speculative excess and speculation with productive intent. As Scott Galloway recently observed,

“Seventy-five percent of market gains trace back to a handful of AI-exposed companies.”

That concentration invites fears of fragility, but it also signals where new value is being born — in the infrastructure layer of the next economy. Scale is only possible because of the concentration of value in hands prepared to deploy capital.

Chamath Palihapitiya’s deep dive into Amazon’s metamorphosis from retailer to infrastructure provider reads like a prelude to this moment: the Mag7 aren’t speculators; they’re platform architects. When the world’s largest firms redirect hundreds of billions toward computation, data, and models, they’re not chasing mirages. They’re rebuilding the substrate of productivity itself.

Steve Blank warns that “no science means no startups,” lamenting that the foundational research engine is being starved even as applied AI accelerates.

He’s right about the imbalance — but wrong to conflate it with hollowness. What we’re witnessing is the engineering phase of decades of accumulated science: algorithms born in academia now scaled into global utility.

As Mike Loukides writes in Enlightenment, AI doesn’t extinguish reason; it “extends cognition into the external world.” The capital surge into AI isn’t detaching from value; it’s funding the re-enlightenment of individual and collective human enterprise — embedding intelligence into every system that touches human decision-making. We shouldn’t fear the boom. We should manage it — and recognize it for what it is: the most consequential redirection of capital since the Industrial Revolution.

The Media’s “Bubble” Reflex

Financial media thrives on spectacle. Every era of transformative technology — from the railway boom to the internet — was framed as mania before being recognized as infrastructure. “Bubbles” sell headlines; “compounding productivity gains” don’t. The same playbook is being applied to AI. Bloomberg warns of “valuation hysteria,” The Economist cautions against “AI intoxication,” and even The New York Times calls this “a replay of the dot-com euphoria.”

But the story these outlets miss is that the froth sits atop decades of compounding investment in compute, algorithms, and data infrastructure. The signal — not the noise — is that the productivity base of the global economy is shifting toward intelligence as a utility.

The press frames NVIDIA’s trillion-dollar valuation as speculative exuberance, yet that same framing would have called Amazon’s AWS “a risky sideline” in 2008. The problem isn’t overstatement of price; it’s understatement of consequence.

Journalistic cycles demand a clickbait narrative, not a systemic view. In this sense, the media is performing its traditional role as volatility amplifier — compressing long-term technological progress into short-term emotional swings. As Galloway wrote, “Narratives, not numbers, move markets.” The dominant narrative right now is fear of excess; the missing one is faith in compounding capability.

From Clicks to Comprehension

Mike Loukides’ reminder in Enlightenment, that technology’s purpose is to “extend reason,” can be inverted as critique: the media’s purpose should be to extend understanding, not anxiety. But modern coverage optimizes for attention, not accuracy. When cable news cuts from OpenAI’s model release to layoffs at a chip factory, the implicit narrative is chaos. Yet the actual dynamic is creative destruction: productivity migrates faster than old accounting frameworks can measure it. The “bubble” framing is a cognitive shortcut, a substitute for analysis. It confuses velocity of investment with fragility of value.

The irony is that the media itself is being transformed by the very forces it misunderstands. AI is already reshaping reporting, editing, and distribution. Newsrooms run on models; analysts use LLMs to summarize earnings. The industry warning about AI disruption is already running on it. The “bubble” narrative is therefore self-referential: a fear story told by an industry being automated by the thing it fears. “Help! They are spending money to replace me.”

From Science to Scale

Steve Blank’s hierarchy of innovation — basic science → applied research → engineering → startups — remains the right lens. What we’re seeing now is the transition from the middle layers to the market. For forty years, government and academic labs laid the foundations: neural nets, backpropagation, transformers. Private capital is now scaling those discoveries into real systems. The irony is that this “commercial frenzy” is what finally forces investment back down the chain. As capital demands efficiency, it will fund new chips, new physics, and new models of energy use. The flywheel between science and startup doesn’t break; it accelerates.

If anything, the AI boom is the missing bridge between fundamental science and systemic deployment. The Manhattan Project and the Space Race were publicly funded; this time, the private sector is financing the frontier. Highly concentrated wealth is the engine of innovation. Capitalism makes that possible in today’s world. The long-term problem will not be over-investment but the very wealth concentration that makes it possible. We need OpenAI and NVIDIA and all the others to build future wealth. We also need policy to distribute the gains and ensure we get systemic uplift and not a new class of feudal overlords. Sam Altman’s Worldcoin project is a clue as to how that might happen.

Platform Capitalism and the Mag7 Playbook

Chamath Palihapitiya’s analysis of the Mag7 (Amazon, Apple, Microsoft, Google, Meta, NVIDIA, and Tesla) clarifies what’s happening. These are not “AI stocks” in the narrow sense. They are infrastructure behemoths capturing the productivity dividend of intelligence at scale. Amazon didn’t become the world’s utility by selling books; it did so by selling everything, including compute. Likewise, the firms leading this wave are transforming intelligence into infrastructure: AI as a service layer, a universal API for cognition.

The investment surge into these firms reflects a rational understanding of where value accrues in platform shifts. In the industrial era, value aggregated around energy and transport. In the information era, around data and networks. In the AI era, it aggregates around context — the ability to synthesize knowledge, predict behavior, and automate reasoning. Calling that a bubble is like calling the electrification of cities “hype.”

The New Enlightenment

Mike Loukides’ Enlightenment essay reframes the philosophical dimension: the Enlightenment was defined by reason, empiricism, and the belief that knowledge could improve the human condition. AI challenges us to extend those principles, not abandon them. By externalizing parts of cognition, we’re not ending the age of reason; we’re upgrading it. The machine is not replacing the mind; it’s reflecting it back at scale. If the first Enlightenment created the scientific method, the second may create the computational method: a world where human discovery and human creativity are accelerated by AI code.

This is why the AI boom feels chaotic — it’s a cognitive revolution colliding with financial markets. Every great shift in human understanding — printing press, electricity, internet — created excess, fraud, and euphoria. But history judges these periods not by their bubbles, but by their aftermaths. The winners of this cycle will be those who understand that intelligence itself is becoming an asset class.

From Speculation to Construction

We should stop debating whether this is a bubble and start asking what the speculative investment is building. Behind every overheated valuation lies an investment in compute, data, and capability. The correction will come, as always. And some will be losers. But what remains will be the foundation of a new industrial layer — knowledge infrastructure. Those who frame AI as a speculative mania miss the deeper story: capital is being redeployed from consumption to knowledge and understanding. That’s not froth. That’s progress.

Round Trip?

The other recent narrative suggests that the players in AI (Nvidia, OpenAI, AMD, Oracle and others) are spending the same money with each other in a closed loop. This narrative leaves out the investment capital coming in from the outside, and also leaves out the massive revenues being made by Nvidia, OpenAI, Anthropic, Microsoft and others. There is no closed circular system here. There is huge growth in both infrastructure and revenue.

Essays

How Does the End Begin?

Profgalloway • October 17, 2025

Essay•AI•S

Overview

The piece argues that U.S. markets and near-term economic growth have become unusually dependent on a narrow cohort of AI-linked companies. It highlights extreme concentration at the top of the S&P 500 and attributes the bulk of recent index returns, profit expansion, capital spending, and even GDP growth to AI. The underlying question is whether such narrow leadership is sustainable — and how an “end” to the boom typically begins when expectations and capital intensity outpace broad-based value creation.

Market Concentration and Narrow Breadth

The article opens with a stark data point: “The top 10 stocks in the S&P 500 account for 40% of the index’s market cap.” This level of concentration means index performance increasingly reflects the fortunes of a handful of firms rather than the economy at large.

It then ties market gains directly to AI: since the launch of a popular chatbot in November 2022, “AI-related stocks have registered 75% of S&P 500 returns.” In plain terms, three-quarters of recent equity gains have been driven by a small, thematically linked set of companies.

Concentration amplifies volatility. If leadership falters, passive investors tracking the index could face synchronized drawdowns. Conversely, continued outperformance by the leaders can mask underlying weakness in the broader market.

Earnings and Investment Engine

Profit growth is just as skewed: “80% of earnings growth” is attributed to AI-related names. That suggests multiple compression elsewhere is being offset by premium valuations in AI, while non-AI sectors lag.

Corporate investment has tilted even more: “90% of capital spending growth” is coming from AI-linked firms. This indicates an investment supercycle in data centers, chips, and model infrastructure, with spillovers across energy, real estate, and cloud services — but also the risk of overbuild if demand expectations prove too optimistic.

A narrow capex boom can crowd out other priorities, leaving the economy exposed to a single technological thesis. If the return on invested capital falls as projects scale, the feedback loop that has been rewarding leaders could reverse quickly.

Macro Dependence on AI

The article claims that “AI investments accounted for nearly 92% of the U.S. GDP growth this year.” If accurate, this implies the broader economy’s incremental growth is tied disproportionately to one vector of spending.

Such dependence can be double-edged. If AI delivers genuine productivity gains — faster software development, automated support, improved scientific discovery — growth can broaden and become self-sustaining. If not, GDP may recoil when the investment impulse slows, contracting orders for hardware, power, and construction linked to data infrastructure.

How Endings Typically Begin

Endings often start with a funding or narrative shift: a modest earnings miss, guidance cut, regulatory surprise, or cost overrun that reframes the growth story. In concentrated markets, small cracks at the top can propagate quickly through passive flows, risk models, and credit conditions.

Another path is saturation: as capex soars, marginal returns diminish, and scrutiny rises on monetization versus hype. Firms then confront tougher trade-offs — continue spending to keep pace, or slow investments and risk losing the arms race.

Policy and politics can also catalyze regime change. New rules on data usage, AI safety, export controls, or power-grid constraints could raise costs and slow deployment, testing valuations built on rapid scale-up.

Key Takeaways

The leadership of a few mega-cap AI firms now dominates index performance, earnings growth, and capex trends.

AI-driven investment appears to be the principal engine of recent U.S. GDP growth, signaling both powerful momentum and macro fragility.

The cycle likely ends not with a single dramatic event but with a sequence of disappointments — softer demand, rising costs of capital, regulatory friction — that gradually undermines the narrative.

For investors and policymakers, the challenge is to encourage diffusion of productivity gains beyond the top cohort while stress-testing scenarios where AI investment growth normalizes.

Implications

If AI’s promise converts into widespread productivity, today’s concentration could be a staging point for broader prosperity. If not, the economy and markets may have over-indexed to a single theme, leaving portfolios, jobs, and public revenues vulnerable to a synchronized unwind. In this framing, the “end” begins when the marginal dollar of AI spending no longer buys commensurate growth — and the market, intensely focused on the few, remembers the many.

America’s future could hinge on whether AI slightly disappoints

Noahpinion • October 12, 2025

Essay•AI•Tariffs•AIBubble•Macroeconomy

Overview

The piece argues that the current resilience of the U.S. economy rests disproportionately on an AI-driven investment boom, making America dangerously exposed if AI merely underperforms rather than fails outright. Despite weak manufacturing, soft payrolls, and consumer sentiment “at Great Recession levels,” growth is still positive and labor markets remain historically tight. Competing explanations exist—tariffs may be less binding than feared; weak sentiment could be partisan “vibecession”—but a growing body of evidence suggests AI-related capex is propping up GDP, stocks, and political narratives around policy competence. If that pillar wobbles, the author warns, the consequences would extend beyond markets to reshape public judgment of the current presidency and the broader policy regime supporting it.

The economic picture: weak signals vs. resilient aggregates

Manufacturing is “hurting badly” under Trump-era tariffs; payroll growth looks weak; consumer confidence has slumped.

Offsetting indicators: unemployment is “rising a little” but remains very low; prime-age employment is near all-time highs; the New York Fed’s GDP nowcast is a bit over 2%, while the Atlanta Fed’s is higher.

The divergence invites two stories: either the economy is broadly fine (tariff exemptions, overestimated tariff harms, sector-specific issues), or tariffs are a drag being masked by a powerful AI upswing.

AI as the single pillar

According to the Financial Times, Pantheon Macroeconomics estimates H1 growth would have been just 0.6% annualized without AI spending, “half the actual rate.” Paul Kedrosky’s estimates align, while Jason Furman’s back-of-the-envelope suggests an even larger AI contribution.

Equity markets are similarly concentrated: Ruchir Sharma notes “AI companies have accounted for 80 per cent of the gains in US stocks so far in 2025,” and over one-fifth of S&P 500 market cap sits in Nvidia, Microsoft, and Apple—two being direct AI bellwethers.

The Economist cautions that “beyond AI” the economy looks sluggish: flat real consumption since December, weak job growth, slumping housing, and non-AI business investment down.

Policy insulation and exposure

Despite broad tariff actions, Joey Politano observes the administration “left AI and its supply chain mostly untouched,” effectively ring-fencing the sector as a national growth bet.

The author argues this tacit industrial policy heightens macro and political risk: if AI stumbles, tariffs’ drag would be unmasked, potentially tipping the economy into recession.

Why a crash could come from ‘mild disappointment’

The likely vulnerability is an “industrial bubble,” a term Jeff Bezos uses for episodes where real-economy overbuild meets optimistic mispricing. Unlike purely financial manias, the pain here flows through debt, capex, and loan defaults tied to overstated technological payoffs.

Bloomberg’s roundup captures the froth: record pace of spending on a still-unproven profit model; an MIT study finding “95% of organizations saw zero return” on AI initiatives; Harvard/Stanford researchers labeling output “workslop”—content that looks like work but “lacks the substance to meaningfully advance a given task.”

Technical headwinds add to the risk: diminishing returns to scaling laws; underwhelming model upgrades (OpenAI’s latest got “mixed reviews” after GPT-5 hype); and hard constraints from power grids as data-center electricity demand surges.

Crucially, the tech need not “fail”—it only needs to fall short of the most ardent expectations for equity values, capex plans, and financing structures to face a painful reset.

Political stakes

If AI is the economy’s load-bearing wall, a bust would “flip the narrative” on Trump’s presidency, analogous to how the 2008 crash indelibly marked George W. Bush. Given how “transformative” a second term appears, the sector’s trajectory could “determine the entire fate of the country,” in the author’s framing.

Key takeaways

AI’s macro lift: multiple estimates attribute roughly half or more of recent growth—and most equity gains—to AI-related spending and expectations.

Concentration risk: capex, market cap, and policy protection are clustered in a narrow AI supply chain, amplifying systemic exposure.

Industrial-bubble mechanics: lending and overbuild tied to optimistic use-cases could transmit even “mild disappointment” into defaults, retrenchment, and recession.

Non-AI weakness: flat consumption, soft jobs, and weak non-AI investment suggest limited cushions if the AI tide ebbs.

Narrative fragility: the political credit for resilience is contingent; an AI stumble could rapidly recast economic and electoral judgments.

Implications

The U.S. has, by omission and emphasis, made a national bet on AI as a growth engine. That bet may pay off over the long arc, but the near-term macro math is unforgiving: if returns to investment arrive slower than markets and policymakers have implicitly assumed, the unwind could be sharper and more politically consequential than standard equity corrections. The prudent stance, the author implies, is to treat AI as promising but not preordained—and to diversify growth drivers before disappointment turns cyclical softness into a narrative and policy crisis.

No Science, No Startups: The Innovation Engine We’re Switching Off

Steve Blank • October 13, 2025

Essay•Education•SciencePolicy•Startups•ResearchFunding

Tons of words have been written about the Trump Administration’s war on Science in Universities. But few people have asked what, exactly, is science? How does it work? Who are the scientists? What do they do? And more importantly, why should anyone (outside of universities) care?

(Unfortunately, you won’t see answers to these questions in the general press – it’s not clickbait enough. Nor will you read about it in the science journals– it’s not technical enough. You won’t hear a succinct description from any of the universities under fire, either – they’ve long lost the ability to connect the value of their work to the day-to-day life of the general public.)

In this post I’m going to describe how science works, how science and engineering have worked together to build innovative startups and companies in the U.S.—and why you should care.

(In a previous post I described how the U.S. built a science and technology ecosystem and why investment in science is directly correlated with a country’s national power. I suggest you read it first.)

How Science Works

I was older than I care to admit when I finally understood the difference between a scientist, an engineer, an entrepreneur and a venture capitalist; and the role that each played in the creation of advancements that made our economy thrive, our defense strong and America great.

Scientists

Scientists (sometimes called researchers) are the people who ask lots of questions about why and how things work. They don’t know the answers. Scientists are driven by curiosity, willing to make educated guesses (the fancy word is hypotheses) and run experiments to test their guesses. Most of the time their hypotheses are wrong. But every time they’re right they move the human race forward. We get new medicines, cures for diseases, new consumer goods, better and cheaper foods, etc.

Scientists tend to specialize in one area – biology, medical research, physics, agriculture, computer science, materials, math, etc. — although a few move between areas. The U.S. government has supported scientific research at scale (read billions of $s) since 1940.

Scientists tend to fall into two categories: Theorists and Experimentalists.

Theorists

Theorists develop mathematical models, abstract frameworks, and hypotheses for how the universe works. They don’t run experiments themselves—instead, they propose new ideas or principles, explain existing experimental results, predict phenomena that haven’t been observed yet. Theorists help define what reality might be.

Enlightenment

Oreilly • October 14, 2025

Essay•AI•Enlightenment•Education•CriticalThinking

In a fascinating op-ed, David Bell, a professor of history at Princeton, argues that “AI is shedding enlightenment values.” As someone who has taught writing at a similarly prestigious university, and as someone who has written about technology for the past 35 or so years, I had a deep response.

Bell’s is not the argument of an AI skeptic. For his argument to work, AI has to be pretty good at reasoning and writing. It’s an argument about the nature of thought itself. Reading is thinking. Writing is thinking. Those are almost clichés—they even turn up in students’ assessments of using AI in a college writing class. It’s not a surprise to see these ideas in the 18th century, and only a bit more surprising to see how far Enlightenment thinkers took them. Bell writes:

The great political philosopher Baron de Montesquieu wrote: “One should never so exhaust a subject that nothing is left for readers to do. The point is not to make them read, but to make them think.” Voltaire, the most famous of the French “philosophes,” claimed, “The most useful books are those that the readers write half of themselves.”

And in the late 20th century, the great Dante scholar John Freccero would say to his classes “The text reads you”: How you read The Divine Comedy tells you who you are. You inevitably find your reflection in the act of reading.

The Amazon Primer: How It Started, Where It Stands, What’s Next

Chamath • October 15, 2025

Essay•Venture

Overview

The piece revisits a long-held thesis about Amazon, anchored in a 2016 assessment—when the company’s market value was roughly $300B—that it was a “multi-trillion-dollar monopoly hiding in plain sight.” Framed as a fresh, data-informed primer drawing on nearly two decades of observation, it promises to synthesize how Amazon’s strategic architecture, operating model, and compounding advantages have translated into outsized market power and long-term value creation. The author sets an evaluative baseline with two concrete markers: the 2016 valuation context and the enduring conviction captured in the quote, “multi-trillion-dollar monopoly hiding in plain sight,” signaling a view that Amazon’s trajectory was systematically underappreciated at the time.

Core Thesis and Context

The central idea is that Amazon’s economic engine has been structurally mispriced by markets at various points, particularly in 2016, because investors and commentators underestimated the compounding effects of scale, integration, and data.

The primer’s intent is to reexamine that call with updated evidence, clarifying where the initial thesis held, where it needs nuance, and what new drivers have emerged.

The author positions a multi-year research arc—“studying Amazon for nearly two decades”—as the foundation for separating transient narratives from durable signals about the company’s moat and future optionality.

Analytical Lenses Likely Employed

Market structure and monopoly dynamics: not merely share in a single product category, but the orchestration of a multi-sided platform—retail marketplace, logistics, cloud infrastructure, and subscription—yielding network effects and cost advantages.

Unit economics and flywheel effects: a recurring theme in Amazon analysis is the reinvestment of scale benefits into lower prices, faster delivery, and broader selection, which in turn deepens customer lock-in.

Optionality via infrastructure: capabilities built for internal needs (e.g., fulfillment and compute) become externalized as services, opening new profit pools and reinforcing the core.

Time horizon arbitrage: a willingness to trade near-term margins for strategic positioning, with value recognition lagging operational reality—mirroring the 2016 “hiding in plain sight” framing.

What the Primer Seeks to Clarify

How and why the 2016 call proved prescient relative to subsequent value creation.

Which components of the business model (e.g., marketplace, logistics, cloud, subscriptions, advertising) most strongly contributed to the step-change from a $300B company to a multi-trillion-dollar platform.

The interplay between data, scale, and customer experience in sustaining advantage over time.

The boundary conditions of “monopoly” in a platform context—where cross-subsidies and integrated services complicate traditional market definitions.

Implications

For investors: understanding Amazon requires looking through GAAP snapshots to the underlying flywheel, cash generation in high-ROIC segments, and the compounding nature of infrastructure-led optionality.

For operators: Amazon’s playbook illustrates how building reusable capabilities (logistics, compute, identity, payments) can be leveraged across business lines to reduce marginal costs and accelerate innovation.

For policymakers: defining market power in ecosystems challenges conventional antitrust frameworks, which often consider narrow product markets rather than platform-level interdependencies.

Notable Quote and Data Points

“In 2016, when Amazon was a $300B company, I called it a multi-trillion-dollar monopoly hiding in plain sight.” This quote encapsulates both the valuation anchor and the thesis’ foresight.

Time-in-market: “studying Amazon for nearly two decades” underscores a longitudinal approach, implying that the primer is less a hot take than a cumulative synthesis.

Key Takeaways

The article offers an updated, evidence-driven validation of a bold 2016 thesis about Amazon’s latent scale and monopoly-like dynamics.

It centers on how integrated infrastructure, network effects, and reinvestment discipline translated into durable advantages and substantial value creation.

The work aims to map which levers mattered most to the company’s evolution from a $300B valuation to multi-trillion-dollar territory, and why many observers underestimated that compounding.

Beyond Amazon, the primer provides a template for analyzing platform companies where cross-subsidies, data feedback loops, and infrastructure externalization blur traditional market boundaries.

Bubble?

AI’s double bubble trouble

FT • October 16, 2025

AI•Funding•Bubble•AIInfrastructure•LLMs•Bubble?

Overview

The piece argues that today’s artificial intelligence boom contains a “double bubble”: a surge of genuinely productive investment alongside pockets of exuberant speculation. The core distinction is between long-lived, economically useful assets and capabilities versus momentum-driven bets whose pricing far outstrips tangible cash flows. It contends that both phenomena are unfolding at once, making it harder for investors, operators, and policymakers to separate signal from noise and allocate capital wisely.

Where Spending Looks Like Investment

Picks-and-shovels: Build-outs of data centers, high-bandwidth networking, specialized chips, cooling, and especially reliable power are framed as foundational. These expenditures create durable capacity that benefits multiple AI use cases over years rather than quarters.

Capability compounding: Tooling, MLOps, data pipelines, and safety/observability layers are seen as productivity enablers that improve model reliability and lower total cost of ownership over time.

Enterprise integration: Spending that embeds AI into existing workflows (search, customer support, code assistance, analytics) can unlock measurable efficiency gains, stickier contracts, and defensible moats through data flywheels and switching costs.

Infrastructure economics: Even where near‑term margins are thin, the assets (power contracts, facilities, supply-chain access) are scarce and appreciate as demand grows, making returns more plausible on a multi‑year horizon.

Where Behavior Looks Like Speculation

Valuations untethered from unit economics: Application-layer companies priced on usage “hockey sticks” despite high inference costs and unclear gross margins.

Me‑too product risk: Thin “wrapper” apps dependent on the same foundation models face commoditization, weak moats, and distribution challenges.

Overpromised timelines: Claims of imminent artificial general intelligence or fully autonomous agents risk pulling forward expectations, while reliability, safety, and governance gaps persist.

Capital chasing narratives: Rapid fundraising rounds and secondary market activity can inflate prices before product–market fit or enterprise security compliance is proven.

How to Tell the Difference

Cash flow path: Investments tied to capacity, cost reduction, and contracted demand have clearer routes to payback than user‑growth stories with negative gross margins.

Scarcity and control points: Ownership or preferential access to power, advanced packaging, unique data, or distribution channels signals durability.

Substitution risk: Offerings that can be replicated by a prompt, plug‑in, or model parameter change are fragile relative to assets that require time, permits, or capex to duplicate.

Measured progress: Credible operators publish benchmarks that align with customer outcomes (latency, accuracy, compliance), not just model leaderboard wins.

Implications

For investors: Expect dispersion—some infrastructure and workflow players compound, while crowded consumer apps mean‑revert. Prioritize unit economics under stress scenarios (model price cuts, context limits, power constraints).

For operators: Secure energy and supply chains early; design for inference cost discipline; build proprietary data advantages; and align go‑to‑market with compliance and governance needs.

For policymakers: Grid expansion, permitting reform, and data/privacy standards will shape where productive investment clusters and whether speculative excess spills into systemic risks.

Key Takeaways

Both good investment and bad speculation are happening simultaneously.

Durable returns are likelier in scarce, capital‑intensive infrastructure and deeply embedded enterprise use cases.

The riskiest exposures are thin apps with weak moats, aggressive promises, and fragile unit economics.

Disciplined evaluation—of costs, scarcity, and substitution risk—helps navigate the “double bubble.”

AI has a cargo cult problem

FT • October 16, 2025

AI•Funding•InvestmentBubble•CargoCult•CapitalEfficiency•Bubble?

Overview

The piece argues that parts of today’s artificial intelligence boom resemble a “cargo cult”: enthusiastic imitation of the trappings of success—massive spending, grand announcements, and headline-grabbing pilots—without the underlying causal ingredients that produce durable breakthroughs or profits. It warns that “Spending vast sums and inflating an investment bubble is no guarantee of unleashing technological magic,” framing a cautionary stance toward exuberant capital allocation and expectation-setting around AI.

What “cargo cult” means in this context

Borrowed from anthropological shorthand, “cargo cult” behavior arises when actors copy surface rituals of prior success, expecting similar outcomes, while missing the unseen mechanisms that actually drive results.

In AI, this manifests as a belief that bigger budgets, more GPUs, and sweeping “AI-first” slogans will automatically yield competitive moats, productivity surges, or scientific leaps—despite weak evidence of causality in specific use cases.

Symptoms in the current AI cycle

Escalating capital expenditures on compute and data centers billed as inevitable prerequisites for leadership.

Top-down mandates to “add AI” to products or workflows, independent of user need, data readiness, or MLOps maturity.

Valuation premia assigned to AI narratives rather than cash flows, unit economics, or defensible data advantages.

Demos and proofs-of-concept that impress in isolation but fail to translate into reliable, cost-effective production systems.

Why spending alone won’t deliver “technological magic”

Diminishing returns from naïve scaling when data quality, labeling, and feedback loops are the real bottlenecks.

Fragility in real-world deployment: hallucinations, brittleness under distribution shift, and safety/abuse risks.

Operational drag: inference costs, latency, energy constraints, and integration complexity across legacy systems.

Organizational gaps: scarce talent for prompt engineering, evaluation, red-teaming, and post-deployment monitoring.

Governance friction: privacy, IP, and compliance exposures that can erase thin margins or stall rollouts.

Principles to avoid cargo-cult AI

Start with the problem, not the model: define target tasks, success metrics, and alternatives (automation, UX redesign, rules).

Prove value early with small, measurable pilots; track ROI at a use-case level, not by platform spend.

Invest in data flywheels—collection, curation, human feedback, and domain ontologies—that compound over time.

Treat evaluation as a product: establish robust test suites, baselines, and continuous monitoring rather than relying on anecdotal demos.

Be model-agnostic: mix heuristics, retrieval, smaller specialist models, or fine-tunes when they beat frontier systems on cost/performance.

Build guardrails: access controls, privacy-preserving setups, content filters, and incident response.

Implications for stakeholders

Investors: favor evidence of durable data/process moats and efficient customer acquisition over raw compute announcements. Look for cohort-level retention, cost-to-serve trends, and margins resilient to model/provider switching.

Executives: resist “AI theater.” Tie budgets to unit-economic improvements and decommission pilots that fail to clear thresholds.

Policymakers: calibrate incentives to real productivity gains (standards, safety, workforce training) rather than broad subsidies that fuel misallocation.

Builders: pursue niches where domain context and proprietary data trump scale-idolatry; optimize for reliability, latency, and cost curves observable in production.

Key takeaways

Cargo-cult dynamics arise when capital and hype substitute for causal understanding of what makes AI deliver value.

Scale helps, but only if paired with the right data, evaluation, and operations; otherwise, costs compound faster than benefits.

Sustainable advantage is more likely to come from problem-fit, data systems, and disciplined deployment than from headline spending.

Prudent skepticism and rigorous measurement are antidotes to AI bubble mechanics—and the best path to real, compounding impact.

The Frothiest AI Bubble Is in Energy Stocks

WSJ • October 15, 2025

AI•Funding•EnergyStocks•Bubble?

Overview

The article argues that the hottest “AI trade” isn’t in chips or software but in energy companies pitching future solutions to meet AI’s massive power needs. These are largely “concept stocks” that are pre-revenue yet command soaring market valuations on the promise of powering next-generation data centers. As the piece puts it, “Concept stocks with no revenue have soaring valuations.” It adds that “OpenAI CEO Sam Altman has backed the biggest of these energy companies.” Together, these signals have pulled significant speculative capital into early-stage energy technologies tied to the AI boom.

What “concept stocks” means in this context

Companies at the pre-commercialization stage, often with novel or unproven technologies.

No current revenue, limited operating history, and heavy reliance on external financing.

Valuation driven less by near-term cash flows and more by long-dated narratives about market size, strategy, and perceived inevitability of demand.

Why AI is pulling energy valuations higher

The model-training and inference cycles that underpin AI require large, reliable, and low-cost electricity supplies.

Investors extrapolate accelerating compute demand into future electricity demand, bidding up the value of companies that claim they can deliver abundant power.

Narratives around grid constraints and the need for resilient, clean, baseload energy for data centers amplify perceived urgency and potential pricing power.

The signaling effect of high-profile backers

Having OpenAI’s leader associated with the largest of these energy plays serves as a powerful credibility and attention catalyst.

Such involvement can accelerate fundraising, lift private and public comparables, and attract strategic partners.

It also introduces reflexivity: perceived endorsement begets capital, which raises valuations, which in turn appears to validate the thesis—even absent operating revenues.

How these valuations are being justified

Total addressable market framing anchored to the projected growth of AI workloads and data center buildouts.

Mentions of prospective offtake agreements, long-term power purchase structures, or partnerships with hyperscalers—even when non-binding—are treated as traction.

Expectations of regulatory support, incentives, and fast-tracked permitting for critical power projects are factored into models despite timing and execution uncertainty.

Key risks and what to watch

Technology risk: performance, scalability, and reliability must be proven at commercial scale.

Timeline risk: long development cycles can collide with investor patience; delays raise financing needs and dilution.

Regulatory and grid interconnection risk: permits, siting, and transmission remain persistent bottlenecks.

Financing risk: capital intensity is high; cost-of-capital moves can materially reset valuations.

Commercial risk: non-binding MOUs may not translate into contracted revenues; watch for binding offtakes, firm PPAs, and first meaningful revenues.

Milestones to track: commissioning of pilot plants, third-party performance validation, unit economics, and progress toward positive gross margins.

Implications for investors and operators

The AI-energy linkage is real, but valuations may be front-running evidence. Without revenue, price discovery leans on sentiment and celebrity endorsements.

Portfolio construction should reflect binary outcomes: position sizing, diversification across technologies, and clear milestone-based underwriting are critical.

Operators should prioritize transparent technical disclosures, credible roadmaps to commercialization, and binding customer commitments to move beyond narrative-driven pricing.

Key takeaways

“Concept stocks with no revenue have soaring valuations,” centered on the promise of serving AI’s power demand.

The largest of these firms is bolstered by the involvement of OpenAI’s CEO, intensifying investor interest.

Valuations hinge on future demand narratives, offtake expectations, and policy tailwinds rather than current financials.

Scrutiny should focus on binding contracts, verified performance, and the timeline to first revenue and scale.

Chipmaker TSMC reports nearly 40% surge in its net profit, thanks to AI

Fast Company • Associated Press • October 16, 2025

AI•Tech•TSMC•Semiconductors•AIChips•Bubble?

Earnings surge and AI tailwinds

Taiwan’s largest chipmaker reported a near-40% jump in quarterly net profit to a record 452.3 billion New Taiwan dollars (about $15 billion) for the July–September period, beating analyst expectations. Revenue rose 30% year over year, underscoring how demand for advanced processors tied to artificial intelligence continues to power top- and bottom-line growth. As the world’s biggest contract semiconductor manufacturer and a key supplier to Apple and Nvidia, the company is benefiting from a structural upgrade cycle in data centers and devices that increasingly hinge on AI-accelerated computing.

Key figures and context

Net profit: NT$452.3 billion (~$15 billion), a company record.

Profit growth: nearly 40% year over year.

Revenue growth: up 30% year over year for the quarter.

Customer mix: supplies leading-edge chips to Apple and Nvidia, anchoring demand visibility.

Geographic expansion: active fab projects in the United States and Japan.

What’s driving growth

The performance reflects an “AI supercycle” that is pulling forward orders for cutting-edge nodes used in training and inference. As hyperscalers and device makers race to integrate AI capabilities, they are prioritizing access to the most advanced manufacturing capacity. Morningstar analysts captured the backdrop succinctly: “Demand for TSMC’s products is unyielding... We expect AI demand to stay resilient.” That resilience is visible not only in server-class accelerators but also in premium smartphones and PCs where on-device AI features are becoming a differentiator, reinforcing mix improvements and utilization rates at advanced nodes.

Expansion and supply-chain resilience

To hedge geopolitical and supply-chain risk, the company is building fabrication plants in the U.S. and Japan. These moves diversify production away from a single geography while keeping the most sophisticated process know-how under tight control. The firm has committed $100 billion in U.S. investments, including new factories in Arizona, in addition to $65 billion pledged earlier—an investment scale designed to satisfy U.S. customer proximity requirements and policy incentives while maintaining global competitiveness.

Policy pressure and industry politics

Amid ongoing U.S.–China tensions, Washington has amplified calls to rebalance global chip production. The article notes that the U.S. Commerce Secretary, Howard Lutnick, proposed splitting production 50–50 between Taiwan and the U.S., an idea Taiwan rejected given its current manufacturing concentration and strategic advantages. While such proposals signal policy ambition to localize more capacity, the company’s dominant position, capital intensity, and hard-earned manufacturing yields make rapid geographic reallocation challenging. Morningstar’s view—doubting tariffs would materially hinder the company—highlights the pricing power and indispensability conferred by technological leadership.

Implications for customers and competitors

For mega-buyers like Nvidia and Apple, the results validate that leading-edge capacity remains the constraining resource in the AI era. Priority access can translate into product leadership and gross margin leverage.

For rivals and second-source foundries, catching up requires massive capital, ecosystem depth, and proven yields at the most advanced nodes—barriers that are difficult to overcome quickly.

For systems makers and cloud providers, diversified fab footprints in the U.S. and Japan may improve supply assurance and align with “friend-shoring” goals, but cost structures and ramp timelines will shape how much production ultimately shifts.

Outlook

The quarter’s outperformance, combined with robust AI order books and ongoing capacity additions, suggests momentum should continue into subsequent periods. The blend of structural AI demand, premium customer mix, and geographic diversification supports sustained investment and margin durability, even as macro and geopolitical uncertainties persist. In short, the firm is consolidating its role as the keystone of the AI hardware stack—translating technology leadership into record financial results while methodically addressing supply-chain and policy headwinds.

Key takeaways

Record profit and strong revenue growth powered by AI-driven demand.

Strategic U.S. and Japan fabs aim to mitigate geopolitical risk and meet customer proximity needs.

Policy debates over production localization continue, but technological leadership and scale underpin the company’s resilience.

From Sports to AI, America Is Awash in Speculative Fever. Washington Is Egging It On.

WSJ • Greg Ip • October 16, 2025

Essay•Regulation•RetailInvesting•SportsBetting•Crypto•Bubble?

Overview

The piece argues that a broad “speculative fever” has gripped the United States, extending from stock markets to sports wagering and cryptocurrencies. It highlights how “working-class investors are flocking to stocks, betting and crypto,” framing this surge either as the flowering of democratized finance or as late-arrival exuberance that could end painfully. The narrative juxtaposes enthusiasm for risk with the possibility that easy access, cultural normalization, and policy choices have collectively stoked behavior more akin to gambling than long-term investing.

What’s Driving the Boom

Frictionless access: Zero-commission trading, slick mobile apps, and instant deposits make risk-taking feel casual and continuous.

Normalization of betting: The mainstreaming of sports gambling creates a cultural bridge between wagering and short-term trading tactics.

Narrative momentum: AI-fueled growth stories and viral online commentary provide simple, compelling reasons to chase rallies.

Low barriers for new investors: Retail-friendly platforms and fractional shares invite small-dollar participation that can scale quickly in aggregate.

The Role of Policy and Washington’s Influence

Government encouragement of risk-taking shows up indirectly through stimulus-era liquidity, the tolerance of easy money conditions for extended periods, and public-sector enthusiasm for strategic technologies such as AI.

Regulatory posture is presented as ambivalent: while investor protection is cited as a goal, policy signals can legitimize speculative venues and assets by integrating them into the mainstream financial conversation.

Public support for innovation—tax incentives, grants, and procurement—can be read as green lights for risk, even if the intention is industrial strategy rather than market froth.

Risks and Potential Downside

Late-cycle dynamics: When participation broadens to less affluent households, losses can be socially regressive if conditions reverse.

Blurred lines between investing and gambling: Momentum-chasing, leverage, and rapid turnover amplify volatility and behavioral pitfalls.

Fragile expectations: If growth narratives falter or liquidity tightens, today’s democratization could flip into disillusionment, eroding trust in markets and institutions.

Key Quotes and Framing

The article captures the ambivalence succinctly: “working-class investors are flocking to stocks, betting and crypto, beneficiaries of a new age of democratic finance—or the last invitees to a party that’s going to end.” This encapsulates both the promise of broader access and the peril of arriving at the peak of a cycle.

Implications

Policymakers face a trade-off between fostering innovation and curbing excess: calibrating rules to protect newcomers without smothering growth narratives.

Market educators and platforms will need stronger guardrails—clear disclosures, default risk limits, and tools to mitigate impulsive behavior.

For households, the central challenge is distinguishing durable investment from ephemeral speculation, especially when cultural cues and interface design reward speed over patience.

AI Economics Are Brutal. Demand Is the Variable to Watch.

WSJ • Steven Rosenbush • October 14, 2025

AI•Data•Tokens•AIUsage•Demand•Bubble?

Overview

The piece argues that the defining variable for near‑term AI economics is not model breakthroughs or chip supply alone, but demand—specifically, how much AI people actually use. The most practical proxy for that demand is “tokens,” the unit that measures how much text a model processes. The article’s core message is succinct: “Keep an eye on usage of AI, measured in units known as ‘tokens.’ It’s soaring.” This framing positions token consumption as a leading indicator for revenue growth, cost pressure, and market sustainability in an otherwise volatile, bubble‑prone environment.

What Tokens Capture

Tokens represent slices of text (or text‑like data) processed during prompts and outputs. Rising token counts signal that users are submitting more queries, longer prompts, or interacting with AI systems more frequently and in more complex ways. Because tokens accrue with every interaction, they tie directly to compute consumption, billable usage in many pricing models, and the stress placed on infrastructure. In short, token flow converts abstract “AI interest” into measurable demand.

Why Demand Is the Decider

Revenue mapping: In many business models, more tokens translate into higher billable usage, making token growth a direct proxy for top‑line expansion.

Capacity and cost: Surging tokens increase inference workloads, testing data center capacity and driving operational expenses.

Pricing power: If usage grows faster than capacity, providers may sustain or raise prices; if capacity outpaces demand, price competition can intensify.

Adoption depth: Token trends reveal whether AI is moving from experimentation to embedded workflows—longer, more frequent interactions suggest deeper integration.

The Harsh Economics

The article frames AI economics as “brutal” because costs scale with usage, while monetization depends on converting that usage into paying, repeat behavior. High fixed costs to build and maintain models and infrastructure meet variable inference costs each time tokens are processed. If token growth concentrates in low‑monetization use cases, margins compress. If it occurs in premium, enterprise, or mission‑critical workflows, margins can expand. Thus, demand quality matters as much as demand quantity.
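The point about demand quality can be made concrete with a small calculation. The following is a minimal sketch, not taken from the article: the segment names, token volumes, prices, and costs are all hypothetical, and fixed costs are left out so that only the variable inference cost is shown. It illustrates how the same total token volume can yield very different gross margins depending on how much of it is paid.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One slice of usage. All figures are hypothetical illustrations."""
    name: str
    tokens_millions: float    # tokens processed per month, in millions
    price_per_million: float  # revenue per million tokens (0 for free usage)
    cost_per_million: float   # variable inference cost per million tokens


def gross_margin(segments):
    """Return (revenue - inference cost) / revenue, or None if there is no revenue."""
    revenue = sum(s.tokens_millions * s.price_per_million for s in segments)
    cost = sum(s.tokens_millions * s.cost_per_million for s in segments)
    if revenue == 0:
        return None
    return (revenue - cost) / revenue


# Same total volume (1,000 million tokens), different mix of free and paid usage.
free_heavy = [
    Segment("free tier", 800, 0.0, 2.0),
    Segment("paid tier", 200, 10.0, 2.0),
]
paid_heavy = [
    Segment("free tier", 200, 0.0, 2.0),
    Segment("paid tier", 800, 10.0, 2.0),
]

margin_free_heavy = gross_margin(free_heavy)  # 0.0: paid revenue only covers inference cost
margin_paid_heavy = gross_margin(paid_heavy)  # 0.75
print(margin_free_heavy, margin_paid_heavy)
```

In this toy setup token volume is identical in both cases, so volume alone says nothing about margin; the paid share of usage does the work.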

Signals to Watch

Growth rate of tokens per user and per organization—evidence of intensifying engagement versus casual trials.

Mix of short versus long prompts and outputs—indicating complexity and potential value of tasks.

Conversion from free to paid token consumption—proof that usage is monetizable.

Elasticity to pricing and limits—whether usage holds up when guardrails or costs rise.

Token usage during peak periods—stress‑tests for reliability and user tolerance for latency.
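Two of the signals above, tokens per user and free-to-paid conversion, reduce to simple ratios. The sketch below shows one way they might be tracked month over month. The function names and the monthly figures are assumptions made up for illustration, not data from the article.

```python
def tokens_per_user(total_tokens, active_users):
    """Average tokens consumed per active user in a period."""
    return total_tokens / active_users


def growth_rate(previous, current):
    """Fractional change from one period to the next."""
    return (current - previous) / previous


def paid_share(paid_tokens, total_tokens):
    """Share of token consumption that is billed rather than free."""
    return paid_tokens / total_tokens


# Hypothetical monthly snapshots.
month_1 = {"total_tokens": 1_000_000, "active_users": 100, "paid_tokens": 200_000}
month_2 = {"total_tokens": 1_800_000, "active_users": 120, "paid_tokens": 540_000}

tpu_1 = tokens_per_user(month_1["total_tokens"], month_1["active_users"])  # 10000.0
tpu_2 = tokens_per_user(month_2["total_tokens"], month_2["active_users"])  # 15000.0

engagement_growth = growth_rate(tpu_1, tpu_2)  # 0.5, i.e. 50% more tokens per user
paid_share_1 = paid_share(month_1["paid_tokens"], month_1["total_tokens"])
paid_share_2 = paid_share(month_2["paid_tokens"], month_2["total_tokens"])

print(engagement_growth, paid_share_1, paid_share_2)
```

Here usage per user rises faster than the user count, and the paid share of tokens moves from roughly 20% to 30%, the kind of pattern the list treats as evidence of deepening, monetizable engagement.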

Implications

For builders: Optimize for workflows that consistently consume tokens and deliver measurable ROI, not one‑off demos.

For operators: Align infrastructure spend with observed token trajectories to avoid overbuild or bottlenecks.

For investors: Track token volume, growth durability, and monetization pathways to distinguish sustainable adoption from hype.

For policymakers and enterprises: Demand‑driven scaling will surface issues around access, reliability, and responsible use as token loads climb.

Key Takeaways

Demand, expressed through token consumption, is the cleanest real‑time gauge of AI adoption and market health.

Token trends simultaneously illuminate revenue potential and cost exposure, explaining why AI economics can feel unforgiving.

Sustainable value will accrue where rising tokens align with sticky, high‑value use cases and resilient monetization.

Atlassian CEO, Mike Cannon-Brookes on Why Everything is Overvalued & Are We in an AI Bubble

YouTube • 20VC with Harry Stebbings • October 13, 2025

AI•Tech•Valuations•Bubble?

Overview

A wide-ranging conversation examines whether today’s tech markets are overvalued, how real an “AI bubble” might be, and what durable business building looks like through platform shifts. The discussion spans valuation sanity checks, AI’s impact on product design and pricing, the future of engineering work, and leadership principles from decades of company-building. Context on scale underscores the stakes: Atlassian serves 300,000+ customers and generates $5B+ in annual revenue, framing a practitioner’s view rather than a purely investor take. (thetwentyminutevc.com)

Are Markets Overvalued? The AI Bubble Question

The guest bluntly contends that “most of the things are vastly overvalued,” while allowing that a subset is actually undervalued and will be worth far more over time. He stresses the gap between “magical demos” and delivered enterprise value, arguing adoption will take longer than many expect as organizations rework processes, data, and security. He has revised his own priors on AI platform scale, now seeing a plausible path for frontier model companies to reach multi-trillion-dollar outcomes, even if the exact magnitude is uncertain. (texttube.ai)

What Changes In AI And SaaS Business Models

Multimodel by design: Rather than training proprietary models, the approach is to integrate and swap among best‑in‑class models quickly as the landscape evolves, making adaptability a core competency. (texttube.ai)

Design over data determinism: In platform shifts, great product design becomes a primary differentiator; AI will transform applications, but specialized tools will persist where they serve jobs‑to‑be‑done better than generic chat interfaces. (podcosmos.com)

Pricing is in flux: Classic per‑seat pricing strains under AI‑driven productivity. Expect hybrid models blending value‑based and consumption elements, with customer budget predictability still essential. Early AI margins are thin as costs outpace pricing power; defensibility must exceed “wrapper on a model.” (texttube.ai)

Talent, Tools, And Productivity

Despite “vibe coding” and code‑assist tools, the expectation is more engineers in the future, not fewer, because technology creation is not output‑bound; AI accelerates creation, spawning new products, features, and complexity that require expert builders. Internally, teams trial multiple AI dev tools (e.g., Cursor, GitHub Copilot), chosen for complementary strengths; coding is only one part of the engineer’s job alongside problem framing, debugging, integration, and operations. The practical constraint on AI tool pricing is low switching cost: vendors must prove outsized, measurable productivity to command premiums. (businessinsider.com)

Operating In Uncertainty: Strategy And Cadence

Strategy is framed as a series of explicit bets—formed with conviction, reviewed quarterly, and revised without sunk‑cost attachment. Customer conversations are treated as the stabilizing signal through noise; talking to the “right” customers, in sufficient number, builds internal confidence on what’s real versus hype. This cadence enables moving fast without being whipsawed by fashion. (texttube.ai)

Leadership And Company‑Building Lessons

Creative survival: To endure multiple platform transitions, companies must keep creating—sometimes killing their own products—rather than defending incumbency. The existential risk is losing creativity. (podcosmos.com)

Co‑leadership to solo leadership: Lessons from two decades of co‑CEO partnership emphasize mutual respect, complementary strengths, and clear “swim lanes”; the mindset carries into bolder AI‑era moves as a solo CEO. (podcosmos.com)

Strengths as dual‑edged: “Being unreasonable” is cast as both superpower and liability—useful for pushing boundaries, hazardous if untempered—illustrating how leadership traits are two sides of one coin. (texttube.ai)

Key Takeaways

Valuations: Much is overpriced, some is underappreciated; separating signal from hype requires evidence of durable unit economics and moats beyond model access. (texttube.ai)

AI trajectory: Bubble dynamics may be present, but enterprise value accrues on slower timelines than demos suggest; design and workflow change determine real ROI. (podcosmos.com)

Pricing evolution: Expect hybrid models that blend value-based and consumption-based pricing as AI turns productivity into outcomes that must be measured and priced. (texttube.ai)

Talent outlook: AI shifts what engineers do and how they do it, but increases demand for core technologists as creation expands. (businessinsider.com)

The A.I. Bubble Looks Real

New York Times • October 14, 2025

Essay•AI•SpeculativeBubble•DotComBust•HousingCrisisParallels•Bubble?

Thesis

The piece argues that today’s artificial-intelligence surge resembles a classic speculative bubble. Like the dot-com boom and the mid-2000s housing rise, exuberant expectations and rapid capital flows are inflating valuations far beyond what current fundamentals justify. When sentiment reverses, the deflation will be sharp and broadly painful.

Parallels to Past Bubbles

Dot-com echo: As with late-1990s internet stocks, investors are pricing in transformative future profits before sustainable business models are proven, rewarding growth narratives over cash flows.

Housing-crisis rhyme: Easy financing, herd behavior, and the belief that “this time is different” create a self-reinforcing cycle, until credit tightens and confidence breaks.

Mechanisms Inflating the Boom

Narrative momentum: Sweeping claims about productivity, automation, and “general intelligence” attract capital faster than real-world deployment can absorb.

Capital concentration: Money is clustering in a narrow set of platforms and suppliers, pushing a small cohort of names to outsized valuations while masking fragility beneath headline indices.

Costly infrastructure bets: Massive spending on compute, data centers, and specialized chips presumes sustained demand growth; if adoption lags, these fixed costs become a drag.

Signals of Overheating

Valuation stretch: Prices embed optimistic assumptions about market size, margins, and speed of monetization that would require near-perfect execution across multiple layers of the stack.

Copycat investment: Me-too product launches and speculative funding rounds suggest capital is chasing themes rather than defensible moats.

Disconnect with usage: Hype cycles can outpace durable user engagement and willingness to pay, revealing revenue shortfalls when promotional spend slows.

How the Pop Hurts

Corporate retrenchment: Overbuilt capacity and unmet revenue targets lead to write-downs, layoffs, and a wave of consolidation.

Financing squeeze: Risk appetite contracts, starving earlier-stage firms and compressing valuations across the ecosystem.

Real-economy spillovers: Suppliers, contractors, and regional economies tied to data-center and chip buildouts face demand shocks when projects pause.

Implications

Investors should separate durable utility from speculative narratives, stress-test assumptions about adoption curves, and scrutinize unit economics beyond headline growth. Operators need discipline on capex and a focus on verifiable productivity gains for customers. Policymakers and market overseers should be alert to leverage pockets and correlated exposures that could amplify a downturn. The central message: technological promise can be real while pricing becomes unreal—when expectations reset, the damage extends well beyond the most speculative corners.

AGI or Bust, OpenAI’s $1 Trillion Gamble, Apple’s Next CEO?

YouTube • Alex Kantrowitz • October 13, 2025

AI•Funding•AGI•OpenAI•AppleLeadership•Bubble?

Big Picture

The video frames a high-stakes moment for the tech industry around the pursuit of artificial general intelligence and what it would take—financially, organizationally, and societally—to get there. It uses the lens of a potential trillion-dollar-scale capital commitment to explore how leading AI firms might secure compute, energy, and talent at unprecedented levels, and what happens if the bet pays off—or doesn’t. It also considers what kind of leadership will be required at major platform companies to navigate this next era, using Apple’s eventual CEO succession as a case study in how strategy and operational discipline must evolve for an AI-first world.

AGI or Bust: Paths and Trade-offs

Explores why AGI has become a binary rallying cry: either push aggressively for step-change capability gains or risk falling behind in a compounding race for model quality, data, and distribution.

Highlights core bottlenecks—compute availability, high-quality data, and power—arguing that progress hinges on solving these together, not sequentially.

Surfaces safety and governance questions: capability scaling versus controllability, evaluation rigor, and the need for transparent benchmarks and third-party audits as systems begin to act autonomously across tools and networks.

Examines how productization might shift from single-turn chat to agentic workflows that plan, call tools, and interface with enterprise systems—raising new reliability, liability, and UX challenges.

The $1 Trillion Gamble: What It Buys

Breaks down the components of a trillion-dollar push: long-term compute contracts, custom silicon programs, data center build-outs, power procurement (including alternative and renewable sources), and strategic stakes across the supply chain.

Argues that scale alone is insufficient; the wager must unlock defensible distribution: integration into operating systems, productivity suites, developer platforms, and vertical enterprise stacks.

Discusses monetization pressure: the shift from per-seat subscriptions and usage-based APIs to higher-value, outcome-based pricing tied to automation and decision support, with gross margins sensitive to inference costs.

Notes the financing mosaic likely required—corporate cash flows, partnerships, pre-purchase agreements, sovereign/strategic investors—balanced against governance safeguards and public trust.

Apple’s Next CEO: Succession in an AI-First Era

Uses Apple’s leadership trajectory to illustrate how the next top executive at a platform giant must combine operational excellence with a coherent AI and silicon strategy.

Emphasizes on-device intelligence as a differentiator for privacy, latency, and battery life, and the role of tightly coupled hardware, neural accelerators, and software in delivering reliable, everyday AI features.

Considers partner ecosystem implications: aligning incentive structures for developers, content owners, and accessory makers as generative features reshape creation, search, and services revenue streams.

Implications and Signals to Watch

Capital intensity will privilege firms that can secure long-duration power and compute; expect deeper vertical integration and unusual alliances between chipmakers, utilities, and hyperscalers.

Regulation will likely shift from post-hoc content rules to pre-deployment model oversight, evaluation standards, and incident reporting—impacting release cadence and competitive dynamics.

For enterprises, value will migrate from pilots to production-grade agents embedded in workflows; procurement will scrutinize observability, data residency, and total cost of ownership.

Consumer experiences will hinge on reliability and trust more than raw model IQ; brands that make AI disappear into intuitive interfaces may capture durable loyalty.

Key Takeaways

The pursuit of AGI concentrates financial, technical, and governance risk into a few pivotal bets whose outcomes will shape platform power for a decade.

A trillion-dollar commitment is less about headline capex and more about locking in enduring advantages across silicon, energy, and distribution.

Leadership at major platforms must marry supply-chain mastery with responsible AI strategy; succession choices will signal how aggressively each company plans to steer into agentic computing.

BlackRock CEO Larry Fink on the ‘AI bubble’

YouTube • CNBC Television • October 14, 2025

AI•Tech•LarryFink•AIBubble•DataCenters•Bubble?

Overview

In this clip, BlackRock’s Larry Fink weighs in on whether today’s market enthusiasm amounts to an “AI bubble.” His core message separates short‑term equity froth from a long‑duration, real‑economy investment cycle: he argues AI’s fundamentals are anchored in physical buildouts—compute, data centers, power, cooling, and networks—rather than the debt‑fueled speculation that characterized past bubbles. The result: even if valuations correct, the capex supercycle underpinning AI is likely to persist.

What’s driving the cycle

Compute demand and widespread adoption are pushing an unprecedented buildout of data centers. As Fink has noted on CNBC, “as we democratize AI, we need more data centers,” with marquee facilities scaling to roughly 1 gigawatt and costing on the order of “$50 billion per data center.” (cnbc.com)

Power is the binding constraint. In his 2025 investor letter, Fink highlights that a single hyperscale site can draw about 1 GW—roughly the electricity needed to power a major U.S. city on a peak day—forcing urgent investment in generation, transmission, and permitting reform. (blackrock.com)

Risks and market dynamics

Concentration: For now, AI remains dominated by large, cash‑rich platforms because of extraordinary upfront costs, though Fink expects costs to fall over time, broadening access and use cases. (benzinga.com)

Macro spillovers: He has warned that massive AI‑related capex could introduce new inflationary pressures. If inflation reaccelerates, long rates (e.g., the 10‑year Treasury) could revisit or exceed the 5%–5.5% range—an outcome that would “shock” equities and reset risk premia. (benzinga.com)

How this differs from a classic bubble

Cash vs. credit: The current wave is largely financed by corporate cash flows and equity, not highly leveraged balance sheets—making a drawdown less likely to trigger systemic stress.

Tangible assets: Spending is flowing into physical infrastructure (power, land, chips, cooling) that can retain value and utility across cycles, even if some AI software valuations compress.

Implications for investors

Near term: Expect valuation volatility in AI leaders and suppliers as rates, power availability, and GPU supply ebb and flow.

Medium term: “Picks and shovels” exposures—semiconductors, foundry and packaging, power generation and transmission, advanced cooling, specialized REITs, and project finance/private credit—stand to benefit as AI capacity scales.

Policy watch: Permitting, grid interconnects, and energy mix decisions will determine the pace and geography of deployment, influencing relative winners across regions and asset classes. (blackrock.com)

Key takeaways

The market can be frothy, but the AI buildout is a multi‑year capex theme rooted in hard assets.

Power and permitting are now as critical as GPUs to AI’s trajectory.

Rate sensitivity is high: an inflation surprise and higher long yields could reprice AI equities even as infrastructure spend continues. (benzinga.com)

When the Bubbles Burst

Mahablog • maha • October 14, 2025

Essay•GeoPolitics•RareEarths•Bubble?

Here’s an attention grabber: “Why China Can Collapse the U.S. With One Decree.” It’s by David Dayen at The American Prospect, so I’m inclined to take it seriously.

“When Donald Trump reacted to China’s export restrictions on rare earth minerals—a group of 17 chemical elements used in almost all electronics—by threatening a new 100 percent tariff on Chinese goods, Wall Street investors who had yawned at most of his erratic announcements for six months finally took notice. It was the same stock-tanking pressure that led Trump to climb down from his Liberation Day tariffs; sure enough, by Sunday, the TACO (Trump always chickens out) vibes started kicking in.”

“I have a great relationship with President Xi … he’s a great leader for their country, and I think we’ll get it set,” Trump told reporters on Air Force One on the way to Israel. “Don’t worry about China, it will all be fine!” he exclaimed on Truth Social. Next thing you know he’ll be apologizing in Mandarin like John Cena.

Here’s an explanation of the John Cena reference if you’re like me and didn’t get it.

“Holding the president of the United States on a tight leash is certainly a lucrative asset. But investors should probably be more wary of the situation. America has made an unusually directional economic bet that is at this moment totally dependent on Chinese rare earth exports. The circumstances that brought us here long predate Trump and are rooted in decades-long failures to retain our technological know-how and channel it into industrial production. It’s never too late for a wake-up call, but the country is in a terribly vulnerable position where China can snap its fingers and snuff out the only thing propping up our economy.”

Our economy is currently being propped up by what’s looking like an AI bubble. “Close to half of the gain in gross domestic product this year will come from data center construction, and around 80 percent of stock market gains are attributable to a handful of AI-heavy tech companies,” Dayen writes. And then he goes on to describe how we got here. This is the accumulation of a lot of business and government decisions going back many decades. But now that business karma is getting ripe, we’ve got a perfect moron in charge of our economy to steer us through the crisis.

Paul Krugman has a post up about technology bubbles that’s partly hidden behind a paywall. But here’s another article that explains what Krugman wrote.

“In his newsletter on Monday, Krugman said that Trump’s tariff announcements six months ago were ‘a massive betrayal of the world’s trust,’ noting that previous tariff reductions were achieved ‘through many rounds of international negotiations, in which the U.S. and other nations solemnly agreed not to backtrack.’”

“Krugman said Trump now appears surprised that other countries are retaliating, referring to China’s new export controls on rare earths, which include several vital inputs for U.S. industry.”

“Reacting to the administration’s hypocrisy on the matter, Krugman said, ‘Gosh. Aggressive unilateral trade action is a “moral disgrace.” Who knew?’”

“Krugman said that there is ‘one big difference’ between the trade strategies of the two countries, and it is that, unlike the U.S., ‘the Chinese appear to know what they’re doing.’”

OpenAI makes five-year business plan to meet $1tn spending pledges

FT • October 14, 2025

AI•Funding•OpenAI•Infrastructure•DataCenters•Bubble?

Overview

OpenAI has drawn up a five‑year business plan to sustain more than $1 trillion in pledged spending for AI models and infrastructure, outlining new revenue lines, debt financing, and further fundraising to bridge the gap between massive capital needs and current cash flow. The plan seeks to diversify beyond today’s core subscriptions, while sequencing infrastructure buildouts and partnerships to reduce upfront costs and align payments with usage. (reuters.com)

What the plan includes

New products and revenue streams: bespoke AI services for governments and large enterprises; shopping and commerce tools; video creation via Sora; autonomous AI “agents”; and potential entry into online advertising. OpenAI is also weighing consumer hardware developed with Jony Ive as a way to create a differentiated, tightly integrated AI experience. (ft.com)

Funding approach: a mix of additional equity raises, new debt facilities, and structured partnerships in which infrastructure providers shoulder a large share of up‑front capex, with OpenAI paying over time through leases or usage‑based agreements. (reuters.com)

Compute and hardware: continued expansion of the Stargate data‑center initiative and deeper collaboration with Nvidia, AMD and Oracle to co‑develop and secure next‑generation AI hardware at scale, reducing per‑unit compute costs over time. (ft.com)

Scale of commitments and context

The plan follows a year of unprecedented infrastructure announcements around Stargate and related projects. Public remarks from leadership indicate tens of gigawatts of new capacity and capital outlays that could run into the high hundreds of billions of dollars, underscoring why a multiyear financing blueprint is necessary. “This is what it takes to deliver AI,” CEO Sam Altman said while unveiling large data‑center buildouts, framing them as a response to surging demand and the sector’s energy‑intensive compute needs. (cnbc.com)

Current business profile

OpenAI’s annualized revenue is roughly $13 billion, with subscriptions to ChatGPT accounting for a majority of sales. While only a small fraction of ChatGPT’s very large user base pays today, the plan envisions raising conversion and expanding lower‑cost offerings in emerging markets to widen the funnel. This consumer base is complemented by rapid enterprise uptake as companies integrate copilots and domain‑specific models into workflows. (ft.com)

Key financing mechanics

To reconcile near‑term losses with outsize capex, OpenAI is leaning on partner‑financed capacity (e.g., Oracle‑hosted compute), staggered payment schedules, and potential debt secured against contracted demand. The company is also exploring monetization of intellectual property and model licensing to third parties, and could supply compute directly as Stargate campuses come online. Investors and counterparties view falling unit costs and steep demand curves as making the economics workable over a five‑ to ten‑year horizon, though skeptics warn of “circular” financing risks across closely linked partners. (ft.com)

Risks, constraints, and external dependencies

Energy and siting: Multi‑gigawatt campuses face power, land, and water constraints; timelines depend on grid interconnections and new generation, including nuclear and renewables. (cnbc.com)

Market structure: Heavy reliance on a few chip and cloud suppliers raises concentration and pricing risk; co‑development aims to mitigate this but may not fully neutralize supply shocks. (ft.com)

Financial durability: Sustained negative free cash flow during buildout years requires continued access to capital markets and partner balance sheets; execution slippage could amplify financing costs. (reuters.com)

Systemic spillovers: Because many large tech and infrastructure players are now financially entangled with OpenAI’s roadmap, a shortfall in delivery could ripple across suppliers, lessors, and customers. (techcrunch.com)

Why it matters