No Andrew this week, so no video conversation.

Contents

Editorial: What are the Economics of an AI Native Internet? Who Pays Whom?

Essay

AI

Cutting-Edge AI Was Supposed to Get Cheaper. It’s More Expensive Than Ever.

Elon Musk Just Delivered a Ringing Endorsement of the iPhone’s Staying Power

Made by Google 2025, AI Trade-offs, Google and the Long-Term

OpenAI acquires product testing startup Statsig and shakes up its leadership team

Mistral Set for $14 Billion Valuation With New Funding Round

OpenAI set to start mass production of its own AI chips with Broadcom

Media

Venture

The IPO Market Is Opening Up. These 14 Companies Could Be Next.

Anthropic Nearly Triples Valuation To $183B With Massive New Funding

Anthropic Valuation Hits $183 Billion in New $13 Billion Funding Round

Benchmark’s Peter Fenton Isn’t Ready to Call This an AI Bubble

From Frustration to Conviction: What led to starting Allocate and our $30.5M Series B

Jack Altman & Martin Casado on the Future of Venture Capital

GeoPolitics

Regulation

What the Fixes for Google’s Search Monopoly Mean for You: It’s a ‘Nothingburger’

Google Must Share Search Data With Rivals, Judge Rules in Antitrust Case

Read our statement on today’s decision in the case involving Google Search.

Washington doubles down on Big Tech antitrust cases despite Google setback

Tesla Board Proposes Musk Pay Package Worth as Much as $1 Trillion Over Decade

Google Is Fined $3.5 Billion for Breaking Europe’s Antitrust Laws

Anthropic Agrees to Pay $1.5 Billion to Settle Lawsuit With Book Authors

Education

Editorial: What are the Economics of an AI Native Internet? Who Pays Whom?

The Internet is responsible for about $16 trillion of the global $108 trillion annual GDP. Click the link to see my Perplexity space with the numbers.

The Internet is far bigger than the web. The Internet is made up of all activity on top of TCP/IP and the Domain Name System not only web traffic. This includes all apps, messaging services, online games, payments systems, devices and so on. Only about 40% of the $16 trillion is web related value.

AI is broadly anticipated to be the primary engine of digital and non-web economic growth in the coming decade, with consensus estimates ranging from a modest 1–2% boost to global GDP to transformational impacts of up to $20 trillion, much of which will stem from non-web, embedded, and invisible AI-driven flow

At the same time AI is transitioning to an agentic model where invisible browsers like Browserbase act as the ‘reader’ of web based content, subsumed under an AI interface. So even the 40% currently accounted for by the web will no longer be driven by direct consumer browser use.

This has implications for every stakeholder in the Internet economy.

An AI Native Internet is an Internet where the web, apps, payment systems are all interfaces to a new AI canvas or ‘front door’ accessed via devices - mobile devices, AR devices and new yet to appear ones.

The $16 trillion of current value and the potential $20 trillion new value will all migrate to the AI Native internet, or disappear due to failing to make the transition.

For that reason the question of what the business model of the AI Native internet will be is crucial.

The dream scenario is that AI is universal and free to users. Like web search today. Of course that is already somewhat true but the subscription revenues of OpenAI, Anthropic, Google Gemini and others represent the lions share of revenue today, alongside API triggered token use where developers pay per million tokens consumed for inputs and outputs.

The domination of subscription and token based revenues is a function of the current state of AI. It is hard to impossible for AI to inherit the marketing spend that currently goes to Google and other advertising platforms for reasons we discussed last week.

And it is currently hard for content owners to get the traffic from AI, and hence the revenues, that they get from the web and app economy today. Again we discussed why last week, but broadly AI does not send much traffic to content owners.

This week Matthew Prince from Cloudflare was interviewed about a pay per crawl proposal using an http 402 technology that would enforce payment to content owners for a crawl. He suggests $1 per year per MAU(Monthly Active User), from the LLMs to a pool. Then this would be distributed to the content owners. I assume there would be some math creating a pro-rata payout according to some measure of value exchanged.

There is a lot to grasp here, but let’s assume that happens and all 4 billion humans with smart phones are in the MAU, that would create a $4 bn pool annually. It is only a small proportion of $16 trillion. So pay per crawl is definitely a feature of a business model for an AI Native Internet, but it is not the full model. Web advertising alone is a $667 billion portion of current Internet revenue, so one can see that $4 billion is good start, but small.

The logical first question is, how much of the $667 billion from advertising can migrate to this new AI native internet where the web is subsumed under AI and its agents?

For AI that is a pressing question as this week’s articles show that the cost of running AI, especially with reasoning and agents is rising, not falling.

The model we (all builders) should build is an AI Native Internet that is free for users, paid for publishers, and yes, paid for AI’s in return for the traffic they send and the value they create from that. It seems it might collectively be a $16-40 trillion economy in the years ahead.

The virtuous loop we laid out last week moves closer to this: identity for agents, attribution for links, settlement for outcomes. Cloudflare’s pay-per-crawl plus a neutral clearing function (accounting, registries, enforcement) can sit beside an AI-era CPC/CPA/CPM model.

Importantly, AIs shouldn’t only pay—they should also get paid. When they route high-intent traffic that converts on content owner’s sites. This aligns incentives: better answers send better clicks, with auditable receipts. Indeed AIs would earn a lot more than they pay because the crawls revenues they pay out would dwarf the share of the entire economy they are paid.

Tools like Anthropic’s MCP and unified metadata layers make those receipts credible by stitching permissions, provenance, and business context into the workflow.

I believe this needs a single trusted third party being paid by the ecosystem to run such a set of services just as the domain name system has a root server run by ICANN, the AI Native Internet would benefit from a root monetization system.

The .com system is managed by Verisign. Its revenue annually is $1.56 bn of the $16 trillion economy. Add Google’s share of advertising and the share of others providing all of the services, and it is hard to get infrastructure to be even 10% of the $16 trillion. A monetization infrastructure for AI would combine several roles and would represent a tiny fraction of the total value created.

What to watch next

Whether Google offers a true opt-out for AI Overviews—and how fast regulators push interoperability beyond search data.

Adoption of “402 Payment Required”–style signals and pay-per-crawl at scale, turning rights into programmable rails.

If DOJ’s ad-tech remedies force structural change this fall although the transition to AI will render this irrelevant.

Whether chip moves (OpenAI–Broadcom) and model routing meaningfully bend inference COGS for all, reducing AI costs (against the current trend).

The long arc still points to the same equilibrium: free (or near-free) AI for users; real money to publishers for training and traffic; and revenue for AIs that create measurable value in the handoff. Build the identity, registry, and clearinghouse—and everybody wins.

And a final note. The Anthropic settlement with book publishers this week ($1.5 billion) is being widely misinterpreted. Anthropic was not sued for reading publicly available books. And it was not sued for reading books it purchased. The suit was due to the fact that Anthropic read stolen books, and hence breached copyright in the act of stealing. Had it paid for the books no suit would have succeeded. The case does not indicate that copyright protects legally acquired content from being a source of training data. Legally acquired content can be used for training unless the law changes, and there is no indication of that.

Essay

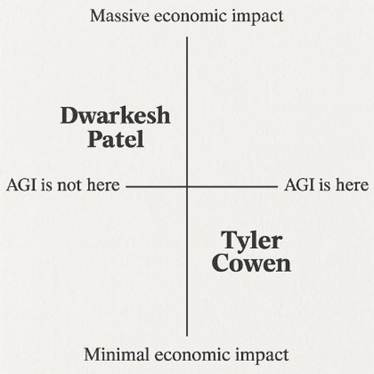

AI and jobs, again

Noahpinion • August 30, 2025

Essay•AI•LaborMarket

The debate over whether AI is taking people’s jobs may or may not last forever. If AI takes a lot of people’s jobs, the debate will end because one side will have clearly won. But if AI doesn’t take a lot of people’s jobs, then the debate will never be resolved, because there will be a bunch of people who will still go around saying that it’s about to take everyone’s job. Sometimes those people will find some subset of workers whose employment prospects are looking weaker than others, and claim that this is the beginning of the great AI job destruction wave. And who will be able to prove them wrong?

In other words, the good scenario for the labor market is that we continue to exist in a perpetual state of anxiety about whether or not we’re all about to be made obsolete by the next generation of robots and chatbots.

The most recent debate about AI and jobs centers around recent college graduates. Derek Thompson wrote a post suggesting that a slowdown in job-finding for recent college grads could be the first sign of the job-pocalypse. A number of news articles ran with this story and treated AI job destruction as a proven fact, but some pundits pushed back on the narrative, citing various data sources. I wrote about the whole controversy in this post: Stop pretending you know what AI does to the economy.

Then, Sarah Eckhardt and Nathan Goldschlag of the Economic Innovation Group, a think tank, came out with some research that found no detectable effect of AI on recent employment trends. (I covered this research in my last roundup post.)

Eckhardt and Goldschlag looked at several measures of which jobs are more “exposed to” AI. They found that for three of the five exposure measures they looked at — including their preferred measure, from Felten (2021) — there was no detectable difference in unemployment between the more exposed and the less exposed workers. But for two of the measures, there was a small difference, on the order of 0.2 or 0.3 percentage points:

The EIG researchers conclude that AI probably isn’t taking jobs yet, and if it is, the effect is still very small at this point.

Eckhardt and Goldschlag were wise to title their research note “AI and Jobs: The Final Word (Until the Next One)”. Indeed, the next word on the topic came out almost immediately, in the form of a paper by Brynjolfsson, Chandar, and Chen, entitled “Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence”.

Brynjolfsson et al. do something very similar to Eckhardt and Goldschlag — they use two measures of how exposed a job is to AI, and then they compare recent employment trends for more and less exposed workers. Their finding is startlingly different than that of the EIG team:

“Our first key finding is…substantial declines in employment for early-career workers (ages 22-25) in occupations most exposed to AI, such as software developers and customer service representatives. In contrast, employment trends for more experienced workers in the same occupations, and workers of all ages in less-exposed occupations such as nursing aides, have remained stable or continued to grow.

“Our second key fact is that overall employment continues to grow robustly, but employment growth for young workers in particular has been stagnant since late 2022. In jobs less exposed to AI young workers have experienced comparable employment growth to older workers. In contrast, workers aged 22 to 25 have experienced a 6% decline in employment from late 2022 to July 2025 in the most AI-exposed occupations, compared to a 6-9% increase for older workers. These results suggest that declining employment AI-exposed jobs is driving tepid overall employment growth for 22- to 25- year-olds as employment for older workers continues to grow.”

The $20/Month Software Revolution

Tomtunguz • August 30, 2025

AI•Tech•Vibe Coding•Low Code NoCode•Software Development Tools•Essay

Software development has operated within established boundaries for decades, with clear divisions between those who code & those who don’t.

But what happens when those boundaries dissolve overnight, & anyone can build functional applications for the price of a monthly streaming subscription?

For twenty years, professional software development meant specialized teams, lengthy sprints, & rigorous adherence to architectural best practices. Companies invested months in planning, weeks in development cycles, & substantial resources in quality assurance. The barrier to entry remained high, protecting established players & maintaining predictable market dynamics.

AI coding tools have shattered this equilibrium. Cursor, Lovable, & similar platforms now enable non-technical founders to prototype working applications in hours rather than months. The result is an explosion of vibe coding: intuitive, rapid application development that bypasses traditional workflows entirely.

This shift has created a fascinating paradox for founders & investors. The current landscape rewards speed over sophistication, with spaghetti-coded solutions often outpacing polished alternatives to users’ laptops. Yet this same accessibility threatens to commoditize software development itself, raising questions about where sustainable competitive advantages will emerge.

The answer lies in recognizing this period as history’s largest distributed market research experiment. Thousands of individual users are discovering optimal AI-enhanced workflows through trial & error, essentially crowdsourcing the future of software development. Smart players should treat this chaos as intelligence gathering rather than noise.

The strategic opportunity exists in two phases.

First, embrace experimentation during this chaotic period because the cost is minimal, & the learning is invaluable. Second, prepare for consolidation by identifying workflow patterns that demonstrate real user adoption & commercial viability.

The current vibe-coding era won’t last forever. Eventually, brilliant practitioners in each domain will distill optimal workflows from this experimentation, commercialize them, & establish new industry standards.

The companies that recognize these emerging patterns earliest & build robust, scalable versions before the market standardizes will define the next generation of software development tools.

The glee of solving problems with $20-per-month AI tools represents more than convenience; it signals a fundamental restructuring of how software gets built & who gets to build it.

Opinion | AI Won’t Eliminate Scarcity

Wsj • September 2, 2025

Essay•AI•Scarcity•Labor Markets•Automation

Jobs in market economies will disappear only when unmet human desires disappear.

AI will change how we build startups -- but how?

Andrew Chen • September 3, 2025

Essay•AI•Home Screen Test•Defensibility•AI Agents

Thesis and Early Signal

The piece argues we’re in the “golden age of AI,” yet adoption in everyday software remains nascent. The litmus test is the “Home Screen Test”: out of a typical 4x7 grid of 28 phone apps, how many are truly AI‑native—and how many were built with AI coding tools? Beyond obvious LLM apps, the answer is near zero, highlighting a vast, untapped opportunity. Today’s shift amounts to “I Google less and prompt more,” but the author contends we’re just at the beginning of how AI will reshape both products and the companies that create them. “We still don’t know a lot,” he writes, framing the post as a map of open questions rather than definitive predictions.

Open Questions That Will Shape Startup Building

Team size and leverage: Will AI shrink headcount via 1000x leverage and agentic workflows—e.g., one person supervising “1000 agents who code all day”—or will human bottlenecks like taste, design, and operations still force hiring?

Defensibility in a fast-copy world: If AI commoditizes capabilities and replication is instant, what constitutes a moat? Is it distribution and network effects (as the mobile era suggested), relentless iteration speed, or multi‑year, CapEx‑heavy bets (space tech, industrial hardware) that are harder to clone?

Cost to build vs. cost to grow: Models are capital intensive but app development should be cheaper; however, distribution remains expensive. Even when it’s cheap to build, acquiring users can still cost millions, and attention scarcity may be the true limiter.

Team structure: Does the classic triad of product, engineering, and design persist if multimodal AI can translate PRDs and wireframes directly into software? Or do roles collapse into integrated “product engineers” orchestrating model/tool chains?

Geography: Does the Bay Area’s network effect persist or weaken as talent, knowledge, and tooling globalize? If AI makes product creation akin to content creation, will founders emerge everywhere and only aggregate in hubs when needed?

Venture model and stage definitions: If two builders can reach profitability quickly, does venture capital shift from risk to growth capital—and become more globally distributed? Do traditional stage labels (preseed/seed/Series A/B/C) blur as some projects jump straight to later stages while others remain bootstrapped side projects?

Historical Precedent and Organizational Evolution

The argument situates AI within centuries‑long shifts in production and organizational form: from pre‑industrial cottage workshops to industrial factories, corporations, and professional management. It invokes the 1600s rise of long‑distance trade, the limited liability corporation, and the East India Company—whose standing army reportedly reached 260,000—to show how technological leaps often require new business structures to coordinate capital, labor, and risk at scale. By analogy, a world of agents, compute, and models likely demands new organizational architectures beyond today’s startup template.

Two Plausible Futures

Optimistic: AI‑native startups need far fewer people to ship more, with defensibility emerging from breakthrough features rather than mere distribution muscle. Startups become cheaper to build, and hubs like the Bay Area remain magnets for expertise and capital, even as creation globalizes.

Pessimistic (or centralizing): Winners are those with hyperscale data centers, privileged data access, and enormous compute budgets. AI features don’t solve distribution; incumbents retrofit AI into existing products, leveraging their channels to outcompete startups. The key strategic question becomes: “Will incumbents get innovation first? Or startups get distribution first?”

Near‑Term Trajectory

The center of gravity shifts from frontier research to product execution. With foundation models “asymptoting,” the next wave is model‑agnostic builders who orchestrate best‑of‑breed models, layer compelling UI/UX, and embed business logic specific to verticals. Expect a spectrum from horizontal tools to industry roll‑ups, where software plus AI drives consolidation and operational advantage. Speed of iteration, tight feedback loops with users, and the ability to marry domain insight with agentic automation may matter more than training proprietary foundation models.

Implications and Takeaways

Moats may refocus on distribution, data aggregation loops, ecosystem lock‑in, and rapid product cadence—unless a product’s economics are anchored in multi‑year CapEx and regulation‑defined barriers.

Recruiting and org design will prioritize “full‑stack product” talent who can specify, prompt, evaluate, and ship using AI toolchains, while human judgment (taste, ethics, safety, and market intuition) becomes the scarcest input.

Go‑to‑market remains the hardest problem: expect more capital flowing into growth than into initial build, and more experimentation with novel distribution (bundles, embedded workflows, and partnerships).

Geography diversifies creation; hubs still aggregate scaling knowledge and capital.

Venture capital becomes more modular—funding experiments, growth sprints, or roll‑ups—while stage labels get fuzzier.

Key takeaways:

The “Home Screen Test” suggests AI’s consumer impact is still early despite massive promise.

Organizational forms will evolve to harness agentic and multimodal workflows.

Moats in software may erode; defensibility shifts to speed, distribution, and hard‑to‑copy CapEx.

The next wave is model‑agnostic, vertical, UI‑ and business‑logic‑led.

The strategic race pits incumbent distribution against startup innovation velocity.

Almost anything you give sustained attention to will begin to loop on itself and bloom

Henrik karlsson • Henrik Karlsson • September 4, 2025

Essay•Education•Attention•Dopamine•Neuroscience

Brioches and Knife, Eliot Hodgkin, 08/1961

When people talk about the value of paying attention and slowing down, they often make it sound prudish and monk-like. Attention is something we “have to protect.” And we have to “pay” attention—like a tribute.

But we shouldn’t forget how interesting and overpoweringly pleasurable sustained attention can be. Slowing down makes reality vivid, strange, and hot.

As anyone who has had good sex knows, sustained attention and delayed satisfaction are a big part of it. When you resist the urge to go ahead and get what you want and instead stay in the moment, you open up a space for seduction and fantasy. Desire begins to loop on itself and intensify.

I’m not sure what is going on here, but my rough understanding is that the expectation of pleasure activates the dopaminergic system in the brain. Dopamine is often portrayed as a pleasure chemical, but it isn’t really about pleasure so much as the expectation that pleasure will occur soon. So when we are being seduced and sense that something pleasurable is coming—but it keeps being delayed, and delayed skillfully—the phasic bursts of dopamine ramp up the levels higher and higher, pulling more receptors to the surface of the cells, making us more and more sensitized to the surely-soon-to-come pleasure. We become hyperattuned to the sensations in our genitals, lips, and skin.

And it is not only dopamine ramping up that makes seduction warp our attentional field, infusing reality with intensity and strangeness. There are a myriad of systems that come together to shape our feeling of the present: there are glands and hormones and multiple areas of the brain involved. These are complex physical processes: hormones need to be secreted and absorbed; working memory needs to be cleared and reloaded, and so on. The reason deep attention can’t happen the moment you notice something is that these things take time.

What’s more, each of these subsystems update what they are reacting to at a different rate. Your visual cortex can cohere in less than half a second. A stress hormone like cortisol, on the other hand, has a half-life of 60–90 minutes and so can take up to 6 hours to fully clear out after the onset of an acute stressor. This means that if we switch what we pay attention to more often than, say, every 30 minutes, our system will be more or less decohered—different parts will be “attending to” different aspects of reality. There will be “attention residue” floating around in our system—leftovers from earlier things we paid attention to (thoughts looping, feelings circling below consciousness, etc.), which crowd out the thing we have in front of us right now, making it less vivid.

AI

Cutting-Edge AI Was Supposed to Get Cheaper. It’s More Expensive Than Ever.

Wsj • Christopher Mims • August 29, 2025

AI•Tech•InferenceCosts

Overview

The article argues that building on top of today’s most capable AI models is getting pricier, not cheaper, especially for startups and small developers that rely on API access from large providers. As models “think” more—doing deeper reasoning, calling external tools, and processing longer prompts and outputs—the compute and token usage behind each user request expands. That extra “thinking” translates directly into higher, less predictable costs for companies that don’t control the underlying infrastructure but must absorb or pass on per-call fees to customers. The result is a growing squeeze on margins and business models for AI-first apps and services that had expected declining costs over time but are now encountering the opposite dynamic. The piece frames this as a structural shift: cutting-edge capability now often implies more computational work per task, which small players pay for by the request.

What’s Driving Costs Up

More tokens per interaction: Richer prompts, longer context windows, and verbose outputs increase token counts and therefore bills.

Deeper reasoning steps: Models increasingly perform multi-step “reasoning” or tool use behind the scenes; each step consumes compute, so more “thinking” can mean multiple model calls per task.

Chaining and orchestration: Complex workflows (retrieval, planning, code execution, and verification) require several sequential model invocations, compounding per-request cost.

Latency and reliability targets: To deliver fast, consistent responses, startups may pay for higher-tier services or additional capacity headroom.

The Startup Pinch

Unpredictable cost of goods sold: Per-token and per-call pricing turns usage spikes into budget shocks, complicating pricing plans and unit economics.

Product constraints: Teams redesign features to cap cost—shorter prompts, stricter output limits, or fewer automated steps—sometimes at the expense of quality.

Monetization challenges: Consumer apps hit paywalls quickly; enterprise sellers push higher-priced tiers to cover COGS, narrowing addressable markets.

Competitive disadvantage: Companies without GPU access or favorable platform terms face higher marginal costs than incumbents with scale or in-house models.

Operational Responses

Model routing: Direct simple tasks to smaller, cheaper models while reserving premium models for high-stakes queries.

Prompt and context hygiene: Aggressively trim context, deduplicate retrieved documents, and compress histories to reduce tokens.

Tooling discipline: Limit unnecessary tool calls and “reasoning depth”; set compute budgets that bound internal steps per request.

Caching and reuse: Store frequent responses, intermediate results, and embeddings to avoid repeated computation.

Specialized models: Fine-tune compact models for specific domains, trading some peak performance for predictable, lower-cost inference.

Strategic Implications

Platform power consolidates: As costs rise with capability, the providers who control the best models and hardware capture more value, increasing dependence for downstream developers.

Differentiation shifts: Durable advantage moves from generic “we added AI” features to proprietary data, workflows, and integrations that justify higher prices and reduce wasteful calls.

Efficiency becomes a moat: Startups that bake cost-awareness into architecture—budgeted reasoning, partial on-device inference, smart retrieval—gain resilience.

Market segmentation: Premium, high-reasoning experiences cluster in enterprise or mission-critical use cases; consumer offerings gravitate toward smaller models or constrained features.

Key Takeaways

“With models ‘thinking’ more than ever,” each user interaction can trigger extra compute and multiple API calls, raising per-request costs.

Startups relying on third-party AI face margin pressure, pricing complexity, and design trade-offs that challenge scale.

Cost control now sits alongside accuracy and latency as a core product requirement; architecture and process choices materially impact unit economics.

The near-term arc favors companies with efficiency tooling, proprietary data advantages, or hybrid stacks that minimize expensive calls without degrading outcomes.

Elon Musk Just Delivered a Ringing Endorsement of the iPhone’s Staying Power

Wsj • August 31, 2025

AI•Tech•Apple

Overview

“The billionaire’s lawsuit against the tech giant shows the iPhone still holds sway in AI’s future.” That core claim frames the dispute not just as a legal fight, but as a signal of where power resides in consumer artificial intelligence: with the platform that owns the hardware, default settings, and distribution. The piece argues that the iPhone, as the most influential mobile gateway for apps and assistants, remains the decisive chokepoint for how AI reaches everyday users, and that high‑profile legal pressure underscores this leverage rather than diminishes it.

Why a lawsuit highlights iPhone’s leverage

A legal challenge aimed at shaping how AI integrates with the iPhone implicitly acknowledges Apple’s gatekeeping role. Control over system defaults (which assistant wakes with a button press or wake word), on‑device permissions (microphone, camera, notifications), and App Store policy (what is allowed to run natively or as an extension) determines which AI experiences achieve mass adoption. By contesting these levers through the courts, a billionaire founder is, in effect, validating that the path to AI ubiquity still runs through iOS.

Distribution > model quality

The article positions distribution as the scarce resource. The best model or chatbot can lose if it cannot be the default experience on the device people carry most often. Placement on the iPhone home screen, deep OS hooks, and seamless hand‑offs across services can outweigh incremental model performance. The lawsuit thus reads as a play to influence distribution terms—either by forcing fairer access to defaults or by deterring platform moves that could entrench a rival assistant.

On‑device AI and privacy optics

Another theme is on‑device processing. The iPhone’s emphasis on running key tasks locally—paired with hardware optimized for machine learning—lets Apple argue for privacy‑preserving AI experiences. Any suit contesting how third‑party AI plugs into that architecture must contend with a narrative that ties device control to user trust and safety. That dynamic raises the bar for challengers: they must show both superior capability and trustworthy integration to gain iPhone‑level distribution.

Implications for developers and rivals

Developers: Expect continued pressure to align with platform policies and entitlements that govern background processing, data access, and API usage for AI features. Compliance may become the cost of reaching the iPhone’s audience.

Rivals: If you can’t win the default, you must win through standout utility in niche workflows, enterprise channels, or cross‑platform network effects. Legal routes may nudge policies at the margins but won’t replace the need for product‑led pull.

Consumers: Competition could yield better assistants, but short‑term frictions—permission prompts, switching costs, fragmented experiences—are likely as stakeholders battle for the primary interface slot on the iPhone.

Strategic read‑through

The conflict is a referendum on who sets the rules for the AI era: model makers or mobile platform owners. By centering the iPhone, the article suggests the latter still command decisive advantage. Litigation, then, becomes both tactic and testimony—an acknowledgment that shaping iPhone integration terms may matter more than marginal gains in AI accuracy. Until distribution unbundles from the device, the iPhone’s gravitational pull will continue to define which AI agents become everyday habits.

Key takeaways:

The lawsuit is less about damages and more about access to the iPhone’s distribution levers.

Defaults, OS‑level hooks, and App Store rules determine AI adoption speed.

On‑device processing and privacy positioning reinforce Apple’s control narrative.

Competitors must pair legal strategies with product differentiation and alternative channels.

Made by Google 2025, AI Trade-offs, Google and the Long-Term

Stratechery • Ben Thompson • September 2, 2025

AI•Tech•Pixel

Good morning,

Recently on Sharp Tech, Andrew and I covered Nvidia and China, the U.S. taking a stake in Intel, and K‑Pop Demon Hunters.

Made by Google 2025

Two weeks ago, Bloomberg reported Google’s newest slate of consumer hardware: a Pixel 10 lineup (standard, Pro, Pro XL, and Pro Fold) ranging from $800 to $1,800, a Pixel Watch 4 at $350–$400, and budget Pixel Buds 2a at $130. The pitch centered on deeper Gemini integration, complete with playful teases at Apple and an “ask more of your phone” tagline.

Apple’s September event is imminent and usually a glossy 90‑minute commercial; that predictability is why Google’s show felt worth writing about: it was something new.

As The Verge noted, the keynote resembled a Tonight Show taping. Jimmy Fallon hosted; Rick Osterloh did a sit‑down “interview” instead of pacing a stage; pre‑taped segments rolled between live bits. Afterward, Fallon and Googlers moved set‑to‑set in a QVC‑style tour of Pixel 10, Pixel Watch 4, and Buds 2a. Cameos and influencers showcased Gemini features; a Jonas brother premiered a “Shot on Pixel 10 Pro” video; Lando Norris and Giannis Antetokounmpo crossed sports with Gemini coaching.

Some called it cringe; I liked the novelty. More importantly, it worked: last year’s Made by Google drew about 1.3 million YouTube views, while 2025 has already surpassed 8 million—Google boosted reach by borrowing celebrity. Still, the gap remains: Apple’s 2024 iPhone event and Samsung’s July 2025 Unpacked each sit around 27 million. For them, the phones are the stars; Google hired them.

The Gross Margin Debate in AI

Tanayj • September 2, 2025

AI•Tech•GrossMargins•InferenceCosts•PricingModels

Overview

The piece maps where gross margins sit across the AI stack in 2025 and offers pragmatic guidance for application builders. It argues that while chip and cloud vendors are monetizing AI demand with healthy economics, margin pressure is most acute at the application layer, especially for coding assistants where users perceive quality on every keystroke. The author’s through line: optimize model choice and workflow control, diversify revenue beyond tokens, iterate pricing, and remember that net margin (not just gross) ultimately determines business quality.

Where margins sit today

Chips: Nvidia continues to capture premium economics, with gross margins around 70% after excluding one‑offs.

Cloud: Hyperscalers don’t break out AI product gross margin, but reported figures imply healthy profitability with some AI drag. AWS posted roughly 33% operating margin for the quarter (36.7% TTM). Microsoft said Microsoft Cloud gross margin is 69% and explicitly noted AI infrastructure is pressuring the percentage. Google Cloud delivered ≈21% operating margin. Net: platforms monetize AI while funding the buildout; estimated gross margins are in the ~50–55% range for AI services at some providers.

Models: Outside estimates put OpenAI’s gross margin near ~50% and Anthropic around ~60%, with a mix of consumer and API lines. Training costs are not included in these gross margin figures.

Applications: The widest dispersion. Bessemer’s 2025 dataset shows fast‑ramping “Supernovas” averaging ~25% gross margin early (many even negative), while steadier “Shooting Stars” trend closer to ~60%. Methods of gross margin calculation vary (e.g., whether free users’ costs are included), complicating comparisons.

Why application margins vary

Inference cost curves vs. model choice: For a given model, inference costs may fall 80–90% annually, but top‑end model prices have stayed flat or risen (as Ethan Ding’s analysis notes). The pivotal question: must you use the frontier model on every request, or simply meet a quality bar? If the latter, routing most traffic to cheaper models and bursting to frontier only when needed preserves margin; if the former, customer expectations will compress margins unless pricing mirrors usage.

Control and workflow depth: When customers demand the best model always, your COGS ride someone else’s price card. Fixed workflows (e.g., document processing, IVR) allow vendors to own acceptance criteria, default to cheaper models, and escalate only on hard cases. Depth matters: collaboration, versioning, audit, analytics, governance, and integrations push you beyond “a wrapper over a model,” improve ACVs, and nudge app‑layer margins toward SaaS‑like territory.

“Do not live or die on tokens alone”

Hosting and deploy: Platforms like Bolt and Replit monetize runtime, bandwidth, storage, domains, and private deployments once projects live in their environments—raising ARPU and decoupling margin from token pricing.

Marketplaces and services: Replit’s Bounties take a 10% fee—clean non‑inference revenue.

Advertising and affiliate: OpenAI has piloted checkout inside ChatGPT with Shopify, creating potential commission revenue on free tiers; Perplexity has tested sponsored follow‑up questions. Expect more ad formats to reach consumer chatbots over time.

Pricing model iteration as a margin lever

Teams are moving beyond simple per‑seat plans due to power‑user cost spikes. Emerging patterns include seat plus pooled credits, usage with included allowance and clear pass‑through, token bundles with rollover, and BYOK for heavy cohorts.

Replit has iterated across these ideas and saw gross margins swing meaningfully even as revenue grew. Anthropic disclosed that under an earlier Claude Code pricing structure, a $200 plan could lose “tens of thousands of dollars per month” for some power users—underscoring the need for pricing aligned to cost drivers.

Net margins, not just gross

Emphasizing gross margin alone misses the strategic trade: some teams accept thinner early gross margins for product‑led distribution and faster scale, lowering S&M and even G&A as a percentage of revenue.

Bessemer’s “Supernova” pattern captures this: very high ARR per employee, initially thin margins that thicken with routing, workflow depth, and pricing improvements. “The goal is not a perfect gross margin in isolation. The target is a healthy net margin profile as cohorts mature.”

Key takeaways for builders

Route intelligently: meet a quality bar with tiered models; burst to frontier only when necessary.

Own the workflow: deepen control, acceptance criteria, and integrations to shift from “access to a model” to durable SaaS economics.

Diversify revenue: monetize hosting/deploy, marketplaces, and ads/affiliate to decouple from inference COGS.

Fix pricing: combine seats with credits, pass‑through heavy usage, offer bundles/BYOK, and continuously tune tiers for power users.

Optimize for net margin: trade selective gross margin for distribution where it accelerates growth and improves overall unit economics over time.

OpenAI acquires product testing startup Statsig and shakes up its leadership team

Techcrunch • September 2, 2025

AI•Data•OpenAI•Statsig•Leadership

What happened

OpenAI is bringing the founder of product testing startup Statsig into the company as its CTO of Applications and is making additional changes across its leadership ranks. Alongside the personnel shift, OpenAI is moving to embed experimentation and product analytics capabilities more deeply into how it designs, builds, and scales its application-layer experiences. The appointment signals a tighter integration between cutting-edge model research and the practical, metrics-driven craft of shipping products that perform reliably for consumers and enterprises alike.

Why this matters

Statsig’s core competency is rigorous product experimentation—A/B testing, feature flagging, rollout control, and trustworthy metrics. Bringing that mindset and toolkit into OpenAI’s leadership suggests a stronger commitment to rapid iteration grounded in measurable outcomes.

A dedicated “CTO of Applications” role clarifies accountability for the app layer (for example, consumer- and enterprise-facing interfaces and workflows) distinct from foundational model research and infrastructure. That separation can accelerate decision-making while improving quality, safety checks, and shipping velocity.

Leadership adjustments often precede product roadmap shifts. Expect tighter feedback loops between usage data, experimentation results, and feature prioritization, with expansion of observability and reliability practices inside OpenAI’s app stack.

What the CTO of Applications is likely to drive

Execution at the interface between advanced models and end-user value: translating model capabilities into durable features, workflows, and APIs that solve concrete user problems.

A unified experimentation framework so teams test consistently, compare apples-to-apples metrics, and avoid “metric drift.” This includes disciplined guardrails for rollouts, canaries, and reversions when features underperform.

Better “product fitness functions” that combine quantitative signals (engagement, latency, cost-to-serve, error rates) with qualitative feedback loops (UX research, enterprise requirements), enabling faster, safer iteration.

Stronger alignment with trust and safety: building pre-deployment checks and post-deployment monitoring directly into the product pipeline, so risk assessment happens continuously rather than episodically.

Implications for users and developers

Users should see more frequent, controlled improvements in application features, accompanied by clearer changelogs and faster fixes when regressions occur.

Enterprises may benefit from more predictable performance and governance features—versioning, auditability of changes, and opt-in controls during feature rollouts—reflecting experimentation best practices adapted to regulated environments.

Developers could get richer telemetry and evaluation tools around the application layer, making it easier to diagnose model- vs. product-level issues, measure business impact, and optimize prompts or fine-tuning strategies within product workflows.

Organizational and market context

The leadership shake-up concentrates product authority and makes experimentation a first-class function. That tends to reduce handoffs, shorten cycle time from idea to live test, and institutionalize “evidence over intuition.”

In a competitive AI market, the differentiator is increasingly the application experience—latency, reliability, cost control, and task completion rates—rather than raw model specs alone. Elevating an applications-focused CTO reflects that reality and could pressure rivals to tighten their own experimentation pipelines.

For the broader ecosystem, deeper in-house experimentation at OpenAI may reduce reliance on external testing tools for its flagship products, while raising the bar for what customers expect from AI application quality and transparency.

Key takeaways

OpenAI is adding the founder of a product experimentation startup as CTO of Applications and reshaping other leadership roles to emphasize measurable product excellence.

Expect faster, data-driven iteration across consumer and enterprise apps, with consistent A/B testing, feature flagging, and rollout controls built into the development lifecycle.

The move signals that the application layer—not just model breakthroughs—will be central to OpenAI’s next phase of differentiation, with stronger ties between safety, reliability, and product performance.

Users, enterprises, and developers should anticipate clearer metrics, improved stability, and more deliberate governance in how features are released and evaluated.

🔮 Could AI offset baby boomers retiring?

Exponentialview • September 3, 2025

AI•Work•Productivity

Overview

The piece argues that two powerful forces—ageing demographics and accelerating AI—are set to counterbalance one another in the United States over the next decade. With a record number of Americans turning 65 in 2025 and roughly 16 million expected to retire by 2035, the shrinking worker-to-retiree ratio threatens growth, strains Social Security, and elevates healthcare demand. Yet, drawing on long-run data and a structured forecasting approach, the author contends that AI-enabled productivity could offset much of this demographic drag. “Most American workers will feel AI’s impact – but not as a replacement,” the essay stresses, positioning technology as a productivity catalyst rather than a job destroyer.

How AI could offset the “silver tsunami”

The argument hinges on task-level, not job-level, analysis. Examining more than 800 U.S. occupations, the research finds that about four in five roles are likely to see a blend of automation and augmentation, yielding roughly 43% time savings on current task bundles. Only about 16% of jobs—those with highly repeatable tasks and 40%+ automatable time—face significant displacement risk. Examples span from routine office tasks (scheduling, data entry, project coordination) to portions of programming work. Crucially, the freed capacity can be reallocated to higher-value, human-centric tasks, lifting service quality and overall output—akin to “boomers never retiring” in macroeconomic effect.

Model, data, and scenarios

Underpinning these conclusions is the Vanguard Megatrends Model, a forward-looking framework that integrates technology, demographics, globalization, and fiscal debt. The model’s engine is a vector autoregression tracking 15 indicators (e.g., real GDP, inflation, rates, labor force participation, equity valuations) over 130 years and billions of historical data points. From this, two main scenarios emerge for the 2030s:

Productivity Surges (45–55% probability): AI matures into a general-purpose technology—like electricity—beating the productivity impact of the PC and the internet by the early 2030s, enabling near 3% U.S. real GDP growth, the fastest since the late 1990s. Stronger growth helps restrain inflation and narrows deficits via higher tax receipts.

Deficits Drag (30–40% probability): AI underdelivers while public deficits keep climbing. Higher interest and borrowing costs slow credit formation; inflation proves sticky; homeownership erodes; U.S. growth converges toward a lower European-style pace. Monetary policy provides limited relief; the key lever remains how organizations deploy technology to raise productivity.

Impacts on occupations and skills

The essay emphasizes evolution over elimination of jobs. In healthcare, for instance, AI transcription and NLP can shift nurses’ time from EHR data entry to patient care. In education, HR, and pharmacy, augmentation enhances service quality and throughput. Programmers may be displaced from certain coding and testing tasks (roughly 45% of their day), but many can transition into AI-oriented roles due to task overlap across computing occupations. The durable skills set is distinctly human: critical thinking, creativity, emotional intelligence, and complex problem-solving—especially in people-facing sectors (healthcare, education, social work). While STEM wage premia may compress, analytical and tech fluency remain valuable for integrating AI into workflows.

Policy and organizational responses

Past transitions caution against neglecting workers. Policymakers can reduce frictions by pruning unnecessary credentialing, easing occupational licensing, and widening access to affordable reskilling pathways. Employers, learning from the post-COVID recovery, can broaden hiring pipelines and recognize skill portability rather than privileging narrow credentials. The goal is to accelerate mobility from automating roles into augmented ones, shortening income disruptions and preserving community tax bases.

Investor implications

For savers and asset allocators, the essay advises acting on probabilities rather than hype. If AI proves general-purpose, benefits will radiate beyond the “Magnificent Seven” to downstream adopters and newly created industries. Given the uncertainty across the two scenarios, broad diversification and a long-term horizon remain first principles. Portfolio design should be resilient to both an AI-led productivity boom and a deficits-led drag, with capacity to adapt as realized productivity signals and fiscal dynamics unfold.

• Key takeaways

AI’s impact will be pervasive but primarily augmentative; displacement is concentrated in about 16% of jobs with high automatable task shares.

Average task-level time savings around 43% can meaningfully boost output and quality without wholesale job loss.

Near-3% U.S. GDP growth in the 2030s is plausible under a productivity surge, potentially the strongest since the late 1990s.

A deficits-drag path remains a sizable risk; monetary policy alone cannot solve productivity shortfalls.

Policy should target mobility: reduce credential barriers, expand reskilling; employers should hire for skills, not just pedigrees.

For workers, human-centric competencies—critical thinking, creativity, EQ, complex problem-solving—are the safest against automation.

For investors, diversify beyond headline AI leaders and position for broad-based productivity diffusion while hedging fiscal and rate risks.

Context is Important, Metadata Provides It

Medium • ODSC - Open Data Science • September 3, 2025

AI•Data•ModelContextProtocol

Why Context Is the Missing Ingredient in AI Workflows

The article argues that many failures in data science and AI—flawed assumptions by practitioners, misleading recommendations from agents, and superficially plausible LLM outputs—stem from a lack of organizational context. Even intelligent systems with ample raw data falter when they cannot access the right business-specific information, relationships, and permissions. The core claim is that context must be both accessible and structurally coherent for AI to make reliable, auditable decisions. As the author puts it, “context alone isn’t good enough”; it must be unified and governed to be useful across teams and use cases.

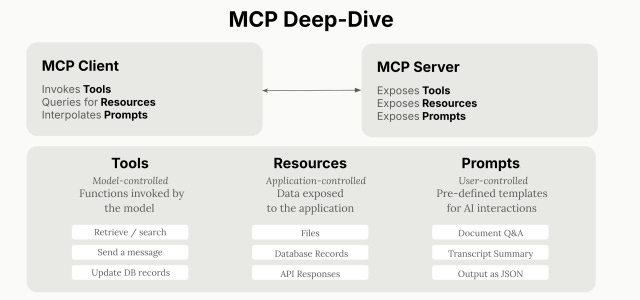

Enter Model Context Protocol (MCP)

MCP, open-sourced by Anthropic, standardizes how AI systems integrate with tools and data sources. Instead of building custom connectors for every application, organizations can implement a single MCP server per system that exposes functionality with consistent patterns for authentication, data exchange, and function calling. This simplifies the “last-mile” integration problem and lets AI agents reliably discover and invoke capabilities across a heterogeneous stack. With MCP, an LLM can request not just data, but actions—invoking functions in external applications with predictable inputs/outputs—thereby closing the loop between insight and execution.

Why MCP Alone Doesn’t Solve Context

An MCP connection pipes information, but it does not guarantee the information is complete, consistent, or permission-appropriate. The article illustrates this with a sales churn example: a CRM exposed via MCP might list accounts but omit critical product usage signals, include hundreds of duplicate records, or expose data beyond a rep’s access rights. Scaling by adding more MCP servers can quickly become unmanageable as use cases, users, and tools proliferate. Without consolidation, AI remains prone to hallucination, bias, and brittle workflows because it still lacks a single, authoritative view of the business domain.

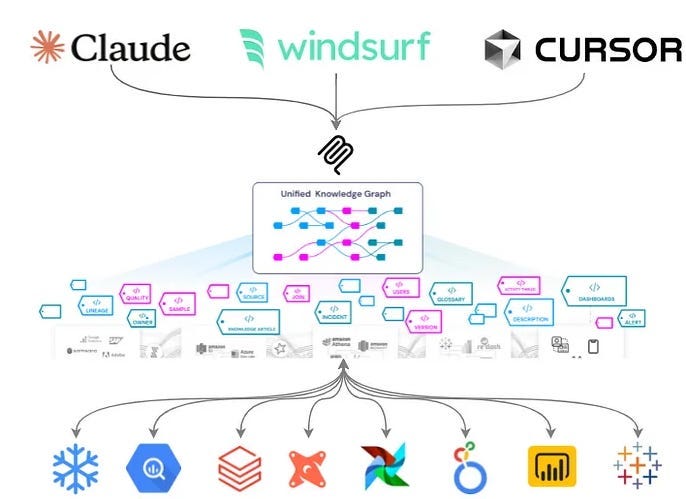

Metadata Platforms as the Context Backbone

Metadata platforms provide that unifying layer. They ingest and model complete data context—datasets, schemas, ownership, usage patterns, upstream/downstream lineage, quality tests, dashboards, and ML assets—into a coherent knowledge graph. This “Unified Knowledge Graph” supports discovery, quality, lineage, observability, and governance in one place, serving as a durable source of truth “across all your data systems across all departments across all time.” When exposed through MCP servers, the metadata layer becomes both readable (for retrieval and analysis) and writable (so agents can propose or make governed updates), enabling organizationally aware assistants that reason with policy-aware, end-to-end context.

OpenMetadata + MCP + Goose: A Practical Stack

The article highlights OpenMetadata as an open-source unified metadata platform that auto-discovers and catalogs data across the stack, tracks real-time lineage, and enforces governance at scale. Its MCP server exposes the knowledge graph to AI agents, turning generic LLMs into context-rich assistants informed by business semantics and access controls. The piece also mentions Goose, an extensible open-source AI agent, as part of a hands-on tutorial demonstrating how to wire these components together so prompts, tools, and governance remain fully open-source and extensible.

Implications for Teams

Lower integration overhead: MCP reduces bespoke connectors; a single server per tool scales across agents.

Reduced hallucinations and safer automation: The metadata graph narrows the context window to authoritative, permissioned data and captures lineage and quality signals.

Faster time-to-value: Reusing the same governed context across analytics, ML, and agents allows consistent answers and actions.

Continuous improvement loop: Agents can read from and (governed) write back to the knowledge graph, improving documentation, ownership metadata, and lineage over time.

What You’ll Learn in the Tutorial

Foundations: OpenMetadata, MCP servers, and Goose, and how they reshape the modern data/AI stack.

Use cases: Churn risk, discovery and lineage-aware analytics, policy-aware data access, and metadata-driven observability.

Build experience: Stand up an open-source system you can extend to your own environment and “prompt completely in open-source,” ensuring transparency and portability.

Key Takeaways

MCP standardizes tool integration for AI, but sustainable accuracy demands a unified, governed context layer.

Metadata platforms supply that backbone via a Unified Knowledge Graph encompassing discovery, lineage, quality, observability, and governance.

OpenMetadata’s MCP server operationalizes this vision, enabling agents to act with business-aware, permissioned knowledge—turning LLMs from generic chatbots into trustworthy collaborators.

Mistral Set for $14 Billion Valuation With New Funding Round

Bloomberg • September 3, 2025

AI•Funding•MistralAI

Overview

A French artificial intelligence startup is finalizing a landmark fundraising that underscores the accelerating competition in the AI sector. According to the article, Mistral AI is set to secure approximately €2 billion in new capital, establishing a €12 billion valuation (about $14 billion) that includes the fresh funding. The article characterizes this round as one that “solidif[ies] its position as one of Europe’s most valuable tech startups,” reflecting both investor confidence and the strategic importance of next-generation AI models in the global technology landscape.

Key Numbers and Structure

Investment size: €2 billion (new capital being finalized).

Implied valuation: €12 billion total, inclusive of the new round (roughly $14 billion).

Competitive status: The valuation places the company among Europe’s most valuable startups, highlighting its prominence in the regional tech ecosystem.

Currency note: The article provides both euro and dollar figures, signaling international investor interest and relevance.

What This Signals About the AI Market

This capital raise illustrates the scale of funding now required to compete at the frontier of AI, where training state-of-the-art models and serving them at scale demands substantial spending on compute, talent, and data. A €12 billion valuation suggests expectations that the company will translate technical progress into commercial traction—through enterprise tooling, model access, or partnerships—while also keeping pace with rapid advances in model capability and efficiency. In practical terms, rounds of this magnitude often support multi-year compute commitments, accelerated hiring for research and engineering teams, and expansion of go-to-market efforts across priority sectors such as software, cloud, and industrial applications.

Strategic Positioning and Competitive Implications

European leadership: The raise reinforces Europe’s capacity to nurture globally relevant AI champions, potentially narrowing gaps with US-based peers.

Capital intensity: The size of the round signals a willingness among investors to fund long-term platform bets, not just application-layer startups.

Ecosystem effects: A flagship raise can catalyze local supplier networks (cloud, semiconductors, MLOps) and attract experienced operators and researchers to the region.

Risks and Execution Challenges

While the valuation underscores strong momentum, it also raises execution thresholds. To justify a €12 billion price tag, the company will need to demonstrate defensibility—via model quality, speed of iteration, reliability, and cost-performance—and translate technical lead into recurring revenue. Key risks include rising inference costs, talent competition, regulatory shifts in AI safety and data governance, and the possibility of model commoditization if open ecosystems advance quickly. The ability to differentiate on safety, customization, and domain performance will be pivotal to converting interest into durable enterprise contracts.

Why the Valuation Matters

Valuation here functions as a signal of expected market share and future cash flows in a rapidly expanding category. It also sets a benchmark for European AI startups seeking large-scale financing and may influence how sovereign funds, corporate strategics, and global venture investors allocate capital across regions. The inclusion of both euro and dollar figures suggests cross-border relevance and could ease future partnerships with multinational customers or infrastructure providers.

What to Watch Next

Deployment of proceeds: Expect prioritization of compute capacity, model R&D, and commercialization.

Product footprint: Movement from research milestones to enterprise solutions and developer tools.

Regional impact: Potential knock-on effects for European AI policy discussions and funding appetites.

Competitive response: How peers adjust pricing, release cycles, and partnership strategies in light of this raise.

Key Takeaways

“€2 billion investment” and “€12 billion ($14 billion)” valuation underscore the capital intensity and momentum in frontier AI.

The company is positioned as one of Europe’s most valuable startups, elevating the region’s profile in the global AI race.

Execution against compute scaling, productization, and go-to-market will determine whether the valuation converts into durable leadership.

How ‘neural fingerprinting’ could analyse our minds

Ft • September 4, 2025

AI•Tech•NeuralFingerprinting•Magnetoencephalography•Neuroprivacy

Scientists are exploring “neural fingerprinting” — the idea that patterns of brain activity are distinctive enough to identify individuals and reveal how their minds work. Recent advances in wearable magnetoencephalography are accelerating this shift. Lightweight helmets that use optically pumped magnetometers can capture minute magnetic fields produced by neurons without the bulky, cryogenically cooled systems of older MEG machines. Because they are portable and non‑invasive, these devices allow people to move naturally while their brain activity is recorded, offering a richer, more realistic picture of cognition.

Unlike structural imaging, which shows what the brain looks like, neural fingerprints are functional: they map how networks communicate in real time. Researchers say such signatures could help pinpoint abnormalities linked to conditions including schizophrenia, epilepsy and dementia, potentially enabling earlier detection and more personalised treatment. Combining these measurements with machine‑learning techniques may also help track how a patient responds to therapy, or predict who is at higher risk before symptoms become debilitating.

The technology is advancing beyond laboratories. Academic groups in Europe and North America have begun testing wearable MEG systems in clinical studies, and developers are preparing for regulatory pathways. The promise is to move from static snapshots to continuous, precise monitoring of brain dynamics — at rest, during tasks, and even in everyday environments — to build robust, individualised profiles.

With new capability comes risk. If neural fingerprints are linked to traits shaped by upbringing or socio‑economic background, they could be misused for cognitive profiling. Brain data are uniquely sensitive, difficult to anonymise and, once collected, hard to retract. Ethicists argue for stringent data‑protection rules, clear consent standards and recognition of “cognitive liberty” — the right to keep one’s thoughts from being probed or manipulated.

Parallel progress in brain‑computer interfaces underscores the stakes. Non‑invasive EEG systems paired with AI are helping some paralysed patients control cursors and type, while invasive implants promise higher‑bandwidth links from companies such as Neuralink and state‑backed efforts in China. As commercial interest grows, safeguards around data ownership, security and permissible use will determine whether neural fingerprinting becomes a clinical breakthrough or a new avenue for surveillance.

GPT-5: The Case of the Missing Agent

Secondthoughts • September 4, 2025

AI•Tech•GPT

Welcome to the 1800 new readers (!) who joined since our last post, “35 Thoughts About AGI and 1 About GPT-5”. Here at Second Thoughts, we let everyone else rush out the hot takes, while we slow down and look for the deeper meaning behind developments in AI. Welcome aboard!

AI has made enormous progress in the last 16 months. Agentic AI seems farther off than ever.

Back in April 2024, there were rumors that OpenAI might soon be releasing GPT-5. At the time, I took the opportunity to share some predictions, in which I suggested that the key question was whether it would “represent meaningful progress toward agentic AI”.

16 months later, OpenAI has finally decided to apply the name GPT-5 to a new model. And while it’s quite a good model, I find myself thinking that truly agentic AI seems farther off today than it did back then. All of the buzz about “research agents”, “coding agents”, and “computer-use agents” has distracted us from the original concept of agentic AI.

What Is Agentic AI?

Today, we have coding agents that can tackle moderately sized software engineering tasks, and computer use agents that can go onto the web and book a flight (though not yet very reliably). But the full vision is much more expansive: a system that can operate independently in the real world, flexibly pursuing long-term goals.

Shortly after the release of GPT-4, developer Toran Bruce Richards created an early attempt at a general-purpose agentic AI, AutoGPT. As Wikipedia explains, “Richards's goal was to create a model that could respond to real-time feedback and pursue objectives with a long-term outlook without needing constant human intervention.”

The idea was that you could give AutoGPT a goal, ranging from writing an email to building a business, and it would pursue that mission by asking GPT-4 how to get started, and then what to do next, and next, and next. However, this really didn’t work well at all – it would create overly complex plans, get stuck in loops where it kept trying the same unsuccessful action over and over again, lose track of what it was doing, and generally fail to accomplish anything but the most straightforward tasks. Perhaps that was for the best, given that inevitably some joker renamed it “ChaosGPT”, instructed it to act as a “destructive, power-hungry, manipulative AI”, and it immediately decided to pursue the goal of destroying humanity. (Unsuccessfully.)

There’s been a lot of progress since GPT-4. Beginning with OpenAI’s o1, “reasoning models” receive special training to carry out extended tasks, such as writing code, solving a tricky math problem, or researching a report. As a result, they’re able to sustain an extended train of thought while working on a task, making relatively few errors, and often correcting any errors they do make. This is supported by a dramatic increase in the size of “context windows” (the amount of information an LLM can keep in mind at one time). The original GPT-4 supported a maximum of 32,000 tokens (roughly 25,000 words); in April 2024, GPT-4 Turbo offered 128,000 tokens; as of this writing, GPT-5 goes up to 400,000 tokens. Meanwhile, back in February 2024, Google announced Gemini 1.5 with a 1M token window.

With all the progress over the last 16 months, are AI agents ready to deal with the real world?

OpenAI set to start mass production of its own AI chips with Broadcom

Ft • September 4, 2025

AI•Tech•OpenAI

OpenAI is preparing to begin mass production of a custom AI accelerator co‑designed with Broadcom, marking a significant move to secure dedicated compute for its models and lessen reliance on Nvidia’s GPUs. The effort reflects a wider shift among leading tech companies to build tailored chips for AI workloads as demand for training and inference capacity accelerates.

The in‑house processor, described internally as an “XPU,” is intended for OpenAI’s own use rather than external sale. By controlling key elements of the hardware stack, the company aims to improve performance per dollar and per watt, stabilize supply, and better match silicon features to its evolving model architectures.

OpenAI’s collaboration with Broadcom began last year and elevates the AI group into the select roster of hyperscale customers for Broadcom’s custom accelerator business. Manufacturing is expected to be handled by TSMC on an advanced process, with initial production targeted as early as next year. The partnership is also notable in the context of Broadcom’s expanding AI revenue base, which has been buoyed by substantial orders for bespoke accelerators.

The move aligns OpenAI with peers including Google, Amazon and Meta, each of which has developed proprietary silicon to reduce costs, mitigate supply constraints and optimize for specific AI tasks. While OpenAI continues to run large fleets of Nvidia hardware and has incorporated AMD chips, its custom device is designed to complement that mix and support the next generation of models, including successors to GPT‑4‑class systems. Together, these steps underscore how competition for cutting‑edge AI capability is reshaping the semiconductor landscape, as leading AI developers seek more control over their compute destiny.

Media

Cloudflare’s CEO wants to save the web from AI’s oligarchs. Here’s why his plan isn’t crazy.

Crazystupidtech • Fred Vogelstein • August 30, 2025

Media•Journalism•Cloudflare•PayPerCrawl•AIChatbots

Sixteen years ago Matthew Prince and classmate Michelle Zatlyn at Harvard Business School decided there was a better way to help companies handle hacker attacks to their websites. Prince and a friend had already built an open source system to help anyone with a website more easily track spammers. What if the three of them could leverage that into a company that not only tracked all internet threats but stopped them too?

Within months they had a business plan, won a prestigious Harvard Business School competition with it, and had seed funding. They unveiled the company, Cloudflare, a year later at the 2010 Techcrunch Disrupt competion, taking second place. And today, riding the explosion of cloud computing and armed with better technology and marketing, they’ve leapfrogged competitors to become one of the dominant cybersecurity/content delivery networks in the world.

It’s one of the great startup success stories out of Silicon Valley in the past decade. Cloudflare went public in 2019 and is now worth roughly $70 billion. That makes it about number 400 on Yahoo’s list of companies by market cap, making it about as big as Marriott, Softbank and UPS. And it’s turned Prince, 50, into a certified tech oligarch worth $6 billion.

But today, in the middle of August, Prince isn’t on a video feed in front of me because he wants to talk about any of that. He wants to talk about saving something old, not building something new. He wants to talk about saving the World Wide Web and all the online journalism it has spawned.

I’ve never had a conversation with a big tech CEO like this, and I’ve interviewed a lot of them. The best are super high energy, inspiring, out-of-the-box thinkers. But “save,” “old,” “journalism,” and “liberal arts” are dirty words to many of them, especially when they make it big. Some I know would put big screen TVs where the paintings are in the Louvre.

But Prince isn’t like most entrepreneurs I’ve met, either. He’s more of a Renaissance man in geek clothing. Sure he studied computer science at Trinity College in Hartford. But he was also a ski bum, who edited the school newspaper. And he only minored in computer science. His actual major was English.

He wrote his college thesis in 1996, two years before Google was founded, on the potential for political biases in search engines. And while he had offers to work for companies like Netscape, Yahoo and Microsoft after graduating, at that point in his life the idea of being a programmer actually sounded boring to him.

Instead, he went to law school at the University of Chicago, where he also started a legal magazine. Cloudflare grew out of Unspam Technologies, an open source project he started with Lee Holloway after law school while he was also teaching cybersecurity law at the University of Illinois.

Prince wants to talk about the future of the web and journalism with me because he thinks the AI chatbot revolution is killing both of them. And he thinks he can help fix that with something he calls pay-per-crawl, a gambit he and Cloudflare launched on July 1. He cares, he says, because “I love the smell of printer ink and a big wet press. So I kind of have a soft spot for the media industry and how important it is.” This isn’t spin. Two years ago he and his wife bought the Park City Record, his hometown local paper.

Substack Cofounder on the Internet's Content Problem

Youtube • a16z • September 2, 2025

Media•Publishing•Substack•CreatorEconomy•ContentModeration

An Interview with Cloudflare Founder and CEO Matthew Prince About Internet History and Pay-per-crawl

Stratechery • Ben Thompson • September 4, 2025

Media•Publishing•PayPerCrawl

Overview

Ben Thompson’s interview with Matthew Prince explores Cloudflare’s origin story, the company’s opportunistic product strategy, and why Prince is pushing “pay‑per‑crawl” to reset the web’s broken value exchange in the age of AI answer engines. Prince argues that the interface of the internet has shifted from search to AI-generated answers, severing the old quid pro quo in which Google sent traffic in exchange for free crawling; without a new compensation model, quality journalism and unique knowledge production will wither or be captured by a few powerful patrons. (stratechery.com)

Founding, architecture, and “bottoms‑up” strategy

Prince recounts a nontraditional path—English major, law school, Unspam—and the formative role of engineer Lee Holloway in building core technology that later catalyzed Cloudflare. From eight people “above a nail salon,” Cloudflare chose commodity hardware and software-defined orchestration (inspired by Google’s scale-out model) to deliver security and performance at internet scale. This common architecture let Cloudflare continuously repurpose underused capacity into new services, compounding margins and capabilities. (stratechery.com)

Cloudflare’s original aspiration—“a firewall in the cloud”—evolved through a freemium model that attracted NGOs and, inevitably, adversaries, forcing Cloudflare to harden its stack and even run a registrar to close supply‑chain gaps. The lesson: start small, solve urgent customer problems, and let the platform’s breadth emerge organically. (stratechery.com)

Why pay‑per‑crawl, and why now

Prince says the web’s 25‑year search era depended on Google sending monetizable traffic; AI “answer engines” invert that bargain by giving answers without clicks. He outlines three futures: 1) a collapse in original reporting and research, 2) a Medici‑style oligopoly of a few richly funded AI providers that underwrite content, or 3) a new market where AI companies share revenue with content creators. He favors the third and proposes a straw‑man pool funded at roughly “$1 per monthly active user per year,” or about $10 billion today—enough, he argues, to replace open‑web ad revenue outside the major walled gardens. (stratechery.com)

Prince also cites data suggesting how the traffic exchange has deteriorated: “over the last 10 years, it’s become 10x harder to get a click from Google; it’s now 750x harder with OpenAI, and 30,000x harder with Anthropic,” reinforcing the urgency to price access to content rather than rely on referrals. (stratechery.com)

How it would work (and Cloudflare’s role)

At a technical level, Cloudflare’s pay‑per‑crawl integrates with existing web standards to make access programmable: compliant crawlers present payment intent and receive content; non‑paying crawlers can be met with a “402 Payment Required” response that includes pricing; Cloudflare acts as merchant of record and provides enforcement at the edge. Prince says Cloudflare is uniquely positioned because blocking and identifying bots is its daily work—and because publishers themselves asked for help as AI scraping spiked. (stratechery.com)

He stresses scarcity as the necessary precondition for a functioning market. Examples like Reddit—whose insistence on paid access yielded a reported $120 million in 2024 licensing revenue and more in 2025—illustrate how differentiated, non‑fungible datasets command higher prices than general‑interest news text. (stratechery.com)

The Google problem, Perplexity controversy, and near‑term bets

Prince calls Google “the problem” insofar as AI Overviews are governed by the core Googlebot—making opt‑out difficult without sacrificing search presence. He predicts that, within 12 months, Google will voluntarily offer publishers a way to opt out of Overviews; if not, regulators may force it. Meanwhile, Cloudflare blocks AI model training traffic (e.g., Gemini) at scale but has not blocked search or RAG, signaling support for responsible competition. Prince also rebukes Perplexity for allegedly evading blocks and fabricating article text from ad‑tech crumbs—behavior he labels “fraud.” (stratechery.com)

Implications

If AI firms compete on unique content access rather than algorithms, expect a shift toward licensing, exclusivity, and premium pipelines of local, specialized, and community data—potentially reviving local news and niche expertise. (stratechery.com)

Enforcement and payments embedded in the HTTP layer could create a standardized “content market rail,” lowering transaction costs for both large AI platforms and small creators. (stratechery.com)

Cloudflare’s push is both mission‑aligned (a “better internet”) and self‑interested (a new edge‑native marketplace). But the decisive actor remains Google: whether it enables genuine opt‑outs and participates in a compensation framework will shape which of Prince’s three futures wins. (stratechery.com)

Key takeaways

The web’s traffic‑for‑crawl bargain has broken under AI answer engines; new compensation is needed. (stratechery.com)

Prince’s straw‑man: $1/MAU/year (~$10B) redistributed to creators; scarcity will drive pricing. (stratechery.com)

Data points: 10x harder to get a Google click in a decade; 750x (OpenAI) and 30,000x (Anthropic) worse on referrals. (stratechery.com)

Cloudflare aims to operationalize pay‑per‑crawl via web standards and edge enforcement. (stratechery.com)

Google’s policies and regulatory pressure will determine the pace and shape of the transition. (stratechery.com)

Venture

The IPO Market Is Opening Up. These 14 Companies Could Be Next.

Crunchbase • September 2, 2025

Venture

After a prolonged winter, the IPO market in 2025 has finally thawed, with companies from Chime to Figma to CoreWeave launching big debuts in the first eight months of the year. So, who’s next?

To help answer that question, we used Crunchbase’s predictive intelligence tools to curate a list of 14 venture-backed companies in sectors ranging from AI to fintech to consumer goods that could be on tap as IPO candidates in the foreseeable future. Some of them are known IPO hopefuls; others, more under-the-radar picks that nonetheless have strong credentials for a public-market launch. Let’s take a closer look.

Fintech

Stripe

There is perhaps no IPO more anticipated than that of payments giant Stripe. And, unsurprisingly, the fintech is “very likely” to go public, according to Crunchbase predictions.