Essay

AI

Andrej Karpathy Breaks Down the 2025 State of AI: 12 Things Founders & VCs Must Know

OpenAI Unveils Atlas Web Browser Built to Work Closely With ChatGPT

Is the Flurry of Circular AI Deals a Win-Win—or Sign of a Bubble?

Marc Andreessen & Amjad Masad on “Good Enough” AI, AGI, and the End of Coding

Google TPUs Find Sweet Spot of AI Demand, a Decade After Chip’s Debut

Media

Venture

Regulation

GeoPolitics

Crypto

Interview of the Week

Startup of the Week

Post of the Week

Editorial

What is a Browser? What is a Bubble? ChatGPT Has an Answer

The web’s front door has been morphing into AI for a while; this week the shift accelerated. With Atlas, OpenAI didn’t bolt a chatbot onto Chrome; it turned the browser into an answer and action layer. And that has everyone, from Wikipedia editors to Google’s ad machine, reaching for the smelling salts.

Here’s the context:

1) The browser is becoming an agent, not a link map.

Atlas launched as a native ChatGPT browser: it can summarize in-page via a right-side AI panel, draft in place, and, in preview, click through and complete multi-step tasks for you. Per OpenAI’s own docs, Agent Mode can open tabs, navigate, and fill forms: an outcome-first model of the web.

The backlash is immediate. Anil Dash called Atlas “anti-web” for rendering AI summaries that look like pages without obvious pathways back. Wikipedia’s Marshall Miller found that an 8% “rise” in traffic was actually bots evading detection; after reclassification, human visits are down roughly 8% YoY. That’s what happens when conversations and answers replace links: fewer impressions, fewer clicks, less feedback data. For the user, that is a huge gain in value and productivity. For websites, it is a loss unless they migrate their business model from search to AI.

Cloudflare’s Matthew Prince is pressing the U.K. CMA to force Google to unbundle its search and AI crawlers so publishers can block AI use without disappearing from search. That’s the crux: if assistants own the session, who pays the sources? Prince’s remedy may penalise Google, but in a world where AI is becoming the user interface, a wholesale migration of the paid-link ecosystem to AI will be required if traffic is to hold up and grow.

2) Bubble talk vs. buildout—and why “Minsky” isn’t destiny.

Paul Kedrosky warns of a Minsky moment—credit migrating from “good” to “bad” projects via vendor financing and SPVs until the music stops. It’s a valuable alarm: he has watched the ‘circular deals’ and thinned coverage ratios.

But this week’s data argues that investment in AI is funding real infrastructure, backed by real customers, growing demand and therefore revenue. Dwarkesh Patel shows NVIDIA’s 2025 earnings could cover multiple years of TSMC’s capex. Google’s decade-old TPUs are finally gaining outside traction. Anthropic locked in a multi-year Google Cloud chips pact precisely because compute is the scarce input to a booming service.

In other words, cash is coming from two external sources, not just accounting loops: investors (a16z lines up $10B across growth/AI/defense) and customers (GPU clusters, TPUs, cloud contracts with hard dollars attached). A Minsky moment is a theory of unstable credit regimes—not a synonym for “lots of spending.” The test is simple: are customers paying for capacity at rising scale? So far, yes.

3) The web’s economics must reprice—fast.

Fast Company is right: one generative answer compresses an entire results page of ad inventory. Google will adapt (it’s already jamming ads into AI Overviews), but how the pie is sliced will depend on each AI product’s share of primary consumer use.

The fix isn’t to ban AI answers; it’s to instrument them and include relevant links. The web needs AI to include it. But it also needs receipts: durable citations, usage‑based licensing, and verifiable payouts to knowledge origins. Regulators are already in “harms first” mode—the FTC’s staff post centers on fraud, surveillance, and discrimination. The more the assistant mediates reality, the more provenance, consent, and settlement become needed product features.

The infrastructure buildout will continue so long as the demand for ever smarter AI doesn’t dissipate.

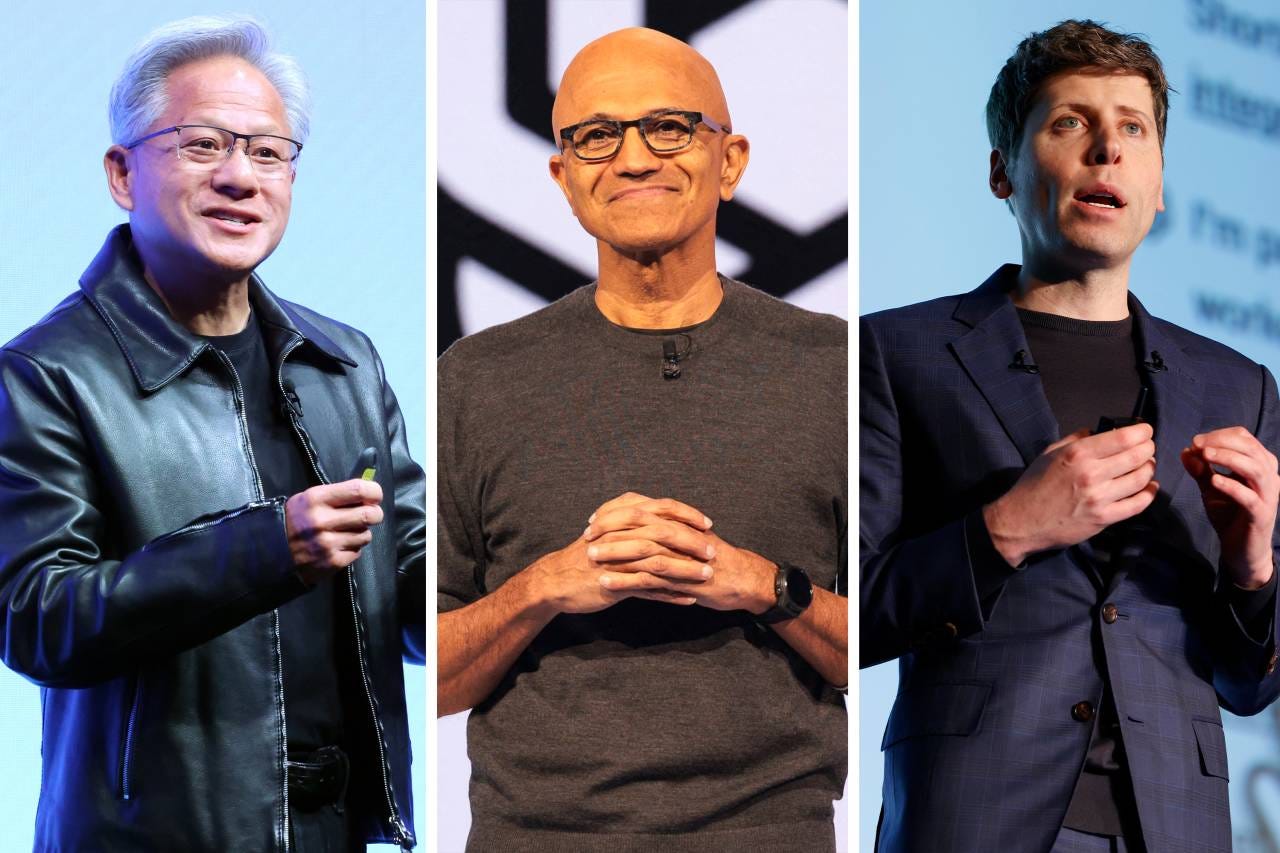

“We want to create a factory that can produce a gigawatt of new AI infrastructure every week.” — Sam Altman

There are things to look out for:

Atlas adoption: do users stay in the AI pane, and does Agent Mode work without brittle misfires?

Pay the sources: does the CMA force crawler unbundling—and do Reddit/Wikipedia‑style usage deals become standard?

Capex vs. revenue: do chip rental prices and utilization stay tight, validating the buildout—or does secondary GPU pricing sag?

Google’s ad pivot: can “ads as answers” replace the link-page cash cow without starving the open web? Or can OpenAI, Anthropic and others build a link-based revenue model?

Bottom line: The internet’s UI is shifting from navigation to delegation. Will the money—and the credit—shift with it?

Essay

💸 The imminence of the AI bust

Exponential View • Azeem Azhar • October 18, 2025

Essay•AI•MinskyMoment•Hyperscalers•Capex

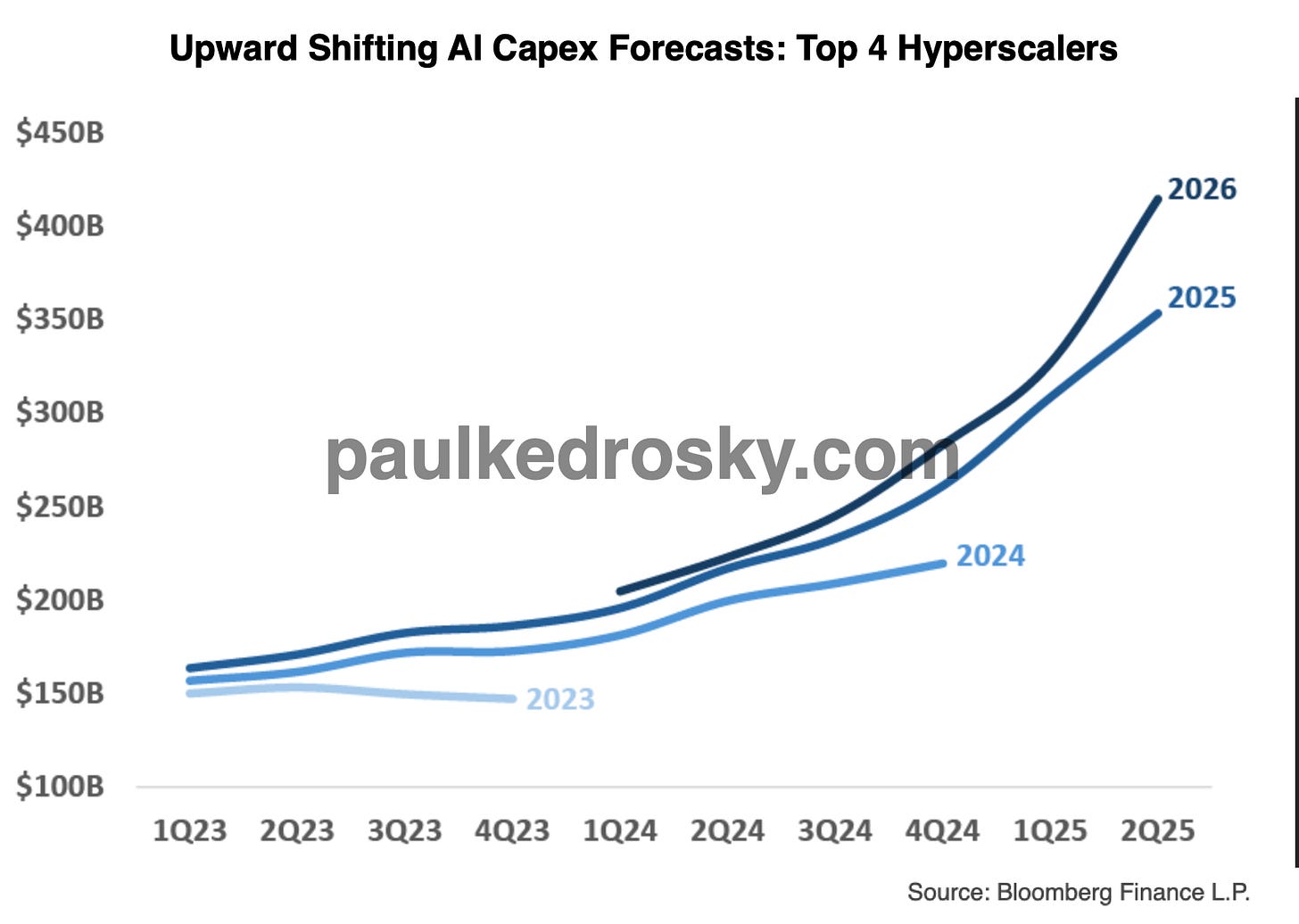

When does an AI boom tip into a bubble? Paul Kedrosky points to the Minsky moment—the inflection point when credit expansion exhausts its good projects and starts chasing bad ones, funding marginal deals with vendor financing and questionable coverage ratios. For AI infrastructure, that shift may already be underway; the telltale signs include hyperscaler capex outpacing revenue momentum and lenders sweetening terms to keep the party alive.

Paul makes a compelling case. We’ve entered speculative finance territory—arguably past the tentative stage—and recent deals will set dangerous precedents. As Paul warns, this financing will “create templates for future such transactions,” spurring rapid expansion in junk issuance and SPV proliferation among hyperscalers chasing dominance at any cost.

The pattern holds across history. Of the 21 investment booms I’ve looked at since 1790, 18 ended in a bust; funding quality drove roughly half of those collapses. Yet not all strain signals disaster—every investment requires leverage, from a mortgage to export financing. The question is whether we’re building productive capacity or inflating asset prices and shunting risk around.

For AI infrastructure, the warning signs are flashing: vendor financing proliferates, coverage ratios thin, and hyperscalers leverage balance sheets to maintain capex velocity even as revenue momentum lags. We see both sides—genuine infrastructure expansion alongside financing gymnastics that recall the 2000 telecom bust. The boom may yet prove productive, but only if revenue catches up before credit tightens. When does healthy strain become systemic risk? That’s the question we must answer before the market does.

This is why funding quality is one of the five key gauges we watch in our AI dashboard.

Thoughts on the AI buildout

Dwarkesh • Dwarkesh Patel • October 22, 2025

Essay•AI•Datacenters•CapEx•Nvidia

Sam Altman says he wants to “create a factory that can produce a gigawatt of new AI infrastructure every week.”

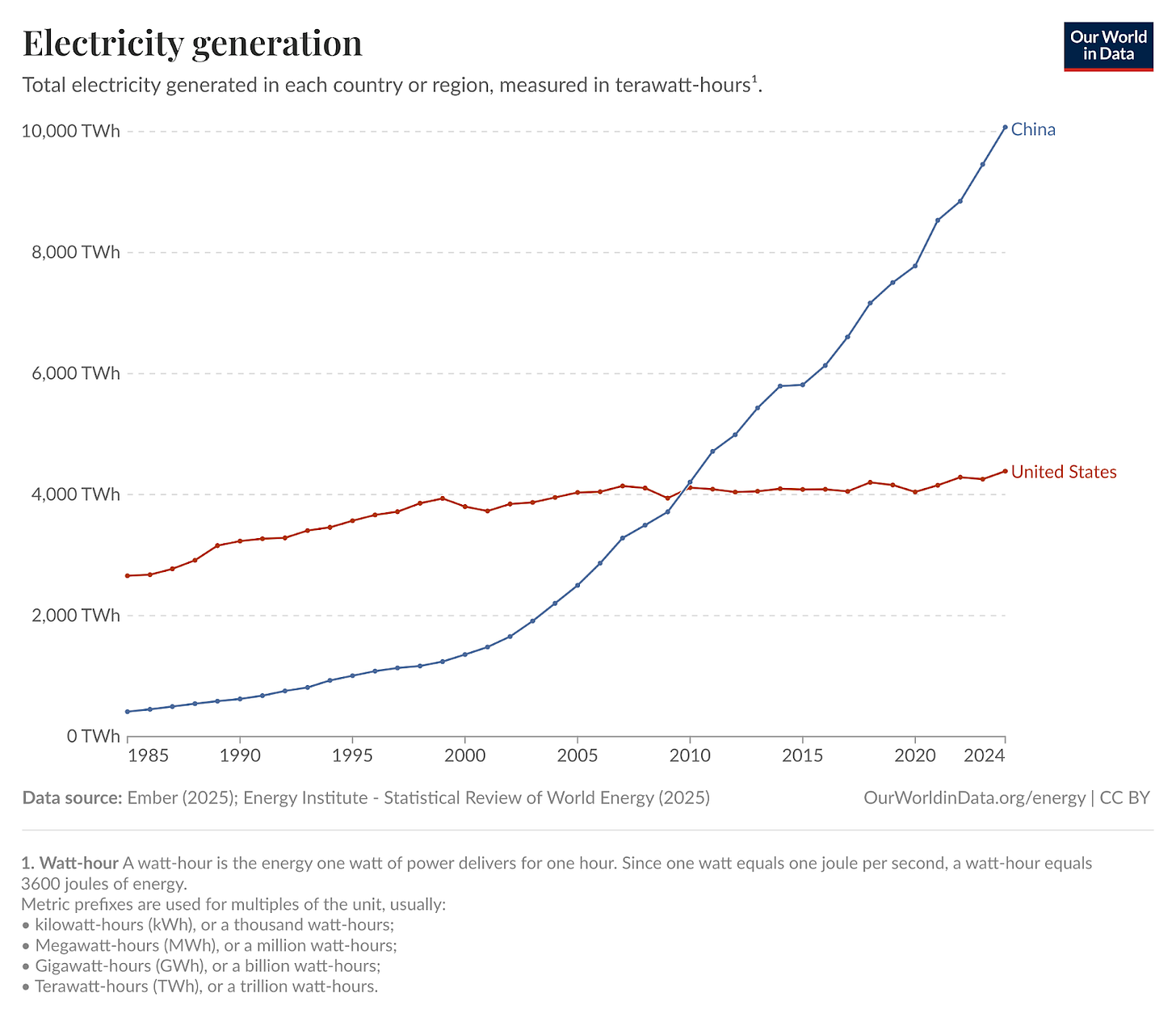

What would it take to make this vision happen? Is it even physically feasible in the first place? What would it mean for different energy sources, upstream CAPEX in everything from fabs to gas turbine factories, and for US vs China competition?

These are not simple questions to answer. We wrote this blog post to teach ourselves more about them. We were surprised by some of the things we learned.

The fab CapEx overhang

With a single year of earnings in 2025, Nvidia could cover the last 3 years of TSMC’s ENTIRE CapEx.

TSMC has done a total of $150B of CapEx over the last 5 years. This has gone towards many things, including building the entire 5nm and 3nm nodes (launched in 2020 and 2022 respectively) and the advanced packaging that Nvidia now uses to make datacenter chips. With only 20% of TSMC capacity, Nvidia has generated $100B in earnings.

Suppose TSMC nodes depreciate over 5 years; this is enormously conservative (newly built leading-edge fabs are profitable for more than 5 years). That would mean that in 2025, NVIDIA will turn roughly $6B in depreciated TSMC CapEx value (a 20% share of about $30B a year) into $200B in revenue.
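The arithmetic behind that claim can be checked in a few lines. This is a minimal sketch that uses only the figures quoted above; it assumes straight-line depreciation and that Nvidia’s slice of depreciated CapEx simply equals its 20% share of capacity. The variable and function names are illustrative, not taken from any published model.

```python
# Back-of-the-envelope check of the fab CapEx figures quoted in the text.
# All amounts are in billions of USD; names are illustrative assumptions.

TSMC_CAPEX_5Y = 150.0         # total TSMC CapEx over the last 5 years (from the text)
DEPRECIATION_YEARS = 5        # assumed straight-line depreciation period (from the text)
NVIDIA_CAPACITY_SHARE = 0.20  # Nvidia's share of TSMC capacity (from the text)
NVIDIA_REVENUE_2025 = 200.0   # Nvidia 2025 revenue figure used in the text


def annual_depreciation(total_capex: float, years: int) -> float:
    """Straight-line depreciation: spread total CapEx evenly over the period."""
    return total_capex / years


def attributable_capex(total_capex: float, years: int, share: float) -> float:
    """Annual depreciated CapEx attributed to one customer by capacity share."""
    return annual_depreciation(total_capex, years) * share


annual = annual_depreciation(TSMC_CAPEX_5Y, DEPRECIATION_YEARS)
nvidia_slice = attributable_capex(TSMC_CAPEX_5Y, DEPRECIATION_YEARS, NVIDIA_CAPACITY_SHARE)
revenue_multiple = NVIDIA_REVENUE_2025 / nvidia_slice

print(f"TSMC annual depreciated CapEx: ${annual:.0f}B")
print(f"Slice attributable to Nvidia:  ${nvidia_slice:.0f}B")
print(f"Revenue per CapEx dollar:      {revenue_multiple:.1f}x")
```

Under these assumptions, each dollar of depreciated fab CapEx attributable to Nvidia supports more than thirty dollars of revenue, which is the gap the text calls an overhang.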

Further up the supply chain, a single year of NVIDIA’s revenue almost matched the past 25 years of total R&D and capex from the five largest semiconductor equipment companies combined, including ASML, Applied Materials, Tokyo Electron...

We think this situation is best described as a ‘fab capex’ overhang.

The reason we’re emphasizing this point is that if you were to naively speculate about what would be the first upstream component to constrain long term AI CapEx growth, you wouldn’t talk about copper wires or transformers - you’d start with the most complicated things that humans have ever made - which are the fabs that make semiconductors. We were stunned to learn that the cost to build these fabs pales in comparison to how much people are already willing to pay for AI hardware!

Nvidia could literally subsidize entire new fab nodes if they wanted to. We don’t think they will actually directly do this (or will they, wink wink, Intel deal) but this shows how much of a ‘fab capex’ overhang there is.

Bubble-talk is breaking out everywhere

FT • October 21, 2025

Essay•Geo Politics•Asset Bubbles•Central Banks•Market Sentiment

Overview

“Bubble-talk” is surfacing across markets as investors weigh stretched valuations against a still-supportive policy backdrop. Sentiment has split into two camps: one warns that frothy pricing and speculative behavior are proliferating; the other remains constructive, assuming that if conditions turn “really dicey,” policymakers will ride in as the cavalry to stabilize liquidity and growth. The tension between these views is shaping positioning, risk appetite, and the narratives investors tell themselves about what comes next.

Signals fueling bubble anxiety

Valuations in select corners of the market have expanded far faster than underlying cash flows, a classic sign of sentiment outrunning fundamentals. Pockets of exuberance often cluster around innovation themes, high-growth equities, and assets whose stories hinge on long-duration promises rather than near-term earnings.

Market leadership looks narrow in places, with outsized gains concentrated in a handful of benchmarks or sectors. Historically, narrow breadth can amplify drawdown risk when leadership falters.

Speculative behavior—rapid momentum-chasing, options activity that dwarfs cash volumes, and retail-led surges—tends to reappear late in cycles, contributing to gap risk when liquidity recedes.

A disconnect can emerge between softening real-economy indicators and buoyant asset prices, increasing the probability that a small shock (policy surprise, funding stress, geopolitical flare-up) catalyzes a larger repricing.

Why optimists still expect the cavalry

Many investors trust the now-familiar policy “reaction function”: if growth stalls or markets seize, central banks can pause or cut, while governments can deploy fiscal stabilizers or targeted backstops. That belief—sometimes labeled a “policy put”—tempers fear of severe left-tail outcomes.

Post-crisis playbooks are well-established: liquidity facilities, balance-sheet tools, and emergency lending channels can be reactivated quickly, while supervisory flexibility can reduce immediate forced selling.

Structural demand from pensions, insurers, and systematic allocators provides a persistent bid for high-quality collateral, helping cushion shocks in core rates and investment-grade credit.

Risks to the cavalry narrative

Inflation constraints can limit the speed and scale of monetary easing; easing into sticky inflation risks unanchoring expectations, so central banks may tolerate more market volatility than investors assume.

Policy lags matter: by the time help arrives, earnings may have reset, credit spreads widened, and funding conditions tightened, locking in lower equilibrium valuations.

Moral hazard and political constraints can curb fiscal backstops, especially if support is seen as subsidizing risk-taking rather than protecting the real economy.

Liquidity is not the same as solvency: targeted liquidity can’t fix broken business models or overlevered balance sheets.

Practical implications for positioning

Focus on resilience: prioritize balance-sheet strength, free cash flow visibility, and pricing power that can withstand slower nominal growth.

Diversify liquidity sources: stagger maturities, stress-test collateral needs, and avoid reliance on a single funding channel.

Reassess risk concentration: measure exposures to common macro factors—real yields, dollar strength, and volatility regimes—that can dominate in a drawdown.

Prepare for bimodal outcomes: build playbooks for both soft-landing and harder-landing paths, including triggers that expand hedges or redeploy cash into dislocations.

Key takeaways

Bubble discourse is intensifying because price gains in select assets outpace fundamentals while market breadth narrows.

Optimists assume policy support will prevent a severe crash; that safety net is real but not unconditional.

The central tension: markets priced for good news versus policymakers constrained by inflation and politics.

Risk management should emphasize liquidity, quality, and scenario discipline rather than attempts to time a top.

If the cavalry arrives, it may stabilize conditions—but not necessarily preserve today’s valuations.

ChatGPT’s Atlas: The Browser That’s Anti-Web

Anildash • Anil Dash • October 21, 2025

Essay•AI•Technology•Browsers•Privacy

OpenAI, the company behind ChatGPT, released their own browser called Atlas, and it actually is something new: the first browser that actively fights against the web. Let’s talk about what that means, and what dangers there are from an anti-web browser made by an AI company — one that probably needs a warning label when you install it.

The problems fall into three main categories:

Atlas substitutes its own AI-generated content for the web, but it looks like it’s showing you the web.

The user experience makes you guess what commands to type instead of clicking on links.

You’re the agent for the browser; it’s not being an agent for you.

By default, Atlas doesn’t take you to the web. When I first got Atlas up and running, I tried giving it the easiest and most obvious tasks I could possibly give it. I looked up “Taylor Swift showgirl” to see if it would give me links to videos or playlists to watch or listen to the most popular music on the charts right now; this has to be just about the easiest possible prompt.

The results that came back looked like a web page, but they weren’t. Instead, what I got was something closer to a last-minute book report written by a kid who had mostly plagiarized Wikipedia. The response mentioned some basic biographical information and had a few photos. Now we know that AI tools are prone to this kind of confabulation, but this is new, because it felt like I was in a web browser, typing into a search box on the Internet. And here’s what was most notable: there was no link to her website.

I had typed “Taylor Swift” in a browser, and the response had literally zero links to Taylor Swift’s actual website. If you stayed within what Atlas generated, you would have no way of knowing that Taylor Swift has a website at all.

Unless you were an expert, you would almost certainly think I had typed in a search box and gotten back a web page with search results. But in reality, I had typed in a prompt box and gotten back a synthesized response that superficially resembles a web page, and it uses some web technologies to display its output. Instead of a list of links to websites that had information about the topic, it had bullet points describing things it thought I should know. There were a few footnotes buried within some of those responses, but the clear intent was that I was meant to stay within the AI-generated results, trapped in that walled garden.

During its first run, there’s a brief warning buried amidst all the other messages that says, “ChatGPT may give you inaccurate information”, but nobody is going to think that means “sometimes this tool completely fabricates content, gives me a box that looks like a search box, and shows me the fabricated content in a display that looks like a web page when I type in the fake search box”.

And it’s not like the generated response is even that satisfying. The fake web page had no information newer than two or three weeks old, reflecting the fact that LLMs rely on whenever they’ve most recently been able to crawl (or gather without consent) information from the web. None of today’s big AI platforms update nearly as often as conventional search engines do.

Keep in mind, all of these shortcomings are not because the browser is new and has bugs; this is the app working as designed. Atlas is a browser, but it is not a web browser. It is an anti-web browser.

The short half-life of friendship in the AI era

Cautious Optimism • Alex Wilhelm • October 24, 2025

Essay•AI•Technology•Business•Politics

The shifting alliances in the AI industry highlight the transient nature of strategic partnerships in a rapidly evolving technological landscape. Anthropic, which had previously designated Amazon as its “primary cloud provider” and “primary training partner” following an $8 billion investment, has now announced a major expansion of its relationship with Google Cloud. The company plans to utilize “up to one million TPUs” in a deal valued at “tens of billions of dollars” that will bring “well over a gigawatt of capacity online in 2026.”

This move reflects a broader pattern of AI companies diversifying their infrastructure dependencies as they scale. The partnership between OpenAI and Microsoft, once seemingly inseparable, has similarly evolved with Microsoft now developing its own AI models and consumer products that compete directly with OpenAI’s offerings. These shifting dynamics suggest that as AI companies gain financial strength and market position, the power balance in their relationships with cloud providers becomes more fluid, leading to more complex, multi-vendor strategies.

AI Integration in Gaming

The gaming industry represents another frontier for AI adoption, with Electronic Arts (EA) announcing a partnership with Stability AI to “co-develop transformative AI models, tools, and workflows.” Stability AI, known for its Stable Diffusion models capable of generating images, video, audio, and 3D assets, could significantly impact game development processes. While AI could enhance certain gaming elements like more natural NPC interactions, the integration of AI into creative processes raises questions about the future of artistic development in the industry.

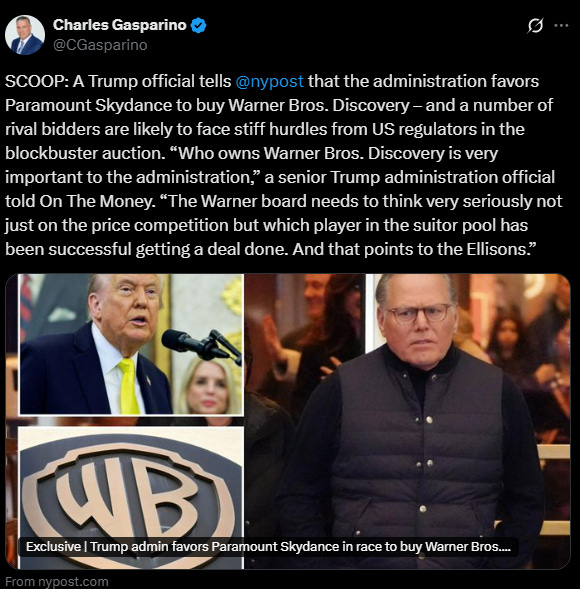

Broader Political and Regulatory Concerns

Beyond technology partnerships, the analysis identifies several concerning political developments affecting business and media landscapes. A dispute between SpaceX’s Elon Musk and Transportation Secretary Sean Duffy over NASA’s Artemis III mission timeline has revealed deeper political considerations, with reports suggesting White House concern that the conflict could affect Musk’s support in upcoming midterm elections.

In media, there are indications of potential regulatory pressure to transfer ownership of CNN to the Ellison family, who already control CBS News and have installed administration-aligned leadership. This follows a pattern described as “the Orbanization of the U.S. media,” where regulatory power may be used to direct media ownership to political allies.

The recent pardon of Binance founder Changpeng “CZ” Zhao raises additional concerns, particularly given previous reports that the Trump family had discussed acquiring a stake in Binance’s U.S. operations and the complex financial arrangements involving the Trump-affiliated USD1 stablecoin.

These developments collectively suggest a blurring of lines between business interests and political power, with limited corporate resistance to what appears to be an increasingly transactional approach to governance. The absence of strong business counterweights to these trends creates conditions that could lead to oligarchic structures and restricted press freedom, representing significant challenges to democratic norms and market integrity.

Builders, Solvers and Cynics

a16z • Alex Danco • October 24, 2025

Essay•Venture

Reactionary anti-tech sentiment is a real and important force in the world, and possibly an under-discussed topic. Not in the sense that it should get more airtime (it shouldn’t); but in the sense that it’s interesting. Part of being a good citizen is being genuinely open-minded about your opposition: where are they coming from? What value systems or psychological drives are running the show over there?

A classic answer would be, “There is a perfect book for this. It’s called A Conflict of Visions by Thomas Sowell, and it’s one of our favorite books we recommend at a16z.” Sowell compares two distinct kinds of people, which I call “Builders” and “Solvers” as shorthand, with completely different value systems, patterns of action, and concepts of virtue. And he explains why “Solvers” (planners, regulators, social architects, economic dirigistes…) are the natural opposition to “Builders” (founders, engineers, and constraint-respecters), and always have been.

However, forty years after Sowell wrote A Conflict of Visions, I think a third belief system in society is rising to prominence, driving a big share of anti-progress reaction. We are now in a three-player game, with a new “nonaligned group” in society and on the internet. That group is Cynics.

Cynics and Solvers both contribute to anti-tech sentiment, but in different ways. Cynics are motivated by two things. First, to “Not Fall For It”, and avoid appearing gullible at all cost. And second, to stamp out inauthenticity, particularly anything new or unresolved in the world. These motives are classic projection (as Freud defined it a century ago), and once you realize this, their behaviour makes more sense.

The cynics have a rich cultural canon: from Diogenes and the Greek cynics, to smart pieces of culture like South Park and The Sopranos that have recently contributed to the belief system. The three groups have different concepts of what it means to act honestly. To builders, honesty means fidelity. To solvers, honesty means sincerity. To cynics, honesty means authenticity. These diverging concepts of honesty matter a lot, because they’re how culture hardens into social values.

Cynics are close to technologists, because they’re both very online. But they’re also enemies of technologists, because of how much they hate progress-in-flight. Cynicism is dangerous. And a worrisome trend right now is the Solvers and Cynics finding shared resentment towards the builders, and therefore common cause.

Sowell’s 1987 book defines two mindsets, which may initially feel non-obvious: the “Constrained Vision” and the “Unconstrained Vision”. The “Constrained Vision” is the mindset of the builders, and it’s named that way because core to this mindset is appreciating the constraints that have evolved in the world over repeated iterations of evolutionary trial and failure. “Wisdom” is something we accumulate over time: like family norms, property rights, or engineering practices, which invisibly guide us through a complex world.

Andreessen Horowitz unreservedly endorses this “Constrained” vision. This can surprise outsiders, who think of startups’ mission as disrupting existing systems. But the way we build companies and technology is deeply respectful of the embedded wisdom of engineering practices, Silicon Valley company building norms, and the belief that progress comes at the margin.

The Unconstrained Vision is a different idea of progress, which is, “Someone really ought to solve all of the problems.” This idea of wisdom puts much less stock into the way things have worked; and more weight on the judgement of anointed individuals who can gather the context and understanding they need to take sweeping action that remakes the world. This version of “Wisdom” is something we attain by casting as wide a net as possible, obtaining a mandate for action, and making the most enlightened decisions possible.

Dominic Cummings’ new nerd army: Britain’s Young Turks are looking for growth

UnHerd • Wessie du Toit • October 25, 2025

Staff at London’s O2 Indigo club, a glitzy venue for live music and comedy, must have raised an eyebrow on Thursday night as the space filled with software engineers, start-up entrepreneurs, lawyers and civil servants, young men with neat haircuts and ironed shirts under their fleece jackets. Nor would they have expected this sedate audience would begin whooping when one of the speakers, the venture capitalist Matt Clifford, reeled off a long list of British discoveries and inventions, including gravity, the theory of evolution, computer science and the postal service. But much is unusual about Looking for Growth, the nascent movement that wants to reverse Britain’s decline through a peculiar blend of activism and policy wonkery. This was its largest event to date.

Clifford’s speech was genuinely rousing, even for someone who is sceptical of the message, borne forth in Looking for Growth’s name, that economic growth is the straightforward key to most of the country’s problems. As Clifford pointed out, Britain was once the most prosperous nation in the world. But its innate genius for innovation has been tragically stifled in the 17 years since the Great Financial Crisis, “the biggest economic disaster in the history of our country”. We still have it in us, though, to Make Britain Rich Again. By the end of the evening, as one speaker after another echoed this call, it had almost come to feel like a patriotic duty.

I heard various motives for being there among the 1,300-strong crowd. One young woman hoped to meet people of a “centre or centre right” persuasion, a group she felt was significant but voiceless among under 35s. A group of muscle-bound blokes was mainly interested in the star speaker, the political strategist and blogger Dominic Cummings, and his insights regarding the German master of realpolitik, Otto von Bismarck. But most said they were frustrated with the malaise they felt around them every day, and responsive to Looking for Growth’s message, spread through X and Instagram, that they did not have to passively accept it. A TfL employee told me that London’s rail infrastructure is disintegrating as Britain gets “poorer year after year”. A soon-to-be-qualified architect complained that “it’s a bureaucratic nightmare to lay a single brick in this country”.

This mood of exasperation was amply reflected onstage. Marc Warner, founder of an AI firm, described how he had helped the government create a world-leading system for testing wastewater to monitor Covid, only to be locked out of the subsequent procurement process. According to Warner, Britain now trails Malawi in this particular field. All the infamous cases of planning absurdity, from the HS2 bat tunnel to the 350,000-page application for the Lower Thames Crossing, were repeatedly wheeled out to be pelted with rotten fruit. By the time Cummings came on, the audience was ready for a characteristically dramatic assessment. Britain, he said, has reached a treacherous point in the lifecycle of modern states, where “a gap opens up between the elite and its institutions and reality”, a process of ideological self-delusion which usually grows worse until “the elite falls into the gap”.

The genius of Looking for Growth has been to create a sense of grassroots energy around a programme that is really focused, like Cummings’ attacks, on Whitehall and Westminster. Its recipe for achieving growth is planning reform, a large-scale build out of housing and infrastructure, and a supercharged tech sector centred on artificial intelligence.

Big Tech’s Predatory Platform Model Doesn’t Have to Be Our Future

NYT • Tim Wu • October 25, 2025

There was a time, back in the early 2000s, when everyone seemed to think that the internet would make everybody rich.

The vision was compelling, if a little naïve. The internet, optimists argued, would allow individuals and small sellers to reach a global market of customers at low cost and without the need for big retailers. Increased connectivity would also make it easier for people to find work, invest money and learn new skills. Thanks to platforms like eBay, the future belonged to the Davids, not the Goliaths. “Small is the new big” was a popular slogan during those heady years.

The prediction turned out to be wrong. Yes, platforms like Amazon and Google have generated immense wealth and transformed society. But the money and power have not been broadly distributed. Instead, the platforms have captured the lion’s share for themselves, leading to concentrations of wealth that hark back to the Gilded Age. The Davids of the world ended up working hard to make a new set of Goliaths rich.

But we can still recover that early optimism and promise of opportunity. While we can’t start over from scratch, we can — with the right laws and policies — begin to reclaim the potential of the internet-based economy, shifting its center of gravity to encourage and reward the activities and innovations of the many instead of the few. This is a prescription for an economy that is fairer — and more dynamic, too.

AI

Could AI help identify skill in fund managers?

FT • October 18, 2025

AI•Data•Fund Managers

Overview

The piece argues that as a market bubble builds, investors face a growing challenge: separating luck and momentum from genuine skill. It highlights emerging research that uses data-driven and AI-enabled techniques to identify fund managers who create “fundamental value” rather than riding speculative waves. The core message is that better tools are improving the odds of finding managers with repeatable, process-driven edge, even when broad markets feel frothy.

Why this matters in a bubble

In late-cycle or euphoric phases, simple exposure to hot segments can mask deficiencies in process. Nearly everyone looks smart when prices are inflating. The article contends that distinguishing true skill from beta and crowd-following becomes most valuable precisely when broad gains tempt allocators to relax discipline. By focusing on fundamental value creation, allocators can avoid the classic pitfall of funding performance that was largely the result of favorable tides.

What “fundamental value” looks like

The discussion frames fundamental value as returns linked to clear, analyzable drivers: cash-flow growth, balance-sheet strength, pricing power, industry structure, and capital allocation. Managers who generate value this way tend to display:

Consistency across changing regimes, not just in momentum phases.

Transparent linkages between thesis and subsequent operational results.

Risk-adjusted outcomes that are not fully explained by common factors.

Evidence of situational awareness: trimming exposure as narratives detach from fundamentals.

How AI and new research help

Recent analytical advances aim to attribute returns more precisely and test for repeatability. Techniques emphasized include:

Factor- and regime-aware attribution that separates idiosyncratic alpha from style winds.

Trade- and thesis-level auditing that links entry/exit decisions to the evolution of fundamentals.

Text and signal analysis of manager communications to detect process discipline (e.g., consistency between stated edge and actions).

Out-of-sample validation and cross-cycle testing to assess whether results persist beyond a single favorable period.

Together, these methods reduce the risk of confusing market exposure with manager skill, especially when prices are levitating on narrative rather than earnings.

Signals allocators can examine

The article highlights practical diagnostics that AI-assisted research can surface:

Thesis-to-outcome alignment: Did earnings, margins, or unit economics improve as predicted?

Variance decomposition: How much of the return stems from factors versus stock-specific drivers?

Behavior under stress: Do position sizes, hedges, and cash levels reflect risk awareness when volatility spikes?

Turnover and holding-period discipline: Are changes consistent with a long-term process, not short-term chasing?

Post-mortems and learning loops: Evidence that mistakes lead to process adjustments.
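The variance-decomposition diagnostic above can be made concrete with a small regression. What follows is a minimal sketch, not the method used in the research the article describes: it assumes a single market factor and ordinary least squares, and the function name `single_factor_attribution` and the return series are hypothetical, invented for illustration.

```python
from statistics import mean


def single_factor_attribution(fund_returns, factor_returns):
    """Fit fund = alpha + beta * factor + residual by ordinary least squares."""
    if len(fund_returns) != len(factor_returns) or len(fund_returns) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    f_bar = mean(factor_returns)
    r_bar = mean(fund_returns)
    cov = sum((f - f_bar) * (r - r_bar)
              for f, r in zip(factor_returns, fund_returns))
    var_f = sum((f - f_bar) ** 2 for f in factor_returns)
    if var_f == 0:
        raise ValueError("factor returns have zero variance")
    beta = cov / var_f                 # sensitivity to the factor
    alpha = r_bar - beta * f_bar       # average return the factor does not explain
    total = sum((r - r_bar) ** 2 for r in fund_returns)
    residual = sum((r - (alpha + beta * f)) ** 2
                   for f, r in zip(factor_returns, fund_returns))
    # Share of return variance explained by the factor (R squared).
    factor_share = 1.0 - residual / total if total > 0 else 0.0
    return {"alpha": alpha, "beta": beta, "factor_share": factor_share}


# Hypothetical monthly returns, invented purely for illustration.
market = [0.02, -0.01, 0.03, 0.01, -0.02, 0.04]
fund = [0.5 * m + 0.01 for m in market]  # constructed so beta = 0.5, alpha = 0.01
result = single_factor_attribution(fund, market)
print(result)
```

A `factor_share` near 1 means the fund's ups and downs track the factor almost entirely, so any skill would have to show up in `alpha`. Real attribution work would use several factors and far longer histories.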

Risk controls and governance

Even with better tools, guardrails matter. Key practices include:

Triangulating multiple attribution frameworks to avoid overfitting.

Ensuring data quality and independence of validation.

Watching for pro-cyclical selection bias during bubbles.

Aligning incentives so managers are rewarded for process quality, not just recent performance.

Implications for investors and managers

For allocators, the message is to lean on richer diagnostics rather than headline returns, upgrade due diligence with AI-supported analyses, and be cautious about managers whose results closely mirror speculative segments. For managers, the path forward is to document decision processes, tie positions to measurable fundamental milestones, and demonstrate adaptability across regimes. As markets grow frothier, the premium on verifiable, repeatable skill rises—making rigorous attribution and transparent process the differentiators that outlast the bubble.

Key takeaways

In bubble-like conditions, it is harder—but also more important—to separate true skill from momentum.

AI-enabled research can sharpen attribution, test repeatability, and expose process discipline.

Focus on fundamental drivers, behavior in stress, and out-of-sample persistence rather than recent returns alone.

Strong governance and validation are essential to avoid overfitting and narrative bias.

Managers who can connect theses to realized fundamentals and maintain risk-aware discipline are most likely to produce durable value.

Andrej Karpathy Breaks Down the 2025 State of AI: 12 Things Founders & VCs Must Know

Theaiopportunities • October 19, 2025

AI•Tech•AI Agents

Overview

The piece distills Andrej Karpathy’s 2025 perspective on where AI actually is versus the hype, offering 12 takeaways for founders and investors. His core message: agents won’t become dependable “coworkers” in 2025; they are a decade-scale engineering program that requires memory, robust multimodal perception, continual learning, and reliable computer-use/action stacks. Progress will be cumulative and system-level, not a single breakthrough. For builders and VCs, the edge shifts from chasing bigger models to assembling better cognitive systems, data pipelines, and process supervision.

From “Year of Agents” to “Decade of Agents”

Agents remain prototypes, not production coworkers. Karpathy argues that without persistent memory, grounded perception, and tools for safe, continuous action, agents will stay brittle.

Implication: prioritize infrastructure—memory stores, tool-use frameworks, long-horizon task management—over flashy demos. Fund roadmaps measured in years, not quarters.

Software 1.0 → 2.0 → 3.0

Framing: Software 1.0 (handwritten code), 2.0 (neural network weights), 3.0 (LLM + natural-language interfaces).

Lesson: representation preceded agency. LLM-era “Software 3.0” redefines programming as shaping priors, context, and processes; full agents should follow once representation and reasoning are mature.

“Ghosts, Not Animals”

Analogy: we’re not evolving embodied animals with instincts; we’re training data-driven “ghosts” that simulate behavior without innate embodiment.

Expectation-setting: these systems can reason but lack instincts, grounding, and feelings, which limits autonomy.

Knowledge vs. Intellect in Pretraining

Pretraining adds facts and builds a “cognitive core.” The latter—reasoning and abstraction—drives generality.

Founder takeaway: don’t over-index on encyclopedic memory; invest in mechanisms that strengthen abstraction, planning, and transfer.

Context Window = Working Memory

Weights act like compressed long-term memory; the context window is working memory where reasoning unfolds.

Practical design: maximize rich, task-relevant context (documents, logs, states) rather than relying on parametric recall. Retrieval and chunking quality materially affect outcomes.
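To illustrate why retrieval and chunking quality matter, here is a minimal sketch of assembling task-relevant context for a prompt. It is an assumption-laden toy, not a technique attributed to Karpathy: chunks are fixed-size word windows, relevance is plain word overlap, and every function name (`chunk_text`, `top_chunks`, `build_context`) is hypothetical.

```python
import re


def chunk_text(text, max_words=40):
    """Split text into consecutive windows of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(query, chunks, k=2):
    """Rank chunks by word overlap with the query and keep the best k."""
    q = tokenize(query)
    scored = [(len(q & tokenize(c)), i, c) for i, c in enumerate(chunks)]
    scored.sort(key=lambda t: (-t[0], t[1]))  # highest overlap first, stable on ties
    return [c for score, _, c in scored[:k] if score > 0]


def build_context(query, documents, k=2, max_words=40):
    """Chunk every document, then join the most relevant chunks into one context."""
    chunks = [c for doc in documents for c in chunk_text(doc, max_words)]
    return "\n---\n".join(top_chunks(query, chunks, k))


# Hypothetical documents, invented for illustration.
docs = [
    "The deploy failed because the database migration timed out.",
    "Lunch menu: soup and salad.",
]
context = build_context("why did the deploy fail", docs, k=1)
print(context)
```

Only the chunk about the deploy reaches the context, so the limited working memory is spent on material relevant to the task. Production systems typically replace word overlap with embedding similarity, but the design question of what to put in the window is the same.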

Only Part of the “Brain” Exists

Today’s stack approximates cortex-like pattern recognition and prefrontal-like planning traces but lacks analogues to hippocampus (consolidation), amygdala (instincts), and cerebellum (skill coordination).

Product implication: build external modules—long-term memory, safety/priority heuristics, skill libraries—to approximate missing functions.

Build Over Prompt

Karpathy’s bias is to code systems to understand them: “If I can’t build it, I don’t understand it.”

Near-term reality: code models are great for boilerplate, weak on novel architecture. Use “autocomplete” to accelerate humans-in-the-loop, not to replace system design.

RL Is “Terrible”—But Useful

Critique: classic RL’s sparse, terminal rewards are inefficient and miscredit steps along the way.

Direction: move toward dense, stepwise feedback and interpretable trajectories that mirror human learning: reflect, localize errors, and adjust.
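The credit-assignment complaint can be shown with a toy comparison. This is a sketch under simplifying assumptions rather than a real RL algorithm: a trajectory is a list of booleans marking whether each step was correct, the final outcome counts as a success only if every step was correct, and the function names are hypothetical.

```python
def terminal_credit(step_ok):
    """Outcome-only signal: every step inherits the final success or failure."""
    outcome = 1.0 if all(step_ok) else 0.0
    return [outcome] * len(step_ok)


def stepwise_credit(step_ok):
    """Dense signal: each step is scored on its own merits."""
    return [1.0 if ok else 0.0 for ok in step_ok]


# Hypothetical four-step trajectory in which only the third step is wrong.
trajectory = [True, True, False, True]
sparse = terminal_credit(trajectory)
dense = stepwise_credit(trajectory)
print(sparse)
print(dense)
```

With the terminal signal, the three correct steps are penalized along with the one mistake. The stepwise signal localizes the error, which is the property the summary points to.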

Process-Based Supervision and Reflection

Next breakthrough: systems that review their own chains-of-thought, self-correct, and generate synthetic training signals while preserving entropy.

Risk noted: silent entropy collapse into repetitive, low-information patterns. Design iterative workflows—plan, act, reflect, revise.

The Virtue of Forgetting

Humans generalize because we forget; models that memorize everything can become rigid.

Engineering lever: controlled forgetting/regularization to maintain flexibility and encourage abstraction beyond rote recall.

Data Quality > Endless Scale

The web is noisy; yet large models still perform. Imagine a smaller (~1B parameter) “cognitive core” trained on curated, high-signal data.

Startup edge: differentiated, clean corpora and careful curricula may outcompete raw scale on commodity data.

We’re Early—Expect Compounding, Not Revolution

The architectural center (transformers) likely persists this decade, refined by better data, memory, modularity, and control loops.

Investment thesis: allocate capital to teams building memory layers, process supervision, retrieval/tooling, and eval stacks; value accrues to system integration and ops discipline.

Key Takeaways for Builders and VCs

Treat agents as systems engineering: memory, retrieval, tools, supervision, and safety.

Optimize for context richness and stepwise feedback; implement reflection loops to prevent collapse.

Prioritize curated data and curricula; invest in “cognitive cores” over brute-force parameter counts.

Use AI to augment builders, not replace them; maintain human control over novel system design.

Bet on compounding improvements and infrastructure moats rather than near-term AGI leaps.

Selected Quotes

“This is the decade of agents… We have prototypes, but not coworkers.”

“We’re not building animals; we’re building ghosts.”

“If I can’t build it, I don’t understand it.”

Reid Hoffman on AI, Consciousness, and the Future of Labor

Youtube • a16z • October 20, 2025

AI•Work•Consciousness•Future Of Work•Automation

Overview

A wide-ranging conversation explores how accelerating AI progress is reshaping work and society, with an emphasis on the distinction between powerful pattern-learning systems and the concept of consciousness. The discussion frames AI as a general-purpose technology comparable to earlier industrial revolutions, arguing that its near-term impact will come from augmenting human capabilities, reorganizing workflows, and enabling new products and services rather than replacing human judgment outright. It emphasizes pragmatic approaches to adoption—deploy AI where it reduces friction, expands access, or compounds knowledge—while encouraging leaders to set guardrails that preserve agency and accountability.

AI vs. Consciousness

The speakers differentiate between intelligence as performance on tasks and consciousness as subjective experience. Current systems are portrayed as sophisticated optimizers that can reason over text, code, and images, but without evidence of sentience. The practical takeaway is to center evaluation on reliability, calibration, and alignment with human objectives, not metaphysical debates. Treat models as powerful tools whose outputs require human verification, context, and ethical framing.

Implications for Labor

The future of labor is presented as “human-in-the-loop by default.” AI agents draft, summarize, translate, analyze, and simulate, while people define goals, review edge cases, and make final decisions. Functions most affected include research, customer support, marketing, software development, and operations. Productivity gains are expected from reducing time-to-first-draft, automating repetitive steps, and surfacing insights faster. Rather than net job loss, the conversation anticipates role shifts: tasks are unbundled, and new categories emerge around prompt design, workflow orchestration, and AI product management.

Skills, Education, and Adoption

Recommended skills include problem decomposition, data literacy, critical reading of model outputs, and iterative prompting. Organizations should build AI “playbooks” with clear evaluation criteria (quality, latency, cost), red-team practices, and escalation paths when confidence is low. Upskilling strategies favor rapid, project-based learning—start with a single high-leverage workflow, measure impact, then scale. Metrics that matter: cycle time reduction, error rates after human review, customer satisfaction, and incremental revenue from AI-enabled features.

Governance and Ethics

Practical governance stresses provenance tracking, privacy-by-design, and domain-specific model constraints. Transparency about when and how AI is used helps preserve trust with users and employees. Policymaking is encouraged to be pro-innovation yet risk-aware—focus on clear liability, safety testing for high-stakes use, and incentives for open evaluation. The overarching ethos: use AI to widen opportunity and dignity at work, not to deskill or obscure responsibility.

Key takeaways

Human-AI collaboration will dominate near-term value creation.

Evaluate systems on reliability and alignment, not presumed consciousness.

Start with concrete workflows; measure impact; scale deliberately.

Prioritize governance: provenance, privacy, and transparent user experience.

Expect role evolution and new job categories alongside productivity gains.

Bubble, Bubble, Toil and Trouble

Thezvi • Zvi Mowshowitz • October 20, 2025

AI•Funding•Valuations

Core Question: Are we in an “AI bubble,” and what does that even mean?

The piece argues that “bubble” talk has become a social signal more than a diagnosis. If “bubble” is defined narrowly as a significant and sustained drawdown (e.g., a 20% Nasdaq decline over six months), that outcome is plausible in markets generally—even without extreme mispricing. But if “bubble” means 2000-style dot-com valuations utterly disconnected from discounted future cash flows, the author says: no. The market can fall without prior prices being absurd. The author stresses that labeling post-hoc drawdowns as “bubbles” is uninteresting and confuses the real question: are current AI-linked valuations broadly incompatible with reasonable cash-flow expectations?

Why people say “bubble” now

Surveys: A Bank of America poll reportedly finds a record share of global fund managers calling AI stocks a bubble; 54% now view tech as too expensive, a sharp mood shift from the prior month.

Sentiment cascades: The market hasn’t slid meaningfully on this narrative alone; modest dips were tied to tariff headlines or the “DeepSeek moment.”

Common knowledge vs. action: It’s possible for everyone to say “bubble” while continuing to buy, echoing late dot-com behavior. Yet who says it matters: industry insiders calling it a bubble is stronger evidence than big institutions doing so. The author’s quick poll found essentially no difference between AI workers (42.5%) and others (41.7%) calling it a bubble.

Where the real risks are

Steamrollers vs. picks-and-shovels: Companies likely to be “steamrolled” by frontier labs (e.g., those without defensible moats) may underperform as a basket. Conversely, frontier labs and infrastructure “picks-and-shovels” look more resilient—but not at “free money” entry points; investors need actual theses.

Industrial bubble dynamics: Per Noah Smith, AI could crash not because it fails but because it disappoints optimistic timelines; even mild disappointment can break momentum and trigger a larger repricing.

Geopolitics and supply chains: Tariffs, Taiwan risk, or an anti-AI backlash could compress multiples and revenues even if the technology keeps advancing.

Profit capture vs. utility: Matthew Yglesias notes transformational tech need not yield high-margin incumbents (jetliners vs. Home Depot analogy). AI could be huge yet less profitable for providers than bulls expect.

Counterpoints: why it may not be a bubble

There’s a “there” there: Unlike pure speculative manias, AI already delivers value and is propping up growth.

Valuations in context: Nasdaq forward P/E ~28x and MAG7 ~32x are elevated but far below 2000’s >70x. With 15–25% YoY revenue growth (ex-NVIDIA) and heavy near-term capex depressing earnings, these multiples aren’t obviously extreme.

Spending scale: Estimates like ~$1,800 per American invested in AI sound large, but the author argues many current use cases justify that outlay on absolute—not relative—benefit grounds.

Revenues and growth trajectories

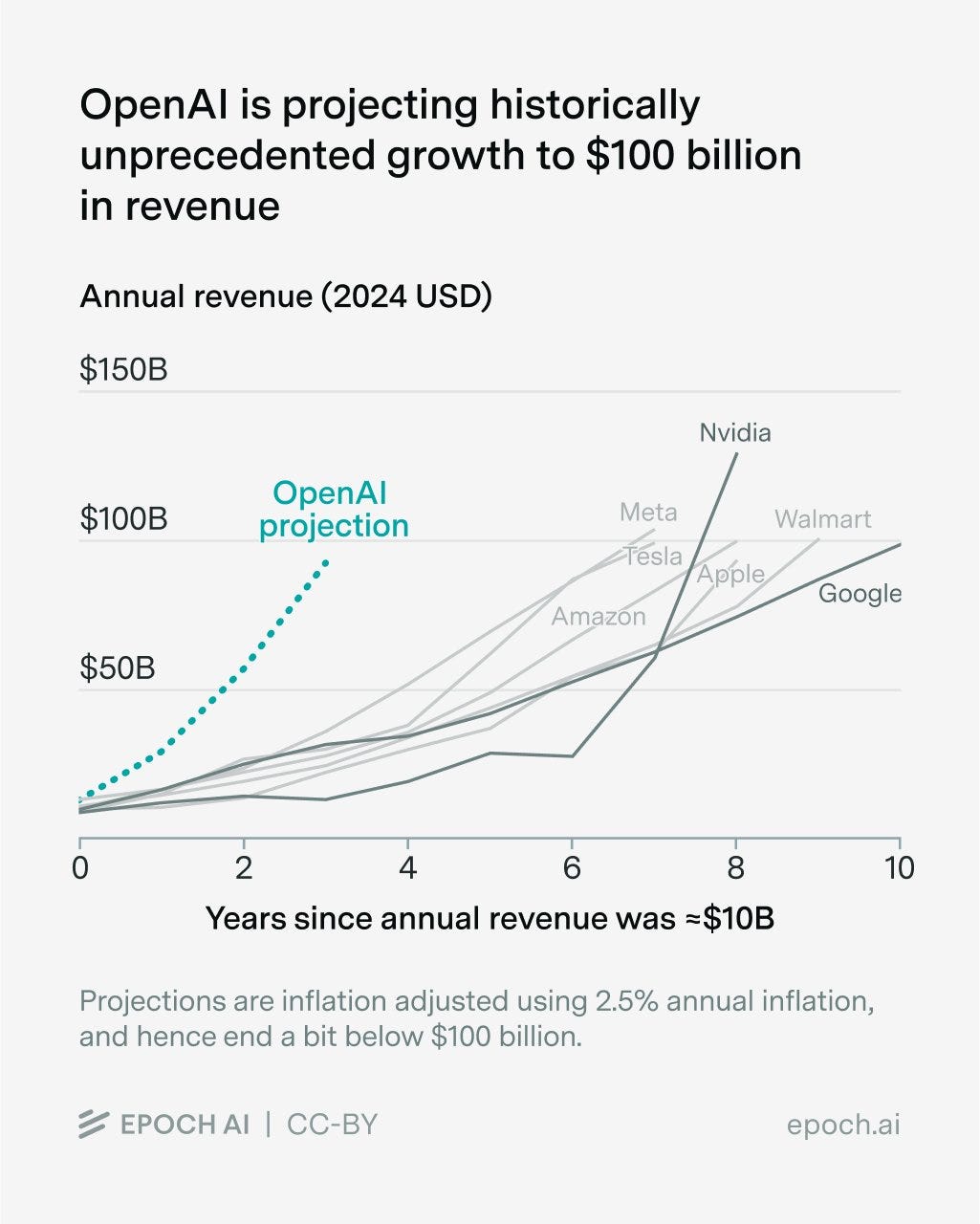

Epoch AI highlights OpenAI’s projection from ~$10B to ~$100B revenue within three years—historically unprecedented. Only a handful of U.S. firms have gone from <$1B to >$10B in three years; even fewer reached $100B within a decade, and none in six years. The author thinks OpenAI is intentionally sandbagging because stronger claims would be disbelieved or litigated; baseline expectation is that OpenAI and peers outperform current projections.
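For a sense of scale, the growth rate implied by a tenfold revenue increase in three years can be worked out directly. The sketch below assumes smooth compounding at a constant annual rate, which real revenue paths rarely follow, and the function name `implied_annual_growth` is hypothetical.

```python
def implied_annual_growth(start, end, years):
    """Constant annual growth rate that turns start into end over the given years."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1.0 / years) - 1.0


# Figures from the summary above: roughly $10B growing to roughly $100B in three years.
rate = implied_annual_growth(10e9, 100e9, 3)
print(f"{rate:.1%} per year")
```

A tenfold rise in three years implies revenue slightly more than doubling every year, about 115% annual growth. The same rate applies to the <$1B to >$10B comparison, since that is also a tenfold increase.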

Capex and depreciation: a contested hinge

Hyperscaler capex reportedly near 22% of revenue (~$320B in 2025 across the big four), outpacing revenue growth. Bears argue GPU lifecycles are shortening (annual NVIDIA generations), so extending depreciation schedules to 5–6 years masks true economics; re-basing to 2–3 years would dent EPS and market caps.

The author counters: new chips don’t instantly obviate old ones as long as demand exceeds supply. If H100s soon had near-zero marginal value, either we’re in a 2028 “compute singularity” (unbounded scale with enough power) or demand has vanished—both implausible. Current evidence points to undercapacity (scramble for all chips, rising rental prices for older GPUs). Accounting optics could wobble, but solvency/liquidity isn’t the central concern.
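The bears' point about depreciation schedules is easier to see with numbers. This sketch assumes straight-line depreciation and, as a deliberate simplification, treats the full ~$320B figure as a single pool of assets on one schedule. In practice capex also covers buildings, land, and power infrastructure with different lives. The function name is hypothetical.

```python
def straight_line_annual(capex, useful_life_years):
    """Annual straight-line depreciation expense for a given useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return capex / useful_life_years


capex = 320e9  # approximate 2025 figure cited above
for life in (6, 5, 3, 2):
    expense = straight_line_annual(capex, life)
    print(f"{life}-year life: ${expense / 1e9:.1f}B per year")
```

Moving from a 6-year to a 3-year schedule doubles the annual expense on the same spending, which is why the choice of useful life matters so much to reported earnings. It changes the accounting, not the cash already spent.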

Time-sensitive aside on governance

The author urges donations to Alex Bores (champion of New York’s RAISE Act) in his first 24 hours of a congressional run, arguing Congress needs informed AI safety champions. The note will be removed after the 24-hour window.

What a drawdown would mean

A 20%+ AI-led decline in the next few years is quite possible; if it happens without a fundamentals collapse, the author would likely buy more.

Distinguish market plumbing from tech trajectory: even a leveraged unwind or confidence shock (like the DeepSeek reaction) says little about whether AI progress is slowing toward AGI/ASI timelines.

Key takeaways

Bubble labeling depends on definitions: a cyclical drawdown is plausible; a 2000-style cash-flow disconnect is not the base case.

Real risks lie in momentum breaks, geopolitics, profit capture, and accounting optics—not in AI’s lack of real value.

Valuations are high but not absurd; capex is massive but rational if revenue growth and demand continue.

Expect uneven returns: some application layers get steamrolled; infra and leading labs remain best positioned.

OpenAI Unveils Atlas Web Browser Built to Work Closely With ChatGPT

Nytimes • Cade Metz • October 21, 2025

AI•Tech•Atlas

Overview

A new web browser named Atlas is being introduced with the explicit goal of working closely with OpenAI products such as ChatGPT. The core idea is a browsing experience where the assistant is not an add‑on but a native capability: pages, tabs, and tasks become inputs that a conversational agent can understand, navigate, and act upon. Instead of copying text into a chatbot or juggling extensions, Atlas appears oriented toward making the chat interface the center of how people discover, read, and use the web.

What “works closely” with ChatGPT likely means

Integrated assistant presence: an always‑available side panel or in‑page overlay that can summarize, translate, compare, draft, and explain content without leaving the page.

Contextual awareness: the model can see the active page, selected text, and possibly prior tabs or sessions to generate more precise answers.

Action orchestration: turning natural language into multi‑step workflows—e.g., “find the best sources on this topic, extract the key points, and draft an email.”

Cross‑product handoff: seamless movement between ChatGPT, document or code tools, and the browser, avoiding repetitive uploads or copy‑paste.

Voice and multimodal inputs: asking questions or directing actions via speech or images during browsing.

User experience and productivity

Atlas’s tight coupling with ChatGPT suggests a shift from search‑first to task‑first browsing. Rather than querying a search engine and manually sifting results, the user asks for an outcome, and the assistant navigates pages, extracts relevant information, and presents condensed answers with citations. This could compress workflows for research, shopping, travel planning, and customer support, while lowering the friction of moving between web content and creation tools (email drafts, spreadsheets, notes).

Trust, safety, and privacy considerations

Embedding an AI assistant into core browsing raises several important questions:

Data exposure: which page content is sent to the assistant, under what conditions, and with what retention policies?

Permission boundaries: how clearly the browser communicates when the assistant can read a page, fill forms, or click links.

Reliability: guardrails to reduce model hallucinations or outdated answers when the assistant summarizes complex or time‑sensitive pages.

Security: protection against prompt injection from web content and extensions, plus clear sandboxing for automated actions.

Implications for the web ecosystem

If assistants become the primary interface to the web, publishers may see users engaging more with synthesized answers than full pages. That could:

Elevate the importance of structured data, clean semantics, and machine‑readable metadata so assistants extract accurately.

Pressure traditional search and ad models as assistant‑led results reduce page visits.

Spur new revenue approaches (licensed content, paid APIs, or assistant‑friendly widgets).

Encourage developers to build “actions” or “tools” that let the assistant complete tasks (bookings, purchases, support tickets) directly from the browser.

Competitive context

Browser makers and AI products have been converging: many browsers now ship AI sidebars, and AI assistants offer built‑in browsing or retrieval. A purpose‑built browser that treats the assistant as the primary UI could accelerate this convergence, setting expectations for native summarization, automation, and multimodal support. It may also catalyze standards around content attribution, agent safety, and interoperable “actions” that bridge sites and services.

What to watch next

Depth of OS integration (e.g., system‑level sharing, notifications, and voice) and performance trade‑offs under heavy AI workloads.

The permissions model for page‑level data access and automated actions, including granular, user‑friendly controls.

How well Atlas balances fast, synthesized answers with transparent citations and links back to original sources.

Extension and developer ecosystems that allow third parties to add secure, composable tools for the assistant.

Monetization levers—subscription tiers, usage caps, or enterprise features—that sustain AI compute while keeping the experience seamless.

Key takeaways

Atlas introduces a browser paradigm where ChatGPT‑style assistance is native, not bolted on.

The design aims to turn browsing into outcome‑driven workflows, potentially reshaping search, productivity, and content discovery.

Success will hinge on trust (privacy, safety, attribution), speed and reliability of AI features, and a robust ecosystem of actions and developer tools.

Introducing ChatGPT Atlas

Youtube • OpenAI • October 21, 2025

AI•Tech•ChatGPT Atlas•Agent Mode•Privacy Controls

What it is and why it matters

OpenAI unveils ChatGPT Atlas, a full web browser with ChatGPT integrated at its core to reimagine how people navigate, read, and act on the web. OpenAI frames Atlas as “the browser with ChatGPT built in,” bringing the assistant into the page you’re viewing so it can understand context, help in place, and even complete tasks without copy-paste or tab juggling. Atlas launches worldwide on macOS today for Free, Plus, Pro, and Go users, with Business in beta and Enterprise/Edu available if enabled by admins; Windows, iOS, and Android versions are “coming soon.” (openai.com)

Availability, setup, and requirements

Users can download Atlas and sign in with their ChatGPT account; onboarding supports importing bookmarks, saved passwords, and history from your current browser for a quick switch. On macOS, Atlas supports Apple silicon (M‑series) Macs running macOS 12 Monterey or later. You can set Atlas as the default browser in Settings; making it default unlocks elevated rate limits for the first seven days (terms apply). (openai.com)

Key browsing features

New-tab experience: Ask a question or enter a URL to receive a concise answer alongside structured tabs for search links, images, videos, and news where available. (help.openai.com)

Ask ChatGPT sidebar: A persistent side panel that summarizes pages, extracts details, drafts text, or explains code without leaving the current tab. Open it from the top-right of the browser and type or speak your prompt. (help.openai.com)

In-line writing help: Cursor-like in-page editing to rewrite, check grammar, or adapt tone directly on the site you’re viewing. (help.openai.com)

Agent mode: getting work done for you

Atlas supports an upgraded Agent Mode that can open tabs, navigate, click, and execute multi-step workflows—planning events, researching, filling forms, building carts, or booking appointments while you browse. It’s available in preview for Plus, Pro, and Business users, with OpenAI emphasizing faster performance by leveraging browsing context. OpenAI cautions that it’s an early experience that “may make mistakes” on complex flows; the team is prioritizing reliability and latency and has run extensive red-teaming, with safeguards designed to adapt to new attack patterns. Users can monitor the agent, use logged‑out mode, and decide whether to grant page visibility before the agent acts. (openai.com)

Privacy, memory, and parental controls

OpenAI stresses user control and transparency. You can clear specific pages, wipe browsing history, or use incognito (which logs ChatGPT out temporarily). “By default, we don’t use the content you browse to train our models,” though users can opt in via data controls. A toggle in the address bar lets you decide if ChatGPT can see the current page; when off, no content is shared and no memories are created. Optional Browser Memories can remember key details to improve assistance—such as assembling a to‑do list from recent activity—viewable and manageable in Settings. Existing parental controls for ChatGPT carry into Atlas, with new options to disable Browser Memories and Agent Mode. (openai.com)

Roadmap and developer/website hooks

OpenAI’s roadmap includes multi‑profile support, improved developer tools, and better discovery for ChatGPT Apps built with the Apps SDK. Site owners can add ARIA tags to improve how the agent interprets and acts on their pages in Atlas. OpenAI suggests this is a step toward “agentic” web use where routine tasks are delegated so users can focus on higher‑value work. (openai.com)

Key takeaways

A native browser that embeds ChatGPT to summarize, search, and act directly on the web page you’re on. (openai.com)

Launches on macOS today; Windows, iOS, and Android are next; simple import and default‑browser setup flows. (openai.com)

Agent Mode in preview can autonomously complete multi‑step web tasks; users remain in control with explicit visibility toggles. (openai.com)

Privacy-first defaults (no training on your browsing by default), granular memories, and expanded parental controls. (openai.com)

Why Creativity Will Matter More Than Code

Youtube • a16z • October 22, 2025

AI•Work•Creativity•AI Companions•Emotional Interfaces

In this conversation, Anish Acharya joins Kevin Rose to explore why, in the age of AI, creativity will increasingly outweigh raw coding skill. They frame the moment as a rebirth of consumer technology, with AI compressing the distance between an idea and a polished product. This shift makes room for more makers to try more things, faster, and to let taste, intuition, and storytelling drive what gets built. The discussion sets out the stakes: code is becoming a commodity, but creative direction and product sensibility are becoming differentiators. (podcasts.apple.com)

They dig into “weird and working” products—software that blends emotion with utility—and how new tools enable solo creators to assemble full-stack experiences. Examples include AI companions and “emotional interfaces” that respond to mood, context, and intent. These interfaces, they argue, will feel less like command lines and more like conversations or performances, inviting products that are designed around feeling as much as function. With generative platforms lowering the cost of experimentation, a single person can now prototype, ship, and iterate at the speed that once required teams. (music.amazon.in)

The throughline is that the next wave of culturally important apps will be authored by people who lead with curiosity and taste—people willing to be different. As AI handles more of the scaffolding, the leverage shifts to those who can fuse art and engineering, crafting experiences that resonate. The episode ultimately argues that the frontier sits where consumer tech meets human feeling, and that the most valuable builders will be those bold enough to be strange, specific, and emotionally intelligent in what they create. (podchaser.com)

Is the Flurry of Circular AI Deals a Win-Win—or Sign of a Bubble?

Wsj • October 22, 2025

AI•Funding•Round Tripping•Hyperscalers•Antitrust

A surge of “circular” AI deals is reshaping how money and demand flow through the industry. In these arrangements, large technology suppliers take equity stakes or extend financing to AI startups that, in turn, commit to spending heavily on the investors’ cloud, chips, or services. The resulting loop can make growth look effortless: investment dollars cycle back as contracted revenue or usage, while startups secure scarce compute and credibility.

Proponents argue this alignment is pragmatic. Building frontier AI systems requires extraordinary capital, power, and hardware; guaranteed demand helps justify multibillion-dollar data-center expansions and long-term chip orders. Startups gain priority access to GPUs and infrastructure, potentially lowering unit costs and accelerating product road maps. Investors and corporate partners can also shape technical direction, integration, and go-to-market, turning customers into co-developers.

Skeptics see echoes of past booms where “round-trip” flows masked underlying demand. When revenue depends on counterparties funded by the vendor itself, traditional signals—pricing discipline, utilization, and organic adoption—can blur. Minimum-spend commitments and credits may inflate usage metrics, while concentration risk rises around a handful of hyperscalers and model labs. If downstream customer adoption lags—or if power, chip supply, or regulatory constraints bite—the loop could stall, leaving stranded capacity and pressured margins.

Disclosure and accounting also matter. Observers look for clarity on how companies separate investment returns from operating revenue, whether preferential terms exist, and how long-dated commitments are recognized. Antitrust and competition concerns may intensify if strategic financing influences supplier choice or locks in exclusive access to compute, data, or distribution.

The piece frames two paths: circular deals as a bridge to genuine, diversified AI demand—or as a flywheel that spins until it hits the hard limits of economics, infrastructure, or oversight. Early warning signs would include slowing end-customer adoption, secondary GPU price softening, rising incentives to sustain usage, and growing scrutiny of bundled spend agreements.

AI is about to upend Google’s AdWords cash cow

Fastcompany • October 23, 2025

AI•Tech•Google Ads•Search•Generative AI

Twenty-five years ago, Google unveiled AdWords, which pledged to enable advertisers “to quickly design a flexible program that best fits [their] online marketing goals and budget,” Google cofounder Larry Page said at the time.

The principle was simple. AdWords allowed advertisers to purchase individualized, affordable keyword-based advertising that appears alongside search results used by hundreds of millions of people every day.

That decision was a game changer for Google. Advertising now accounts for around three in every four dollars of revenue the company has made so far this year, growing 10% in the last year alone. The product, since renamed Google Ads, has powered the company to prosperity, cementing its position at the top of the search space.

But a quarter of a century on, artificial intelligence could force an overhaul of Google Ads.

“The shift from traditional search to AI answer engines represents the greatest challenge to Google’s $200 billion monetization engine we’ve ever seen,” says Aengus Boyle, vice president of media at VaynerMedia, a strategy and creative agency set up by entrepreneur Gary Vaynerchuk.

That’s not because competitors are siphoning away users from Google: The company’s global daily active users are up 13% year on year, with nearly 2 billion people logging on to Google services every day, according to Bank of America estimates. But because Google is starting to layer AI-tailored answers into the front page of its search results (often above the advertisements and blue links to sources that helped make its name over the last 25 years), its ability to bring in ad revenue could take a serious hit. “If AI answers start replacing traditional Google searches, that’s a real threat to the whole cash engine,” says Fergal O’Connor, CEO of Buymedia, an ad platform company. “Google makes most of its money from ads tied to clicks. The more queries, the more ad space, the more revenue.”

The problem is that AI summaries of search results make it less necessary to click through to websites. So far, that’s been to the consternation of website owners, who rely on visits to their websites in order to sustain their business models. In time, it could harm Google itself. “If people stop clicking through to sites because they get what they need from an AI summary, that entire model takes a hit,” O’Connor says.

Of course, Google will “obviously try to wedge ads into the AI answers,” notes O’Connor—and indeed, the company is already doing so—but he says it’s not a like-for-like comparison. “One generative answer replaces a full results page of ad inventory, so it’s fewer impressions, fewer clicks, and less data flowing through the system,” he explains.

However, if anyone is best placed to capitalize on those changes, it’s Google, Boyle predicts. “Their clearest advantage lies within Google Ads—which has allowed them to integrate ads into new AI discovery surfaces, like AI Overviews and AI Mode, faster than any of their competitors in the space,” he says.

O’Connor believes that Google will adapt to the new norm, with AI altering the future of advertising without ending it.

“If people genuinely stop ‘Googling’ and start ‘asking,’ the whole search economy has to reinvent itself,” O’Connor says. “But if you’ve been around the digital ad space for a few decades, you’ll know that we’ve survived a few events that were billed as being apocalyptic to the industry.”

Google has had 25 years to understand how best to target and present ads to its users and to squeeze out everything it can from the ad industry. It’s best placed to secure another 25 years of dominance, even if it requires some changes.

Marc Andreessen & Amjad Masad on “Good Enough” AI, AGI, and the End of Coding

YouTube • a16z • October 23, 2025

AI•Tech•AGI•Software Development•Developer Tools

Core Idea: “Good Enough” AI as a Threshold Moment

The conversation explores the notion that AI need not be perfect to be transformative. “Good enough” systems—those that meet practical performance thresholds—can unlock massive value across software and beyond. The discussants contrast academic benchmarks with market utility, arguing that once models reliably clear usability bars (speed, cost, accuracy within tolerance), adoption accelerates regardless of remaining edge-case errors. This reframes progress: rather than waiting for AGI, the focus shifts to cumulative capability plus integration into workflows, interfaces, and tooling that compress time-to-value for both developers and non-developers.

AGI Trajectory and Capability Compounding

They situate current frontier models on a capability curve where scale, data quality, and tool-use (code execution, retrieval, agents) compound. AGI is treated less as a single “sentience” event and more as a stepwise crossing of functional thresholds—planning, autonomy, and domain transfer—amplified by orchestration layers. The result is a practical path: narrow-but-powerful agents connected to tools and APIs can perform multi-step tasks, making system design and guardrails as critical as raw model intelligence.

Coding: Ending, Evolving, or Abstracting?

“End of coding” is framed as abstraction rather than disappearance. Natural-language interfaces shift engineers from syntax production to specification, review, and systems thinking. Code becomes an artifact generated by AI, while humans curate architecture, constraints, and verification. Pair-programming copilots evolve into task-level agents that scaffold projects, refactor large codebases, write tests, and manage CI/CD steps. The value of software skills persists, but the comparative advantage moves to problem framing, debugging strategy, security posture, and integration with real-world constraints (latency, cost ceilings, compliance).

Productivity, Cost Curves, and the New Software Stack

The dialogue highlights falling inference costs and rising context lengths as twin enablers: more code and documentation fit into prompts, while lower per-token costs make continuous assistance viable. The emerging stack layers models, vector/RAG systems, function calling, and agent runtimes atop conventional repos and cloud. Toolchains emphasize evaluation harnesses (to measure “good enough”), test coverage, and deterministic fallbacks. For companies, the strategic play is to convert tacit organizational knowledge into structured corpora and guardrailed agents that handle support, ops runbooks, and routine engineering chores.
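
The idea of an evaluation harness that measures “good enough,” paired with a deterministic fallback, can be sketched roughly as follows. This is a minimal illustration, not anything shown in the conversation: the names `Thresholds`, `EvalResult`, `is_good_enough`, and `answer` are hypothetical, and the threshold values are placeholders chosen only to make the example concrete.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical usability bars; the values are illustrative only."""
    min_accuracy: float = 0.90
    max_latency_s: float = 2.0
    max_cost_usd: float = 0.01


@dataclass(frozen=True)
class EvalResult:
    """Outcome of running one evaluation case through the model."""
    correct: bool
    latency_s: float
    cost_usd: float


def is_good_enough(results: list[EvalResult], bars: Thresholds) -> bool:
    """Return True only if average accuracy, latency, and cost all clear their bars."""
    if not results:
        return False  # no evidence means no pass
    accuracy = mean(1.0 if r.correct else 0.0 for r in results)
    latency = mean(r.latency_s for r in results)
    cost = mean(r.cost_usd for r in results)
    return (
        accuracy >= bars.min_accuracy
        and latency <= bars.max_latency_s
        and cost <= bars.max_cost_usd
    )


def answer(question: str, model_ok: bool) -> str:
    """Use the model only when it passed the gate; otherwise fall back deterministically."""
    if model_ok:
        return f"[model answer to: {question}]"  # stand-in for a real model call
    return "Please contact support."  # deterministic fallback
```

In practice the gate would run against a fixed test set before each release, so a model that slips below any one bar is routed to the fallback rather than shipped.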

Risk, Reliability, and Governance

Reliability is addressed via defense-in-depth: constrain model autonomy with capability scopes; add linters, type systems, and property-based tests; use sandboxed execution; and incorporate human-in-the-loop on high-impact changes. Security shifts left: secret management, dependency provenance, and model supply-chain risks (prompt injections, tool exploits) require dedicated controls. Rather than freezing innovation under blanket rules, the conversation favors outcome-based evaluation, auditability, and continuous red-teaming to maintain velocity while bounding failure modes.
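
Two of the layers named above, capability scopes and human-in-the-loop review for high-impact changes, might look something like this in code. It is a sketch under stated assumptions: `ToolGate` and the tool names in the usage note are hypothetical and not drawn from any specific agent framework.

```python
from dataclasses import dataclass, field


@dataclass
class ToolGate:
    """Allow-list of tools, plus a subset that requires human sign-off (hypothetical design)."""
    allowed: set[str]
    needs_approval: set[str] = field(default_factory=set)
    audit_log: list[tuple[str, str]] = field(default_factory=list)

    def check(self, tool: str, approved_by_human: bool = False) -> str:
        """Return 'allow', 'hold', or 'deny' and record the decision for later audit."""
        if tool not in self.allowed:
            decision = "deny"   # outside the agent's capability scope
        elif tool in self.needs_approval and not approved_by_human:
            decision = "hold"   # high-impact action waits for a human
        else:
            decision = "allow"
        self.audit_log.append((tool, decision))
        return decision
```

With `ToolGate(allowed={"read_file", "deploy"}, needs_approval={"deploy"})`, a call to `check("deploy")` is held until a human approves it, while a tool that is not on the allow-list is denied outright. Every decision lands in `audit_log`, which supports the auditability the conversation favors.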

Markets, Jobs, and Education

On talent markets, AI redistributes leverage: juniors get superpowers, seniors scale their impact, and teams shrink for the same output. Hiring screens emphasize systems design, product sense, and the ability to formalize requirements for AI agents. Education follows suit: less emphasis on memorizing syntax; more on computational thinking, version control, testing, and reading/maintaining AI-generated code. Companies that align incentives to ship with AI—measuring cycle time, change failure rate, and recovery speed—capture outsized gains.
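
The three measures mentioned here (cycle time, change failure rate, and recovery speed) reduce to simple arithmetic over a log of shipped changes. The sketch below assumes a hypothetical `Change` record with hours from commit to deploy, a failure flag, and hours to recover; real teams would define these fields according to their own tooling.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class Change:
    """One shipped change (hypothetical record layout)."""
    hours_commit_to_deploy: float
    failed: bool
    hours_to_recover: Optional[float] = None


def delivery_metrics(changes: list[Change]) -> dict[str, float]:
    """Compute mean cycle time, change failure rate, and mean recovery time."""
    if not changes:
        raise ValueError("need at least one change")
    recoveries = [
        c.hours_to_recover
        for c in changes
        if c.failed and c.hours_to_recover is not None
    ]
    return {
        "mean_cycle_time_h": mean(c.hours_commit_to_deploy for c in changes),
        "change_failure_rate": sum(c.failed for c in changes) / len(changes),
        "mean_recovery_h": mean(recoveries) if recoveries else 0.0,
    }
```

Tracking these numbers before and after adopting AI tooling gives a team a concrete way to check whether it is actually shipping faster without breaking more.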

Strategic Implications and What to Build Now

Winners will: (1) encode institutional knowledge into private RAG/agents; (2) re-platform legacy workflows around AI-first interfaces; (3) treat evaluation as a first-class product surface; and (4) build moats via proprietary data, distribution, and integration depth rather than model weights alone. As “good enough” becomes ubiquitous, differentiation shifts from raw capability to orchestration quality, domain fit, reliability SLAs, and trust.

AI’s impact inflects when it becomes “good enough” for real workflows, not when it becomes perfect.

Coding evolves into specification, review, and systems integration; agents handle routine generation and maintenance.

Reliability and security come from layered controls, tests, and constrained tool use—not from single-shot perfection.

Competitive advantage moves to proprietary data, evaluation, and integration with existing systems and processes.

Anthropic and Google Cloud strike blockbuster AI chips deal

FT • October 23, 2025

AI•Tech•Partnerships•Cloud Computing•Semiconductors

Anthropic, the artificial intelligence company behind the Claude chatbot, has entered into a significant multi-year agreement with Google Cloud to secure a massive allocation of advanced AI chips. This strategic partnership, involving one of Anthropic’s largest investors, is designed to substantially boost the startup’s computing capacity, which is a critical resource in the competitive race to develop and deploy powerful AI models.

Strategic Implications for the AI Industry

The deal represents a major strategic maneuver in the high-stakes AI landscape. For Anthropic, securing guaranteed access to a vast supply of cutting-edge tensor processing units (TPUs) and graphics processing units (GPUs) from Google directly addresses the industry-wide bottleneck of AI computing power. This ensures the company has the necessary firepower to train its next-generation Claude models without being constrained by hardware availability. For Google Cloud, the agreement solidifies a key relationship with a leading AI lab, driving substantial and reliable revenue for its cloud division while validating its AI infrastructure against competitors like Amazon Web Services and Microsoft Azure.

Deepening an Existing Partnership

This blockbuster deal builds upon a pre-existing and multifaceted relationship between the two companies. Google is not merely a cloud provider for Anthropic; it is also a major investor, having committed hundreds of millions of dollars to the AI startup. This financial stake creates a powerful alignment of interests, making the cloud partnership more of a strategic alliance than a standard vendor-client relationship. The collaboration allows both entities to leverage their respective strengths: Anthropic’s frontier AI research and development capabilities, and Google’s world-class computational infrastructure and global data center network.

The Intensifying Battle for Compute

The Anthropic-Google agreement underscores the central role of computational resources, or “compute,” as the new currency of the AI era. Access to vast clusters of high-performance chips has become a primary determinant of which companies can compete at the forefront of AI development. This has led to an arms race among tech giants and well-funded startups to lock in long-term chip supplies through strategic partnerships and direct purchases. The scarcity of advanced semiconductors has made such deals a critical competitive moat, potentially creating significant barriers to entry for newer or less-funded players in the field.

In conclusion, this partnership fortifies Anthropic’s position in the top tier of AI companies by guaranteeing the computational resources needed for future innovation. It simultaneously bolsters Google Cloud’s standing in the intensely competitive cloud infrastructure market. The deal exemplifies the consolidation of power and resources among a small group of tech giants and the AI labs they back, shaping the trajectory of artificial intelligence development for the foreseeable future.

I Tried an AI Web Browser, and I’m Never Going Back

WSJ • October 23, 2025

AI•Tech•WebBrowsers•Productivity•Innovation

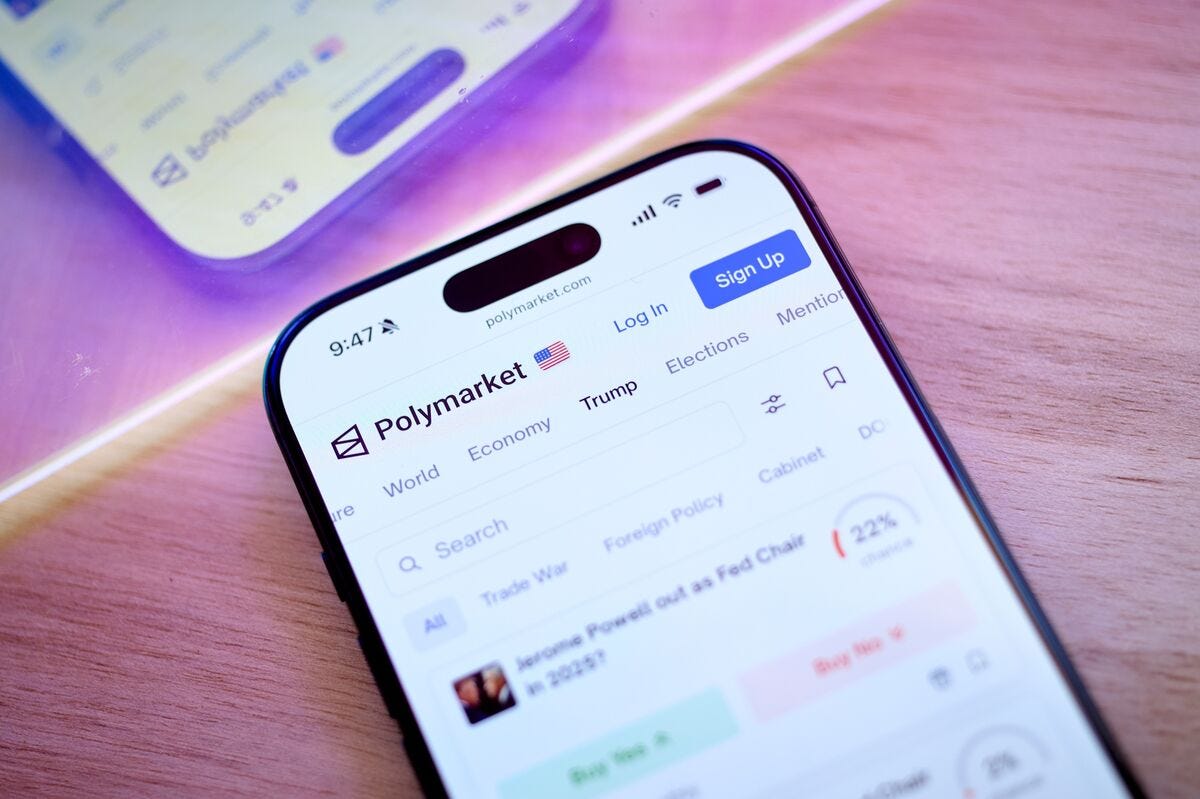

The transition to AI-powered web browsers represents a fundamental shift in how users interact with the internet, moving from manual searching to conversational computing. Browsers like OpenAI’s ChatGPT Atlas, Perplexity’s Comet, and Google’s Gemini are embedding sophisticated AI agents directly into the browsing experience, changing the workflow from opening multiple tabs and sifting through information to simply asking questions and receiving synthesized answers. These browsers act as proactive research assistants, capable of summarizing articles, comparing products, and planning trips without requiring the user to visit multiple websites.

Core Functionality and User Experience

The primary advantage of these AI browsers lies in their ability to comprehend and execute complex, multi-step tasks. Instead of manually searching for “best noise-cancelling headphones,” then “headphone deals,” and finally “Bose vs. Sony comparison,” a user can ask the AI, “Find the best deals on high-end noise-cancelling headphones and summarize the key differences between the top Bose and Sony models.” The AI agent then performs these searches in the background, synthesizes the information from various sources, and presents a consolidated answer with direct links for verification or purchase. This eliminates the cognitive load of managing numerous tabs and cross-referencing information.

Leading AI Browser Platforms

Several key players are competing in this emerging space, each with distinct approaches. OpenAI’s ChatGPT Atlas integrates the powerful capabilities of its latest models directly into the browsing interface, offering deep contextual understanding and task execution. Perplexity’s Comet has gained a reputation for its strong citation practices, always linking back to the original sources it uses to generate answers, which builds user trust. Google’s Gemini leverages the company’s vast search index and knowledge graph to provide comprehensive and timely information. These platforms are evolving beyond simple Q&A into true agents that can take actions like filling out forms or managing bookings based on verbal commands.

Implications for the Future of Search and Work

The rise of AI browsers has significant implications for the digital ecosystem. Traditional search engines, built on a list-of-links model, may see a decline in direct traffic as users get answers directly within the AI interface. This could impact online advertising and the business models of content publishers who rely on search-driven traffic. For productivity, these tools promise to dramatically accelerate research-intensive tasks in fields like academia, journalism, and market analysis. However, they also raise questions about the “digital middleman” and whether users will lose the serendipity and critical thinking skills developed through manual research.

Ultimately, AI web browsers are not merely an incremental improvement but a paradigm shift towards a more efficient, conversational web. While concerns about information bubbles and over-reliance on AI are valid, the convenience and power they offer make a compelling case for widespread adoption. As these agents become more capable, the very definition of “browsing the web” is being rewritten from an activity of navigation to one of conversation and delegation.

Everything’s In Play in the Age of AI: Why Only 1 of Our 16 Core AI Agents Comes From a Legacy Vendor

SaaStr • Jason Lemkin • October 24, 2025

AI•Tech•Startups•EnterpriseSoftware•Innovation