Contents

Editorial: Come to Daddy: OpenAI Wants Your Attention

Essay

AI

Media

Venture

Sequoia’s Roelof Botha: Why Venture Capital is Broken & How Great Companies Are Built

Stop Blaming VCs When Your Equity Is Worthless. They Are Just The Enablers.

AI Investors Are Chasing a Big Prize. Here’s What Can Go Wrong.

Sam Altman’s Billion-Dollar Bet on Fusion: The Rise of Helion Energy and the Quest to Harness the…

Concentrating In Winners | Vince Hankes, Partner at Thrive Capital

AI Is Dominating 2025 VC Investing, Pulling in $192.7 Billion

Startup of the Week

Interview Of the Week

Post of the Week

Editorial:

Come to Daddy: OpenAI Wants Your Attention

What happens when one company starts acting like the central bank of compute, the front door to intent, and the rules committee for creative rights—all at once? This week, OpenAI didn’t just ask for our attention. It asked for our grids, our balance sheets, and our defaults. And for the most part it was welcomed by developers and users.

Industrial policy by private contract is here—and it’s wearing an OpenAI hoodie

The “AI Inc” web (a term coined by the FT, summarized below) tightened: a 6‑gigawatt AMD pact with a warrant that could hand OpenAI up to 10% of AMD, an Nvidia LOI to deploy 10 GW with “up to $100B” in staged investment, a reported $300B compute purchase from Oracle, and Stargate’s U.S. campus roll‑out toward 10 GW, with Abilene, TX as flagship. This is not M&A; it’s incentive‑wiring. Capacity becomes destiny because the contest is about energy and compute, and financing is what makes it possible.

“The next leap forward — deploying 10 gigawatts to power the next era of intelligence.” — Jensen Huang

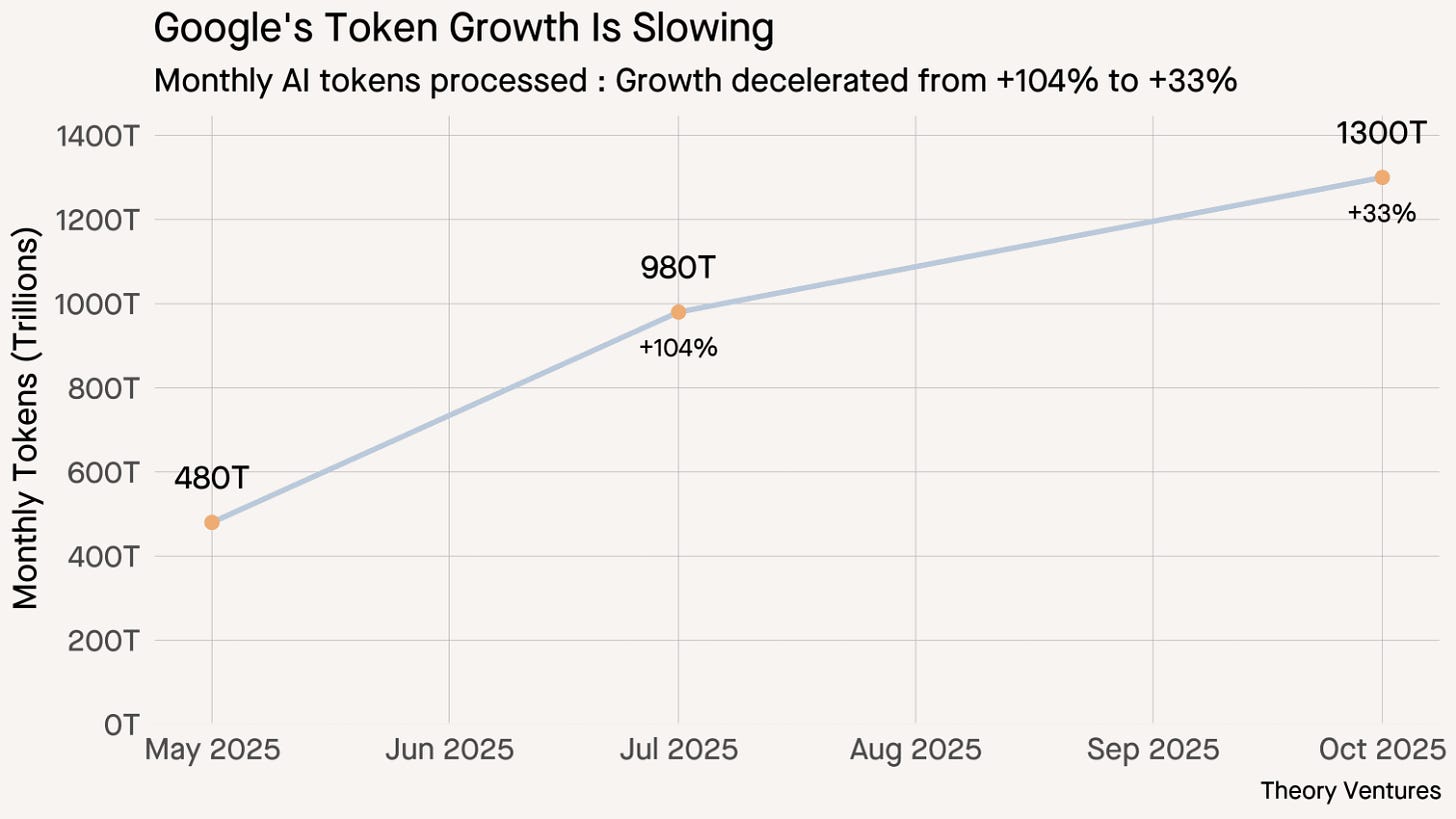

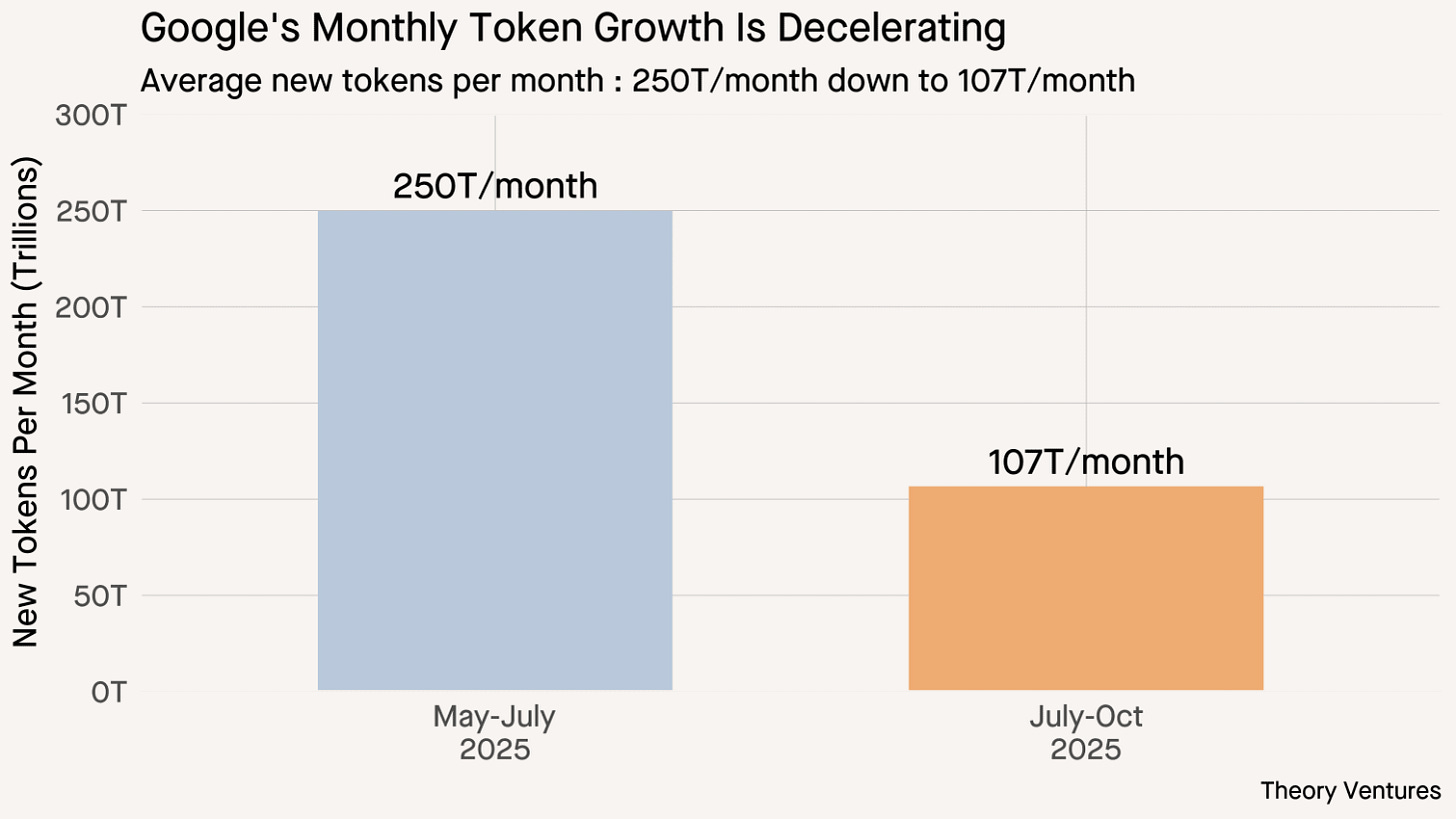

Here’s the tension: Google’s token throughput hit 1.3 quadrillion per month, yet growth decelerated, with monthly additions falling from roughly 250T to roughly 107T tokens. Meanwhile Citi pegs AI infrastructure spending at $2.8T by 2029, and the FT warns the capex endgame is in sight. The Perez‑ian retort, “we never know if we’ve built enough until we’ve built too much,” may be right societally, but circular financing across chips, clouds, and labs raises single‑point‑of‑failure risk if any node blinks. OpenAI may lift all boats. Nvidia says this is a demand-led expansion, and that is right.

The front door is migrating to agents and creation—and rights are becoming rails

OpenAI is turning chat into an action layer with Spotify and Zillow. Sora moved from demo to economics, promising more granular character controls and a new payout scheme:

“We are going to have to somehow make money for video generation.” — Sam Altman, Sora update 1

That line matters. If the answer layer keeps users in‑product, then licensing, attribution, and settlement must follow the answer—not the click. Hollywood is already behind on AI video; Apple’s Lakers broadcasts in Vision Pro show how quickly formats move when the medium changes. Rights that travel with assets and transparent revenue‑sharing are now product features, not legal footnotes.

Here is my video: me accepting the UEFA Champions League trophy as captain of Manchester United. Yes, that is me.

Trust resets are happening in public—because defaults are policy

CBS installs Bari Weiss and publishes core values; MSNBC releases standards. That’s not just media gossip—it’s a response to the same power shift: when assistants synthesize the news and platforms mediate action, institutions must re‑articulate what they stand for or lose the edge to the interface.

On the build side, Reflection raised $2B to be an open frontier lab, challenging closed incumbents and DeepSeek alike.

But mostly we have over-reactions. Sangeet Paul Choudary’s warning hangs over all of it: hype is capital allocation, and agentic systems atop data‑hoarding models can extinguish user agency. Possibly true. But the capital allocation can also signify a new technical revolution, propelling humanity to new heights like the agricultural and industrial revolutions. Indeed, that seems very likely. And the value created by this new human invention will likely return a lot more capital than it consumes.

In one of this week’s essays, Norman Lewis (a good friend) argues that the decline in the authority of human institutions is reinforced by a technocratic faith in AI. It’s a good essay, and right about institutions. But I don’t think the technocrats are the problem; the anti-tech lobbies are.

I prefer the A16Z essay on the end of postmodernism and its replacement by optimism and big thinking, especially predictive thinking.

The obvious AI overbuild will be productive if we pair it with human gains and measurable outcomes: GDP growth, fewer working hours and days, and greater freedom to choose how we spend our time. These are the outcomes it can make realistic.

What to watch next

The narrative about “round-trip” cash, which implies a suspect circulation of money among incumbents. It’s wrong, but the anti-tech mood of the times is fueling it.

Google’s tightrope: How much screen real estate do AI summaries take—and what’s the publisher payout model behind them?

Sora’s revenue share: Do granular character controls reduce friction or fragment rightsholders into stalemate?

Capacity vs. demand: Does token growth re‑accelerate—or does unit‑economics throttling persist into 2026 orders?

Open vs. closed: Can Reflection’s $2B open lab prove safety and governance at frontier scale—or does openness hit a hard wall?

Bottom line: OpenAI wants our attention. The price is gigawatts, defaults, and a settlement layer we can trust. The payoff is human progress. There are many possible futures, and that is one of them. If we end up with one big AI that owns the front door to knowledge and is good enough, then I am OK with it.

What would be left would be to figure out how to allocate the newfound wealth to the whole of society, and uplift the species, not only the few. Altman’s Worldcoin is one take on how to do that. At least he is thinking about it.

Essay

I’ve Seen How AI ‘Thinks.’ I Wish Everyone Could.

WSJ • October 9, 2025

Essay•AI•LLMs•Interpretability•Model Transparency

Thesis and Context

The piece argues that truly understanding how modern large language models operate requires direct engagement with their underlying math and data. Rather than treating AI as a mysterious oracle, hands‑on experimentation—probing token probabilities, examining embeddings, and inspecting how inputs map to outputs—reveals patterns that feel like “thinking,” while remaining grounded in statistics and optimization. This process is both energizing, because it demystifies impressive capabilities, and sobering, because it exposes brittleness, bias, and the limits of what these systems actually do.

What it Means to “See How AI Thinks”

At a practical level, “seeing” involves tracing the pipeline from text to tokens to vectors to attention operations, then back to tokens. Visualizing attention distributions shows which parts of a prompt the model uses to predict the next word. Exploring nearest neighbors in embedding space illustrates how concepts cluster, why analogies sometimes work, and where semantic gaps appear. Observing log‑probabilities clarifies that outputs are ranked choices, not certainties—making “confident” mistakes more legible. Iterating prompts with small, controlled changes highlights causal sensitivities, revealing when the model follows structure, mirrors style, or simply amplifies surface patterns.
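To make the attention step concrete, here is a minimal sketch of scaled dot-product attention for a single query, using the standard formulation softmax(q·k / √d). It is not taken from the WSJ piece: the tokens and the 4-dimensional query and key vectors are invented for illustration, whereas real models learn separate query/key projections across many heads and layers.

```python
import math

def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a list of keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

# Hypothetical key vectors for a toy prompt; a real model learns these.
tokens = ["The", "capital", "of", "France"]
keys = [
    [0.1, 0.0, 0.2, 0.1],
    [0.9, 0.3, 0.0, 0.4],
    [0.0, 0.1, 0.1, 0.0],
    [1.2, 0.8, 0.1, 0.5],
]
# Hypothetical query for the position that predicts the next word.
query = [1.0, 0.5, 0.0, 0.3]

weights = attention_weights(query, keys)
# Print tokens from most to least attended.
for tok, w in sorted(zip(tokens, weights), key=lambda p: -p[1]):
    print(f"{tok:>8s} {w:.3f}")
```

Plotting these weights for every position and head is what the attention visualizations described above do; the sketch only shows where the numbers come from.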

What Experimentation Reveals

Emergent behavior from scale can look like reasoning, yet often dissolves into heuristic pattern‑matching under stress tests.

Biases reflect training data distributions; targeted prompts can surface stereotypes or omissions that remain hidden in everyday use.

Chain‑of‑thought traces may improve answers by scaffolding intermediate steps, but can also fabricate plausible‑sounding rationales—useful for users, not proof of internal logic.

Tool use and retrieval augment accuracy by grounding outputs, yet introduce dependencies on external systems and curation.

Safety guardrails can conflict with usability; tuning for helpfulness, harmlessness, and honesty requires explicit trade‑offs that become visible through ablation and red‑teaming.

Why This Matters Beyond the Lab

The article contends that public literacy in AI mechanics is no longer optional. For workers, understanding uncertainty and failure modes informs when to trust, verify, or escalate. For educators, it reframes assignments, assessment, and academic integrity around process rather than product. For policymakers, it sharpens debates on transparency, data rights, and liability by anchoring them in how models generalize from training data. For builders, it guides model evaluation, dataset documentation, and product UX toward explainability that users can actually act on.

Practical Ways to Build Intuition

Inspect token probabilities and top‑k alternatives to learn where answers were fragile.

Use contrastive prompts (minimal pairs) to see which features—formatting, examples, or keywords—drive behavior.

Explore embeddings to understand topical coverage, gaps, and unintended associations.

Combine retrieval with prompts to separate model “knowledge” from grounded facts.

Keep an experiment log: inputs, settings, outputs, and interpretations to avoid anecdotal conclusions.
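The first and third suggestions above can be tried without model access using the sketch below, which turns a set of next-token logits into top-k probabilities and log-probabilities and ranks toy embeddings by cosine similarity. All logits and vectors here are hypothetical stand-ins; with a real model you would read them from whatever logprob or embedding output your provider exposes.

```python
import math

def top_k_logprobs(logits, k):
    """Return the k most likely tokens as (token, probability, log-probability).

    `logits` maps token -> raw score for a single next-token position.
    """
    m = max(logits.values())
    # Log-sum-exp gives a numerically stable normalizer.
    log_z = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    ranked = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)[:k]
    return [(tok, math.exp(v - log_z), v - log_z) for tok, v in ranked]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def nearest(word, table, n=2):
    """Rank the other entries in `table` by cosine similarity to `word`."""
    target = table[word]
    scored = [(w, cosine(target, v)) for w, v in table.items() if w != word]
    return sorted(scored, key=lambda p: p[1], reverse=True)[:n]

# Hypothetical logits after a prompt such as "The capital of France is".
logits = {"Paris": 9.1, "Lyon": 6.3, "a": 5.8, "the": 5.5, "Berlin": 3.0}
for tok, p, lp in top_k_logprobs(logits, 3):
    print(f"{tok:>6s}  p={p:.3f}  logp={lp:.2f}")

# Hypothetical 3-d embeddings; real ones have hundreds or thousands of dimensions.
emb = {
    "king":  [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
    "pear":  [0.2, 0.1, 0.8],
}
print(nearest("king", emb))
```

A small gap between the first and second log-probabilities marks exactly the kind of fragile answer the first suggestion asks you to look for.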

Limits and Responsible Interpretation

“Seeing how AI thinks” is a metaphor; these systems do not possess consciousness or intentions. Interpretability tools can over‑promise if treated as full explanations rather than lenses on complex functions. Dataset provenance, licensing, and privacy remain foundational concerns; experimentation should respect data rights and institutional policies. Ultimately, clarity comes from triangulating multiple views—probabilities, gradients, examples, and human judgment—rather than elevating any single metric or visualization.

Key Takeaways

Hands‑on probing of tokens, embeddings, and attention replaces mystique with measurable patterns.

Capabilities that resemble reasoning are often contextual heuristics; stress tests expose their limits.

Biases and failures trace back to data distributions and objectives; audits must center both.

Practical literacy—prompt design, uncertainty estimation, retrieval grounding—should be mainstream skills.

Interpretability is a tool for accountability and safer deployment, not a license to anthropomorphize models.

Implications

If more people engaged with the math and data of large language models, public discourse would move from hype and fear toward informed scrutiny. Products would ship with built‑in affordances for verification and feedback; regulation would target documentation and evaluation standards; and users would be empowered to demand models that are not just powerful, but legible and aligned with human goals.

Why AI Capex isn’t a Bubble: A Perez-ian Perspective

Paulkedrosky • October 9, 2025

Essay•AI•AI Infrastructure

Context and thesis

The piece argues that the current surge in AI capital expenditure—tens of billions flowing into GPUs, data centers, power, and software—should not be dismissed as a simple asset bubble. Instead, drawing on economist Carlota Perez’s framework of technological revolutions, the moment resembles a late-stage “installation” surge: a period when financial capital overshoots, infrastructure is rapidly built ahead of demand, and many investors ultimately lose money. Yet the societal payoff is the durable scaffolding that enables later productivity booms. The author frames the zeitgeist with wry quotations—Leibniz’s “best of all possible worlds,” the Fight Club narrator’s “You met me at a very strange time in my life,” and Dolly Parton’s “It costs a lot to look this cheap”—to capture how odd and costly this phase can feel even when it is productive.

Perez’s lens: installation surge and creative destruction

Perez’s 2002 book is used to explain why “bubble-like” behavior can be functional. In the installation phase, financial capital is attracted to a general-purpose technology (here, AI), overbuilds shared infrastructure ahead of proven cash flows, and then suffers a reckoning. The economic losses are real—projects fail, margins compress (e.g., GPU rentals), and assets can be stranded by geopolitical shocks (such as a hypothetical Taiwan crisis) or sentiment shifts. But the post-bust landscape retains valuable, underpriced infrastructure—compute, fiber, power, and software platforms—that becomes the foundation for the deployment phase when productivity gains diffuse across the economy.

Historical analogies underscore the logic of loss

1840s British railways: a boom ended in waves of bankruptcies. By the 1850s, the same “ruinous” networks unified markets, accelerated industrial transport, and bolstered logistics.

Late-1990s dot-com era: the parabolic rise and 2000 collapse pulverized equity holders, but left a trove of fiber, data centers, and networking capacity. Those assets enabled the 2000s internet economy—from Amazon and Netflix to training data-rich AI models—because bandwidth and compute became cheap and plentiful.

The pattern is “brutal but familiar”: capital overbuilds, capital is destroyed, and society keeps the rails—literal and figurative.

Why we overbuild—and why it’s okay

The author argues that efficient markets and rational planners cannot perfectly size foundational infrastructure for nascent technologies. “We never know if we’ve built enough until we’ve built too much.” Speculative fervor pushes installation ahead of monetization; this produces waste and “vast losses,” but those losses are the price of optionality and speed. Even stranded assets can be reallocated creatively—the essay jokes about currently “unkitted” AI data centers being repurposed for laser tag—until demand catches up. In this view, losses are not a failure of capitalism so much as the mechanism by which future markets are made. The Dolly Parton quip—“It costs a lot to look this cheap”—becomes an economic aphorism: society’s later “cheap” compute, bandwidth, and reliability are subsidized by today’s expensive capex and tomorrow’s write-downs.

Current risks and the “bubble in calling bubble”

The piece acknowledges frothy signals: soaring valuations, unprecedented hardware orders, and the possibility that demand could falter or geopolitics could disrupt supply chains. There is even a “bubble in people saying ‘bubble’,” reflecting both genuine concern and performative skepticism. Crucially, the author notes “at least two problems with this view,” indicating that Perez’s logic is not an all-purpose excuse for indiscriminate spending; the argument is a framing for understanding the cycle, not a guarantee of positive returns for every project or investor.

Implications

Expect volatility: Many AI infrastructure bets will fail, and margins (e.g., GPU rentals) could compress sharply as capacity comes online.

Don’t mistake capex losses for societal failure: The public good—cheap, abundant compute and connective tissue—often materializes post-bust.

Policy and planning should anticipate repurposing: Grid upgrades, data centers, and fiber can be reused; regulators and localities should streamline redeployment rather than fixate on initial project P&Ls.

Investment lens: Separate the health of individual balance sheets from the health of the platform. Long-duration winners tend to be those positioned to exploit the “cheap” post-overbuild infrastructure during the deployment phase.

Key takeaways

Overbuild is a feature of technological revolutions, not a bug; it accelerates the creation of shared infrastructure.

Historical precedents (railways, dot-com fiber/server farms) show how capital destruction seeds future productivity.

AI capex may end badly for many investors yet still be good for society, leaving a durable base for the next wave of AI-enabled applications.

The presence of risks—geopolitical, demand-side, sentiment—does not negate the Perez-ian logic; it defines the terrain on which the next phase will be built.

Prediction: the Successor to Postmodernism

Danco • October 10, 2025

Essay•AI•Prediction Markets

Thesis and Context

The piece argues that “Prediction” is the cultural and economic successor to postmodernism—a new meta-aesthetic that reorients how society interprets, creates, and participates in value. Where modernism prized unified national projects and postmodernism emphasized rendered experiences and combinatorics, the predictive era centers on timing, anticipatory action, and probabilistic engagement. Prediction markets exemplify this shift: they give society an intuitive unit of progress—akin to patents in the early 20th century—by turning diffuse information into tradable foresight and coordinating attention and resources around likely futures.

From Modernism to Postmodernism to Prediction

Modernism treated the world as the sum of coherent parts—nation-states, canonical science, singular narratives. Postmodernism emerged after the World Wars with a subjective, “rendered” reality: the front-of-house/back-of-house business model delivered personalized simulacra at scale, while logistics—the “Great Back End”—was abstracted away. Fredric Jameson captured this logic: aesthetic innovation became integral to commodity production, accelerating turnover and novelty. In business, “progress” gave way to “innovation,” reframing ambition as portfolios of risk. Peter Drucker’s line—“Innovation is… a new view of the universe, as one of risk rather than of chance or of certainty… man creates order by taking risks”—became the credo of venture-backed experimentation. Today, even “adoption” has subsumed innovation; the question is no longer how to engage, but when.

Cultural Evidence: Speculation as Aesthetic

The predictive turn shows up first in culture. Speculation has become a mainstream pastime—from meme stocks to HGTV’s house-flipping era—valorizing “make your own luck.” Social media mirrors brokerage apps: checking post performance feels like checking a stock price, binding identity to real-time marks. Friction, once a customer-experience sin, has become the point: sneaker drops, flash sales, and game mechanics reward anticipation, queuing, and temporal scarcity. The core felt sense of media feeds now is temporal positioning: are you predicting the wave, or is it predicting you? This diverges from the cozy, reproducible postmodern supermarket of experiences; the new ethos is, “I want to feel something, even if it hurts.”

What’s Old, What’s New in Media and Art

Several celebrated “futures” are actually final forms of the old. Infinite-scroll feeds may be the last newspaper; AI image generators the last postmodern visual art, perfecting “everyone gets their own rendering” just as that logic fades. The genuinely new form is public prediction itself—statements that age spectacularly well or badly, whose meaning accrues through time. Examples range from eerily prescient celebrity pairings resurfacing years later to the “prediction path screenshot” meme. NFTs and meme coins, inflammatory as they are, function as pure prediction-art: their meaning is when you touched them. Recasting Warhol, the author suggests the zeitgeist is no longer “15 minutes of fame” but “one 15-bagger.”

Business Models in the Predictive Era

Every era installs a native firm architecture. If modernism birthed vertically integrated giants and postmodernism perfected front/back-end simulacra, today’s architecture emphasizes public, time-stamped participation in evolving games. Crypto anticipated this by insisting “onchain” is neither front nor back end but a visible ownership and timing layer across content. Large language models then made “weights” a scarce, valuable asset: models don’t just predict tokens; they mediate advertising, product decisions, and cultural flows. In this world, “everyone has their own personalized parlay on the same series of reference events” ceases to be gambling and becomes life-operations: your sequence of interactions trains models and steers markets. As Matthew Prince puts it, “the way you’ll get paid on the internet now is by writing things that the AI does not yet know”—rewarding upstream novelty that improves prediction.

Implications and How to Play Offense

Value creation moves upstream toward information origination, curation, and timely participation; being early is part of the product.

Enterprises should design for anticipation and visible timing: drops, auctions, markets, and onchain logs that let customers accrue meaning through their entry point.

Metrics migrate from static engagement to predictive power: who improves the model, shifts priors, or sends the snowball down a new path.

Culture will privilege public calls, transparent wagers, and traceable edits—turning “adoption” into an open leaderboard of foresight rather than a closed UX funnel.

Key Takeaways

Prediction is the successor to postmodernism: timing, anticipation, and probabilistic participation become the core aesthetic and business logic.

Postmodernism’s service simulacra yield to market-shaped timelines where friction and sequence confer meaning.

New “art” is public prediction and its receipts; crypto and meme assets demonstrate ownership-and-timing as meaning.

LLMs and model weights institutionalize prediction as infrastructure, incentivizing content that shifts priors and trains systems.

Strategy: build products that let users be predictive of the game, not predicted by it—make when you engage as valuable as what you consume.

Echoes of Control: How AI Revives the Technocratic Dream

Norman Lewis • Substack • October 10, 2025

Essay•AI•Technocracy•EpistemicCrisis•LLMs

Thesis

The essay argues that AI—especially generative AI—is not a civilizational rupture but an “algorithmic mirror” reflecting today’s deeper epistemic crisis: the collapse of shared meanings and trusted authorities. Both apocalyptic and utopian narratives about AI obscure how it re-legitimizes technocratic rule by privileging measurable outputs over processes of judgment, contestation, and meaning-making. The real risk is not machine domination but human abdication of responsibility to systems we mistake for intelligence.

From Technology to Technocracy

Drawing on a lineage from Karl Marx’s observation that technologies crystallize their eras (“the hand-mill… the steam-mill…”) to Langdon Winner’s provocation that “artifacts have politics,” the piece situates AI as the latest embodiment of managerial, technocratic logic rather than a neutral tool. In societies where religious, scientific, and political institutions once converged to provide epistemic authority, that consensus has fractured. As Frank Furedi notes, we now face “the absence of a recognised form of epistemic authority,” leaving an interpretive vacuum that AI fills with the promise of efficient, politically “neutral” outcomes.

The Epistemic Crisis: Information Without Meaning

The essay contends that our core problem is not human-versus-machine intelligence but the erosion of the distinctions between information, knowledge, judgment, and wisdom. Social media did not originate this problem; it exposed and accelerates it. In this context, AI functions as a technology of simulation—“thought without thinking,” “care without caring”—producing texts and images detached from intention, experience, and moral reasoning. Richard Susskind’s “outcomes over process” captures the technocratic turn: when meaning is contested, institutions gravitate to systems that optimize for measurable results rather than deliberative legitimacy.

Echoes, Not Thoughts

Generative models, the essay insists, are sophisticated echoes bounded by past data and prompts. Citing Anil Ananthaswamy’s caution that such systems cannot truly generalize beyond their training distributions, the author emphasizes that LLMs lack intentionality or “aboutness.” A pull-quote underscores the human hallmark: “The unique mark of intelligence is the ability to reason and infer in the face of incomplete information.” LLMs “recall without remembering,” remixing forms without subjective experience. Treating these outputs as genuine cognition conflates statistical mimicry with understanding and tempts us to outsource judgment to processes we mistake for authority.

Against the Superintelligence Fantasy

The essay reframes superintelligence as a category error: it presupposes intelligence that current systems do not possess. “Predicting the emergence of superintelligence from current AI is like predicting that louder echoes will eventually learn to sing.” The danger isn’t that such a future will arrive, but that belief in it legitimizes machine determinism and weakens democratic agency. As Sandy Starr argues in relation to the Turing Test, if we fail to distinguish ourselves from machines, it is we who fail—not the machines who pass.

Re-legitimating Technocratic Authority

AI’s appeal to governments and corporations lies in its promise to bypass contested meaning with scalable, auditable outputs: “information without debate, efficiency without friction.” Yet this re-legitimation deepens the crisis it claims to solve—amplifying confusion between data and knowledge, rewarding outcome metrics over process, and encouraging deference to those who control the “echo chambers.” The essay calls for reasserting human capacities—judgment, imagination, and responsibility—over outcome-driven logics that flatten civic deliberation.

Implications and What To Do

Restore the hierarchy: differentiate data from knowledge, process from outcome, efficiency from legitimacy.

Re-center democratic deliberation: resist outsourcing normative choices to optimization functions.

Treat AI as instrument, not authority: valuable as a tool for human goals, not a substitute for meaning.

Cultivate epistemic resilience: rebuild institutions and practices that sustain shared inquiry, contestation, and trust.

Key Takeaways

AI mirrors and magnifies an existing epistemic crisis rather than solving it.

Technocratic “outcome thinking” gains false legitimacy from AI’s measurable outputs.

Generative models are “echoes” lacking intentionality; they simulate, they do not understand.

The superintelligence narrative disempowers citizens by naturalizing machine rule.

The antidote is political and cultural: revive human judgment and meaningful processes so AI is subordinated to human purposes.

AI

Sam Altman has a new project: building AI Inc

FT • October 6, 2025

AI•Funding•Open AI•AMD•Stargate

Overview

The piece argues that Sam Altman is stitching together “AI Inc”: a sprawling web of capital commitments, supply agreements, and cross‑incentives that fuses model developers, chipmakers, cloud providers, and data‑center builders into a single, mutually dependent ecosystem. Rather than a classic conglomerate, this is an interlocking network whose ties are structured through warrants, offtake contracts, preferred‑supplier status, and co‑investment—spreading risk, accelerating build‑out, and making OpenAI’s success a shared priority for partners. The strategy’s latest cornerstone is OpenAI’s multiyear pact with AMD to deploy 6 gigawatts (GW) of Instinct GPUs starting in 2H 2026, paired with a warrant giving OpenAI the right to buy up to 160 million AMD shares (roughly 10%) as deployment and share‑price milestones are hit. (amd.com)

The new corporate web: chips, cloud, and concrete

Chips: Alongside AMD, OpenAI has a letter of intent with Nvidia to build at least 10 GW of datacenter capacity; Nvidia “intends to invest up to $100 billion” in OpenAI as each gigawatt comes online, with first systems targeted for 2H 2026 on the Vera Rubin platform. Nvidia’s Jensen Huang called it “the next leap forward — deploying 10 gigawatts to power the next era of intelligence.” (investor.nvidia.com)

Cloud: OpenAI has reportedly agreed to purchase about $300 billion of compute from Oracle over roughly five years starting in 2027, equivalent to about 4.5 GW—one of the largest cloud contracts ever discussed publicly. (cnbc.com)

Concrete (Stargate): OpenAI, Oracle, and SoftBank are building out Stargate, a U.S. AI‑infrastructure platform targeting $500 billion and 10 GW. Recent site announcements bring planned capacity to nearly 7 GW and more than $400 billion of investment in the next three years, with Abilene, TX as the flagship campus. Altman framed the rationale simply: “AI can only fulfill its promise if we build the compute to power it.” (openai.com)

Custom silicon: In parallel, OpenAI is co‑designing chips with Broadcom for internal use starting in 2026—reducing single‑supplier exposure and improving cost/performance on inference. (investing.com)
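The headline numbers can be normalized to dollars per gigawatt by simple division. This back-of-the-envelope sketch uses only the figures quoted above; it assumes Nvidia’s “up to $100 billion” is spread evenly across the 10 GW (the source says the money arrives as each gigawatt comes online, not that tranches are equal), and it treats the reported Oracle figures as exact.

```python
# Headline figures from the summaries above: (billions of USD, gigawatts).
# "Up to", "about" and "reportedly" qualifiers mean these are ceilings or estimates.
deals = {
    "Nvidia investment in OpenAI (up to)": (100, 10),
    "OpenAI compute purchase from Oracle (reported)": (300, 4.5),
    "Stargate program target": (500, 10),
}

def per_gw(usd_bn, gw):
    """Billions of dollars per gigawatt of capacity."""
    return usd_bn / gw

for name, (usd_bn, gw) in deals.items():
    # ASSUMPTION: each amount is spread evenly across its gigawatts.
    print(f"{name:<48} ${per_gw(usd_bn, gw):5.1f}B per GW")

# The Oracle contract reportedly runs about five years.
print(f"Oracle spend per year: ~${300 / 5:.0f}B")
```

The three ratios measure different flows: Nvidia’s money goes into OpenAI, the Oracle money comes out of OpenAI, and Stargate is a total build budget. They show scale, not comparable unit costs.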

Financial engineering as strategic glue

The AMD agreement illustrates how Altman aligns incentives: OpenAI’s warrant in AMD vests as it deploys 1–6 GW of AMD GPUs and as AMD meets escalating share‑price thresholds, letting OpenAI benefit from AMD’s upside while securing capacity. AMD describes the pact as delivering “tens of billions of dollars” in revenue and being “highly accretive” to EPS. Lisa Su said the partnership “brings the best of AMD and OpenAI together to create a true win‑win.” Markets agreed, sending AMD up more than 20% on the news. (amd.com)
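The dual-gate structure described above, with deployment milestones and share-price thresholds, can be modeled as tranches that vest only when both conditions hold. This is a hypothetical sketch: the six equal tranches, the one-tranche-per-gigawatt mapping, the base price and the price multipliers are all invented for illustration. Only the 160 million share cap and the 1 to 6 GW deployment range come from the reporting.

```python
from dataclasses import dataclass

@dataclass
class Tranche:
    shares_m: float   # millions of AMD shares in this tranche
    min_gw: int       # cumulative GW deployed before it can vest (hypothetical)
    min_price: float  # share-price threshold in USD (hypothetical)

def vested_shares(tranches, deployed_gw, share_price):
    """Millions of shares vested: a tranche counts only if BOTH gates are met."""
    return sum(t.shares_m for t in tranches
               if deployed_gw >= t.min_gw and share_price >= t.min_price)

# HYPOTHETICAL schedule: the reported 160M-share cap split into six equal
# tranches, one per GW from 1 to 6, with invented escalating price gates
# expressed as multiples of an invented base price.
BASE_PRICE = 100.0
MULTIPLIERS = [1.0, 1.25, 1.5, 2.0, 2.5, 3.0]
schedule = [Tranche(160 / 6, gw, BASE_PRICE * m)
            for gw, m in zip(range(1, 7), MULTIPLIERS)]

for gw, price in [(1, 90.0), (2, 150.0), (6, 150.0), (6, 300.0)]:
    print(f"{gw} GW at ${price:.0f}: {vested_shares(schedule, gw, price):.1f}M shares vested")
```

The point of the design is visible in the last two rows: deploying all 6 GW is not enough on its own, because the price gates also have to clear, so OpenAI’s payoff depends on AMD’s stock doing well.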

Nvidia’s planned staged investment creates similar alignment—funding the very infrastructure that will consume Nvidia systems, and tying OpenAI’s roadmap to Nvidia’s platform cadence. Huang underscored the scale and intent; Altman emphasized that “everything starts with compute,” positioning infrastructure as the foundation of future economic growth. (investor.nvidia.com)

Why this matters

Industrial policy by private contract: These megadeals effectively coordinate a national (and allied) build‑out of power‑hungry AI datacenters without a single corporate owner—akin to keiretsu‑style interlocks where suppliers and customers share financial exposure.

Capital intensity as moat: With OpenAI valued around $500 billion after a recent secondary and still burning cash to scale, the web of pre‑committed supply and co‑investment raises barriers to entry for smaller rivals while accelerating time‑to‑capacity. (reuters.com)

Diversification and resiliency: Multi‑sourcing (Nvidia, AMD, and custom Broadcom silicon) plus multi‑cloud (Oracle alongside existing hyperscale partners) hedge technical and geopolitical risks—and give OpenAI leverage in price and priority allocation. (amd.com)

Risks and fault lines

Systemic concentration: Circular dependencies—vendors investing in a top customer whose spend returns to those vendors—invite comparisons to past speculative build‑outs; a shock in one node (chips, energy, financing) could propagate quickly.

Antitrust and procurement scrutiny: Warrants, exclusivities, and preferred‑supplier terms across market leaders may draw regulatory attention if rivals argue foreclosure or discriminatory access to cutting‑edge compute.

Execution bottlenecks: Delivering 10+ GW by 2026–2027 requires breakthroughs in siting, power procurement, HBM supply, advanced packaging, and grid interconnect timelines—areas historically measured in years, not quarters. (investor.nvidia.com)
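The concentration risk in the first bullet can be illustrated with a toy dependency graph: treat each firm as a node, draw an edge from X to Y when a shock at X plausibly reaches Y, and run a breadth-first search from the shocked node. The edges below are a rough, assumed reading of the ties described in this piece, and the model ignores exposure size, contract terms and balance-sheet buffers, so it shows reachability only, not severity.

```python
from collections import deque

# Toy "who is exposed if X is shocked" graph, a rough reading of the ties above.
# Edge X -> Y means a shock at X plausibly reaches Y. Directions are assumptions.
exposed_to = {
    "OpenAI":   ["Nvidia", "AMD", "Oracle", "Broadcom", "Stargate"],  # its spend is their revenue
    "Nvidia":   ["OpenAI"],              # GPU supplier
    "AMD":      ["OpenAI"],              # GPU supplier
    "Broadcom": ["OpenAI"],              # custom silicon partner
    "Oracle":   ["OpenAI", "Stargate"],  # cloud capacity and Stargate partner
    "SoftBank": ["Stargate"],            # Stargate partner
    "Stargate": ["OpenAI"],              # datacenter capacity
}

def propagate(graph, start):
    """Breadth-first search: hop count from `start` to every node a shock can reach."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in hops:
                hops[nxt] = hops[node] + 1
                queue.append(nxt)
    return hops

print(propagate(exposed_to, "SoftBank"))
```

Because OpenAI sits at the hub, a shock entering almost anywhere reaches every other node within three hops, which is the circularity the bullet warns about.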

Key takeaways

OpenAI’s AMD deal (6 GW plus up to 10% equity via warrants) formalizes a second major chip lane beyond Nvidia and tightly couples partner incentives. (amd.com)

Nvidia’s LOI (10 GW; up to $100B intended investment) and Oracle’s reported $300B compute contract anchor the capital stack for Altman’s “AI Inc.” build‑out. (investor.nvidia.com)

Stargate’s U.S. campus network is the physical manifestation of this strategy—turning multi‑year offtakes and staged investments into power, racks, and jobs at national scale. (openai.com)

The model trades corporate control for velocity and shared risk; its success will hinge on execution across chips, power, and policy over the next 24–36 months.

OpenAI Looks to Take 10 Percent Stake in AMD Through AI Chip Deal

CNBC • John Gruber • October 6, 2025

AI•Funding•OpenAI•AMD•GPUs

Key Points

OpenAI and AMD have reached a deal that could see Sam Altman’s company take a 10% stake in the chipmaker.

OpenAI will deploy up to 6 gigawatts of AMD Instinct GPUs over multiple years, beginning with a 1-gigawatt rollout in 2026.

AMD issued OpenAI a warrant for up to 160 million shares, with vesting tied to deployment and share price milestones.

AMD stock skyrockets 35% as OpenAI looks to take stake through AI chip deal

OpenAI and Advanced Micro Devices have reached a deal that could see Sam Altman’s company take a 10% stake in the chipmaker.

AMD stock skyrocketed 23.71% on Monday following the news.

OpenAI will deploy 6 gigawatts of AMD’s Instinct graphics processing units over multiple years and across multiple generations of hardware, the companies said Monday. It will kick off with an initial 1-gigawatt rollout of chips in the second half of 2026.

“We have to do this,” OpenAI President Greg Brockman told CNBC’s “Squawk on the Street.” “This is so core to our mission if we really want to be able to scale to reach all of humanity, this is what we have to do.”

Brockman added that the company is already unable to launch many features in ChatGPT and other products that could generate revenue because of the lack of compute power.

As part of the tie-up, AMD has issued OpenAI a warrant for up to 160 million shares of AMD common stock, with vesting milestones tied to both deployment volume and AMD’s share price.
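A rough check on the “up to 10%” framing: if all 160 million warrant shares vested and were exercised, new shares would be issued, so the stake is the warrant divided by the enlarged share count. The AMD share count below (about 1.62 billion) is an assumption for illustration, not a figure from the article, and the deployment and share-price vesting milestones are not modeled.

```python
def fully_diluted_stake(warrant_shares: float, shares_outstanding: float) -> float:
    """Fraction owned after every warrant share is exercised.

    Exercise creates new shares, so they are added to the denominator.
    """
    return warrant_shares / (shares_outstanding + warrant_shares)


WARRANT_SHARES = 160e6       # from the announcement: up to 160 million shares
ASSUMED_AMD_SHARES = 1.62e9  # ASSUMPTION: approximate existing AMD share count

stake = fully_diluted_stake(WARRANT_SHARES, ASSUMED_AMD_SHARES)
naive = WARRANT_SHARES / ASSUMED_AMD_SHARES  # ignores the newly issued shares

print(f"Fully diluted stake: {stake:.1%}")
print(f"Naive ratio (no dilution): {naive:.1%}")
```

Under this assumed share count both ratios land under 10%; a different share count would shift the result.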

Is Token Consumption Growth Slowing Down?

Tomtunguz • October 9, 2025

AI•Data•Google•Token Consumption•Data Centers

Philipp Schmid dropped an astounding figure yesterday about Google’s AI scale: 1,300 trillion tokens per month (1.3 quadrillion - first time I’ve ever used that unit!).

Now that we have three data points on Google’s token processing, we can chart the progress.

In May, Google announced at I/O they were processing 480 trillion monthly tokens across their surfaces. Two months later in July, they announced that number had doubled to 980 trillion. Now, it’s up to 1300 trillion.

The absolute numbers are staggering. But could growth be decelerating?

Between May & July, Google added 250T tokens per month. In the more recent period, that number fell to 107T tokens per month.
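The monthly additions quoted above can be reproduced from the three data points. The observation dates are assumptions: the post gives only months (May, July, and roughly October, since it is dated October 9), and a three-month gap after July is consistent with the 107T figure. The compound monthly growth rate is an extra lens, not something the original post computes.

```python
from datetime import date

# Monthly token volumes in trillions, as reported in the post.
# ASSUMPTION: each figure is pinned to the first of its month.
observations = [
    (date(2025, 5, 1), 480),
    (date(2025, 7, 1), 980),
    (date(2025, 10, 1), 1300),
]


def months_between(start: date, end: date) -> int:
    return (end.year - start.year) * 12 + (end.month - start.month)


def growth_by_period(points):
    """Yield (months, tokens added per month, compound monthly growth) per interval."""
    for (d0, v0), (d1, v1) in zip(points, points[1:]):
        months = months_between(d0, d1)
        added_per_month = (v1 - v0) / months
        compound_rate = (v1 / v0) ** (1 / months) - 1
        yield months, added_per_month, compound_rate


for months, added, rate in growth_by_period(observations):
    print(f"{months} months: +{added:.0f}T/month, {rate:.1%} compound monthly growth")
```

The added-per-month figures come out to 250T (500T over two months) and about 107T (320T over three months).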

This raises more questions than it answers. What could be driving the decreased growth? Some hypotheses:

Google may be rate-limiting AI for free users because of unit economics.

Google may be limited by data center availability. There may not be enough GPUs to continue to grow at these rates. The company has said it would be capacity constrained through Q4 2025 in earnings calls this year.

Google combines internal & external AI token processing. The ratio might have changed.

Google may be driving significant efficiencies with algorithmic improvements, better caching, or other advances that reduce the total amount of tokens.

I wasn’t able to find any other comparable time series from neoclouds or hyperscalers to draw broader conclusions. These data points from Google are among the few we can track.

Data center investment is scaling towards $400 billion this year. Meanwhile, incumbents are striking strategic deals in the tens of billions, raising questions about circular financing & demand sustainability.

How Google Is Walking the AI Tightrope

WSJ • October 11, 2025

AI•Tech•Google•Search•GenerativeAI

Core argument

The piece contends that the tech giant is “trying to have it both ways” with its search business—projecting confidence that search remains healthy and indispensable while simultaneously pivoting to AI-driven experiences that could upend how results are produced, consumed, and monetized. The balancing act is to showcase leadership in generative AI without eroding the very behaviors, partner ecosystems, and ad models that have powered search for decades.

Dual narrative in practice

Innovation story: Emphasizes rapid integration of generative features to keep users engaged and demonstrate product velocity.

Continuity story: Reassures that core search intent, reliability, and commercial performance remain intact, even as interfaces and result compositions evolve.

Risk framing: Positions AI as additive—improving relevance and user satisfaction—rather than as a substitute that cannibalizes queries, clicks, and downstream traffic.

Strategic tensions

User experience vs. monetization: Rich, synthesized answers can reduce the need to click, pressuring ad inventory and partner referrals, yet they may also raise user satisfaction and retention.

Openness vs. control: Curating AI outputs at scale requires tight guardrails, even as the company courts developers and publishers who want transparency and agency.

Speed vs. trust: Moving quickly on AI features competes with the need to maintain quality, reliability, and brand safety—especially in high-stakes queries.

Platform leadership vs. ecosystem health: Elevating in-product answers can marginalize third-party content sources that historically supplied authoritative information to searchers.

Operational implications

Product metrics could shift from click-through rates toward measures of “answer utility,” session satisfaction, and multi-step task completion.

Advertising may migrate toward new creative formats and placements integrated within AI summaries, requiring revised measurement, attribution, and auction dynamics.

Publisher relationships will hinge on traffic guarantees, clearer attribution in AI outputs, and revenue-sharing constructs that preserve incentives to produce high-quality content.

What to watch next

Changes to result page design that signal how prominently AI summaries appear relative to traditional links and ads.

Evidence of query displacement (fewer follow-up clicks) versus query expansion (more exploratory sessions).

New commercial models aligning AI answers with advertiser goals and publisher sustainability.

Policy and compliance guardrails as synthesized results intersect with consumer protection, copyright, and competition concerns.

Bottom line

The strategy seeks to keep search indispensable while making AI unavoidable—an attempt to modernize the experience without undermining the economic engine that funds it.

Sam Altman on Sora, Energy, and Building an AI Empire

YouTube • a16z • October 8, 2025

AI•Tech•Sora

Overview

A wide-ranging conversation covering OpenAI’s video model Sora, the compute-and-energy constraints behind modern AI, and the playbook for scaling an AI-first company from product to platform. The discussion links technical progress with operational realities: data, training efficiency, energy procurement, partnerships, safety, and distribution. The throughline is that next-gen AI requires simultaneously advancing model quality, user experience, and the physical and financial infrastructure that makes it run at global scale.

Sora and the product roadmap

Frames generative video as a new creative primitive, not just a feature—emphasizing controllability, editing tools, and integration with existing creative workflows.

Highlights rapid iteration cycles: improvements in temporal coherence, physics, and multi-shot/story control, plus tighter promptability and red-teaming guardrails.

Envisions Sora as both a consumer creation app and a developer platform with APIs that plug into media, marketing, and enterprise content pipelines.

Compute, energy, and the infrastructure stack

Argues that the bottleneck for frontier models is shifting from algorithms to energy- and compute-supply, requiring long-term capacity planning.

Describes a layered strategy: model efficiency (training/inference), specialized hardware and data-center design, and diversified energy procurement to stabilize costs and availability.

Positions low-carbon baseload (including advanced nuclear and firmed renewables) as strategically important for predictable inference economics over multi-year horizons.

Building an AI company into a platform

Product-led growth anchored by a breakout application (e.g., Sora) that seeds a developer ecosystem, third-party tools, and enterprise integrations.

Deep partnerships across the stack—cloud, silicon, and energy—to secure capacity, derisk supply chains, and align incentives for long-term scaling.

Distribution loops: user-generated content drives virality; APIs and enterprise features drive retention and revenue diversification.

Culture and org design: small, fast, and safety-conscious teams; aggressive red-teaming; shipping guardrails with features rather than as afterthoughts.

Safety, provenance, and policy

Emphasis on usage policies, watermarking/provenance signals, and consent frameworks for likeness/voice to reduce misuse while preserving creative potential.

Acceptance that safety is an ongoing systems problem—tooling, audits, rate limits, and model-level mitigations evolve with new capabilities and adversarial behavior.

Implications

Creative industries see lower concept-to-canvas friction; agencies and studios retool around prompt-engineering, editing, and post-production orchestration.

Enterprises adopt video-generation for training, support, and marketing, contingent on policy compliance and asset provenance.

Macro takeaway: winners will pair product velocity with control over compute and energy inputs, translating technical advances into durable advantages.

Key takeaways

Video is becoming a first-class AI modality, demanding better control, safety, and integration.

Sustainable scale hinges on efficiency plus secured compute and energy supply.

Platform status comes from coupling consumer breakout products with robust APIs, partnerships, and governance.

Sora, AI Bicycles, and Meta Disruption

Stratechery • Ben Thompson • October 6, 2025

AI•Tech•Sora•Generative Video•Meta

Overview

Sora’s breakout moment underscores a broader shift: generative AI that lowers the cost of creation is reaching mainstream cultural consciousness. The core idea is straightforward yet disruptive: when tools transform text prompts into rich media, they unlock dormant creativity at scale. That dynamic benefits society by amplifying individual expression and expanding the supply of stories, ads, education, and entertainment. At the same time, it threatens incumbents whose power is concentrated in distribution and network effects—particularly platforms like Meta—because the locus of value moves upstream to creation and the models that enable it.

Why virality matters

Virality signals product-market fit for creativity: ordinary users can produce compelling content with minimal skill ramp, compressing the gap between idea and artifact.

Lower friction → more experiments: when iteration cycles shrink from days to minutes, creators test more concepts, discover new aesthetics, and find audiences faster.

Cultural flywheel: shareable outputs catalyze more prompts, remixes, and formats, pulling new users into the creative loop and accelerating capability discovery.

Good for humanity: expanding the creative frontier

Democratized authorship: anyone can storyboard, direct, and “film” scenes without budgets, crews, or gear, expanding who gets to make things.

New learning pathways: educators and students can prototype visuals and narratives to explore complex topics, shifting from passive consumption to active synthesis.

Economic inclusion: small businesses and solo creators gain production values previously reserved for studios, unlocking more competitive marketing and storytelling.

Idea velocity: when expression costs fall, more ideas see daylight. Even if many are mediocre, the aggregate yield of high-quality creations rises.

Bad for Meta: erosion of incumbent advantages

From feed to foundry: if the creative act becomes the center of gravity, value accrues to the model/provider that enables creation rather than the social graph that distributes it. Meta’s historical strength—attention capture via feeds—faces pressure as users spend more time in prompt-and-edit workflows outside Meta’s apps.

Commoditizing distribution: as creators export and cross-post AI-native media everywhere, distribution looks interchangeable. That weakens walled gardens and ad targeting moats built on proprietary engagement data.

Shifts in ad economics: brand and performance advertisers may prioritize rapid in-house generation over buying reach alone, reducing dependence on platform-native creative tools and formats.

Platform risk: if the most compelling AI outputs originate elsewhere, Meta must either build best-in-class models, broker deep integrations, or become a follower in a market that rewards cutting-edge generation quality.

Strategic implications

Integration vs. independence: platforms that seamlessly integrate creation (generation, editing, rights management) with distribution can recapture value. Otherwise, standalone creative tools will siphon attention and loyalty.

New discovery mechanisms: as AI-native content floods channels, curation and authenticity signals (watermarking, provenance, taste layers) become differentiators. Recommendation quality must adapt to higher volume and stylistic diversity.

Workflow gravity: creators will coalesce around tools that offer fast iteration, controllability, and asset reuse. Features like style continuity, character persistence, and prompt versioning will shape lock-in more than follower counts.

Policy and trust: the more powerful the tools, the greater the need for safety rails, disclosure norms, and content provenance—areas where platforms can either lead or lag.

Key takeaways

Sora’s virality is a proxy for a step-change in accessible creativity.

Creation-centric value threatens distribution-centric incumbents, particularly Meta’s feed-driven model.

The winners will be those who compress the loop from idea to audience—owning generation quality, editability, and cross-platform portability.

For society, lower creative barriers expand participation, education, and entrepreneurial opportunity, even as moderation, provenance, and discovery challenges grow.

Sora update #1

Sam Altman • October 3, 2025

AI•Tech•Sora

We have been learning quickly from how people are using Sora and taking feedback from users, rightsholders, and other interested groups. We of course spent a lot of time discussing this before launch, but now that we have a product out we can do more than just theorize.

We are going to make two changes soon (and many more to come).

First, we will give rightsholders more granular control over generation of characters, similar to the opt-in model for likeness but with additional controls.

We are hearing from a lot of rightsholders who are very excited for this new kind of “interactive fan fiction” and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all). We assume different people will try very different approaches and will figure out what works for them. But we want to apply the same standard towards everyone, and let rightsholders decide how to proceed (our aim of course is to make it so compelling that many people want to). There may be some edge cases of generations that get through that shouldn’t, and getting our stack to work well will take some iteration.

In particular, we’d like to acknowledge the remarkable creative output of Japan--we are struck by how deep the connection between users and Japanese content is!

Second, we are going to have to somehow make money for video generation. People are generating much more than we expected per user, and a lot of videos are being generated for very small audiences. We are going to try sharing some of this revenue with rightsholders who want their characters generated by users. The exact model will take some trial and error to figure out, but we plan to start very soon. Our hope is that the new kind of engagement is even more valuable than the revenue share, but of course we want both to be valuable.

Please expect a very high rate of change from us; it reminds me of the early days of ChatGPT. We will make some good decisions and some missteps, but we will take feedback and try to fix the missteps very quickly. We plan to do our iteration on different approaches in Sora, but then apply it consistently across our products.
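The first change Altman describes, opt-in control over characters with finer-grained rules (including refusing use entirely), amounts to a default-deny permission check applied to everyone under the same standard. A minimal sketch, with every name hypothetical; this is not OpenAI's implementation, and real enforcement would have to classify prompts and outputs rather than receive neat theme tags.

```python
from dataclasses import dataclass, field
from enum import Enum


class Permission(Enum):
    BLOCKED = "blocked"                        # not opted in, or "not at all"
    ALLOWED = "allowed"                        # any use permitted
    ALLOWED_WITH_RULES = "allowed_with_rules"  # permitted except for listed themes


@dataclass
class CharacterPolicy:
    # Opt-in: a character is blocked unless its rightsholder says otherwise.
    permission: Permission = Permission.BLOCKED
    banned_themes: frozenset[str] = field(default_factory=frozenset)


def may_generate(policies: dict[str, CharacterPolicy], character: str, themes: set[str]) -> bool:
    """Apply one standard to every character; unknown characters fall back to BLOCKED."""
    policy = policies.get(character, CharacterPolicy())
    if policy.permission is Permission.BLOCKED:
        return False
    if policy.permission is Permission.ALLOWED_WITH_RULES:
        return policy.banned_themes.isdisjoint(themes)
    return True


# Hypothetical registry: character names and themes are placeholders.
policies = {
    "character_a": CharacterPolicy(Permission.ALLOWED),
    "character_b": CharacterPolicy(Permission.ALLOWED_WITH_RULES, frozenset({"violence"})),
}

print(may_generate(policies, "character_a", {"comedy"}))
print(may_generate(policies, "character_b", {"violence"}))
print(may_generate(policies, "character_c", {"comedy"}))
```

Defaulting to `BLOCKED` is what makes the scheme opt-in: a rightsholder who never registers a policy gets no generations.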

The AI capex endgame is approaching

FT • October 2, 2025

AI•Funding•Hyperscalers•Capex•EU AI Act

What’s happening

The piece argues that the “capex endgame” for AI is drawing closer: hyperscalers have pushed spending, valuations, and inter-company deals to levels that resemble late‑cycle dynamics in past tech buildouts. The risk is not that AI “fails,” but that capex overshoots demand, leaving suppliers exposed if buyers retrench. The comparison is to prior infrastructure booms that ended when overcapacity, regulation, or buyer budgets collided with optimistic forecasts. (ft.com)

How we got here: a historic capex surge

Citi now projects AI infrastructure spending to exceed $2.8 trillion by 2029, with capex around $490 billion in 2026 and an added 55GW of power needed by 2030; roughly half of all spend could land in the U.S. (reuters.com)

Individual plans are eye‑watering: Alphabet flagged roughly $75 billion of 2025 capex “primarily for servers” and data centers; Meta guided to $60–65 billion and says it will end 2025 with more than 1.3 million GPUs and about 1 GW of incremental compute online. Nvidia, for its part, maintains the boom is early, with total AI infrastructure spend reaching $3–4 trillion by 2030. (cnbc.com)

“Neocloud” and multi‑party arrangements are multiplying. Meta agreed to a multi‑year, $14.2 billion AI infrastructure deal with CoreWeave, emblematic of the circular, interdependent contracts tying model developers, clouds, and chip suppliers together. (reuters.com)

Stress points: funding, power, regulation, and efficiency competition

Funding mix is tilting toward debt as free cash flow gets absorbed by data center buildouts; Oracle’s recent $18 billion multi‑tranche bond sale underscores the rising use of leverage to finance AI capacity. If borrowing costs or credit appetite worsen, capex could slow abruptly. (investing.com)

Power remains a hard constraint. Citi’s 55GW estimate implies massive grid upgrades and siting challenges this decade; localized energy strain could force project deferrals or reprioritization. (reuters.com)

Europe’s AI Act is phasing in, with prohibited uses already in effect (from February 2, 2025), obligations for general‑purpose AI models from August 2, 2025, and most other provisions on August 2, 2026; fines can reach 7% of global turnover. Compliance costs and uncertainty could weigh on rollouts, especially in regulated sectors. (digital-strategy.ec.europa.eu)

Efficiency‑first challengers intensify price pressure. China’s DeepSeek continues to push lower‑cost, more efficient model variants and has cut API prices, a trend that could compress monetization even as compute needs climb. If cheaper alternatives meet “good‑enough” thresholds, hyperscaler ROI math tightens. (reuters.com)
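The AI Act phase-in dates and fine ceiling mentioned above lend themselves to a small lookup. The milestone labels follow the article's summary rather than the Act's full text. The EUR 35 million floor on the top penalty tier (applied alongside the 7% figure, whichever is higher) comes from the Act itself, not the article; SME-specific treatment and lower penalty tiers are not modeled.

```python
from datetime import date

# Phase-in milestones as summarized in the article.
MILESTONES = [
    (date(2025, 2, 2), "prohibited uses"),
    (date(2025, 8, 2), "general-purpose AI model obligations"),
    (date(2026, 8, 2), "most other provisions"),
]


def obligations_in_force(on: date) -> list[str]:
    """Return the milestone labels whose start date has been reached."""
    return [label for start, label in MILESTONES if on >= start]


def max_top_tier_fine_eur(worldwide_turnover_eur: float) -> float:
    """Ceiling for the most serious breaches: 7% of turnover or EUR 35M, whichever is higher.

    SME-specific rules and lower penalty tiers are not modeled.
    """
    return max(0.07 * worldwide_turnover_eur, 35_000_000)


print(obligations_in_force(date(2025, 10, 2)))
print(f"EUR {max_top_tier_fine_eur(300e9):,.0f}")  # hypothetical EUR 300B turnover
```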

What the ‘endgame’ looks like

Historically, tech capex booms don’t end because the technology is a mirage; they end when supply outruns paying demand or rule changes alter economics. The scenario to watch is a buyer pullback: if a few hyperscalers temper 2026–2027 build plans, orders to chipmakers, equipment vendors, and “neoclouds” can fall faster than these firms can reduce fixed costs, pressuring revenues and cash burn. The article frames this as a classic late‑cycle risk where vendor financing, partner prepayments, and interlocking deals amplify the unwind once growth assumptions reset. (ft.com)

After the bust: productive residue vs. systemic risk

Drawing on William Janeway’s “productive bubbles,” equity‑funded tech manias can still seed enduring public goods: cheap fiber after 2000, and potentially an AI‑ready compute, power, and networking footprint now. As Janeway puts it, “bubbles have been necessary drivers of economic progress,” with equity bubbles typically less systemically dangerous than credit‑led ones—though late investors often incur heavy losses. That history suggests today’s overbuild, if it comes, could leave valuable infrastructure even as valuations reset. (tandfonline.com)

Investor implications

Upstream beneficiaries (GPUs, networking, power, specialty clouds) remain leveraged to 2025–2026 buildouts, but are most exposed to a sudden order gap if hyperscalers pause. Watch guidance cadence and backlog quality.

Demand risk is asymmetric: a few mega‑customers drive most orders. New regulation and cheaper model competition can dent monetization assumptions at the platform layer while leaving suppliers with stranded capacity.

Medium term, reset cycles often reprice winners without killing the theme. Survivors of a capex correction may emerge with stronger moats once weaker balance sheets and speculative financing clear.

Key takeaways

AI capex is enormous and front‑loaded; roughly $490B of capex in 2026 and >$2.8T of cumulative spend by 2029 set a high bar for returns. (reuters.com)

Buyer concentration, debt‑funding, power constraints, and EU regulation are the main tripwires for a 2026–2027 slowdown. (investing.com)

Competitive efficiency (e.g., DeepSeek‑style models) can compress pricing even as compute demand grows. (reuters.com)

If a correction arrives, expect pain for late‑cycle suppliers—but lasting infrastructure gains consistent with past “productive bubbles.” (tandfonline.com)

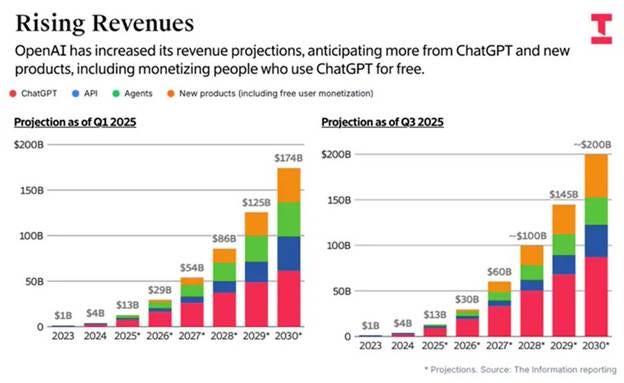

Will OpenAI reach $300BN in Revenue?

YouTube • 20VC with Harry Stebbings • October 2, 2025

AI•Tech•OpenAI•Revenue Projections•AI Infrastructure

Framing the Question

The central prompt asks whether a frontier AI company could scale to $300B in annual revenue—a figure that would place it among the most valuable global platforms. To evaluate plausibility, consider business mix, pricing power, addressable markets, infrastructure constraints, and competitive dynamics. The path is less about one product exploding than about a layered platform capturing value across multiple use cases and distribution channels.

Potential Revenue Pillars

Enterprise AI subscriptions: Per-seat copilots for knowledge work (code, documents, sales, support, design) priced on a monthly basis. A back-of-envelope path: 100M active professional users paying an average blended $200–$250/month could approach $240–$300B annually.

API and platform fees: Usage-based revenue from developers and enterprises integrating models into products. If AI becomes a default layer in apps, per-token and fine-tuning revenues compound at internet scale.

Agent marketplace and revenue share: A “store” for domain-specific agents/apps with take rates similar to mobile app stores or SaaS marketplaces, plus enterprise private marketplaces.

Vertical solutions: Industry-tailored offerings (healthcare documentation, legal drafting, financial analysis, contact centers) with higher ARPU and service attach.

Data and model services: Fine-tuning, retrieval-augmented generation, and enterprise data pipelines sold as managed services.
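The back-of-envelope seat math in the first pillar can be checked directly, along with how sensitive the required price is to the seat count. Only the 100M-user scenario comes from the discussion; the 50M and 200M user counts are hypothetical comparison points.

```python
def annual_seat_revenue(users: float, monthly_price: float) -> float:
    """Annual revenue from per-seat subscriptions."""
    return users * monthly_price * 12


def price_for_target(target_revenue: float, users: float) -> float:
    """Blended monthly price per seat needed to reach a revenue target."""
    return target_revenue / (users * 12)


USERS = 100e6  # 100M active professional users, per the scenario
for price in (200, 250):
    print(f"${price}/month -> ${annual_seat_revenue(USERS, price) / 1e9:.0f}B per year")

# Sensitivity: required price if the seat count differs (user counts hypothetical).
for users in (50e6, 100e6, 200e6):
    print(f"{users / 1e6:.0f}M users need ${price_for_target(300e9, users):.0f}/month for $300B")
```

Halving the seat count doubles the price a seat must command, which is why the "What Must Be True" conditions lean so heavily on measurable productivity gains.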

What Must Be True

Product-market fit at scale: Copilots must deliver measurable productivity gains (e.g., reduced cycle time, higher win rates, lower support handle time) that justify multi-hundred-dollar seat pricing.

Distribution leverage: Deep partnerships with hyperscalers, operating systems, productivity suites, and CRMs to reach hundreds of millions of users, plus bottom-up developer adoption via APIs.

Reliability and trust: Enterprise-grade uptime, traceability, governance, and strong content safety to satisfy risk, compliance, and regulatory scrutiny.

Continuous model edge: Sustained performance and cost advantages through model innovation, inference optimization, and hardware co-design to keep unit economics favorable at massive scale.

Key Constraints and Risks

Compute and capital intensity: Training and inference costs, supply chain bottlenecks, and dependence on specialized hardware could compress margins unless offset by efficiency gains and scale purchasing.

Competitive bundling: Incumbents can bundle AI into existing suites, potentially capping standalone ARPU or forcing price wars.

Data rights and compliance: Licensing, privacy rules, model provenance, and audits may add cost and slow velocity, especially in regulated sectors.

Platform disintermediation: Open-source models and on-device inference may erode API volumes unless differentiated by superior performance, tooling, or compliance features.

Benchmarks and Scenario Thinking

A $300B revenue run-rate would be in the same league as the largest tech platforms. Getting there likely requires a diversified mix: for example, half from enterprise subscriptions and seats, a third from usage-based APIs/agents, and the remainder from industry verticals and services. Alternatively, a consumer-plus-enterprise blend could reach similar scale if consumer assistants achieve massive daily active usage with premium tiers and third-party services flowing through agents.
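Translating the illustrative mix in that paragraph into dollars: half from seats, a third from usage-based APIs and agents, and the remainder (one sixth) from verticals and services. `Fraction` keeps the shares exact so the segments sum to the target without rounding.

```python
from fractions import Fraction

TARGET_BILLIONS = 300

# Illustrative split from the scenario above; the remainder is derived.
mix = {
    "enterprise subscriptions and seats": Fraction(1, 2),
    "usage-based APIs/agents": Fraction(1, 3),
}
mix["industry verticals and services"] = 1 - sum(mix.values())

for segment, share in mix.items():
    print(f"{segment}: {share} -> ${TARGET_BILLIONS * share}B")
```

That works out to $150B, $100B, and $50B respectively.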

Implications if Achieved

Software value capture shifts toward AI orchestration layers, with agents mediating user intent across applications.

Enterprise IT spend re-allocates from point tools to unified AI platforms, favoring ecosystems with strong governance and extensibility.

Labor productivity gains reshape knowledge-work roles; organizations that systematize “AI-first” workflows widen performance gaps.

Regulatory frameworks mature around transparency, safety, and auditability, entrenching moats for providers that meet high compliance bars.

Key Takeaways

The $300B threshold is conceivable only with multi-pronged monetization—seats, usage, marketplaces, and vertical solutions—at global scale.

Sustained technical edge, distribution partnerships, and enterprise trust are the core levers; compute efficiency and unit economics are make-or-break.

Competitive and regulatory forces will shape pricing power; executing on reliability and governance is as crucial as model quality.

OpenAI Lets ChatGPT Users Connect With Spotify, Zillow In App

Bloomberg • October 6, 2025

AI•Tech•ChatGPT•Spotify•Zillow

OpenAI is introducing a way for people to trigger tasks in third-party apps directly inside ChatGPT, part of a broader push to make the chatbot a central gateway to digital services. Announced Monday during the company’s annual developer event, the option keeps users in the conversation while enabling outcomes that rely on external platforms. A person planning the weekend can, for example, ask ChatGPT to assemble a playlist; the assistant then connects with Spotify to surface tailored suggestions without leaving the chat. A home seeker can specify a three-bedroom place in a particular neighborhood, and ChatGPT will tap Zillow to pull matching listings.

The shift reframes ChatGPT from a system that only produces text responses into one that can also initiate actions through popular consumer services, cutting down on the friction of switching between apps. By putting these connections behind natural‑language prompts, OpenAI aims to compress the steps between intent and execution and position the chatbot as an operating layer for everyday tasks. Early examples emphasize entertainment and real estate, pointing to how the company envisions integrations powering common scenarios like music discovery and property search directly within the chat experience.
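The flow described here, a natural-language request routed to a connected app whose result comes back into the conversation, can be sketched as a toy dispatcher. Everything below is hypothetical: it is not OpenAI's Apps SDK, the handlers return placeholder strings instead of calling Spotify or Zillow, and keyword matching stands in for the model's own judgment about when to call an app.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AppConnector:
    name: str
    keywords: tuple[str, ...]     # toy trigger words; a real assistant would let the model decide
    handle: Callable[[str], str]  # placeholder for the third-party call


def playlist_stub(request: str) -> str:
    return f"[Spotify placeholder] playlist suggestions for: {request}"


def listings_stub(request: str) -> str:
    return f"[Zillow placeholder] listings matching: {request}"


CONNECTORS = [
    AppConnector("Spotify", ("playlist", "song", "music"), playlist_stub),
    AppConnector("Zillow", ("bedroom", "listing", "house for sale"), listings_stub),
]


def route(request: str, connectors: list[AppConnector]) -> tuple[str, str]:
    """Return (app name, result); fall back to a plain chat answer if no app matches."""
    text = request.lower()
    for connector in connectors:
        if any(keyword in text for keyword in connector.keywords):
            return connector.name, connector.handle(request)
    return "chat", "answered directly in the conversation"


print(route("Make me a weekend road-trip playlist", CONNECTORS))
print(route("Find a three-bedroom place in a quiet neighborhood", CONNECTORS))
print(route("Summarize this article", CONNECTORS))
```

The design point the sketch isolates is the fallback: a request that no connected app claims stays an ordinary chat answer, so the user never leaves the conversation either way.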

‘Sora’s Slop Hits Different’

Spyglass • John Gruber • October 8, 2025

AI•Tech•Sora

MG Siegler, writing at Spyglass:

I think that’s the real revelation here. It’s less about consumption and more about creation. I previously wrote about how I was an early investor in Vine in part because it felt like it could be analogous to Instagram. Thanks in large part to filters, that app made it easy for anyone to think they were good enough to be a photographer. It didn’t matter if they were or not, they thought they were — I was one of them — so everyone posted their photos. Vine felt like it could have been that for video thanks to its clever tap-to-record mechanism. But actually, it became a network for a lot of really talented amateurs to figure out a new format for funny videos on the internet. When Twitter acquired the company and dropped the ball, TikTok took that idea and scaled it (thanks to ByteDance paying um, Meta billions of dollars for distribution, and their own very smart algorithms).

In a way, Sora feels like enabling everyone to be a TikTok creator.

I don’t want to predict if Sora is a fad or has staying power, but so far I enjoy it in a way that I haven’t enjoyed a new social network in years. It’s just fun to dash off a stupid video with no more work than a quick text prompt, and the friends I’m following are making some damn funny clips every day.

Media

Bari Weiss’s CBS News and MSNBC both just laid out their new journalistic principles — and they’re fascinatingly different

Nieman Lab • October 6, 2025

Media•Journalism•CBSNews

Lot of news coming out of the networks this morning: Bari Weiss, founder of the pro-Israel, anti-woke Free Press, has sold the four-year-old news site to CBS parent company Paramount for a reported $150 million and is the new editor-in-chief of CBS News. And the left-leaning cable channel MSNBC has begun its split from NBC News, with plans to be fully independent by the end of October.

Both organizations are marking the occasion by sending top-10 lists of their values and principles to staff — and these lists offer a window into the different ways CBS and MSNBC are thinking about maintaining old audiences and reaching new ones.

Here are Bari Weiss’s “10 core journalistic values” for CBS (via Brian Stelter):

Journalism that reports on the world as it actually is.

Journalism that is fair, fearless, and factual.

Journalism that respects our audience enough to tell the truth plainly — wherever it leads.

Journalism that makes sense of a noisy, confusing world.

Journalism that explains things clearly, without pretension or jargon.

Journalism that holds both American political parties to equal scrutiny.

Journalism that embraces a wide spectrum of views and voices so that the audience can contend with the best arguments on all sides of a debate.

Journalism that rushes toward the most interesting and important stories, regardless of their unpopularity.

Journalism that uses all of the tools of the digital era.

Journalism that understands that the best way to serve America is to endeavor to present the public with the facts, first and foremost.

And here are MSNBC’s “official 10 principles” from Brian Carovillano, SVP of standards and editorial partnerships for news (via Poynter):

Integrity: We uphold the highest ethical standards. We respect the law when reporting the news. We advocate for journalists’ rights. We protect and defend press freedom and the First Amendment. We respect our colleagues, our sources and the communities we cover.

Accuracy: We aim to be accurate in our reporting 100 percent of the time. If we establish that our reporting is flawed, we take prompt action to correct or clarify the mistake.

Fairness: We report the news with an open mind. We aim to give the subject(s) of our original reporting an opportunity to comment before publication.

Opinion: The views expressed by our opinion journalists and contributors are based on accurate, reported facts.

Our Sources: Our objective is to rely on sources we can identify, by name, in our reporting. When anonymity is the only way to report critical information, we aim to have sources with firsthand knowledge and to be transparent about why we granted them anonymity.

Some Lakers Games This Season Will Be Broadcast Live in Immersive Video for Vision Pro

TechRadar • John Gruber • October 9, 2025

Media•Broadcasting•Apple Vision Pro•Los Angeles Lakers•Immersive Video

Overview

Apple is bringing live sports to its spatial video format with select Los Angeles Lakers games streamed in Apple Immersive for Vision Pro this season. Jacob Krol reports that while it’s “not every game,” the broadcasts will be exclusive to Apple’s $3,500 spatial computer and designed to put viewers “right in the middle of the action.” The move follows Apple’s experimentation with premium capture for live events—Krol notes that Apple TV+’s Friday Night Baseball has already been “capturing games with the iPhone 17 Pro and 17 Pro Max,” a hint that Apple’s live-production toolkit was expanding even before this basketball initiative. Together, these steps signal a broader push to define what live sports can look and feel like in mixed reality.

What’s New and How It Works

The immersive Lakers streams will rely on specialized camera systems positioned courtside and beneath each basket, offering vantage points that conventional TV rarely provides. According to Krol, “special cameras that support the format will be set courtside and under each basket,” and the games “will be shot using a special version of Blackmagic Design’s URSA Cine Immersive Live camera.” That hardware choice suggests Apple is prioritizing high-fidelity stereoscopic capture, wide field of view, and low-latency pipelines suited to a fast-paced sport. The dedicated Apple Immersive format should translate those feeds into depth-rich perspectives for Vision Pro, aligning with the headset’s emphasis on spatial presence over flat screens.

Significance for Apple, the NBA, and Fans

For Apple, live sports in Apple Immersive addresses a key content gap. On-demand immersive videos have showcased the Vision Pro’s cinematic strengths, but the stickiness of a platform often depends on time-sensitive, must-watch programming. Basketball is an ideal proving ground: constant motion, intimate court size, and star-driven narratives amplify the payoff of sitting “courtside” from home. For the NBA and the Lakers brand, the partnership offers a premium upsell to highly engaged fans, testing whether immersive viewing can command attention—and perhaps, over time, incremental revenue—beyond traditional broadcasts and league apps.

Production and Experience Considerations

Delivering a convincing courtside feel at 60 or 90 frames per second is technically demanding. Camera placement near the stanchions and sidelines must balance immersion with safety and unobtrusiveness. The URSA Cine Immersive Live rigs imply robust low-light performance and rapid autofocus to handle fast breaks and tight drives. Latency, motion smoothing, and comfortable depth mapping will be critical, since any mismatch can cause discomfort in head-worn displays. Because Apple has not promised every Lakers game, the selective schedule may help the company perfect pipelines, refine compression, and tune the experience based on real-world feedback.

Strategic Context

Interestingly, as John Gruber observes, it’s “kind of weird” that Apple’s first live immersive push isn’t its own Friday Night Baseball broadcasts, given Apple already controls those productions. That choice may reflect basketball’s camera geometry advantages, the Lakers’ global draw, or simpler rights/venue logistics for spatial rigs. It also suggests Apple is content to move pragmatically rather than uniformly across all properties—launch where the payoff is clearest, then scale. If the Lakers tests resonate, expect more teams and leagues to follow, and for Apple to deepen integrations that make live immersive sports a marquee Vision Pro feature.

What Viewers Can Expect

Vision Pro owners should anticipate multiple vantage points that feel closer and more dimensional than a conventional TV telecast, with dynamic angles from under-basket and sideline positions. The experience will likely emphasize presence—sensing player scale, court spacing, and crowd energy—over traditional commentary-led storytelling. Because the streams are exclusive to Vision Pro, they double as a showcase for Apple’s spatial hardware and pipelines, giving early adopters a headline use case that differentiates the device from standard 2D streaming.

Key Takeaways

Select Lakers games will stream live in Apple Immersive exclusively on Vision Pro this season.

Specialized production will use a variant of Blackmagic’s URSA Cine Immersive Live, with cameras courtside and under each basket for depth-rich views.

Apple previously experimented with premium capture on Friday Night Baseball using iPhone 17 Pro models, foreshadowing this live sports push.

The rollout focuses on experience quality over breadth—“not every game”—to optimize capture, latency, and comfort.

The initiative positions Vision Pro as a premium destination for live sports, testing demand and paving the way for broader league adoption.

Can Cory Doctorow’s Book ‘Enshittification’ Change the Tech Debate?

NY Times • October 5, 2025

Media•Publishing•Enshittification•Digital Platforms•Antitrust

Overview

Cory Doctorow’s new book is presented as both a balm and a playbook for a widely shared anxiety: the sense that the internet’s dominant platforms have steadily degraded for users, workers, and creators. The piece frames the work as an intervention in the broader tech debate, suggesting it gives readers language to name what feels broken and concrete ways to push back. The promise is twofold—comfort in understanding a pattern, and solutions that move beyond nostalgia or resignation toward practical change.

The Feeling That Platforms Have Gotten Worse

The core premise centers on everyday, cumulative harms that now feel unavoidable online: cluttered feeds, aggressive monetization, paywalls and fees where basic functionality used to be free, intrusive tracking, diminishing reach for creators unless they pay, and customer service that has been automated out of existence. Rather than treating these as isolated inconveniences, the book treats them as symptoms of a broader dynamic in platform capitalism—one where incentives shift as companies grow, consolidate, and seek ever-higher returns from users and business customers.

What “Comfort” Looks Like

Naming the pattern validates lived experience. By giving readers a coherent framework for why platforms decay, the book offers relief from the idea that the problem is merely personal preference or user error.

Systemic, not individual. It reframes frustration away from “bad users” or “bad luck” and toward structural incentives in ad markets, investor pressure, and winner-take-most network effects.

Historical context. Understanding that cycles of user-friendly beginnings followed by extraction have precedent helps readers place today’s internet within a longer story of consolidation and lock-in.

What “Solutions” Mean in Practice

The work is cast as solutions-forward, emphasizing that decline is not destiny. Proposed directions are practical and actionable rather than utopian:

Competition and choice: Encourage interoperability and reduce switching costs so users and creators aren’t trapped when platforms worsen.

Portability and control: Strengthen rights to move data, audiences, and purchases across services, so leaving a bad platform doesn’t mean starting from zero.

Governance and accountability: Improve transparency around algorithms, fees, and terms; restore real customer service; and adopt design choices that prioritize user agency.

Policy levers: Use antitrust scrutiny, merger review, and pro-competitive rules to curb gatekeeper power and prevent cross-market tying that entrenches dominance.

Collective action: Support unions, creator collectives, and user advocacy that can negotiate fairer terms and resist unilateral changes to payouts or APIs.

Why This Matters Now

By linking personal frustration to structural analysis, the book aims to shift the tech debate from vibes to vocabulary and from doom to direction. The cultural impact is to legitimize dissatisfaction as rational and to show that better outcomes are possible when defaults change. For creators and small businesses, the immediate implication is strategic: diversify channels, build portable relationships (email lists, open protocols), and adopt tools that reduce dependency on any single platform. For policymakers and regulators, the takeaway is to focus less on speech adjudication and more on market structure, switching costs, and deceptive design. For product leaders, the argument suggests long-term value comes from trust, interoperability, and service quality rather than short-term extraction.

Potential Counterpoints Addressed

While some may argue that platform tightening is necessary for sustainability, the frame here is that sustainability should align with user benefit, not be a pretext for eroding it. Others may claim that users tolerate trade-offs for convenience; the rejoinder is that genuine choice is constrained by lock-in and network effects, and that healthier competition can deliver both convenience and respect for users.

Key Takeaways

The book positions the decline of digital platforms as a predictable pattern, not random decay.

Comfort comes from naming and understanding that pattern; solutions come from restoring competition, portability, and accountability.

Practical steps span individual, collective, and policy levels, emphasizing interoperability, data rights, and antitrust scrutiny.

The broader contribution is to move the tech conversation toward concrete reforms that make online life measurably better for users and creators.

Hollywood Is Already Falling Behind on AI Video

Bloomberg • Lucas Shaw • October 5, 2025

Media•Film•AIVideo

Context

The central point is clear: the entertainment industry is determined not to repeat the strategic missteps it made during the early internet era, when incumbents resisted change, fought new distribution technologies, and ceded ground to faster-moving entrants. In the current wave, the pressure point is AI-generated video—tools that can produce convincing moving images from text or minimal inputs—promising faster production cycles, lower costs, and new creative formats that challenge Hollywood’s legacy advantages.

What “a repeat of the internet era” means

Defensive posture over innovation: In the web’s early days, rights holders prioritized legal battles and windowing over product reinvention, allowing platforms and pirates to shape consumer behavior.

Missed platform leverage: Tech companies built global, direct-to-consumer relationships while studios clung to intermediated models, weakening bargaining power over time.

Slow capability adoption: Digital workflows, data-driven greenlighting, and direct engagement were adopted unevenly, creating openings for new players and business models.

Why AI video heightens the stakes

Speed and cost: Generative tools compress previsualization, storyboarding, and VFX timelines—from weeks to hours—threatening traditional production budgets and schedules.

Creative expansion: Rapid iteration enables smaller teams to prototype scenes, styles, and entire shorts, eroding the barrier of scale that protected incumbents.

IP and brand risk: Synthetic content multiplies risks around likeness, style imitation, and derivative works—areas where unclear rights frameworks can stall adoption or spark backlash.

Distribution dynamics: If AI-native platforms set norms for discovery, licensing, and creator economics, studios risk losing the customer relationship again.

Emerging playbook

Proactive rights frameworks: Building standardized consent, compensation, and attribution for talent, visual styles, and datasets—baked into contracts and production pipelines.

Capability integration: Making generative tools part of pre-production and post-production toolchains (previz, animatics, localization, catalog enhancements) rather than sidelined pilots.

Portfolio experimentation: Low-risk trials in marketing assets, trailers, and shorts to learn at speed, while ring-fencing marquee IP for tighter controls.

Talent partnerships: Upskilling writers, editors, and VFX teams; creating guild-aligned guidelines and revenue-sharing to avoid zero-sum narratives.

Data advantage: Using first-party archives, style bibles, and asset libraries to train or fine-tune compliant models that differentiate studio output.

Implications

Margin reshaping: Savings in workflows could offset rising content risk, but only if rights and reputational issues are governed upfront.

New gatekeepers: Model providers and distribution platforms may become the next power centers unless studios secure favorable access and co-development rights.

Audience expectations: Viewers conditioned to rapid, personalized, and interactive formats may push Hollywood to rethink runtimes, release windows, and franchise development.

Key takeaways

The lesson from the internet era is speed with structure: embrace the technology while codifying rights, safety, and economics early.

Competitive advantage will hinge on rights-aware model use, integrated pipelines, and talent alignment—not one-off pilots.

Those who set practical standards for AI video inside production will shape the market—and avoid repeating the past.

Bari Weiss to lead CBS News after Paramount buys The Free Press

Fast Company • October 6, 2025

Media•Journalism•CBSNews

Paramount said Monday that it has bought the news and commentary website The Free Press and installed its founder, Bari Weiss, as the editor-in-chief of CBS News, saying it believes the country longs for news that is balanced and fact-based.