This week’s video transcript summary is here. You can click on any bulleted section to see the actual transcript. Thanks to Granola for its software - Transcript and Summary

Editorial

Democratization at the Gate?

There is a new acronym in town - PVC.

Public Venture Capital is simple to describe and hard to do: put venture-backed private company exposure into a public market wrapper so everyday investors can buy it.

This week, PVC became a category.

Robinhood announced Robinhood Ventures (ticker: RVI). Powerlaw (ticker: PWRL) is heading toward a Nasdaq listing. Fundrise (ticker: VCX) is moving through conversion terms. And Destiny Tech 100 (ticker: DXYZ) has already shown what happens when retail demand meets private-tech scarcity: it trades at a premium to its underlying asset value, and it raised $244m in new capital in Q4 2025 alone by selling new shares at that premium price.

And of course my own SignalRank is on course to become a PVC itself.

Robinhood CEO and founder Vlad Tenev hasn’t hedged his bets:

Here is my thesis in one sentence: PVC can be one of the most important capital-market upgrades of this decade, but only if the offerings truly benefit retail investors over the short and long term.

Why does this matter now?

Because private markets got very large while most public investors were locked out of the highest-growth years. Institutions captured most pre-IPO upside. PVC is the first serious attempt to reopen that door at scale. If it works, more households participate in innovation upside, more capital reaches new company formation, and venture becomes less dependent on a narrow LP class.

All of the players in the space share that ambition - Robinhood Ventures (ticker: RVI), Powerlaw (ticker: PWRL), Fundrise (ticker: VCX), Destiny Tech 100 (ticker: DXYZ), and SignalRank (ticker: to be decided). The keyword is democratization of private markets, enabling the ordinary investor to benefit from high growth prior to IPO.

If PVC fails to deliver that growth, the opposite happens. Retail gets sold access without economics, the first hard drawdown destroys trust, regulators step in with a broad brush, and a useful innovation gets labeled as another cycle-era packaging trick. That is why this is not a niche product conversation. It is market design.

The Figma example is worth considering. Private buyers acquired Figma shares on the secondary market before it went public. In a secondary purchase, existing shareholders, such as employees and early investors, sell their shares to new investors, which provides liquidity outside a traditional IPO. After Adobe's $20 billion acquisition fell through, Figma saw significant secondary tender offers, including a mid-2024 round that valued the company at $12.5 billion. Between those events, secondary markets priced Figma between those two numbers, and there were many buyers. Figma then IPO'd and its valuation grew to $60 billion. It fell as low as $6 billion. Today Figma trades publicly at $13 billion. When you bought really matters to your outcome.
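To make the timing point concrete, here is a rough sketch of the multiple a buyer would show today at each of the valuations mentioned above. It assumes, as a simplification that is mine and not from any filing, that company-level valuation is a fair stand-in for per-share price, so it ignores dilution, share class, and fees. The labels are illustrative.

```python
# Valuations in billions of dollars, taken from the paragraph above.
ENTRY_POINTS = {
    "mid-2024 tender offer": 12.5,
    "Adobe deal price": 20.0,
    "post-IPO peak": 60.0,
    "post-IPO low": 6.0,
}
CURRENT_VALUATION = 13.0


def multiple_on_entry(entry: float, current: float = CURRENT_VALUATION) -> float:
    """Current valuation divided by entry valuation.

    Assumption: valuation is used as a proxy for share price, so
    dilution, share class, and fees are ignored.
    """
    return current / entry


multiples = {label: multiple_on_entry(v) for label, v in ENTRY_POINTS.items()}

for label, m in multiples.items():
    print(f"{label:<24} {m:.2f}x")
```

Under these assumptions the tender-offer buyer is roughly flat, the buyer at the peak holds a fraction of what they paid, and the buyer at the low has about doubled. Same company, very different outcomes.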

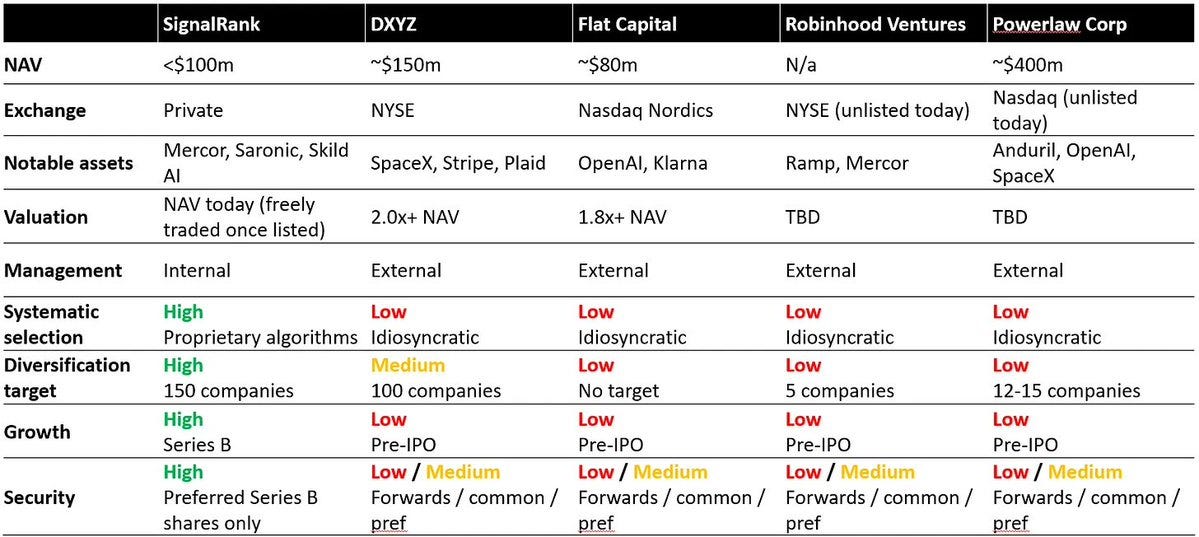

So, for readers new to PVC (and, as PVC is so new, that means everybody), there are four structural questions that decide whether you are buying real venture exposure or an over-priced basket of assets.

First: stage of entry. A fund buying at Series B is playing a different return game than a fund buying late pre-IPO blocks. Same company, different outcome profile. Late entry can still work, but the multiple headroom is usually smaller and timing risk is higher.

Second: instrument quality. Preferred equity with protective terms is not the same asset as common-share forwards, secondary strips, or layered SPVs. In bull markets this difference gets ignored. In stressed markets this difference becomes the whole return story. And tender offers, which are becoming popular because of reasonable liquidity demands, do not always offer preferred shares.

Third: portfolio construction. Venture returns follow a power law. A concentrated basket can produce excellent outcomes, but it can also behave like single-theme speculation. If a wrapper markets itself as broad venture access while holding a narrow set of expensive late-stage names, that mismatch matters. It can bite the investor and poison the well that PVC promises.

Fourth: fee architecture. Headline fees are rarely the full picture. Sales loads, embedded vehicle costs, liquidity mechanics, and valuation lag all change investor outcomes. If total drag is hard to explain in plain language, assume it is too high.
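A small sketch shows how a headline management fee compounds into drag over time. The 2.0% and 2.5% fee levels are the ones disclosed for RVI and VCX in the Venture section of this issue. The 15% gross return and the 10-year horizon are purely hypothetical inputs of mine, and the model charges the fee once a year on year-end assets. It does not capture sales loads, embedded vehicle costs, valuation lag, or RVI's temporary fee reduction.

```python
def terminal_value(gross_return: float, annual_fee: float, years: int,
                   start: float = 1.0) -> float:
    """Grow `start` for `years`, deducting the fee from year-end assets."""
    value = start
    for _ in range(years):
        value *= 1.0 + gross_return   # hypothetical gross portfolio growth
        value *= 1.0 - annual_fee     # management fee on year-end assets
    return value


def fee_drag(gross_return: float, annual_fee: float, years: int) -> float:
    """Fraction of fee-free terminal wealth that is lost to the fee."""
    no_fee = terminal_value(gross_return, 0.0, years)
    with_fee = terminal_value(gross_return, annual_fee, years)
    return 1.0 - with_fee / no_fee


GROSS_RETURN = 0.15   # assumption, not a forecast
YEARS = 10            # assumption

for fee in (0.02, 0.025):
    drag = fee_drag(GROSS_RETURN, fee, YEARS)
    print(f"fee {fee:.1%}: {drag:.1%} of fee-free wealth lost over {YEARS} years")
```

In this simplified model the drag works out to roughly 18% and 22% of fee-free terminal wealth, and it does not depend on the gross return chosen. Any additional layer of cost stacks on top of that.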

Now to the different approaches. I see one productive path and one that can turn out badly for this promising new asset class.

The productive path treats PVC as capital formation infrastructure. It enters early enough to preserve upside, uses cleaner instruments, discloses concentration honestly, and keeps fee stacks legible. Most important, it helps channel public capital into new company creation, not just into recycled late-stage inventory. Where the liquidity goes is an important consideration.

The more dangerous path treats PVC as a short-term fix for LP, GP, founder, and employee liquidity and ignores long-term wealth growth for those who buy into the PVC assets.

It gathers famous logos, sells democratization language, enters late, accepts weaker instruments, layers fees, and hopes brand momentum outruns structural drag. That approach can gather assets quickly, but it usually transfers timing and quality risk to the least protected buyer.

Access to venture growth for retail investors is, itself, progress, and imperfect access is still better than exclusion. But better is better.

Lower minimums and daily liquidity are meaningful improvements over traditional 10-year lockups. But if access is not aligned with retail economics it does not democratize upside. It democratizes disappointment.

PVC products share a common goal but…

What stage, what security, what concentration, what all-in fee drag, and what structure? These are key questions that determine the likelihood of success.

My view is that PVC is here. It is a good thing, a new way for ordinary investors to access previously forbidden wealth growth. The only open question is whether we build it as a durable bridge between public investors and private innovation, or as a short-cycle wrapper built to harvest name-recognition-based demand. The category will be defined by that choice.

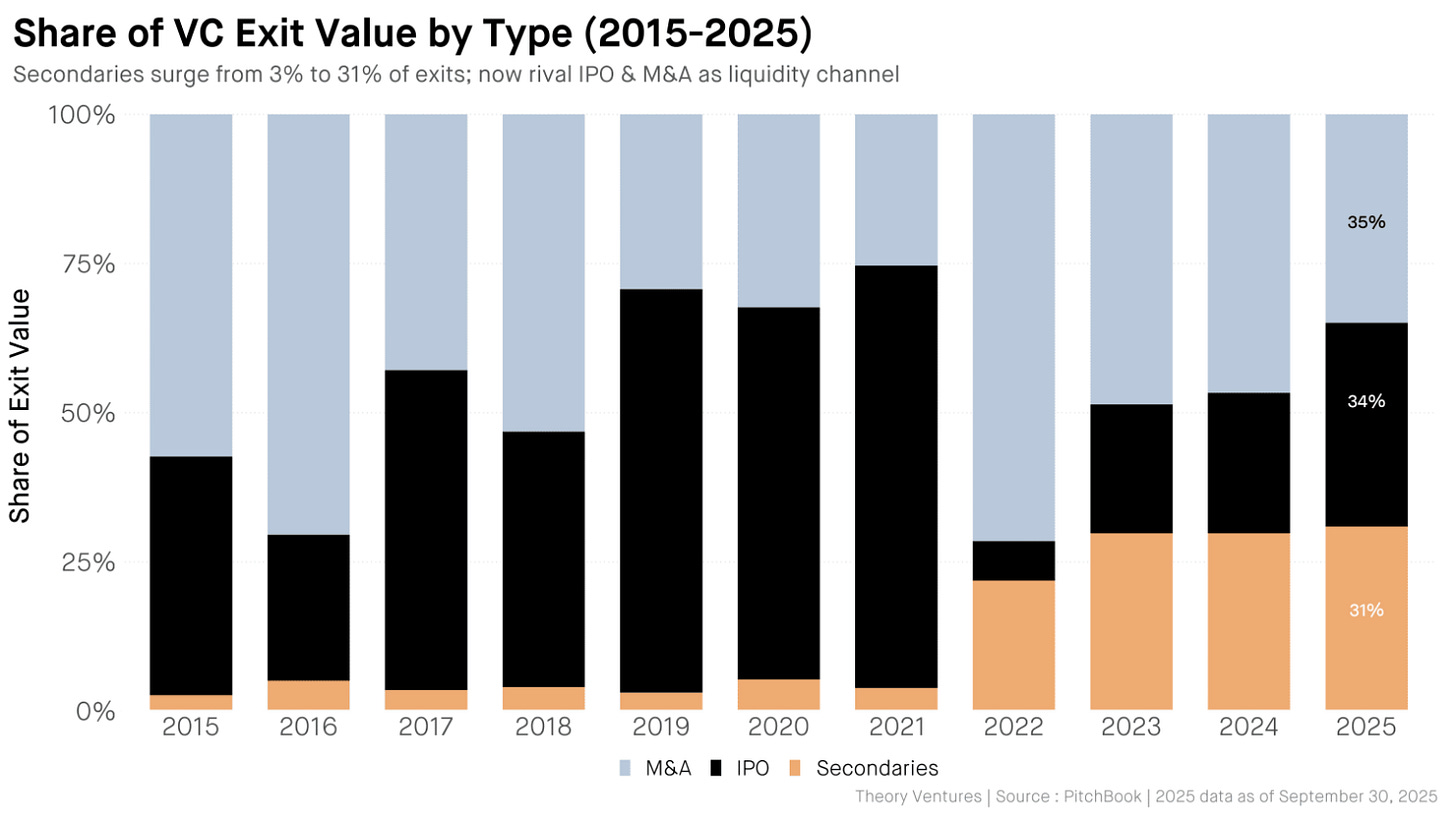

Tomasz Tunguz (see below) charts the rise of private secondary sales (the rust color).

The market built on top of that is not the same as a market built on pure venture entry into preferred share ownership.

Democratization of access to quality really matters.

Yascha Mounk (see Essays below) writes about democratization of the humanities this week. Quality matters there too.

… I consider the ability to make a novel, interesting, and plausible argument about politics to be one important indicator of intelligence and creativity, and that I devoted a long stretch of my early adult life to developing the ability to do so at a high level. So when, still jet-lagged from a recent trip to Europe, I woke up well before the crack of dawn a few days ago, I decided to see whether the newest AI models would be capable of writing a competent academic paper in my field of study, political theory. The result both elated and depressed me.

He used Claude to write a piece he was interested in:

But on the whole, the outcome was depressingly good: I am confident that it could, with minor revisions, be published by a serious journal.

He concludes that when anybody can use AI to write excellent work the value of that work is commoditized:

In some ways, the Age of AI will make the humanities more important than ever. Disciplines from literature to philosophy are needed to help us answer questions about how we can find a place in the world when we are much less needed than before, and what it is to be human when we are no longer the only ones capable of doing some of the things of which our species was once uniquely capable. But at a time when artificial intelligence can jump through the hoops that have over the past decades come to define an academic career in the humanities with growing ease, a radical reimagination of how we pursue and impart meaningful knowledge in these fields is desperately in order.

Once again, democratization requires nuance. It will be great that everybody has access to top knowledge tools and can self-learn using AI as a mentor, teacher, and discussion partner. That is all good. But bad actors leveraging it for less worthy ends have to be guarded against.

Contents

Essays

On Dwarkesh Patel’s 2026 Podcast With Dario Amodei

Author: Zvi Mowshowitz

Date: 2026-02-16

Publication: Don’t Worry About the Vase

Zvi frames the post as a point‑by‑point breakdown of Dwarkesh Patel’s interview with Dario Amodei, stressing continuity in Amodei’s rapid‑progress timelines and the “geniuses in a data center” forecast. He notes Anthropic’s explosive commercial momentum as evidence that capability is real and already monetizing at scale, while also acknowledging that labs can’t simply overextend without risking collapse.

The central disagreement is about diffusion. Zvi pushes back hard on the claim that adoption lags are just an excuse; he argues procurement friction, training costs, workflow redesign, and human resistance are the real bottlenecks that slow impact even when models are capable. This diffusion gap, he says, is the difference between impressive demos and enterprise transformation, and it explains why coding predictions tend to overstate near‑term labor displacement.

He also highlights what the interview barely touched: alignment and catastrophic risk. The relative silence around those topics, in his view, signals a shift in the field’s center of gravity toward growth, geopolitics, and deployment. The net take is a future of very fast capability gains paired with messy, human‑constrained rollout and a safety conversation that is being deprioritized rather than resolved. Read more: On Dwarkesh Patel’s 2026 Podcast With Dario Amodei

The Humanities Are About to Be Automated

Author: Yascha Mounk

Date: 2026-02-16

Publication: Persuasion

Mounk argues that the gap between AI skeptics and believers has widened dramatically, and that the humanities are now in deep denial about just how capable modern models have become. He describes recent systems as able to reason through long chains, build practical tools, and even generate novel findings in science, while many humanities scholars still dismiss them as “stochastic parrots.”

To test the claim, he asks Claude Opus 4.6 to draft a publishable political theory paper. With minimal human steering, the model generates a paper with a coherent thesis, citations, and disciplinary conventions, centered on a concept of “epistemic domination” drawn from Tocqueville and Mill. Mounk’s takeaway is blunt: the draft is “depressingly good” and could plausibly clear peer review with modest edits.

That result, he argues, makes the current academic publishing treadmill indefensible. If AI can already produce the sort of erudite, niche scholarship that defines humanities careers, then the field must reimagine its purpose. The humanities may become more important for helping society interpret the human condition, but the traditional gatekeeping of publishable papers can no longer justify itself. Read more: The Humanities Are About to Be Automated

Updated Thoughts on AI Risk

Author: Noah Smith

Date: 2026-02-16

Publication: Noahpinion

Noah Smith explains why his tone on AI risk has darkened since 2023. He says the core change isn’t just mood; it’s the shift from chatbots to agentic systems that can write and execute code at scale. Once software can be produced and maintained by AI, new classes of failure move from hypothetical to plausible.

He lays out a new risk: the “machine stops” scenario. If critical infrastructure becomes fully vibe‑coded and human expertise atrophies, a software failure or malicious update could cripple agriculture or logistics, creating civilizational fragility. He cites evidence that AI assistance can erode human skill acquisition and argues that over‑optimized systems can collapse under stress, similar to brittle supply chains.

His top worry remains AI‑enabled bioterrorism. Automated labs and AI‑driven design make it increasingly possible for a bad actor to generate lethal pathogens at scale. Robotics‑driven extermination is still a longer‑term risk, but he urges more focus on biosecurity and systemic hardening now. Read more: Updated Thoughts on AI Risk

Solve Everything: How We Get to Abundance by 2035

Author: Peter Diamandis & Alex Wissner-Gross

Date: 2026-02-15

Publication: Metatrends / SolveEverything.org

Solve Everything is a manifesto for how societies move from scarcity to abundance. It frames progress as a four‑stage cycle: make a domain legible, build a harness to control it, form institutions that allocate trust and capital, and finally collapse the unit cost so the capability becomes widely available. The project’s shorthand is that today’s “telescope” is benchmarking, and today’s harness is an industrial intelligence stack.

The essay walks through prior revolutions to illustrate the pattern. The scientific revolution used the scientific method and reproducibility as the institutional harness; the industrial revolution paired heat engines with factory discipline and standards; the digital revolution built on protocols and permissionless composability. Each era created new institutions that turned technical power into durable economic advantage.

For the intelligence revolution, the authors argue the prestige should shift from lone “heroes” to “harness builders” who make AI reliable and governable. They call for public benchmark authorities, outcome‑based contracts, and operational throughput ledgers that tie AI to measurable outputs. The punchline: if you want to capture the gains of abundance, build the harness, not the hero product. Read more: Solve Everything

Thin Is In

Author: Ben Thompson

Date: 2026-02-17

Publication: Stratechery

Ben Thompson argues that AI shifts the center of gravity from thick local software to thin clients backed by powerful cloud models. In his framing, if intelligence and context are increasingly server-side, the end-user device matters more for interface design, trust, and distribution than for heavyweight on-device computation.

He connects this to earlier platform eras where local processing and native apps created durable moats. In an AI-first stack, those moats can narrow as capability is accessed through APIs and agents, making product differentiation more dependent on workflow integration, identity, and relationship ownership.

For venture and platform strategy, the takeaway is practical: the most defensible layer may be the interface and distribution rail that captures user intent, not the model itself. That maps directly to this week’s theme of routing power and where value accrues in the next AI cycle. Read more: Thin Is In

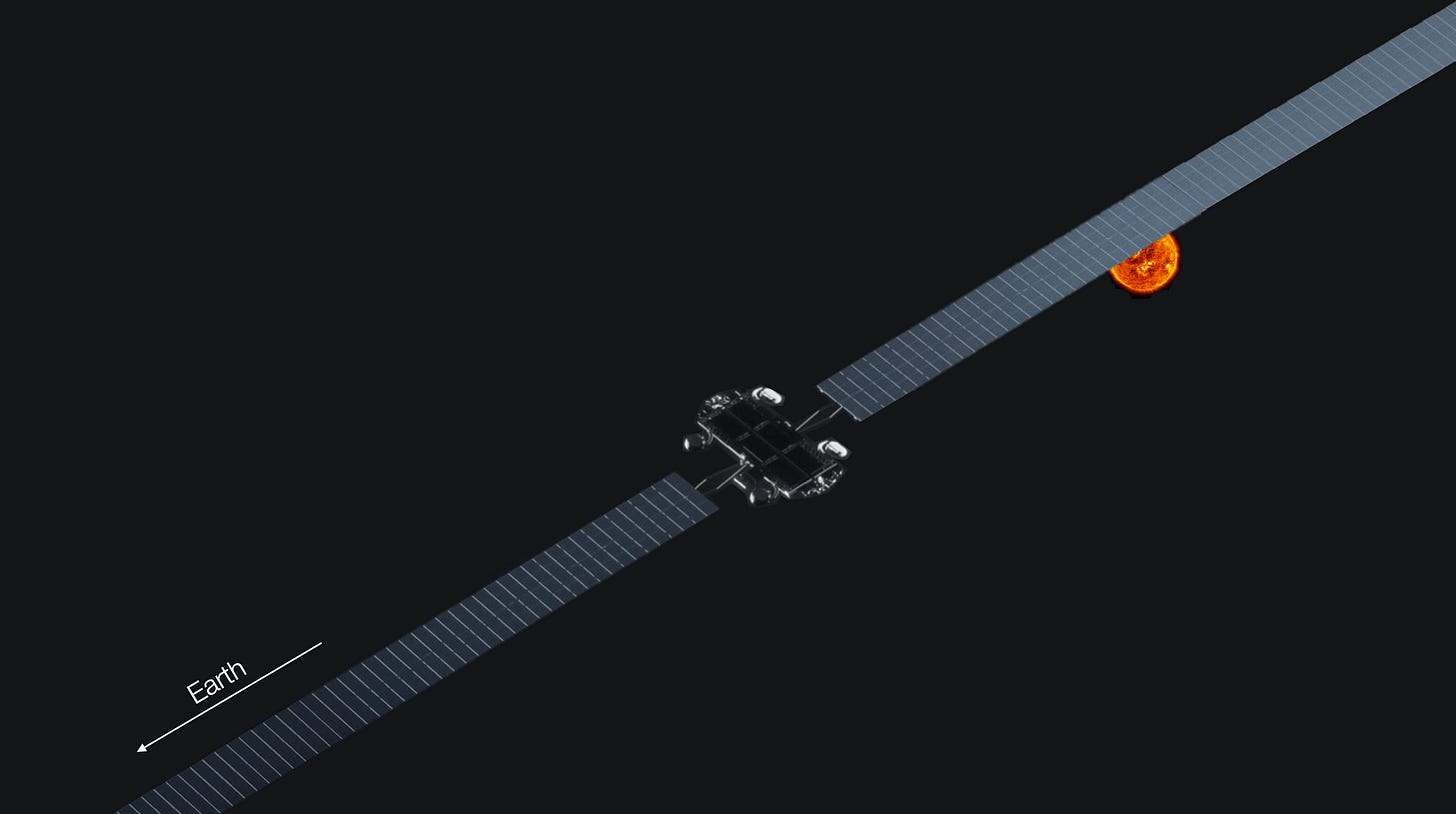

Can We Build AI in Space?

Author: Tomas Pueyo

Date: 2026-02-18

Publication: Uncharted Territories

Pueyo examines Elon Musk’s claim that AI growth will soon be constrained by terrestrial power, making space‑based data centers inevitable. He runs cost and physics estimates and concludes that orbital data centers are already within the same order of magnitude of cost as land‑based ones, and could plausibly become cheaper as launch costs fall.

The core case is about mass and energy. In orbit, solar panels can receive near‑continuous sunlight, increasing output dramatically and eliminating the need for heavy batteries. With the right orbit, panels can stay illuminated while avoiding Earth’s shadow, which reduces weight and improves energy efficiency.

He then works through engineering constraints: orbit selection, latency (still low enough for most AI workloads), lighter solar panels in microgravity, GPU weight versus launch cost, and the need for radiation shielding. The result is a technical roadmap suggesting space data centers are no longer science fiction, but a credible alternative for power‑hungry AI growth. Read more: Can We Build AI in Space?

Power in the Age of Intelligence

Author: Packy McCormick

Date: 2026-02-18

Publication: Not Boring

Packy McCormick argues that the traditional focus on software moats misses the real story of the AI era. As code and intelligence become abundant, the scarce asset is “high ground”—the complementary assets like distribution, manufacturing, and customer relationships that let firms capture value from technological progress.

He uses market anomalies like Stripe vs. Adyen and Ramp vs. Brex to argue that valuations reflect who is positioned to own this high ground, not who has the best product alone. The essay frames the era as a “winner takes more” dynamic, where companies that seize high ground can expand outward and dominate entire industries.

The takeaway is strategic: don’t obsess over protecting small castles; build or back companies that can capture the high ground in large markets and wield abundant inputs against weaker competitors. In this lens, AI doesn’t just change products—it restructures power and concentration across industries. Read more: Power in the Age of Intelligence

A Guide to Which AI to Use in the Agentic Era

Author: Ethan Mollick

Date: 2026-02-18

Publication: One Useful Thing

Ethan Mollick says “using AI” now means more than chatting with a bot. In the agentic era, you have to choose among models, apps, and harnesses—because the same model behaves very differently depending on the tooling wrapped around it.

He breaks down the big models (GPT‑5.2/5.3, Claude Opus 4.6, Gemini 3 Pro) and explains how apps and harnesses translate raw capability into real work. Claude Code and Claude Cowork exemplify harnesses that can take actions, plan, and execute multi‑step tasks, while NotebookLM focuses on research synthesis and knowledge management.

He also flags riskier tools like OpenClaw—powerful but insecure local agents—as signs of where the market is heading. His pragmatic advice: start with a top model, then experiment with specialized apps and harnesses on real tasks to learn where each tool excels. Read more: A Guide to Which AI to Use in the Agentic Era

Venture

Introducing Robinhood Ventures Fund I (RVI)

Author: Robinhood

Date: 2026-02-17

Publication: Robinhood Newsroom

Robinhood says RVI is expected to list on the NYSE in the coming weeks at an expected $25 per share, with IPO Access available in-app before trading begins. The fund is framed as a closed-end public vehicle for non-accredited investors to get exposure to private companies that are usually hard to access directly.

The initial portfolio disclosures include names like Databricks, Revolut, Mercor, Airwallex, Oura, Boom, and Ramp, with Stripe noted as a signed post-IPO purchase target. Structurally, Robinhood positions this as lower-friction than traditional venture vehicles: no accreditation minimums, no performance fee, and public-market liquidity after listing.

Fee design is part of the pitch. Robinhood discloses a 2.0% annual management fee, temporarily reduced to 1.0% for the first six months after IPO. The practical implication is that RVI may become the category’s first broad retail-distribution test: if it trades well, more venture wrappers are likely to follow quickly.

Read more: Introducing Robinhood Ventures Fund I (RVI)

Powerlaw to List on Nasdaq, Giving Investors Access to OpenAI and SpaceX

Author: Investing.com (reporting on filing details)

Date: 2026-02-17

Publication: Investing.com

Powerlaw Corp., part of Akkadian Ventures’ Powerlaw Capital Group, filed to list on the Nasdaq Global Market under the ticker PWRL. The fund specializes in buying private‑company shares from existing holders and has built a portfolio of AI and defense tech stakes, including OpenAI, Anthropic, SpaceX, and Anduril.

According to the filing, Powerlaw has more than $1.2 billion in assets under management and a portfolio of roughly $355 million at cost across 18 companies, with 99% of investments tied to private tech. The largest holdings include OpenAI, SpaceX, and xAI, and the filing notes SpaceX’s February 2026 acquisition of xAI.

The listing is structured as a resale of roughly 43 million shares by existing stockholders rather than a traditional underwritten IPO. Stifel will act as financial advisor and help determine the opening price, positioning the fund as a vehicle for retail access to late‑stage private tech exposure. Read more: Powerlaw to List on Nasdaq, Giving Investors Access to OpenAI and SpaceX

VCX by Fundrise

Author: Fundrise

Date: 2026-02 (page data as of 2026-01-31)

Publication: Fundrise

Fundrise’s Innovation Fund (VCX) is positioning itself as a public‑market vehicle for private‑tech exposure, with plans to list on the NYSE. The pitch emphasizes democratizing access to late‑stage tech growth that has increasingly stayed private and out of reach for individual investors.

The fund is described as multi‑stage, investing from early to late rounds and holding post‑IPO where relevant. It targets AI, data infrastructure, fintech, and software, with top holdings including Databricks, Anthropic, OpenAI, Anduril, Ramp, and SpaceX. As of January 31, 2026, the fund reports a $524M NAV and a 2.5% annual management fee, with the portfolio heavily weighted toward private holdings.

Fundrise provides a sector and holdings breakdown along with performance snapshots and historical return charts. The page frames VCX as a long‑term growth vehicle that blends venture exposure with public‑market liquidity once listing approval is complete. Read more: VCX by Fundrise

A Third, A Third, A Surprising Third

Tomasz Tunguz

Venture Capitalist at Theory

A decade ago, secondaries barely registered. They accounted for roughly 3% of exit value in 2015. Today they claim 31%: nearly $95b in the trailing twelve months.

The shift accelerated after 2021’s IPO bonanza. When public markets closed their doors in 2022, investors found alternative routes. Secondaries absorbed demand that would have flowed to traditional exits. When Goldman Sachs acquired Industry Ventures, the transaction signaled secondaries have arrived. Morgan Stanley followed with EquityZen, then Charles Schwab announced its acquisition of Forge Global. Wall Street recognized the structural change before most of venture did.

This matters for founders & investors. When IPOs dominated exits, fund models assumed a small number of public offerings would generate the bulk of returns.

Now liquidity arrives through multiple doors. A founder might sell secondary shares to patient capital while the company remains private. A GP might move positions through continuation vehicles. An LP might trade fund stakes on an increasingly liquid secondary market.

The 830 unicorns holding $3.9t in aggregate post-money valuation cannot all exit through IPOs. The math doesn’t work. At 2025’s pace of 48 VC-backed IPOs, clearing the unicorn backlog would take seventeen years. Secondaries provide a release valve that traditional exits cannot.
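The backlog arithmetic can be checked in a few lines. This is a back-of-envelope sketch using only the figures quoted above. It assumes, as simplifications of mine, that no new unicorns are created and that every VC-backed IPO removes exactly one unicorn from the queue.

```python
UNICORNS = 830                 # from the paragraph above
AGGREGATE_POST_MONEY = 3.9e12  # dollars, from the paragraph above
IPOS_PER_YEAR = 48             # 2025 pace of VC-backed IPOs, from above

# Assumption: a static backlog where each IPO clears one unicorn.
years_to_clear = UNICORNS / IPOS_PER_YEAR

# Simple mean, which hides how concentrated value is in the top names.
average_post_money = AGGREGATE_POST_MONEY / UNICORNS

print(f"Years to clear backlog: {years_to_clear:.1f}")
print(f"Average post-money valuation: ${average_post_money / 1e9:.1f}B")
```

The result lands a little above seventeen years, consistent with the figure in the text, with an average post-money valuation a little under $5 billion per company.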

Companies like OpenAI have embraced this reality, running employee tender offers while voiding unauthorized secondary transfers. The largest private companies now manage their own liquidity programs rather than waiting for public markets.

Today, secondary liquidity concentrates in the top 20 names. SpaceX, Stripe, OpenAI. For the founder of company #50, the secondary market remains largely theoretical. For secondaries to succeed as a broad asset class, buyers must underwrite positions in companies without household recognition. As the market grows, this coverage gap becomes opportunity…

India Doubles Down on State-Backed Venture Capital, Approving $1.1B Fund

Author: TechCrunch Staff

Date: 2026-02-14

Publication: TechCrunch

India has approved a ₹100 billion (~$1.1B) state‑backed venture capital program that will invest through private funds, focusing on deep tech and advanced manufacturing. The structure is a fund‑of‑funds designed to steer capital into high‑risk sectors that need long time horizons and heavier investment.

The approval follows a January 2025 budget announcement and builds on a 2016 program that committed ₹100 billion to 145 funds, which then invested more than ₹255 billion in 1,370+ startups. The new initiative aims to broaden early‑stage support, extend beyond major cities, and strengthen smaller domestic VC firms.

The decision arrives amid policy changes to make India more startup‑friendly—extending the “startup” designation to 20 years and raising revenue thresholds for benefits—while private funding has cooled. Officials highlight rapid growth in the startup base and position the fund as a strategic move ahead of major AI and tech summits. Read more: India Doubles Down on State-Backed Venture Capital, Approving $1.1B Fund

OpenClaw & The Acqui-Hire That Explains Where AI Is Going

Author: Jeff Becker

Date: 2026-02-16

Publication: Monday Morning Meeting

Jeff Becker explains that OpenAI didn’t acquire OpenClaw; it hired Peter Steinberger, the solo developer behind the viral open‑source agent previously known as Clawdbot/Moltbot. Steinberger’s backstory includes building PSPDFKit, selling shares in 2021, and then creating a WhatsApp‑style AI agent that exploded on GitHub.

The project’s surge brought complications: a trademark complaint from Anthropic over “Clawd,” a brief account hijack that spawned a scam token, and a series of renames before landing on OpenClaw. Steinberger ultimately joined OpenAI, reportedly on the condition that the project remain open‑source under an independent foundation.

Becker’s core argument is that the real AI battleground is the agent layer. OpenAI needed agent expertise, Anthropic inadvertently pushed a key developer toward a rival, and the hire signals how valuable simple, obsessive, consumer‑grade agent software has become in the race for the next platform layer. Read more: OpenClaw & The Acqui-Hire That Explains Where AI Is Going

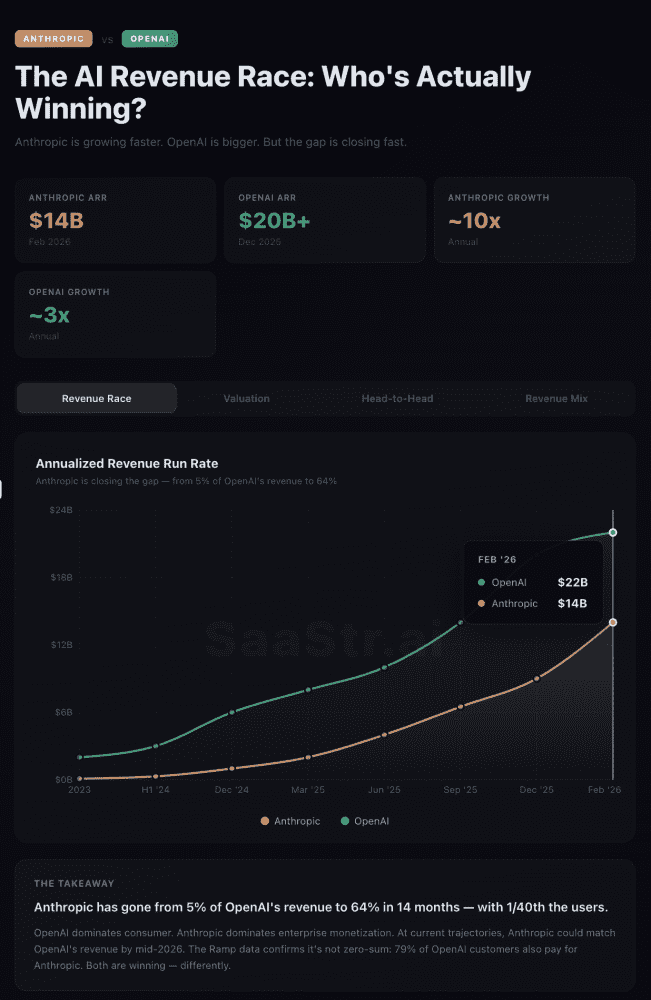

Anthropic Just Hit $14 Billion in ARR — Up From $1 Billion Just 14 Months Ago

Author: Jason Lemkin

Date: 2026-02-15

Publication: SaaStr

SaaStr reports that Anthropic closed a $30B Series G at a $380B post‑money valuation and disclosed $14B in annualized revenue. The post frames this as the fastest‑scaling B2B software trajectory on record, leaping from roughly $1B ARR in late 2024 to $14B in February 2026.

It lays out the growth curve—$4B mid‑2025, $9–10B by year‑end—and argues there’s no historical peer for the pace. The write‑up highlights Claude Code as a category driver, claiming $2.5B ARR only nine months after launch and rapid expansion in enterprise subscriptions.
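For a sense of scale, the endpoints reported above imply a compound monthly growth rate that is easy to compute. This sketch uses only the $1B and $14B figures and the 14-month span from the headline. It assumes smooth compounding between the two points, which is my simplification and which the reported intermediate milestones will not match exactly.

```python
def implied_monthly_growth(start_arr: float, end_arr: float, months: int) -> float:
    """Constant monthly growth rate that takes start_arr to end_arr."""
    return (end_arr / start_arr) ** (1.0 / months) - 1.0


START_ARR_B = 1.0   # roughly $1B ARR in late 2024 (from the summary)
END_ARR_B = 14.0    # $14B ARR in February 2026 (from the summary)
MONTHS = 14         # span stated in the article headline

rate = implied_monthly_growth(START_ARR_B, END_ARR_B, MONTHS)
print(f"Implied compound monthly growth: {rate:.1%}")
```

That works out to a little over 20% per month, sustained for more than a year.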

Customer metrics underscore enterprise adoption, with large‑spend accounts rising sharply. The piece concludes that Anthropic is no longer a “promising startup” but a hyperscale platform reshaping enterprise software economics. Read more: Anthropic Just Hit $14 Billion in ARR — Up From $1 Billion Just 14 Months Ago

The AI Acqui-Hire Wave

Author: Tomasz Tunguz

Date: 2026-02-14

Publication: Tomasz Tunguz

Tomasz Tunguz analyzes a surge in AI acqui‑hires, noting 5,700 AI/ML acquisitions from 2020–2025 with only 21% disclosing deal value. The majority are small team buyouts—talent moves without the headline‑grabbing valuations of the biggest exits.

He shows that the most active acquirers are not always the usual suspects: Accenture tops the list, Apple follows, while Google and Microsoft rank lower. The data suggest incumbents are buying execution capacity and speed rather than just technology.

Deal sizes at the upper end are growing—the 75th percentile rose from $82M in 2020 to $248M in 2025—but the undisclosed majority likely falls below that range. The conclusion is that AI talent acquisition has become the dominant, quieter form of exit. Read more: The AI Acqui-Hire Wave

Will I Be Paid in Tokens?

Author: Tomasz Tunguz

Date: 2026-02-17

Publication: Tomasz Tunguz

Tunguz describes how his personal AI inference spending ballooned from a few hundred dollars a month to a $100K annualized run rate once he layered on multiple agents and automated daily workflows. He then migrated to an open‑source model and, using historical task data to test parity, cut costs to about 12% of prior spend.

He argues that inference costs are becoming a fourth component of engineering compensation alongside salary, bonus, and equity. Using Levels.fyi benchmarks, he estimates that $100K in annual inference can add more than 20% to fully loaded engineer costs.
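The numbers in this summary can be cross-checked with simple arithmetic. The sketch below uses only the $100K run rate, the "more than 20%" uplift, and the 12% post-migration cost ratio quoted above. The implied ceiling on fully loaded cost is my own inference from those figures, not a number from the article.

```python
INFERENCE_SPEND = 100_000       # annualized run rate, from the summary
MIN_UPLIFT = 0.20               # "more than 20%" of fully loaded cost
OPEN_SOURCE_COST_RATIO = 0.12   # spend after migration, as share of prior


def uplift(inference_spend: float, loaded_cost: float) -> float:
    """Inference spend as a fraction of fully loaded engineer cost."""
    return inference_spend / loaded_cost


# If $100K adds more than 20%, the loaded cost must be below this ceiling.
max_loaded_cost = INFERENCE_SPEND / MIN_UPLIFT

# Spend after moving to the open-source model.
post_migration_spend = INFERENCE_SPEND * OPEN_SOURCE_COST_RATIO

print(f"Implied loaded-cost ceiling: ${max_loaded_cost:,.0f}")
print(f"Post-migration inference spend: ${post_migration_spend:,.0f}")
print(f"Post-migration uplift at that ceiling: "
      f"{uplift(post_migration_spend, max_loaded_cost):.1%}")
```

At the implied ceiling, the migrated spend would add only a low single-digit percentage to fully loaded cost, which shows how sensitive the "fourth component" is to model choice.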

The implication is a new CFO question: what productivity are you getting per inference dollar? He suggests that teams will increasingly track “productive work per dollar of inference” and that token budgets will become part of performance and compensation conversations. Read more: Will I Be Paid in Tokens?

Crunchbase Data: The AI Boom Has Drastically Changed Who’s Funding The Hottest Companies In 2025 Vs. 2021

Author: Gené Teare

Date: 2026-02-19

Publication: Crunchbase News

Gené Teare compares who backed the hottest companies in the 2021 funding peak versus 2025 and shows that the AI cycle has significantly reordered the investor set. The analysis highlights how concentration has increased around firms with the balance sheets and distribution to keep participating in mega-rounds.

This data point is useful for this week’s PVC framing because it quantifies a capital-formation power shift. If the investor base around top AI companies is narrowing, public venture wrappers will likely compete less on “access” and more on entry quality, concentration risk, and fee structure.

Teare’s side-by-side lens on 2021 vs. 2025 helps separate cyclical enthusiasm from structural change. It supports the editorial claim that this phase of venture is increasingly defined by who controls durable access to late-stage AI exposure. Read more: Crunchbase Data: The AI Boom Has Drastically Changed Who’s Funding The Hottest Companies In 2025 Vs. 2021

AI

After Spooking Hollywood, ByteDance Will Tweak Safeguards on Seedance 2.0

Author: Jess Weatherbed

Date: 2026-02-16

Publication: The Verge

ByteDance says it will strengthen safeguards on its Seedance 2.0 AI video model after Disney, Paramount, and Hollywood trade groups accused it of widespread copyright infringement. Viral clips featuring famous actors and characters sparked the complaints and a wave of cease‑and‑desist letters.

The Verge reports that Disney alleged the model was creating and distributing derivative works of protected characters, while Paramount demanded removal of infringing material. Industry groups like the MPA and SAG‑AFTRA also condemned the tool, saying it undermines consent and livelihoods.

ByteDance responded that it respects intellectual property and is taking steps to prevent unauthorized use of likenesses and IP. The episode highlights rising pressure on generative‑video providers to implement meaningful guardrails as legal scrutiny intensifies. Read more: After Spooking Hollywood, ByteDance Will Tweak Safeguards on Seedance 2.0

DeepSeek 2: The Movie

Author: M.G. Siegler

Date: 2026-02-16

Publication: Spyglass

M.G. Siegler frames Seedance 2.0 as another “watershed” moment for AI video, akin to the hype cycle around DeepSeek, and notes the outsized panic coming from Hollywood. He argues the model’s ability to recreate cinematic scenes with minimal prompts explains the frenzy, but he’s skeptical that this means the end of filmmaking.

He emphasizes the legal gray zone: apparent training on copyrighted footage, explicit infringement in remixed scenes, and an inevitability of lawsuits and takedown demands. ByteDance’s willingness to comply with removal requests suggests the model will be constrained, much like earlier video generators that became less viral after guardrails tightened.

His broader take is that recognizable IP drives virality; without Hollywood talent, the content becomes far less shareable. The longer‑term shift is a broadened talent pool and new creator pathways, not the wholesale collapse of the industry. Read more: DeepSeek 2: The Movie

Claude Opus 4.6 vs GPT-5.3 Codex: Which AI Coding Model Should You Use?

Author: Nilesh Barla

Date: 2026-02-14

Publication: Adaline Labs

Adaline Labs frames Claude Opus 4.6 and GPT‑5.3 Codex as complementary tools rather than direct rivals. Opus is cast as the senior architect, strong at planning, deep context, and repo‑wide refactors, while Codex is positioned as the fast, terminal‑driven implementer that iterates quickly and reliably.

The essay highlights a practical workflow: use Opus to design and reason about architecture, switch to Codex for execution, bug fixes, and test writing, then return to Opus for audit and coherence. It also discusses tradeoffs between long‑context reasoning and retrieval‑based prompts, plus the cost realities that influence which model you default to.

The outcome is a routing guide for real PR work: match the model to the task’s scope and risk instead of debating benchmarks. The piece treats the simultaneous release of Opus 4.6 and GPT‑5.3 Codex as a signal that tooling choices now matter as much as raw capability. Read more: Claude Opus 4.6 vs GPT-5.3 Codex: Which AI Coding Model Should You Use?

“Automate the Entire Company”

Author: Nikhil Basu Trivedi

Date: 2026-02-16

Publication: Next Big Thing

Nikhil Basu Trivedi recounts a portfolio company’s 2026 goal: “automate the entire company.” The vision is that if the team goes on vacation, engineering, sales, growth, and finance workflows still run through agents—writing code, prioritizing leads, running experiments, and handling payments without human involvement.

He argues this was unthinkable a few months ago but now feels realistic due to rapid advances in agentic systems. He points to examples from WindBorne, where agents monitor training runs and coordinate work in chat, and cites other companies experimenting with Slack‑to‑agent workflows that move people from execution to supervision.

The broader claim is that we’re moving toward companies where the default is directing AI rather than doing the work directly. As automation spreads across functions, human taste and decision‑making become the scarce inputs, while agentic infrastructure becomes a competitive advantage. Read more: “Automate the Entire Company”

Regulation

Microsoft Copilot Bug Exposed Confidential Emails; EU Parliament Blocks AI on Lawmakers’ Devices

Author: TechCrunch Staff

Date: 2026-02-17 / 2026-02-18

Publication: TechCrunch

Microsoft confirmed that a bug allowed Copilot Chat to summarize confidential emails for weeks, even when customers had data‑loss‑prevention rules in place. The issue, first reported by BleepingComputer, meant emails labeled confidential were still processed by Copilot in Microsoft 365.

Admins could track the incident (CW1226324), and Microsoft began rolling out a fix earlier in February. The company declined to say how many customers were affected, but acknowledged the bug could expose sensitive internal communications to the AI system.

The disclosure landed amid rising governance concerns: the European Parliament’s IT department separately blocked built‑in AI features on lawmakers’ devices out of fear that confidential correspondence could be uploaded. Together, the events highlight how AI features are colliding with enterprise security policies. Read more: Microsoft Copilot Bug

Anthropic and the Pentagon Are Reportedly Arguing Over Claude Usage

Author: Anthony Ha

Date: 2026-02-15

Publication: TechCrunch / Axios

TechCrunch reports that the Pentagon is pressing AI companies to allow military use for “all lawful purposes” and that Anthropic has resisted the broadest permissions. Axios says the same demand has been made to OpenAI, Google, and xAI, with firms showing varying degrees of flexibility.

Anthropic is described as the most resistant, and the Defense Department is reportedly threatening to cancel a $200M contract if usage terms remain restrictive. The dispute follows earlier reporting that Claude had been used in a U.S. military operation, intensifying scrutiny of how the model is deployed.

Anthropic’s position is that it maintains hard limits around fully autonomous weapons and mass domestic surveillance. The episode turns safety policies into procurement leverage, showing how government contracts are now stress‑testing AI governance commitments. Read more: Anthropic and the Pentagon Are Reportedly Arguing Over Claude Usage

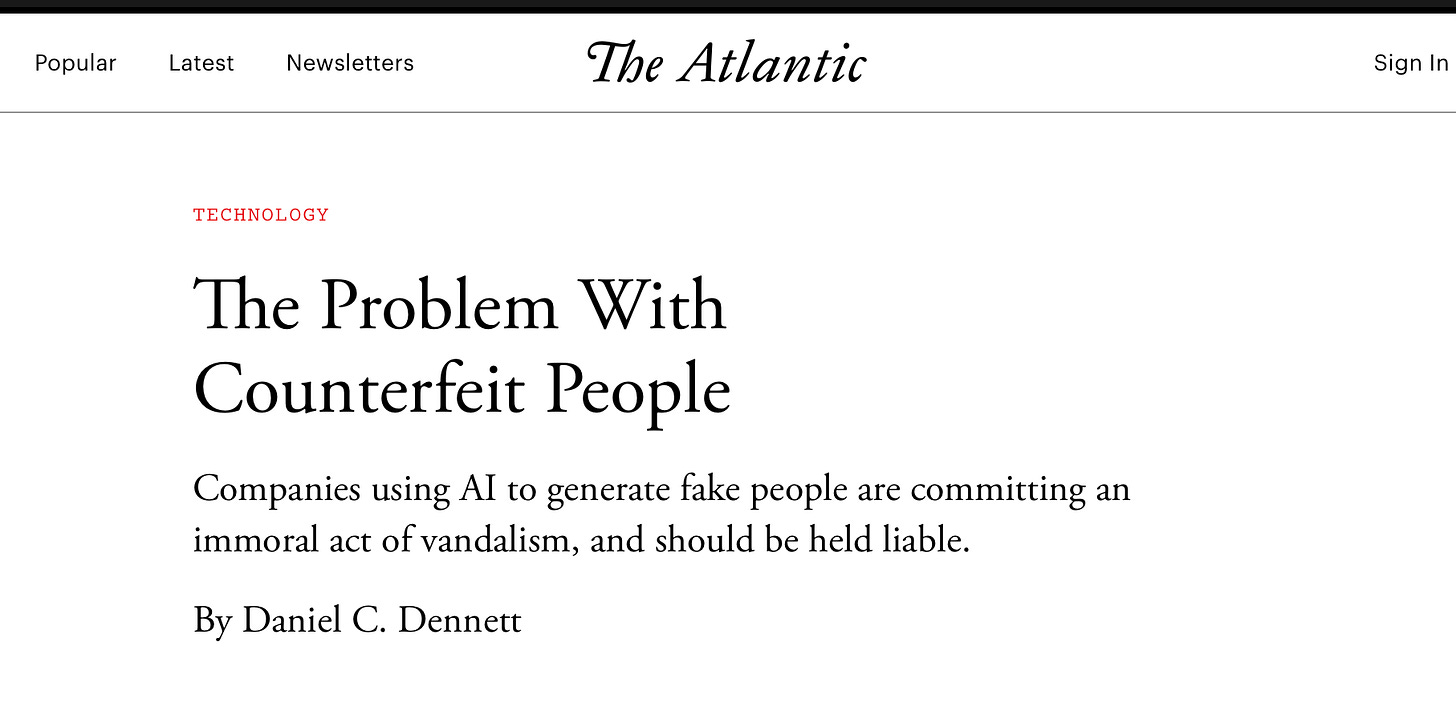

We URGENTLY Need a Federal Law Forbidding AI from Impersonating Humans

Author: Gary Marcus

Date: 2026-02-14

Publication: Marcus on AI

Gary Marcus argues that AI impersonation has crossed a threshold and now requires federal legislation. He invokes Daniel Dennett’s “counterfeit people” warning and says deepfake video and voice tools have become cheap, realistic, and dangerously scalable.

He cites real‑world scams—like a deepfaked video that allegedly cost a victim hundreds of thousands of dollars—and notes emerging capabilities that let agents place calls while posing as humans. In his view, 2026 could see more impersonation fraud than all prior years combined.

Marcus calls for a law forbidding machine output from being presented as human, banning non‑consensual cloning of voices and likenesses, and allowing only narrow parody exceptions. He warns against corporate lobbying that could weaken enforcement and insists the window for action is now. Read more: We URGENTLY Need a Federal Law Forbidding AI from Impersonating Humans

Longtime NPR Host David Greene Sues Google Over NotebookLM Voice

Author: Anthony Ha

Date: 2026-02-15

Publication: TechCrunch

David Greene, longtime host of NPR’s “Morning Edition,” is suing Google, claiming NotebookLM’s male podcast voice is based on his cadence and vocal mannerisms. He says friends and colleagues alerted him to the resemblance, which convinced him the AI voice appropriated his identity.

The suit targets Google’s NotebookLM feature that generates AI‑hosted podcasts from documents. Greene argues that his voice is central to his professional identity and that the tool’s similarities amount to unauthorized use of his likeness.

Google denies the claim, saying the voice is based on a paid professional actor. The dispute echoes the 2024 Scarlett Johansson incident and could shape how right‑of‑publicity claims apply to AI‑generated voices. Read more: Longtime NPR Host David Greene Sues Google Over NotebookLM Voice

Politics

Trump’s AI Push Fuels Revolt in MAGA Heartlands

Author: Joe Miller

Date: 2026-02-18

Publication: Financial Times

The Financial Times reports that communities in Republican-leaning regions are organizing against the AI datacenter buildouts required by the administration’s own pro-AI agenda. Local opposition focuses on power prices, water usage, and the sense that national AI policy is imposing costs on small towns while enriching distant tech firms.

The backlash is turning infrastructure siting into a political wedge. As AI power demand rises, the article frames a conflict between national strategy and local consent that could shape U.S. AI deployment timelines as much as GPU supply.

Read more: Trump’s AI Push Fuels Revolt in MAGA Heartlands

Labor

The Great Computer Science Exodus (And Where Students Are Going Instead)

Author: TechCrunch Staff

Date: 2026-02-15

Publication: TechCrunch

TechCrunch reports that UC system computer science enrollment fell again, even as overall college enrollment rose nationwide. It’s the first sustained decline since the dot‑com era, and it has sparked concern that students are abandoning traditional CS paths.

The article argues the shift is less an exodus than a migration toward AI‑focused degrees. Schools like UC San Diego have launched AI majors, MIT’s AI and decision‑making program has become one of its largest, and a wave of universities is creating new AI colleges and departments.

The piece contrasts U.S. hesitancy with China’s aggressive AI‑literacy push and notes internal campus tensions as faculty debate how quickly to adapt curricula. The takeaway: students still want tech careers, but they are re‑routing into AI‑native programs that feel more future‑proof. Read more: The Great Computer Science Exodus (And Where Students Are Going Instead)

Interview of the Week

Books are Dying Again

Author: Andrew Keen

Date: 2026-02-19

Publication: Keen On America (Episode 2804)

Startup of the Week

Emergent — India’s Vibe-Coding Platform Hits $100M ARR in 8 Months

Author: Jagmeet Singh, Ivan Mehta

Date: 2026-02-17

Publication: TechCrunch

Founded: 2025 | HQ: San Francisco / Bengaluru | Valuation: $300M (SoftBank, Khosla)

Emergent, an India‑founded vibe‑coding platform, says it reached $100M in annual run‑rate revenue just eight months after launch. The company claims 6M users across 190 countries, 150K paying customers, and more than 7M apps built on the platform.

TechCrunch reports that roughly 70% of users have no prior coding experience, with small businesses using the tool for CRMs, ERP systems, and logistics workflows. Most new projects are mobile‑first, prompting Emergent’s rollout of iOS and Android apps that let users build and publish directly from phones.

The startup monetizes through subscriptions, usage fees, and deployment/hosting, and says gross margins are improving. It is testing an enterprise offering and recently raised $70M at a $300M valuation, positioning itself against Replit, Lovable, and other AI‑native app builders. Read more: Emergent hits $100M ARR

Post of the Week

Mapping the Platforms Democratizing Access to Venture Capital

Author: SignalRank

Date: 2026-02-17

Publication: X / SignalRank

Rob Hodgkinson mapped the platforms now pitching retail access to private venture portfolios, comparing RVI, DXYZ, Powerlaw, VCX, and SignalRank’s own INDX across stage focus, portfolio construction, and fee structure. The post lands right as multiple vehicles move toward public listings, making the category comparison unusually timely.

It’s a compact snapshot of the new PVC landscape, and it pairs with this week’s editorial on how public wrappers can either expand venture formation or repackage late-stage risk.

Read more: Mapping the Platforms Democratizing Access to Venture Capital

A reminder for new readers: each week, That Was The Week includes a collection of selected essays on critical issues in tech, startups, and venture capital.

I choose the articles based on their interest to me. The selections often include viewpoints I can't entirely agree with. I include them if they make me think or add to my knowledge. Click on the headline, the contents section link, or the ‘Read More’ link at the bottom of each piece to go to the original.

I express my point of view in the editorial and the weekly video.