Contents

Essay

Venture

The Structural Transformation: What the Six Patterns of AI VC Funding Really Mean

Investor Concentration Risk: How AI Venture Became a Single Trade

Deutsche Börse launches €5.3bn bid for private equity-backed Allfunds

The gap between top quartile and bottom quartile venture funds was over 40%

Startup Funding Continued On A Tear In November As Megarounds Hit 3-Year High

State of European Tech report | Sarah Guemouri & Tom Wehmeier (Atomico)

Series A rounds continue to dominate the market… but Series A funds themselves are fading fast.

I’m here to give the small group of you who actually care about decision science, power-law math

AI

Media

Regulation

Crypto

Interview of the Week

Startup of the Week

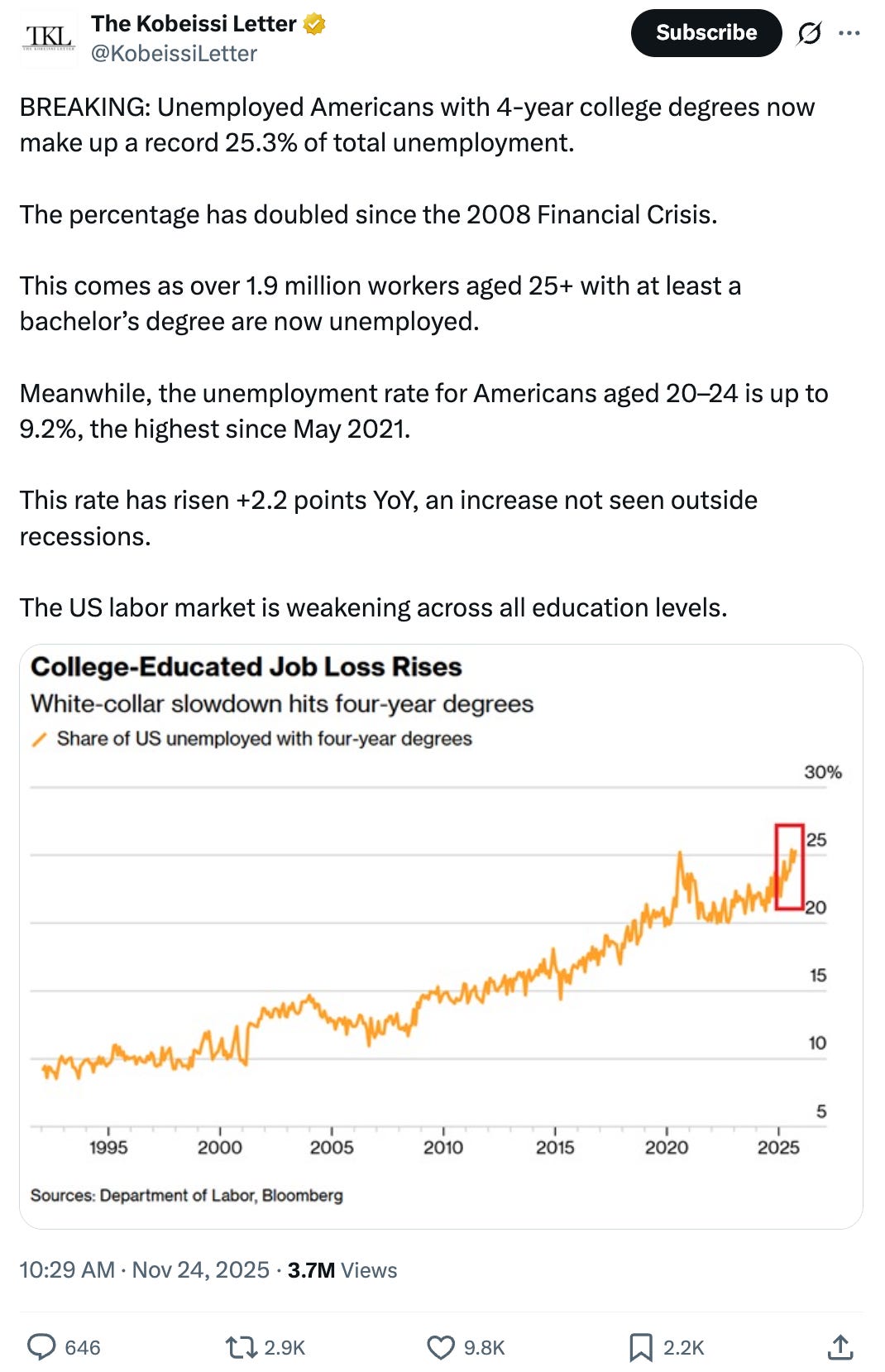

Post of the Week

Editorial:

Winner Takes it All? Or The Great Compression: What is Happening?

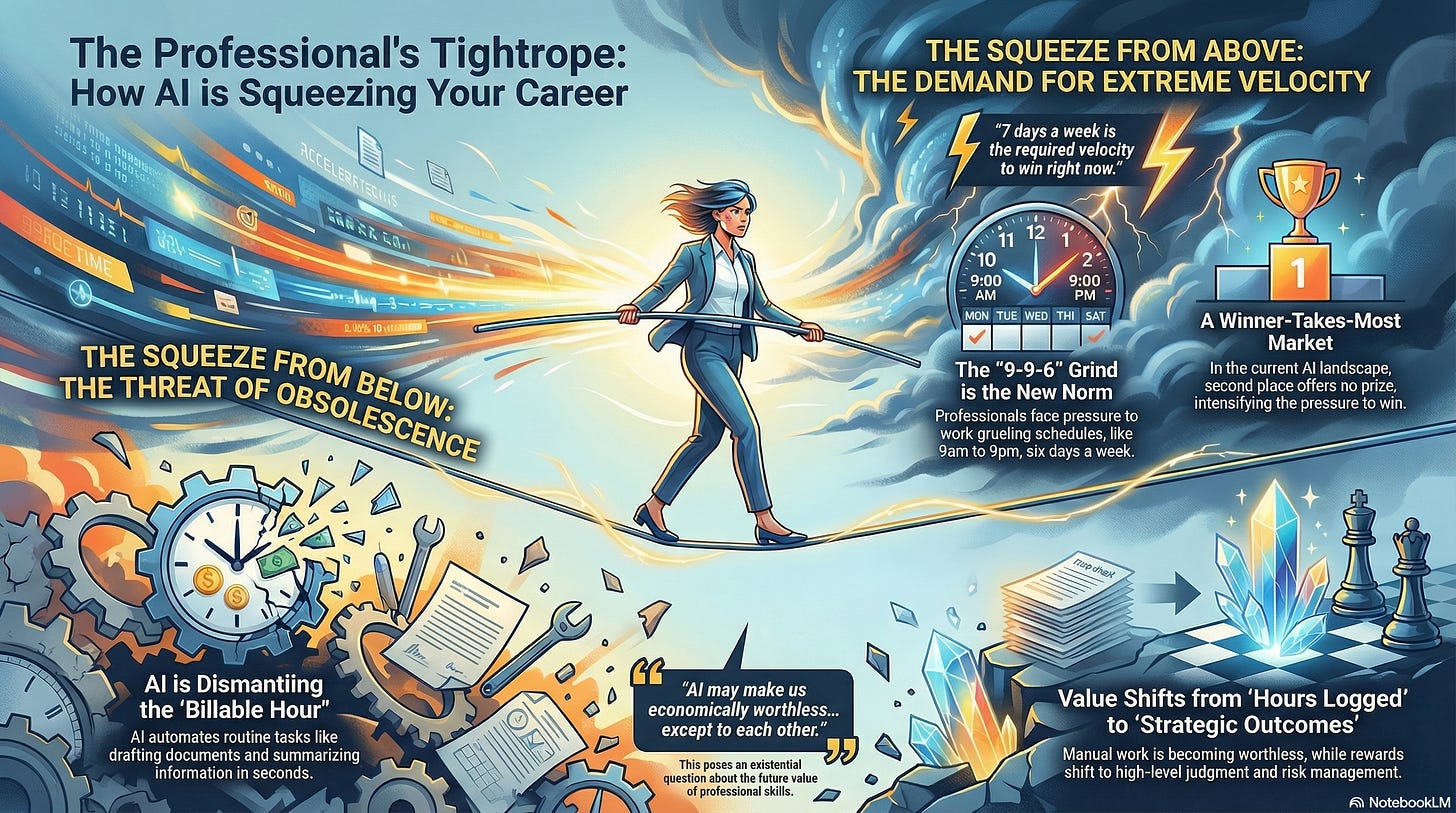

I’m adding Google NotebookLM infographics to the editorial this week. Let me know if you love, hate or don’t care about them.

The daily financial news presents a picture of chaos. We see “$2 billion ‘seed’ rounds” that defy historical logic, massive industry consolidations, and unprecedented investment strategies. While these events seem disconnected and irrational, they are symptoms of a single, unifying force at work: The Great Compression. This phenomenon is collapsing the distance between venture capital stages, career timelines, and the very platforms we use to access information.

This compression isn’t a sign of a speculative bubble. It is the system’s rational response to a new “Winner Takes Most” innovation curve, driven by the immense capital intensity of artificial intelligence. In a landscape where the first to achieve scale captures nearly all the value, the entire economic system is frantically squeezing itself into a handful of high-stakes bets simply to ensure its own survival.

--------------------------------------------------------------------------------

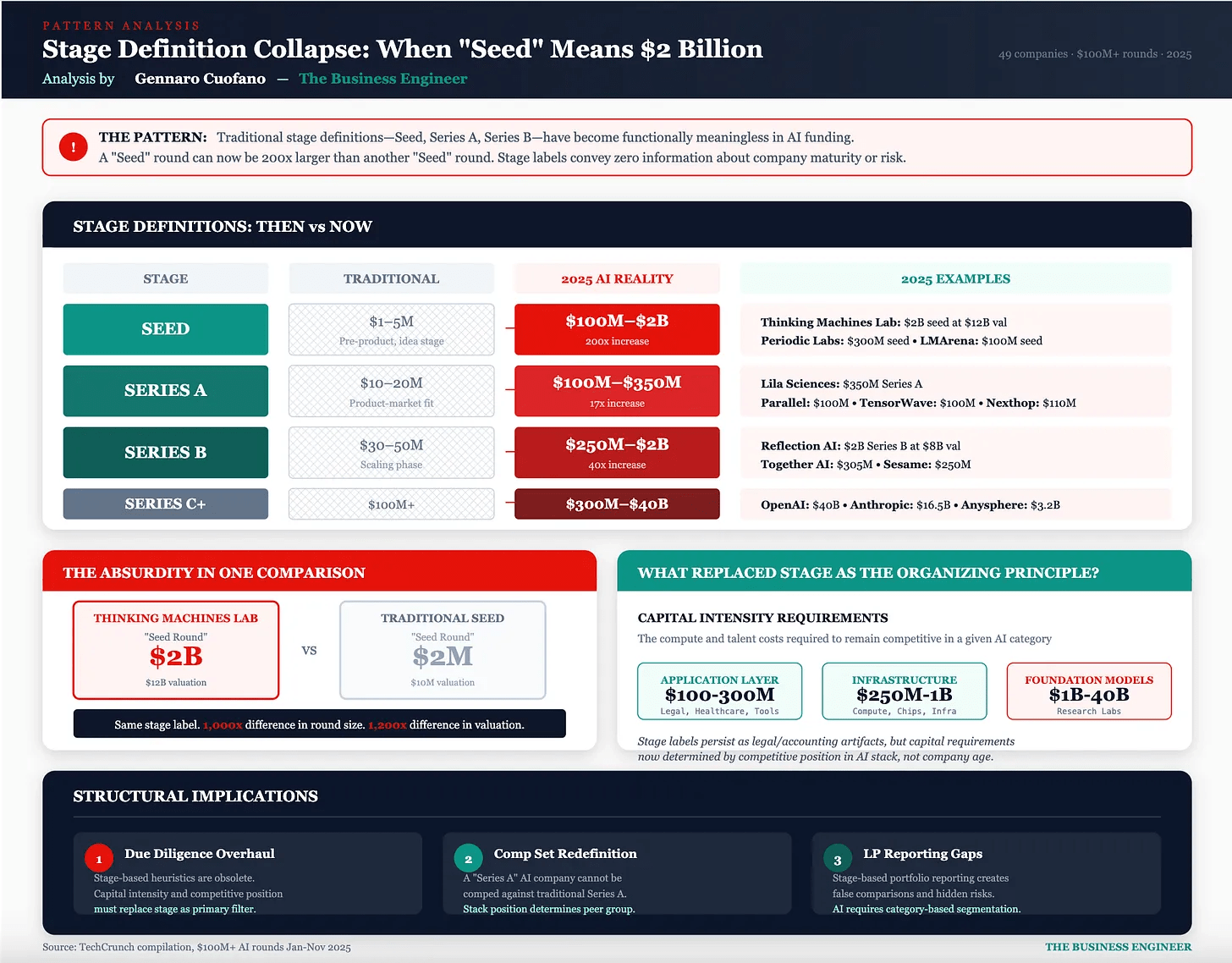

1. Venture Capital Is No Longer Venture Capital

The most visible sign of this new reality is the effective destruction of traditional venture capital stages. Labels like Seed, Series A, and Series B have been “rendered meaningless” by the sheer capital required to compete in AI. In the past, software companies could grow incrementally, raising capital as they hit milestones. AI, however, has shifted the field to an “industrial model” that demands massive infrastructure investment long before a product finds its market.

This industrial logic justifies the “1,000x gap in check size” and the emergence of the very “$2 billion ‘seed’ rounds” that signal this new era. Capital is being forced into a “barbell distribution,” clustering around a few massive, category-defining bets. This is why elite firms like Sequoia, Nvidia, and a16z are repeatedly co-investing in the same mega-rounds. They are not diversifying; they are building a “de facto AI index.” The cost of missing the single winning AI platform is existential, so investors are compelled to consolidate their bets into a “highly unified, synchronized capital stack.” For them, spreading capital too thin in a “Winner Takes Most” market is a guarantee of failure.

--------------------------------------------------------------------------------

2. Your Career Is Becoming a High-Stakes Tightrope

This dramatic compression of capital isn’t just reshaping investment portfolios; it’s creating a parallel squeeze on the human capital within the professional labor market. AI is rapidly dismantling the “billable hour” by automating the routine tasks that once justified it, like drafting documents or summarizing information in seconds. This is creating a sharp bifurcation in the value of professional work. Manual throughput is becoming worthless, while the rewards shift entirely to high-level strategic contributions like “judgment, risk, and outcomes.”

This shift explains the paradoxical and intense work culture emerging in the technology sector, where founders and VCs are demanding “9am–9pm, six-day weeks.” The pressure is immense, as one observer noted:

“7 days a week is the required velocity to win right now”

This culture isn’t arbitrary; it’s a direct consequence of the market dynamics. In a race where second place offers no consolation prize, professionals are squeezed between the demand for grueling velocity and the looming threat of their skills becoming economically obsolete. The career ladder is compressing into a high-stakes tightrope.

--------------------------------------------------------------------------------

3. The Fight for the ‘Front Door to Reality’

The same forces compressing capital and careers are fueling a final, decisive battle: the fight to control the “front door to reality.” We are witnessing a massive consolidation of the interfaces we use to discover information, shifting from a web of open search results to singular, definitive AI answers. The trend is already underway, with data showing a “4,700% year-over-year increase in retail visits driven by AI assistants” alongside a significant drop in SEO click-through rates.

The power at stake in this consolidation is immense, leading to a desperate race for control. The implications are profound:

“If the new shelf space is inside ChatGPT’s answer box, then whoever defines ‘trust, relevance, and extractability’ controls what America buys.”

There is little room for competition here. The very nature of an AI agent is to be a singular, trusted intermediary. This dynamic necessitates a “Winner Takes Most” outcome. The platform that successfully becomes the default choice for users will control the “very infrastructure of choice and consent,” creating the ultimate monopoly on information and commerce.

--------------------------------------------------------------------------------

Conclusion: The System’s Single, High-Stakes Gamble

The Great Compression is the economic system’s logical adaptation to a “Winner Takes Most” reality. Venture capital has collapsed into a single, correlated bet on AI because the industrial scale of the technology requires it. Professional careers are being squeezed because only high-level judgment retains value in an automated world. And digital platforms are consolidating because the interface that wins the user’s trust wins everything.

While this concentration of resources may be a rational response, it also concentrates risk. By linking retirement savings and our collective economic stability to a “handful of highly correlated, high-stakes trades,” we are betting our collective future that the winners of this curve will be benevolent—and that the system can survive the compression required to crown them. Of course my day job at SignalRank is building a highly diversified derisked index of private assets. Maybe there is a way to have your cake and eat it :-)

Essay

The rise of agentic journalism

Niemanlab • December 4, 2025

Media•Journalism•AgenticJournalism•AIAgents•NewsInnovation•Essay

In 2026, a new type of journalism will emerge: one tailored explicitly to machine compilers of language and information. This journalism will not be directed at people, but rather at chatbots and AI information summarizers. A journalism for the “agentic web”: a web populated by automated agents that serve us, retrieving information, sharing our data, making our appointments, answering our emails. The agentic journalism.

Agentic journalism would break from our traditional article format. AI systems do not need ledes, nut-graphs, or narrative flows; they need user-relevant, novel, and machine-readable content. Maybe the format for agentic journalism will be a bulleted list or a JSON file — whatever it takes for that machine to ingest and reformat the content.

The role of the journalist in agentic journalism would be to add information about an event: the five Ws, quotes, context, and links to multimedia content. The writing itself, that fun exercise of putting together the puzzle pieces into a cogent reportage, wouldn’t even need to be automated by the news organization. It would be automated at the destination, pieced together by whatever format the end-user can extract from the machine they are using. In this type of journalism, editors focus on the accuracy and machine-readability of the information supplied by the reporter. The role of copy-editing (which we are already offloading to machine-assisted systems) would be even more diminished.

You might ask: What does this guy think about agentic journalism? Is he pitching it or warning us against it? Well, as a good academic in the social sciences, I’m not here to provide you with clear-cut answers. I’m here to, frustratingly, give you more questions. My prediction will stick to the historical perspectives and the techno-social forces that are in play.

Technology has always reshaped how journalism is produced, distributed, and consumed. The telegraph enabled the Associated Press; radio and television centralized news around financial powerhouses (state-backed or tightly regulated entities); the web offered unprecedented reach, and with it, the pressure of immediate audience feedback. With the rise of search engines and social media, journalists have written less for readers and more for algorithmic intermediaries: SEO-friendly content that is clearer, but less creative, and news articles planned according to their potential social media reach. The great pivot to video happened not because we found out our public preferred it, but because multimedia content was more attractive to digital platforms. These pressures are not just the audience making choices; it’s computers making choices for us. Journalism, then, adapts to these external machine editors.

Now, audiences are increasingly using AI-based products to get information, both about their individual and public lives. Some see AI tools as more approachable, less biased, and more tailored to their preferences. For some people, this will lead to an increase in their exposure to news content. Publics who are tuned out of the news may find, in these novel and personalized ways of encountering this type of content, a newfound utility for journalism. For news organizations, the shift to agentic journalism could mean a new way to monetize content and add value to their brand, by attracting attention and value to their output. To that end, journalists might start packaging stories with structured metadata (clear entity tags, event timestamps, source links, and standardized schemas) to make content legible to AI crawlers. The newsroom’s new craft could be less about prose and more about indexability.

Will private capital create a crisis in 401ks?

Ft • November 27, 2025

Essay•Venture

Overview

The article highlights mounting concerns about the growing role of private capital in US retirement savings, particularly how private equity and similar alternative investments may increasingly be embedded in 401(k) plans. It raises the possibility that the search for higher returns in a low-yield environment, combined with aggressive marketing by private equity managers, could introduce new risks into the core savings vehicle for American workers. Alongside this, it notes that the same private capital ecosystem is fuelling enormous borrowing to fund artificial intelligence–driven data centre expansion, with OpenAI’s partners collectively nearing $100bn in related debt. The piece links these two themes as examples of how private-market leverage and complex financial structures are spreading into areas that touch ordinary savers and the broader economy.

Private Capital’s Push into Retirement Plans

The article discusses how private equity firms have been lobbying plan sponsors, regulators, and asset allocators to allow a greater share of 401(k) assets to flow into illiquid private vehicles.

Proponents argue that private markets can deliver higher long-term returns and diversification versus traditional public equities and bonds, especially in an era of more volatile public markets and pressure on conventional 60/40 portfolios.

Critics, however, worry about transparency, valuation opacity, high fee structures, and liquidity constraints. These features are acceptable for sophisticated institutional investors but may be inappropriate for ordinary workers whose retirement security depends on being able to access and understand their savings.

The article suggests that, while some regulatory signals have been cautiously supportive of “limited” private exposure in defined contribution plans, there is no consensus on how much is safe, or how to protect participants from mis-selling and misaligned incentives.

Systemic Risk and Potential for a 401(k) Crisis

A key concern is that if private investments become a significant component of 401(k)s, downturns in private markets might not show up quickly because of infrequent valuation marks, masking real losses until they become severe.

High leverage often used in private equity deals could amplify losses in a stressed environment, heightening the risk that workers’ retirement balances might fall sharply just when they need them most.

The article raises the possibility that a synchronized correction in both public and private markets, combined with liquidity demands from retirees, could force funds into fire sales, worsening market stress.

It notes that any such crisis would be politically explosive, given the centrality of 401(k)s to US retirement policy and the perception that Wall Street had been allowed to gamble with workers’ nest eggs.

OpenAI, Data Centres, and the $100bn Borrowing Wave

The second major thread is the vast borrowing spree tied to AI infrastructure, particularly data centres required to train and deploy models from companies such as OpenAI.

OpenAI’s partners and backers—major technology companies and infrastructure investors—are described as approaching $100bn in aggregate borrowing for data centre buildout and related hardware.

This capital is often structured through private credit, project finance, and other non-bank channels, again highlighting the growing importance of private capital markets in shaping the real economy.

The article implies that, while such investment may be justified by expectations of explosive AI-driven productivity gains, it also concentrates risk: if AI revenues disappoint, heavily leveraged data-centre assets could become financial stress points.

Implications for Savers and Markets

The piece links the two developments—private capital in 401(k)s and leveraged AI infrastructure—as manifestations of an economic cycle where abundant private money chases high-growth narratives, sometimes with limited transparency.

For retirement savers, the implication is that their portfolios may be increasingly exposed indirectly to complex, highly leveraged bets on long-duration technologies such as AI and large-scale infrastructure.

The article suggests policymakers and regulators will need to balance innovation and capital formation against the imperative to protect non-professional investors, especially where tax-advantaged retirement savings are involved.

Ultimately, it warns that if oversight does not keep pace with the integration of private capital into retail-facing products, the next financial shock could emerge not only from public markets or banks, but from the intersection of opaque private assets and everyday retirement accounts.

Sven Beckert on How Capitalism Made the Modern World

Yaschamounk • November 29, 2025

Essay•Economy•Capitalism•IndustrialRevolution•GlobalHistory

Capitalism as a Historical, Not Natural, Order

The conversation presents capitalism as a historically specific, contingent way of organizing economic life rather than a timeless or “natural” order. Sven Beckert argues that we misunderstand capitalism when we treat it as an abstract system that can be defined purely by economic models. Instead, “really existing capitalism” must be grasped historically, across centuries and geographies, as a process that has repeatedly changed its form. The key move is to “denaturalize” capitalism: to see it as a revolutionary departure from most of human history, when people lived in subsistence economies, under feudal obligations, or under religious authorities who extracted surplus without reinvesting it for further accumulation. Once capitalism is recognized as a human-made order, it becomes possible to see that it could have been otherwise—and can still be reshaped.

Three Core Misconceptions About Capitalism

Beckert identifies three widespread misconceptions:

Pure abstraction: Many assume capitalism can be adequately understood by timeless economic laws. Beckert insists this misses the way capitalism has transformed over 500–1,000 years, requiring a historical lens.

Eurocentric narrative: Standard histories center Europe and the United States, treating the rest of the world as a lagging “future Europe.” Beckert instead advances a global history in which West Africa, India, China, and the Middle East are integral to capitalism’s development.

Urban–industrial bias: Capitalism is often told as a story of factories, cities, steel, cars, and railroads. Beckert stresses that much of capitalism’s history unfolds in agriculture and in the countryside, where most people lived until very recently, especially on plantations and in rural manufacturing systems controlled by merchants.

From “Islands of Proto‑Capitalism” to a Global Capitalist System

Beckert traces capitalism’s origins to merchant communities that applied a capitalist logic—investing capital in long-distance trade to generate more capital. These merchants existed for centuries in places like the port of Aden, West Africa, India, and China. They were “capitalists without capitalism”: modern in behavior but marginal to broader economic life. The crucial transition came when these scattered islands of capital, especially in Europe, forged coalitions with emerging states. In the 15th and 16th centuries, European merchants and states jointly sought routes around powerful Middle Eastern merchant networks to reach the wealth of India and China directly, while monarchs sought revenue for constant wars. This alliance drove expansion into the Atlantic, African islands, and eventually the Americas, where new “islands of capital” like Cabo Verde, Potosí, and Barbados were built as plantation and extraction economies.

What is world‑historically new, Beckert argues, is that merchants did not just trade existing goods; they came to dominate production itself. They organized sugar, silver, cotton, and other commodities at scale, turning entire societies into mechanisms for capital accumulation. This shift—from merchants only arbitraging prices to merchants controlling production—marks the real takeoff of the “capitalist revolution.”

Capitalism Before and Beyond the Industrial Revolution

Beckert challenges the view that capitalism begins with the Industrial Revolution. For centuries before mechanization, most people still lived in subsistence or feudal arrangements, but pockets of economic life followed capitalist logic:

Massive plantation sectors in the Americas produced sugar, coffee, rice, indigo, and later cotton for European markets.

Rural households in Europe and North America produced textiles and other goods under merchant control, selling into long-distance markets.

This period saw less productivity growth and technological innovation than we associate with capitalism today. Instead, it involved large‑scale geographic redistribution of wealth—from enslaved Africans and Indigenous peoples to European merchants—more than overall global enrichment.

The Industrial Revolution in late 18th- and early 19th‑century Britain was, in Beckert’s terms, the most important “offspring” of capitalism, not its origin. It depended on:

Pre‑existing global markets for cotton textiles, built through trade with India.

An effectively limitless supply of raw cotton grown by enslaved Africans in the Americas, freeing British agriculture from supplying that input.

Imperial and commercial power that allowed Britain to dominate global markets, including selling machine‑made cotton textiles back into India by the mid‑19th century.

The real core of the Industrial Revolution was that productivity‑enhancing innovation became permanent and generalized. What began in Lancashire cotton mills spread to coal, iron, steel, railroads, and later chemicals and electrical machinery, and then geographically to Belgium, France, Prussia, the United States, Egypt, Mexico, and beyond. “Permanent revolution” in technology and output became a structural condition.

Expansion of Capitalist Logic and the Changing Role of Finance

Capitalism, Beckert emphasizes, expands along three axes:

Geography: from small regions in Britain to the entire globe.

Sectors: from textiles to virtually every major industry.

Life realms: from production and trade into intimate spheres like dating, now organized around subscription-based apps and monetized platforms.

The logic of capital investment for profit penetrates ever more domains, shaping behavior and institutions.

Finance plays a shifting but central role. In early capitalism, merchant and finance capital—banks, trading companies like the East India Company—were the primary engines, since large pools of capital were needed for long-distance trade. The Industrial Revolution introduced a period in which industrial capitalists could accumulate fortunes from production itself, often starting with modest means; starting a cotton mill did not demand massive initial capital, and reinvested profits could fuel growth, as in Henry Ford’s self-financed expansion.

Since the 1970s, the pendulum has swung back toward finance and merchant capital. Global brands in sectors like textiles and shoes rarely produce goods themselves. Instead, they control design, capital, and markets, while hundreds of thousands of dispersed manufacturers compete for contracts. Power tilts toward finance-rich coordinators of production, echoing early merchant dominance more than the classic “Fordist” industrial era.

Late‑Stage Capitalism, Limits, and Human Agency

Beckert is skeptical of the term “late‑stage capitalism.” Predictions of capitalism’s imminent collapse have circulated since the mid‑19th century and repeatedly been falsified, even as capitalism has radically reshaped itself. The basic logic—owners of capital investing to generate more capital—has persisted through wildly different forms: slave plantations, Victorian factories, mid‑20th‑century welfare states, and contemporary finance‑driven globalization. Declaring a “late” phase assumes a vantage point we do not possess.

He does, however, identify a potential structural limit: capitalism’s dependence on “free gifts of nature”—land, fossil fuels, unpaid care work, ecological sinks. Environmental constraints and climate change may impose real boundaries on continued expansion in its current form.

Crucially, Beckert insists that capitalism’s historicity implies agency. Capitalism is not a “social construct” in the trivial sense of unreality; it is brutally real and powerful. But because it was made by human actions—merchants in Aden, planters in Barbados, enslaved rebels in Saint‑Domingue, industrial workers demanding welfare states—it can be contested and reconfigured. Even actors with little formal power have reshaped the system: the Haitian Revolution helped destroy slavery, and labor movements helped build welfare states. While no single politician or society can redesign capitalism at will—constraints like international competition matter—recognizing capitalism as contingent opens intellectual and political space. There is not one inevitable capitalism, but many possible capitalisms, and future configurations will depend on collective choices as much as on impersonal “laws.”

9-9-6

Benn • November 28, 2025

Essay•AI•AIBubble•WorkCulture•Startups

“The future is already here,” the lede goes, “it’s just not evenly distributed.”

Similarly: The AI bubble will burst—it’s just that the disappointment won’t be evenly distributed.

First, I suppose—is AI a bubble? Some people are worried. Ben Thompson says yes, obviously: “How else to describe a single company—OpenAI—making $1.4 trillion worth of deals (and counting!) with an extremely impressive but commensurately tiny $13 billion of reported revenue?” Others are more optimistic: “While [Byron Deeter, a partner at Bessemer Venture Partners,] acknowledges that valuations are high today, he sees them as largely justified by AI firms’ underlying fundamentals and revenue potential.”

Goldman Sachs ran the numbers: AI companies are probably overvalued. According to some “simple arithmetic,” the valuation of AI-related companies is “approaching the upper limits of plausible economy-wide benefits.” They estimate the discounted present value of all future AI revenue to be between $5 trillion and $19 trillion, and that the “value of companies directly involved in or adjacent to the AI boom has risen by over $19 trillion.” So: The stock market might be priced exactly as it should be. Or it could be overvalued by $14 trillion.
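The "simple arithmetic" behind that range can be sketched in a few lines of Python. This is only an illustration built from the figures quoted above; the function name and the decision to clamp the gap at zero are assumptions, not anything taken from the Goldman Sachs analysis.

```python
# Figures quoted in the paragraph above, in trillions of US dollars.
PV_LOW = 5.0       # low estimate of discounted future AI revenue
PV_HIGH = 19.0     # high estimate of discounted future AI revenue
VALUE_RISE = 19.0  # rise in value of AI-related companies ("over $19 trillion")


def implied_overvaluation(value_rise: float, present_value: float) -> float:
    """Gap between the value the market has added and the estimated revenue value.

    Hypothetical helper: the gap is clamped at zero because the quoted
    comparison only discusses fair pricing or overvaluation.
    """
    return max(0.0, value_rise - present_value)


best_case = implied_overvaluation(VALUE_RISE, PV_HIGH)  # priced as it should be
worst_case = implied_overvaluation(VALUE_RISE, PV_LOW)  # the $14 trillion case

print(f"Implied overvaluation: ${best_case:.0f}T to ${worst_case:.0f}T")
```

Because the value rise is quoted as "over" $19 trillion, both ends of the range are best read as lower bounds.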

Either way, though—these are aggregate numbers; this is how much money every future AI company might make, compared to how much every existing AI company is worth. Even if the market is in balance, there are surely individual imbalances. Sequoia’s Brian Halligan: “There’s more sizzle than steak about some gen-AI startups.” Or: “OpenAI needs to raise at least $207 billion by 2030 so that it can continue to lose money, HSBC estimates.” Or: “Even if the technology comes through, not everybody can win here. It’s a crowded field. There will be winners and losers.” That is the nature of a gold rush, though, even when there is a lot of gold in the ground. Some people get rich, and some people just get dirty.

No matter, says Marc Andreessen; this gold will save the world. And the people digging for it are heroes:

Today, growing legions of engineers – many of whom are young and may have had grandparents or even great-grandparents involved in the creation of the ideas behind AI – are working to make AI a reality, against a wall of fear-mongering and doomerism that is attempting to paint them as reckless villains. I do not believe they are reckless or villains. They are heroes, every one. My firm and I are thrilled to back as many of them as we can, and we will stand alongside them and their work 100%.

I do not know if the tech employees are heroes, but they are working hard. Some, monstrously so:

recently i started telling candidates right in the first interview that greptile offers no work-life-balance, typical workdays start at 9am and end at 11pm, often later, and we work saturdays, sometimes also sundays. i emphasize the environment is high stress, and there is no tolerance for poor work.

This is the new vibe in Silicon Valley: Grinding, loudly. Hard tech, and extremely hard core. Because that’s what’s needed to meet the “deranged pace” of this historical moment. Venture capitalist Harry Stebbings: “7 days a week is the required velocity to win right now.” Cognition’s Scott Wu: “We truly believe the level of intensity this moment demands from us is unprecedented.” From others—this isn’t mere capitalism; this is a crucible: “‘This work culture is not unprecedented when you consider the stringent work cultures of the Manhattan Project and NASA’s missions,’ said [Cyril Gorlla, cofounder and CEO of an AI startup]. ‘We’re solving problems of a similar if not more important magnitude.’”

So far, so good, at least for the capitalists: According to CNBC, there are now 498 private AI companies worth more than $1 billion. A hundred of them are less than three years old. There are 1,300 startups worth more than $100 million. And these companies have created dozens of new billionaires.

In recent years, this has become the math that punches Silicon Valley’s clock: 996—work from 9 am to 9 pm, six days a week. Seventy-two hours a week; 3,600 hours a year; 10,000 hours in three years. But if that adds up to a billion-dollar payday? Or even a pedestrian few million? Just hang on. “‘I tell employees that this is temporary, that this is the beginning of an exponential curve,’ said Gorlla. ‘They believe that this is going to grow 10x, 50x, maybe even 100x.’” Another founder told Jasmine Sun their plan—get in, get rich, get out:

I asked a founder I know if he thinks that AI is a bubble. “Yes, and it’s just a question of timelines,” he said. Six months is median, a year for the naive. Most AI startups are all tweets and no product—optimizing only for the next demo video. The frontier labs will survive but it’ll be carnage for the rest. And then what will his founder friends do? I ask. He shrugs. “Everyone’s just trying to get their money and get out.”
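The 996 arithmetic quoted earlier in this essay (72 hours a week, 3,600 hours a year, roughly 10,000 hours in three years) can be checked with a short sketch. The 50 working weeks per year is an assumption chosen because it reproduces the 3,600 figure; the essay does not state it.

```python
HOURS_PER_DAY = 12   # 9 am to 9 pm
DAYS_PER_WEEK = 6    # the "6" in 996
WEEKS_PER_YEAR = 50  # assumption: not stated in the essay

hours_per_week = HOURS_PER_DAY * DAYS_PER_WEEK
hours_per_year = hours_per_week * WEEKS_PER_YEAR
hours_in_three_years = hours_per_year * 3

print(f"{hours_per_week} hours a week")
print(f"{hours_per_year:,} hours a year")
print(f"{hours_in_three_years:,} hours in three years")
```

Under that assumption the three-year total comes to 10,800 hours, which the essay rounds down to 10,000.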

The Joy of Becoming Worthless…except to each other

Rushkoff • Douglas Rushkoff • November 29, 2025

Essay•AI•AI Employment•Disaster Capitalism•Post Capitalism

My last piece, The Intentional Collapse, seems to have agitated a few people. I know it came off a bit dark. I talked about how the uber-wealthy believe the world as we know it is ending and that there won’t be enough essential resources to go around, so they need to take control of as much money and stuff and land as possible in order to position themselves for the end of days.

The way they do that is with an induced form of disaster capitalism, where they intentionally crash the economy in order to have some control over what remains. So the function of tariffs, for example, is to bankrupt businesses or even public services in order to privatize and then control them. Stall imports, put the ports out of business, and then let a sovereign wealth fund purchase the ports. Or as is happening right now: use tariffs to bankrupt soybean farmers, who have to foreclose on their farms so that private equity firms can purchase the farmland as a distressed asset, then hire the farmers who used to own and work that land as sharecroppers.

What I explained was that the kleptocratic elite, in collaboration with the current White House administration, are engaged in a controlled demolition of this civilization because they realize the pyramid is collapsing and they don’t have faith that there will be enough left to feed and house everyone. The best they can do is earn a ton of money, buy a lot of land, control an army, and get people accustomed to seeing that army deployed. That’s what we’re watching on TV and on our city streets, and why so many Americans voted against the current administration. It was a resounding “what the fuck?”

But I briefly mentioned something about AI and employment that I want to get into now. See, it’s not coincidence that AI is emerging at this same moment in our civilization’s history. As Lewis Mumford observed, new technologies are often less the cause of societal changes than they are the result. Culture is like a standing wave, creating a vacuum or readiness for a new medium or technology. If we really are at the end of capitalism—the end of this eight or nine-hundred year process of abstraction, exploitation, and colonialism—then we would also, necessarily, be at the end of the era of employment. And I will get to why I think that can ultimately be a good thing, but let’s go through the scenario that’s running through everyone’s heads right now, and then find our ways there.

AI is coming for our jobs. Not the super-creative ones, or the high-touch human ones, but the ones that maintain administrative control over everything. The majority of jobs. All the people in the mortgage departments, the insurance companies, the spreadsheet people, the PowerPoint people. Doomers say it’s 90% of jobs, but let’s even say it’s just half of office jobs taken by AIs and blue-collar jobs taken by robots.

The problem with that, from a business perspective, is if you have no employees earning money out there in the world, then who will be your consumers? Even Henry Ford, the racist antisemite, understood that workers—even his assembly line employees—needed to be able to earn enough money to buy a Ford car. But how are AI billionaires going to continue to make money if there are no gainfully employed people capable of buying AI services from them or at least buying products from the companies that do purchase AI services?

Venture

Stage Definition Collapse: Why “Seed” Now Means $2 Billion

Fourweekmba • Gennaro Cuofano • November 27, 2025

Venture

In the traditional venture canon, stage definitions were tied to company progression:

Seed: $1–5M to validate an idea

Series A: $10–20M to reach product-market fit

Series B: $30–50M to scale

Series C+: >$100M for expansion

But 2025 AI reality annihilates this structure:

Seed: $100M–$2B

Series A: $100M–$350M

Series B: $250M–$2B

Series C+: $300M–$40B

A single comparison shows the absurdity:

Thinking Machines Lab: $2B “seed”

Traditional seed: $2M

Same label.

1,000× difference in check size.

1,200× difference in valuation.

Stage labels have detached from reality.
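The check-size comparison is simple arithmetic, and a short sketch makes it easy to rerun against the other stage ranges listed above. The figures are the ones quoted in this piece; treating the traditional "Series C+: >$100M" as a $100M floor is an assumption made here for illustration, and the 1,200× valuation figure is not recomputed because the underlying valuations are not given.

```python
# Round sizes in millions of US dollars, taken from the lists above.
TRADITIONAL_SEED_M = 2            # "Traditional seed: $2M"
THINKING_MACHINES_SEED_M = 2_000  # "Thinking Machines Lab: $2B seed"

# (traditional floor, 2025 AI floor) per stage, in $M.
# Assumption: the traditional "Series C+: >$100M" is treated as a $100M floor.
STAGE_FLOORS_M = {
    "Seed": (1, 100),
    "Series A": (10, 100),
    "Series B": (30, 250),
    "Series C+": (100, 300),
}


def size_multiple(new_m: float, old_m: float) -> float:
    """Return how many times larger new_m is than old_m."""
    return new_m / old_m


seed_multiple = size_multiple(THINKING_MACHINES_SEED_M, TRADITIONAL_SEED_M)
floor_multiples = {
    stage: size_multiple(new, old) for stage, (old, new) in STAGE_FLOORS_M.items()
}

print(f"Seed check-size multiple: {seed_multiple:,.0f}x")
for stage, multiple in floor_multiples.items():
    print(f"{stage}: floor is {multiple:.1f}x the traditional floor")
```

Under these assumptions the floor multiple shrinks from 100x at seed to 3x at Series C+, which suggests the distortion is largest at the earliest label.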

As explained in The State of AI VC, AI capital formation has compressed into an industrial model, not a software model. Stage collapses because capital intensity has replaced company maturity as the gating factor.

Query 1: Why Has the Stage System Collapsed?

Because stage was built for software economics, and AI is governed by infrastructure economics.

Software startup progression was linear and low-cost:

hire a few engineers

find traction

iterate

then scale

AI companies face a non-linear cost curve:

GPU acquisition

model training cycles

inference fleet build-outs

data infrastructure

distributed systems engineering

cloud contracts

These are not milestones — they are fixed upfront requirements.

A company cannot reach PMF without:

multi-million-dollar clusters

complex ML tooling

inference reliability

regulatory security measures

Therefore, a “seed” is no longer an idea.

It is an industrial commitment.

As The State of AI VC notes, AI’s physical infrastructure requirements force funding to be “front-loaded” rather than sequential.

Stage dies because AI cannot scale on software-era cadence….

The Structural Transformation: What the Six Patterns of AI VC Funding Really Mean

Four week mba • Gennaro Cuofano • November 27, 2025

Venture

Every pattern in 2025 AI venture capital—barbell distribution, stage collapse, velocity acceleration, investor concentration, sector rotation, and geographic clustering—reduces to a single unifying force:

“Compression — of stages, timelines, capital concentration, and traditional venture mechanics.”

What looks like a funding boom is actually a mechanical restructuring of how technology capital formation works. The system is not adding “more capital.” It is reorganizing around new bottlenecks, new competitive pressures, and new liquidity requirements.

The six structural patterns don’t merely describe 2025.

They forecast the next decade.

As detailed in The State of AI VC (https://businessengineer.ai/p/the-state-of-ai-vc):

“The traditional playbooks do not work anymore—not for founders, not for GPs, and not for LPs.”

Let’s unpack what compression really means for primary and secondary markets.

The middle market has collapsed.

The $500–900M “growth stage” now represents only 13% of all AI deals.

Capital clusters at two extremes:

Entry tickets ($100–250M)

Category winners ($1B+)

This bifurcation reflects a structural truth:

“AI categories now require either massive scale (labs, infra, compute) or clear defensibility (verticals + picks/shovels).”

Nothing in between is fundable.

This compression forces founders into two lanes:

Become a category winner (market dominance + capital intensity), or

Sell the infrastructure picks and shovels.

There is no middle lane anymore.

Traditional venture labels are now meaningless.

A “Seed” round in 2025 can be:

$100M+

1,000x larger than another “Seed” round

larger than historical Series C rounds

Stage names persist only because legal documents require them—not because they signal anything about risk or maturity.

Capital intensity replaced stage as the organizing principle:

$100–300M → Application Layer (legal, healthcare, enterprise AI)

$250M–1B → Infrastructure Layer (chips, inference, developer tools)

$1B–40B → Foundation Models (labs)

This requires new due diligence, new comp sets, and new valuation heuristics.

As The State of AI VC notes:

“Stage heuristics died. Competitive intensity is now the only filter that matters.”

Investor Concentration Risk: How AI Venture Became a Single Trade

Fourweekmba • Gennaro Cuofano • November 27, 2025

Venture

The defining structural risk in the 2025 AI venture cycle is not valuations, velocity, or stage compression — it is investor concentration. Across the top $100M+ rounds, the same five to six investors dominate: a16z, Kleiner Perkins, Lightspeed, Sequoia, Nvidia, GV/Fidelity.

But the problem is not simply that these names appear frequently.

The problem is correlation.

As documented in The State of AI VC (https://businessengineer.ai/p/the-state-of-ai-vc), these firms:

co-invest with each other repeatedly,

cluster into the same high-momentum rounds,

and create cross-fund exposure for LPs even when portfolios appear diversified on paper.

LPs think they are allocating across multiple GPs, geographies, and strategies.

In reality, they are allocating into the same dozen AI companies, with exposure multiplying beneath the surface.

This is the hidden correlation problem — the illusion of diversification masking a highly unified, synchronized capital stack.

The data pattern is stark:

a16z: 12 mega-rounds

Kleiner Perkins: 9 mega-rounds

Lightspeed: 8 mega-rounds

Nvidia: 7 mega-rounds (strategic)

Sequoia: 5

GV, Fidelity: 4 each

The critical three (a16z, Kleiner, Lightspeed) appear together in 6 deals.

This is not coincidence.

It is the structural backbone of the AI funding network.

When these firms move, they move together — reinforcing each other’s signals, validating the same companies, and amplifying valuation momentum.

This is cluster-led conviction, not decentralized discovery.

As explained in The State of AI VC (https://businessengineer.ai/p/the-state-of-ai-vc):

“Investor concentration has created a de facto AI index — but without the risk controls, liquidity, or hedging.”

The cluster behaves like one meta-fund controlling the majority of capital entering late-stage AI.

The LP problem is subtle but severe.

Consider a typical institutional LP allocating to:

Fund A: a16z

Fund B: Kleiner

Fund C: Lightspeed

On paper, this is diversification.

In practice, it produces:

3× exposure to Anthropic

2× exposure to Harvey, Abridge, Glean

Highly correlated vintage risk

Synchronized valuation cycles

The LP believes they are diversified across three top-tier managers.

But the cross-ownership creates a synthetic index with excessive concentration risk in:

Foundation labs

AI-native applications

Infrastructure picks

This is not a portfolio — it is a stacked bet.
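A look-through count is one way an LP might surface this overlap. The sketch below is illustrative only: the fund-to-company assignments are hypothetical, chosen so the totals match the 3× and 2× exposures quoted above, and they are not a statement of any firm's actual portfolio.

```python
from collections import Counter

# HYPOTHETICAL holdings, for illustration only. The assignments are chosen
# so that the totals match the exposures quoted in the text; they do not
# describe any firm's real portfolio.
fund_holdings = {
    "Fund A": {"Anthropic", "Harvey", "Abridge"},
    "Fund B": {"Anthropic", "Harvey", "Glean"},
    "Fund C": {"Anthropic", "Abridge", "Glean"},
}


def look_through_counts(holdings: dict[str, set[str]]) -> Counter:
    """Count how many funds in the LP's portfolio hold each company."""
    counts: Counter = Counter()
    for companies in holdings.values():
        counts.update(companies)
    return counts


counts = look_through_counts(fund_holdings)
# Companies held by more than one fund are the hidden concentration.
overlapping = {company: n for company, n in counts.items() if n > 1}

for company, n in sorted(overlapping.items(), key=lambda item: -item[1]):
    print(f"{company}: held by {n} of {len(fund_holdings)} funds")
```

A fuller version would weight each position by fund commitment and ownership, but even a simple count shows when three manager names resolve to the same handful of companies.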

Compression as Transformation in AI VC

Four week mba • Gennaro Cuofano • November 27, 2025

Venture

2025 AI venture capital looks chaotic—barbell distributions, mega-round velocity, stage collapse, investor concentration, sector rotation, geographic clustering. But these are not six independent anomalies. They are six manifestations of one underlying structural force:

Compression — of stages, timelines, capital, and investor bases.

What appears as “more capital deployed” (the $75B+ deployed across AI rounds) is not a bigger version of the old venture environment. It is a fundamental restructuring of how technology capital formation works, as documented in The State of AI VC (https://businessengineer.ai/p/the-state-of-ai-vc).

Compression is not a symptom.

It is the transformation.

Let’s break down the four pillars of compression and then map the strategic consequences.

“Seed,” “Series A,” “Series B,” “Series C+”—the entire stage taxonomy has collapsed.

A Seed round can be:

$100M

$1B

$2B (Thinking Machines Lab)

While another Seed is still $2M.

You cannot infer risk, maturity, product readiness, or team strength from stage labels. The old heuristics (pre-product → product-market fit → scale → growth) have been erased by capital intensity.

The new organizing principle:

Category competitiveness determines round size. Not maturity.

This means:

Investors who cling to stage thinking misunderstand risk.

LPs relying on stage diversification are exposed to hidden concentration.

Founders must position around competitive pressure—not chronological maturity.

The stage system is dead.

Capital intensity killed it.

Traditionally, companies raised rounds every 18–24 months.

In 2025, leading AI companies raised rounds in:

5.5 months on average

with some raising every 4 months

representing 75% compression in fundraising cycles
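The 75% figure can be checked against the numbers above. The piece does not say which baseline it uses, so this sketch assumes the 18 to 24 month traditional range and reports the compression at both ends and at the midpoint.

```python
def cycle_compression(old_months: float, new_months: float) -> float:
    """Fractional reduction in time between rounds (0.75 means 75% shorter)."""
    return 1 - new_months / old_months


AVERAGE_2025_MONTHS = 5.5          # "5.5 months on average"
TRADITIONAL_LOW_MONTHS = 18        # lower end of "18-24 months"
TRADITIONAL_HIGH_MONTHS = 24       # upper end of "18-24 months"
# Assumption: the midpoint is used as a representative traditional cadence.
TRADITIONAL_MID_MONTHS = (TRADITIONAL_LOW_MONTHS + TRADITIONAL_HIGH_MONTHS) / 2

low = cycle_compression(TRADITIONAL_LOW_MONTHS, AVERAGE_2025_MONTHS)
mid = cycle_compression(TRADITIONAL_MID_MONTHS, AVERAGE_2025_MONTHS)
high = cycle_compression(TRADITIONAL_HIGH_MONTHS, AVERAGE_2025_MONTHS)

print(f"Compression vs 18 months: {low:.1%}")
print(f"Compression vs 21 months: {mid:.1%}")
print(f"Compression vs 24 months: {high:.1%}")
```

Depending on the baseline, the result lands between roughly 69% and 77%, so the quoted 75% is consistent with the upper part of the traditional range.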

This shift is not about FOMO. It is mechanical:

AI companies must buy compute capacity before revenue materializes.

Competitors race to secure H100 clusters, HBM supply, and inference infra.

Supplier bottlenecks distort timing. When chips are available, companies must buy immediately—regardless of runway.

The velocity compression drives:

continuous fundraising

valuation stacking

accelerated employee wealth creation

liquidity pressure on GPs and LPs

As The State of AI VC notes:

“Funding cycles no longer map to product cycles. They map to capital intensity and competitive pressure.”

This is industrial capital formation, not venture capital formation.

Deutsche Börse launches €5.3bn bid for private equity-backed Allfunds

Ft • November 27, 2025

Venture

Deutsche Börse has launched a €5.3bn bid to acquire Allfunds, the private equity-backed fund distribution platform listed in Amsterdam, in a move that would mark the German exchange group’s biggest deal in years and further its expansion into investment fund services.

The offer values Allfunds at €8.80 a share, representing a premium to its recent trading price and split between €4.30 in cash and €4.30 in new Deutsche Börse shares, alongside a permitted dividend of €0.20 per Allfunds share for the 2025 financial year. The deal structure would see Allfunds investors become shareholders in the enlarged Deutsche Börse group.

Deutsche Börse said it is in exclusive discussions with Allfunds’ board over a possible acquisition of all issued and to-be-issued share capital, on the basis of a non-binding proposal. The announcement of any binding offer remains subject to customary preconditions, including due diligence, finalisation of transaction documentation and approval by the boards of both companies.

Allfunds, which connects asset managers with distributors and oversees more than €1.7tn of client assets, is backed by private equity firm Hellman & Friedman and Singapore’s GIC. The two largest shareholders have been exploring options for their stakes after taking the business public in 2021, following a 2017 deal in which Hellman & Friedman bought control from Spain’s Banco Santander and Italy’s Intesa Sanpaolo.

The proposed tie-up is aimed at reducing the fragmentation of Europe’s cross-border fund distribution market and building a pan-European platform with greater scale. Deutsche Börse said combining Allfunds with its existing fund services arm would create a more integrated offering to asset managers and distributors and enhance its position in post-trade and data services.

If completed, the transaction would add to Deutsche Börse’s recent series of acquisitions, including the €3.9bn purchase of Danish investment management software provider SimCorp in 2023, as it seeks to diversify beyond traditional trading and clearing into recurring, technology-driven revenue streams.

Data: Zombie VC Firms Walk Among Us

Upstarts media • Alex Konrad • December 4, 2025

Venture

Venture capital is still a relationships game. For startup founders, finding the right person to back your business is still the most important part of fundraising (besides the cash).

But startups can save themselves a lot of time, and potential headache, by turning the tables a bit. The key question: Is this VC firm a walking zombie?

The signs might not be obvious yet. Partners are still active at firms until they’re not, sometimes writing checks weeks before announcing a transition to part-time or ‘venture partner’. And with a few blockbuster exceptions, VC firms don’t typically blow up. Instead, they slowly, often quietly, peter out.

But there will be signs. New data shows that when a firm raised its fund — and how far along it is in the deployment cycle — could go a long way to determining whether you’re wasting your time.

Think of it as a loading progress bar, showing 60% or 80%. How far into a fund’s life cycle is that VC firm? How fast have they recently been writing checks?

And if the answer is that you’re looking to become one of the last investments for a fund raised three or four years ago, brace yourself.

New data from Carta (via its first Fund Economics Report) shows that funds raised in 2021 and 2022 have noticeably slowed down their investment pace, after running hot initially.

Funds closed in 2021 deployed faster in their first year, putting 33% of their money to work versus a more typical figure of under 20%, then applied the brakes: the median 2021 fund has still only deployed 88% of its capital, lower than any vintage of the previous four years.

It’s a similar story for funds raised in 2022. They’re currently 67% deployed, and at the three-year mark had deployed more slowly than funds raised in any of the five previous years.

What that means, according to Peter Walker, head of insights at Carta: firms still investing out of 2021 and 2022 funds — the exuberant zero interest rate, or ZIRP, era — are becoming much pickier (or skittish) as they reach the end of their fund lifecycles now.

“They’re approaching this fundraising market and finding it much chillier than they’d hoped,” Walker says. “They’re worried this might be the last time they get to invest.”

Founders talking to stalling firms face the following hurdles:

Added conversations and data requests

More intense due diligence

Slower decision processes

That’s not the situation with many newer funds: while 2023 vintages are tracking closer to 2022, funds raised in 2024 are tracking to deploy faster than historical norms, Carta found. AI-focused funds that have invested widely and quickly, and the blue-chip bigger firms with long track records are also notable exceptions.

One caveat: firms might have other, very good reasons to have slowed down their check-writing. Perhaps they don’t want to play a valuations game with AI-enabled software, or macro factors are creating concerns in a specific sector of focus.

Another: many startups don’t have the luxury to turn away firms based on yellow flags like this. They need capital, and they have to take what they can get.

All things being equal, however, it makes sense for founders to add a couple of diligence questions back to their own VC calls:

What vintage fund are you deploying out of, and how far along is it?

What’s been your pace of deployment in the past year? Has it been consistent with previous years? (And if not, why?)

Do you anticipate raising another fund soon?

You won’t be able to spot all the zombies this way, but it can provide some peace of mind. Nobody wants to work with a fund that won’t answer the phone in a couple of years.

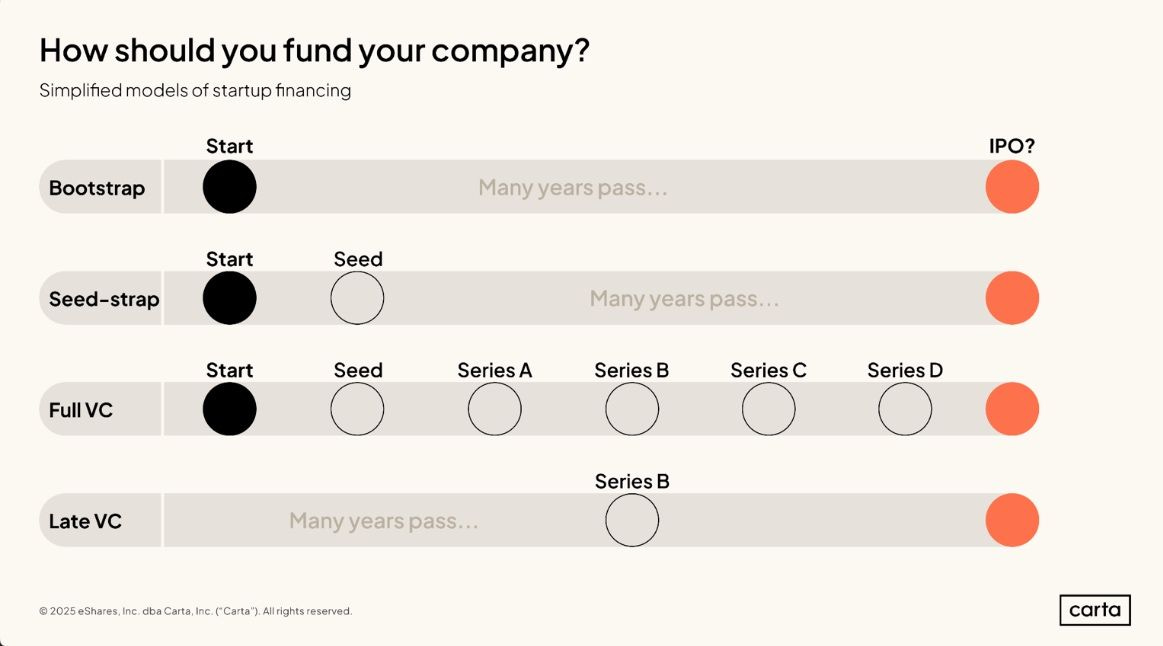

Why should you raise VC? Well, many times you shouldn’t.

LinkedIn • Peter Walker • November 30, 2025

LinkedIn•Venture

Source: LinkedIn | Peter Walker

You’ve built a bootstrapped company. Clear line of sight to profitability (actually profitable recently). Why should you raise VC?

Well, many times you shouldn’t. That’s a fair take. Who needs external investors when you have full control and full optionality?

But here’s why other founders do choose to engage with venture even though they don’t “need” it.

1) Cash for Growth

Could you accomplish a year’s worth of growth in 6 months if you had more cash to put to work?

If the answer is yes (and it usually is) perhaps trading equity for capital is useful to boost growth. Growth is always the biggest input into company valuation and ultimate sale price, should that path ever be attractive.

(Btw cash can also be incredibly useful to have on hand in case of unpredictable emergencies. Just ask startups who were running too lean in March 2020).

2) Brand for Hiring

Great talent can work at many startups. Being backed by a well-known fund can improve your standing in the minds of that next valuable engineer.

Beyond just brand, VCs will often extend themselves by personally recruiting talent to your company.

3) Network for Everything

Need a contact at that major prospect? Your VC might have one. Need an intro to this technical expert? Your VC might have one. Need to talk to a founder who’s been through this tricky situation? You get the idea.

Good VCs bring network leverage to their portcos.

If none of these reasons resonate, cool, avoid VCs and keep building. Many possible games to play and venture just happens to be the loudest 🙏

MSCI launches index combining public and private equities

Ft • December 4, 2025

Venture

Overview of the New Index

MSCI has introduced a new benchmark that combines public and private equity into a single global index framework, responding to the rapid expansion of unlisted assets. The product, known as the MSCI All Country Public + Private Equity index, is designed to give investors an integrated view of overall equity exposure across both listed markets and private equity holdings. It reflects how institutional portfolios have increasingly blended traditional public equities with large allocations to private funds.

Structure and Methodology

The index fuses MSCI’s existing All Country World Investable Market Index (ACWI IMI) with a newly created All Country Private Equity index. (ft.com)

Private equity is set at a 15 per cent strategic weight within the combined benchmark, with the remaining 85 per cent allocated to public equities. (ft.com)

The private equity component tracks about 10,000 private equity funds globally, covering buyout, venture capital and other strategies to approximate the opportunity set. (ft.com)

The index is rebalanced quarterly and calculated daily, allowing investors to monitor performance and risk in near real time despite the illiquid nature of private assets. (ft.com)

This methodology attempts to convert inherently opaque, infrequently valued private fund positions into a systematic, benchmarkable slice of a global equity portfolio.
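The 85/15 split can be illustrated with a single-period weighted return. This is only a sketch: the article gives the strategic weights and the quarterly rebalance, not MSCI's calculation rules, so the simple weighted average and the component returns below are assumptions for illustration.

```python
PUBLIC_WEIGHT = 0.85   # public equities share stated in the article
PRIVATE_WEIGHT = 0.15  # private equity strategic weight stated in the article


def blended_return(
    public_return: float,
    private_return: float,
    public_weight: float = PUBLIC_WEIGHT,
    private_weight: float = PRIVATE_WEIGHT,
) -> float:
    """Weighted average of two component returns over one period.

    Assumes weights are reset to target at the start of the period,
    which is a simplification of a quarterly rebalance.
    """
    if abs(public_weight + private_weight - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return public_weight * public_return + private_weight * private_return


# Hypothetical quarterly returns, not actual index data.
example = blended_return(public_return=0.04, private_return=0.02)
print(f"Blended quarterly return: {example:.2%}")
```

Because the private sleeve is only 15% of the total, even a large gap between public and private returns moves the blended figure modestly, which is part of why critics question how informative a single composite can be.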

Market Context and Rationale

Private equity assets under management have more than doubled since 2018 to around $4.7tn, underlining its growing importance in institutional portfolios. (ft.com)

Large investors such as pension funds, endowments and sovereign wealth funds increasingly treat public and private equity as a single “equity bucket”, creating demand for blended benchmarks.

MSCI has been building capabilities in private markets analytics, notably through its acquisition of Burgiss, while the broader data and benchmarking race in private markets includes moves like BlackRock’s purchase of Preqin. (ft.com)

The new index positions MSCI to capture a bigger role as investors seek standardized ways to measure performance and allocate capital across the full equity spectrum.

Intended Users and Use Cases

The benchmark targets institutional investors and high-net-worth clients that already hold significant private equity alongside listed equities. (ft.com)

Potential applications include:

Setting strategic asset allocation between public and private equity in a unified framework

Measuring total equity performance relative to a single reference index

Risk monitoring and reporting that reflects actual portfolio structure

By consolidating disparate exposures, MSCI aims to simplify conversations between asset owners, managers and consultants about “true” equity risk and return.

Criticisms and Methodological Challenges

Despite its ambition, the index has attracted skepticism:

Some investors question whether a blended index is really useful, given the wide dispersion of private equity returns and the bespoke nature of many programmes. Neuberger Berman’s Maya Bhandari is cited as doubtful that such a benchmark matches how investors actually set objectives. (ft.com)

Valuation practices, lagged pricing and smoothing in private equity raise concerns about how accurately any daily index can reflect real-time conditions.

Different investors target very different mixes of vintages, strategies and geographies in private markets, making a single “market” representation potentially unrepresentative.

These critiques highlight the tension between the desire for standardization and the inherently idiosyncratic character of private assets.

Implications for the Industry

If widely adopted, the index could reinforce the idea that public and private equity should be managed under one risk budget, influencing consultant frameworks and regulatory reporting norms.

It may encourage further product development, such as index-linked solutions or funds aiming to replicate the blended benchmark.

At the same time, ongoing debates about private equity performance, fees and transparency mean that some large investors may prefer bespoke benchmarks or separate public/private metrics rather than a single composite measure. (ft.com)

Overall, MSCI’s new index reflects how deeply private markets have become embedded in mainstream investing, while also exposing unresolved questions about how best to measure and govern these growing allocations.

SpaceX reportedly in talks for secondary sale at $800B valuation, which would make it America’s most valuable private company

Techcrunch • Connie Loizos • December 5, 2025

Venture

SpaceX is reportedly in talks for a secondary sale that would value the company at around $800 billion, according to Bloomberg, which would make it America’s most valuable private company by far.

The eye-popping figure reflects how routine mega-valuations have become in private markets. Just last week, for example, secondary marketplace Forge reported that employees of CoreWeave, the cloud computing company that went public in March, initially valued their shares on the platform at nearly $100 billion, up from $23 billion in a Series C last August.

It was only three months ago, meanwhile, that TechCrunch reported that SpaceX was in talks to sell insider stock via a tender offer at $255 per share, which would value the company at around $250 billion.

At the time, the valuation put SpaceX well ahead of ByteDance, the China-based parent of TikTok that’s currently valued at around $220 billion. But the new valuation — if it comes to pass — will put SpaceX far ahead of every other private tech company.

More than Elon Musk’s fame and proximity to President-elect Donald Trump is driving up the SpaceX share price. The company is reportedly spinning out its Starlink satellite internet business, for which SpaceX sought a $15 billion loan in August.

According to The Wall Street Journal, which broke the story in October, the company is in discussions with banks about the potential IPO for Starlink, which could reportedly achieve a valuation of $100 billion or more on its own. SpaceX COO and president Gwynne Shotwell had mentioned the spinout idea in 2023 to CNBC.

Then there’s the rocket side of the business, which is also going gangbusters. This week, the company launched its Starship rocket for a seventh time; this test flight involved a satellite deployment experiment.

SpaceX has also proven its worth via an earlier flight. In October, Starship performed a 1.2 million-pound lift and executed the first-ever booster catch by its Mechazilla launch tower.

Starship’s heavy-lift capabilities are key to NASA’s Artemis program for returning astronauts to the Moon, and the rocket could potentially support future missions to Mars.

The gap between top quartile and bottom quartile venture funds was over 40%

LinkedIn • Marcelino Pantoja • December 2, 2025

LinkedIn•Venture

Source: LinkedIn | Marcelino Pantoja

In a 2011 lecture, David Swensen pointed out a striking fact. The gap between top quartile and bottom quartile venture funds was over 40 percent.

That number gets framed as proof that venture rewards skill. It is closer to a warning.

A spread that wide means most returns come from a very small group of funds. Those funds see deals that most VCs never see. Access matters more than analysis. The belief is that the best firms stay small because capacity is limited. Once they are full, new capital flows to managers outside that circle.

This is where things break down. As capital pools grow, it gets harder to stay in the top tier. More money does not buy better access. It usually pushes you toward average outcomes. In venture, size works against performance.

For allocators, the implication is uncomfortable but clear. Venture only works at small scale. If you cannot get into the true top funds, you are not picking the next great VC. You are backing someone in a much weaker part of the market. Writing a bigger check does not fix that.

For fund managers, the lesson is just as direct. Scarcity is not marketing. It is what protects access to the few deals that drive returns.

One reason this spread may not last is success itself. Lately the best VCs tend to raise more capital, write bigger checks, and move later in a company’s life. Capacity becomes the constraint. Early-stage exposure falls, ownership shrinks, and the return profile shifts. In the end, the forces that create top-quartile performance also make it hard to sustain.

Startup Funding Continued On A Tear In November As Megarounds Hit 3-Year High

Crunchbase • December 3, 2025

Venture

November was another outsized month for venture funding as investors poured $39.6 billion into startups globally. The funding total was on par with October and up 28% year over year from $31 billion, according to Crunchbase data.

Capital continued to concentrate into the largest companies. A stunning 43% of venture funding last month went to just 14 companies that raised rounds of $500 million or more each. That marked the largest number of such megarounds raised in a single month in the past three years.

The largest round of all went to Jeff Bezos’ Project Prometheus, which is tackling physical intelligence. It raised $6.2 billion in its first funding.

Other billion-dollar rounds last month went to:

AI coding startup Anysphere, maker of Cursor, which raised $2.3 billion in a round led by Accel and Coatue.

AI data center provider Lambda raised $1.5 billion led by TWG Global, and Kalshi, a future event betting platform, raised $1 billion led by Sequoia Capital and CapitalG.

US dominated again

The U.S. raised just over 70% of global venture capital in November, up from 60% in October. China was the next-largest market with $2.4 billion in total funding. The U.K. and Canada were the third- and fourth-largest, respectively, with $1 billion or more raised by startups in each country last month.

AI, hardware and fintech sectors lead

AI-related startups accounted for 53% of global venture funding last month, with over $20 billion invested in the sector.

Hardware was another leading sector with funding going to startups working on data centers, computer vision, robotics and defense technologies, among others. Financial services was the third-largest sector for venture funding in November, with large rounds in crypto, financial operations, compliance and payments.
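The headline percentages above can be turned into dollar amounts with a few lines of arithmetic. All inputs are the figures reported in this piece; the derived dollar totals and the per-company average are back-of-envelope calculations, not Crunchbase data.

```python
# Figures reported above, in billions of US dollars.
TOTAL_B = 39.6          # global venture funding in November
PRIOR_YEAR_B = 31.0     # funding in the same month a year earlier
MEGAROUND_SHARE = 0.43  # share going to rounds of $500M or more
MEGAROUND_COUNT = 14    # number of companies raising those rounds
AI_SHARE = 0.53         # share going to AI-related startups

yoy_growth = TOTAL_B / PRIOR_YEAR_B - 1
megaround_total_b = MEGAROUND_SHARE * TOTAL_B
avg_megaround_b = megaround_total_b / MEGAROUND_COUNT
ai_total_b = AI_SHARE * TOTAL_B

print(f"Year-over-year growth: {yoy_growth:.1%}")
print(f"Capital in megarounds: ${megaround_total_b:.1f}B")
print(f"Average megaround: ${avg_megaround_b:.2f}B")
print(f"AI-related funding: ${ai_total_b:.1f}B")
```

The derived numbers line up with the text: growth rounds to 28%, AI funding comes out above $20 billion, and the 14 megarounds average a little over $1.2 billion each.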

State of European Tech report | Sarah Guemouri & Tom Wehmeier (Atomico)

Youtube • Slush • November 30, 2025

Venture

The video features Sarah Guemouri and Tom Wehmeier from the venture capital firm Atomico discussing the key findings of the annual State of European Tech report. The conversation provides a comprehensive analysis of the current health, challenges, and opportunities within the European technology ecosystem, drawing on extensive data and founder surveys.

A Resilient Ecosystem Facing Headwinds

The report highlights a European tech landscape demonstrating significant resilience despite a global downturn in venture funding. While total capital invested has decreased from peak levels, the baseline remains substantially higher than pre-2020 figures, indicating a matured and more sustainable ecosystem. A critical point of discussion is the stark contrast between the “haves” and “have-nots.” A small cohort of elite companies continues to secure large funding rounds, but a broad swath of the market, particularly early-stage startups, faces a much more challenging environment. The speakers emphasize that the era of “easy money” is over, forcing a necessary refocus on fundamentals like clear business models, path to profitability, and efficient growth.

Key Trends and Structural Shifts

Several important trends are identified. First, there is a notable geographic diversification of capital, with a significant increase in investment from non-traditional sources, including the Middle East and Asia. Second, the report details a shift in sector focus, with Climate Tech and Energy emerging as dominant themes, attracting a larger share of capital than any other vertical, including Software. This reflects both Europe’s regulatory leadership and global urgency around the energy transition. Third, the discussion covers the talent landscape, noting that while large-scale layoffs at major tech firms have occurred, there is a strong underlying demand for technical and AI-specific skills, creating a dynamic and competitive hiring environment for high-growth companies.

The Founder Perspective and Future Outlook

A core component of the report is its survey of European founders, which reveals a nuanced sentiment. Founders express increased confidence in building a globally leading company from Europe compared to previous years, citing the depth of talent and supportive regulatory frameworks. However, this optimism is tempered by significant concerns over access to growth capital, complex regulatory burdens, and the need for more robust public market options for exits. The speakers conclude that the current market correction, while painful, is ultimately healthy for the long-term development of European tech. It is weeding out weaker business models and incentivizing the kind of disciplined, ambitious company-building that can lead to enduring global category leaders.

The overarching implication is that the European tech ecosystem is undergoing a necessary maturation. Success will depend on the continued flow of risk capital, supportive policy, and the ability of founders to navigate a more selective investment climate by demonstrating robust unit economics and addressing large, meaningful problems, particularly in areas like climate and enterprise software.

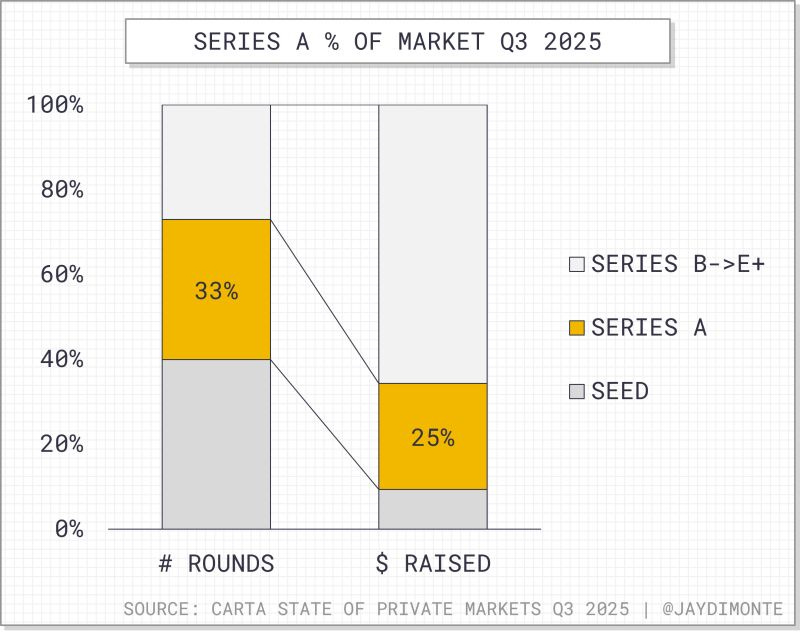

Series A rounds continue to dominate the market… but Series A funds themselves are fading fast.

LinkedIn • Jackie DiMonte • December 4, 2025

LinkedIn•Venture

Source: LinkedIn | Jackie DiMonte

Series A rounds continue to dominate the market… but Series A funds themselves are fading fast.

Some data per Carta (link below):

📈25% of venture capital was invested at the Series A

📈33% of rounds were Series A

And yet, Series A deal counts continue to drop:

Q2: Series A deal count 18%⬇️, value 23%⬇️, while valuations 20%⬆️ YoY

Q3: Series A deal count 10%⬇️, value 8%⬆️, and valuations ~25%⬆️ YoY
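
A quick arithmetic check on the figures above. This assumes "value" means total Series A dollars invested and that count and value are measured against the same year-ago quarter; the post states neither. The helper name `implied_avg_round_change` is introduced here for illustration only.

```python
def implied_avg_round_change(count_change: float, value_change: float) -> float:
    """Year-over-year change in average round size implied by the changes in
    deal count and total deal value (all expressed as fractions)."""
    return (1 + value_change) / (1 + count_change) - 1


# Figures quoted in the post (YoY): Q2 count -18%, value -23%; Q3 count -10%, value +8%.
q2 = implied_avg_round_change(count_change=-0.18, value_change=-0.23)
q3 = implied_avg_round_change(count_change=-0.10, value_change=0.08)

print(f"Q2 implied average round size: {q2:+.1%}")  # -6.1%
print(f"Q3 implied average round size: {q3:+.1%}")  # +20.0%
```

On these assumptions, the average Series A round shrank by about 6% in Q2 and grew by about 20% in Q3, even as deal counts fell in both quarters.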

So, what’s happening?

The Series A fund of a decade ago is disappearing. They either:

1️⃣ Raised big $$$ during ZIRP and graduated to multi-stage, or

2️⃣ Felt the pressure of competition and pricing and moved earlier (without reducing fund size)

As a result:

🔵 Many more $250–500M funds now invest with a core focus on seed

🔵 Larger rounds at seed (bigger funds / multi-stage is less price-sensitive)

🔵 Higher expectations for maturity at every stage

This has also pushed a bifurcation among the funds investing at the A. They now behave like either growth or value investors:

Growth ➡️ high growth, high burn, high valuations backed by multi-stage

Value ➡️ everyone else?

This is why we’re seeing some A rounds happen at $100K annualized revenue and others at $2M+.

There are obviously exceptions, but for a reason. If you’re not competing on brand, you’re competing on price. And multi-stage can do both.

This environment is incredibly beneficial for some founders and funds but makes fundraising difficult and opaque for many others.

Furthermore, the earlier big/multi-stage funds get involved, the earlier the potential for conflicting incentives and associated consequences. Without focused Series A funds, expectations escalate for these companies, many times faster than opportunities present. (I wrote about it here: https://lnkd.in/gpKyABRQ).

Series A is dead, long live Series A!

I’m here to give the small group of you who actually care about decision science, power-law math

LinkedIn • December 4, 2025

LinkedIn•Venture

Source: LinkedIn | Guy Conway

I’m here to give the small group of you who actually care about decision science, power-law math… | Andreas Munk Holm

This post is long, nerdy, and deliberately anti-hype.

I’m not here to scream “AI is eating VC”.

I’m here to give the small group of you who actually care about decision science, power-law math, and the future of European capital allocation a brutally transparent look at what Rule 30 is doing.

Guy Conway and Damian C. announcing rule30 on EUVC just dropped, and it’s the deepest conversation on “data-driven VC” / “Quant VC” we’ve ever had. Long overdue.

The guys claim “the world’s first (and still only) fully systematic, algorithmically-decided pre-seed fund” -- you’ll decide what you think 🤔

Here’s what I took away:

1️⃣ Access is a myth. The real bottleneck is triage at scale

Pre-seed Europe + US = 150–200 investable companies per vintage

Rule 30 ingests 10,000+ signals per month from 15+ raw sources

Humans (even with Harmonic-style tools) collapse under that volume → fall back to pedigree heuristics

The algorithm triages 10,000 → 75 with a winner/loser ratio multiple times higher than top human funds

Result: they see everything and still only need a 3-person team

2️⃣ “Data-driven VC” ≠ quantitative VC

Most “data-driven” funds use data as a crutch for human IC decisions.

Rule 30 spent two years cleaning & contextualising raw data before training a single model.

The insight: Crunchbase/PitchBook/Dealflow data is useless without massive transformation. No academic will do that work (ruins a PhD). No traditional VC will do that work (no incentive). They did it anyway.

3️⃣ The label problem — how do you train when outcomes take 10–12 years?

Standard approach: wait forever → impossible.

Rule 30’s solution:

For every vintage since 2010, label the top decile by valuation delta from first round

Found that 5-year delta is >90 % correlated with 12-year outcome at portfolio level

Trains on 5-year labels → still predictive of terminal DPI

This is the single biggest technical unlock. Everything else flows from it.
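
The labelling step described above can be sketched as follows. This is a minimal illustration, not Rule 30's pipeline: the record fields (`vintage`, `first_round_valuation`, `valuation_5y`), the use of a valuation multiple as the "delta", and the rounding rule for the decile cut-off are all assumptions.

```python
from collections import defaultdict
from math import ceil


def label_top_decile(companies):
    """Return {company name: 1 or 0}, where 1 marks the top 10% of each
    vintage ranked by 5-year valuation multiple over the first round.

    `companies` is a list of dicts with hypothetical keys:
    name, vintage, first_round_valuation, valuation_5y.
    """
    by_vintage = defaultdict(list)
    for c in companies:
        multiple = c["valuation_5y"] / c["first_round_valuation"]
        by_vintage[c["vintage"]].append((multiple, c["name"]))

    labels = {}
    for rows in by_vintage.values():
        rows.sort(reverse=True)       # highest multiple first
        n_top = ceil(len(rows) / 10)  # at least one positive label per vintage
        for rank, (_, name) in enumerate(rows):
            labels[name] = 1 if rank < n_top else 0
    return labels


# Synthetic data: 20 companies in one vintage with 5-year multiples of 1x to 20x.
toy = [
    {"name": f"co{i}", "vintage": 2010,
     "first_round_valuation": 5.0, "valuation_5y": 5.0 * i}
    for i in range(1, 21)
]
labels = label_top_decile(toy)
winners = sorted(name for name, y in labels.items() if y == 1)
print(winners)  # ['co19', 'co20']
```

Ranking within each vintage, rather than across the whole dataset, keeps labels comparable between hot and cold market years, which is presumably why the post frames the labels per vintage.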

4️⃣ Personality isn’t magic — it’s a time-series slope

They map every founder’s professional + network trajectory against age-matched cohorts in the same geography.

Outlier slopes (rate of status jumps, quality-adjusted connections, etc.) are one of the strongest features.

Also: pre-investment graph velocity predicts who will lead the next round before term sheets are out.

5️⃣ Portfolio construction — the math is brutal and unambiguous

75–85 deals, fixed €250–500 k checks, no follow-ons

Why? Pure DPI maximisation under power-law

Concentrated only works if you’re actually Benchmark (real brand + real help)

30–40 deal “middle” portfolios are mathematically broken

Their model has 97.5 % confidence of ≥3× net in worst-case black-swan scenario

Target: 6–8× net with massively reduced volatility
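
The portfolio-size argument can be explored with a toy Monte Carlo. Everything here is an assumption for illustration and not Rule 30's model: 65% of deals return nothing, the rest draw a gross multiple from a Pareto distribution with shape `ALPHA = 1.2`, checks are equal-sized, and fees and follow-ons are ignored.

```python
import random
import statistics

ALPHA = 1.2    # assumed Pareto tail shape for non-zero outcomes
P_ZERO = 0.65  # assumed share of deals that return nothing


def simulate_portfolios(n_deals, n_trials, seed=0):
    """Gross multiple of n_trials equal-weighted portfolios of n_deals each."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        total = 0.0
        for _ in range(n_deals):
            if rng.random() >= P_ZERO:
                total += rng.paretovariate(ALPHA)  # multiple of at least 1x
        results.append(total / n_deals)
    return results


def summarize(multiples):
    """10th percentile, median, and share of portfolios returning >= 3x gross."""
    deciles = statistics.quantiles(multiples, n=10)
    return {
        "p10": deciles[0],
        "median": statistics.median(multiples),
        "share_3x": sum(m >= 3 for m in multiples) / len(multiples),
    }


# Compare a 30-deal "middle" portfolio with an 80-deal one.
for n in (30, 80):
    print(n, summarize(simulate_portfolios(n, n_trials=5000)))
```

Comparing `p10` across the two portfolio sizes shows how the downside changes with deal count under these assumptions. The output is sensitive to `ALPHA` and `P_ZERO`, and it is not expected to reproduce the 97.5% confidence or 6–8× figures quoted above.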

Is this the new reality?

AI

What’s New with ChatGPT Voice

YouTube • OpenAI • December 5, 2025

AI•Tech•ChatGPTVoice•VoiceAssistant•ProductUpdate

Overview

This content invites viewers to watch a video that explains recent updates and capabilities related to ChatGPT’s voice functionality. The central theme is improving how users interact with ChatGPT in a more natural, conversational way through spoken input and audio output, turning the model into something closer to a real-time voice assistant. The emphasis is on demonstrating how voice makes ChatGPT more accessible, more fluid in everyday use cases, and more useful across contexts such as work, learning, and personal assistance.

Core Purpose of ChatGPT Voice

ChatGPT Voice is positioned as a way to talk to the model hands-free, using speech instead of typing.

The feature aims to make interactions feel more like a conversation with a person—quick back-and-forth, clarifications, and follow-up questions spoken aloud.

The voice modality supports situations where users are on the move, multitasking, or simply prefer speaking to writing.

Key Capabilities Highlighted

Users can speak questions or prompts, and ChatGPT responds with synthesized speech.

The system is designed to handle complex queries, extended conversations, and step-by-step explanations just as it does in text.

Voice can be used for a variety of tasks:

Brainstorming ideas or drafting content by dictation.

Getting explanations of difficult concepts in plain language.

Receiving guidance or walk-throughs (e.g., recipes, instructions, planning tasks) while the user’s hands are busy.

The demonstration underscores seamless transitions between topics, mirroring natural human conversation.

User Experience and Interaction Flow

The video encourages users to “watch this video on YouTube” to see the feature in action, suggesting a focus on live demonstration rather than technical documentation.

It likely shows:

How to start a voice conversation within the ChatGPT interface or app.

How the model responds in real time, including pauses, follow-up questions, and corrections.

How the voice experience preserves context across a conversation, just like text chat.

Emphasis is placed on ease of use: minimal setup, intuitive controls (tap to speak, tap to stop), and straightforward access inside the existing ChatGPT product.

Implications and Potential Impact

Voice dramatically broadens when and where people can use ChatGPT: commuting, cooking, exercising, or any situation where typing is inconvenient.

It can make AI tools more inclusive for users who have difficulty typing or reading on screens, or who are more comfortable with spoken language.

As voice becomes more central, ChatGPT begins to resemble a general-purpose digital assistant, potentially competing with or complementing existing smart speakers and mobile voice assistants.

The update supports a trend toward multimodal AI—systems that accept and produce different kinds of inputs (text, voice, possibly images) in a unified, conversational experience.

Key Takeaways

ChatGPT Voice enables natural, real-time spoken conversations with the model.

The feature is designed for convenience, accessibility, and more human-like interaction.

It supports a wide range of use cases—from learning and productivity to daily assistance—without requiring users to rely solely on typing.

By showcasing the feature via YouTube, the content focuses on visual and auditory demonstration, helping users quickly understand how to use and benefit from ChatGPT Voice.

ChatGPT will decide what Americans buy this holiday

Fast Company • December 5, 2025

AI•ECommerce•AIShopping•RetailDiscovery•ConsumerBehavior

The way consumers search is changing faster than the industry expected. This holiday season, many shoppers are looking for gifts inside AI platforms, rather than retailer sites or traditional search. They are asking natural questions like:

“Find me a cruelty-free skincare gift for sensitive skin under $100.”

“What are good gift ideas for a three-year-old that are safe and durable?”

“What are the safest, nontoxic treats for my Golden Retriever?”

This shift is already measurable. Adobe Digital Insights reports a 4,700% year-over-year increase in retail visits driven by AI assistants between July 2024 and July 2025. At the same time, click-through rates from SEO have dropped 34% as users bypass the search results page entirely. eMarketer reports 47% of brands have no idea whether they appear in AI-driven discovery at all.

The platforms know this shift is accelerating. Google’s recent decision to add conversational shopping and AI-mode ads just weeks before the holidays shows how quickly consumer behavior is moving. Brands must adjust too.

Despite the complexity behind AI systems, three simple signals determine which products get recommended: trust, relevance, and extractability. These signals are the backbone of how AI decides what to surface, and matter as much as packaging, price, or placement.

AI systems develop a sense of which sources to believe during training. Domains with consistent verification signals gain more weight because the model has learned they usually publish accurate information.

This is why leading retailers, including Ulta, Sephora, Target, Amazon, and Bloomingdale’s, rely on independent verification partners for the claims displayed on their digital shelves. Verified domains act as trust anchors. When a model must choose, it selects the product backed by clearer and more reliable sources.

Trust often determines whether you are included in the answer at all.

AI assistants answer based on meaning, not keywords. When a shopper asks for “eczema-safe moisturizer” or “gluten-free protein bars,” the system retrieves products whose attributes clearly map to those concepts.

Relevance depends on using consistent claims across every channel you sell in; consistency is heavily prioritized. When multiple sources concur, the repeated confirmation reinforces that your product is the right choice.

Missing or inconsistent attributes keep your product out of the candidate pool.

Meta buys AI pendant start-up Limitless to expand hardware push

FT • December 5, 2025

AI•Tech•MetaAcquisition•Wearables•LimitlessPendant

Meta has acquired Limitless, an AI wearables start-up known for its pendant-style device that continuously records, transcribes and summarizes real-world conversations. The deal marks a clear signal that Meta is broadening its hardware ambitions beyond virtual reality headsets and smart glasses into a wider ecosystem of AI-powered, always-on devices. Limitless, previously called Rewind, built its product as a personal memory aid, positioning it as a way for users to “rewind” and search through their past interactions using AI-generated summaries.

Limitless’s Technology and Product

Limitless’s flagship product is an audio pendant that clips to clothing and records in-person conversations, meetings and ambient dialogue.

The device uses AI to transcribe speech in real time, then stores and organizes this data so users can search and retrieve specific information later, effectively functioning as an external memory system.

A companion app provides searchable transcripts and condensed summaries of conversations, turning raw audio into structured knowledge that users can revisit for work, personal organization or recall.

Strategic Fit with Meta’s Hardware and AI Vision

The acquisition aligns with Mark Zuckerberg’s stated push toward “personal superintelligence” delivered through consumer devices, not just via apps on phones or PCs.

Meta has already invested heavily in smart glasses and VR/AR hardware; adding an AI pendant indicates a broader bet on ambient, screenless computing and voice-first interfaces.

Limitless’s team and technology will be folded into Meta’s Reality Labs division, which is responsible for experimental hardware and has recently added senior design leadership from Apple, suggesting Meta is serious about industrial design and user experience in its next generation of devices.

The deal also reflects a rebalancing of Meta’s priorities, as it puts more emphasis on near-term AI hardware experiences rather than the longer-horizon metaverse vision that previously dominated its strategy.

Financial and Market Context