Contents

Essays

Bubble?

AI

As consumers ditch Google for ChatGPT, Peec AI raises $21M to help brands adapt

TurboTax gets an AI upgrade as Intuit inks major deal with OpenAI

Microsoft and Nvidia to invest up to $15bn in OpenAI rival Anthropic

Gemini 3 may be the moment Google pulls away in the AI arms race

Robinhood’s Vlad Tenev on AI, Prediction Markets, and the Future of Trading

Can business schools really prepare students for a world of AI? Stanford thinks so

ChatGPT Group Chats, Meta and the Encryption Trade-off, Network Effects and Ad Models

The Next Billion-Dollar Opportunity in Voice AI Just Unlocked: Licensed Voice/Image Rights

Thiel’s Hedge Fund Sells Entire Nvidia Stake | Bloomberg Tech

AI Isn’t a Bubble But a Long-Term Opportunity, JPMorgan’s Erdoes Says

Venture

‘Our funds are 20 years old’: limited partners confront VCs’ liquidity crisis

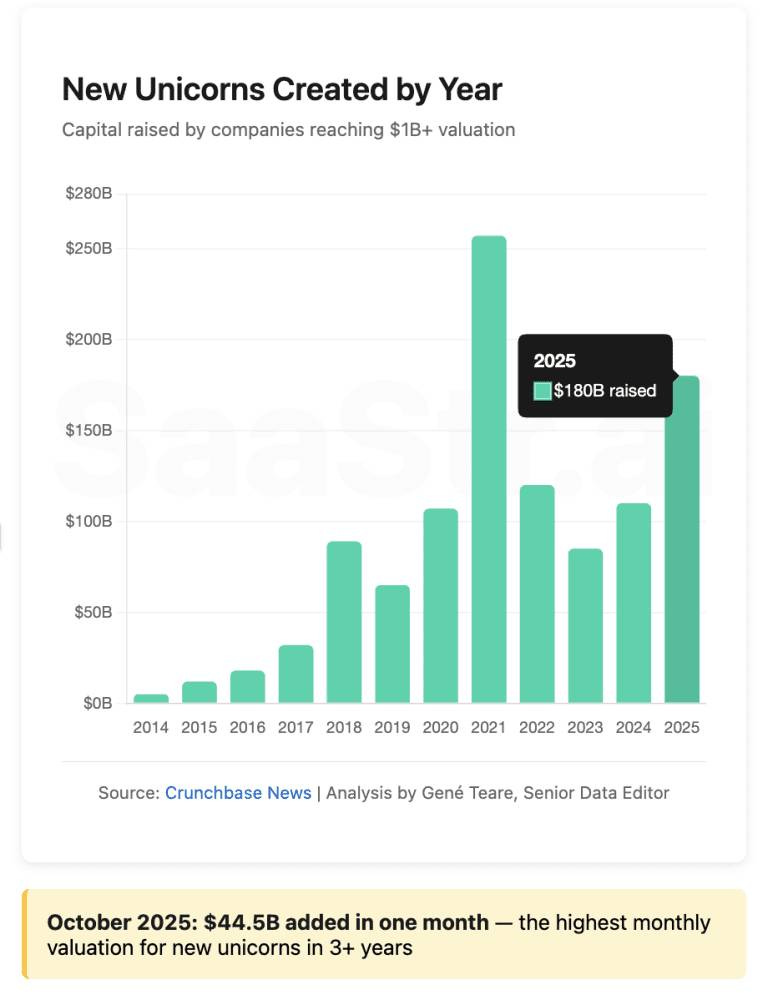

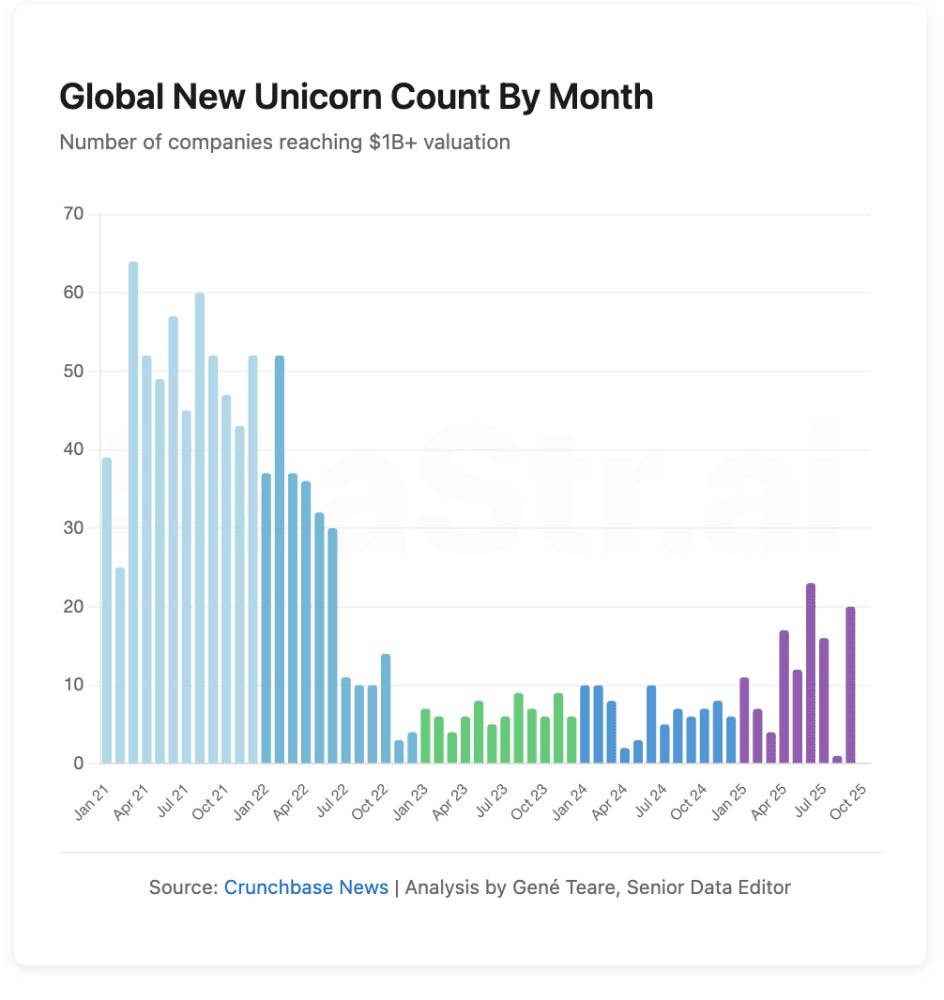

Unicorns Pick Up For The Second Month In A Row, Adding Close To $45B To The Board

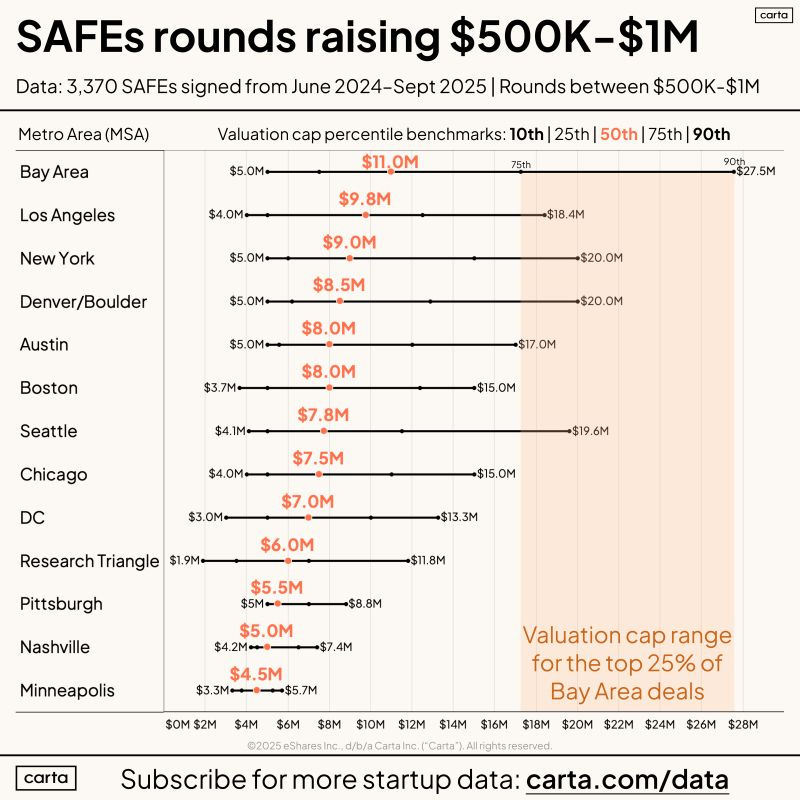

How to determine the right valuation for your startup | Peter Walker posted on the topic | LinkedIn

Portfolio valuations need to stand the scrutiny of auditors #valuations #fundaudit

Seedcamp’s investment in Function Health’s $298M Series B via our Select Fund

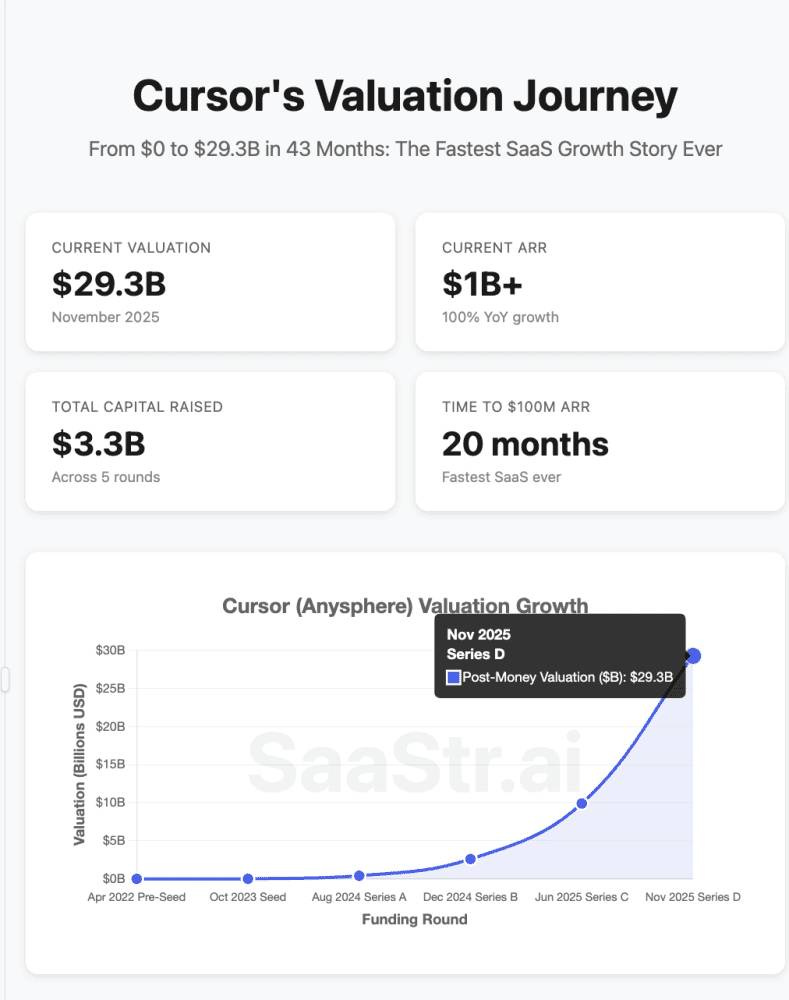

Cursor Hit $1B ARR in 24 Months: The Fastest B2B To Scale Ever?

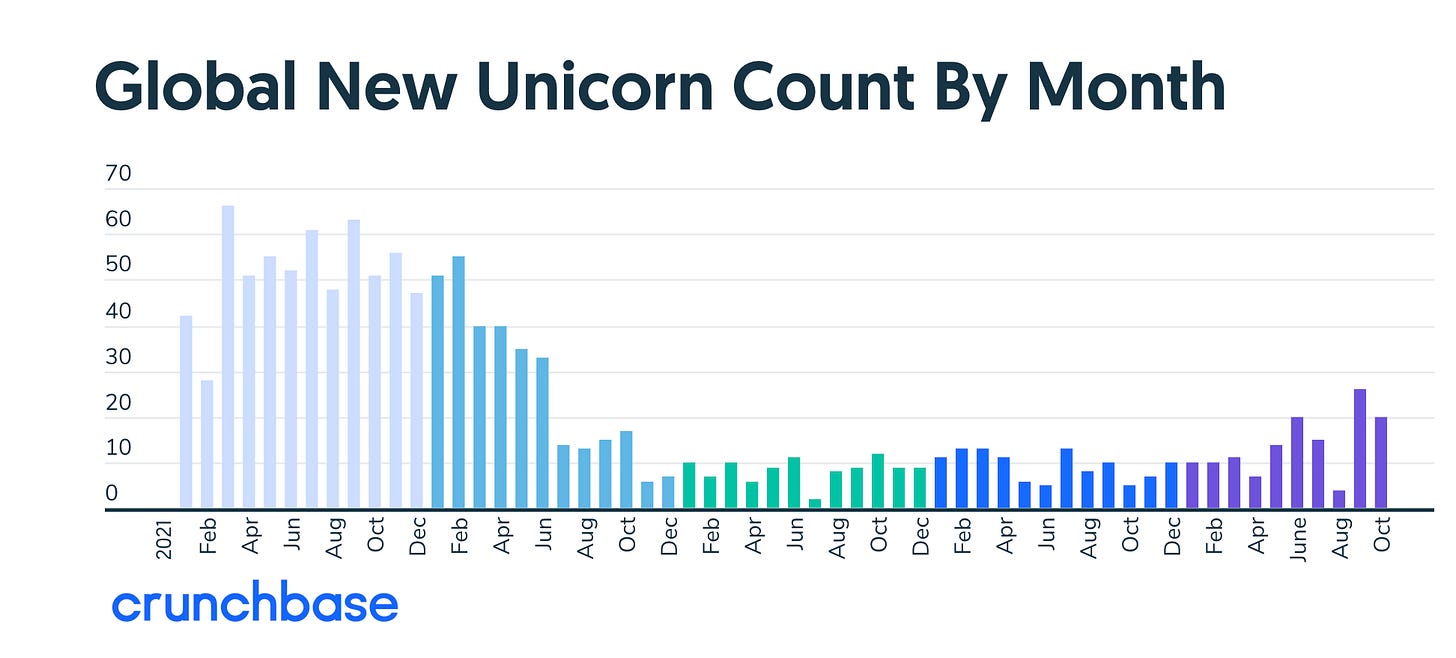

Crunchbase: We’re At The Highest Level of Unicorn Production in 3+ Years

Regulation

GeoPolitics

Interview of the Week

Startup of the Week

Post of the Week

Editorial:

AI, Agents & the New Age of Progress: Work, Poverty & the Future.

If you read the headlines this week, you might believe the internet is collapsing. Between Fast Company’s warning that AI browsers are “trying to kill” the open web and Niall Ferguson’s dark diagnosis of an “OpenAI House of Cards,” the prevailing mood is one of defensive panic. The narrative is simple: AI is a parasite eating its host, stealing the clicks that fund human creativity, and inflating a financial bubble that will inevitably burst.

It is a compelling story. It is also wrong.

We are not witnessing the destruction of the web, or the end of a house of cards, but the beginning of a new age of progress. The link between the AI browser and the end of poverty may seem weak, but there is a straight line from automation of tasks to the end of work, money and poverty, as Elon Musk predicted this week.

The panic over “stolen clicks” misses the much larger structural shift underway: the merger of the internet, the browser, the brain and the real world via robotics. We are moving from a world of passive consumption to one of active delegation and action, a shift that, if managed correctly, doesn’t just save the web’s economics but potentially solves the problem of labor and poverty.

Baby Steps: The Browser Wakes Up

For thirty years, the “browser” has been a dumb window—a pane of glass we look through to find information. But as Tanay Jaipuria notes in “The Rise of Background Agents,” that era is ending. We are transitioning from “chatting with” AI to “assigning tasks to” AI. The future isn’t a smarter search bar; it is a background process that books your travel, refactors your code, and manages your life while you sleep.

This is the “Intelligent action based UI.” In this model, the browser ceases to be a window and becomes an agent. Fast Company worries this will “kill the open web” by removing the need to visit websites. “If an agent can read every review... and buy the product without you ever visiting a website,” they argue, the ad model collapses.

They are right about the ad model, but wrong about the consequence.

This is a micro problem. But it does have a solution. The solution isn’t to force AI to send us back to ad-cluttered pages we hate; it is to integrate an authoritative “links database” into the AI itself.

We need a business model transition where “paid links” and attribution move from the webpage to the interface.

If an AI agent uses a publisher’s review to make a purchase decision, the value transfer should happen between the AI platform and the seller. The web doesn’t die; it just gets a new, more efficient front door.

But the implications of AI agents carrying out tasks are much larger, especially when they become embedded in robotics.

Bigger Steps: The Economics of Optional Work

If we solve the interface problem, we unlock the economic promise that Elon Musk hinted at this week. In a viral clip, Musk argued that in a future of abundant intelligence and robotics, “work will be optional” and “currency becomes irrelevant.”

It is easy to dismiss this as sci-fi hyperbole, but the economic logic is sound. If the marginal cost of intelligence (via Gemini 3.0) and the marginal cost of labor (via humanoid robotics) trend toward zero, the cost of goods and services must follow. We are entering an era of deflationary abundance.

However, the “end of work” shouldn’t mean the end of purpose. As David Friedberg argued in a sharp rebuttal to Rep. Ro Khanna, trying to protect existing jobs by stalling technology is a trap. “If you had done this with the emergence of the tractor to protect loss of jobs,” Friedberg notes, we would still be subsistence farmers. The goal isn’t to preserve the drudgery of the present, but to automate it away so that human labor becomes a choice, not a survival mechanism.

Problem to Solve: The Policy Gap

This utopian outcome, a world where the internet supports billions of intelligent agents and work is a hobby, is all but inevitable. But who benefits from it is a policy choice.

Without a new framework, the “Background Agents” that own our attention will be owned by a tiny oligopoly, a fear reinforced by Microsoft and Nvidia’s planned investment of up to $15 billion in Anthropic. If we allow the “rich to get richer” without structural reform, the abundance of AI will be hoarded, not shared.

This is where Peter Leyden’s argument for “A New Progressive Era” becomes the most critical piece of the puzzle. Leyden compares our current moment to the Gilded Age—a time of terrifying inequality and rapid technological change that eventually birthed the middle class through aggressive political reform. He wants a new kind of state and government to realize this potential, whereas I am a sceptic on trusting Government (with a big G) to deliver it. But we agree on the opportunity.

We are at that same crossroads. The technology to liberate us from toil is arriving. The browser is evolving into a tool of immense power, but that is a baby step. The question for 2026 is not “Will the bubble burst?” but “Will we build the rules to ensure abundance is distributed?”

The web can be saved. Work can be optional. Poverty can be a thing of the past. But only if we stop panicking about preserving the past and start legislating for the future. For that we need a bottom-up desire for the things Elon Musk articulated this week (see Post of the Week).

Essay

A New Progressive Era Is Emerging

Peterleyden • Peter Leyden • November 19, 2025

Essay•GeoPolitics•Progressive Era•Artificial Intelligence•US Politics

A wave of new general-purpose technologies transforming America. The entrepreneurs and investors behind the technologies capturing vast amounts of wealth. Tech titans exerting unprecedented power in politics amid the increasing corruption of government. The nation experiencing mounting income inequality and the rise of populists on the right and left.

Many might say that’s a good description of America today, but it could also describe the country in the late-19th century, sometime around 1895. That was the high point of what Americans still call “The Gilded Age of the Robber Barons,” industrialists who amassed spectacular fortunes around the general-purpose technologies of their time, including electricity. The most powerful of them, such as those who controlled the vast networks of railroads, also controlled many elected officials at all levels of government. This helped ensure they got what they wanted, even if it was against the interests of the masses, leading to the rise of angry movements on the right and left in the forms of rural prairie populists and urban socialists.

But America at the turn of the 20th century did not devolve into an authoritarian plutocracy as many feared. Nor did the populists on the right or left ever amass the power needed to transform America along the lines of their more extreme visions.

What actually happened? Intellectuals and educated professionals, upper-middle-class elites from both the Republican and Democratic parties, thinkers and doers from left-of-center and right-of-center on the political spectrum, ignited a reform movement that, over the next 25 years, transformed how America’s economy and society worked.

What started as an elite endeavor of big ideas quickly attracted broad-based support from the mainstream middle and working classes. With a roughly 60/40 majority, the coalition was able to drive many structural changes in America, including fundamental amendments to the U.S. Constitution.

We now call this period of great reform from 1895 to 1920 — a time that remade America in general and its urban areas in particular — The Progressive Era.

I think a strong case can be made that America today could be entering a similar era of structural reform and great progress — one that may be eventually seen as The 21st-century Progressive Era.

Despite widespread fears from left-of-center, America will not devolve into a right-wing autocracy controlled by a plutocracy of billionaires with a nod from the tech titans — even given MAGA and President Donald Trump’s authoritarian tendencies.

Despite widespread fears from the right-of-center, America won’t fall under the control of the far left, with old-school socialism and big government bureaucrats at the helm. That’s even more of a distant dream.

I think the most probable outcome of our current juncture will be the emergence of a new majority of smart, practical, common-sense Americans. They will embrace the realities of powerful new general-purpose technologies like artificial intelligence, while recognizing the need to restructure the economy and reform society to ensure the techs’ benefits are shared by all.

We’re still in the early stages, but a realignment within politics and the acceleration of great progress may well be in our near future.

New York Is An Industry Town

Digitalnative • Rex Woodbury • November 19, 2025

Essay•AI•New York City•Applied AI•Startup Ecosystems

San Francisco and New York are the two biggest startup hubs in the world.

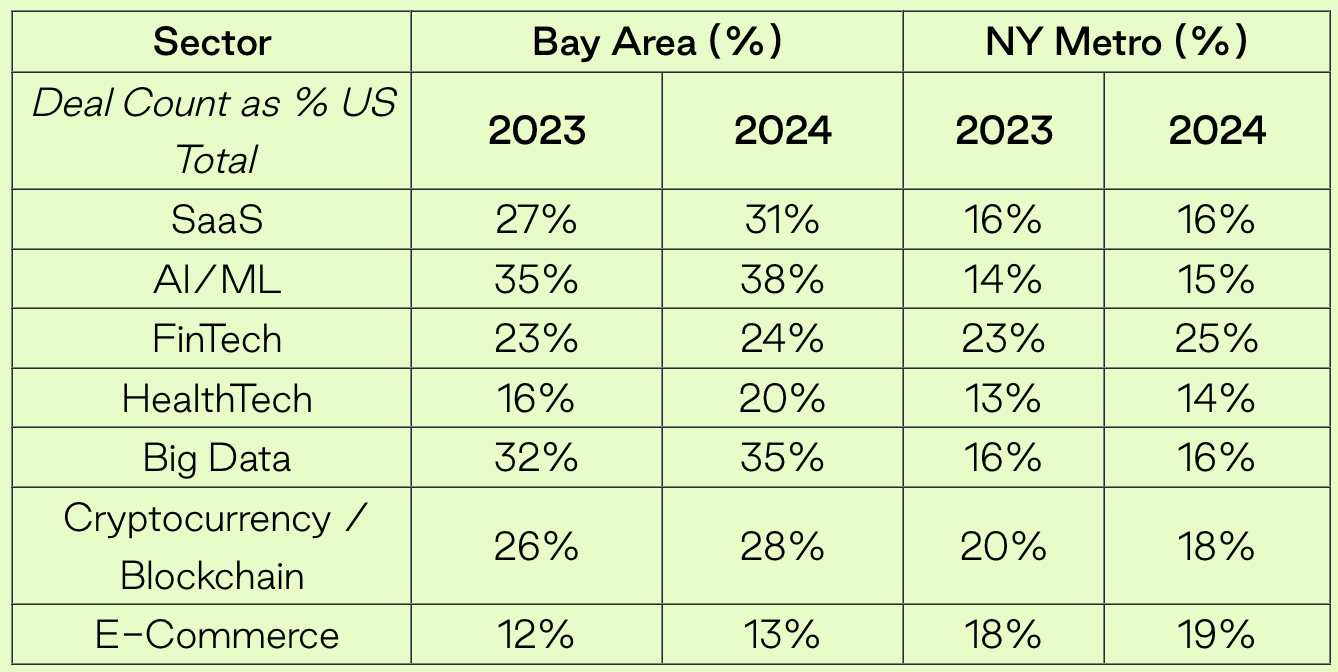

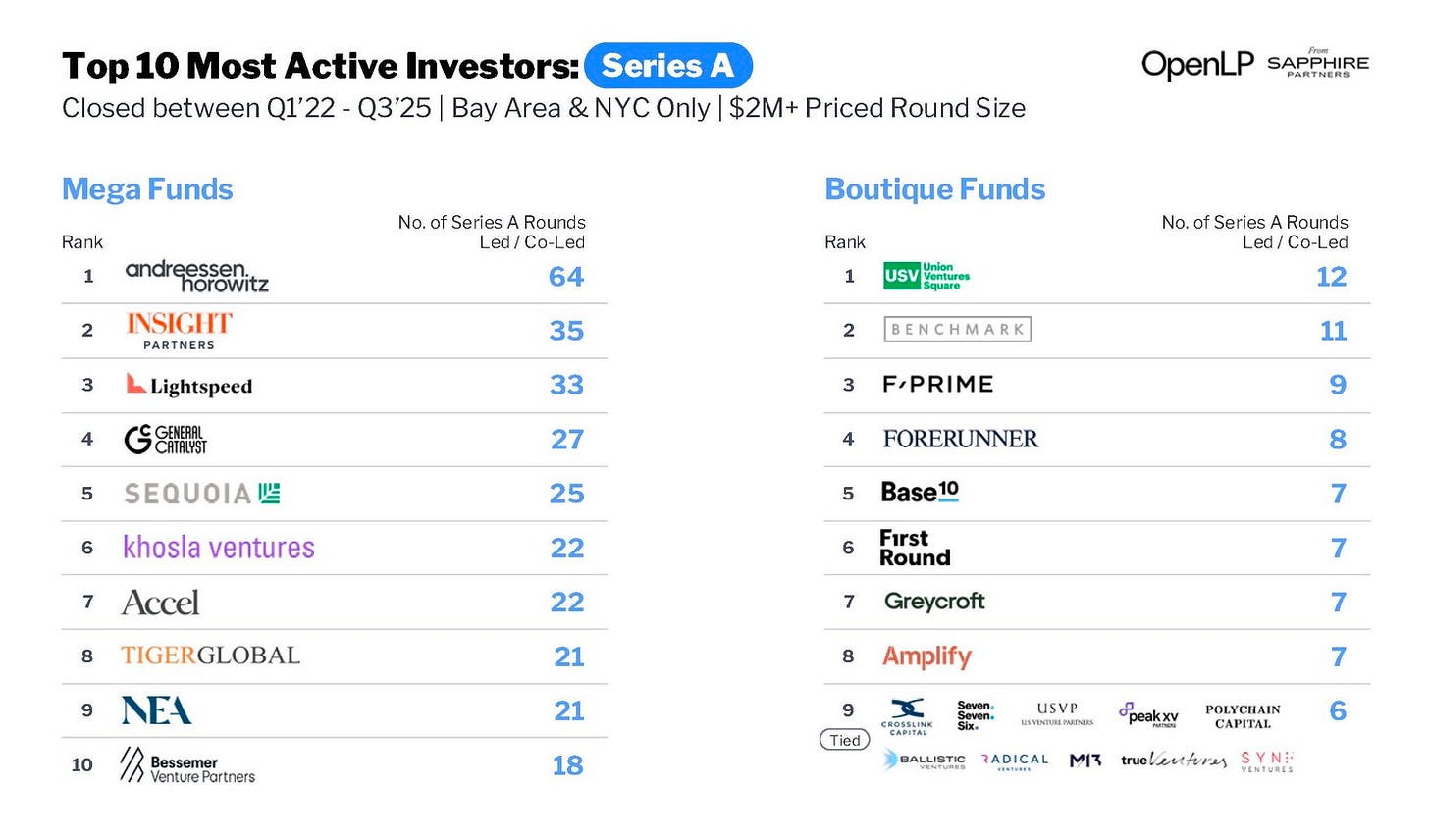

The Bay Area ranks first, with ~25% of U.S. venture deals (measured as a percent of the total number of deals). New York follows with ~14% of deals last year. Here’s a breakdown by sector:

This is U.S. data, but we see the same trend globally, with SF and NYC meaningfully outpacing 3rd-place Beijing. For many years, we’ve had a clear 1 and a clear 2.

This gets at a familiar debate in tech: NYC vs. SF.

I lived in the Bay for five years before moving back to New York a few years ago. The right answer is: both cities are great places to build startups! There’s a reason they rank 1 and 2, and they probably will for years to come.

AI has been a deus ex machina for San Francisco, which saw a pandemic exodus that spurred lots of hand-wringing and “SF is dead” proclamations. Those proclamations were always overblown; SF never lost its seat as the nucleus of technology.

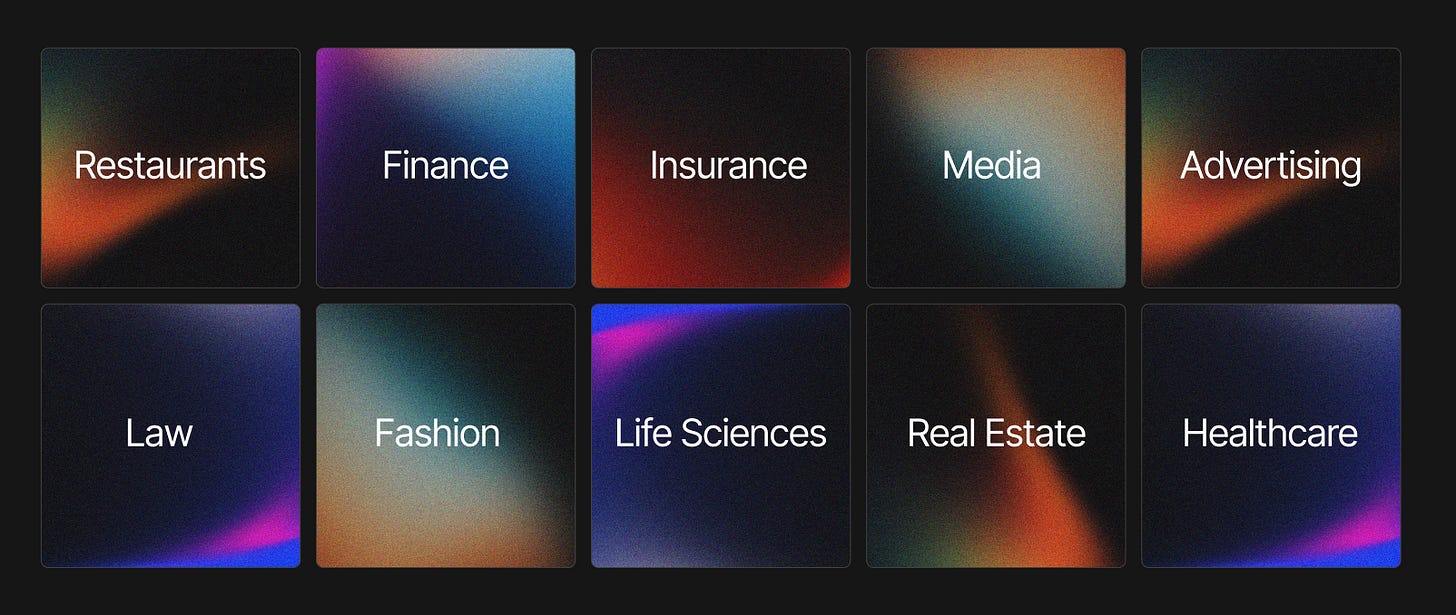

But New York has boomed in recent years, and I think its star is only rising. The Bay dominates for foundation models and infrastructure companies. But New York is perfectly suited to applied AI. Why? Well, because New York is an industry town.

You could make the argument that New York is a (the?) global epicenter for a dozen major industries. To take 10 examples:

Let’s tick through New York industries and look at opportunities for reinvention.

Presentations — Benedict Evans | Benedict Evans | 40 comments

LinkedIn • Keith Teare • November 19, 2025

LinkedIn•Essay

Twice a year, I produce a big presentation exploring macro and strategic trends in the tech industry.

“You don’t need to learn to code” = BAD ADVICE

Youtube • 20VC with Harry Stebbings • November 17, 2025

Essay•AI•Coding•Startups•Founders

Core Argument

The content presents a concise argument that the common advice “you don’t need to learn to code” is misleading, especially for ambitious people working in technology, startups, or product-building environments. The central thesis is that while not everyone needs to become a professional software engineer, understanding how to code confers a significant strategic edge in terms of creativity, execution speed, and credibility with technical teams. Coding is framed less as a narrow technical specialty and more as a foundational literacy for modern builders and operators, akin to being able to read a balance sheet in business or use spreadsheets in finance. The message targets non-technical founders, operators, and aspiring entrepreneurs who might otherwise rationalize away the effort required to develop technical fluency.

Why Coding Matters Beyond Engineering Roles

Coding is portrayed as a force multiplier for anyone who wants to build products, automate workflows, or experiment quickly.

Even a basic ability to write scripts, prototype interfaces, or manipulate data can eliminate friction, reduce dependency on others, and accelerate iteration cycles.

Technical fluency enables better communication with engineers: asking for realistic timelines, understanding tradeoffs, and scoping work in ways that are implementable.

Rather than being treated as optional, coding is positioned as a skill that expands the surface area of what an individual can do on their own, especially in early-stage environments where resources are limited.

Reframing “You Don’t Need to Learn to Code”

The statement “you don’t need to learn to code” is critiqued as comforting but ultimately disempowering advice for people who want to be builders.

The underlying implication is that this phrase often becomes a convenient excuse to stay in a comfort zone of purely non-technical tasks, relying on others for core product execution.

The content suggests that while no one is literally forced to learn to code, those who do will be able to see more opportunities, validate ideas faster, and make more informed decisions.

In a world increasingly shaped by software, not learning to code is framed as voluntarily giving up leverage.

Impact on Founders and Operators

For founders, knowing how to code—even modestly—can:

Help them build the first version of a product themselves.

Make them more credible to high-caliber engineers, who respect leaders that understand the work.

Reduce early hiring pressure and extend runway by delaying the need for a large technical team.

For operators (in roles like growth, ops, or product), coding skills unlock:

Automation of repetitive workflows.

Custom internal tools tailored to the team’s actual needs.

Data-driven decision-making through simple scripts or dashboards rather than waiting on engineering resources.

The overarching impact is that individuals with coding skills can move from “asking others to do things” to “directly changing the product or system” themselves.

Broader Implications

Coding is implicitly framed as a modern career hedge: as automation, AI, and software increasingly shape industries, being able to interact with these systems at a technical level makes one more resilient and adaptable.

The message also hints at a cultural shift: in tech-centric ecosystems, the distinction between “technical” and “non-technical” is eroding, and those who bridge the gap gain outsized influence.

By challenging the idea that coding is optional, the content encourages a mindset of ownership—if you want to build, you should embrace the skills that let you build directly, rather than outsourcing the core of your creative power.

Key Takeaways

Coding is not only for engineers; it is a leverage skill for anyone serious about building products or companies.

The comforting narrative that you can succeed in tech without any coding knowledge is challenged as bad advice for ambitious builders.

Even basic technical proficiency dramatically increases speed, autonomy, and credibility in early-stage and high-growth environments.

In a software-driven world, choosing not to learn to code is effectively choosing to limit your scope of impact and control over what you create.

Student Debt as Modern American Serfdom: A Mother Stole $200,000 in Her Daughter’s Name

Keenon • November 18, 2025

Essay•Education•Student Debt•Bankruptcy•Debt Collective

It’s the ultimate financial nightmare. Kristin Collier, a young student in Minnesota, woke up one morning to discover that her mother had taken out $200,000 in loans in Kristin’s name. Collier tells this story in What Debt Demands, a book about America’s student debt crisis that is both personal and political. Collier, who proudly defines herself as a “democratic socialist”, believes that student debt is a form of modern American serfdom. So what to do? She argues for massive debt cancellation, free public higher education funded by taxes on stock trades, and restoring bankruptcy protections that existed before 2005. But with the average American now carrying $105,000 in debt and one in four households living paycheck to paycheck, can any political initiative, Mamdani-style democratic socialism or otherwise, actually address this crisis before it triggers a nightmarish financial crisis in the broader economy?

Student Debt Has Become Inescapable Serfdom

Since 2005, student loans, both federal and private, have been nearly impossible to discharge through bankruptcy. Borrowers must meet an “undue hardship” standard so stringent that people are literally having their Social Security payments garnished in retirement to pay off loans taken out at age 20. Unlike mortgages or credit card debt, education debt follows you for life.

Private Student Lenders Operate Like Subprime Mortgage Predators

During the mid-2000s, banks offered “direct consumer private loans” up to $30,000 with no school certification required, transferred straight to bank accounts, with interest rates of 10-12%. A $30,000 loan could balloon to $100,000. Collier’s mother was able to take out eight separate loans totaling $200,000 using only a Social Security number and forged signature; the system had no safeguards because lenders prioritized profit over verification.
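As a rough check on how a $30,000 balance could reach $100,000 at the quoted 10-12% rates, here is a minimal sketch. It assumes annual compounding with no payments and no fees, which the source does not specify; real loan terms vary, and the function name is purely illustrative.

```python
import math


def years_to_reach(principal: float, target: float, annual_rate: float) -> float:
    """Years for an unpaid balance to grow from principal to target.

    Assumption (not from the source): interest compounds once per year
    and the borrower makes no payments in the meantime.
    """
    return math.log(target / principal) / math.log(1 + annual_rate)


for rate in (0.10, 0.12):
    years = years_to_reach(30_000, 100_000, rate)
    print(f"{rate:.0%}: {years:.1f} years")
# prints:
# 10%: 12.6 years
# 12%: 10.6 years
```

Under these simplified assumptions, an untouched $30,000 loan would pass $100,000 in roughly 11 to 13 years, which is consistent with the scale of growth described above.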

Biden’s Big Moves Failed, But Smaller Wins Succeeded

Biden’s signature executive action to cancel $10,000-$20,000 in federal student debt (which would have freed 20 million borrowers) was blocked by courts, as was his generous SAVE income-driven repayment plan. However, his reforms to Public Service Loan Forgiveness, existing income-driven repayment programs, and borrower defense protections have canceled billions in debt, demonstrating that incremental administrative changes work better than bold executive action in our current legal landscape.

The Debt Crisis Extends Far Beyond Students

With average American consumer debt at $105,000 and one in four households living paycheck to paycheck, we’re potentially heading toward systemic economic collapse. The issue isn’t just student loans; it’s medical debt, rental debt, and a broader affordability crisis. Collier’s organization, the Debt Collective (born from Occupy Wall Street), treats this as a collective action problem requiring a union of debtors across all categories.

Debt Creates Psychological Haunting, Not Just Financial Burden

Collier describes debt as both “presence and absence”: a constant bodily heaviness and dread. She feared her credit card would be rejected at grocery stores, dreaded checking her bank account, assumed every unknown phone number was a debt collector. This shame is culturally reinforced: Americans are taught that unpayable debt reflects personal moral failure, even when the system itself is predatory. One borrower told her he avoided dating entirely because he was too ashamed to reveal his debt burden.

Prediction Markets to Rival Stocks Within Years, Kalshi CEO Says

Bloomberg • November 18, 2025

Essay•GeoPolitics•Prediction Markets•Financial Innovation•Market Structure

Overview of Rapid Growth in Prediction Markets

Prediction markets, platforms where people trade contracts based on the outcomes of future events, are expanding much faster than earlier industry expectations. The key claim is that these markets could grow to rival traditional stock exchanges within a few years. This reflects a broader shift in how individuals and institutions seek to express views on real-world events, manage risk, and access financial instruments tied to politics, economics, and other measurable outcomes.

Key Drivers of Accelerated Expansion

The rapid pace of growth suggests surging demand from both retail users and more sophisticated traders who see prediction markets as an alternative or complement to conventional financial assets.

Market participants are increasingly attracted by the ability to trade on clearly defined outcomes—such as election results, macroeconomic indicators, or policy decisions—rather than only on the performance of companies or indexes.

A faster-than-expected adoption curve implies improving market infrastructure, easier user interfaces, and greater regulatory clarity, all of which help reduce friction for new users and capital inflows.

Comparison to Traditional Stock Exchanges

The central argument is that prediction markets could reach a scale comparable to stock exchanges in a relatively short period of time. That means not just niche speculation, but significant volumes of capital and liquidity.

While stock exchanges focus on ownership stakes in companies, prediction markets provide direct exposure to “event risk.” As these markets deepen, they may begin to serve similar functions to stock markets in terms of price discovery and hedging, but for a wider array of real-world outcomes.

If prediction markets do approach the size of stock exchanges, they could become a primary venue for expressing views on macro events, much as equities are for corporate prospects today.

Implications for Finance, Risk Management, and Information

For investors and traders, prediction markets offer a new toolset to hedge or speculate on specific events—such as elections, regulatory decisions, or economic releases—that currently must be managed indirectly via equities, bonds, or derivatives.

Broader and deeper prediction markets could improve the quality of public information. As more participants trade on their beliefs and information, market prices may become a widely referenced “probability signal” for key events, similar to how stock prices signal expectations about corporate performance.

The convergence in scale between prediction markets and stock exchanges would blur traditional boundaries between speculative trading, risk management, and information aggregation, potentially reshaping parts of the financial ecosystem.
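One way to make the “probability signal” idea above concrete is the following minimal sketch. It assumes a simple binary contract that pays $1 if the event occurs and $0 otherwise, and it ignores fees, spreads, and the time value of money. The function names are hypothetical and do not correspond to any platform’s API.

```python
def implied_probability(price: float, payout: float = 1.0) -> float:
    """Read a binary contract's price as the market-implied probability.

    Assumption: the contract pays `payout` if the event happens, else 0.
    """
    if not 0 <= price <= payout:
        raise ValueError("price must lie between 0 and the payout")
    return price / payout


def expected_profit(price: float, believed_probability: float,
                    payout: float = 1.0) -> float:
    """Expected profit per contract for a buyer with their own probability estimate."""
    return believed_probability * payout - price


# A contract trading at $0.62 implies roughly a 62% chance of the event.
print(f"implied probability: {implied_probability(0.62):.0%}")
# A trader who believes the true chance is 70% expects about $0.08 per contract.
print(f"expected profit: ${expected_profit(0.62, 0.70):.2f}")
```

The gap between a trader’s own estimate and the market price is what gives participants an incentive to trade, and that trading is what moves the price toward the crowd’s aggregate belief.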

Strategic and Regulatory Considerations

Rapid growth raises strategic questions for existing financial institutions. Banks, hedge funds, and asset managers may need to integrate prediction markets into their analytics, risk frameworks, or even product offerings.

Regulators will likely face pressure to clarify how these markets are classified and supervised—whether as derivatives, gaming, or a new financial category—given their potential impact and the size envisioned in the near future.

If prediction markets do rival stock exchanges in a few years, policymakers will need to consider how these platforms influence public expectations and decision-making, particularly for politically sensitive or systemically important events.

Critical Takeaways

Prediction markets are expanding at a pace that significantly exceeds prior expectations.

Their proponents foresee them operating at a scale comparable to traditional stock exchanges within a relatively short time frame.

This trajectory suggests large implications for how markets aggregate information, price event risk, and complement or compete with existing financial infrastructure.

🔮 How Europe outsourced its future to fear

Exponentialview • November 19, 2025

Essay•Regulation•PrecautionaryPrinciple•Europe•AI

Hi, it’s Azeem, here with a special guest essay.

Europe once stood alongside the United States as a central force shaping global technology and industry. Its relative decline in the digital era is often pinned on regulation and bureaucracy.

But our guest, Brian Williamson – Director at Communications Chambers and a long-time observer of the intersection of technology, economics and policy – argues the deeper issue is a precautionary reflex that treats inaction as the safest choice, even as the costs of standing still rise sharply.

Over to Brian.

If you’re an EV member, jump into the comments and share your perspective.

It’s time to jettison the precautionary principle

“Progress, as was realized early on, inevitably entails risks and costs. But the alternative, then as now, is always worse.” — Joel Mokyr in Progress Isn’t Natural

Europe’s defining instinct today is precaution. On AI, climate, and biotech, the prevailing stance is ‘better safe than sorry’ – enshrined in EU law as the precautionary principle. In a century of rapid technological change, excess precaution can cause more harm than it prevents.

The 2025 Nobel laureates in Economic Sciences, Joel Mokyr, Philippe Aghion, and Peter Howitt, showed that sustained growth depends on societies that welcome technological change and bind science to production; Europe’s precautionary reflex pulls us the other way.

In today’s essay, I’ll trace the principle’s origins, its rise into EU law, the costs of its asymmetric application across energy and innovation, and the case for changing course.

How caution became doctrine

The precautionary principle originated in Germany’s 1970s environmental movement as Vorsorgeprinzip (literally, ‘foresight principle’). It reflected the belief that society should act to prevent environmental harm before scientific certainty existed. Errors are to be avoided altogether.

The German Greens later elevated Vorsorgeprinzip into a political creed, portraying nuclear energy as an intolerable, irreversible risk.

The principle did not remain confined to Germany. It was incorporated at the EU level through the environmental chapter of the 1992 Maastricht Treaty, albeit as a non‑binding provision. By 2000, the European Commission had issued its Communication on the Precautionary Principle, formalizing it as a general doctrine that guides EU risk regulation across environmental, food and health policy.

Caution can cut both ways

Caution may be justified when uncertainty is coupled with the risk of irreversible harm. But harm doesn’t only come from what’s new and uncertain; the status quo can be dangerous too.

In the late 1950s, thalidomide was marketed as a harmless sedative, widely prescribed to pregnant women for nausea and sleep. Early warnings from a few clinicians were dismissed, and the drug’s rapid adoption outpaced proper scrutiny. As a result of thalidomide use, thousands of babies were born with limb malformations and other severe defects across Europe, Canada, Australia, New Zealand and parts of Asia. This forced a reckoning with lax standards and fragmented oversight.

In the US, a single FDA reviewer’s insistence on more data kept the drug off the market – an act of caution that became a model for evidence‑led regulation. In this instance, demanding better evidence was justified.

Irreversible harm can also arise where innovations that have the potential to reduce risk are delayed or prohibited. Germany’s nuclear shutdown is the clearest example. Following the Chernobyl and Fukushima accidents — each involving different reactor designs and, in the latter case, a tsunami — an evidence‑based reassessment of risk would have been reasonable. Instead, these events were used to advance a political drive for nuclear phase‑out which was undertaken without a rigorous evaluation of trade‑offs.

Germany’s zero‑emission share of electricity generation was about 61% in 2024; one industry analysis found that, had nuclear remained, it could have approached 94%. The missing third was largely replaced by coal and gas, which raises CO₂ emissions and has been linked to higher air‑pollution mortality (about 17 life‑years lost per 100,000 people).

In Japan, all nuclear plants were initially shut after Fukushima. The country then overhauled its regulation and permitted restarts on a case-by-case basis, under new, stringent safety standards. Japan never codified a legalistic ‘precautionary principle’ and has been better able to adapt. Europe often seeks to eliminate uncertainty; Japan manages it.

A deeper problem emerges when caution is applied in a way that systematically favours the status quo, even when doing so delays innovations that could prevent harm.

A Swedish company, I‑Tech AB, developed a marine paint that prevents barnacle formation, which could improve ships’ fuel efficiency and cut emissions. Sixteen years after its initial application for approval, the paint has not been cleared for use in the EU, though it is widely used elsewhere. The EU’s biocides approval timelines are among the longest globally. Evaluations are carried out in isolation rather than comparatively, so new substances are not judged against the risks of existing alternatives. Inaction is rewarded over improvement.

This attitude of precaution has contributed to Europe’s economic lag. Tight ex‑ante rules, low risk tolerance and burdensome approvals are ill‑suited to an economy that must rapidly expand clean energy infrastructure and invest in frontier technologies where China and the United States are racing ahead. The 2024 Draghi Report on European competitiveness recognized that the EU’s regulatory culture is designed for “stability” rather than transformation:

“[W]e claim to favour innovation, but we continue to add regulatory burdens onto European companies, which are especially costly for SMEs and self-defeating for those in the digital sectors.”

Yet nothing about Europe’s present circumstances is stable. Energy systems are being remade, supply chains redrawn and the technological frontier is advancing at a pace unseen since the Industrial Revolution.

AI and the costs of stagnation

Like nuclear energy, AI may carry risks, but it also holds the potential to dramatically reduce others, and the greater harm may lie in not deploying AI applications rapidly and widely.

This summer, 38 million Indian farmers received AI‑powered rainfall forecasts predicting the onset of the monsoon up to 30 days in advance. For the first time, forecasts were tailored to local conditions and crop plans, helping farmers decide what, when, and how much to plant – and avoid damage and loss.

AI browsers need the open web. So why are they trying to kill it?

Fastcompany • November 19, 2025

AI•Browsers•OpenWeb•Regulation•DataPrivacy•Essay

For those of us who earn a living publishing content on the open internet, Amazon’s lawsuit against AI startup Perplexity can seem darkly amusing. Perplexity is among the many AI companies that have spent years extracting value from the internet in exchange for little. Its crawlers have synthesized endless amounts of content from publishers, even working around publishers’ attempts to block this behavior, all so Perplexity can summarize content without having to send traffic to the websites themselves.

Now Perplexity and its rivals are going a step further, with a new wave of AI browsers that can navigate pages automatically. Perplexity has Comet, OpenAI has ChatGPT Atlas, Opera has Neon, and others are on the way. The pitch is that AI “agents” will soon be able to trudge through the web on your behalf, booking your flights, buying your groceries, and shopping on sites like Amazon. Both Perplexity and OpenAI view these browsers as imperative in their goals to build AI “operating systems” that can manage your life.

Amazon, which has a lot to lose if people stop accessing its website directly, is suing to stop that from happening. It’s been trying to block Perplexity, but so far to no avail.

Therein lies the irony: These AI browsers promise a future where you’ll never have to visit a website again, yet that promise depends on having viable websites to crawl through in the first place. Amazon’s lawsuit is a sign that these two goals may be incompatible.

For companies like Perplexity and OpenAI, web browsers are suddenly important because they open the door to content and data that would otherwise be inaccessible. Consider Amazon. If you’re just using ChatGPT’s website, you might ask it to recommend a few Amazon items or summarize a product’s user reviews, but its answers wouldn’t include any personal data from Amazon’s site. By contrast, ChatGPT Atlas and Perplexity Comet can access Amazon exactly as it appears in your own browser window. That means they can crawl through your order history or weigh in on Amazon’s personalized product recommendations.

Perplexity says these “agentic” browsers make for a better shopping experience, which is why Amazon should embrace them—but Perplexity also stands to benefit in other ways. By understanding things like your order history, personalized recommendations, and all the questions you asked Perplexity’s AI to arrive at a particular product, the company can build a much richer user profile for things like targeted advertising.

“You’ve gone from behavior tracking to psychological modeling,” says Eamonn Maguire, who leads the machine learning team at Proton. “Where you have traditional browsers tracking what you do, AI browsers infer why you do it.”

This isn’t speculation. Perplexity CEO Aravind Srinivas said on the TBPN podcast earlier this year that its browser will enable “hyper-personalized” ads by understanding more about users’ personal lives. “What are the things you’re buying, which hotels are you going to, which restaurants are you going to, what are you spending time browsing, tells us so much more about you,” Srinivas said.

The hot new investment trend is the ‘Total Portfolio Approach’. Does it work?

FT • Nangle • November 16, 2025

Essay•Venture

Overview of the “Total Portfolio Approach”

The article introduces the “Total Portfolio Approach” (TPA) as a fashionable but still loosely defined trend in institutional asset allocation. It is presented as an attempt to rethink how large investors — particularly pension funds and sovereign wealth funds — construct their portfolios in a world of lower expected returns, more frequent macro shocks, and increasingly complex alternative assets. Rather than treating each asset class in isolation, the approach aspires to manage all holdings as a single, integrated pool, aligned tightly to an institution’s objectives and risk tolerance, and more responsive to changing market conditions.

Key Features and Ambitions of TPA

TPA seeks to move beyond traditional siloed asset allocation (equities vs bonds vs alternatives) toward a holistic risk-and-return view of the entire balance sheet.

It places strong emphasis on understanding the underlying drivers of risk (such as equity beta, interest-rate exposure, inflation sensitivity and illiquidity) across all asset classes, rather than just their labels.

Proponents argue it enables more dynamic allocation, faster rebalancing, and clearer trade-offs between, for example, liquidity needs, long-term return targets, and tolerance for drawdowns.

The approach is often associated with large, sophisticated asset owners that can build internal teams, analytics and governance structures to support it.
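The idea of looking through asset-class labels to the underlying risk drivers can be illustrated with a minimal sketch. Every weight and factor loading below is a hypothetical number chosen for illustration, not a figure from the article, and the weighted-sum aggregation is one simple way to express the idea rather than any particular fund's method.

```python
# Hypothetical portfolio weights by asset-class label (sum to 1.0).
WEIGHTS = {
    "public_equity": 0.40,
    "government_bonds": 0.30,
    "private_equity": 0.20,
    "real_estate": 0.10,
}

# Hypothetical loadings of each asset class on shared risk drivers.
LOADINGS = {
    "public_equity": {"equity_beta": 1.0, "interest_rate": 0.1, "illiquidity": 0.0},
    "government_bonds": {"equity_beta": 0.0, "interest_rate": 1.0, "illiquidity": 0.0},
    "private_equity": {"equity_beta": 1.2, "interest_rate": 0.2, "illiquidity": 1.0},
    "real_estate": {"equity_beta": 0.5, "interest_rate": 0.6, "illiquidity": 0.8},
}


def total_exposure(weights, loadings):
    """Sum weight * loading per risk driver across the whole portfolio."""
    totals = {}
    for asset, weight in weights.items():
        for factor, loading in loadings[asset].items():
            totals[factor] = totals.get(factor, 0.0) + weight * loading
    return totals


if __name__ == "__main__":
    for factor, exposure in total_exposure(WEIGHTS, LOADINGS).items():
        print(f"{factor}: {exposure:.2f}")
```

Under these made-up inputs, only 40% of the portfolio carries the "equity" label, yet the aggregate equity-beta exposure is noticeably higher because private equity and real estate also load on it. That gap between labels and drivers is the kind of hidden concentration a total-portfolio view is meant to surface.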

Why It Has Become Popular

The concept has gained traction in the wake of several market and macro developments:

A decade-plus of low interest rates and high asset prices has eroded expected returns from traditional 60/40 portfolios, pushing institutions toward alternatives and more complex strategies.

Episodes such as the global financial crisis, the Covid shock and inflation spikes have exposed weaknesses in static allocation frameworks and stress-tested liquidity assumptions.

Regulators and stakeholders increasingly scrutinize funding gaps, drawdown risks and liquidity profiles, incentivizing asset owners to demonstrate more integrated, risk-based decision-making.

Consulting firms and asset managers have promoted TPA as a modern, more “scientific” framework, contributing to its buzz and branding.

Conceptual Strengths but Practical Vagueness

While the article acknowledges the intuitive appeal of looking at the portfolio as a whole, it underscores how fuzzy the concept can be in practice:

There is no single, agreed definition of TPA; different institutions and consultants use the label for quite varied practices.

In many cases, what is marketed as TPA can be little more than enhanced risk reporting or factor-based decomposition of existing portfolios, without fundamentally changing governance or decision rights.

Implementing a true total portfolio framework requires substantial organizational change: clear objectives, risk-budgeting at the total fund level, and centralized decision-making that can override asset-class silos.

Without this deep integration, the label risks becoming a buzzword that overpromises and under-delivers.

Governance, Incentives and Human Factors

The article stresses that the main constraints on TPA are less about mathematics and more about institutions and people:

Asset owners often have long-established committee structures with separate equity, fixed income and alternatives teams, each defending their domain.

Incentive systems, benchmarks and performance evaluations tend to be asset-class specific, which can conflict with total-portfolio optimization.

Moving to TPA implies reconfiguring roles, consolidating authority, changing performance metrics and, in some cases, reducing autonomy for individual teams — steps that can be politically difficult.

The article suggests that any genuine adoption of TPA must be accompanied by explicit changes in governance and accountability, not just new risk dashboards.

Does It Actually Work?

Evidence for TPA’s superiority remains mixed and somewhat anecdotal:

Some high-profile funds that describe themselves as using a TPA-like framework point to more coherent risk management, better alignment with liabilities and improved liquidity planning.

However, performance differences are hard to disentangle from other factors such as strategic asset allocation decisions, internal skill and risk appetite.

The approach does not free investors from the need to make difficult calls on long-term returns, inflation and correlations; it merely frames those calls differently.

The article implies that, far from being a magic bullet, TPA is best viewed as a governance and process upgrade that may or may not lead to better returns, depending on how rigorously it is implemented.

Implications and Takeaways

The central message is that total portfolio thinking is directionally sound — especially for large, complex asset owners — but its effectiveness depends on depth of implementation rather than the label itself. If institutions are willing to overhaul governance, align incentives to total-fund outcomes and invest in robust risk analytics, TPA can help clarify trade-offs, prevent hidden risks and improve resilience. If not, it risks becoming another “buzzy but fuzzy” concept in asset management marketing.

Bubble?

Nvidia reports strong growth from bumper AI chip sales

FT • November 19, 2025

AI•Tech•Nvidia•Semiconductors•AI Chips•Bubble?

Overview

The article focuses on Nvidia’s latest earnings update, highlighting that the company is experiencing powerful growth driven by surging demand for its artificial intelligence (AI) chips. As a key supplier of graphics processing units (GPUs) used to train and run large AI models, Nvidia’s results are presented as a critical indicator of the health, momentum and sustainability of the broader AI boom. The piece frames the company’s financial performance not just as a corporate success story, but as a bellwether for enterprise and cloud investment in AI infrastructure worldwide.

Nvidia as an AI bellwether

Nvidia’s earnings are portrayed as a proxy for overall AI infrastructure spending, because its chips underpin data-centre buildouts at major hyperscalers, cloud providers and leading AI labs.

Strong sales growth in Nvidia’s data centre segment is interpreted as evidence that companies are accelerating AI deployments rather than pulling back.

The article underscores that investors and industry observers closely track Nvidia’s quarterly figures to gauge whether AI spending is broadening beyond early experiments into large-scale, revenue-generating production workloads.

Growth drivers and demand signals

The central demand driver is the “bumper” volume of AI chips ordered by cloud platforms, large enterprises and AI start-ups seeking the compute capacity to train frontier models and run inference at scale.

The article notes that AI workloads—from generative AI to recommendation engines and analytics—are increasingly concentrated on Nvidia’s GPU platforms, reinforcing its market dominance.

Strong earnings are linked to multi-year capital expenditure plans by major tech platforms that are building or expanding AI-optimized data centres, suggesting that the demand pipeline is not merely cyclical but part of a structural shift.

Implications for the AI ecosystem

Because Nvidia sits at the core of the AI hardware stack, robust results imply continued funding and confidence across the AI value chain, including model developers, enterprise software vendors and cloud infrastructure providers.

The article suggests that Nvidia’s performance can signal whether the AI sector is overheating or entering a more mature, durable growth phase. Strong current numbers hint that customers still see tangible value in AI applications despite concerns about hype and high compute costs.

At the same time, the reliance of so many AI players on a single key supplier raises strategic questions about concentration risk, pricing power and potential bottlenecks in chip availability.

Market and strategic considerations

The piece implies that Nvidia’s success strengthens its bargaining power with cloud and enterprise customers, potentially affecting pricing, allocation of supply and the pace at which competitors can gain share.

It highlights that investors will interpret these earnings as a signal for broader equity markets, particularly tech and semiconductor stocks that are exposed to AI spending cycles.

Nvidia’s trajectory may shape how aggressively rivals—including alternative chipmakers and custom in-house accelerators from big tech firms—invest to challenge its dominance in key AI workloads.

Broader economic and technological impact

Continued strong AI-chip sales point to AI becoming a foundational layer of digital infrastructure, similar to previous waves of cloud and mobile computing.

The article suggests that as organizations race to integrate AI into products and workflows, Nvidia’s chips and accompanying software ecosystem will remain crucial enablers, influencing the speed and scope of AI adoption across industries.

Nvidia’s earnings thus resonate beyond the semiconductor sector, offering a snapshot of how quickly AI is moving from experimentation to scaled deployment, and how much capital global companies are willing to commit to this transition.

Key takeaways

Nvidia’s robust earnings underscore intense and ongoing demand for AI compute, positioning the company as a central beneficiary of the AI boom.

Because Nvidia is deeply embedded in the AI infrastructure stack, its performance serves as a leading indicator for the overall health and direction of the AI sector.

The results reinforce the view that AI is in a major investment phase, with significant implications for technology markets, corporate strategy and the pace of AI adoption across the global economy.

Nvidia’s Strong Results Show AI Fears Are Premature

WSJ • November 20, 2025

AI•Tech•Nvidia•AIChips•MarketSentiment•Bubble?

Overall Argument and Market Context

The article argues that concerns about a slowdown in artificial-intelligence spending are premature in light of Nvidia’s latest earnings and guidance. Despite a sharp selloff that has dragged down its share price and overall valuation, the company reports that demand for its AI chips remains exceptionally strong and is likely to stay elevated through at least next year. This disconnect between investor fears and the company’s operational performance has left a business worth around $4.5 trillion looking comparatively “cheap” relative to its growth outlook and market position.

Evidence of Strong, Durable AI Demand

The company reports that orders for its flagship AI accelerators and related data-center products remain robust, with customers signaling multi-quarter, and in some cases multi-year, deployment plans.

Management indicates that hyperscale cloud providers, large enterprises, and emerging AI-native startups all continue to expand their infrastructure buildouts rather than pausing or cutting back.

Forward-looking commentary points to a strong demand pipeline “through next year,” suggesting that AI infrastructure spending is not just a short-lived boom but an ongoing investment cycle.

The article frames this as evidence that current market pessimism about a looming plateau in AI spending is not yet visible in the company’s actual order book or guidance.

Valuation Reset and “Cheapness” Argument

Following recent market volatility and a sector-wide selloff in high-growth technology names, the chip maker’s stock has declined enough to compress its valuation multiples.

On metrics such as price-to-earnings or price-to-sales (relative to its growth rate and margin profile), the company is depicted as inexpensive for a business of its scale, profitability, and strategic centrality to the AI ecosystem.

The article suggests that investors have priced in a meaningful deceleration in AI spending that is not supported by the company’s reported fundamentals.

This gap between perception and reality is presented as an opportunity: a $4.5 trillion business, deeply embedded in one of the most important technology shifts, trading at levels that imply far weaker prospects than its current demand trends suggest.

Implications for AI Cycle and Investor Sentiment

The strength of demand through next year undercuts the narrative that AI is a short-term hype cycle already heading toward saturation. Instead, AI infrastructure buildout is characterized as a multi-year, possibly decade-long transformation of data centers and enterprise computing.

If the company’s guidance proves accurate, it will likely force investors to reassess assumptions about the longevity and magnitude of the AI spending wave, with potential knock-on effects for other chip makers, cloud providers, and AI software firms.

Persistent demand at scale reinforces the company’s position as a central “arms supplier” to the AI revolution, making it difficult for rivals to materially erode its lead in the near term.

The article implies that sentiment may be more cyclical than fundamentals: fear-driven selling can temporarily obscure the structural nature of AI investment, but sustained earnings strength will eventually re-anchor valuations.

Key Takeaways and Outlook

The core message is that current anxiety about an imminent AI slowdown is not corroborated by this chip maker’s results or outlook.

The company’s indication that demand remains strong into next year supports the view that AI infrastructure spending is still in an early to middle phase, not at its peak.

Given its market dominance and scale, the company’s experience is a bellwether for the broader AI hardware ecosystem; its strong results suggest that AI adoption and monetization across industries are continuing to advance.

For investors, the combination of robust demand and a lower valuation after a selloff is framed as an unusual alignment of long-term opportunity with near-term price weakness.

Wall Street’s Worried About an AI Bubble. Nvidia Just Delivered an Answer

Bloomberg • November 20, 2025

AI•Tech•Nvidia•AI Bubble•Earnings•Bubble?

Market Reaction and AI Bubble Fears

Nvidia’s latest earnings report has temporarily eased concerns that the rapid growth in artificial intelligence represents a speculative bubble rather than a durable technological shift. Strong financial results, driven by intense demand for Nvidia’s AI-focused chips, suggest that revenue is still catching up to the hype rather than collapsing under it. The company’s performance signals that enterprise and cloud customers continue to invest heavily in AI infrastructure, validating expectations that AI will remain a central driver of tech spending in the near term. At the same time, the relief is cautious: investors view this report as a reprieve rather than definitive proof that AI valuations are fully justified over the long run.

Drivers of Demand and Growth Pressures

Nvidia’s leadership in graphics processing units (GPUs) places it at the core of the current AI boom, with its hardware powering data centers, training large models, and running inference at scale.

Global demand comes from hyperscale cloud providers, big tech platforms, and a growing mix of enterprises seeking to deploy generative AI and advanced analytics.

This demand has created enormous expectations for Nvidia’s ability to continuously scale production and innovate newer, more efficient chips to stay ahead of competitors.

The company’s ability to keep pace with worldwide orders has become both its biggest strength and most immediate challenge. Maintaining high growth requires securing manufacturing capacity, managing complex supply chains, and ensuring that software and ecosystem support remain compelling enough to keep customers locked in.

Strategic and Operational Challenges

Nvidia must navigate an environment where rivals and alternative architectures (including custom AI chips from large cloud providers) are rapidly emerging.

Geopolitical and regulatory factors, including export controls and national security concerns, can constrain where and how Nvidia sells its most advanced products.

The pressure to deliver next-generation chips on tight timelines raises risks around execution, costs, and potential product delays.

These factors mean that even with strong current earnings, Nvidia is “not out of the woods.” The company has to balance short-term performance with long-term investments in R&D, software frameworks, and partnerships to maintain its central role in AI infrastructure.

Implications for the Broader AI Narrative

Nvidia’s results are a key barometer for broader sentiment about AI. Solid earnings and sustained demand weaken the argument that the sector is purely speculative, instead indicating that real spending and real workloads are following the hype. However, the article suggests that questions remain about how evenly AI benefits will be distributed across the tech ecosystem, and whether all high-flying valuations tied to AI will ultimately be supported by fundamentals.

For investors and policymakers, Nvidia’s position highlights how concentrated the AI hardware layer currently is, underscoring systemic risks if one company’s supply, technology roadmap, or regulatory environment falters. For companies adopting AI, Nvidia’s trajectory serves as a signal that while the AI buildout is real and ongoing, it is also subject to constraints—capacity, competition, and policy—that could shape the pace and geography of AI deployment.

Key Takeaways

Nvidia’s earnings have temporarily soothed Wall Street’s anxiety about an imminent AI bubble burst.

Global demand for AI chips remains intense, validating near-term expectations for continued AI infrastructure buildout.

The company still faces substantial challenges: supply constraints, competition, and geopolitical and regulatory pressures.

Nvidia’s performance is a proxy for the broader health and durability of the AI investment cycle, and its ability to navigate these challenges will heavily influence whether today’s AI boom proves sustainable.

OpenAI’s House of Cards

Niall Ferguson • November 18, 2025

Essay•AI•AI Bubble•OpenAI•Financial History

Fans of Dr. Seuss will know by heart the key stanzas of Green Eggs and Ham.

Do you like

green eggs and ham?

I do not like them,

Sam-I-Am.

I do not like

green eggs and ham.

For those who have never had to read a bedtime story, allow me to explain. An irrepressible little creature, Sam-I-Am, spends the entirety of the book pitching green eggs and ham—on the face of it, an unappetizing dish—to a skeptical and increasingly irascible larger creature. With every page, the pitch grows more elaborate. Would you like them on a boat? With a goat? In the rain? On a train? Surely, there must be some context in which green eggs would be appealing fare. By the time Sam prevails, his hapless victim inhabits a scene of chaos.

When you come to think of it, there is often someone called Sam trying to sell you something you don’t initially want. In the 1920s, as I learned from Andrew Ross Sorkin’s 1929: Inside the Greatest Crash in Wall Street History—and How It Shattered a Nation, it was Sam Crowther’s article, “Everybody Ought to Be Rich”—exhorting housewives to buy stocks with margin credit. A few years ago, it was Sam Bankman-Fried with his crypto exchange, FTX. At the height of his fame, Bankman-Fried declared, “I want FTX to be a place where you can do anything you want with your next dollar. You can buy bitcoin. . . . You can buy a banana.” And you could also have bought green eggs and ham—until FTX blew up and Sam landed in prison.

A lot of the applications of generative artificial intelligence remind me of green eggs and ham. Take OpenAI’s Sora 2.0. With a few prompts, you can generate soft-porn videos of scantily clad girl manga elves. This is also one of the ways Elon Musk tries to sell xAI’s Grok. But why would I want to watch such videos, any more than I want to eat green eggs and ham?

Financial history can help us here. If you’re unsure if there’s an AI bubble, refer to the historian Charles Kindleberger’s five-stage model:

1. Displacement: Some change in economic circumstances creates new and profitable opportunities for certain companies.

2. Euphoria or overtrading: A feedback process sets in whereby rising expected profits lead to rapid growth in share prices.

3. Mania or bubble: The prospect of easy capital gains attracts first-time investors and swindlers eager to defraud them.

4. Distress: The insiders discern that expected profits cannot possibly justify the now-exorbitant price of the shares and begin to take profits by selling.

5. Revulsion or discredit: As share prices fall, the outsiders stampede for the exits, causing the bubble to burst altogether.

We are currently at stage 3.

AI

Google releases Gemini 3.0 model, closes gap on ChatGPT

YouTube • CNBC Television • November 18, 2025

AI•Tech•Gemini

Overview

The segment discusses Google’s release of its Gemini 3.0 AI model and how it alters the competitive dynamics with OpenAI’s ChatGPT. The focus is on whether Gemini 3.0 meaningfully closes the performance and product gap, what new technical capabilities it brings, and how it fits into Google’s broader AI and business strategy. Commentary emphasizes user scale, multimodal and agentic features, and the importance of integration across Google’s ecosystem in challenging ChatGPT’s lead.

Key Features and Technical Advances of Gemini 3.0

Gemini 3.0 is positioned as Google’s next major flagship model upgrade over the Gemini 2.x series (including 2.5), aimed squarely at competing with GPT‑5–class systems.

The model focuses heavily on:

More capable multimodal reasoning across text, code, images, audio, and video.

Larger context windows for handling long documents and complex multi‑step tasks.

Improved “agentic” behavior: tool use, function calling, and orchestrating workflows rather than just answering prompts.

Commentators note that Google has iterated quickly from Gemini 1.5 to 2.0, 2.5, and now 3.0, suggesting a maturing release cadence and more stable platform for developers.

While not framed as a “disruptive” breakthrough, Gemini 3.0 is described as a substantial quality and usability lift that makes the experience feel closer to—if not on par with—top OpenAI models in many everyday tasks.

User Scale, Ecosystem, and Business Context

Google’s Gemini ecosystem reportedly serves hundreds of millions of users, with management highlighting momentum toward ChatGPT’s scale.

Gemini is deeply embedded across:

Search and Chrome

Android phones and the Gemini app

Workspace tools such as Gmail, Docs, Sheets, and Meet

Cloud and developer products via the Gemini API and AI Studio

This integration allows Google to:

Offer a powerful free tier to a massive installed base.

Monetize indirectly through search and advertising, as well as cloud usage, rather than relying solely on paid subscriptions.

The segment underscores that this business model gives Google financial and distribution advantages, enabling sustained AI investment without the same cash‑burn concerns facing some rivals.

Comparison with ChatGPT and Competitive Dynamics

Analysts frame Gemini 3.0 as “closing the gap” with ChatGPT rather than clearly surpassing it across the board.

Areas where Gemini 3.0 is seen as particularly competitive or superior include:

Tasks that depend on up‑to‑date web search or deep integration with Google services.

Workflow and productivity use cases inside Workspace, where it can automate email replies, summarization, and document drafting.

ChatGPT still appears ahead in:

Brand power and developer mindshare.

Certain reasoning and coding benchmarks, depending on the specific OpenAI model used for comparison.

The narrative suggests a shift from a one‑horse race to a more balanced duopoly, with Gemini now considered a credible first‑tier option for many enterprise and consumer use cases.

Implications and Outlook

For investors, Gemini 3.0 is framed as a strategic response that helps protect Google’s core search and advertising franchise from disruption by independent AI assistants.

For developers and enterprises, the launch signals:

A more unified, long‑term model family they can build against.

Stronger multimodal, long‑context, and agentic capabilities suited for complex applications.

For consumers, competition between Gemini 3.0 and ChatGPT is expected to drive:

Faster product improvements.

More generous free tiers and bundled capabilities, as providers vie for attention and usage.

The segment concludes that while OpenAI remains a powerful frontrunner, Gemini 3.0 marks a turning point where Google is no longer seen as a laggard, but as a serious co‑leader in large‑scale generative AI.

As consumers ditch Google for ChatGPT, Peec AI raises $21M to help brands adapt

TechCrunch • November 17, 2025

AI•Funding•Peec AI•Generative Engine Optimization•AIsearch

With consumers increasingly asking questions of ChatGPT — not Google — product discovery is changing. And the promise to give brands visibility and control over this fast-growing search channel has made Peec AI one of Europe’s hottest startups.

Just four months after its Seed round led by 20VC, the Berlin-based startup has raised a $21 million Series A led by European VC firm Singular. CEO Marius Meiners declined to disclose the valuation, but said it had tripled and was now above $100 million.

This comes after Peec AI grew its annual recurring revenue to more than $4 million in only ten months since its launch, attracting 1,300 companies and agencies to its platform.

These customers use Peec AI to monitor how their brands appear in AI-powered searches. But beyond analytics on visibility and ranking, Peec AI also tracks sentiment — and which sources shape these answers.

These insights are what make Generative Engine Optimization (GEO) possible — a way for marketing teams to optimize their brand’s presence in AI search results, similar to how SEO works for traditional search engines. With this promise, the startup says it is now adding some 300 customers a month, and its new funding will accelerate this growth while also supporting expansion plans.

Thanks to its new round, which was also backed by Antler, Combination VC, identity.vc, and S20, the startup plans to hire some 40 people in the next six months. These roles are mostly based in Berlin, where Meiners met his two cofounders in Antler’s Winter 2024 cohort: Tobias Siwonia is now Peec AI’s CTO, and Daniel Drabo is its CRO.

Expanding fast and being visible may be key to winning in an emerging category that could soon become crowded, with competitors already including New York-based Profound and Austrian startup OtterlyAI.

To help attract more talent, the 20-person startup is currently advertising itself on large outdoor ads throughout Germany’s capital city. But beyond its Berlin plans, Meiners told TechCrunch that Peec AI also plans to open a sales-focused office in New York City in the second quarter of next year.

As more GEO-focused tools become available, and SEO dashboards add AI tracking capabilities, Peec AI hopes to differentiate itself by offering marketing teams a dashboard that expands in scope while remaining simple to use, despite the fast-changing nature of AI searches.

Instead of revolving around keywords like SEO tools, Peec AI’s dashboard centers on prompts for which brands would like to show up well in search results. Customers can track up to 25 prompts for €75 per month ($87), increasing to 100 prompts for €169 per month ($196). Both plans offer free trials, unlike its enterprise offering, which starts from €424 per month ($493).
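The per-prompt economics of the two self-serve tiers follow directly from the quoted prices. The short sketch below works through that arithmetic; the plan labels and helper name are made up for illustration, and only the euro prices and prompt counts come from the article.

```python
# (price in EUR per month, prompts tracked), as quoted in the article.
# The dictionary keys are illustrative labels, not Peec AI's plan names.
PLANS = {
    "25-prompt plan": (75, 25),
    "100-prompt plan": (169, 100),
}


def eur_per_prompt(price_eur, prompts):
    """Monthly price divided by the number of prompts tracked."""
    return price_eur / prompts


if __name__ == "__main__":
    for label, (price_eur, prompts) in PLANS.items():
        cost = eur_per_prompt(price_eur, prompts)
        print(f"{label}: EUR {cost:.2f} per prompt per month")
```

On these figures the smaller tier works out to €3.00 per tracked prompt and the larger to €1.69, so the unit price falls by a little under half when a customer quadruples the prompt count.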

TurboTax gets an AI upgrade as Intuit inks major deal with OpenAI

Fast Company • Taylor Hatmaker • November 18, 2025

AI•Tech•Intuit•OpenAI•ChatGPT

AI can do your taxes now—sort of.

The tax software giant Intuit just struck a new deal with OpenAI that will weave AI deeply into its portfolio of financial apps, including the ones many Americans use to file their taxes.

In the multiyear deal, Intuit will pay ChatGPT maker OpenAI more than $100 million annually to implement its artificial intelligence models across products like TurboTax, personal finance manager Credit Karma, email marketing platform Mailchimp, and the accounting tool QuickBooks. Through the partnership, Intuit’s products will also become accessible directly through ChatGPT—the latest lucrative business integration for OpenAI.

“We are taking a massive step forward to fuel financial success for consumers and businesses, unlocking growth for both companies,” Intuit CEO Sasan Goodarzi said. “Our partnership combines the power of Intuit’s proprietary financial data, credit models, and AI platform capabilities with OpenAI’s scale and frontier models to give users the financial advantage they need to prosper.”

Intuit owns a big swath of the financial software market, and all of those apps will be popping up in ChatGPT soon to steer users toward personalized recommendations for credit cards and loans and to answer their tax and personal finance questions.

Intuit has been gravitating toward AI for a while now. Late last year, the company introduced AI-powered features into QuickBooks, inviting its users to automate rote, time-consuming tasks like sending invoices. Intuit insisted that it was being intentional about its implementation of AI, particularly given the rush for every business to boast about its AI capabilities.

“The idea is not to just have random sprinkles of AI across the product,” Dave Talach, Intuit senior vice president of the QuickBooks platform, told Fast Company at the time. “We’ve been thoughtful about approaching AI, not just for the sake of AI, but we want it to show up in a cohesive way in the product that is coherent to the customer.”

In June, Intuit released a set of AI agents for QuickBooks designed to learn a company’s business and operations and take over tasks to speed up bookkeeping and accounting. At the time, Intuit CEO Goodarzi emphasized that the company moved deliberately in building out its AI because missteps and inaccuracies carry high stakes in the financial tools its customers rely on. “If it screws up, it’s a big problem,” he told Fast Company.

CHATGPT IS A PLATFORM NOW

OpenAI’s new partnership with Intuit is just the latest third-party integration for ChatGPT. In late September, OpenAI took what it called “first steps toward agentic commerce” with integrations for Shopify and Etsy, and went on to ink a deal with PayPal last month.

OpenAI also recently introduced a developer kit that would open its hit chatbot platform to third-party apps—a major shift for the chatbot that stands to remake the way that its 700 million-plus weekly users find and do things online. ChatGPT’s first wave of apps included Zillow, Spotify, Canva, and Expedia, with apps from DoorDash, Peloton, Uber, and Target in the works.

OpenAI’s recent moves point to the company’s vision of ChatGPT as an all-encompassing hub of utility that gives internet users little reason to go elsewhere. Those decisions coincide with OpenAI’s seismic shift away from its complex nonprofit roots into a more traditional for-profit company, although it technically will remain under the wing of a nonprofit.

Microsoft and Nvidia to invest up to $15bn in OpenAI rival Anthropic

FT • November 18, 2025

AI•Funding•Anthropic•Microsoft•Nvidia

Overview

Anthropic, a major artificial intelligence start-up, has entered into a far-reaching strategic alliance with Microsoft and Nvidia that could see total investment reach up to $15bn. As part of the deal structure, the AI company has committed to purchase $30bn worth of computing capacity from Microsoft, which will be delivered via data centres heavily powered by Nvidia’s advanced chips. The arrangement underscores how access to cutting-edge compute – especially Nvidia GPUs delivered through hyperscale cloud platforms – has become the central economic bottleneck and competitive moat in the generative AI race.

Structure of the Investment

The headline figure of “up to $15bn” reflects a mix of direct equity investment, cloud credits, and long-term infrastructure commitments rather than a single cash infusion.

Microsoft’s role is twofold:

Capital provider and strategic partner in AI model development and commercialization.

Primary cloud and compute supplier, locking in a huge, multi‑year customer for its Azure platform.

Nvidia’s role is primarily on the infrastructure and hardware side, supplying the GPUs and systems that power Microsoft’s data centres, which in turn provide the compute capacity contracted by the AI start-up.

This triangular structure tightly couples three layers of the AI stack: foundational model builder, cloud platform, and semiconductor provider, concentrating power among a small group of already dominant players.

Compute Commitments and Technical Implications

The AI start-up’s commitment to buy $30bn in computing capacity signals confidence that demand for its models and AI services will justify enormous infrastructure usage over time.

The compute will run in Microsoft data centres that are specifically optimized around Nvidia GPUs and networking, reflecting Nvidia’s continued dominance in training and serving large models.

Such a long-dated compute contract effectively functions like a capital-expenditure proxy for the start-up: instead of building its own data centres, it rents hyperscale capacity at massive scale, shifting costs to an operational model but committing to very large volumes.

This confirms a broader industry trend: leading AI labs are becoming anchor tenants of a handful of hyperscale clouds, rather than building standalone infrastructure from scratch.

Strategic and Competitive Impact

For Microsoft:

Deepens its portfolio of leading AI partners, complementing its existing high-profile alliances.

Locks in billions of dollars in future cloud revenue and strengthens Azure’s position as the preferred platform for frontier AI workloads.

For Nvidia:

Reinforces demand visibility for its most advanced chips, justifying ongoing heavy investment in GPU manufacturing and networking technologies.

Solidifies its status as the default choice for large AI compute, despite emerging competition from custom accelerators and rival chipmakers.

For the AI start-up:

Secures privileged access to scarce, top-tier compute resources, which are a prerequisite for training and deploying cutting-edge models.

Gains strategic backing from two of the most important companies in the AI value chain, which can accelerate productization, go‑to‑market efforts, and enterprise adoption.

At the ecosystem level, the deal intensifies concerns about concentration of power in AI, as both capital and compute continue to cluster around a small set of technology giants and a single dominant chip vendor.

Broader Market and Policy Implications

Such large, exclusive partnerships may attract regulatory attention, particularly around competition in cloud computing, semiconductors, and AI services.

Smaller AI start-ups may find it increasingly difficult to secure sufficient compute at competitive prices, potentially limiting innovation to those with access to hyperscale cloud partnerships or vast capital.

The size of the compute commitment ($30bn) illustrates how AI development has shifted from a software‑centric activity to one dominated by infrastructure economics, energy usage, and semiconductor supply chains.

Overall, this alliance highlights that in frontier AI, the core scarce asset is not just talent or algorithms but industrial-scale compute, tightly bound to a few cloud and chip incumbents whose strategic partnerships will shape the trajectory of the entire sector.

Gemini 3 may be the moment Google pulls away in the AI arms race

Fast Company • Mark Sullivan • November 19, 2025

AI•Tech•Gemini

Google announced its widely anticipated Gemini 3 model Tuesday. By many key metrics, it appears to be more capable than the other big generative AI models on the market.

In a show of confidence in the performance (and safety) of the new model, Google is making one variant of Gemini—Gemini 3 Pro—available to everyone via the Gemini app starting now. It’s also making the same model a part of its core search service for subscribers.

The new model topped the much-cited LMArena leaderboard, a crowdsourced ranking of top models based on user preferences between head-to-head responses to identical prompts. On the super-difficult Humanity’s Last Exam benchmark, which measures reasoning and knowledge, Gemini 3 Pro scored 37.4% compared to GPT-5 Pro’s 31.6%. Gemini 3 also topped a range of other benchmarks measuring everything from reasoning to academic knowledge to math to tool use and agent functions.

Gemini has been a multimodal model from the start, meaning that it can understand and reason about not just language, but images, audio, video, and code—all at the same time. This capability has been steadily improving since the first Gemini, and Gemini 3 reached state-of-the-art performance on the MMMU-Pro benchmark, which measures how well a model handles college-level and professional-level reasoning across text and images. It also topped the Video-MMMU benchmark, which measures the ability to reason over details of video footage. For example, the Gemini model might ingest a number of YouTube videos, then create a set of flashcards based on what it learned.

Gemini also scored high on its ability to create computer code. That’s why it was a good time for the company to launch a new Cursor-like coding agent called Antigravity. Software development has proven to be among the first business functions in which generative AI has had a measurably positive impact.

Benchmarks are telling, but as the response to OpenAI’s GPT-5.1 showed, the “feel” or “personality” of a model matters to users (many users thought GPT-5 was a dramatic personality downgrade from GPT-4o). Google DeepMind CEO Demis Hassabis seemed to acknowledge this in a tweet Tuesday: “[B]eyond the benchmarks it’s been by far my favorite model to use for its style and depth, and what it can do to help with everyday tasks.” Of course, users will have their own say about Gemini 3’s communication style and how well it adapts to their preferences and work habits.

With the release of Google’s third-generation generative AI model, it’s a good time to look at the wider context of the race to build the dominant AI models of the 21st century. The contest, remember, is only a few years old. So far, OpenAI’s models have spent the most time atop the benchmark rankings and, on the strength of ChatGPT, have garnered the most attention of any player in the emerging AI industry.

From the start, Google has enjoyed some distinct advantages. It’s been investing in AI talent and research for decades, starting long before OpenAI became a company in 2015. It began developing machine learning techniques for understanding search intent, defining page rank, and placing ads as far back as 2001. It bought London-based AI research lab DeepMind back in 2014, and DeepMind has been responsible for some of Google’s biggest AI accomplishments (AlphaGo, AlphaFold, Gemini models).

Gemini 3.0 and Google’s custom AI chip edge

YouTube • CNBC Television • November 19, 2025

AI•Tech•Gemini

Overview

The content centers on a YouTube video discussing Gemini 3.0 and Google’s advantage from developing custom AI chips. The central theme is how Google’s vertically integrated approach—owning both the large language model (Gemini) and the underlying hardware—could provide efficiency, performance, and cost benefits in the rapidly intensifying AI race. The discussion highlights the strategic importance of custom silicon for AI workloads and frames Gemini 3.0 as a key part of Google’s broader product and infrastructure ecosystem rather than a standalone model release.

Gemini 3.0 in Google’s AI Strategy

Gemini 3.0 is presented as the latest generation of Google’s flagship AI model family, aimed at competing with leading frontier models on reasoning, multimodality, and coding.

The video emphasizes how Google is pushing Gemini deeper into its consumer and enterprise products—search, workspace tools, cloud offerings, and Android—making Gemini a foundational layer across the company.

A key thread is that model capability alone is no longer differentiating; integration into products and the ability to run at scale and low latency are increasingly important.

Custom AI Chips and Vertical Integration

The segment focuses on Google’s in‑house AI accelerators (such as TPUs and other custom chips) as a major competitive lever.

By designing chips specifically optimized for Gemini and related workloads, Google can:

Improve inference efficiency (more tokens per second per watt).

Reduce serving costs for high‑traffic AI features.

Fine‑tune the hardware–software stack for latency‑sensitive applications like search and assistant experiences.

The video contrasts this with companies that must rely primarily on third‑party GPUs, arguing that owning the chip stack can smooth supply constraints and give more predictable capacity planning.

Competitive Positioning in the AI Race

The discussion situates Google among AI leaders that combine cloud platforms, advanced models, and specialized hardware.

Custom chips are framed as a response to exploding demand for AI compute, giving Google more control over unit economics as usage scales.

The host notes that AI infrastructure has become a strategic battleground: the ability to secure, design, and operate compute at massive scale can decide which players can profitably deploy increasingly large models.

Gemini 3.0 is portrayed as both a technological and a signaling milestone—demonstrating that Google is still moving quickly in foundational models while leveraging its long‑running hardware investments.

Implications for Cloud, Developers, and Investors

For cloud customers and developers, Google’s chip edge could translate into:

More competitive AI pricing or higher‑performance tiers for model serving.

Access to models that are tightly optimized for Google’s infrastructure, possibly improving reliability and throughput for enterprise applications.

For Google’s broader business, custom silicon plus Gemini 3.0 strengthens:

Differentiation of Google Cloud versus other hyperscalers.

The defensibility of Google’s search and productivity tools as they become more AI‑centric.

The video implies that, for investors, the key questions are whether this integration will:

Sustain margins in the face of massive AI capex.

Help Google keep pace with or surpass rival ecosystems that also pair models with specialized hardware.

Key Takeaways

Gemini 3.0 is depicted as a major step in Google’s AI roadmap, but its significance is amplified by Google’s ownership of the full stack—from data centers and custom chips to end‑user products.