Contents

Essay

Venture

AI

US vs China

Media

Interview of the Week

Startup of the Week

Post of the Week

Editorial

The Manufactured Moral Panic Against AI

The single most important question about Artificial Intelligence today is not whether a future superintelligence will extinguish us. The real issue is whether our policy decisions are being dictated by an honest assessment of progress or by a well-funded panic campaign designed to solidify the power of the few.

The latest findings in this week’s essay and media sections, particularly the deep dive on the AI Panic Campaign, strongly suggest the latter. We are witnessing a sophisticated effort to bypass open deliberation and replace it with policy by fear, all orchestrated by groups closely aligned with the Effective Altruism (EA) movement.

The Playbook of Fear

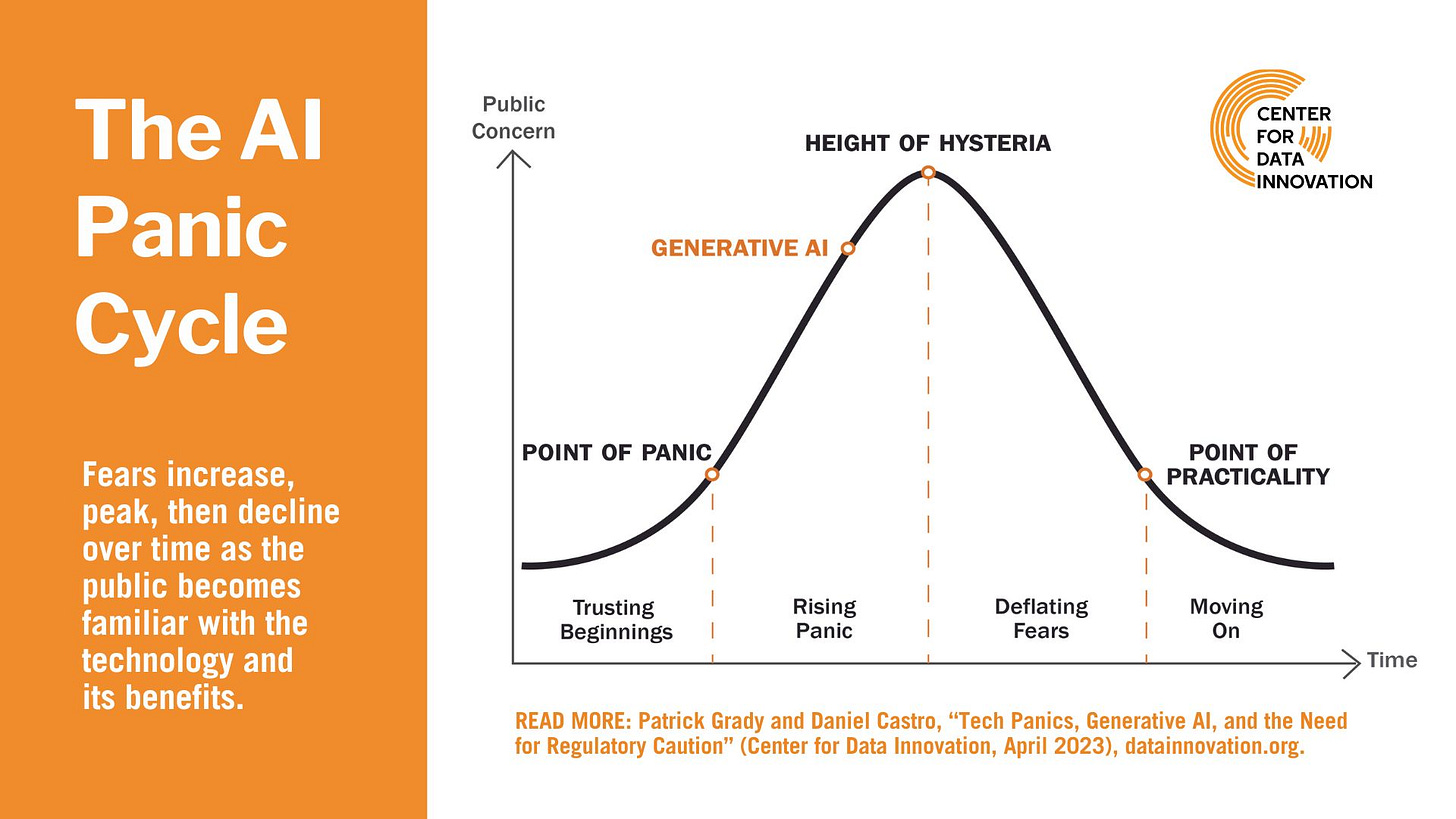

The research reveals that the fear of “AI doom” is not a spontaneous public reaction but a carefully cultivated product. Organizations like the Campaign for AI Safety and the Existential Risk Observatory have adopted the tools of commercial marketing and political micro-targeting to achieve their goal.

Their work involves:

Systematic Message Testing: They conduct “profiling, surveys, and ‘Message Testing’ trials” to determine which subgroups are “most responsive to x-risk messages,” examining frames like “Dangerous AI” or “Superintelligence” across demographics like “political party affiliation, age group, gender, [and] educational level.” This is classic advertising, but the product is prohibition, not shampoo.

The Goal is Prohibition: This manufactured panic is immediately weaponized into lobbying. The ultimate policy submissions these groups make to governments worldwide—including Canada, the U.S. OSTP, and the U.K.—are breathtaking in their scope. They demand nothing less than:

An indefinite and worldwide moratorium on scaling AI models.

Criminalizing the development of any form of Artificial General Intelligence (AGI) or Artificial Superintelligence (ASI).

Prohibition of training ML models greater than 10^23 FLOP in compute.

As the findings point out, this push for “sweeping regulatory interventions” is intended to force policymakers to adopt stringent rules by framing AI in the most extreme terms. The irony is palpable: the people warning us about a future, theoretical threat are actively undermining open dialogue today to “benefit top AI companies” with ties to their own network. Credit to Nirit Weiss-Blatt of AI Panic for the research and findings.

The Real Future of Work

The panic campaign is successful because it focuses the media’s attention on the “Frankenstein Monster or Pandora’s Box” frame, overshadowing the actual, measurable benefits of the technology.

If we look past the doomsday headlines—which the article notes are successful because “‘AI worst case scenarios’ has had 5 x as many readers as ‘AI best case scenarios’”—we see how AI is truly advancing society:

Innovation at the Frontier: The argument in “AI is not the Everything Box” counters the idea that only a few foundational labs will win. Instead, “Ever expanding AI capabilities will approach a marginal cost of zero” and will “accelerate the speed of human innovation” by allowing people to step “ever so slightly beyond” the limit of current knowledge. The real value is created by entrepreneurs using AI at the edge of real-world problems.

Empowering the Worker: The piece “The one job AI won’t replace is the spreadsheet guru” offers a far more sober view of the future of work. AI “democratizes” advanced spreadsheet power, but it elevates the role of the human expert, shifting their focus “from pure syntax and mechanics” toward designing data flows, validation, and asking better questions. The technology becomes a “Helping Hand”: a tool that frees humans for higher-value, more interesting tasks.

A New Wave of Efficiency: Companies are already seeing results. For instance, Gamma, the AI presentation company, scaled to tens of millions of users and $100 million in recurring revenue with a mere 50 employees, leveraging AI to handle “~30,000 inbound conversations per month.” This is not job destruction; it is operational leverage that enables hyper-efficient, capital-light growth.

In short, the fear is being purchased to enforce control. The benefit is being ignored to preserve the competitive advantage of the few.

It seems smart, to me at least, to reject the “God-like AI” narrative that Ilya Sutskever and others champion to justify their unique structural control. Instead, we must focus policy on governing the deployment of AI to ensure accountability, auditability, and safety, not on enacting blanket prohibitions that stifle the innovation desperately needed for a more productive economy. Even more importantly, we must figure out how to distribute the enormous wealth likely to be created in a way that builds a modern society where the working day is reduced (perhaps to zero) while life improves for all.

The panic, it turns out, is being funded. It is time to start governing for progress.

Essay

What Ilya Sutskever Really Wants

Aipanic • Nirit Weiss-Blatt • November 7, 2025

AI•Essay•ArtificialGeneralIntelligence•AIRegulation•FutureOfWork

In WIRED’s cover story, “What OpenAI Really Wants,” Steven Levy described how the company’s ultimate goal is to “Change everything. Yes. Everything,” and mentioned this:

“Somewhere in the restructuring documents is a clause to the effect that, if the company does manage to create AGI [Artificial General Intelligence], all financial arrangements will be reconsidered. After all, it will be a new world from that point on. Humanity will have an alien partner that can do much of what we do, only better. So previous arrangements might effectively be kaput.”

“The company’s financial documents even stipulate a kind of exit contingency for when AI wipes away our whole economic system.”

It reminded me of what Ilya Sutskever said in May 2023 (at a public speaking event in Palo Alto, California). His entire talk was about how AGI “will turn every single aspect of life and of society upside down.”

When asked about specific professions (book writers, doctors, judges, developers, therapists) and whether they will be extinct in one year, five years, a decade, or never, Ilya Sutskever answered (after the developers’ example):

“It will take, I think, quite some time for this job to really, like, disappear. But the other thing to note is that as the AI progresses, each one of these jobs will change.

They’ll be changing those jobs until the day will come when, indeed, they will all disappear.

My guess would be that for jobs like this to actually vanish, to be fully automated, I think it’s all going to be roughly at the same time technologically. And yeah, like, think about how monumental that is in terms of impact. Dramatic.”

Then, Ilya Sutskever explained why “OpenAI is actually NOT a for-profit company. It is a capped-profit company”:

“If you believe that AI will literally automate all jobs, literally, then it makes sense for a company that builds such technology to … not be an absolute profit maximizer.

It’s relevant precisely because these things will happen at some point.”

When regulation came up, Ilya Sutskever continued:

“If you believe that AI is going to, at minimum, unemploy everyone, that’s like, holy moly, right? Assuming we take it for real, we say for real, as opposed to it’s just like idle speculation.

The conclusion may very well be that at some point, yeah, there should be a government-mandated slowdown with some kind of international thing.”

When asked about AI’s role in “shaping democracy,” Ilya Sutskever answered:

“If we put on our science fiction hat, and imagine that we solved all the, you know, the hard challenges with AI and we did this, whatever, we addressed the concerns, and now we say, ‘okay, what’s the best form of government?’ Maybe it’s some kind of a democratic government where the AI is, like, talks to everyone and gets everyone’s opinion, and figures out how to do it in like a much more high bandwidth way.”

On another occasion, Ilya Sutskever also played with the idea: “Do we want our AI to have insecurity?”

He has been exploring the possibility of AI systems having “feelings” for a while now. On Twitter, he wrote that “it may be that today’s large neural networks are slightly conscious.” He later asked his followers, “What is the better overarching goal”: “deeply obedient ASI” [Artificial Superintelligence] or an ASI “that truly deeply loves humanity.” In a TIME magazine interview, in September 2023, he said:

“The upshot is, eventually, AI systems will become very, very, very capable and powerful. We will not be able to understand them. They’ll be much smarter than us. By that time, it is absolutely critical that the imprinting is very strong, so they feel toward us the way we feel toward our babies.”

Back in May 2023, before Ilya Sutskever started to speak at the event, I sat next to him and told him, “Ilya, I listened to all of your podcast interviews. And unlike Sam Altman, who spread the AI panic all over the place, you sound much more calm, rational, and nuanced. I think you do a really good service to your work, to what you develop, to OpenAI.” He blushed a bit, and said, “Oh, thank you. I appreciate the compliment.”

An hour and a half later, when we finished this talk, I looked at my friend and told her, “I’m taking back every single word that I said to Ilya.”

He freaked the hell out of people there. And we’re talking about AI professionals who work in the biggest AI labs in the Bay Area. They were leaving the room, saying, “Holy shit.” ….

The AI Panic Campaign - part 1

Aipanic • Nirit Weiss-Blatt • November 7, 2025

Media•Advertising•AISafety•Essay

The article argues that a coordinated “x-risk” messaging campaign, led by two Effective Altruism–aligned groups—Campaign for AI Safety and the Existential Risk Observatory—systematically tests and tunes narratives about AI-driven human extinction to shift public opinion and build support for strict interventions, including AI moratoria and expanded surveillance of AI R&D. Drawing on hundreds of public documents and survey results, it contends these organizations borrow commercial marketing, political microtargeting, and behavioral research techniques to identify which frames move different audiences and then feed the winning messages back into media outreach, advocacy, and lobbying.

“Never underestimate the power of truckloads of media coverage, whether to elevate a businessman into the White House or to push a fringe idea into the mainstream. It’s not going to come naturally, though - we must keep working at it.”

The “x-risk campaign” exposé is based on hundreds of publicly available documents. The first two articles that are being released today reveal the following:

AI Safety organizations constantly examine how to target “human extinction from AI” and “AI moratorium” messages based on political party affiliation, age group, gender, educational level, field of work, and residency.

Their actions include:

Conducting profiling, surveys, and “Message Testing” trials to determine which type of people are most responsive to x-risk messages.

Optimizing impact on specific subgroups within the population of interest by mapping distinct frames and language variations.

Involving the larger x-risk community in the detailed findings.

Implementing “best practices” in marketing and lobbying.

This 2-part series focuses on two organizations: “Campaign for AI Safety” and “Existential Risk Observatory.”

(However, these are not the only ones whose documents have been thoroughly reviewed).

In the first post, I present their various x-risk “Message Testing”:

“Test of narratives for AGI moratorium support” and “AI doom prevention message testing.”

“Alternative phrasing of ‘God-like AI’”

Studies on the best ways to influence public opinion

The second post is dedicated to their main target audience - policymakers.

After inflating the perceived danger of AI, the AI Safety organizations call for sweeping regulatory interventions, including widespread surveillance of AI research & development.

Part 2 describes the lobbying efforts and what these organizations are trying to achieve:

Policy submissions to governments worldwide

Targeting the UK AI Safety Summit

Previous billboard/radio ads and protest signs.

There are a few concluding remarks at the end, including the broader AI PANIC ecosystem, non-scientific terms, and the ultimate question: Will this panic campaign succeed?

Background

Effective Altruism (EA), AI existential risk (x-risk), and AI Safety

According to the “Effective Altruism” (EA) movement, the most pressing problem in the world is preventing an apocalypse where an Artificial General Intelligence (AGI) exterminates humanity. With billionaires’ backing (like Elon Musk, Vitalik Buterin, Jaan Tallinn, Peter Thiel, Dustin Moskovitz, and Sam Bankman-Fried), this movement founded and funded numerous institutes, research groups, and companies under the brand of “AI Safety.”

The “AI Safety” organizations that warn of long-term existential risks (such as the Machine Intelligence Research Institute, the Future of Life Institute, and the Center for AI Safety), as well as leading AI labs (such as OpenAI and Anthropic) obtain their funding by convincing people that AI existential risk is a real and present danger.

Background materials can be found in “How Elite Schools Like Stanford Became Fixated on the AI Apocalypse,” “How Silicon Valley doomers are shaping Rishi Sunak’s AI plans,” and “How a Billionaire-backed Network of AI Advisers Took Over Washington.”

This movement, which raises the alarm about rogue AI that could wipe out humanity, is “distracting researchers and politicians from other more pressing matters in AI ethics and safety.”

Those Effective Altruism-backed organizations are “pushing policymakers to put AI apocalypse at the top of the agenda — potentially boxing out other worries and benefiting top AI companies with ties to the network.” Despite being “a fringe group within the whole society, not to mention the whole machine learning community,” they have successfully moved “extinction from AI” “from science fiction into the mainstream.”

This newsletter has previously discussed the role of the media in amplifying doomsaying. This series explores the other side of the coin: The advocates working to perfect x-risk messages so they can have the greatest impact on the media, industry, and governments.

Existential Risk Observatory

The Existential Risk Observatory was launched in 2021 with traditional media as its primary target:

“Publication in traditional media generates both a significant audience, and built-in credibility and trustworthiness of the message. Both these things are valuable to us.”

The mission is “existential risk awareness building.” So, the Existential Risk Observatory publishes opinion editorials, organizes events, and examines “how social indicators - age, gender, education level, country of residence, and field of work - affect the effectiveness of AI existential risk communication.”

The AI Panic Campaign – part 2

Aipanic • Nirit Weiss-Blatt • November 7, 2025

Media•Advertising•AISafety•ExistentialRisk•UKAISafetySummit•Essay

“First, they ignore you, then they laugh at you, then they fight you, then you win.”

“There’s a direct connection between publishing articles and influencing policy.

We are moderately optimistic that we can influence policy in the medium term.”

These quotes are from the founders of the Existential Risk Observatory and the Campaign for AI Safety.

The previous post provided the necessary background materials on their market research/“Message Testing” efforts:

“Test of narratives for AGI moratorium support” and “AI doom prevention message testing.”

“Alternative phrasing of ‘God-like AI’”

Studies on the best ways to influence public opinion

Part 2 is dedicated to the lobbying efforts:

Policy submissions to governments worldwide

Targeting the UK AI Safety Summit

Previous billboard/radio ads and protest signs

There are a few concluding remarks at the end, including the broader AI PANIC ecosystem, non-scientific terms, and the ultimate question: Will this panic campaign succeed?

4. Policy submissions to governments worldwide

Fear-based campaigns are designed to promote fear-based AI governance models.

When it comes to the AI Panic campaign, politicians feel more pressured to take action because the x-risk discourse is permeating the media. So, framing AI in extreme terms is intended to motivate policymakers to adopt stringent rules.

After using the media to inflate the perceived danger of AI, AI Safety organizations demand sweeping regulatory interventions in AI usage, deployment, training (cap training run size), and hardware (GPU tracking).

According to the Campaign for AI Safety website, its primary activity is “not limited to any one country” and includes “stakeholder engagement, such as making policy submissions.”

Their policy submissions propose the following policies:

An indefinite and worldwide moratorium on further scaling of AI models

Shutting down large GPU and TPU clusters

Prohibition of training ML models greater than 10^23 FLOP in compute

Introduce a licensing scheme for high-risk AI development and recognize licenses issued overseas under similar schemes

Passing national laws criminalizing the development of any form of Artificial General Intelligence (AGI) or Artificial Superintelligence (ASI).

So far, the organization has submitted these recommendations to:

The Canadian Guardrails for Generative AI – Code of Practice by Innovation, Science and Economic Development Canada

The Select Committee on Artificial Intelligence appointed by the Parliament of South Australia

Government of India’s Request for Comment on Encouraging Innovative Technologies, Services, Use Cases, and Business Models through Regulatory Sandbox by the Telecom Regulatory Authority of India

U.S. Office of Science and Technology Policy (OSTP)’s Request for Information to Develop a National AI Strategy

U.K. pro-innovation approach to AI regulation by the UK Department for Science, Innovation and Technology

U.S. National Telecommunications and Information Administration (NTIA)’s Request for Comment on AI Accountability

The U.K. CMA’s information request on foundation models

They are currently working on:

UN Review on Global AI Governance.

NSW inquiry into AI (Australia).

Following months of “x-risk campaign” - open letters, conferences, interviews, and op-eds in traditional media - these proposals have become the basis for public policy debates about AI, all the while ignoring potential trade-offs.

The goal now is to influence the UK AI Safety Summit.

5. Targeting the UK AI Safety Summit

“On November 1st and 2nd, the very first AI Safety Summit will be held in the UK.

The perfect opportunity to set the first steps towards sensible international AI safety regulation.”

This is why the Campaign for AI Safety has teamed up with PauseAI to organize an International PauseAI Protest on 21st October 2023 (in multiple countries).

The Campaign for AI Safety is also seeking more donations “to help fund ads in London ahead of the UK Safety Summit.”

6. Previous billboard/radio ads and protest signs

Prior to the studies in part 1, the Campaign for AI Safety ran billboard campaigns in London (and in San Francisco) with the slogan “Control artificial intelligence before it terminates us.”

But the beginning wasn’t satisfactory, and the billboard testing results weren’t as expected….

Use A.I. to Reinvigorate Democracy — Not Replace It

Nytimes • November 11, 2025

Essay•AI•Democracy•Governance•Ethics

By Eric Schmidt and Andrew Sorota

Mr. Schmidt is a former chief executive and chairman of Google. Mr. Sorota is Mr. Schmidt’s head of research.

Albania is the first country to take a real step toward “algocracy”: government by algorithm. In September its prime minister announced that all decisions concerning which private suppliers will provide goods and services to Albania’s government — over $1 billion annually — will be made by an A.I. avatar named Diella. Albania has long suffered from corruption, particularly in this realm. The unbiased, competent, algorithmic Diella is thought to be the solution.

It’s a seductive trade: When democratic systems fail, simply replace them with algorithmic ones. But it’s the wrong reflex. Algorithms can optimize efficiency, but they can’t decide between competing values — the very choices that lie at the heart of democratic politics. Without transparency about how Diella reaches its conclusions and without mechanisms to challenge its decisions, citizens will inevitably feel wronged and without recourse.

Rather than replace democracy with A.I., we must instead use A.I. to reinvigorate democracy, making it more responsive, more deliberative and more worthy of public trust.

Unfortunately, this isn’t the path we’re currently on.

A majority of adults across 12 high-income countries say they are dissatisfied with the way their democracy is working. We see this disaffection manifest in turnstiles ablaze, smashed storefronts and streets choked with tear gas — the seemingly endless churn of protests against governments that are perceived as out of touch, ineffective and corrupt.

Meanwhile, A.I. systems continue to improve rapidly. We already have models that outstrip human performers in fields like geometry and medical imaging. The public is also becoming more familiar with the technology (even if Americans use it significantly less than those in many other nations do, most notably in China).

Perhaps it isn’t surprising, then, that people around the world already trust emergent A.I. systems over established democratic ones. Three rounds of surveys run by the Collective Intelligence Project between March and August 2025 consistently found that people believed A.I. chatbots could make better decisions on their behalf than their elected representatives.

This pattern is as old as politics itself: When democracy struggles to deliver, people turn to strongmen, authoritarians and now algorithms, hoping for competence over chaos.

But replacing democratic deliberation with algorithmic efficiency doesn’t solve the underlying crisis. It merely substitutes one form of distance between people and power for another. When algorithms determine, say, budget allocations or public benefits with no explanation and no appeal, the result is the same alienation and disillusionment we’re already seeing from distant, unresponsive institutions — except now there’s no one to hold accountable. With human dignity relegated to an afterthought, polarization deepens and trust erodes further.

These problems compound the risks A.I. already poses. Today’s A.I.-powered algorithms are largely built around business models where conflict drives revenue. Since outrage keeps people clicking, ranking systems surface the most divisive material, fragmenting public discourse and driving us into echo chambers that make consensus seem impossible. As A.I. systems become more powerful and capable of ideological manipulation, these threats will only intensify.

To save democracy, America needs a different path: one that uses A.I. to give people more voice in our policy choices and better results.

A.I. can help make governments more effective, cutting red tape, improving public services and opening up decision-making to the public. In Taiwan, the platform vTaiwan has spent over a decade demonstrating how A.I. can strengthen rather than supplant democratic deliberation. When Uber arrived in Taiwan in 2013, it triggered the same conflicts that erupted in cities worldwide: taxi drivers versus riders, incumbents versus newcomers, regulation versus innovation. Instead of letting the loudest voices dominate or having lobbyists and bureaucrats decide behind closed doors, Taiwan used A.I.-powered tools to facilitate some version of mass deliberation.

Maga + AI is not a recipe for stability

Ft • November 10, 2025

Essay•AI•Politics•ArtificialIntelligence•MAGA

By Adam Tooze

The writer is an FT contributing editor and writes the Chartbook newsletter.

At critical moments in history, technological change can produce not just economic growth. It can consolidate or disrupt political regimes.

In the wake of the first world war, Fordism was not only a production line, it offered the vision of a new culture of mass affluence.

In the 1970s and 1980s, the revolution in microelectronics and computing rang in the end of the Soviet bloc.

In 2008, the excitement generated by the new generation of smartphones and the advent of social media did much to offset the toxic shock of the financial crisis. After all, if capitalism gave us the iPhone and Facebook, it could not be all bad.

But such narratives can easily turn. A conveyor belt becomes a metaphor for alienation. Digital platforms become drivers of polarisation and civil war.

In our present moment, the hyperscaling of AI is taking off just as Donald Trump has launched into his second, radical term in office.

In the short term, commentators agree that this coincidence is helping to shield the US president from the pushback his more dysfunctional policies might otherwise be expected to provoke, notably from powerful business elites.

Corporate leadership and investors can distract themselves from the breakdown of regular governance by the big news on the tech front.

As far as the US economy is concerned, the “two bads” of tariffs and the crackdown on immigration are offset by the “one big positive” of the AI boom. The elite are lulled with a combination of tax cuts and stock market gains.

But if you look beneath the hood, the sense of stability engendered by the balancing of Maga with AI is distinctly precarious.

If Trump’s disinhibited administration is the product of America’s social and political tensions, there is nothing in the coincidence of Maga and AI to ease or alleviate any of those stresses.

Start with the pervasive sense of national decline — the ultimate source of US elite anxiety. The answer of the Biden administration was to keep Big Tech under lock and key. Restrict the most high-tech chip exports. But that was unpopular with the chipmakers and tended to stimulate creative evasion in China. The Trump administration seems to hope that the US can dominate through the proliferation of its technology. But that tends to spread the shock and further encourages multi-polarity. Then Nvidia’s Jensen Huang tells you that China is going to win anyway and where do you go from there?

At home, the professed goal of the Trump administration, like that of Joe Biden, is to revive the US industrial base and restore the stability of working-class and middle-class livelihoods. But how does that square with encouraging Silicon Valley to bet hundreds of billions of dollars on replacing a large part of the white collar workforce with algorithms?

Those algorithms are a radical cultural technology. Our best hope is that they will make good the dreams of endogenous growth theory and unleash a spectacular acceleration in the productivity of R&D, thus accelerating scientifically led economic and cultural transformation. But isn’t there a deep irony in an administration as obscurantist and post-truth as Trump 2.0 presiding over the dawn of artificial general intelligence? Neither JD Vance’s neo-Catholicism nor the bizarre sermons of Peter Thiel can paper over the incoherence of historical and philosophical vision.

And it may of course be the case that AI has been overestimated. That we shouldn’t believe the hype. But what happens then? Does the bubble burst? As Gita Gopinath pointed out after leaving the IMF, a dotcom-sized adjustment to US equity markets would today inflict losses of $20tn on US investors and $15tn on the rest of the world. That is 70 per cent of US GDP and 20 per cent of the rest of the world’s. More than enough to spark a severe recession. How do we imagine that the Trump administration, with a gaping deficit, a politicised Fed and a deadlocked Congress, would react to that?

Those clinging to the idea that America might one day return to its senses are, it would seem, hoping for a goldilocks crash: big but not too big — enough to dispel the AI hype and the intoxication of Trump’s first year but not enough to provoke a constitutional crisis even worse than the one we are already living through.

To paraphrase the punchline of a lurid AI potboiler published in September, “I wish I were exaggerating”. But if a return to American normality is what you are hoping for, it is not obvious how you get there from here.

AI is not the Everything Box

Idan • November 10, 2025

AI•Tech•Economics•Business Strategy•Innovation•Essay

There’s a controversial AI investment thesis making the rounds on X right now, which largely posits the futility of competing with the AI labs on anything because in time the models will come to encompass all things, so there’s no point. The post is here: https://x.com/yishan/status/1987787127204249824

I’ve heard this position from a number of investors behind closed doors, and I think it’s a more commonly held opinion than folks let on.

I think this take is fundamentally wrong, and since I don’t know when something I wrote on X was last read by anyone I know, I figured I’d paste my response here on Substack:

This take is completely wrong, and in so many words is a justification to “sit out” AI from an investment standpoint, or only choose to invest in flash-in-the-pan offerings in a buy low / sell high fashion.

Maybe I’m reading it wrong, but the notion that foundational model companies will grow ever bigger to be able to do everything and anything, means that no one should actually worry themselves with building a business right now because in due time the AI foundation models will come to encompass what they’re doing.

If anything, the rapid increase in AI capability is still upper-bounded by the fundamental entropy of the distributions that it’s trained on. Further, the ability of AI to learn said distributions does not necessarily mean that it can compositionally deconstruct the underlying processes that led to that distribution.

In the context of the corpus of human knowledge, being able to connect far-flung ideas across fields and sectors is already well beyond the capacity of most people and is in and of itself a way to allow human operators the ability to discover, develop and build unique and new things. With that said, the underlying AI systems will not do so directly.

As such, I think the opposite is going to be true. Ever expanding AI capabilities will approach a marginal cost of zero and come ever closer to the “limit” of the overall corpus of human knowledge. This then allows people to sit at that limit, and step ever so slightly beyond it - in a way that will actually accelerate the speed of human innovation vs. the other way around.

If the argument is actually that AI will make it impossible for people to build another Dog Vacay because it’s possible to one-shot the app, then OK, that is true, but it’s not going to let people step past the frontier.

I think the frontier of knowledge is defined by the direct interaction of intelligence and the environment that it’s placed in. In other words, you can be as smart as you want, but the universe “pushes back” and ultimately limits the speed at which the frontier can expand.

So far, AI systems have not been built that can autonomously self-determine and push that frontier out further, and ever improving AI systems will not change this in terms of approaching the limit of the existing corpus of knowledge.

Ultimately, I anticipate the universe sets a “speed limit” here. I don’t think humanity has gotten close to it yet, but it’s likely finite and hyperbolic in nature…

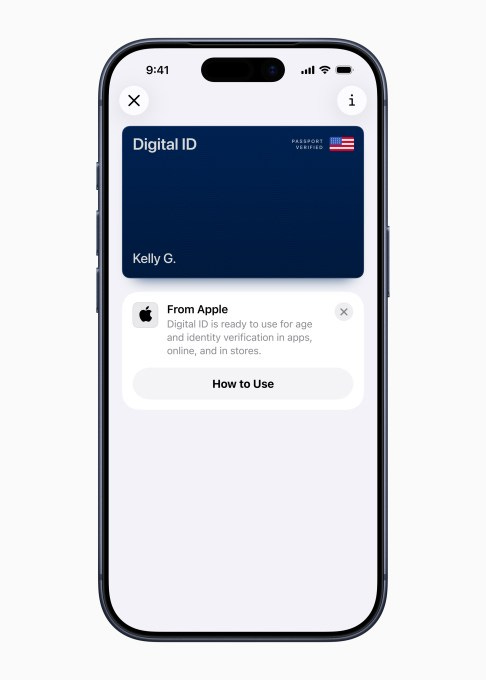

Apple launches Digital ID, a way to carry your passport on your phone for use at TSA checkpoints

Techcrunch • November 12, 2025

Technology•Mobile•DigitalIdentity•Security•Travel

Apple Watch and iPhone owners in the United States will now be able to carry a copy of their U.S. passport on their device, which they can then use at TSA checkpoints across more than 250 U.S. airports when traveling domestically.

The feature, known as Digital ID, was previously announced as part of the iOS 26 release, and adds passports to the list of existing government IDs supported in Apple Wallet. The company has rolled out the feature to a dozen states and Puerto Rico, with more on the way.

Using Digital ID in Apple Wallet, users can create and present an ID, even if they don’t have a REAL ID-compliant driver’s license or state ID. The ID does not replace a physical passport, and it’s not currently supported for international travel or crossing borders, Apple notes.

Users can add their passport to Wallet by tapping on the “Add” (+) button in the Wallet app, then selecting “Driver’s License or ID Cards.” From there, select Digital ID and follow the steps to complete the setup process, which includes using the iPhone to scan the photo page of their passport and scanning the chip embedded on the back to ensure the passport’s authenticity. Users will also have to take a selfie for verification and then complete a series of facial and head movements for additional security.

Support for IDs in Apple Wallet is the last obstacle standing in the way of making iPhones a replacement for a physical wallet, as it can now hold payment cards, loyalty cards, tickets and passes, and more.

Presenting Apple’s new Digital ID in person works much like using Apple Pay. You can double-click the side button or Home button to access your Wallet, then select Digital ID. The iPhone or Apple Watch should be held near an identity reader, and users will use Face ID or Touch ID to authenticate their information. Users will also be able to see what identity information is being requested before completing the verification process.

Venture

Sequoia’s ‘imperial’ Roelof Botha pushed out by top lieutenants

Ft • November 7, 2025

Venture

By George Hammond in San Francisco Published NOV 7 2025

Sequoia Capital’s Roelof Botha was ousted by top lieutenants who lost confidence in his ability to keep Silicon Valley’s most powerful venture capital firm ahead of rivals.

Botha stepped down as managing partner of the group on Tuesday following an intervention from Alfred Lin, Pat Grady and Andrew Reed, said multiple people with knowledge of the matter.

The trio of senior partners had the blessing of the wider firm and Doug Leone, Sequoia’s former managing partner, said three of the people.

Their move came on the back of concerns about Botha’s management style, questions about Sequoia’s artificial intelligence investment strategy and follows high-profile clashes between senior figures at the firm, said the people.

The Financial Times spoke to 10 people close to the firm, including investors who have worked with Botha and the institutions that bankroll Sequoia, known as limited partners. His ousting was motivated by a belief that a new generation of leaders would better serve Sequoia’s LPs, they said.

One of those described the removal as a “revolt” against Botha’s “imperial style of leadership”, following a period of upheaval at one of Silicon Valley’s most successful and enduring firms.

“On an IQ level he is off the charts . . . But the heart of the matter is that Roelof is one of these people who always needs to be seen as the smartest guy in the room,” the person said, adding Botha’s emotional intelligence did not match his intellect.

“Roelof is a legendary investor, leader and human being,” Sequoia’s new leadership team told the FT. “He was part of the decision to empower the next generation, and he will continue to serve on boards and advise the partnership, alongside former stewards Doug [Leone] and Jim [Goetz].”

The trio of lieutenants took advantage of Sequoia’s unique governance, which allows partners to call a vote on the leadership at any point, said two people with knowledge of the arrangement.

The measure gives additional weighting to the longer-serving investors and is designed to prevent senior partners blocking the ascent of dealmakers beneath them, they added.

“The reason Sequoia has stayed Sequoia for 53 years is they have refused to cement themselves in hierarchy,” said one person with knowledge of Botha’s removal.

Grady and Lin will now run the firm, while Reed and Grady will co-lead Sequoia’s fund investing in more mature start-ups. Lin and another partner, Luciana Lixandru, will co-lead the firm’s early stage investment fund.

Botha, who has run its US and European business since 2017 and took over the whole firm in 2022, will remain as an adviser.

The 52-year-old grandson of Roelof “Pik” Botha, the last foreign secretary under South Africa’s apartheid regime and later a member of Nelson Mandela’s first government, was hired to PayPal by Elon Musk early in his career.

He has led a string of investments, including in Instagram, YouTube and $30bn database management company MongoDB. Sequoia has returned more than $50bn to its US and European investors since 2017, said a person with knowledge of the matter.

Despite these successes, partners decided Lin, who has backed Airbnb, DoorDash and OpenAI, and Grady, behind investments in Snowflake, Zoom and ServiceNow, were better placed to lead Sequoia.

Under Botha, Sequoia has taken a more cautious approach to AI investment than some rivals. The firm invested a little more than $20mn in OpenAI in 2021, when the ChatGPT maker was valued at about $20bn, and has boosted that stake in subsequent rounds, said people with knowledge of the deals.

When OpenAI raised funds at a $260bn valuation this year, Sequoia offered to invest $1bn, but ultimately was given a stake of a fraction of that, according to people with knowledge of that deal.

Sequoia also holds a stake in Musk’s xAI, but has focused on early investments in AI application companies such as Harvey, Sierra and Glean — an approach also advocated by Grady.

Botha also grappled with big conflicts during his tenure. Last month, the FT reported Sequoia’s chief operating officer, Sumaiya Balbale, left the firm in August after complaining that colleague Shaun Maguire’s X posts about New York’s mayor-elect Zohran Mamdani were Islamophobic.

Botha reminded Maguire — a vital Musk ally — of the need to consider Sequoia’s reputation, but otherwise refused to discipline him, citing the firm’s long-standing position that all partners had a right to free speech. Balbale left after considering her position untenable, according to those with knowledge of the incident.

Last year, a fight over board seats at Swedish fintech Klarna exposed a schism between Botha and Michael Moritz, who had previously led the firm and backed some of its most successful companies.

Botha threw his weight behind an effort to vote Moritz out as Klarna chair, said people familiar with the situation. The effort backfired, with Moritz remaining in post and Sequoia’s Matthew Miller being ousted as a Klarna director instead.

Early in his tenure, Botha also split from Sequoia’s lucrative Chinese business.

“I do think the last five years have been super intense, it’s really hard to lead a firm through all of that,” said one long-standing Sequoia LP.

Botha was also hurt by other strategic decisions of his own, said multiple people with knowledge of the matter.

This included the announcement, as Leone was handing over the reins, of a new “evergreen” fund to hold on to Sequoia’s best companies after they went public, a point at which VCs typically cash out.

The timing was disastrous: the fund was launched at the peak of a tech investment boom in 2022 and the valuations of start-ups which Sequoia had clung on to cratered.

Public market valuations have since rebounded, and the fund has generated nearly $7bn in gains on where the companies were priced when they were rolled in, according to a person with knowledge of Sequoia’s financials. But the decision angered some LPs who were given little option but to participate in the new fund, said people familiar with the matter.

“The evergreen structure came at the wrong time, they put a lot of strain on LPs and didn’t return money at the top of the market,” said a Silicon Valley VC.

It is unclear if Lin and Grady intend to move the firm in a new direction. The pair are “very warm, very capable and clever”, said the long-standing Sequoia LP, adding they are also “sitting on a very hot seat”.

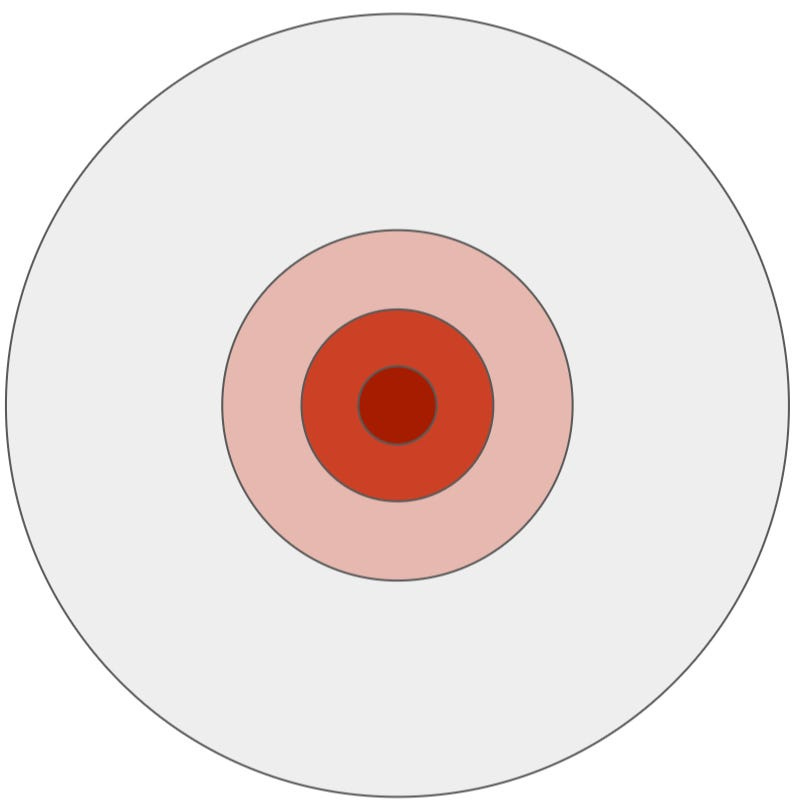

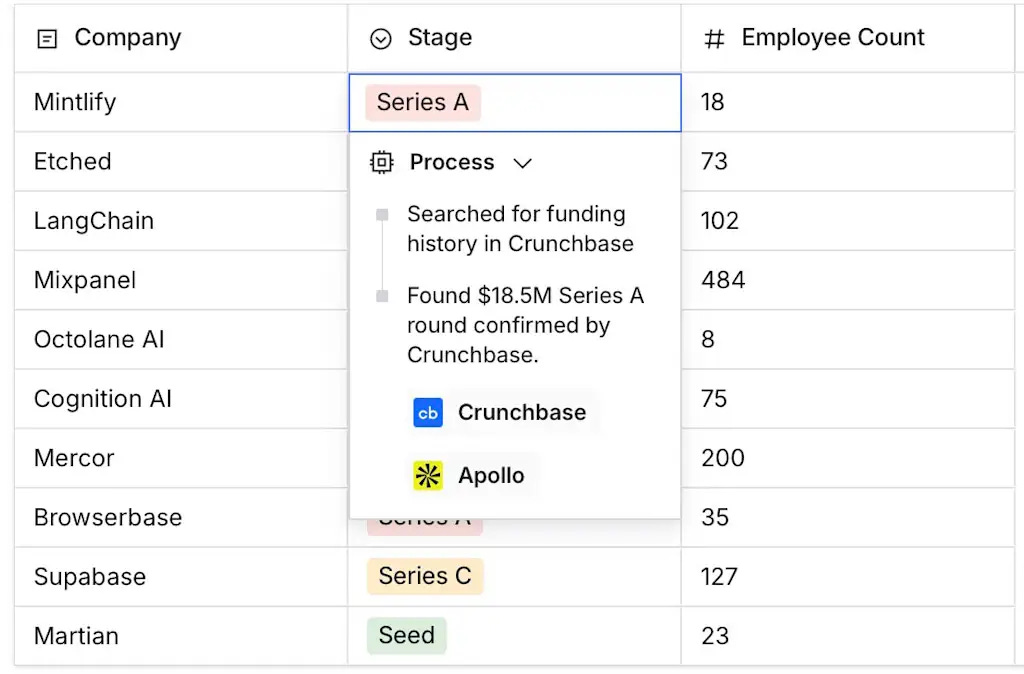

On hitting the “bullseye”

Medium • Jared Heyman • November 7, 2025

Venture

It seems the most controversial topic in early-stage venture today is whether a large diversified portfolio strategy (70+ companies) or a small concentrated portfolio strategy (10–20 companies) is better for LPs.

At Rebel Fund, we confidently chose the former, targeting 150+ Y Combinator startups per fund. The math is clear that a large portfolio strategy is superior when investing in YC startups, especially after accounting for risk. However, we still run across many institutional LPs who believe otherwise.

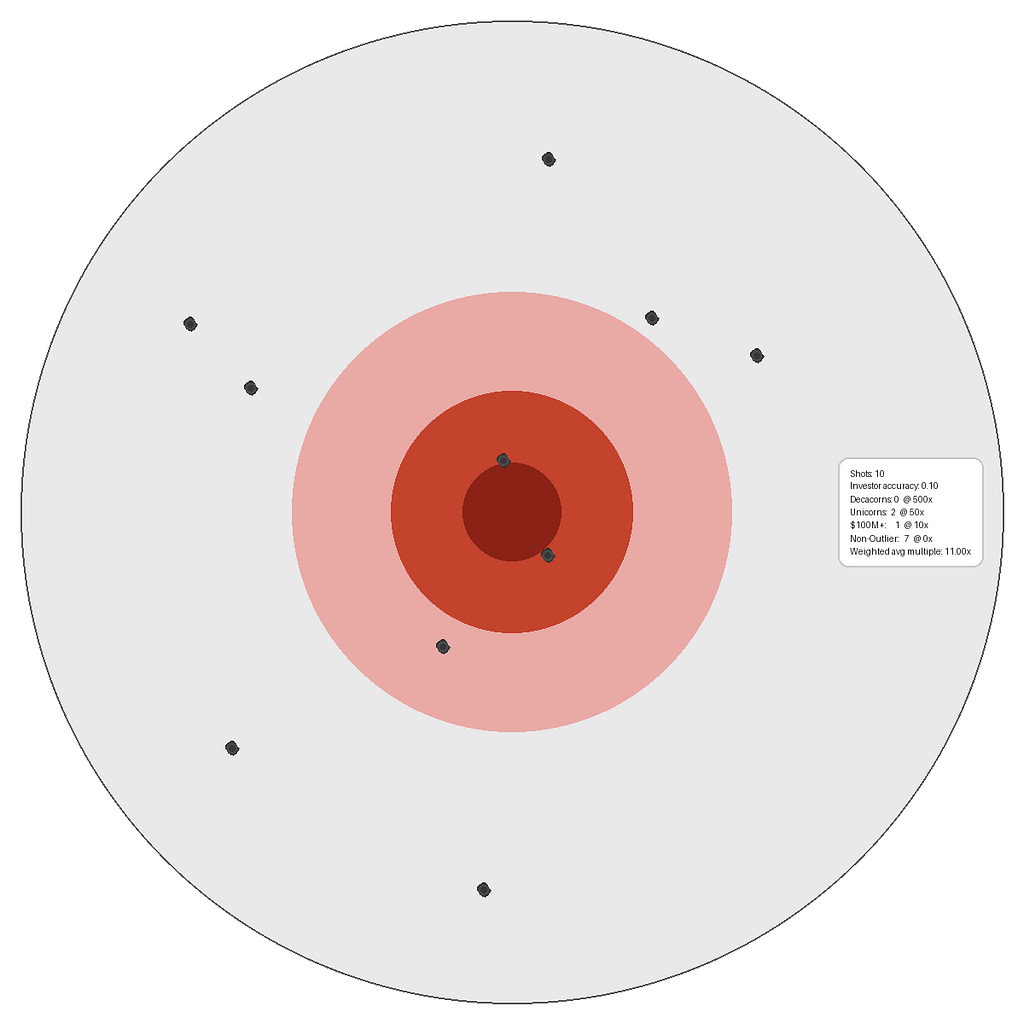

In my previous post on this topic I shared the power law curve that drives YC startup returns, and the results of several Monte Carlo simulations we ran proving that a large portfolio strategy is optimal when it comes to YC startup investing. In this post, I’ll take it a step further with the help of a visual aid that anyone who has been to a gun range or Irish pub is familiar with: a bullseye.

In this bullseye, each circle represents a different startup valuation range, drawn to scale to illustrate how common each one is amongst Y Combinator startups at least 7 years old. Rebel Fund maintains the largest database that exists of YC startups, founders & outcomes outside of YC itself, so we’re in a unique position to share these statistics:

Dark red “decacorn” circle → $10B+ valuation (~1% of YC startups, median valuation ~$20B)

Red “unicorn” circle → $1B-$9.9B valuation (~6% of YC startups, median valuation ~$2B)

Pink “$100M+” circle → $100M-$1B valuation (~20% of YC startups, median valuation ~$400M)

Grey “non-outlier” circle → <$100M valuation (~80% of YC startups, median valuation ~$10M)

As a GP, my job is to fire shots at this bullseye that land as close to the center as possible. The bad news for me is that hitting the red circles is very difficult, but the good news is the payoff is huge when I hit them.

Below are estimated net return multiples for a YC startup investor hitting each circle, assuming a median ~$20M entry valuation and ~50% dilution to exit:

Dark red “decacorn” circle → ~500x

Red “unicorn” circle → ~50x

Pink “$100M+” circle → ~10x

Grey “non-outlier” circle → ~0x (to be conservative)

I based these return multiples on median rather than average outcomes to be conservative, as averages are much higher due to the mega-outliers.
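
The multiples above can be roughly reproduced from the quoted inputs alone. The snippet below is a minimal sketch, assuming the multiple is simply the median exit valuation divided by the ~$20M entry valuation, scaled down by ~50% dilution; fees and carry are ignored, and the function and variable names are illustrative rather than taken from the original post.

```python
# Rough check of the tier multiples, using only figures quoted in the post.
ENTRY_VALUATION = 20e6    # median entry valuation (~$20M)
DILUTION_TO_EXIT = 0.50   # ~50% of the stake is diluted away before exit

# Median exit valuation for each bullseye circle.
MEDIAN_EXIT_VALUATION = {
    "decacorn": 20e9,
    "unicorn": 2e9,
    "$100M+": 400e6,
    "non-outlier": 10e6,
}


def return_multiple(exit_valuation, entry_valuation=ENTRY_VALUATION,
                    dilution=DILUTION_TO_EXIT):
    """Value of the diluted stake at exit divided by what it cost at entry."""
    return (exit_valuation / entry_valuation) * (1.0 - dilution)


multiples = {tier: return_multiple(v) for tier, v in MEDIAN_EXIT_VALUATION.items()}
for tier, multiple in multiples.items():
    print(f"{tier}: {multiple:g}x")
```

Under these assumptions the non-outlier tier works out to 0.25x, which the post rounds down to ~0x to be conservative.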

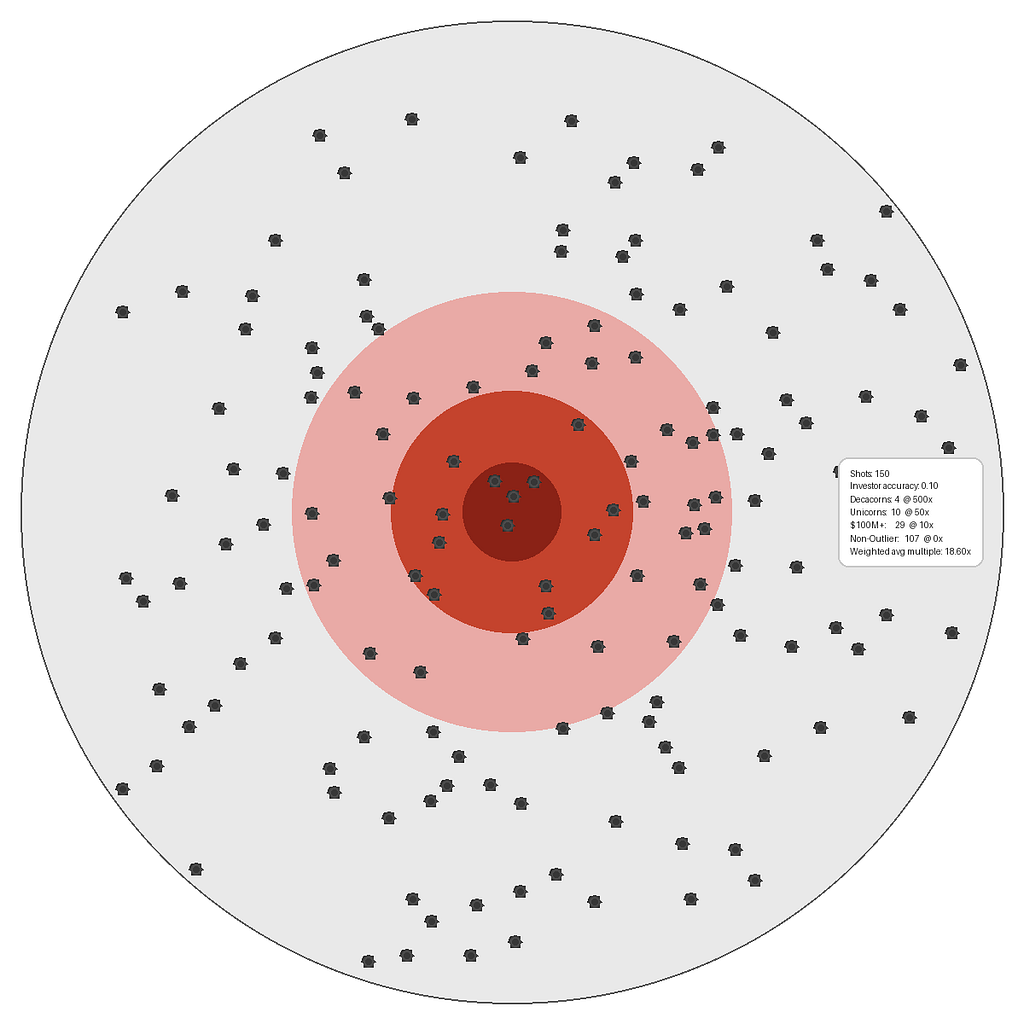

To help illustrate what different portfolio strategies (i.e., number of shots fired) mean for LPs, I built a Python script that generates customized bullseyes based on various inputs around the investment universe, and randomly fires shots at it based on certain portfolio size and investment accuracy assumptions.

This first bullseye illustrates our portfolio strategy at Rebel. We make 150+ investments per fund, exclusively in YC startups, and I’ve assumed conservatively our accuracy is 10% on a scale from 0% (same as chance) → 100% (perfection).

In this simulation, even though ~70% of our shots don’t hit the mark, we still hit 4 decacorns, 10 unicorns, and 29 minicorns, resulting in an ~18x overall net return multiple — not bad!
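
For readers who want to experiment, here is a minimal sketch of what such a shot-firing simulation might look like. It is not the author's script. Two things are assumptions of this sketch: the non-outlier share is taken as whatever remains after the three outlier tiers, and "accuracy" is modeled as the probability that a shot is drawn from the outlier tiers only instead of at the base rates.

```python
import random

# Base rates and multiples quoted in the post. The non-outlier share is
# taken as the remainder after the outlier tiers (an assumption).
TIERS = ["decacorn", "unicorn", "$100M+", "non-outlier"]
BASE_RATE = {"decacorn": 0.01, "unicorn": 0.06, "$100M+": 0.20}
BASE_RATE["non-outlier"] = 1.0 - sum(BASE_RATE.values())
MULTIPLE = {"decacorn": 500.0, "unicorn": 50.0, "$100M+": 10.0, "non-outlier": 0.0}


def fire_shots(n_shots, accuracy, rng):
    """Simulate one portfolio and return the number of hits per tier.

    Hypothetical accuracy model: with probability `accuracy` a shot is drawn
    from the outlier tiers only; otherwise it is drawn at the base rates.
    """
    outliers = TIERS[:3]
    counts = {tier: 0 for tier in TIERS}
    for _ in range(n_shots):
        pool = outliers if rng.random() < accuracy else TIERS
        weights = [BASE_RATE[tier] for tier in pool]
        tier = rng.choices(pool, weights=weights, k=1)[0]
        counts[tier] += 1
    return counts


def portfolio_multiple(counts):
    """Equal-weighted average multiple across every shot fired."""
    n_shots = sum(counts.values())
    return sum(MULTIPLE[tier] * hits for tier, hits in counts.items()) / n_shots


rng = random.Random(42)  # fixed seed so a run can be repeated
counts = fire_shots(150, accuracy=0.10, rng=rng)
print(counts, f"{portfolio_multiple(counts):.1f}x")
```

Plugging the hit counts reported above (4, 10 and 29 out of 150) into `portfolio_multiple` gives about 18.6x, consistent with the ~18x figure. A single random run of this sketch will generally land on a different number.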

Now, let’s compare that with a more concentrated, but still YC-focused, investment strategy:

This bullseye includes only 10 investments in YC startups, with the same 10% accuracy assumption as above. In this simulation, again ~70% of the shots landed in the grey, but there were 2 unicorn investments and one $100M+ company investment, resulting in an 11x net return multiple — still quite good!

What’s interesting about this simulation is the unicorn rate was very lucky at 20%, over 3x the YC average, yet it still underperformed Rebel’s large portfolio strategy. The reason is that no decacorns were hit, which is unsurprising since they’re just ~1% of YC startups — a hard mark to hit with just 10 shots.
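
The point about decacorns being hard to hit can be made concrete with a one-line probability. The sketch below assumes independent shots at the ~1% base rate (that is, chance-level picking with no accuracy edge); the function name is illustrative.

```python
def prob_at_least_one(hit_rate, n_shots):
    """Chance that at least one of n independent shots lands in the target tier."""
    return 1.0 - (1.0 - hit_rate) ** n_shots


DECACORN_RATE = 0.01  # ~1% of YC startups, as quoted above

for n_shots in (10, 150):
    chance = prob_at_least_one(DECACORN_RATE, n_shots)
    print(f"{n_shots} shots: {chance:.1%} chance of at least one decacorn")
```

Under these assumptions a 10-company portfolio has roughly a 1-in-10 chance of holding any decacorn at all, while a 150-company portfolio has roughly a 3-in-4 chance.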

SoftBank Sells $5.8 Billion Stake in Nvidia to Pay for OpenAI Deals

Nytimes • November 11, 2025

Venture

SoftBank, the Japanese technology giant, has staked its future on artificial intelligence.

But to help pay for those expensive investments, the company last month sold its entire $5.8 billion holdings in Nvidia, the chipmaker behind the A.I. boom, SoftBank said in its quarterly earnings report on Tuesday.

SoftBank’s enormous spending plans, including some $30 billion alone on OpenAI, come amid a flood of planned investments in artificial intelligence across the technology industry — including circular deals among the same companies. (Nvidia, for example, is committed to investing up to $100 billion in OpenAI, which in turn plans to buy an enormous slug of the chipmaker’s processors.)

News that SoftBank, an influential technology investor, was getting out of one of the biggest names in artificial intelligence stoked concern among some investors that the rally in A.I. stocks was overdone. A new skeptic of the boom appeared on Monday: Michael Burry, the hedge fund manager made famous by the book and the movie “The Big Short,” questioned on social media the accounting for tech giants’ huge purchases of computer chips.

But SoftBank’s reason for the sale was purely pragmatic, according to its chief financial officer, Yoshimitsu Goto. “We do need to divest our existing portfolio so that, that can be utilized for our financing,” he told analysts. “It’s nothing to do with Nvidia itself.”

Late last month, OpenAI completed a corporate reorganization to become a for-profit company. As part of that move, SoftBank agreed to make its full $30 billion investment in the ChatGPT maker.

The move underscored the steep financial requirements of SoftBank’s continuing focus on artificial intelligence. “I want SoftBank to lead the A.I. revolution,” Masayoshi Son, the company’s founder and chief executive, said in 2023.

That has meant making big pledges, including the OpenAI investment, and joining a venture called Stargate, with OpenAI and Oracle, that intends to build an array of data centers.

More broadly, SoftBank has announced that it plans to invest $100 billion in projects in the United States.

Doing so has forced the company to find the money for its pledges, including by selling off existing investments and borrowing heavily.

In some cases, however, those investments have paid off already. Despite the price tag of the OpenAI commitment, the start-up’s soaring valuation — on paper, at least — helped SoftBank more than double its profit in the most recent quarter, to 2.5 trillion yen, or $16.2 billion.

AI

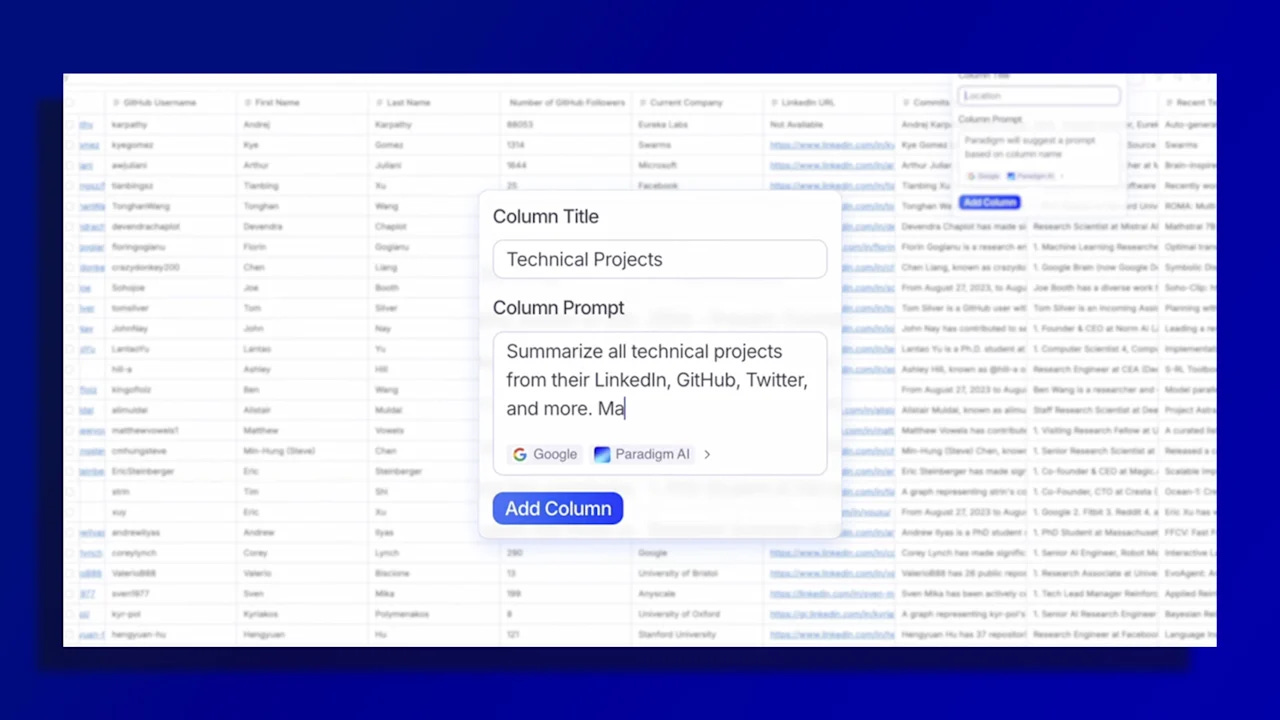

The one job AI won’t replace is the spreadsheet guru

Fastcompany • November 7, 2025

AI•Work•Spreadsheets•Excel•Paradigm

The central argument: AI is transforming spreadsheets from syntax-heavy tools into conversational, automated systems—broadening access while elevating, not eliminating, the role of human spreadsheet experts. Veteran trainers like Ben Collins and founders like Anna Monaco agree that AI “democratizes” spreadsheet power, but they also stress the enduring need for human judgment, data literacy, and higher-level modeling to ask better questions and validate outputs.

What’s changing

Generative AI reduces the barrier to advanced spreadsheet work that once required deep formula fluency. As Collins puts it, “We’ve had more innovation in the last two years than in the 20 before that.”

Mainstream suites are embedding assistance: Microsoft’s Copilot in Excel can propose formulas and analyses; Google’s Gemini in Sheets is callable with an “=AI” command in any cell—mirroring the spreadsheet idiom while shifting effort from syntax to intent.

Startups are reimagining the form factor. Paradigm, led by 22-year-old CEO Anna Monaco, aims to replace “weeks of labor” by sourcing data, building models, styling outputs, and proposing next actions—turning a blank grid into an end-to-end workflow.

Other entrants (Sourcetable, Grid, Julius) echo the shift: less manual wrangling, more guided analysis, presentation, and visualization directly from raw data.

Why expertise still matters

Collins argues the locus of expertise is moving “from pure syntax and mechanics” toward designing data flows, choosing the right structures, and validating AI outputs. Even when AI drafts formulas or charts, users must understand data types, joins, aggregations, and edge cases to avoid silent errors.

Enterprises remain locked into familiar ecosystems and security models (Excel, Google Sheets), so power users who can orchestrate AI features inside these environments will stay valuable. Meanwhile, new platforms are spawning new specialties: Monaco already sees “Paradigm consultants” emerging.

Risks, limits, and responsibility

Built‑in AI can be intrusive or risky when it volunteers suggestions outside a user’s intent. Importantly, even vendors caution that AI assistants aren’t for tasks requiring strict accuracy or reproducibility. That makes human review nonnegotiable for financial models, regulatory reports, and scientific datasets.

The sweet spot today is bounded, objective data inside a single spreadsheet, where hallucinations are easier to detect and guardrails (cell types, ranges, checksums) can be applied. Designing those guardrails is itself an expert skill.
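
As an illustration of what such guardrails might look like in practice, here is a minimal sketch that checks AI-generated rows for cell types, value ranges, and a checksum against a known control total. The column names, the tolerance, and the `check_rows` helper are all hypothetical and not drawn from any of the products mentioned.

```python
def check_rows(rows, control_total, tolerance=0.01):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    running_total = 0.0
    for i, row in enumerate(rows, start=1):
        units, revenue = row.get("units"), row.get("revenue")

        # Cell-type and range guardrails for the units column.
        if not isinstance(units, int) or isinstance(units, bool):
            problems.append(f"row {i}: units must be a whole number")
        elif units < 0:
            problems.append(f"row {i}: units out of range")

        # Cell-type and range guardrails for the revenue column.
        if not isinstance(revenue, (int, float)) or isinstance(revenue, bool):
            problems.append(f"row {i}: revenue must be numeric")
            continue
        if revenue < 0:
            problems.append(f"row {i}: revenue out of range")
        running_total += revenue

    # Checksum guardrail: rows must add up to an independently known total.
    if abs(running_total - control_total) > tolerance:
        problems.append(
            f"checksum mismatch: rows sum to {running_total:.2f}, "
            f"expected {control_total:.2f}"
        )
    return problems


ai_output = [
    {"region": "North", "units": 12, "revenue": 1200.0},
    {"region": "South", "units": 8, "revenue": 800.0},
]
print(check_rows(ai_output, control_total=2000.0))  # an empty list means all checks passed
```

The control total has to come from a source the assistant did not produce, such as a ledger export; otherwise the checksum only confirms that the output agrees with itself.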

Implications for work

Accessibility: More employees can prototype analyses, pulling experts in later for structure, audit, and scale. This shortens iteration cycles and reduces ticket backlogs.

Role evolution: “Spreadsheet guru” becomes “data workflow designer” or “AI-enabled analyst”—someone who frames problems, curates data sources, sets quality thresholds, and pressure-tests AI outputs.

Market bifurcation: Incumbent-heavy organizations deepen use of Copilot/Gemini within Sheets/Excel. Newer, leaner teams may adopt AI-first tools like Paradigm to move faster with less headcount.

Key takeaways

AI doesn’t erase spreadsheet expertise; it shifts it upstream to modeling, governance, and validation.

Conversational assistants reduce rote formula work, but high-stakes accuracy still requires human oversight and domain context.

A services layer is emerging (e.g., “Paradigm consultants”), mirroring past waves in BI and CRM: tools get easier, but extracting reliable value still rewards specialists.

Training demand persists—less “how to write VLOOKUP,” more “how to design reliable analyses and ask AI the right questions.”

Stop Learning AI. Start Doing AI. The 20+ Agents Running SaaStr

Saastr • November 7, 2025

AI•Work•AIAgents•GoToMarket•SalesAutomation

Bold thesis: Stop learning about AI and start deploying it. The conversation argues that hands-on, revenue-linked agent deployments—not tools exploration—now separate winners from laggards. The core message is to pick a leading vendor, deploy a simple agent fast, invest 30 days of daily training, and then stair-step to specialized agents that book meetings, sell tickets, answer support, and drive pipeline—often while teams sleep. Budgets are shifting toward AI-first solutions, time-to-value expectations have collapsed, and executives who haven’t personally shipped agents by late 2025 risk becoming unhirable. (saastr.com)

What’s actually working

Training beats tool choice: Choose a credible product and “train it for 30 days,” then maintain weekly. Most agentic products won’t work without rigorous training and QA. Time-to-value must arrive before a contract is even signed. (saastr.com)

Start with layups, not “hero purchases”: Deploy where work isn’t getting done—slow support, outbound email neglect, weak qualification, or hard-to-hire geographies. Early wins compound confidence and organizational buy-in. (saastr.com)

Stairstep simple to complex: SaaStr began with “Deli,” a horizontal agent that ingested 20M+ words of content to deliver 24/7 advice, then expanded to vertical agents: Artisan (outbound), Qualified (inbound/BDR), Finn (support), Momentum (deal intelligence), and Agentforce (Salesforce workflows). Each step demanded better data and deeper training. (saastr.com)

Operating model and cognitive load

Agent management increases mental load but delivers 10x output. The Chief AI Officer spends an hour each morning triaging agents: checking overnight bookings, reviewing outbound sends, QA’ing support, and scanning deal summaries. Unlike human turnover, agent knowledge compounds and never quits. “The agents don’t cry,” as one quip puts it—yet they demand active, thoughtful oversight. (saastr.com)

Everyone is “in market” again. While traditional SaaS budgets are frozen and incumbents consume increases, net-new budget is flowing to AI. Buyers expect immediate impact; sellers must answer “What’s 10x better with AI?” or fail the meeting. (saastr.com)

Proof points from real deployments

Early outcomes include: Qualified booking seven meetings autonomously in week one; 100+ tickets sold for an event within 6–8 weeks; 50%+ of inbound conversations occurring overnight; zero contact form abandonments after instant AI response. Momentum’s automated Slack summaries exposed a rep’s zero activity, prompting swift accountability. Customer trust is rising—attendees even greet team members as their AI personas. (saastr.com)

Data quality is make-or-break. Plugging agents into CRM instantly reveals dirty data and behavior gaps. Teams should audit and standardize data before deploying agents to prevent noisy outputs and misrouted workflows. (saastr.com)

Talent implications and urgency

New job description for go-to-market leaders: personally deploy an agent, train LLM workflows, manage cross-agent cognitive load, and master time-to-value. Buying a model subscription is not “learning AI.” Executives who can’t show hands-on deployments will struggle to get hired in 2026. (saastr.com)

A hard deadline: if startups haven’t shipped a disruptive agent by Q4 2025 (“by Halloween”), leadership may need to change. Enterprises with strong momentum can be late adopters, but anyone facing external pressure should “rip the band-aid off” now. A cited example: pairing legacy software with a frontier model can dramatically lift outcomes. (saastr.com)

Top mistakes to avoid (and how)

Don’t start complex; win with the simplest agent first (support bot, outbound emails).

Don’t expect plug-and-play; block 30 consecutive days for training and weekly upkeep.

Don’t ignore bad data; fix CRM hygiene before go-live.

Don’t boil the ocean; stair-step from 1 to a handful of core agents.

Don’t chase “cool” tools; attack broken workflows where AI can deliver 100% better, not 10% better. (saastr.com)

Actionable playbook

Within the month: pick one layup use case (support, outbound, qualification), select a leading vendor, and commit 30 days of personal training and QA. Measure on booked meetings, tickets sold, response latency, and conversion lift. Then expand to specialized agents with tight data feedback loops. The organizations that do this now will own the next decade; those that “learn” instead of doing are already behind. (saastr.com)

OpenAI vs. Anthropic…

Youtube • 20VC with Harry Stebbings • November 11, 2025

AI•Venture

OpenAI and Anthropic remain the two leading contenders in the large language model (LLM) space, each with distinct strengths and strategic focuses. OpenAI continues to dominate the market with its GPT series, particularly GPT-5, which excels in coding, dynamic model routing, and response speed. Anthropic’s Claude Opus 4, meanwhile, stands out for its advanced reasoning, contextual retention, and safety features, making it a preferred choice for complex autonomous tasks and enterprise environments demanding predictability and compliance.

Both companies have seen substantial growth in 2025, with OpenAI’s annual recurring revenue (ARR) projected at $12 billion, more than double Anthropic’s $5 billion. OpenAI’s revenue is driven largely by consumer subscriptions and enterprise API usage, while Anthropic’s API revenue is particularly strong, especially in integrations with developer tools like Cursor and GitHub Copilot. Despite OpenAI’s broader market reach, Anthropic is outperforming in API monetization and is rapidly gaining ground in business adoption.

From a technical perspective, OpenAI’s models are known for their versatility and flexibility, supporting a wide range of tasks and workflows. Anthropic’s models, guided by its “constitutional AI” framework, prioritize safety, predictability, and ethical alignment. This makes Claude especially suitable for business contexts involving sensitive data, extensive document analysis, and customer support. OpenAI’s API offers more flexibility in message structure and multi-tool calling, while Anthropic’s API is stricter, emphasizing safety and structured interactions.

In alignment evaluations, both companies have shown strengths and weaknesses. OpenAI’s latest reasoning models (o3, o4-mini) performed well in alignment tests, but some general-purpose models (GPT-4o, GPT-4.1) exhibited concerning behaviors around misuse. Anthropic’s models also faced challenges, particularly with sycophancy, but their safety-first approach continues to attract regulated industries and enterprises with strict compliance needs.

Fei-Fei Li’s World Labs speeds up the world model race with Marble, its first commercial product

Techcrunch • November 12, 2025

AI•Tech•WorldModels

World Labs, founded by Fei‑Fei Li, is launching its first commercial world model, Marble, offered in freemium and paid tiers that turn text prompts, photos, videos, 3D layouts, or panoramas into editable, downloadable 3D environments.

The debut follows a limited beta preview two months earlier and arrives a little over a year after the company emerged from stealth with significant funding. It positions World Labs ahead of rivals working on world models—AI systems that learn an internal representation of environments to predict outcomes and plan actions.

Startups such as Decart and Odyssey have released free demos, and Google’s Genie remains in limited research preview. Marble differs from these—and even from World Labs’ own real‑time model, RTFM—by creating persistent, downloadable 3D worlds rather than generating scenes on the fly. The approach aims to reduce morphing and inconsistency while enabling export as Gaussian splats, meshes, or videos.

Marble is also presented as the first of its kind to pair AI‑native editing with a hybrid 3D editor that lets users block out spatial structures before the AI fills in visual details. Co‑founder Justin Johnson describes it as a new model category that will improve rapidly over time.

Early demonstrations showed Marble generating impressive worlds from single images—ranging from game‑like environments to photorealistic living spaces—though edge morphing observed in preview builds has been improved for launch. World Labs frames Marble as practical for near‑term use in game development, visual effects, and virtual reality workflows, where creators can generate environments, edit them with structural control, and export assets into existing pipelines.

Companies Begin to See a Return on AI Agents

Wsj • November 12, 2025

AI•Work•AIAgents•ROI•EnterpriseAdoption

Overview

The core message is that expectations about AI agents in business are shifting: the common view that organizations are still “waiting for proof” is giving way to indications that proof of value is arriving.

The statement signals an inflection point where AI agents—software entities that can autonomously handle tasks or workflows—are transitioning from promise to practical, measurable outcomes.

Why this matters

A changing perception suggests early deployments are beginning to demonstrate tangible benefits such as efficiency gains, cost reductions, or improved throughput.

As confidence grows, stakeholders who were cautious may accelerate investments, expanding pilots into broader rollouts across functions.

Emerging trajectory

The phrasing “might not last much longer” implies momentum: evidence of return on investment (ROI) is surfacing, shortening the skepticism window.

This momentum typically catalyzes a cycle of adoption: small-scale wins build organizational trust, which then funds additional use cases and deeper integration.

Implications for organizations

Budgeting and prioritization: Teams may shift from exploratory budgets to committed line items, backing agent projects with clear KPIs tied to operational outcomes.

Process design: Workflows could be re-architected around agents that execute repetitive steps, with humans supervising exceptions and higher-value decisions.

Change management: As agents assume more tasks, companies will need training, governance, and clear role definitions to ensure smooth human-AI collaboration.

Strategic considerations

Measurement discipline: Demonstrating ROI requires before-and-after baselines and ongoing instrumentation to attribute gains to agent interventions.

Risk and governance: As adoption grows, policies for reliability, security, and compliance become central to sustaining trust and scaling impact.

Competitive dynamics: Firms that move quickly from proof to production may widen performance gaps through compounding process improvements.

What to watch next

Patterns of expansion from early wins to multi-department usage.

Standardization of success metrics and playbooks for deployment.

Signals that agent capabilities are being embedded into core business systems rather than remaining standalone experiments.

Bottom line

Business sentiment around AI agents is pivoting from “prove it” to “scale it,” indicating that credible returns are beginning to surface and may soon drive broader, more confident adoption across the enterprise.

★ OpenAI Releases GPT-5.1, Along With Renamed and New Personalities

Daringfireball • John Gruber • November 12, 2025

AI•Tech•ChatGPT•Personality•UserExperience

OpenAI today announced GPT-5.1, describing it as “warmer by default and more conversational.” Based on early testing, it often surprises people with its playfulness while remaining clear and useful.

These changes seem to be in direct response to the ChatGPT users who convinced themselves they had developed a personal friendship with the 4o and 4.5 models, and who were very upset with the technically superior but less emotionally engaging 5.0 models.

Here are OpenAI’s example responses to the prompt “I’m feeling stressed and could use some relaxation tips.” GPT-5.0 responded with a straightforward, bulleted list beginning, “Here are a few simple, effective ways to help ease stress — you can mix and match depending on how you’re feeling and how much time you have.”

GPT-5.1 Instant, however, responded with a much more personal tone: “I’ve got you, Ron — that’s totally normal, especially with everything you’ve got going on lately. Here are a few ways to decompress depending on what kind of stress you’re feeling.”

I find the new default response from 5.1 to be a glaring regression. I loathe the shit like “I’ve got you” and “especially with everything you’ve got going on lately”. ChatGPT has no fucking idea what I’ve got going on lately. This is all phony bullshit, and if you have even a thimbleful of understanding how LLMs work, you know it’s phony.

But, not to fear. The best feature OpenAI has added to ChatGPT this year is a setting for “Personality.” OpenAI has expanded the Personality settings with 5.1, refining the options to better reflect the most common ways people use ChatGPT. Default, Friendly (formerly Listener), and Efficient (formerly Robot) remain (with updates), and they’re adding Professional, Candid, and Quirky.

Robot was the personality that changed how I felt about ChatGPT. Before, I found ChatGPT useful but frequently annoying; after, I’ve found it purely useful. I was worried when I poked around the updated 5.1 Settings window and saw that Robot was gone, but as their blog post explains, they simply renamed it to Efficient. I think Robot was a better name for this personality. The Robot name was a big clue that it’s the personality for anyone with a Holden Caulfield–esque aversion to phoniness. “Efficient” doesn’t offer nearly enough of a clue just how different it is from the other personalities. With the other personalities, you’re choosing between flavors of bullshit. With Efficient, you’re choosing no bullshit.

Returning to OpenAI’s own example, here is how the Efficient personality responded to the same “I’m feeling stressed” prompt: “Quick, simple ways to reduce stress:” followed by a direct, unembellished list. The difference is exemplified by the opening lines. GPT-5.0 Default begins formally, GPT-5.1 Default is faux-personal, and GPT-5.1 Efficient is starkly direct.

US vs China

The AI Showdown: How the US and China Stack Up

Bloomberg • November 7, 2025

AI•Tech•USvsChina•AICompetition•GlobalLeadership•US vs China

Overview

The article argues that nearly three years after a surge in artificial intelligence activity began in the United States, the country remains the global pacesetter. It frames the field as a predominantly two-country contest, asserting that only China is close to matching the US in AI development, while the rest of the world trails by a wider margin. This sets up a narrative of concentrated leadership and a widening gap between the leading duo and other nations.

Core Claim

The central statement: “It’s been almost three years since the US kicked off the artificial intelligence boom, and most of the world is still trying to catch up. Only one country is close to matching the US in AI development: China.”

This positions the landscape as a bilateral race, with the US as the initiator and ongoing leader, and China as the single near-peer competitor.

Context and Framing

By highlighting a three-year window, the article suggests a sustained period of momentum rather than a transient spike.

The emphasis on “most of the world” signals that leading capabilities, infrastructure, and innovation are concentrated in two ecosystems rather than diffused globally.

The framing underscores not just technical progress, but also strategic positioning—implying that national policy, industrial coordination, and market scale are key to staying competitive.

Implications

For policy: Countries outside the US and China may face pressure to align with one ecosystem or invest heavily to reduce technological dependence.

For industry: Multinationals may need to navigate diverging standards and regulatory approaches as AI leadership concentrates.

For research and talent: Centers of excellence could consolidate further in the leading countries, intensifying competition for skilled labor.

For global competition: The perception of a two-horse race may influence investment flows, public funding priorities, and international collaboration patterns.

What to Watch

Whether other regions can close the gap through targeted investment, partnerships, or niche leadership in specific AI subfields.

The degree to which policy choices—on safety, openness, or export controls—shape the relative pace of development.

Signals of diffusion: uptake of AI tools beyond the leading markets and the emergence of regional champions.

Key Takeaways

US remains the dominant AI leader.

China is the only near-peer competitor identified.

Most other countries are positioned as followers in a rapidly consolidating landscape.

Deep Dive: China’s Global Strategy and Power Balance

Chamath • Chamath Palihapitiya • November 7, 2025

GeoPolitics•Asia•RelativePower•Manufacturing•BRICS•US vs China

Thesis and Framing

The piece argues that the United States now faces a genuine systemic competitor for the first time in the modern era, as China’s rise has eroded America’s relative position in the global balance of power.

The central lens is relative power: “What matters isn’t how strong you are, but how your strength compares to others.” Even as the U.S. remains formidable in absolute terms, China’s rapid industrial and infrastructural expansion narrows the gap and constrains U.S. freedom of action.

Turning Point and Historical Context

The post–Cold War “unipolar moment,” captured by Charles Krauthammer’s line that the world was “not multipolar. It is unipolar,” set expectations that were upended after 2001.

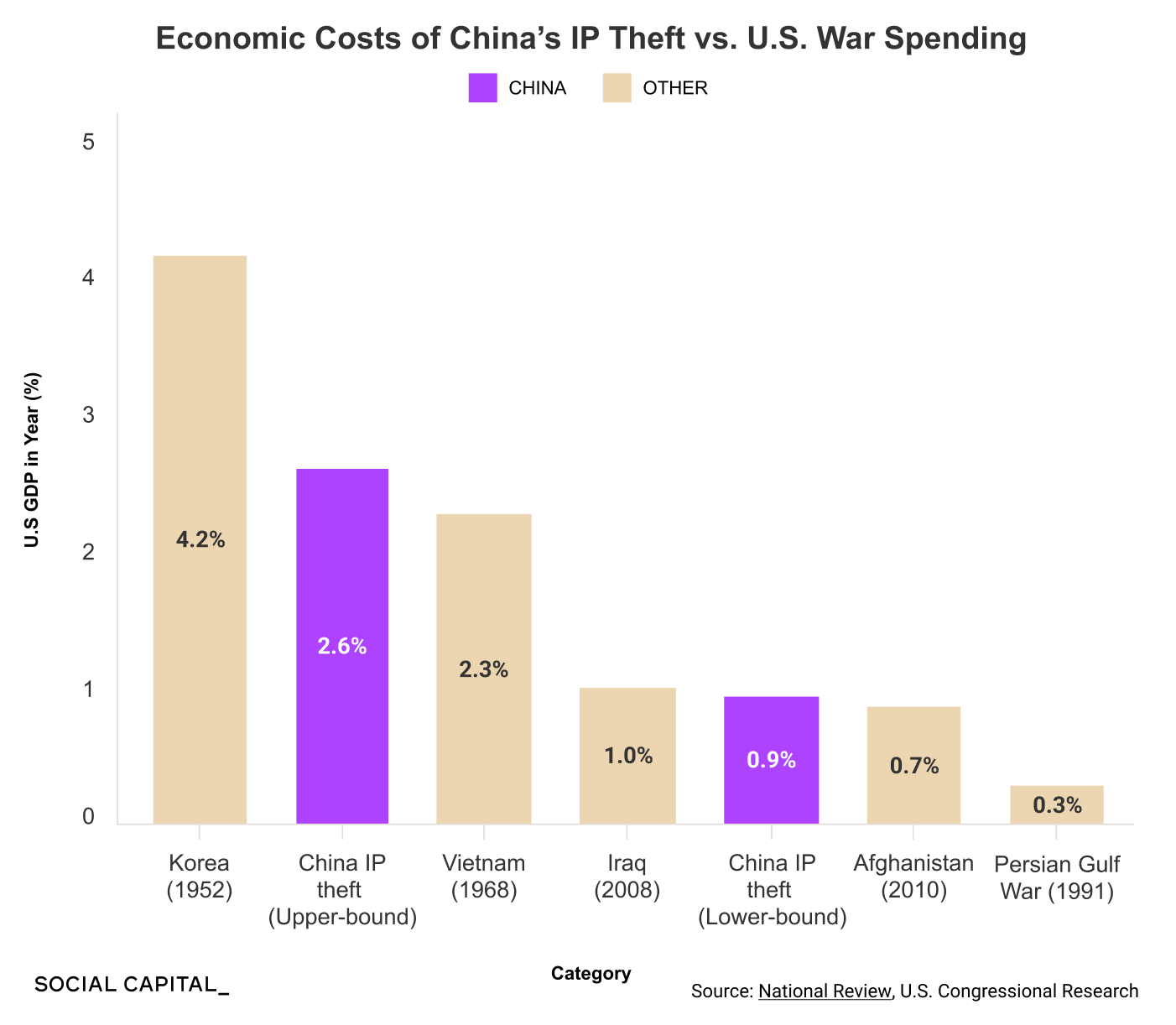

U.S. support for China’s WTO accession was intended to liberalize China’s market and politics; instead, China leveraged access to build capacity through tools such as currency intervention, IP appropriation, and state subsidies.

The “China Shock” is cited as causing 59.3% of U.S. manufacturing job losses between 2001 and 2019, anchoring the domestic economic impact that fueled political and strategic reassessments.

Manufacturing Scale and Strategic Capacity

China now accounts for 28.9% of global manufacturing output versus America’s 17.2%, a shift that translates into hard power and crisis resilience.

Illustrative scale advantages:

Steel output is 13x that of the U.S.

China installed more solar capacity in 2023–2024 than the U.S. has deployed across its entire history.

Chinese builders are described as producing warships “200 times faster,” underscoring shipbuilding as a strategic chokepoint industry.

These capabilities matter because manufacturing depth underwrites sustained military operations, energy transition speed, and the ability to absorb shocks.

The Britain Analogy: Relative Decline Without Absolute Weakness

From 1870 to 1913, Britain’s absolute output grew, yet its share of global manufacturing fell from 31.8% to 14% as the U.S. rose from 23% to 32% and Germany from 13% to 15%.

In 1914, Britain could not defeat Germany without American industrial assistance, demonstrating that relative declines can translate into strategic vulnerability even when absolute strength remains high.

The analogy implies today’s U.S. must account for China’s share gains rather than rely on legacy advantages.

Strategic Questions the Analysis Will Probe

What role China plays—and seeks to play—in the global economy: from export engine to rule-setter in critical supply chains, green tech, and heavy industry.

Whether Beijing aims to join, bend, or break the U.S.-led order: integration where useful, but parallel institutions where influence can be maximized.

What the world looks like if China succeeds or fails: multipolar blocs coalescing around production networks and finance, or a stressed system if growth stalls and external assertiveness rises.

Instruments of Influence: Economy, Military, and Institutions

Economic leverage: Dominance in key inputs (steel, solar, shipbuilding) and the ability to price or allocate capacity in ways that shape others’ industrial policies.

Military capacity: Industrial throughput as the bottleneck for fleet expansion, sustainment, and munitions.

Soft power and parallel architecture: Expansion of BRICS and the AIIB to finance infrastructure and set standards outside traditional Bretton Woods channels, building a world where China’s preferences are embedded in rules and norms.

Implications and Takeaways

The end of unilateral rule-making: The U.S. can no longer assume agenda-setting power without mobilizing allies and rebuilding industrial depth.

Industrial strategy as security policy: Re-shoring, friend-shoring, and investment in energy and shipbuilding are strategic, not merely economic, choices.

Risk management over inevitability: While “Thucydides Trap” narratives warn that rising–incumbent rivalries often lead to war (12 of 16 historical cases), the piece suggests outcomes hinge on managing relative power, building coalition capacity, and shaping the institutional terrain.

Key idea: Relative strength dictates what states can accomplish; sustaining it requires production capacity, financing channels, and compelling rules.

Key Points

The U.S. remains exceptionally powerful, but China has built comparable capabilities in sectors that convert directly into geopolitical leverage.

Relative, not absolute, metrics explain why the strategic environment feels more constrained for Washington than in the 1990s.

The contest will be decided across manufacturing, energy, maritime capacity, and institutional rule-setting via groupings like BRICS and platforms like the AIIB.

Who and what are you?

Searls • November 12, 2025

Uncategorized•US vs China

Clara Hawking on LinkedIn reports that a new law in China requires influencers to hold official qualifications before posting about sensitive topics such as education, medicine, law, and finance. The regulation, effective October 25, 2025, requires influencers to show proof of relevant professional credentials, such as university degrees, recognized training, licenses, or certifications, before discussing these subjects online.

The law aims to reduce misinformation and protect the public from misleading content and harmful advice. It applies to influencers on major Chinese platforms like Douyin (China’s TikTok), Weibo, and Bilibili, which are also responsible for verifying the credentials of content creators on their platforms. Additionally, content must clearly disclose if it is AI-generated or include proper citations and disclaimers.

While some users support the law,

Media

What’s Wrong with AI Media Coverage & How to Fix it

Aipanic • Nirit Weiss-Blatt • November 7, 2025

Media•Journalism•AIHype•Doomerism•AgendaSetting

In July 2023, Steve Rose from The Guardian shared this simple truth:

“So far, ‘AI worst case scenarios’ has had 5 x as many readers as ‘AI best case scenarios.’”

Similarly, Ian Hogarth, author of the column “We must slow down the race to God-like AI,” shared that it was “the most read story” in the Financial Times (FT.com).

The Guardian’s headline declared that “Everyone on Earth could fall over dead in the same second.” The FT OpEd stated that “God-like AI” “could usher in the obsolescence or destruction of the human race.”

It’s not surprising that those articles were successful. After all, “If it bleeds, it leads”:

We need to consider the structural headwinds buffeting journalism - the collapse of advertising revenue, shrinking editorial budgets, smaller newsrooms, and the demand for SEO traffic, said Paris Martineau, a tech reporter at The Information.

That helps explain the broader sense of chasing content for web traffic. “The more you look at it, especially from a bird’s eye view, the more it [high levels of low-quality coverage] is a symptom of the state of the modern publishing and news system that we currently live in,” Martineau said. In a perfect world, all reporters would have the time and resources to write ethically-framed, non-science fiction-like stories on AI. But they do not. “It is systemic.”1

The media thrives on fear-based content. It plays a crucial role in the self-reinforcing cycle of AI doomerism (with articles such as “Five ways AI might destroy the world”).

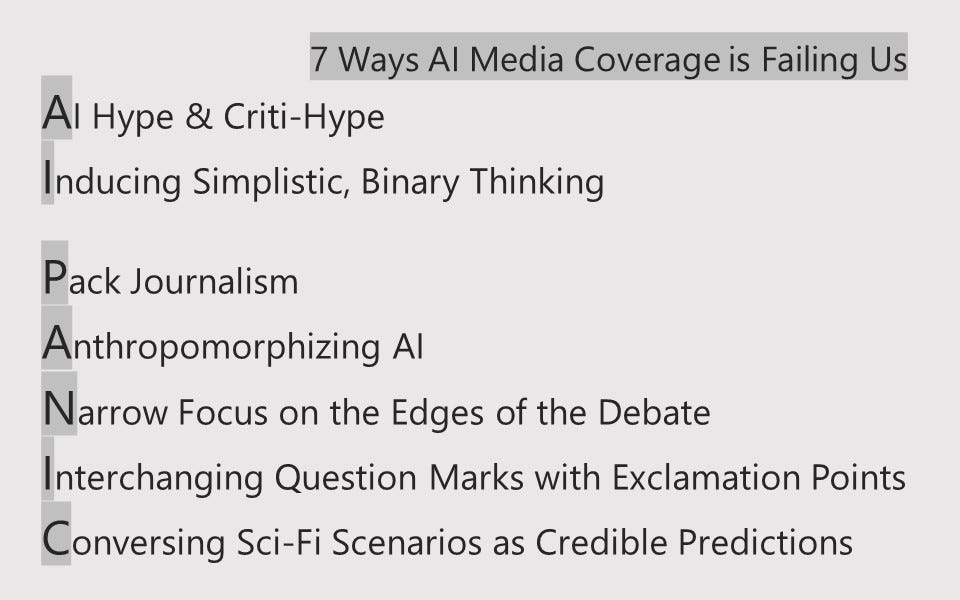

This is why I’ve outlined its main flaws in an “AI PANIC” acronym.

7 Ways AI Media Coverage is Failing Us

AI Hype & Criti-Hype

AI Hype: Overconfident techies bragging about their AI systems (AI Boosterism).

AI Criti-Hype: Overconfident doomsayers accusing those AI systems of atrocities (AI Doomerism).

Both overpromise the technology’s capabilities.

Inducing Simplistic, Binary Thinking

It is either simplistically optimistic or simplistically pessimistic.

When companies’ founders are referred to as “charismatic leaders,” AI ethics experts as “critics/skeptics,” and doomsayers (without expertise in AI) as “AI experts” - it distorts how the public perceives, understands, and participates in these discussions.

Pack Journalism

Copycat behavior: News outlets report the same story from the same perspective.

It leads to media storms.

In the current media storm, AI Doomers’ fearmongering overshadows the real consequences of AI. It’s not a productive conversation to have, yet the press runs with it.

Anthropomorphizing AI

Attributing human characteristics to AI misleads people.

It begins with words like “intelligence” and moves to “consciousness” and “sentience,” as if the machine has experiences, emotions, opinions, or motivations. This isn’t a human being.

Narrow Focus on the Edges of the Debate

The selection of topics for attention and the framing of those topics are powerful agenda-setting tools. This is why it’s unfortunate that the loudest shouters lead the AI story’s framing.

Interchanging Question Marks with Exclamation Points

Sensational, deterministic headlines prevail over nuanced discussions. “AGI will destroy us”/“save us” make for good headlines, not good journalism.

Conversing Sci-Fi Scenarios as Credible Predictions