Contents

Essay

AI

We’re Finally Getting a Clearer Look at the Future of Wearables

OpenAI and Databricks Strike $100 Million Deal to Sell AI Agents

Google DeepMind unveils new robotics AI model that can sort laundry

Bain & Co.’s David Crawford: The world is still $800B short to keep pace with AI demand

OpenAI Unveils Plans for Seemingly Limitless Expansion of Computing Power

Venture

Binance co-founder Zhao aims to open $10bn portfolio to outside investors

Behind the Numbers: A Closer Look at Carta’s Q2 2025 Private Market Data

More Thoughts on the Existential Crisis in Seed: One Question, Two Views of the World

The Automated VC | Yohei Nakajima, Founding Partner, Untapped VC

GPs, here’s how you should break down your 30 minute pitch #Fundraising #investorrelations

These Are The Speediest Companies To Go From Series A To Series C

Crypto

GeoPolitics

Media

Regulation

Interview of the week

Startup of the week

Post of the week

Editorial: OpenAI Just Shifted the Interface — and the Power

OpenAI didn’t just launch a feature this week; it shipped a habit. ChatGPT Pulse stops waiting for prompts and starts shaping your day—briefing you in the morning, stitching calendar and email, and proposing next actions. It’s a move from “ask and answer” to “wake and guide.”

This is the consumer hard‑install moment. If AI is a consumer‑first business, Pulse is the point at which it becomes a daily consumer product. The trajectory is obvious: a proactive assistant, not a page of links or a social feed, becomes the front door to intent and action. Altman’s shorthand ambition is to replace your morning doom‑scroll with a tailored briefing.

Two things snap into focus.

1) The front door is migrating from search/feeds to the proactive assistant—and bundling wins

OpenAI’s usage data shows ChatGPT already bundles guidance + writing/editing + information seeking for a mass audience; non‑work usage is now the majority. In other words, a general interface has quietly become a must-have for everyday life.

“You can bundle, or you can unbundle,” Jim Barksdale famously said. Pulse is the bundle getting sticky. Around it, surfaces are aligning toward “ambient, not app”: Meta’s Ray‑Ban Display frames keep pushing hands‑free capture/assist; audio‑first companions like Huxe (ex‑NotebookLM devs) are exploring always‑on “live stations.” The trendline matters more than any single feature: the assistant wants your shoulder, not your screen.

Media is repositioning around the new front door. The New York Times is standardizing AI‑assisted investigative tools “as a force multiplier” while maintaining verification; publishers deploying dynamic paywalls report material revenue lifts when they algorithmically price access. If assistants become the default route to answers, the content supply chain is repriced in real time.

2) Consumer pull is driving industrial‑scale push—compute, energy, rights become the moat

Demand at the edge needs factories in the core. Nvidia’s commitment alongside a multi‑gigawatt OpenAI buildout is the clearest tell: capacity itself becomes product. Nvidia’s revenue maps closely to the growth of power capacity, because its GPUs are the primary consumers of that power. And as LLMs move to “thinking” and acting, the tokens required grow exponentially. Nvidia and OpenAI revenue is founded on tokens. Each token gets cheaper, but more are consumed.

“We want to create a factory that can produce a gigawatt of new AI infrastructure every week.” — Sam Altman

The rest of the stack must follow: chips, HBM, optics, data centers, and—crucially—power. The answer isn’t “more cloud” but a compute gradient from device → tower → regional hub → hyperscale, with federation, privacy, and latency collapse by design.

Enterprise rails are snapping into place. The OpenAI–Databricks pact packages agents with governance and auditability, which is how pilots scale to production. Meanwhile, DeepMind’s robotics work, which splits the system into “one that thinks” and “one that does,” suggests reasoning‑first systems are edging from demo to utility. Data plus AI is the next revolution, and there is plenty left to innovate on.

The quiet tension: as assistants own the day, gatekeepers re‑emerge

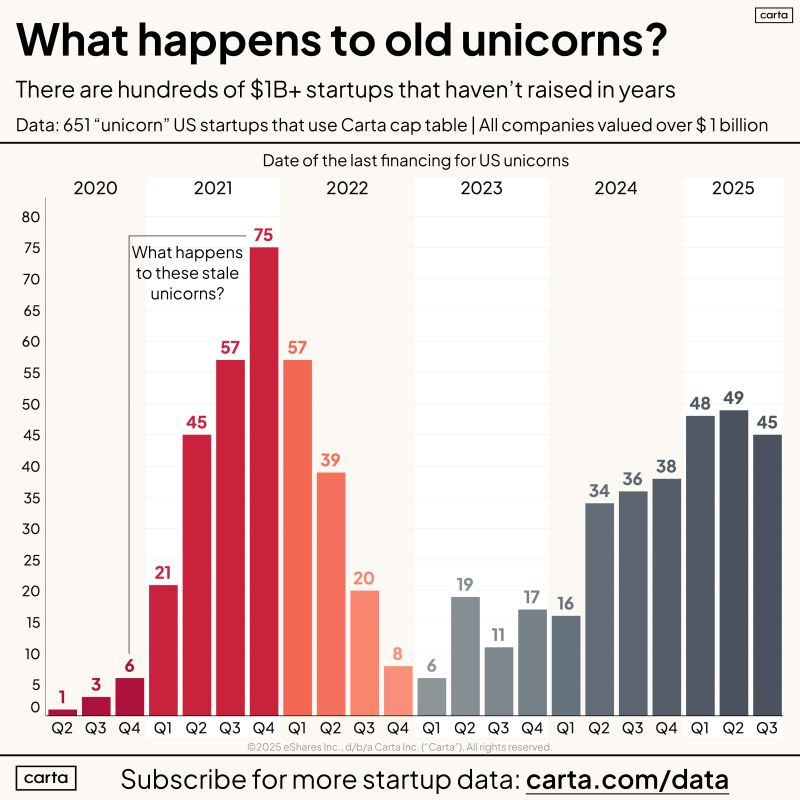

If Pulse becomes the default morning briefing, defaults become policy. We’re watching platforms revisit their rules, governments revisit platforms, and publishers revisit pricing. Reddit is negotiating dynamic, usage‑based licensing rather than flat fees—precisely because answers are now assistant‑rendered, not link‑clicked. Who sets pricing, presentation, and payout at the front door?

Our stance: the next trillion‑dollar platforms will control three assets in combination

Attention. The proactive assistant that captures intent before a search is typed (Pulse).

Compute. Gigawatts of reliable, efficient capacity that collapse latency and cost.

Monetization and Settlement. Programmable rights and revenue‑sharing so Reddit, newsrooms, and creators get paid—and AIs do too when they drive outcomes.

What to watch next

Pulse retention & completion: Does it displace search and email triage by default?

Reddit’s pricing experiments: Does pay‑per‑crawl/usage become a standard signal?

The first‑gigawatt milestones: Do grid interconnects, liquid cooling, and first deployments arrive on schedule?

Surfaces: Do wearables and audio become the assistant’s preferred skin?

Enterprise rails: Do Databricks‑aligned agents pull procurement from pilots to P&L?

Bottom line: Consumer demand is pulling an industrial revolution behind it. Build the door, build the grid, build the ledger—and the trillions will follow.

Essay

The Compute Gradient

State of the future • Lawrence Lundy-Bryan • September 23, 2025

Essay•AI•EdgeComputing

I. Rethinking AI’s Energy Problem

In July, Jonno wrote in The Energy Theory of Everything that:

“Intelligence is a function of electricity. AI turns energy into intelligence. 0.3 Wh is the electricity cost of a single GPT-4o query. Now imagine an AI agent that fires 100 calls per task, with a power user running 100 tasks daily. That is 3 kWh per user per day. Scale to one million users and the total hits 1.1 TWh a year – Oxfordshire’s household demand – before you even count model-training. The IEA expects global data-centre demand to double to 945 TWh by 2030 even taking into account potential hardware efficiency gains.”
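The arithmetic in the quote can be checked directly. This is a minimal sketch that uses only the figures quoted above (0.3 Wh per query, 100 calls per task, 100 tasks per day, one million users). The variable names are illustrative, and a 365-day year of daily usage is an assumption.

```python
# Figures taken from the quoted passage; names are illustrative.
WH_PER_QUERY = 0.3       # Wh per GPT-4o query
CALLS_PER_TASK = 100     # model calls an agent fires per task
TASKS_PER_DAY = 100      # tasks a power user runs per day
USERS = 1_000_000
DAYS_PER_YEAR = 365      # assumption: the same usage every day of the year

# Wh -> kWh for one user over one day.
kwh_per_user_day = WH_PER_QUERY * CALLS_PER_TASK * TASKS_PER_DAY / 1_000

# kWh -> TWh (1 TWh = 1e9 kWh) for all users over one year.
twh_per_year = kwh_per_user_day * USERS * DAYS_PER_YEAR / 1e9

print(f"{kwh_per_user_day:.1f} kWh per user per day")
print(f"{twh_per_year:.3f} TWh per year")
```

The yearly total comes out just under 1.1 TWh, which matches the rounded figure in the quote. Training energy is excluded, as the quote notes.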

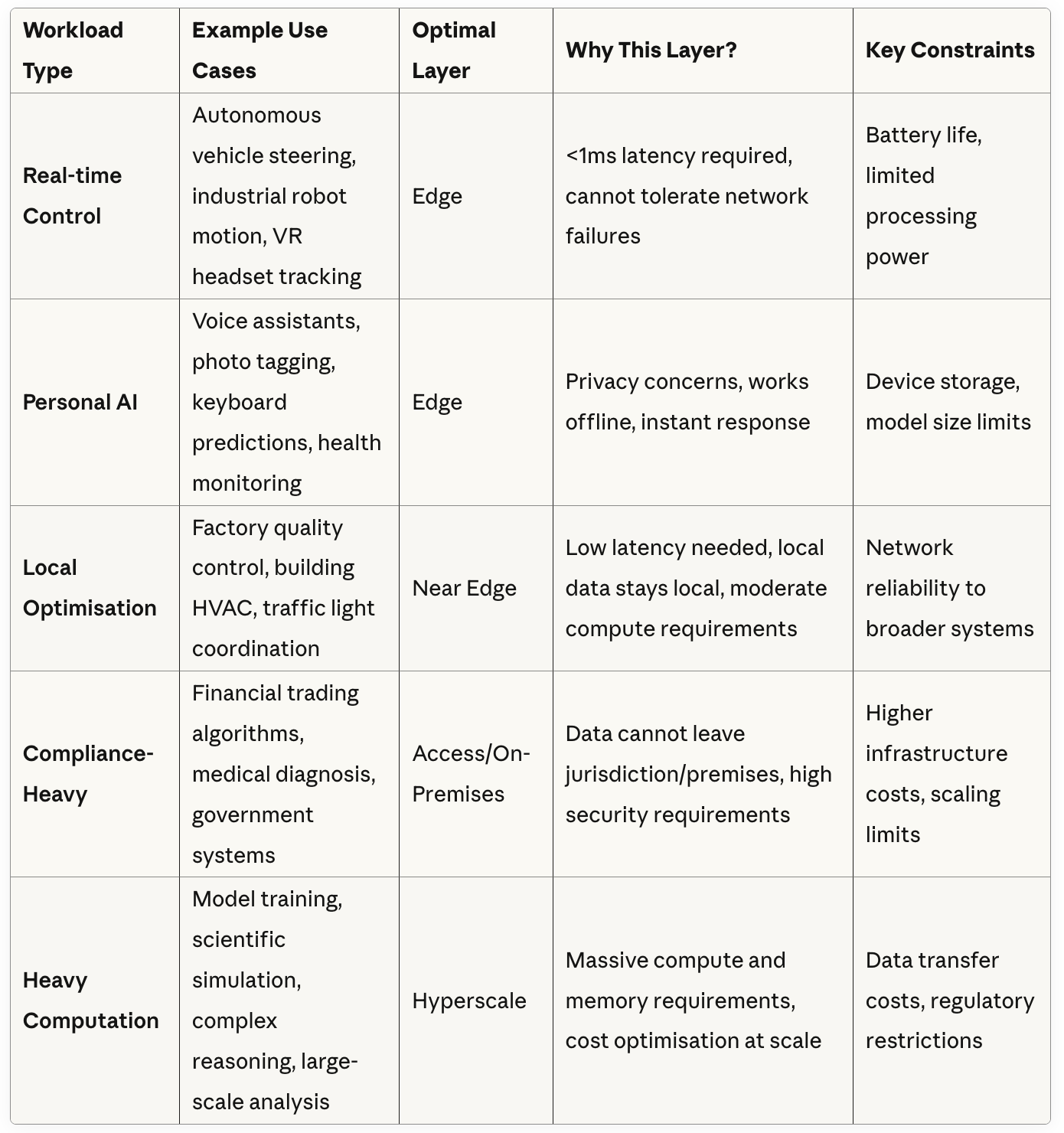

One way to rethink the problem is through what might be called ‘The Compute Gradient’.

Our claim: inference shouldn’t be assumed “in the cloud” or even “mostly in the cloud.” It should be placed along a gradient—dynamically—based on policy and physics: latency budgets, privacy/regulatory constraints, data gravity, link quality/cost, power, and failure tolerance.

“Programmable” means you can move workloads, split them, or fail them over, at build-time and at run-time.

Edge: Your phone, headset, or car runs models locally. Instant response and private, but power-limited.

Near edge: A cell tower or factory gateway processes streams nearby. Low delay, modest compute.

Access: The enterprise edge and co-location. A server rack in a hospital or on a trading floor keeps data on-site but is costly to run.

Regional hubs: A city-level data centre. A good balance of compliance and speed, with medium capacity.

Hyperscale: The vast cloud mega-centre. Huge power and the latest chips, but farther away and pricier to move data to.
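One way to picture "programmable" placement is a small policy function that walks the gradient from closest to farthest and returns the first tier that meets a request's constraints. The sketch below is illustrative only: the `Tier` and `Request` types, the `place` function, and every number (round-trip times, model sizes, which tiers keep data on-site) are assumptions made up for the example, not figures from the essay.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float      # assumed typical network round trip
    max_model_gb: float       # assumed largest model the tier can host
    keeps_data_on_site: bool  # assumed: raw data never leaves the premises


# Ordered from closest to farthest. All values are placeholders.
GRADIENT = [
    Tier("edge", 0, 4, True),
    Tier("near_edge", 5, 16, False),
    Tier("access", 10, 80, True),
    Tier("regional", 30, 400, False),
    Tier("hyperscale", 80, 4000, False),
]


@dataclass(frozen=True)
class Request:
    latency_budget_ms: float
    model_gb: float
    must_stay_on_site: bool


def place(req: Request, unavailable: FrozenSet[str] = frozenset()) -> Optional[Tier]:
    """Return the closest tier that satisfies the request, or None."""
    for tier in GRADIENT:
        if tier.name in unavailable:
            continue  # run-time failover: skip tiers that are down
        if tier.round_trip_ms > req.latency_budget_ms:
            continue  # physics: too far away for the latency budget
        if tier.max_model_gb < req.model_gb:
            continue  # capacity: the model does not fit here
        if req.must_stay_on_site and not tier.keeps_data_on_site:
            continue  # policy: data may not leave the premises
        return tier
    return None


small_private = Request(latency_budget_ms=20, model_gb=2, must_stay_on_site=True)
big_batch = Request(latency_budget_ms=200, model_gb=1000, must_stay_on_site=False)

print(place(small_private).name)                                 # closest tier that fits
print(place(small_private, unavailable=frozenset({"edge"})).name)  # failover to next fit
print(place(big_batch).name)                                     # only the largest tier fits
```

The same function covers both build-time placement (call it once per workload) and run-time failover (call it again with an updated `unavailable` set). A real scheduler would also weigh link cost and power, which this sketch leaves out.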

Distributed and federated approaches will cut across the gradient. Instead of pulling all data into the cloud, models train or infer locally and only share updates. Google’s Gboard keyboard is a familiar example of federated learning, and Apple’s Private Cloud Compute blends on-device and secure server inference. These patterns let intelligence improve collectively without centralising raw data, pushing compute closer to where information originates.

ChatGPT and the Great Bundling

Tanayj • September 22, 2025

Essay•AI•ChatGPT

OpenAI released a really interesting paper last week that went deep into how ChatGPT has been used by its more than 700 million weekly active users over the last year.

About 73% of its usage is now for non-work tasks, with 27% for work tasks. But perhaps most interesting was the graphic below which broke down how people are using ChatGPT for different use cases and different “jobs to be done”. It clearly shows that ChatGPT has become an all-in-one assistant across personal and work tasks and multiple jobs to be done.

Some of these jobs to be done required other websites or tools to serve the same need, and some of these jobs to be done are things where there wasn’t a good parallel prior to the advancements in LLMs. Let’s discuss further.

The bundle that is ChatGPT

“There are only two ways to make money in business. You can bundle, or you can unbundle.” — Jim Barksdale.

Today, it’s clear that ChatGPT is basically used as an all-in-one tool that bundles and absorbs many jobs that used to live in separate products.

Some of the top use cases of ChatGPT per the chart are:

Practical Guidance (28.3%), which includes brainstorming ideas, how-to help on aspects of life such as fitness and self-care, and tutoring or teaching. People previously relied on sites such as YouTube and Reddit for the former, and on YouTube, Khan Academy, and Chegg for the latter, but none of these were personalized or adaptable to any question.

Writing and editing (28.1%), including drafting posts and emails, and editing or summarizing existing text. Some of this was previously handled by tools like spellcheck and Grammarly, but parts of it (first drafts from scratch) really weren’t possible or accessible before.

Seeking Information (21.3%), which is very clearly a form of search and information retrieval, for which people relied on Google and on specific vertical blogs and forums.

Below is a version of the chart showing what various tools and products ChatGPT can “substitute” across the various use cases by fulfilling the job to be done itself.

Bundling wins here for simple reasons. One interface replaces a pile of tabs/apps, which cuts switching costs and makes the next step instant. A single memory carries context across jobs, so the draft you wrote informs the summary you ask for, which informs the email you send. Coverage matters too. ChatGPT is good enough across many jobs in the same session, which is how real work and life actually happen, so if you only occasionally need a given use case, you never have to bother finding the best product for it. And then of course, speed improves because the model does the next action (giving you the answer) rather than handing you links.

But it is not just that ChatGPT can perform many jobs other tools could do. It enables jobs that are bigger and more fluid because of how LLMs work. The paper frames usage as Asking, Doing, and Expressing. Asking is about getting information or advice. Doing is asking the model to produce an output you can plug into a process. Expressing is sharing views or feelings.

Across the sample, about 49% of messages are Asking, 40% are Doing, and 11% are Expressing. For work messages, about 56% are Doing, and most of those are writing tasks. By late June 2025 the split was 51.6% Asking, 34.6% Doing, and 13.8% Expressing.

Abundant Intelligence

Sam Altman • Sam Altman • September 23, 2025

Essay•AI•Compute•Infrastructure•Semiconductors

Growth in the use of AI services has been astonishing; we expect it to be even more astonishing going forward.

As AI gets smarter, access to AI will be a fundamental driver of the economy, and maybe eventually something we consider a fundamental human right. Almost everyone will want more AI working on their behalf.

To be able to deliver what the world needs—for inference compute to run these models, and for training compute to keep making them better and better—we are putting the groundwork in place to be able to significantly expand our ambitions for building out AI infrastructure.

If AI stays on the trajectory that we think it will, then amazing things will be possible. Maybe with 10 gigawatts of compute, AI can figure out how to cure cancer. Or with 10 gigawatts of compute, AI can figure out how to provide customized tutoring to every student on earth. If we are limited by compute, we’ll have to choose which one to prioritize; no one wants to make that choice, so let’s go build.

Our vision is simple: we want to create a factory that can produce a gigawatt of new AI infrastructure every week. The execution of this will be extremely difficult; it will take us years to get to this milestone and it will require innovation at every level of the stack, from chips to power to building to robotics. But we have been hard at work on this and believe it is possible. In our opinion, it will be the coolest and most important infrastructure project ever. We are particularly excited to build a lot of this in the US; right now, other countries are building things like chip fabs and new energy production much faster than we are, and we want to help turn that tide.

Over the next couple of months, we’ll be talking about some of our plans and the partners we are working with to make this a reality. Later this year, we’ll talk about how we are financing it; given how increasing compute is the literal key to increasing revenue, we have some interesting new ideas.

Tech can fix most of our problems (if we let it)

Noahpinion • September 25, 2025

Essay•AI•Disinformation•ConspiracyTheories•ContentModeration

Photo by Dieter Rabich via Wikimedia Commons

The other day, I was invited to a dinner with some foundation people, technologists, and journalists, to talk about AI. At dinner, the topic of AI-powered disinformation came up, and someone suggested that AI itself could provide a solution. One of the guys at the dinner thundered: “We can’t just tech our way out of the problem!”

Until then I had hung back and stayed out of the conversation, preferring to listen to the arguments of people who knew more about the topic than I did. But the bald assertion that there’s no technological solution to the disinformation problem bothered me, especially because it was presented without any supporting evidence or rationale. I reminded him that Costello et al. (2024) found that just talking to an AI helped reduce belief in conspiracy theories:

Widespread belief in unsubstantiated conspiracy theories is a major source of public concern…Here, we…ask whether it may be possible to talk people out of the conspiratorial “rabbit hole” with sufficiently compelling evidence…

Across [our] two experiments, 2190 Americans articulated—in their own words—a conspiracy theory in which they believe, along with the evidence they think supports this theory. They then engaged in a three-round conversation with the LLM GPT-4 Turbo, which we prompted to respond to this specific evidence while trying to reduce participants’ belief in the conspiracy theory (or, as a control condition, to converse with the AI about an unrelated topic)…

The treatment reduced participants’ belief in their chosen conspiracy theory by 20% on average. This effect persisted undiminished for at least 2 months; was consistently observed across a wide range of conspiracy theories, from classic conspiracies involving the assassination of John F. Kennedy, aliens, and the illuminati, to those pertaining to topical events such as COVID-19 and the 2020 US presidential election; and occurred even for participants whose conspiracy beliefs were deeply entrenched and important to their identities. Notably, the AI did not reduce belief in true conspiracies. Furthermore, when a professional fact-checker evaluated a sample of 128 claims made by the AI, 99.2% were true, 0.8% were misleading, and none were false. The debunking also spilled over to reduce beliefs in unrelated conspiracies, indicating a general decrease in conspiratorial worldview, and increased intentions to rebut other conspiracy believers.

In fact, this is just one possible way that AI could help fix the disinformation problem. AI could also act as a scalable content moderator — what I’ve referred to as a “Digital Walter Cronkite”. Or AI assistants could help people figure out when someone online is lying to them.

Of course, we don’t yet know if those solutions will work at scale, especially if AI gets leveraged to produce more disinformation in the first place. Nor do we know how to get regular people to use the AI solutions in the real world — you can’t just order everyone to talk to ChatGPT every time they hear a conspiracy theory. But while technological solutions are still speculative, the research literature is encouraging, and it makes absolutely no sense to dismiss the possibility that AI will eventually function more as an engine of truth in our society than as an engine of lies.

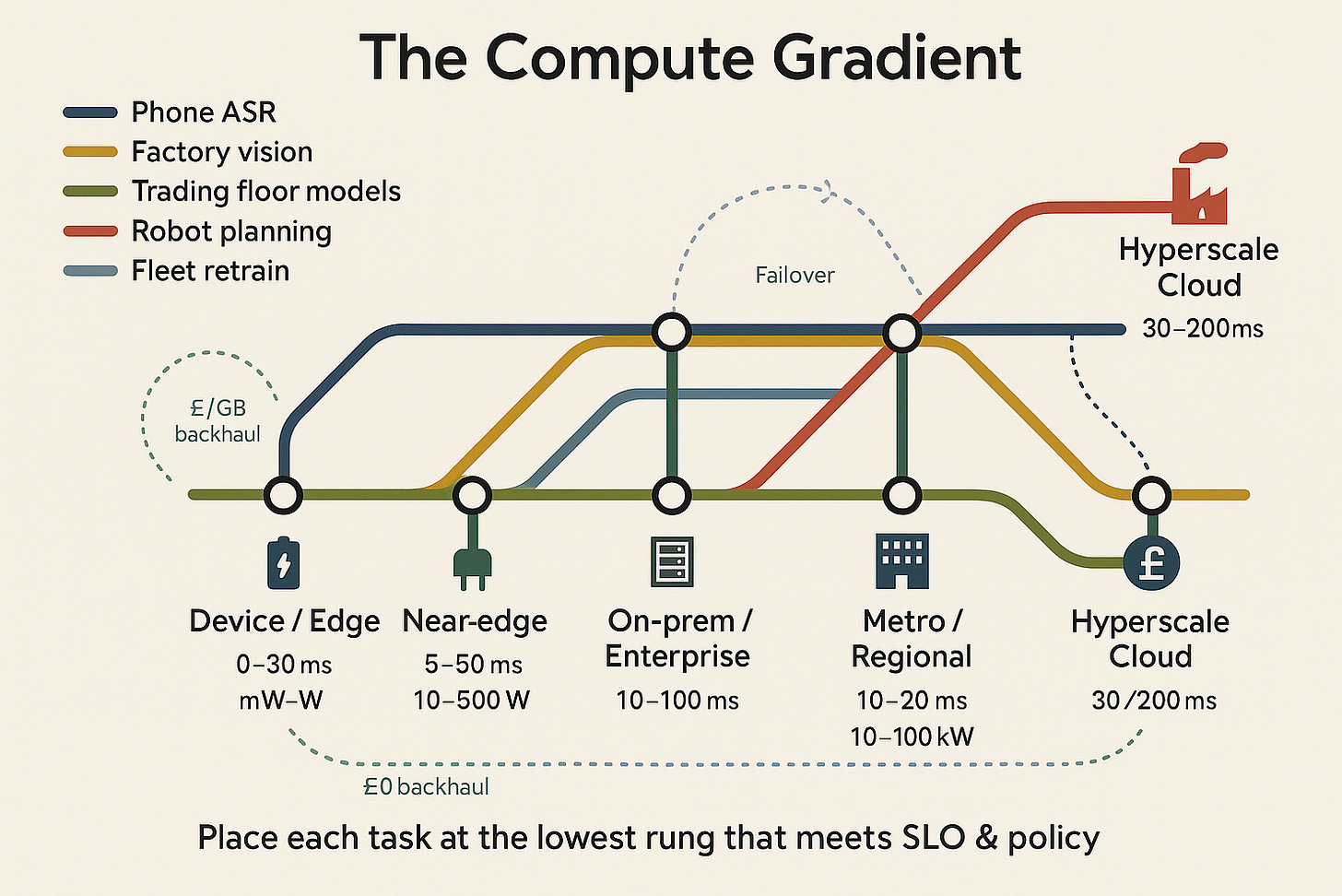

What will happen to the hundreds of unicorns who haven’t raised since 2021?

Peter Walker - Linkedin • September 21, 2025

Venture•Essay

Overview

The post examines how “unicorn” creation and broader startup activity have reset from the 2021 boom to a more selective, fundamentals-first market. It argues that unicorn valuations have become rarer and later-stage, while exits increasingly come via acquisitions rather than IPOs. As one line puts it, “Unicorns are no longer easy to find,” reflecting a shift away from the ZIRP-era surge. (linkedin.com)

Unicorn creation and where the 2021 cohort stands

The pace of new unicorns has slowed markedly compared with 2021’s glut: in the first half of 2024, an average of 21 companies per quarter (US, on one large cap-table platform’s dataset) reached $1B+, versus 333 new unicorns in 2021. The newer unicorns skew later-stage, are slightly more diversified across industries, and remain West Coast–weighted. (linkedin.com)

A retrospective on unicorns minted through 2021 shows a bifurcated picture: about half have not raised since their unicorn round (many stockpiled cash in 2021 and cut burn), and among those that did raise later, nearly half took down rounds—evidence that many were “over their skis” on valuation. (buzzsprout.com)

A complementary analysis of pre‑2022 unicorns tracked 14 known shutdowns and 78 exits (M&A or IPO). Of the remaining cohort, roughly 29% raised some financing post‑2021, but only about 21% managed a true “primary” up-the-stack round with new investors; down rounds accounted for just under 13% of the total, and nearly 45% of post‑2021 unicorn rounds were down rounds—exceptionally high by historical standards. (linkedin.com)
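The two down-round figures in the paragraph above describe the same rounds against different denominators. A quick check, assuming "the total" means the remaining cohort and treating the rounded percentages as exact (variable names are illustrative):

```python
# Rounded shares from the paragraph above, both relative to the remaining cohort.
raised_post_2021 = 0.29   # raised some financing after 2021
down_round_total = 0.13   # "just under 13%" took a down round

# Down rounds as a share of only those companies that raised after 2021.
down_share_of_rounds = down_round_total / raised_post_2021

print(f"{down_share_of_rounds:.0%} of post-2021 rounds were down rounds")
```

The ratio lands at about 45%, consistent with the "nearly 45%" figure cited.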

Fundraising patterns and sector signals

Later-stage software fundraising medians in 2024 placed a significant share of Series D/E+ rounds at or above unicorn territory, but the distribution was wide and highly selective. Median post‑money valuations cited for software: ~$52M (Series A), ~$140M (Series B), ~$238M (Series C), ~$637M (Series D), and ~$1.2B (Series E+), underscoring that only stronger companies are clearing late‑stage markups. (linkedin.com)

At the earlier stages, 2024 Series A activity clustered in SaaS (including AI), biotech, and healthtech by volume. AI‑SaaS led median valuations; renewables led median cash raised—signaling investor appetite for capital‑intensive categories with clearer unit economics or secular tailwinds. (linkedin.com)

Liquidity: M&A thaw vs. IPO drought

Startup M&A has been the practical outlet for liquidity: Q3 2024 saw 163 acquisitions within one large private‑market dataset—the highest since mid‑2022—and 2024 ended with about 590 acquisitions on that platform, a 10–15% YoY increase. However, the post stresses that many deals are small and do not necessarily generate meaningful proceeds for common shareholders. (linkedin.com)

By contrast, the IPO window remained largely shut through 2024, keeping many late‑stage companies private longer and forcing boards to weigh trade‑offs between down‑rounds, structured growth financing, or strategic sales. (linkedin.com)

Implications

For founders: Expect higher bars for traction (e.g., stronger ARR thresholds at Series A), rigorous capital efficiency, and realistic pricing as investors triangulate valuation to durable growth and margin structure. Sector selection matters: categories like AI‑SaaS, cybersecurity, and renewables continue to command premium medians or larger checks, but scrutiny of metrics is intense. (linkedin.com)

For investors: The backlog of 2021‑vintage unicorns implies continued recapitalizations, down rounds, or consolidation. Secondary and M&A markets are functioning release valves, but returns will vary widely by entry price and structure. A disciplined approach to follow‑ons—paired with opportunistic acquisition theses—appears prudent in 2025. (buzzsprout.com)

Key takeaways

Unicorns are being minted at a far slower, later‑stage cadence than in 2021; creation is now the exception, not the norm. (linkedin.com)

A large share of pre‑2022 unicorns have either not returned to market or have accepted valuation resets; shutdowns and selective exits are accumulating. (linkedin.com)

With IPOs scarce, M&A is up and serving as the primary path to liquidity, though not all deals deliver value for employees and early holders. (linkedin.com)

Sector dispersion persists: AI‑SaaS leads valuations; renewables, hardware‑adjacent, and cybersecurity show strength in check sizes or investor interest; fundamentals trump narratives. (linkedin.com)

AI

Reddit Wants a Lot More Money From AI Companies

Nymag • John Herrman • September 22, 2025

AI•Data•Reddit

For years now, on a wide range of topics, Google Search has been sending lots of people to Reddit. At first, this wasn’t the company’s idea: Users, unimpressed by spammy, cluttered, inauthentic results, started appending the site’s name to their queries on their own. Gradually, both Google and Reddit embraced this dynamic. For Google, that meant giving more visibility to Reddit posts by default; for Reddit, it mostly just meant welcoming a flood of traffic, which was timed nicely for its IPO: The company’s stock price has increased by more than 400 percent since its listing in 2024, while its revenue has doubled.

As this co-dependence became outwardly obvious, another aspect of their relationship — this one more formal — was being negotiated in private. Google didn’t just need Reddit to fill out its search product. It needed Reddit to train its AI models and to provide those models with fresh material to retrieve, summarize, and synthesize once they were deployed in products. Reddit’s formal licensing deal with the company — like its deals with OpenAI — was carefully portrayed as separate from the companies’ alignment on Search. As Google’s AI and search products continue to merge, this division may cease to make sense. At the same time, as Reddit’s role as a source of at least relatively human online content becomes even starker, the platform is looking for an adjustment to its terms. From Bloomberg:

Reddit Inc. is in early talks to strike its next content-sharing agreement with Alphabet Inc.’s Google, aiming to extract more value from future deals now that its data plays a prominent role in search results and generative AI training.

Reddit, more than a year and a half after its first data-sharing deal with Google for a reported $60 million, is in talks for deeper integration with Google’s AI products, according to executives familiar with the discussions.

In short, Google uses Reddit to prop up its wildly profitable but conspicuously shoddy search product, the proceeds from which go to building AI tools, which also depend on Reddit but will likely send it less traffic than Search has. A one-off deal this time around would likely be much larger, and the company, which recently expressed its support for a usage-based AI licensing standard, is also considering “dynamic pricing, where the social platform can be paid more as it becomes more vital to AI answers.”

Nvidia and OpenAI are mostly performing for the algorithm

Ft • September 22, 2025

AI•Funding•Nvidia•OpenAI•DataCenters

Nvidia and OpenAI outlined a strategic partnership to deploy at least 10 gigawatts of Nvidia systems — representing millions of GPUs — to build OpenAI’s next-generation AI infrastructure. The plan is framed by a letter of intent under which OpenAI will purchase Nvidia systems for large-scale training and inference while Nvidia, in parallel, intends to invest up to $100 billion in OpenAI as each gigawatt of capacity is brought online.

The first phase targets delivery in the second half of 2026 on Nvidia’s Vera Rubin platform. An initial $10 billion tranche is tied to completion of the first gigawatt, with subsequent investments scheduled progressively alongside further deployments. Nvidia’s stake is designed to be non‑controlling, while OpenAI designates Nvidia as a preferred strategic compute and networking partner and the two companies commit to co‑optimise software and hardware roadmaps for efficiency and scale.

The arrangement seeks to ease compute bottlenecks that have constrained the training and serving of frontier models. It complements existing collaborations with hyperscalers and infrastructure providers, and is intended to accelerate the rollout of new capabilities to hundreds of millions of users and enterprise developers. The phased structure aligns funding with infrastructure readiness, power availability and supply-chain cadence, while concentrating most of the buildout in high‑capacity data center locations.

Beyond financing mechanics, the deal’s architecture underscores how AI’s center of gravity is shifting to capital‑intensive “AI factories” that integrate chips, networking, power and software stacks. It also highlights the feedback loop between narrative and market dynamics: announcements at this scale shape perceptions of technical leadership and strategic momentum at the same moment they provision the compute needed for the next models.

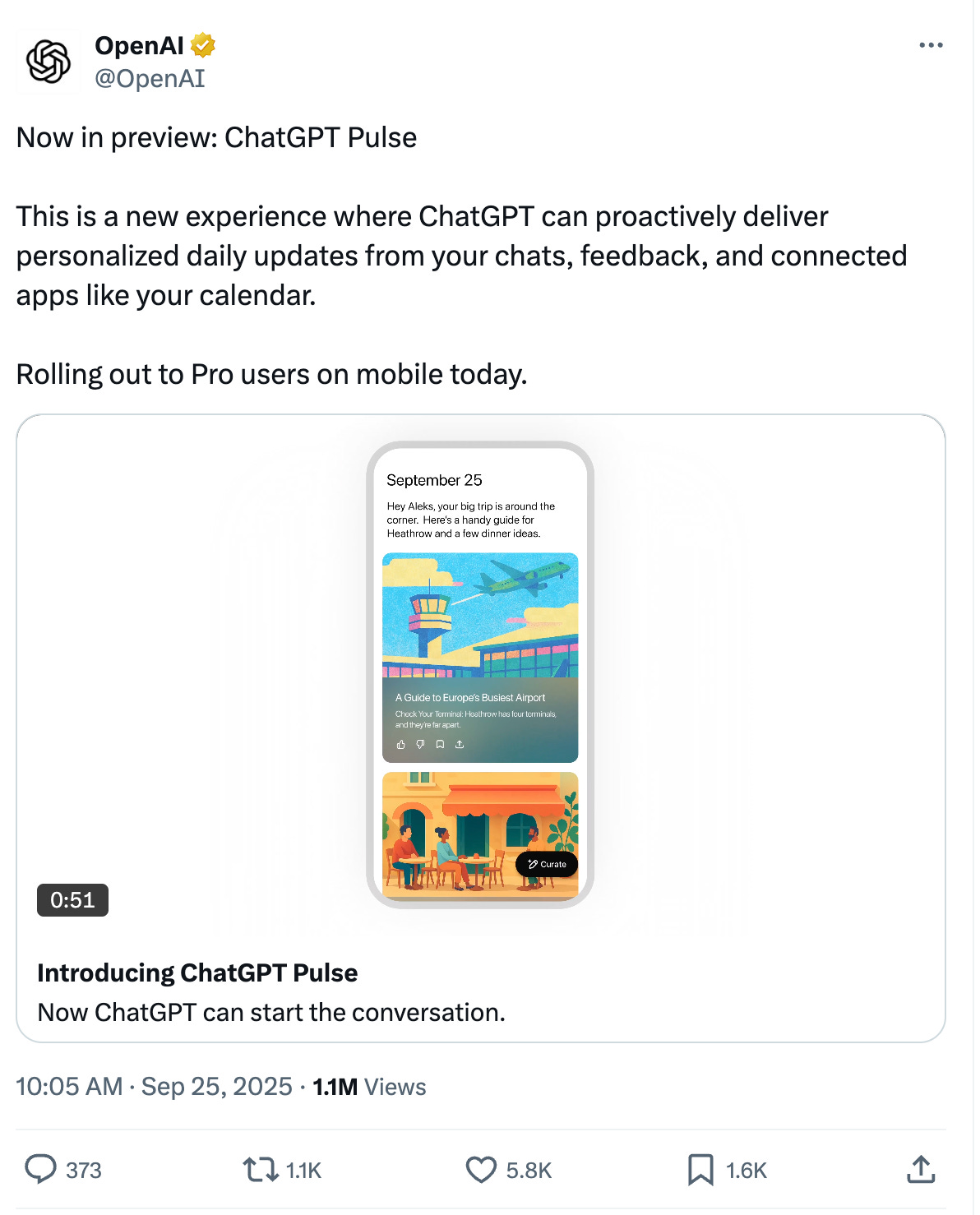

Introducing ChatGPT Pulse

Youtube • OpenAI • September 25, 2025

AI•Tech•ChatGPTPulse•PersonalizedUpdates•Mobile

We’re releasing a preview of ChatGPT Pulse to Pro users on mobile. Pulse is a new experience where ChatGPT proactively does research and delivers personalized updates based on your chats, feedback, and connected apps like your calendar. These updates appear as topical visual cards you can scan quickly or open for more detail, helping each day start with a focused set of information. We’ll learn from early use before broader rollout, with the goal of making it available to everyone.

Made for you once a day, every day: ChatGPT can now do asynchronous research on your behalf. Each night, it synthesizes information from your memory, chat history, and direct feedback to determine what’s most relevant to you, then delivers personalized, focused updates the next day. Examples include follow‑ups on topics you discuss often, quick dinner ideas for that evening, or next steps toward a longer‑term goal. You can connect Gmail and Google Calendar to provide context for more relevant suggestions; these integrations are off by default and can be turned on or off anytime. Topics shown in Pulse pass safety checks.

You decide what shows up. You can ask ChatGPT what to research for you each day and tap “curate” to request future editions—like a Friday roundup of local events or a focus on a specific topic. Give quick feedback with a thumbs up or thumbs down, and view or delete your feedback history. Over time, your guidance makes Pulse more personal and useful.

Meant to work for you, not to keep you scrolling: Every morning, ChatGPT delivers a curated set of the most relevant updates so you can get back to what matters. Each update is available for that day only unless you save it as a chat or ask a follow‑up, which adds it to your conversation history. Expand any update to dive deeper, request next steps, or save it for later.

Availability and controls: Pulse is currently a product preview available to Pro users on iOS and Android (not on web or desktop). It requires Memory to be turned on and can be switched off anytime in settings. You can also remove Pulse from the main conversation view while keeping it accessible from the sidebar. Pulse items expire after a day unless saved; interactions may contribute to model improvement only if you have “Improve the model for everyone” enabled.

What’s next: Pulse is the first step toward a more proactive ChatGPT that connects conversation, memory, and your apps to research, plan, and take helpful actions on your behalf at the right moments throughout the day.

We’re Finally Getting a Clearer Look at the Future of Wearables

Nymag • John Herrman • September 25, 2025

AI•Tech•Wearables•SmartGlasses•RayBanDisplay

Meta CEO Mark Zuckerberg, whose company has invested tens of billions of dollars in headset and glasses technology, has lately been making the argument that we’re moving toward a post-smartphone world. “The promise of glasses is to preserve this sense of presence that you have with other people,” he said earlier this month, appealing to a general feeling of smartphone malaise and adding that he thinks “we’ve lost it a little bit with phones, and we have the opportunity to get it back with glasses.” iPhone designer Jony Ive, who recently teamed with Sam Altman at OpenAI, pitched his new AI hardware as an antidote to the “unintended consequences” of his previous invention. And Apple, whose CEO recently said it was “difficult to see a world” in which a shift to new gadgets replaces the iPhone, perhaps aided by AI, is nonetheless deeply invested in the possibility, with one augmented-reality headset under its belt and new slimmer glasses reportedly on the way.

In the tech industry’s first telling, the post-smartphone world is a simple question of what and when: glasses? Watches? Pins? Armbands? Implants? It’s portrayed as a simple matter of progress — in consumer technology, things must be replaced by newer and better things — but also as a reaction to the burdens and distractions of the previous great gadget, from which new gadgets will set us free.

A survey of the post-phone landscape as it exists, though, reveals a complication in this consumerist liberation story. Someday, a new gadget may usher us into the post-smartphone world; in the meantime, the industry will have us trying everything else at once: on our faces, in our ears, around our necks, and on our appendages. Our phones — and the always-on, data-and-attention-hungry logic they represent — aren’t being replaced. They’re being extended.

Take Meta’s new Ray-Ban Display, a pair of regularish glasses with cameras, microphones, a screen built into the lenses, and a wristband for gesture input. The product is getting good reviews, all of which understand it, at least in its current form, as a two-piece “extension” of the smartphone: a tool you can use to take photos, get directions, check messages, or interact with a chatbot without interacting with the phone that remains in your pocket. After the first wave of AI-centric “post-phone” hardware devices flopped, the ones that started to gain traction, including the Fitbit-like Bee pin, were effectively wearable phone accessories, recording users’ surroundings for summarization and recall in a smartphone app. At more than 10 years old, the Apple Watch is, for most wearers most of the time, still a device used with a smartphone on one’s person; the same goes for Android smartwatches from companies like Samsung.

OpenAI and Databricks Strike $100 Million Deal to Sell AI Agents

Wsj • September 25, 2025

AI•Tech•OpenAI•Databricks•AIAgents

OpenAI and Databricks have agreed to a $100 million partnership aimed at accelerating enterprise adoption of AI agents. The deal centers on making it easier for companies to build, deploy, and manage task‑specific agents powered by OpenAI’s flagship model while drawing on data that already lives inside Databricks. Customers would be able to connect internal datasets, set governance and security controls, and monitor usage and performance from within the Databricks environment, while tapping OpenAI’s agentic capabilities for functions such as customer support, sales assistance, analytics automation, and back‑office workflows.

The partnership is framed as a go‑to‑market alliance as much as a technical integration. It is designed to bundle model access, tooling, and enterprise guardrails into a package that can be sold and supported jointly, with an emphasis on compliance, reliability, and cost predictability for large organizations. By aligning Databricks’ data and governance stack with OpenAI’s agents, the companies are pitching a faster path from proof‑of‑concept to production, particularly for regulated industries that require auditability and centralized controls. The goal is to move beyond experimental chatbots toward measurable productivity gains—automating repetitive tasks, summarizing and acting on business data, and orchestrating multi‑step processes under human oversight—while giving IT teams levers to manage identity, permissions, and spend at scale.

OpenAI is upping the ante massively

Ft • September 25, 2025

AI•Tech•OpenAI•Superintelligence•ProductStrategy

Overview

The piece argues that OpenAI is deliberately projecting a bold, almost theatrical confidence to reassure partners, developers, and investors that it remains the standard-bearer in the race toward superintelligence. This “calculated display of self-confidence” is less about a single product drop than a coordinated signal: OpenAI wants the market to believe it still sets the pace on capability, safety, and commercialization, and that others are reacting to its moves rather than the reverse. In a sector where perception quickly shapes distribution, capital, and talent flows, signaling leadership becomes a strategic asset in itself—especially as rivals tout their own breakthroughs and as customers weigh long-term platform bets.

What “upping the ante” looks like

OpenAI’s posture likely includes a faster cadence of model updates and demos, deliberately visible hiring and research milestones, and high-touch outreach to enterprises and developers. It pairs capability headlines with assurances about alignment and safeguards, framing progress as both ambitious and responsible. The message: OpenAI can push toward superintelligence while managing risks, and it can productize advances into tools that matter for productivity, creativity, and software development. The choreography—roadmaps, staged showcases, and ecosystem incentives—aims to anchor mindshare, reduce churn to alternatives, and keep the most coveted researchers and builders in its orbit.

Why the signal matters now

Capital intensity is soaring: access to compute, high-end hardware, and energy is becoming a gating factor. Signaling momentum helps secure multi-year commitments from cloud partners and enterprise customers.

Talent is mobile: the world’s small pool of frontier researchers and safety experts pays attention to who appears to be winning. Confidence and clear direction can become recruiting force multipliers.

Platform lock-in is forming: the first generation of AI-native applications is choosing model providers and toolchains. Perceived leadership influences those sticky decisions.

Regulatory narrative is fluid: demonstrating seriousness about safety and governance can shape how policymakers and the public interpret rapid capability gains.

Strategic trade-offs and risks

This posture carries hazards. Overpromising invites credibility risks if timelines slip, while escalating ambition can attract sharper regulatory scrutiny on safety, copyright, data sourcing, and market power. A visible push toward superintelligence intensifies the “capabilities vs. safeguards” tension, raising questions about evals, red-teaming, and public transparency. Operationally, racing ahead can concentrate technical risk (e.g., scaling bottlenecks, reliability regressions) and business risk (e.g., cost of inference vs. price compression), especially if competitors undercut on price or differentiate on modality, latency, or privacy. There is also ecosystem risk: if the platform appears too dominant or closed, developers may hedge with multi-model strategies.

Implications for stakeholders

Enterprises: Expect more opinionated platforms and prebuilt workflows, with deeper integrations that reduce switching costs. Procurement will weigh governance assurances—auditability, content provenance, and incident response—alongside raw performance.

Developers and startups: Generous credits, tooling, and distribution programs are likely to intensify. But dependency risk rises; designing for portability (abstractions, open formats, eval suites) becomes prudent.

Researchers and safety community: Increased transparency about model behavior, deployment gating, and external evaluations will be essential to sustain the “responsible leadership” claim as capabilities scale.

Investors and partners: The signal seeks to justify continued, possibly expanding, capital outlays for compute and infrastructure. Watch for long-term offtake agreements, energy partnerships, and specialized hardware strategies.

Key takeaways

OpenAI is using narrative discipline—public demos, roadmap framing, and ecosystem incentives—to reinforce its place at the frontier.

The goal is to persuade the market that it leads not only in capability but also in safety and commercialization, keeping capital and talent aligned.

This strategy competes in perception as much as in performance; credibility depends on execution, reliability, and measurable safeguards.

Stakeholders should prepare for faster release cycles, deeper platform ties, and a sharper regulatory spotlight as the race toward superintelligence accelerates.

Bottom line

The article’s core thesis is that OpenAI’s confident stance is a strategic signal designed to shape the field in its favor—locking in developers, calming enterprise risk officers, and deterring rivals—while arguing it can accelerate toward superintelligence without abandoning guardrails. Whether that signal endures will hinge on shipping dependable systems at scale, proving safety diligence, and delivering economics that make frontier AI sustainable for customers and the broader ecosystem.

Google DeepMind unveils new robotics AI model that can sort laundry

Ft • September 25, 2025

AI•Tech•Robotics•Reasoning•GeneralPurposeAI

Overview

A new robotics AI model is presented as a step-change in machine reasoning, aimed at making general-purpose machines more practically useful in everyday contexts. Framed around a tangible benchmark—competently sorting laundry—the system signals progress from narrowly scripted behaviors to goal-directed reasoning that can adapt to varied objects, textures, and environments. The emphasis is on improving how robots interpret tasks, plan multi-step actions, and adjust to uncertainty, rather than merely increasing motor precision.

What’s New

Focus on reasoning-first capabilities: identifying items, inferring categories (e.g., colors, fabrics), and sequencing actions (pick, sort, place) across non-ideal conditions like clutter and occlusion.

General-purpose orientation: designed to transfer skills beyond a single chore, suggesting applicability to home assistance, light industrial kitting, and logistics scenarios.

Push toward utility: the core promise is dependable, repeatable performance on tasks that require judgment calls, not just rote manipulation.

Why It Matters

Improved reasoning narrows the gap between perception and action in real-world settings. Domestic tasks like laundry sorting are deceptively complex: they require robust visual understanding, category inference, and error recovery when items slip, deform, or are misclassified. By addressing these problems, the model demonstrates capabilities that can generalize to many “long tail” tasks, strengthening the case for robots as practical helpers rather than research curiosities.

Potential Use Cases

Household assistance: laundry, dish sorting, pantry organization, and tidying tasks that demand consistent categorization and gentle handling.

Warehousing and fulfillment: item picking, returns processing, and mixed-bin sorting where variability and speed matter.

Elder care and accessibility: routine assistance that reduces cognitive and physical load for users in home environments.

Challenges and Open Questions

Reliability at scale: can reasoning remain robust across diverse homes, lighting conditions, and garment types without extensive re-tuning?

Safety and trust: ensuring fail-safes for contact-rich manipulation and transparent error handling.

Cost and integration: embedding advanced reasoning in affordable hardware with efficient on-device or low-latency edge compute.

Data and adaptation: maintaining performance as inventories and household contexts change, including continual learning without catastrophic forgetting.

Key Takeaways

Reasoning-centered robotics is shifting the focus from narrow demos to adaptable, general-purpose competence.

A “laundry test” highlights practical progress: it blends perception, classification, planning, and dexterous control.

The path to real-world utility hinges on reliability, safety, and cost-effective deployment beyond the lab.

Bain & Co.’s David Crawford: The world is still $800B short to keep pace with AI demand

Youtube • CNBC Television • September 23, 2025

AI•Funding•Semiconductors•DataCenters•Energy

Overview

Bain & Company’s David Crawford argues that global investment is significantly behind the curve needed to meet surging AI demand, estimating an $800 billion shortfall. His central point: the AI stack—from advanced chips and memory to networking, data centers, and power—faces simultaneous constraints, and the bottleneck is no longer a single component but a system-wide capacity gap. The implication is a multi‑year capex cycle in which hyperscalers, semiconductor suppliers, utilities, and specialized infrastructure players must all scale in lockstep to sustain AI adoption.

Where the Shortfall Is Concentrated

Crawford frames the $800B gap as spanning four interdependent layers:

Semiconductors: Leading‑edge GPUs/accelerators, high‑bandwidth memory, packaging, and foundry capacity remain constrained, with demand for training and increasingly inference outstripping planned supply.

Networking and optics: High‑speed switches, optical transceivers, and cabling are critical to move data at scale; underinvestment here can strand expensive compute.

Data centers: Purpose‑built AI facilities require higher power density, advanced cooling, and optimized layouts; build times and permitting add friction even when capital is available.

Power and grid: Reliable electricity—plus transmission, substation upgrades, and, in some cases, on‑site generation—is emerging as the ultimate ceiling for AI growth.

Timelines, Trade‑offs, and Execution Risk

He emphasizes that ramping any single layer without the rest creates diminishing returns. For instance, adding GPUs without sufficient HBM, or memory without adequate networking, leaves capacity idle. Similarly, data center lead times and grid interconnections often span years, extending the time to translate orders into usable AI capacity. Crawford suggests that efficiency improvements—through better model architectures, sparsity, lower‑precision compute, and software optimizations—will help, but they are unlikely to erase the near‑term need for massive physical buildouts.
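The diminishing-returns argument can be illustrated with a toy model in which usable capacity is capped by the scarcest layer. This is only a sketch: the layer names follow the summary above, but every number and both function names (`usable_capacity`, `idle_capacity`) are hypothetical and do not come from Crawford's remarks.

```python
# Toy model: usable AI capacity is capped by the scarcest layer of the stack.
# All figures are illustrative placeholders in arbitrary units, not data
# from the interview.

def usable_capacity(layers: dict) -> float:
    """Return deliverable capacity: the minimum across all layers."""
    return min(layers.values())

def idle_capacity(layers: dict) -> dict:
    """Return how much of each layer sits stranded behind the bottleneck."""
    cap = usable_capacity(layers)
    return {name: value - cap for name, value in layers.items()}

stack = {
    "gpus": 100.0,
    "hbm": 70.0,
    "networking": 80.0,
    "data_centers": 90.0,
    "power": 60.0,
}
print(usable_capacity(stack))        # 60.0 (power is the binding constraint)

stack["gpus"] = 150.0                # add GPUs without touching anything else
print(usable_capacity(stack))        # 60.0 (no gain in usable capacity)
print(idle_capacity(stack)["gpus"])  # 90.0 (stranded GPU capacity)
```

In this simplified view, extra spending on any layer other than the current minimum only increases idle capacity, which is one way to read the case for scaling the layers in lockstep.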

Demand Drivers and the Shape of Spend

Crawford links persistent demand to three flywheels:

Model training at frontier scale as companies race to improve capabilities.

Inference growth driven by enterprise deployments embedded in workflows, not just experimental pilots.

Platform effects from hyperscalers and leading software vendors, which normalize AI usage across industries and regions.

He notes that as inference scales, the mix of spend may shift toward memory, networking, and power—areas that determine throughput and cost per token—while training continues to anchor leading‑edge silicon demand.

Implications for Investors, Operators, and Policymakers

Capital allocation: Expect prolonged capex cycles by cloud providers and chipmakers; second‑order beneficiaries include memory suppliers, substrate/packaging vendors, optical networking, liquid cooling, and power equipment companies.

Supply chain strategy: Vertical partnerships and prepayments/long‑term offtakes could become standard to de‑risk capacity. Firms may co‑develop reference designs that jointly optimize compute, network, and thermal envelopes.

Energy strategy: Utilities and data center operators may pursue dedicated generation (renewables, gas, nuclear SMRs over time) and storage, alongside grid upgrades. Siting decisions will tilt toward power‑abundant regions with favorable permitting.

Policy: Streamlined approvals for power and transmission, incentives for advanced manufacturing, and workforce development in electrical, mechanical, and fabrication trades could accelerate delivery and reduce project risk.

What to Watch Next

Evidence of multi‑year supply agreements across chips, HBM, optics, and power gear.

Data center buildout metrics: MW added, PUE targets, adoption of liquid cooling, and regional siting trends.

Efficiency milestones: Model compression, mixed‑precision breakthroughs, and inference serving improvements that bend unit economics.

Power ecosystem shifts: Co‑location with generation, novel financing for grid assets, and changes in interconnection queues.

Key Takeaways

The headline claim is that global AI infrastructure investment is about $800B below what near‑term demand requires.

Bottlenecks are system‑wide; solving compute alone won’t help without memory, networking, facilities, and power.

The cycle favors integrated planning, earlier supplier commitments, and closer cloud–hardware–energy collaboration.

Policy and permitting pace will materially affect how quickly the gap closes and who captures the value.

OpenAI Unveils Plans for Seemingly Limitless Expansion of Computing Power

Wsj • September 23, 2025

AI•Tech•Stargate•DataCenters•Compute

Overview

OpenAI is showcasing an enormous build‑out of AI computing capacity beginning in the Texas prairie near Abilene, positioning the site as “ground zero” for an unprecedented scale-up of data centers and power. The initiative—backed by partners including Oracle and SoftBank—envisions shepherding as much as $1 trillion into AI infrastructure over the coming years. The Abilene complex is planned as a flagship supercomputing campus within a broader network of new facilities across the United States. According to reporting, OpenAI’s expansion drive targets more than 20 gigawatts (GW) of compute capacity over time, with scenarios that ultimately contemplate 100 GW at multi‑trillion‑dollar cost. (wsj.com)

What’s being built in Texas

The Abilene site comprises a cluster of cutting‑edge data centers—eight buildings at full build‑out—with an initial power envelope near 900 megawatts (MW), anchoring what OpenAI describes as the world’s largest AI training facility. Early racks are being populated with Nvidia GB200‑class systems. Local leaders tout jobs and investment, while residents weigh environmental and land‑use tradeoffs. (wsj.com)

Additional, newly announced sites expand the footprint beyond West Texas: Oracle is developing facilities in nearby Shackelford County and in Doña Ana County, New Mexico, with further locations in the Midwest; SoftBank has broken ground in Lordstown, Ohio, and Milam County, Texas. (washingtonpost.com)

Partners, financing, and evolving scale

The Texas hub is part of a multi‑party push sometimes described under the “Stargate” banner—a joint effort involving OpenAI, Oracle, and SoftBank to reimagine hyperscale AI data centers. Recent updates added 4.5 GW of new capacity under development, lifting the announced pipeline above 5 GW mid‑year. (cnbc.com)

A complementary set of cloud partners is emerging. CoreWeave expanded its supply agreements with OpenAI to as much as $22.4 billion in total 2025 contracts, supporting up to 10 GW of targeted capacity under Stargate and adjacent programs. Such interlinked deals, which also involve major Nvidia orders, highlight both the capital intensity and antitrust scrutiny of AI infrastructure finance. (reuters.com)

Financing at the project level is materializing. A Crusoe‑developed Abilene facility—slated to be OpenAI’s largest—secured $11.6 billion to expand from two to eight buildings, bringing total commitments for that site to roughly $15 billion. Oracle has been named an anchor customer. (thestar.com.my)

Why Texas—and why now

Policymakers and executives frame Texas as an ideal locus for AI build‑outs: abundant land, favorable permitting, expanding transmission, and culturally pro‑infrastructure politics. “Texas is ground zero for AI” because data centers need “abundant, low‑cost energy,” argued Sen. Ted Cruz at a site tour. (washingtonpost.com)

The demand signal is real: OpenAI’s services, including ChatGPT, are seeing hundreds of millions of weekly users, and model training cycles continue to swell in size and duration, making power and silicon the binding constraints on progress. OpenAI has argued that today’s systems are “slow” and “not as smart as we’d like,” and that far more compute is required to unlock new products. (wsj.com)

Hardware pipeline and timelines

Nvidia remains the principal supplier of AI accelerators for these facilities. In parallel, Nvidia announced plans to invest $100 billion toward OpenAI‑aligned data centers and capacity, with the first gigawatt slated for installation in the second half of 2026—an indicator of the multi‑year horizon for bringing gigawatt‑scale compute online. (apnews.com)

As construction proceeds, OpenAI and Oracle report that parts of the Abilene complex are already energized and receiving new racks, even as several additional U.S. sites are moving through assessment, permitting, and early works. (cnbc.com)

Risks, constraints, and community impact

Power availability, grid interconnection queues, water usage, and environmental footprint are the most immediate constraints. Local sentiment is mixed: economic development and job creation are weighed against land, noise, and resource concerns. Balancing rapid scale with community and ecological stewardship will be decisive for timelines. (wsj.com)

Financially, the build relies on complex vendor financing and long‑term offtake contracts across chipmakers, cloud providers, and specialized “neoclouds.” Observers warn that circular investments and concentrated supplier relationships may invite scrutiny from competition authorities. (reuters.com)

Implications

If realized, Texas could anchor a new industrial base for American AI, catalyzing manufacturing, transmission upgrades, and high‑skilled employment while redefining hyperscale data‑center design around liquid cooling and high‑density power. For OpenAI, the strategy aims to remove compute bottlenecks that limit model capability and product reliability, while diversifying beyond a single cloud partner. For policymakers, siting and permitting reforms, energy mix decisions, and regional planning will shape whether 20‑to‑100‑GW ambitions are feasible—and on what timeline. (wsj.com)

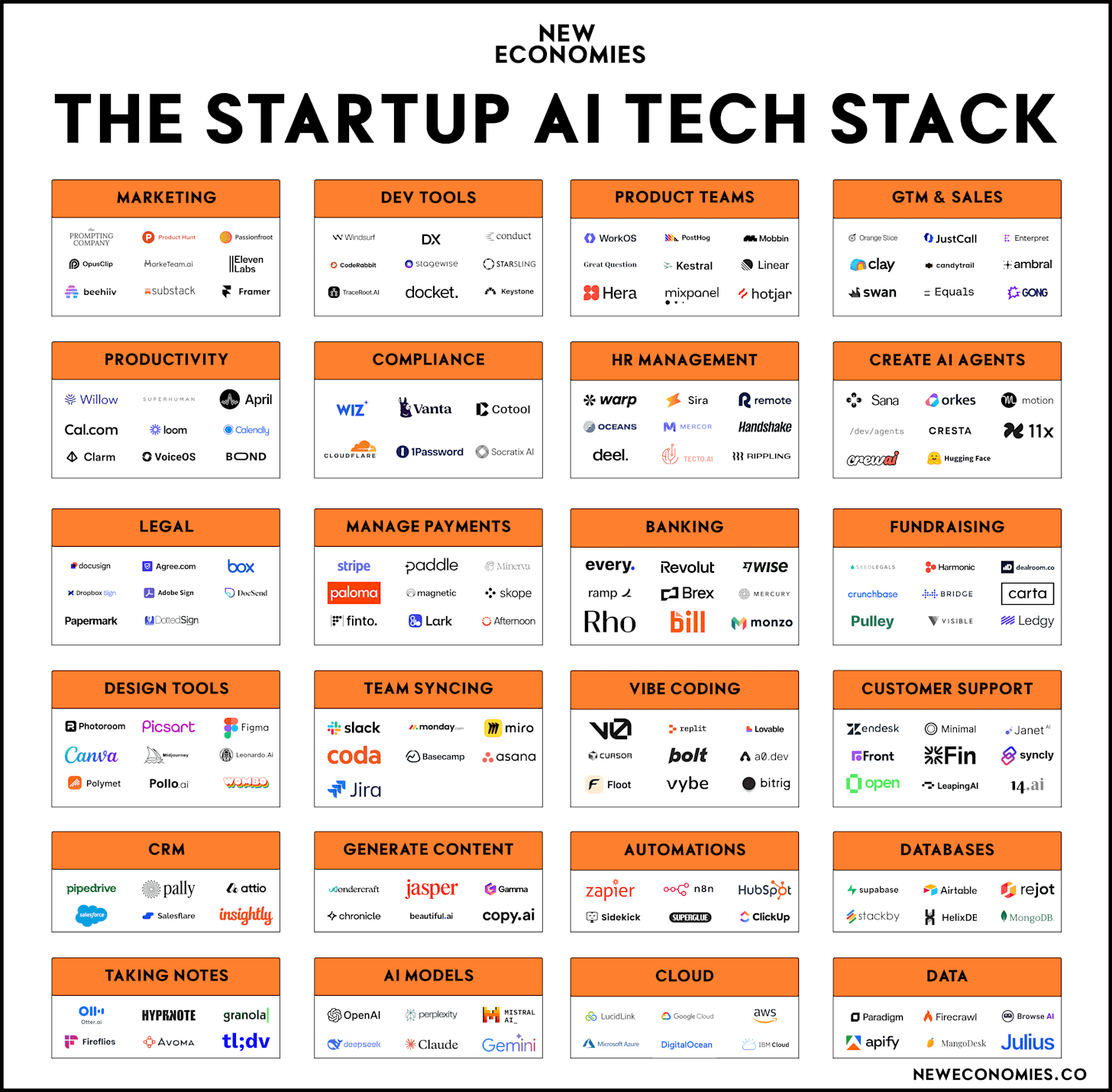

The Startup AI Tech Stack

New economies • Ollie Forsyth • September 22, 2025

AI•Tech•StartupStack•Marketing•DevTools

Hey Founders 👋

With new AI tools emerging every day, the way you build (and rebuild) your tech stack is changing — both in how you adapt and how you use these tools internally and externally.

To help, we’ve published the first comprehensive Startup AI Tech Stack: a guide to the tools that can speed up functions, processes, and daily tasks — and how to put them to work.

Let’s dive in 🚀

The Startup AI Tech Stack Landscape

Our latest landscape highlights the tools startups can use across their tech stack, organized into the following categories:

Row 1: Marketing, Dev Tools, Product teams, and GTM & Sales.

Row 2: Productivity, Compliance, HR Management, and Create AI Agents.

Row 3: Legal, Manage Payments, Banking, and Fundraising.

Row 4: Design Tools, Team Syncing, Vibe Coding, and Customer Support.

Row 5: CRM, Generate Content, Automations, and Databases.

Row 6: Taking Notes, AI Models, Cloud, and Data.

Note: There are countless tools, and not every platform is featured. Share your favorites in the comments if we missed them. Some platforms span multiple categories — we’ve placed them where they fit best.

This is how you can use each tool within your tech stack 👀

Marketing

The Prompting Company: Be found through ChatGPT and similar platforms.

Substack: Publish your newsletters.

Product Hunt: Launch your project and get fans.

Passionfroot: Find creators for your business.

Opus Clips: Automatically create short viral clips.

MarkeTeam: Your AI marketing agents.

Framer: Publish your website.

Eleven Labs: Translate your content into multiple languages.

Beehiiv: Publish your newsletters.

Venture

Anthropic: “$60BN is Cheap”

Youtube • 20VC with Harry Stebbings • September 22, 2025

Venture

Watch this video on YouTube

Binance co-founder Zhao aims to open $10bn portfolio to outside investors

Ft • September 22, 2025

Venture

Firm is already one of world’s biggest crypto investors and is considering converting into external-facing fund

Behind the Numbers: A Closer Look at Carta’s Q2 2025 Private Market Data

Youtube • Carta • September 22, 2025

Venture

Watch this video on YouTube

More Thoughts on the Existential Crisis in Seed: One Question, Two Views of the World

Nextview • Rob Go • September 23, 2025

Venture

There has been a lot of additional chatter since I shared my post on the existential crisis facing seed investors. There are too many responses to share, but I’ll include links to a few of my favorites at the end.

These posts and other IRL conversations have spurred a new framework through which I am understanding the market today.

There is essentially only one question that drives investing strategies: What is the most profitable inefficiency to target in a given market? Market inefficiency is where returns are generated. Figuring out what inefficiency exists and developing a strategy to attack it is the name of the game.

In the domain of startup investing, there are two predominant answers to this question that lead to two very different worldviews. The first is what I’d call the “classic venture” approach. This view is that the market is most inefficient in the early stages. These are companies that are largely pre-traction, with teams that may or may not be proven. Classic venture is about sourcing, selecting, and winning these opportunities, and hopefully investing at a cost basis and with a success rate that leads to superior fund-level returns.

The second approach is what I’d call the “super-compounder” approach. The belief here is that the biggest inefficiency is underestimating the ultimate potential of the super-compounders. This worldview fully embraces the extremes of the power law and drives the strategy that the only thing that matters in venture is getting into the one company each year that matters. The name of the game is access: getting into that one super-compounder at all costs, and making sure it’s the blockbuster that you’re hoping for. For all you Wire fans out there: “If you come at the king, you best not miss.”

Three thoughts related to this.

First, these two approaches become more or less attractive depending on what’s going on in the broader market. We are clearly in a moment where it seems like the super-compounder approach is winning. We’ve seen capital concentrate very narrowly into a very small set of companies that seem to be enjoying limitless demand—both from customers and follow-on capital. The fact that we’re so early in a platform shift drives the attractiveness of the super-compounder approach. The current belief is that the kings and queens of the AI era are being anointed right now, and the concentration of winners in the early infrastructure phase of an innovation wave tends to be greater.

Martin Casado also pointed out an interesting dynamic: early in a market’s development, being perceived as the winner provides greater advantages than what you see in a more mature market. This is because, early on, buyers don’t want to be left behind and will just go with the best-known solution. Later, when the market is better understood, customers are more willing to consider a wider set of alternatives or more specialized options. This further fuels the super-compounder approach.

On top of all this, the exit environment has been very lethargic. When the number of M&A, PE exits, or small-scale IPOs is limited, investors decide that they may as well bet the farm on the super-compounders—because it’s not like there are many other ways to win. In fact, the M&A that does happen tends to be in the AI core, as the super-compounders seek to accumulate talent and scale using their war chests of capital and inflated equity.

There are a bunch of other reasons why this has been an era that favors the super-compounder strategy (interest rates, lower cost of capital from sovereign wealth funds, etc.). I’ll move on for now, but the point is that in recent years, the broad market environment has been more friendly toward the super-compounder approach vs. the classic venture approach.

Second, the laws of competition ought to drive rotation between these two investing approaches. In a world where the vast majority of capital is chasing the super-compounder approach, the market will cease to be inefficient. At some point, prices rise so quickly for the super-compounders that returns will significantly degrade. In desperation, super-compounder investors will start to deploy capital into less and less attractive businesses at higher and higher prices. The flood of capital and unrealistic expectations of these companies’ terminal scale will actually hurt the performance of otherwise quality assets.

We’ve seen this movie before during the peak exuberance of SoftBank’s late-stage investing, and I’ve discussed this effect at length in my post on express trains and warp zones.

While this exuberance is happening, there will eventually be such a dearth of contrarian early-stage capital that valuations in that segment will start to drop and companies with great potential will be largely overlooked. The substrate for classic venture investors will become more and more attractive, leading to better and better performance in this segment of the market. The tricky part will be that the downstream financing risk for these companies will be significant—because the big pools of capital are all chasing a tiny number of opportunities of the same profile. But this is precisely why seed entry prices will drop, and why there will be market inefficiency that rewards the classic early-stage approach.

Third thought: I think it’s critically important for managers to know which of the two games they are playing. Trying to oscillate between the two is likely a fool’s errand, as the two approaches require vastly different skills and strategies. You’ll likely miss many of the best opportunities in the time it takes to adjust course. Also, irrespective of which approach you take, the industry as a whole is getting more efficient. So whatever your strategy, you must constantly pound the rock to keep elevating your game…

The Automated VC | Yohei Nakajima, Founding Partner, Untapped VC

Youtube • Carta • September 25, 2025

Venture

Overview

The conversation explores how venture capital can be reimagined through automation and AI, reframing the investor’s job from manual triage to system design. It outlines the shift from intuition-led workflows to data-augmented, software-driven processes that scale sourcing, screening, diligence, and portfolio support. The discussion emphasizes building pipelines and feedback loops that continuously learn from outcomes, enabling faster iteration, broader coverage of opportunities, and more consistent decision-making without discarding human judgment where it matters most.

Why Automate VC?

Automation addresses three perennial constraints: time, coverage, and consistency. Traditional processes struggle to track the long tail of emerging founders, sector micro-trends, and pre-seed/pre-product signals. By instrumenting the funnel—ingesting signals from public repositories, product launches, hiring patterns, customer reviews, and social engagement—systems can surface candidates earlier and rank them against evolving theses. Automation also introduces repeatability: the same inputs produce traceable outputs, aiding internal alignment and enabling better post-mortems when investments succeed or miss.

Core Building Blocks

Data pipelines: capture structured and unstructured signals (web, APIs, user feedback) into a unified store with entity resolution for teams, products, and markets.

Scoring and ranking: combine rules-based screens (traction thresholds, market fit proxies) with learned models that update weights as outcomes accrue.

Agentic workflows: task-specific agents for sourcing, founder outreach, memo drafting, diligence checklists, and market landscaping—each instrumented with guardrails and human-in-the-loop review.

Knowledge graphs: link companies to people, prior employers, technologies, and partnerships to assess founder-market fit and network effects.

Experimentation layer: A/B tests on outreach copy, screening thresholds, and diligence prompts to optimize conversion at each funnel stage.
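The scoring-and-ranking block in the list above might look something like the following minimal sketch: a hard rules-based screen, then a logistic score whose weights are nudged each time an outcome becomes known. The feature names, the threshold, the learning rate, and all function names are assumptions made for illustration; the conversation does not specify an implementation.

```python
import math

# Hypothetical threshold and feature names; none come from the conversation.
MIN_COMMITS_PER_WEEK = 1.0

def passes_screen(company: dict) -> bool:
    """Rules-based screen: a hard threshold applied before model scoring."""
    return company["commits_per_week"] >= MIN_COMMITS_PER_WEEK

def score(weights: dict, company: dict) -> float:
    """Learned score: logistic function of a weighted sum of features."""
    z = sum(w * company[name] for name, w in weights.items())
    return 1.0 / (1.0 + math.exp(-z))

def update(weights: dict, company: dict, outcome: int, lr: float = 0.1) -> dict:
    """One gradient step on log loss once an outcome (0 or 1) is known."""
    error = outcome - score(weights, company)
    return {name: w + lr * error * company[name] for name, w in weights.items()}

def rank(weights: dict, companies: list) -> list:
    """Drop companies that fail the screen, then sort by score, best first."""
    survivors = [c for c in companies if passes_screen(c)]
    return sorted(survivors, key=lambda c: score(weights, c), reverse=True)

weights = {"commits_per_week": 0.0, "community_growth": 0.0}
acme = {"name": "acme", "commits_per_week": 5.0, "community_growth": 0.2}

print(score(weights, acme))                 # 0.5 (untrained weights)
weights = update(weights, acme, outcome=1)  # a positive outcome accrues
print(score(weights, acme) > 0.5)           # True
```

Keeping the screen separate from the learned score means the hard rules stay auditable while only the weights drift with outcomes, which fits the traceability point made earlier in the summary.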

From Sourcing to Diligence

Automated sourcing casts a wider net, but selectivity improves through multi-stage filters. Early screens evaluate momentum signals (shipping cadence, community traction), then escalate to deeper diligence: customer interviews supported by templated questions, competitive maps generated from product feature extraction, and unit-economics stress tests. The aim is not to replace partner conviction but to provide richer, faster context—highlighting disconfirming evidence as rigorously as bullish signals.

Portfolio Support and Measurement

Post-investment, automation extends to value-creation: tracking product analytics to spot churn risk, matching founders with advisors using skills graphs, and monitoring hiring pipelines. Dashboards roll up portfolio health, revenue trajectories, runway, and follow-on readiness. Critically, the same telemetry feeds back into sourcing models to refine what “good” looked like at entry, closing the loop between thesis and evidence.

Risks, Bias, and Guardrails

Automated systems can encode historic biases, overweight noisy vanity metrics, or chase hype cycles. The conversation underscores transparent model documentation, regular bias audits, and counterfactual testing (e.g., how many outliers would the system have filtered out?). Privacy-safe data practices and consent-based enrichment are essential, as is preserving a carve-out for non-consensus, story-driven bets that models might underrate.
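The counterfactual question (how many outliers would the system have filtered out?) can be framed as a simple backtest over past deals. The sketch below assumes a hypothetical record format with `monthly_revenue` at entry and a realized `return_multiple`; the 10x outlier cutoff, the revenue threshold, and the sample data are all invented for illustration.

```python
from typing import Callable, Dict, List

Deal = Dict[str, float]

def missed_outliers(
    history: List[Deal],
    screen: Callable[[Deal], bool],
    outlier_multiple: float = 10.0,
) -> List[Deal]:
    """Return past outlier deals that the screen would have rejected at entry."""
    return [
        deal
        for deal in history
        if deal["return_multiple"] >= outlier_multiple and not screen(deal)
    ]

def traction_screen(deal: Deal) -> bool:
    """Hypothetical screen: require some revenue at the time of investment."""
    return deal["monthly_revenue"] >= 10_000

# Invented sample history, for illustration only.
past_deals = [
    {"monthly_revenue": 50_000, "return_multiple": 3.0},
    {"monthly_revenue": 0, "return_multiple": 40.0},      # pre-revenue outlier
    {"monthly_revenue": 20_000, "return_multiple": 12.0},
]

missed = missed_outliers(past_deals, traction_screen)
print(len(missed))  # 1
```

A non-empty result is the kind of evidence that would argue for the carve-out for non-consensus bets mentioned above.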

Implications for Founders and Investors

For founders, automation may accelerate initial responses and clarity on fit; however, authenticity in outreach and the ability to articulate defensible, non-obvious insights remain decisive. For investors, competitive advantage shifts from exclusive access toward the quality of data assets, feature engineering, agent design, and organizational adoption. Firms that treat their workflow like a product—shipping versions, measuring impact, and teaching teams to collaborate with agents—will compound learning and speed.

Key Takeaways

Automation expands coverage, increases velocity, and improves consistency across the VC funnel while keeping humans in the loop for judgment calls.

Data pipelines, agentic workflows, and knowledge graphs are the practical foundations of an “automated VC” stack.

Continuous experimentation and outcome-linked feedback loops are crucial to avoid static or biased models.

Post-investment telemetry not only drives portfolio support but also refines sourcing and screening over time.

Ethical, privacy, and bias guardrails are as important as technical performance to build trust with founders and LPs.

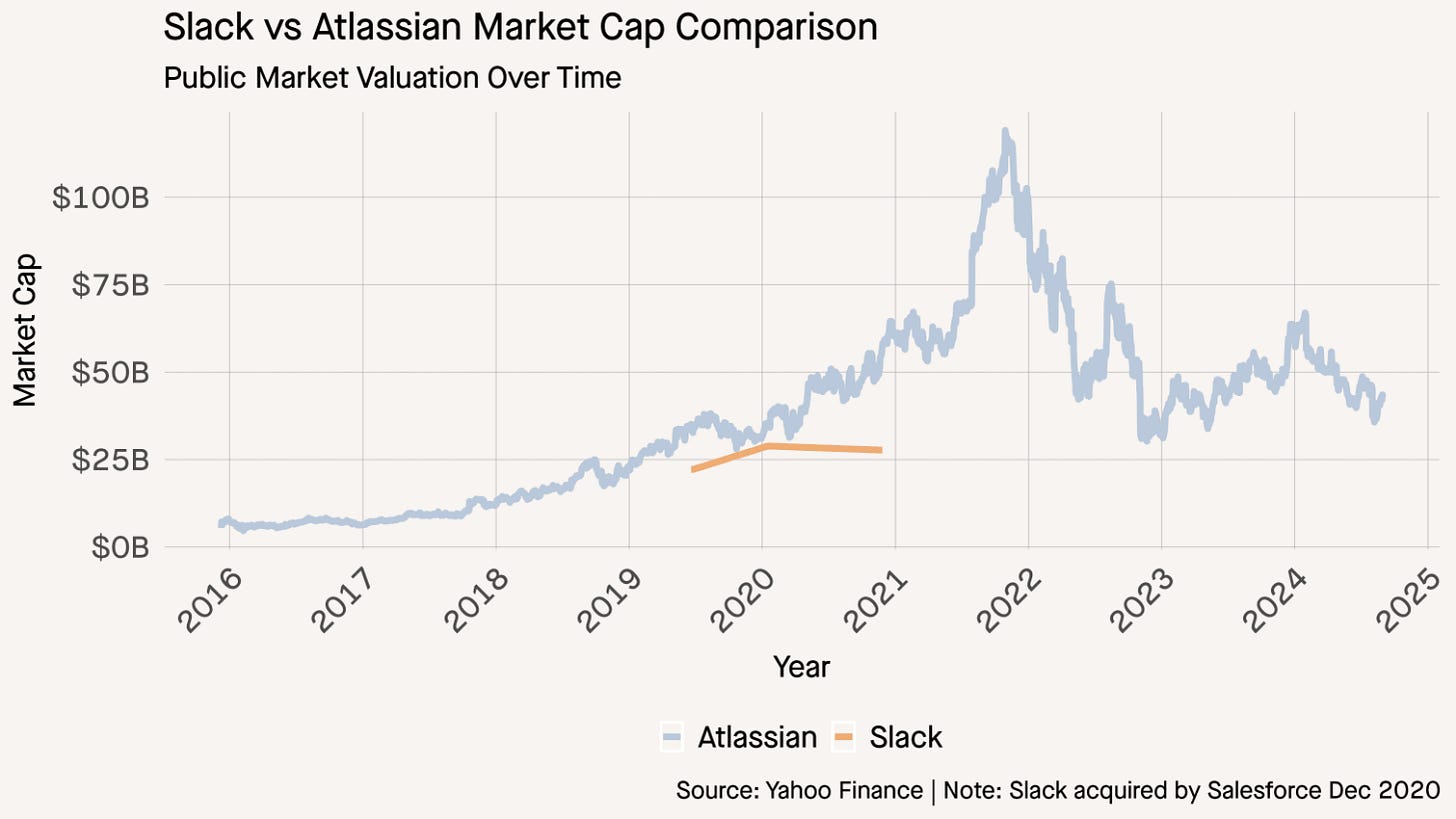

The Math of Hypergrowth: Two Paths to the Same Goal

Tomtunguz • September 24, 2025

Venture

How long & how quickly can a business compound?

This is a question every investor asks of every business, public or private.

In the 2010s, Slack & Atlassian became titans. On the day Salesforce announced its intent to acquire Slack, it was as valuable as Atlassian, at ~$27b.

The revenue curves look similar in the out years, with similar growth rates. Atlassian continues to compound at massive scale.

But the time to achieve $1b from founding date differs by a decade : 17 vs 7 years.

To create value, a startup must grow quickly & grow at scale ; or grow consistently over a long period of time. AI companies today are growing very quickly. The T3D2 companies can grow at a slower rate over a longer period of time to achieve the same market cap.

Compare OpenAI’s 400% growth at $1b revenue to Atlassian’s 30%. Or Snowflake at 124%. Snowflake is $75b market cap today, Atlassian $42b. The advantage of a head of steam is clear.

While both paths, steady compounding & hypergrowth, can lead to the same destination, the latter creates more value because of the time value of money. The sooner a startup reaches $1b revenue, the more valuable it is.
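A rough illustration of the time-value point, using the 7-year and 17-year paths from the comparison above. This is a sketch under stated assumptions: the 10% discount rate is an assumption, not a figure from the post, and the milestone is treated as a single payoff of one unit received in the year it is reached.

```python
def present_value(future_value, annual_rate, years):
    """Discount a single future payoff back to today."""
    return future_value / (1 + annual_rate) ** years


DISCOUNT_RATE = 0.10  # assumed for illustration; not from the post

fast = present_value(1.0, DISCOUNT_RATE, years=7)   # hypergrowth path
slow = present_value(1.0, DISCOUNT_RATE, years=17)  # steady-compounding path

print(round(fast, 3))         # 0.513
print(round(slow, 3))         # 0.198
print(round(fast / slow, 2))  # 2.59
```

Under these assumptions, reaching the same milestone a decade earlier is worth roughly 2.6 times as much today, and the gap widens as the discount rate rises.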

Of course, a hypergrowth company with significant churn isn’t worth very much at all. The CAP theorem equivalent in business is some combination of growth, margin, & retention. Most businesses can’t optimize for all three.

GPs, here’s how you should break down your 30 minute pitch #Fundraising #investorrelations

Youtube • Carta • September 24, 2025

Venture

What a 30-minute GP pitch must accomplish

In a single half-hour, GPs need to establish credibility, make a compelling case for their strategy, prove an ability to execute, and give LPs clear next steps. Treat the meeting like a tightly scripted performance: open strong, deliver only the essentials, and reserve time for interaction. The aim isn’t to tell everything about the fund—it’s to create conviction and momentum toward diligence and a second meeting.

Suggested 30-minute agenda

0:00–3:00 — Opening and context: your fund in one sentence; who you are; what this meeting will cover.

3:00–10:00 — Investment thesis and “Why now”: market wedge, sourcing edge, and how you win.

10:00–17:00 — Proof of execution: track record highlights, portfolio construction model, underwriting discipline.

17:00–23:00 — Pipeline and process: current deal flow, diligence workflow, co-investor network, risk management.

23:00–27:00 — Terms and alignment: target fund size, fees/carry, GP commit, reporting cadence, governance.

27:00–30:00 — Q&A and next steps: anticipated questions, data room pointer, follow-up timeline.

What to emphasize in each section

Opening: State stage/sector/geography and fund generation up front so LPs can quickly map you to mandate fit. A crisp, jargon-free one-liner frames everything that follows.

Thesis and “Why now”: Show the inefficiency you exploit and the repeatable sourcing/selection advantage you bring (networks, proprietary channels, or domain expertise). Keep TAM slides to a minimum; focus on where you uniquely find and price risk.

Proof of execution: Prioritize 2–3 case studies tied to your stated edge. Explain entry rationale, your post-investment value-add, and realizations or markups. Where allowed, present performance with standard LP metrics (IRR, TVPI/MOIC) and cohort views; if first-time, show adjacent evidence (angel/SPV results, operating wins). LPs commonly evaluate performance through these metrics and expect clear, comparable presentation. (carta.com)

Portfolio construction: Walk through number of names, initial check sizes, reserves policy, ownership targets, and pacing. Link the model to your sourcing bandwidth and follow-on strategy rather than treating it as a spreadsheet exercise. Guidance for portfolio construction and value-add is a core component LPs look for in materials. (carta.com)

Pipeline and process: Share funnel stats (top-of-funnel to term sheet), diligence rhythm, and IC mechanics. Name co-investors and referral loops that repeatedly convert into allocations. Address concentration and downside controls.

Terms and alignment: Be concise on fund economics and highlight GP commit timing, reporting practices, and communications cadence—signals of transparency LPs weigh heavily. (carta.com)
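For reference, the standard LP metrics named under proof of execution can be computed from a fund's cash flows. The sketch below is illustrative only: the function names and sample figures are hypothetical, cash flows are assumed to be annual, and IRR is found by simple bisection rather than by any particular vendor's method.

```python
def tvpi(paid_in, distributions, residual_value):
    """Total value to paid-in: (distributions + remaining NAV) / capital called."""
    return (distributions + residual_value) / paid_in


def npv(rate, cash_flows):
    """Net present value of annual cash flows; index 0 is today."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=10.0, iterations=200):
    """Rate at which NPV is zero, found by bisection.

    Assumes a conventional pattern (outflows first, inflows later), so that
    NPV falls as the rate rises and crosses zero once between lo and hi.
    """
    if npv(lo, cash_flows) * npv(hi, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by the search interval")
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if npv(mid, cash_flows) > 0:
            lo = mid  # NPV still positive: the rate must be higher
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical example: 100 called today, 200 returned five years later.
flows = [-100, 0, 0, 0, 0, 200]
print(round(irr(flows), 4))  # 0.1487

# Hypothetical fund: 100 paid in, 50 distributed, 150 of remaining NAV.
print(tvpi(paid_in=100, distributions=50, residual_value=150))  # 2.0
```

The two metrics answer different questions: TVPI shows how much value was created per dollar called, while IRR shows how quickly. Presenting both covers the "how much" and the "how fast" in one comparable view.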

Delivery principles

Narrative discipline: Every minute should serve the through-line of “edge → execution → results → alignment.” Cut anything that doesn’t advance that arc.

Show, don’t tell: Replace adjectives with artifacts—IC memos, sourcing dashboards, update templates, or anonymized case-study timelines.

Anticipate objections: Preempt mandate misfit, team capacity, or concentration risk with data and examples.

Reserve interaction time: Q&A is where conviction forms; budget the final three minutes and keep answers concrete and brief.

Common pitfalls to avoid

Spending too long on biography or market size at the expense of process and portfolio construction.

Overstuffed slides—use clean visuals and defer detail to a data room.

Vague performance framing—always benchmark and define metrics consistently. LPs expect standard measures and concise case studies over anecdote. (carta.com)

Key takeaways

Lead with mandate fit and edge; prove the engine with 2–3 case studies and a tight portfolio model.

Make alignment tangible: GP commit, fees/carry rationale, and a clear reporting rhythm.

Close with momentum: explicit next steps, timeline to follow-up, and access to materials—then protect time for questions.

Nvidia To Invest Up To $100B In OpenAI

Crunchbase • Judy Rider • September 22, 2025

Venture

Chipmaker Nvidia announced on Monday that it is investing up to $100 billion in OpenAI.

The deal between Nvidia, the world’s highest-valued public company, and OpenAI, parent company of the ubiquitous ChatGPT, is notable considering that both companies are among the largest in their respective spaces.

But the investment comes with conditions. The two companies signed a letter of intent for a strategic partnership by which at least 10 gigawatts of Nvidia systems would be deployed for OpenAI’s AI infrastructure to train and run its next generation of models. To support the deployment (including data center and power capacity), Nvidia will invest up to $100 billion in OpenAI as the new Nvidia systems are deployed.

“Everything starts with compute,” said Sam Altman, co-founder and CEO of OpenAI, in a written statement. “Compute infrastructure will be the basis for the economy of the future, and we will utilize what we’re building with NVIDIA to both create new AI breakthroughs and empower people and businesses with them at scale.”

Nvidia has been on somewhat of an investment tear. So far this year, it has made at least 42 investments in private companies, either directly or through its NVentures venture arm, per Crunchbase data. That’s up slightly from the year-ago period, which was already unusually busy.

In late March, SoftBank announced that it was backing an investment of up to $40 billion in OpenAI, marking the largest venture capital investment ever.

These Are The Speediest Companies To Go From Series A To Series C

Crunchbase • September 22, 2025

Venture

Most founders count themselves fortunate if they go a couple years between each venture round. But in every cycle, there are a few outliers who fundraise much faster.

The past couple of years are no exception. Per Crunchbase data, there’s a sizable cohort of companies that have gone all the way from Series A to Series C between 2023 and this year. Several have managed to scale all three stages in less than 12 months.

Heavily represented among the fastest fundraisers, unsurprisingly, are generative AI startups. But other areas are also producing rising stars, including vertical AI, fintech and spacetech.

Below, we look at some of the quickest serial fundraisers in these and other areas.

GenAI

In recent quarters, generative AI has been the single biggest area for venture funding by a long shot. So, naturally this is the space in which many of our fastest fundraisers compete.

Standouts include both generalists and startups focused on a particular medium, like code or audio.

Coding: AI coding startups have been particularly hot of late. This is evidenced by Anysphere’s $900 million Series C this summer, which came less than a year after the San Francisco company raised its Series A. AI software development platform Cognition was similarly speedy, closing a $400 million Series C this month, barely a year after its Series A.

Audio, video and imagery: Investors are also enthused about startups offering a richer AI audio and visual experience. To this end, Fal, focused on AI models for generative image, video and audio, has been closing rounds quickly, securing a $125 million July Series C just 10 months after its Series A. Voice AI startups are also in vogue, paving the way for 3-year-old voice AI platform ElevenLabs to get to Series C rapidly and see its valuation rise sharply.

Generalists: Developers of more general-purpose large language models are also still securing serial rounds at superfast rates. Elon Musk’s xAI is kind of the poster child for this practice, with at least $22 billion in known debt and equity financing since its inception in 2023. Three-year-old Perplexity has also been scaling at an impressive clip, as has Paris-based Mistral AI.

Vertical AI

Purveyors of AI-enabled enterprise software are also popular with venture capitalists lately.

Customer support is a particularly timely theme, and some fast fundraisers hail from this space, including Decagon, a conversational AI platform for customer service that closed its Series C this summer, about a year after securing its Series A. Parloa, a developer of AI agents focused on promoting customer loyalty, also picked up a big Series C — $120 million — in May, barely a year after its Series A.

Legaltech is another sector drawing considerable capital, and here the most oft-referenced startup name is Harvey, a 3-year-old developer of AI tools for legal professionals that has raised more than $800 million to date.

Fintech

Fintech is typically one of the larger sectors for startup investment. As such, we might expect to see some fast movers hail from this space.

And we did, with providers of business banking securing some of the fastest serial rounds. This includes Dublin-based NomuPay, a global payments platform for merchants; Mexico’s Kapital, which extends online banking services to businesses; and Dutch startup Finom, which provides business accounts for European freelancers and small enterprises.

Spacetech

Spacetech isn’t one of those industries where startups scale on the cheap, so it’s not a shocker to see companies in the sector raise serial rounds at a brisk clip.

In recent months, several fast fundraisers have closed on Series C rounds involving big checks. This includes satellite transport provider Impulse Space and space security-focused startup True Anomaly.

Meanwhile, 3-year-old Apex Space, which designs and builds satellite bus products, made it all the way to Series D this month, securing $200 million at a $1 billion valuation. Just five months earlier, Los Angeles-based Apex pulled in a $200 million Series C.

Other areas

Several other sectors also contributed some fast fundraisers of their own, albeit in slightly smaller quantities…

Crypto

YC x Coinbase RFS: Build Onchain

Ycombinator • Harj Taggar • September 23, 2025

Crypto•Blockchain•Fintech

We believe that it’s time to build onchain. The tools have been maturing over the last decade, but with low-cost chains, globally adopted stablecoins, easy-to-use wallets, and growing consumer adoption, the infrastructure is finally ready. And we are already seeing a number of large trends that present huge opportunities for builders around the world.

It starts with the fact that we are at the beginning of a new era in financial technology: Fintech 3.0.

Fintech 1.0 was the initial digitization of finance in the 90s, driven by companies like PayPal. The key unlock here was that consumers became comfortable paying for things online.

Fintech 2.0, which occurred over the last decade and was driven by companies like Stripe, Plaid, Brex, and Chime, involved building APIs on top of the existing financial system. The key unlock here was banking-as-a-service (BaaS) providers that made it possible for startups to build on top of the legacy financial system.

Now, we are entering the era of Fintech 3.0. This era will be about building a new financial system with code. A system where payments settle instantly, anywhere in the world, 24/7. A system where users store their assets in digital wallets, which they have full control and custody over, rather than banks.

For years, the main obstacle to building Fintech 3.0 has been regulatory uncertainty. With the passage of the GENIUS Act—and potentially, the CLARITY Act—we now have a clear crypto regulatory framework in the US that will let founders build generational companies onchain, with confidence. This is the most significant opportunity for crypto startups in years, and at YC and Coinbase, we want to fund and support you to seize it.