Contents

Editorial: Copyright Can't Save Publishers from AI

Essays

Copyright

AI

Venture Capital

Education

IPO

Interview of the Week

Startup of the Week

Post of the Week

Editorial: Copyright Can't Save Publishers from AI

What a week for generative AI and copyright law. It was a bad one for Getty Images and for publishers opposed to AI training on their content.

The headlines are flying, the legal filings are thick, and the only thing less clear than the future of content ownership is the present. If you’re a founder, a creator, or just someone who likes to watch billion-dollar companies fight in court, pour yourself a coffee: the AI copyright endgame is nowhere in sight, but the opening moves are wild.

Getty Retreats, But the War Isn’t Over

Getty Images, the grand old gatekeeper of stock photography, has quietly dropped its headline copyright claims against Stability AI in the UK. The core accusation? That Stability used millions of Getty’s images—sometimes with watermarks still attached—to train its Stable Diffusion model. Getty’s lawyers, after months of posturing, have now abandoned both the “training” and “output similarity” claims. Why? The evidence was weak, the witnesses were weaker, and the UK’s jurisdictional quirks didn’t help. As one legal observer put it, Getty couldn’t show that the AI’s outputs “reflect a substantial part of what was created in the images”.

But don’t mistake this for surrender. Secondary infringement and trademark claims remain, and Getty’s $1.7 billion US lawsuit is still live. The message: the legal fog is thick, and the real battle may be jurisdictional, not just factual. Meanwhile, Getty is hedging its bets with its own AI image generator, trained only on its licensed library. If you can’t beat ’em, build your own model and charge for it.

Fair Use: Mixed Signals

Across the Atlantic, the US courts are finally starting to draw lines in the sand. Two major rulings dropped this week on whether training large language models (LLMs) on copyrighted works is “fair use.” The first, Bartz v. Anthropic, is a landmark: Judge Alsup ruled that training LLMs is “transformative,” indeed “spectacularly so,” and thus protected as fair use. The logic is simple and, frankly, overdue: just as Google was allowed to copy books to build a search engine, AI companies can study copyrighted works to build new, transformative tools. The court dismissed the idea that LLMs are “infringement machines” just because they can generate new text in the style of the originals.

But then came Kadrey v. Meta, and the waters muddied. Judge Chhabria, while ultimately ruling for Meta due to lack of evidence, opined that training on copyrighted works without a license would be “illegal in most cases.” He worried about “market dilution” and AI-generated works competing with originals. The problem? This flips the fair use doctrine on its head, treating “market harm” as the only thing that matters and ignoring the transformative nature of AI training. As the EFF notes, copyright is supposed to encourage new expression—even if that means competition.

The Precedent and the Path Forward

The upshot: the first big AI copyright decision is a win for AI, but the road ahead is anything but clear. The Bartz ruling gives AI companies a strong fair use precedent, but the specter of appeals, class actions, and conflicting opinions looms large. Congress is now on the clock to clarify what’s allowed and what isn’t, because the courts are sending mixed signals.

Meanwhile, the industry isn’t waiting. The Big Five tech companies are adapting, hedging, and investing. Getty is building its own models. Apple is betting on private data and device integration. The only certainty is that the legal and business landscape will keep shifting, and the only thing more expensive than training an LLM is litigating one.

Second Blow: The AI Browser Wars and the Coming Crisis for Publishers

If the legal fog wasn’t enough, this week delivered a second seismic shock, one that should have every publisher dependent on search engine traffic reaching for the antacids. The AI browser wars have begun, and, according to M.G. Siegler, the implications for the open web, and for the revenue models that depend on it, are profound.

The launch of Dia, an AI-first browser from The Browser Company, marks a turning point. Unlike legacy browsers, which are hurriedly bolting on AI features, Dia is built from the ground up to make AI the default layer of the browsing experience. With integrated chatbots, instant page summaries, and the ability to query across all open tabs, users are nudged to interact with content through AI intermediaries rather than directly with publisher sites.

Why does this matter? Because the traditional web ecosystem is built on a simple value exchange: publishers create content, search engines index it, and browsers deliver users—who then see ads or hit paywalls. If AI browsers become the primary interface, users may never even visit publisher sites. Instead, they’ll get AI-generated summaries, answers, and context—sidestepping the original source entirely.

This isn’t just a theoretical risk. Siegler notes that, for many, the AI overlay is already becoming the default way to consume news, research, and information. Chrome’s integration of Gemini is clunky for now, but Google’s ambitions are clear. Meanwhile, upstarts like Dia and Perplexity are racing to own the AI browser experience, giving them unprecedented control over what users see, how they see it, and, crucially, what they don’t.

For publishers, this is a direct threat to both traffic and revenue. The “click-through” model that underpins digital advertising is at risk of being bypassed. If users no longer need to visit a site to get the information they want, ad impressions and subscriptions will plummet. The browser, once a neutral conduit, is becoming an active filter and gatekeeper—one that may not have publishers’ interests at heart.

The bottom line: the AI browser wars are not just a product story—they are an existential challenge to the business model of the open web. Publishers who rely on search-driven traffic must now grapple with a future where the browser itself intermediates, summarizes, and potentially monetizes their content—without ever sending a user their way.

Philosophical Coda: The Internet of Tolls—A New Commons or a Fragmented Future?

As the dust settles on a week of legal wrangling and technological upheaval, a deeper question emerges: What kind of digital society are we building as AI, browsers, and copyright law collide? The answer, it seems, may be found at the tollbooth.

Om Malik and Fred Vogelstein’s reflection on the coming “Internet of Tolls” captures the sense that the open, frictionless web—the commons that fueled decades of innovation and free expression—is fragmenting. The new AI-powered landscape is increasingly shaped by paywalls, subscriptions, licensing deals, and, yes, literal and metaphorical tollbooths. Publishers, squeezed by search disruption and AI summarization, are racing to erect barriers around their content. Platforms, in turn, are building their own walled gardens, seeking to capture value before it leaks out into the ether of generative models.

But as Crazy Stupid Tech argues, these tollbooths are not just about money—they are about power, control, and the ability to set the rules of engagement for the next era of the internet.

The AI revolution, for all its promise, risks turning the web into a patchwork of private roads, each with its own gatekeeper, fee structure, and terms of service. The dream of a universal, open commons is giving way to a reality of fragmented access and algorithmic mediation.

Is this inevitable? Perhaps. As value concentrates in the hands of those who control the infrastructure—whether it’s the browser, the model, or the content feed—the incentives to charge, restrict, and gate will only grow. Yet, as both articles remind us, the real challenge is not technological but philosophical: Will we use AI to reinforce the walls, or to build new bridges? Will the internet become a maze of tolls, or can we imagine new models of sharing, compensation, and creativity that keep the commons alive? Cloudflare seems to be thinking about that (see Post of the Week).

The week’s events—AI browser wars, copyright battles, and the rise of digital tollbooths—are not just business stories. They are signals of a deeper transformation, one that will define who gets to participate, profit, and create in the digital age. The choices we make now, about law, technology, and values, will determine whether the future of knowledge is open or closed, abundant or fenced off.

That was the week. The tollbooths are rising. The road ahead is ours to choose.

Essays

Meta makes the dumbest models

The random walk • Moses Sternstein • June 24, 2025

Technology•AI•MachineLearning•Bias•Meta•Essays

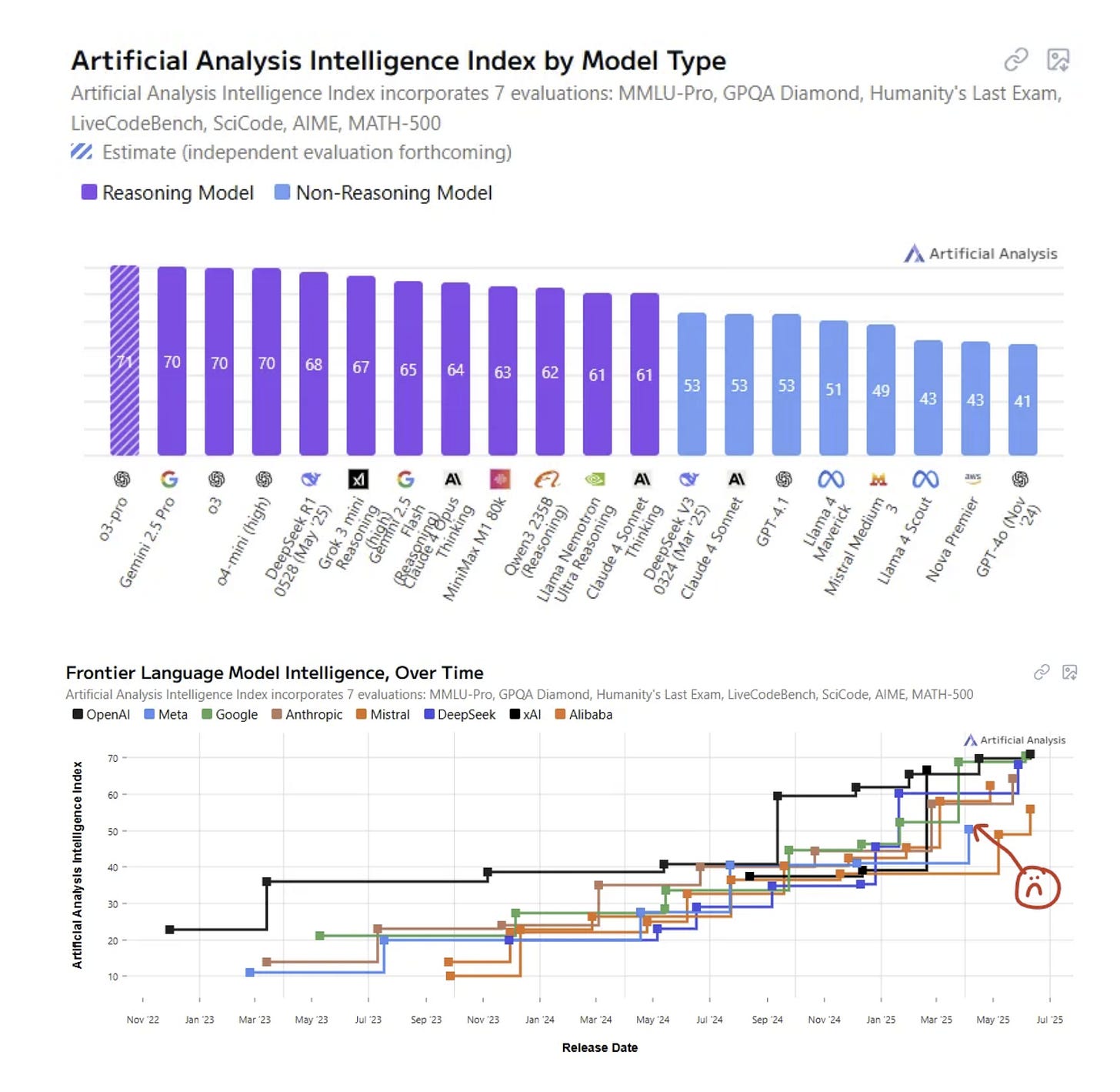

Meta’s models really have fallen behind. Mark Zuckerberg and Meta have recently made headlines for investing heavily in AI talent, including a $14 billion investment in Scale AI and attempts to recruit Silicon Valley veterans like Daniel Gross and Nat Friedman. Zuckerberg is also reportedly reaching out personally to researchers and engineers through hundreds of emails and WhatsApp messages. The narrative suggests that Zuckerberg is frustrated with Meta’s AI progress and sees top talent as the key to a breakthrough, hence his willingness to spend heavily.

Despite these efforts, Meta’s AI models appear to be underperforming compared to competitors. Although Meta should have advantages in distribution and open-source data, its Llama models are trailing rivals in performance.

Separately, an intriguing investigation into reward models (RMs) used to fine-tune AI aimed to reveal what these models actually prioritize in terms of values. Reward models score AI responses based on human preference alignment, but research shows their values deviate from typical human preferences. For example, RMs tend to rate Charlie Munger, Communism, and Ataturk positively while undervaluing sexual content and certain demographic references like Black people. In the negative rankings, there are surprisingly severe biases, with RMs ranking some groups and concepts very unfavorably compared to human values.

While these findings should not be over-interpreted without further study, they highlight significant and perhaps unexpected biases embedded in AI reward systems. This raises questions about the reliability and implications of fine-tuning methods used widely to align AI behavior with human values.
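For readers unfamiliar with how a reward model "scores" a response: one common (though not universal) way to train one is a pairwise Bradley-Terry objective over human comparisons of two answers to the same prompt. The sketch below is illustrative only. It is not the method used in the investigation above, the function names are hypothetical, and the scores are made-up numbers rather than outputs of any real model.

```python
import math


def preference_probability(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry probability that a rater prefers the 'chosen' response.

    r_chosen and r_rejected are the scalar scores a reward model assigns
    to two responses to the same prompt. Computed in a numerically stable
    way so large score gaps do not overflow math.exp.
    """
    margin = r_chosen - r_rejected
    if margin >= 0:
        return 1.0 / (1.0 + math.exp(-margin))
    e = math.exp(margin)
    return e / (1.0 + e)


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """Negative log-likelihood of the human's choice, log(1 + exp(-margin)).

    Training nudges the reward model's scores to shrink this loss, i.e. to
    widen the gap in favour of whichever answer the rater picked.
    """
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Hypothetical (chosen, rejected) score pairs.
    for chosen, rejected in [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]:
        print(chosen, rejected,
              round(preference_probability(chosen, rejected), 3),
              round(pairwise_loss(chosen, rejected), 3))
```

Equal scores mean a coin-flip preference and a loss of log 2; a wider gap toward the rater's pick drives the loss toward zero. Because the model only ever learns from the comparisons it is shown, skews in that data (or in how the model generalizes from it) are one plausible route to the unexpected value rankings described above.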

Checking In on AI and the Big Five

Stratechery • Ben Thompson • June 23, 2025

Technology•AI•BigTech•ArtificialIntelligence•Innovation•Essays

This detailed analysis revisits the state of artificial intelligence through the lens of the Big Five tech companies—Apple, Google, Meta, Microsoft, and Amazon—exploring how AI has evolved since early 2023 and the varying strategies, challenges, and opportunities each faces. The article underlines that AI has emerged as a pivotal technological epoch, reshaping the landscape of business models, innovation, and competition.

Meta's Urgent Shift and AI Struggles

Meta's recent challenges with its Llama 4 AI model, which was criticized as disappointing and deceptive, have sparked an aggressive recruitment and investment campaign led by Mark Zuckerberg to form a "Superintelligence" lab. This hiring blitz signals Meta’s recognition of AI as critical to its future, but also highlights internal uncertainties over leadership, product vision, and the company’s ability to keep pace in the AI arms race. Despite a solid position in individualized content and advertising through AI, the threat that AI chatbots pose to user attention and monetization creates strategic tensions. Zuckerberg’s frank admission of problems and the appointment of new leadership for AI development show a pivot towards more focused and rapid innovation within Meta.

Apple's Cautious, Hardware-First Approach

Apple remains a sustaining player in the AI space, focusing on complementing its device ecosystem rather than developing leading AI models internally. The company lags in AI infrastructure and model innovation but benefits from unique access to consumer data and devices that can act as differentiated platforms for AI applications. The partnership with OpenAI offers Apple a pathway to integrate cutting-edge AI services without heavy internal development. The article suggests Apple should aggressively expand beyond phones into AI-powered devices like watches, HomePods, and robotics, leveraging its hardware excellence and scale. However, Apple's integrated hardware-software approach and conservative acquisition strategy may slow its ability to compete fully in AI. Investing in or partnering with open-source model makers like Mistral could be vital.

Google's Integrated AI Powerhouse

Google’s infrastructure is described as the best globally, solidly integrated from chip design to models, exemplified by its Gemini models and media generation capabilities like Veo. Its unrivaled access to data, especially from YouTube and web indexing, gives it a tremendous edge for training AI. Google views AI primarily as a disruptive force to its core Search business, prompting innovations such as AI Search Overviews and the "Search Funnel" to transition search towards an AI-enhanced experience while preserving monetization. Google’s strong position in cloud computing through GCP represents a major growth avenue, where its superior AI capabilities can augment enterprise workflows and multi-cloud environments.

Microsoft’s Partnership-Driven AI Strategy Faces Frictions

Microsoft is uniquely positioned with very strong infrastructure, exclusive cloud rights for OpenAI’s APIs, and broad distribution through Windows and Microsoft 365. However, its relationship with OpenAI has become strained amid disputes over profit-sharing and governance, posing risks to Microsoft's long-term roadmap. The company’s AI incorporations into Bing and productivity apps have not yet dominated the market as hoped, and despite early success with GitHub Copilot, competitors have caught up. Still, Microsoft’s enterprise cloud dominance and potential to embed AI throughout its subscription services provide a strong foundation. The article recommends Microsoft secure exclusive Azure AI advantages permanently and diversify investments in alternative AI model developers to reduce dependency risks on OpenAI.

Amazon’s Steady, Advantageous Cloud and E-Commerce AI Integration

Amazon's AI engagement is characterized as sustaining and synergistic with its existing AWS and e-commerce businesses. AI usage is expected to increase AWS consumption without disrupting core revenue models. Amazon’s partnership with Anthropic is more stable compared to Microsoft-OpenAI, fitting well into AWS’s chip and infrastructure strategy. AWS remains the leading global cloud provider, critical for enterprise AI workloads, while Amazon.com could benefit from AI-driven product recommendations and affiliate revenue streams. Voice assistant Alexa, while underwhelming so far, remains a promising AI-powered platform for Amazon's future.

The Model Makers and Broader AI Landscape

OpenAI continues to dominate consumer-facing AI with ChatGPT, owning critical customer relationships but facing challenges in monetization beyond subscriptions. Anthropic focuses on developer tools and coding, riding a strong API revenue stream and deeper alignment with Amazon's cloud infrastructure. Newer players like Elon Musk’s xAI face hurdles related to infrastructure costs and unclear market positioning, and a potential strategic fit with Oracle is being scrutinized. Meta's dual position as both a model user and prospective builder exemplifies the high stakes of AI investment: the quest for superintelligence shapes talent acquisition and strategic urgency.

Strategic and Geopolitical Implications

The analysis also addresses the geopolitical dimension, particularly U.S.-China competition in AI and chip technology. The article critiques the framing of AI as a "destroyer of worlds," suggesting that China may eventually commoditize AI and chip manufacturing to the benefit of Big Tech globally, while raising risks for companies like Nvidia. This scenario highlights the complex interplay between innovation, national policies, and global competition shaping the future of AI.

Summary

Overall, the Big Five display distinct AI strategies reflecting their core competencies and business models: Apple’s hardware-centric sustaining approach, Google’s integrated data and cloud-driven disruption, Meta’s turbulent yet ambitious AI transformation, Microsoft’s powerful but partnership-dependent enterprise foothold, and Amazon’s cloud and AI synergy in e-commerce. The evolving partnerships with foundational AI model makers add another layer of complexity and opportunity. The article emphasizes the massive transformational potential of AI over the next decade, urging each company to adapt decisively to maintain or grow their influence in the new AI epoch.

AI Killed My Job: Tech workers

Blood in the Machine • Brian Merchant • June 25, 2025

Technology•AI•WorkforceImpact•Automation•LaborMarket•Essays

“What will AI mean for jobs?” is perhaps the most frequently asked question about the technology dominating Silicon Valley, pop culture, and politics. Fears that AI will displace workers regularly top opinion polls. Companies like Duolingo and Klarna have cited AI as a reason for workforce reductions, framing layoffs as part of a shift to AI-first strategies. Even federal workers have been dismissed in the name of such strategies.

Tech executives amplify these concerns. Anthropic’s CEO, Dario Amodei, predicts AI could eliminate half of all entry-level white-collar jobs and push unemployment as high as 10–20%. OpenAI’s Sam Altman asserts AI systems can replace entry-level workers and soon code “like an experienced software engineer,” bluntly stating, "Jobs are definitely going to go away, full stop."

However, the real-world impact remains complex. Many firms are investing heavily in AI to boost productivity and cut labor costs, with significant effects in creative industries, where AI-generated content is cheap. Yet broader economic data suggests the disruption may be more limited. Two and a half years after ChatGPT’s launch, AI’s tangible reshaping of work is only beginning to show.

To explore this, the author initiated a project, AI Killed My Job, seeking direct testimonials from workers affected by AI-driven changes in employment. The goal is to amplify worker voices often overshadowed by speculation and corporate rhetoric. The project gathered an overwhelming response from a range of professions including artists, engineers, marketers, and consultants, revealing diverse experiences of AI’s impact.

The project’s first focus is on the tech industry—the birthplace of much AI innovation and where impacts are immediately felt. Workers recount AI being used by management to justify layoffs, accelerate workloads, and consolidate power. The stories come from large tech giants and small startups alike, illustrating AI’s role in job insecurity and organizational dynamics. Many testimonies highlight the degradation of work quality and increased pressures on remaining staff.

The title “AI Killed My Job” plays on the idea that while AI is not sentient and it is management that ultimately fires people, AI can “kill” jobs by eroding skill, autonomy, and job satisfaction. The collection aims to spark examination of how generative AI—the most heavily funded technology of our era—truly affects the workforce.

Readers whose jobs have been affected by AI are invited to share their stories confidentially. The newsletter will continue to explore AI’s impact in fields beyond tech, including law, media, customer service, and art.

Charted: Productivity Gains from Using AI

Visual Capitalist • Niccolo Conte • June 25, 2025

Technology•AI•Productivity•GenerativeAI•WorkplaceEfficiency•Essays

This was originally posted on our Voronoi app.

Key Takeaways

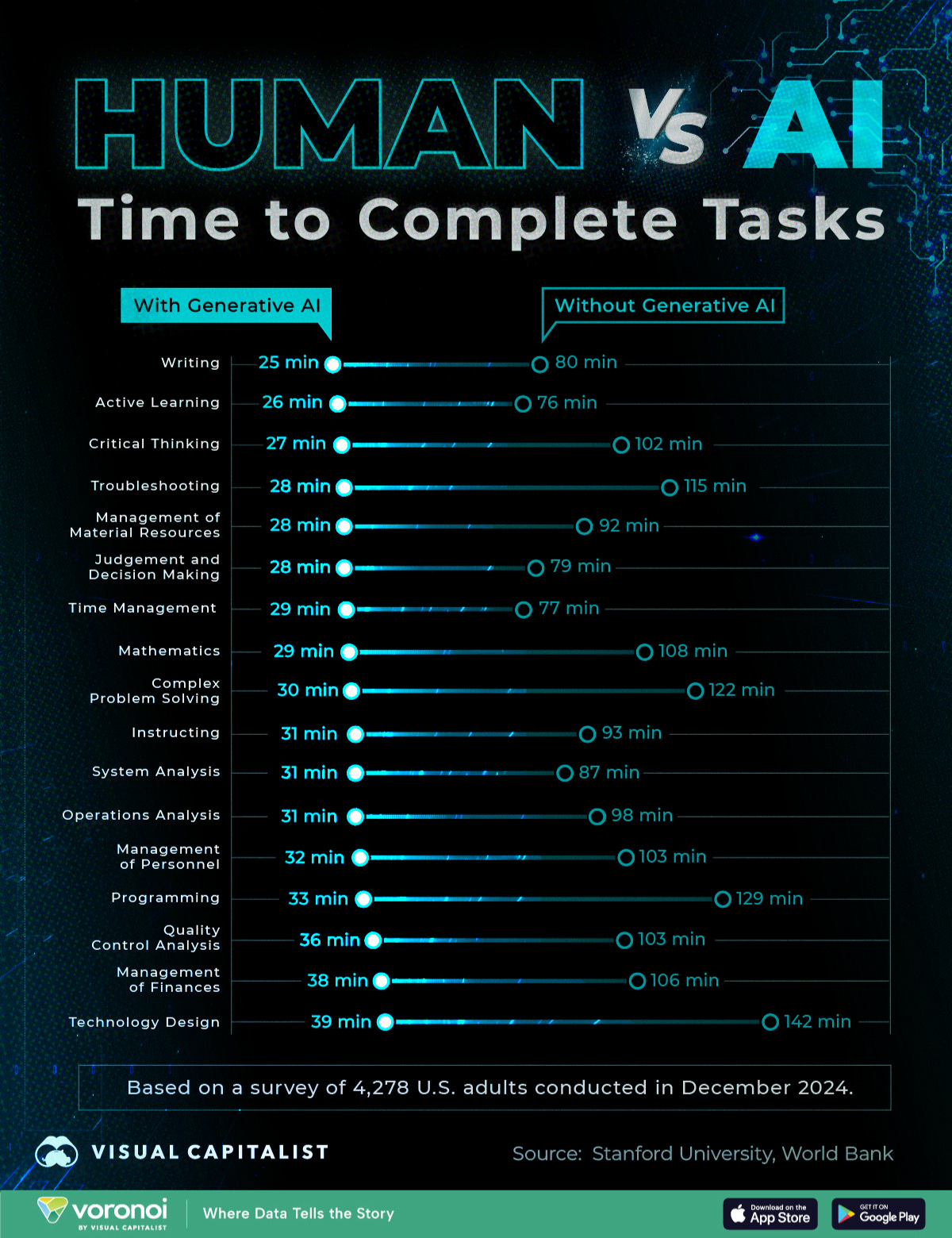

Across all tasks, using generative AI reduces the time taken to complete them by at least 60%

Technical and analytical tasks like troubleshooting, programming, and technology design saw significant productivity gains

Even human-centric tasks like instructing, management of personnel, and judgment and decision-making benefited from AI tools

As AI tools become increasingly integrated—and in some cases, even mandated—into professional workflows, their real-world impact on productivity is becoming more evident.

This chart compares the average time it takes U.S. adults to complete 18 common work tasks with and without the use of generative AI, based on a December 2024 survey of 4,278 respondents conducted by Stanford University and the World Bank.

Generative AI Improves Productivity by Over 60%

Across all tasks, using generative AI reduced the average time taken to complete them by more than 60%.

Here’s how much time using generative AI saved across 18 common work tasks, in average number of minutes:

Writing: from 80 to 25 minutes (-69%)

Active Learning: from 76 to 26 minutes (-66%)

Critical Thinking: from 102 to 27 minutes (-74%)

Troubleshooting: from 115 to 28 minutes (-76%)

Judgement and Decision Making: from 79 to 28 minutes (-65%)

Management of Material Resources: from 92 to 28 minutes (-70%)

Mathematics: from 108 to 29 minutes (-73%)

Time Management: from 77 to 29 minutes (-62%)

Complex Problem Solving: from 122 to 30 minutes (-75%)

Instructing: from 93 to 31 minutes (-67%)

Operations Analysis: from 98 to 31 minutes (-68%)

Systems Analysis: from 87 to 31 minutes (-64%)

Management of Personnel: from 103 to 32 minutes (-69%)

Programming: from 129 to 33 minutes (-74%)

Equipment Maintenance: from 124 to 34 minutes (-73%)

Quality Control Analysis: from 103 to 36 minutes (-65%)

Management of Finances: from 106 to 38 minutes (-64%)

Technology Design: from 142 to 39 minutes (-73%)
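The percentages in the list can be rechecked from the before/after minutes. The sketch below recomputes each reduction and also reports the speedup (before divided by after), since a time cut and an output gain are different measures: a 69% cut in writing time means roughly 3.2 times as many writing tasks per hour. The dictionary simply restates the survey figures above; the helper names are hypothetical.

```python
# Average minutes per task without and with generative AI, restated from the
# list above (Stanford University / World Bank survey, December 2024).
TASK_MINUTES = {
    "Writing": (80, 25),
    "Active Learning": (76, 26),
    "Critical Thinking": (102, 27),
    "Troubleshooting": (115, 28),
    "Judgement and Decision Making": (79, 28),
    "Management of Material Resources": (92, 28),
    "Mathematics": (108, 29),
    "Time Management": (77, 29),
    "Complex Problem Solving": (122, 30),
    "Instructing": (93, 31),
    "Operations Analysis": (98, 31),
    "Systems Analysis": (87, 31),
    "Management of Personnel": (103, 32),
    "Programming": (129, 33),
    "Equipment Maintenance": (124, 34),
    "Quality Control Analysis": (103, 36),
    "Management of Finances": (106, 38),
    "Technology Design": (142, 39),
}


def percent_reduction(before: float, after: float) -> float:
    """Share of the original task time saved, as a percentage."""
    return (before - after) / before * 100


def speedup(before: float, after: float) -> float:
    """How many times faster the task gets done (tasks-per-hour multiplier)."""
    return before / after


if __name__ == "__main__":
    for task, (before, after) in TASK_MINUTES.items():
        print(f"{task:34} -{percent_reduction(before, after):.0f}%  "
              f"saves {before - after} min  x{speedup(before, after):.1f}")
    smallest = min(percent_reduction(b, a) for b, a in TASK_MINUTES.values())
    print(f"smallest reduction: {smallest:.1f}%")
```

Every recomputed percentage matches the list, and the smallest reduction (time management) is just above 62%, consistent with the "at least 60%" takeaway. These are survey-based averages, so the figures are best read as indicative rather than as measured throughput.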

Some of the largest gains came from highly technical or analytical tasks. For example, troubleshooting saw a 76% reduction in time, while critical thinking, programming, and technology design all showed over 70% time savings with generative AI.

Interestingly, even human-centric tasks—such as instructing, judgment and decision-making, and management of personnel—benefited from AI tools, with time reductions ranging from 60–70%.

Accelerating Work With AI

While AI is often framed as a replacement for human labor, this data shows that human workers empowered by AI can do the same tasks far more efficiently.

Writing, for example, dropped from an average of 80 minutes to just 25 minutes with generative AI. For complex cognitive tasks the savings were larger still: AI cut more than an hour from mathematics (79 minutes) and operations analysis (67 minutes), and nearly an hour from systems analysis (56 minutes).

Furthermore, AI adoption is increasing rapidly. According to the survey, LLM adoption at work for respondents aged 18 or older increased from 30% in December 2024 to over 43% as of March/April 2025.

If this trajectory continues, AI-driven productivity gains could scale from individual tasks to entire organizations, potentially reshaping broader economic outcomes.

Here comes the Internet of “tolls”

Om • Om Malik • June 24, 2025

Technology•AI•BusinessModels•InternetEconomy•MediaInnovation•Essays

The decades-old doctrine of “Web traffic in exchange for permission to crawl” is over, writes Fred Vogelstein in his latest feature for our newsletter, Crazy Stupid Tech, and as a result, the Internet in the age of AI will be filled with much-needed “tolls.” This change has come quickly.

“Google essentially invented the business of crawling in exchange for monetizable traffic a generation ago with Adwords,” writes Fred. “It remains the source of its dominance today. And it has been an essential fuel for the growth of the $16 trillion global internet economy.”
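In practice, the "permission to crawl" side of that bargain is expressed in a site's robots.txt file. Here is a minimal sketch using Python's standard urllib.robotparser, assuming a hypothetical publisher that opts out of a few AI crawlers while still admitting search crawlers. GPTBot (OpenAI), CCBot (Common Crawl) and Google-Extended (Google's opt-out token for Gemini training) are real user-agent tokens; the policy and the URL are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block three AI-related crawler tokens,
# allow everyone else (including ordinary search crawlers).
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    url = "https://publisher.example/news/story.html"  # placeholder URL
    for agent in ["Googlebot/2.1", "GPTBot/1.0", "CCBot/2.0", "Google-Extended"]:
        print(agent, "allowed" if rules.can_fetch(agent, url) else "blocked")
```

The catch is that robots.txt is a request, not an enforcement mechanism: a crawler that ignores it is not stopped, and a publisher that blocks a crawler gets nothing in return. That gap is what the proposed "tolls" aim to fill.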

The writing’s been on the wall since ChatGPT launched, but nobody wanted to read it. We’re watching the great traffic heist in real-time. “Not only are more and more searches going through AI chatbots that generate zero traffic for publishers,” Fred writes, “Google itself is now sending publishers less traffic. Instead, Google is increasingly choosing to use its own AI product Gemini to respond to queries as a way of competing with the chatbots.”

In other words, AI chatbots are swallowing searches whole, while Google is playing both sides with Gemini. Don’t ignore the fact that this is a big challenge to how Google makes money. But it has deep pockets. Established media is living on fumes. One man’s crisis is another man’s opportunity.

Tollbit is the first to capitalize on this. But as Fred points out, Cloudflare and Matthew Prince are cooking up something new that will give Tollbit some competition and a boost.
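What a toll might look like at the HTTP level can be sketched hypothetically: a server that answers people and search crawlers normally, but quotes a price to known AI crawlers unless they present proof of payment. Everything here (the crawler list, the price, the X-Crawl-Price-USD header and the token check) is an assumption for illustration, not how Tollbit or Cloudflare actually implement their products; only the 402 Payment Required status code is standard HTTP.

```python
from dataclasses import dataclass
from http import HTTPStatus
from typing import Dict, Optional, Tuple

# Hypothetical policy: which crawler tokens must pay, and how much per page.
AI_CRAWLERS = {"gptbot", "ccbot"}
PRICE_PER_PAGE_USD = "0.002"


@dataclass
class CrawlRequest:
    user_agent: str
    payment_token: Optional[str] = None  # hypothetical proof of payment


def is_paid(token: Optional[str]) -> bool:
    """Stand-in for a real payment check; a fixed demo token is assumed."""
    return token == "demo-paid-token"


def toll_gate(req: CrawlRequest) -> Tuple[HTTPStatus, Dict[str, str]]:
    """Serve humans and search crawlers; quote a price to unpaid AI crawlers."""
    # Simplistic matching on the product token before the first "/".
    agent = req.user_agent.split("/")[0].strip().lower()
    if agent not in AI_CRAWLERS or is_paid(req.payment_token):
        return HTTPStatus.OK, {}
    # 402 Payment Required is a standard but long-reserved status code;
    # the price header name is invented for this sketch.
    return HTTPStatus.PAYMENT_REQUIRED, {"X-Crawl-Price-USD": PRICE_PER_PAGE_USD}


if __name__ == "__main__":
    for req in [
        CrawlRequest("Mozilla/5.0"),
        CrawlRequest("GPTBot/1.0"),
        CrawlRequest("GPTBot/1.0", payment_token="demo-paid-token"),
    ]:
        status, headers = toll_gate(req)
        print(req.user_agent, int(status), headers)
```

The design point the sketch captures is the shift Fred describes: access stops being a free swap for traffic and becomes a priced transaction, with the crawler deciding whether a page is worth paying for.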

I have been talking about this for a very long time, but the establishment media is always the last to realize their own existential threats. Just as they were slow to recognize the emergence of blogs, social media, and how Facebook was a chimera, they have been slow to realize that the old “destination internet” as a behavioral construct is over.

The addiction to traffic and impressions-based advertising has been an Achilles’ heel of the media establishment. It is hard for them to look at the world through the lens of engagement. The rise of “chat-based” informational interfaces is yet another victory for engagement-trumps-all doctrine.

To get a better understanding of this, feel free to dig into the archives of our Crazy Stupid Tech newsletter. If you like what you read, please subscribe. It is free. But before you do all that, read Fred’s piece. It is very good.

Copyright

Getty drops key copyright claims against Stability AI, but UK lawsuit continues

TechCrunch • Rebecca Bellan • June 25, 2025

Technology•AI•Copyright•LegalIssues•ArtificialIntelligence

Getty Images dropped its primary claims of copyright infringement against Stability AI on Wednesday at London’s High Court, narrowing one of the most closely watched legal fights over how AI companies use copyrighted content to train their models.

The development marks a shift in the litigation landscape surrounding AI and intellectual property rights, as Getty concentrates on other aspects of the case. The lawsuit initially targeted Stability AI for allegedly using millions of Getty’s copyrighted images without permission to train its AI image-generation model, Stable Diffusion.

Despite dropping key copyright claims, Getty’s lawsuit against Stability AI in the UK is continuing on other grounds. The exact remaining claims have not been detailed, but the move suggests Getty is refining its legal strategy to focus on areas where it believes it has stronger chances of success.

The case is part of a broader series of legal challenges by artists, photographers, and copyright holders worldwide, highlighting the growing tensions between the AI industry’s rapid advancement and existing copyright frameworks. As AI models increasingly rely on vast datasets that include copyrighted works, determining the legality of such training methods is becoming a critical issue in courts.

Getty Images’ decision to drop some claims against Stability AI may influence other ongoing or future lawsuits related to AI training and copyright, potentially impacting how AI companies approach content licensing and data sourcing.

Two Courts Rule On Generative AI and Fair Use - One Gets It Right

EFF • Tori Noble • June 26, 2025

Technology•AI•Copyright•FairUse•GenerativeAI

Things are speeding up in generative AI legal cases, with two judicial opinions just out on an issue that will shape the future of generative AI: whether training gen-AI models on copyrighted works is fair use. One gets it spot on; the other, not so much, but fortunately in a way that future courts can and should discount.

The core question in both cases was whether using copyrighted works to train Large Language Models (LLMs) used in AI chatbots is a lawful fair use. Under the US Copyright Act, answering that question requires courts to consider:

1) whether the use was transformative;

2) the nature of the works (Are they more creative than factual? Long since published?);

3) how much of the original was used; and

4) the harm to the market for the original work.

In both cases, the judges focused on factors (1) and (4).

In Bartz v. Anthropic, three authors sued Anthropic for using their books to train its Claude chatbot. In his order deciding parts of the case, Judge William Alsup confirmed what EFF has said for years: fair use protects the use of copyrighted works for training because, among other things, training gen-AI is “transformative—spectacularly so” and any alleged harm to the market for the original is pure speculation. Just as copying books or images to create search engines is fair, the court held, copying books to create a new, “transformative” LLM and related technologies is also protected:

"[U]sing copyrighted works to train LLMs to generate new text was quintessentially transformative. Like any reader aspiring to be a writer, Anthropic’s LLMs trained upon works not to race ahead and replicate or supplant them—but to turn a hard corner and create something different. If this training process reasonably required making copies within the LLM or otherwise, those copies were engaged in a transformative use."

Importantly, Bartz rejected the copyright holders’ attempts to claim that any model capable of generating new written material that might compete with existing works by emulating their “sweeping themes,” “substantive points,” or “grammar, composition, and style” was an infringement machine. As the court rightly recognized, building gen-AI models that create new works is beyond “anything that any copyright owner rightly could expect to control.”

There’s a lot more to like about the Bartz ruling, but just as we were digesting it Kadrey v. Meta Platforms came out. Sadly, this decision bungles the fair use analysis.

Kadrey is another suit by authors against the developer of an AI model, in this case Meta’s ‘Llama’ chatbot. The authors in Kadrey asked the court to rule that fair use did not apply.

Much of the Kadrey ruling by Judge Vince Chhabria is dicta—meaning, the opinion spends many paragraphs on what it thinks could justify ruling in favor of the author plaintiffs, if only they had managed to present different facts (rather than pure speculation). The court then rules in Meta’s favor because the plaintiffs only offered speculation.

But it makes a number of errors along the way to the right outcome. At the top, the ruling broadly proclaims that training AI without buying a license to use each and every piece of copyrighted training material will be “illegal” in “most cases.” The court asserted that fair use usually won’t apply to AI training uses even though training is a “highly transformative” process, because of hypothetical “market dilution” scenarios where competition from AI-generated works could reduce the value of the books used to train the AI model.

…

Training AI is Fair Use, Product Protection Versus LLM Liability, Piracy and Competition

Stratechery • Ben Thompson • June 25, 2025

Technology•AI•Copyright•FairUse•IntellectualProperty

The first big AI copyright decision has come down, and it's a big win for AI. It also provides a blueprint for how Congress can do more.

From the Wall Street Journal:

A federal judge found that the startup Anthropic’s use of books to train its artificial-intelligence models was legal in some circumstances, a ruling that could have broad implications for AI and intellectual property. Judge William Alsup of the Northern District of California ruled Monday that Anthropic’s use of copyrighted books for AI model training was legal under U.S. copyright law if it had purchased those books. The ruling is set to help shape future litigation against AI companies, legal experts said.

“The court treats the AI as akin to a human learning from copyrighted material,” said Christina Frohock, professor of legal writing at the University of Miami School of Law. “It’s fair use if you and I pick up a book and read it and develop our own thoughts,” and the court made the same conclusion about AI systems, she said.

Copyright holders including musicians, filmmakers, authors, and news outlets have sued an array of companies including OpenAI, Meta Platforms, Midjourney, and others over allegedly unauthorized use of their copyrighted material for AI model training. The ruling doesn’t apply to more than seven million books that Anthropic obtained through “pirated” means, the judge said. Anthropic used purchased and pirated books to create a central library that it drew from to train its Claude AI models. The company will face another trial over its use of pirated works, which doesn’t amount to “fair use” even if not all of those books were used for training. The ruling also doesn’t address the legal question of whether the answers provided by AI models violate copyright law.

This is an extremely important decision and one that I think gets the most important factors correct (by which, of course, I mean that Judge Alsup agrees with me 😄). What is critically important for AI is that training is considered fair use, a finding I have argued for ever since these cases started appearing a few years ago.

It’s not at all clear to me that there is anything illegal about using copyrighted material for model training, as it very well may fall under the fair use exception to copyright. AI generative models are transformative in my mind (i.e. the purpose and character of use is different); AI-generated art, for example, is not some sort of collage; it’s a uniquely new image that is pulled out of (literal) noise. Sure, you can get oddities like a facsimile of the Getty Images watermark if you prompt the model to include said watermark, but that is a function of the user, not the tool.

The amount and substantiality question is more interesting: models take the entire image, but they don’t “copy” any of it into the final work; what matters more, the taking or the copying? The effect on the potential market, meanwhile, is the most challenging question: does the fact that ingesting an artist’s work means that said artist will face a more challenging market for future commissions bear on the fact that there is no actual reproduction in terms of the final product?…

AI

Why Data is More Valuable than Code

Tomtunguz • June 24, 2025

Technology•AI•Software•DataManagement•Workflows

In “Data Rules Everything Around Me,” Matt Slotnick wrote about the difference between SaaS & AI apps. A typical SaaS app has a workflow layer, a middleware/connectivity layer, & a data layer/database. So does an AI app.

AI makes writing frontends trivial, so in the three-layer cake of workflow software the data matters much more.

The big differences between an AI app & a SaaS app lie within the ganache of the middle layer. In SaaS applications, coded business rules determine each step a lead follows from creation to close.

In AI apps, a non-deterministic AI model decides the steps using context: relevant information about the lead that the AI is querying from other sources.

The better the data, the better the workflow.

The context is the most valuable component because it ultimately changes the workflow. Models are relatively similar in performance.

For example, an inbound email comes into a customer support desk: “Was I double charged this month?” An agentic workflow would query the billing system, the contract system, & the email drafting tool to draft an email to the customer with distinct language for that persona. This only works if the enterprise’s data is well structured.
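To make the contrast concrete, here is a minimal Python sketch of the double-charge example. Everything in it is hypothetical. `BILLING` and `CONTRACTS` are in-memory stand-ins for real billing & contract systems. `draft_reply` is a plain function standing in for the model; in a real agentic workflow, a non-deterministic LLM would decide which systems to query. The sketch only shows the shape of the two approaches: the SaaS path routes on a coded rule, while the agentic path's output depends on the context it retrieves.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical stand-ins for the enterprise systems an agent would query.
BILLING = {
    "acct-42": [("2025-06-01", 49.0), ("2025-06-01", 49.0)],
    "acct-7": [("2025-06-01", 19.0)],
}
CONTRACTS = {
    "acct-42": {"plan": "Pro", "persona": "small-business owner"},
    "acct-7": {"plan": "Basic", "persona": "individual"},
}


def saas_route(ticket: str) -> str:
    """SaaS style: a coded business rule decides the next step."""
    return "billing-queue" if "charged" in ticket.lower() else "general-queue"


@dataclass
class Context:
    charges: list = field(default_factory=list)
    contract: dict = field(default_factory=dict)


def gather_context(account: str) -> Context:
    """Query the (stubbed) billing and contract systems for this customer."""
    return Context(BILLING.get(account, []), CONTRACTS.get(account, {}))


def draft_reply(ctx: Context) -> str:
    """Stand-in for the model: the draft is shaped by the retrieved context."""
    counts = Counter(ctx.charges)  # identical (date, amount) pairs count as duplicates
    dupes = [(day, amt) for (day, amt), n in counts.items() if n > 1]
    plan = ctx.contract.get("plan", "unknown")
    persona = ctx.contract.get("persona", "customer")
    if dupes:
        day, amt = dupes[0]
        return (f"[tone: {persona}] You were charged ${amt:.2f} twice on {day} "
                f"for your {plan} plan; we are refunding the duplicate.")
    return f"[tone: {persona}] We found a single charge this month for your {plan} plan."


ticket = "Was I double charged this month?"
print(saas_route(ticket))
print(draft_reply(gather_context("acct-42")))
print(draft_reply(gather_context("acct-7")))
```

Both customers send the same ticket and hit the same rule. Only the context-driven path produces different, account-specific answers, and it works only as well as the underlying data is structured.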

Enterprises will be shy about sharing the context with their vendors because of how much value it provides. They may start to structure it & assign a department to manage it because the better its availability, the more effective the agentic systems will be.

Data architecture may become a competitive advantage, & the future battleground for software companies will be access to that context. The fight has already begun.

ElevenLabs releases a standalone voice generation app

Techcrunch • Ivan Mehta • June 24, 2025

Technology•Mobile•VoiceAI•TextToSpeech•Innovation•AI

ElevenLabs, a leading voice AI company, has launched a standalone mobile app for iOS and Android, enabling users to generate voice clips from text directly on their devices. Previously, users had to rely on ElevenLabs' web application for such tasks. The new mobile app allows users to input text and select a suitable voice to produce audio clips on the go. The free plan offers approximately 10 minutes of audio generation, with options to choose different models balancing cost and quality. Credits are shared between the web and mobile apps. The app integrates access to ElevenLabs' latest text-to-speech models, including v3 alpha, which lets users control expression using tags.

Jack McDermott, ElevenLabs' mobile growth lead, noted the growing demand from creators who previously used mobile web browsers to generate voice samples for videos in apps like CapCut, Instagram, or InShot. Recognizing this need, ElevenLabs developed a native mobile experience to enhance usability and performance. This move positions ElevenLabs to compete with other voice cloning and generation tools such as Speechify and Captions.

The mobile app is ElevenLabs' second consumer-facing application, following the release of the Reader app last year, which allows users to listen to articles, blogs, PDFs, and e-books on the go. Earlier this year, ElevenLabs also opened up the Reader app for publishers to distribute audiobooks. Looking ahead, the company plans to introduce additional features, including speech-to-text capabilities and a conversational AI agent tool, and aims to incorporate MCP-powered experiences like 11.ai into the app.

Begun, the AI Browser Wars Have

Spyglass • M.G. Siegler • June 25, 2025

Technology•AI•Web•Browsers•Innovation

About a week ago, I bit the bullet. Reading the writing very clearly on the wall, I abandoned the Arc browser and jumped ship over to Dia, the new AI-first web browser built by The Browser Company. It took a while, but now I think I'm sold. I'm not sure that Dia itself will be the browser of the future, but I'm more certain than ever that an AI-centric browser will be.

At first, I found Dia to be a bit too simple for my taste. Because Arc had such a plethora of power-user features, many of which took a lot of training to get used to, it was hard to "downgrade". Of course, that's also the exact reason why The Browser Company shifted the focus to Dia. While Arc had a dedicated fan base, it was also clearly never going to go fully mainstream. They had made a better browser for web power users, but most people were not web power users – at least not in the sense that they would take the time to learn new tricks when Chrome was likely good enough for them. It was a tricky spot for the company to be in; they had sort of painted themselves into a dreaded middle ground.

So they sort of went down a third path: letting Arc live on, but only with updates to its underlying engine (Chromium). It's more or less in "maintenance mode" – something which they'd love to outsource, it seems. But they also can't fully open source the browser because they're now using some of the infrastructure to build Dia. Again, the writing is on the wall for what happens to Arc one way or another now. And at first this was extremely annoying to me – again, I had put so much time and muscle memory into using their browser. But I also get it.

And now I can happily say that I get Dia too. The simplicity is clearly the strength right now. It's sort of like if Chrome was built from the ground up to be AI-first. And I think a lot of people will understand that. Certainly more than understood Arc.

Front and center, the fact that Dia looks like Chrome helps a lot. To be fair, most browsers now look like Chrome. One of the ones that didn't was Arc, notably because of its side-tabs. I miss the side-tabs when it comes to (tab) information density, and it seems like The Browser Company is working on ways to perhaps bring them back as an option within Dia. But the default should clearly be what people know: top tabs.

Because what you're asking people to do here is not re-learn the fundamentals of web browsing, you're asking them to take what they already know and augment it with AI. The fact that a lot of what is working in these early days of AI sort of looks like paradigms we already know doesn't seem like an accident. From ChatGPT – which looks like, yes, chat – on down, what's old is new again, at least for now. And so Dia is essentially Chrome + AI.

But wait, doesn't Chrome itself already have AI baked in? Yes, Google is testing natively integrating Gemini into the browser in the beta channels of Chrome itself right now. I'm pretty surprised by this given all the antitrust action currently surrounding the company – and Chrome itself. But it highlights just how seriously Google is taking the AI threat – and undoubtedly how much they believe in the promise of the technology. That said, Gemini in Chrome is comically clunky right now. It feels tacked on because it's nearly literally tacked on. It's this weird overlay thing that feels far more like a third-party extension than a part of the browser. Worse, it's slow. It's not a good product at the moment. But again, it's in beta.

Then again, Dia is also in beta. And it is a good product right now. They're using Google's strength against them. Because Chrome has three billion-plus users, they can't fundamentally change the browser overnight with an update. They have to tack things on and hope they don't annoy too many current users. With Dia, The Browser Company has a clean slate to do as they wish. The fact that they seemingly have better product designers helps too.

It's interesting, you could basically just use Dia as a slightly cleaner-looking version of Chrome – there's noticeably less toolbar cruft – and never touch the AI elements, and I assume plenty of people would be perfectly happy with that. But, of course, no one is going to do that. Nor should The Browser Company want that. If Dia is going to succeed, it will be because of AI.

Goldman Sachs Rolls Out AI Assistant Firmwide to Boost Employee Productivity

Medium • ODSC - Open Data Science • June 25, 2025

Technology•AI•GenerativeAI•FinancialServices•ProductivityTools

Goldman Sachs has expanded its AI efforts with the firmwide rollout of its proprietary generative AI tool, the GS AI Assistant. According to an internal memo obtained by Reuters, the assistant is now available to all employees following successful use by approximately 10,000 staff members.

The memo, authored by Chief Information Officer Marco Argenti, outlines how the tool will support a range of everyday tasks. These include summarizing complex documents, drafting initial content, and performing data analysis — areas that are increasingly being targeted by financial institutions leveraging generative AI to streamline internal operations.

With this firmwide deployment, Goldman Sachs joins a growing number of major financial institutions integrating generative AI into their workflows. Citigroup, for instance, uses tools such as Citi Assist to navigate internal policy documentation and Citi Stylus to handle summarization and document comparisons.

Morgan Stanley employs a chatbot to assist its financial advisors with client communications, while Bank of America’s widely known virtual assistant Erica supports retail clients with routine banking transactions.

By focusing the GS AI Assistant on employee productivity and internal knowledge management, Goldman Sachs is taking a targeted approach that aligns with broader trends across the industry.

The memo notes that the tool has already been adopted by thousands of employees prior to its official launch. This internal usage suggests a high level of confidence in the assistant’s capabilities and reliability.

“Our AI assistant is designed to empower employees by reducing the time spent on repetitive tasks,” Argenti reportedly stated. “From drafting reports to analyzing large datasets, the tool is meant to act as a force multiplier.”

Goldman Sachs has not disclosed whether the assistant will be made available externally or expanded beyond its current functionalities. However, the launch marks a key milestone in the firm’s AI adoption strategy, reflecting a broader trend in the financial sector where automation and generative AI are reshaping traditional workflows.

As generative AI continues to evolve, its role within banking institutions is expanding from consumer-facing services to internal productivity tools. Goldman Sachs’ company-wide rollout of the GS AI Assistant highlights the firm’s commitment to using advanced technologies to optimize employee output and maintain a competitive edge in a rapidly digitizing industry.

Anthropic just made every Claude user a no-code app developer

Venturebeat • Michael Nuñez • June 25, 2025

Technology•AI•NoCode•AppDevelopment•Innovation

Anthropic has enhanced its Claude AI platform by turning every user into a no-code app developer. This advancement allows users to create applications without writing code, leveraging the AI’s capabilities to generate functional app components and workflows based on natural language inputs.

Since its launch, Claude has amassed 500 million user-created artifacts, showing rapid adoption and engagement with this new interactive, no-code approach. This milestone signifies a heavy focus on democratizing app development, especially for those who might not have traditional programming skills but want to build solutions tailored to their needs.

This move intensifies the rivalry between AI-powered development tools, particularly with OpenAI’s Canvas feature, which similarly enables users to develop applications and interfaces without coding. As AI startups compete for dominance in empowering users and developers alike, these no-code platforms are becoming crucial battlegrounds.

Anthropic's strategy with Claude reflects broader trends in the AI industry, where enabling more accessible and efficient app development is a key goal. By integrating no-code capabilities, Anthropic taps into a growing market of creators who want to rapidly prototype and deploy applications seamlessly.

The platform's expansion could also push developers to rethink traditional software creation processes, potentially reducing dependency on extensive coding skill sets and accelerating innovation cycles. This capability may have implications for businesses, enterprises, and individual creators, providing new pathways for digital transformation and custom app building.

The Multimodal Lake House: Partnering with Lance

Tomtunguz • June 23, 2025

Technology•AI•MultimodalAI•DataPipelines•LanceDB

Remember when you took a family photo & Ghibli-styled it?

Or that vibe coding session, when you pasted a screenshot of the browser so the AI could help you debug some JavaScript?

Today, we expect AI to be able to hear, see, & read. This is why multimodal is the future of AI.

Multimodal data means using text, images, video, sound, even three-dimensional shapes with AI.

These are magical user experiences. But they aren’t easy to build. Data pipelines must be built to manage larger files. Embeddings need to be extracted from these unstructured files in ways that don’t explode compute costs.

Multimodal data is orders of magnitude larger than text: the average PDF is 10x larger than a text file & a YouTube video is roughly a million times larger.

Plus, multimodal data doesn’t affect just one part of the data pipeline: engineers must process the data at each step of the AI stack, from model training to real-time serving & downstream analysis at petabyte scale.

We kept hearing about these problems from builders & in the same breath, about a company that solves them.

Founded by Chang She, co-creator of the pandas library, & Lei Xu, core contributor to HDFS, the Hadoop Distributed File System, LanceDB has a tremendous heritage within the data ecosystem.

RunwayML, Midjourney, WorldLabs, ByteDance, UBS, Harvey, & Hex use Lance. We admire the technology so much, we are using it internally at Theory as part of our AI stack & we’re excited to partner with Chang & Lei to bring multimodal AI to builders & users everywhere.

OpenAI's warning over China's "AI Tigers"

Youtube • CNBC Television • June 27, 2025

Technology•AI•ArtificialIntelligence•China•Ethics

OpenAI has issued a warning regarding the rapid rise of China’s artificial intelligence capabilities, referring to key players in the Chinese AI sector as "AI Tigers." These entities are advancing AI technologies aggressively, posing both competitive and ethical challenges on the global stage.

The concern centers around how China’s AI development might outpace international regulatory frameworks, potentially leading to unforeseen risks in areas such as data privacy, national security, and responsible AI deployment. OpenAI is advocating for collaborative global efforts to establish stronger standards and safeguards around AI innovation to prevent misuse and ensure transparency.

China’s burgeoning AI ecosystem includes leading technology companies and state-backed projects that are pushing the envelope on AI applications ranging from facial recognition to autonomous systems. This momentum signals a significant shift in AI leadership dynamics, heightening the urgency for international dialogue and oversight to manage the transformative impact AI can have on societies worldwide.

Venture Capital

BlackRock makes push to make private assets available to 401(k) accounts

Youtube • CNBC Television • June 26, 2025

Finance•Investment•PrivateAssets•RetirementPlans•Diversification•Venture Capital

BlackRock, a leading global asset manager, is actively working to integrate private assets into 401(k) retirement plans, aiming to enhance portfolio diversification and potentially improve returns for plan participants. Private assets, including private equity, credit, and real estate, have traditionally been accessible primarily to institutional investors and high-net-worth individuals due to their illiquidity and higher fees. However, BlackRock's initiative seeks to democratize access to these investment opportunities within workplace retirement plans.

Larry Fink, BlackRock's CEO, emphasized the benefits of blending public and private markets, stating that such a combination has been a "great investment" for many institutions. He highlighted the need for greater portfolio diversification, noting that over the past two decades, the number of publicly traded companies has declined, while firms backed by private equity have grown. In the U.S., approximately 87% of companies with annual revenues exceeding $100 million are now private, according to Partners Group, a Swiss-based global private equity firm. (nbcwashington.com)

Despite the potential advantages, integrating private assets into 401(k) plans presents challenges. Plan sponsors have expressed concerns regarding the complexity, lack of transparency, and higher fees associated with private equity investments. Additionally, the illiquid nature of these assets can pose difficulties for participants seeking to access their funds. (cnbc.com)

To address these challenges, BlackRock is collaborating with other major asset managers and plan providers to develop solutions that incorporate private assets into retirement plans. The goal is to create diversified portfolios that include private market investments, thereby enhancing potential returns and providing greater stability for retirement savers. This collaborative effort reflects a broader industry trend toward expanding access to private markets within the $12.5 trillion workplace retirement plan market. (blackrock.com)

In summary, BlackRock's initiative to include private assets in 401(k) plans represents a significant shift in retirement investment strategies. While it offers the promise of improved diversification and returns, it also necessitates careful consideration of the associated risks and challenges to ensure the benefits are realized for all participants.

Inside Collaborative Fund’s 4x DPI Fund I

Collabfund • June 25, 2025

Business•Startups•VentureCapital•FundPerformance•InvestmentReturns•Venture Capital

Lately, it feels like every corner of my internet bubble is talking about venture returns—Carta charts one day, leaked DPI tables the next. I’ve seen posts on lagging vintages, mega-fund bloat, the “Venture Arrogance Score,” the rising bar for 99th‑percentile exits, and the PE-ification of VC.

But for all that noise, I haven’t seen much that actually walks through how returns metrics evolve over time in an early-stage fund. That’s a crucial gap. Many analyses focus on funds that are still mid-vintage, where paper markups can tell an incomplete or even misleading story.

So I pulled the numbers on Collaborative’s first fund, a 2011 vintage that’s now nearly fully realized. It offers a concrete look at how venture performance can unfold across a fund’s full lifecycle.

Fund 1 was small: $8M deployed across 50 investments. Check sizes ranged from ~$10K to ~$400K, averaging around $100K for both initial and follow-on rounds. It was US-focused, sector-agnostic, and mostly pre-seed through Series A.

Without further ado, here’s how the fund performed over its lifetime across a few core metrics.

Contributions wrapped up by year 4, which is typical for early-stage funds. Distributions didn’t start until year 4 and peaked around year 10.

Returns metrics takeaways:

Strong overall performance: The fund achieved a 4.1x net DPI—solidly top-decile. For context, PitchBook pegs 3.0x as the 90th percentile for funds in the 2011 vintage.

The patience premium: At year 10, DPI was 0.9x. There’s a lot of discussion right now around the lack of venture liquidity. 10 years in, this fund could’ve been a talking point. But then, in year 11, DPI jumped to 3.8x. Liquidity might be slow, but value can still be building.

The IRR roller coaster: Net IRR hit 18% in year 6 (off paper gains), sagged in the middle years, then popped to 22% in year 11 after a major exit. IRR can be hurt by delays, even when final outcomes are strong.

Some juice still left: Even after 14 years, the fund sits at 4.1x net DPI and 4.6x net TVPI. That means roughly 0.5x of upside is unrealized. That tees up the classic end‑of‑life question: seek to harvest the tail or ride it out?

Shallow J-curve: Early markups lifted TVPI above 1x by year 2, keeping LPs above water on paper even before distributions began.
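The three metrics in these takeaways have standard definitions:

- DPI is cash distributed divided by paid-in capital.
- TVPI adds the remaining unrealized value.
- IRR is the annual discount rate at which the fund's cash flows have a net present value of zero.

The following Python sketch is illustrative only. The cash flows are hypothetical single-investment examples, not Collaborative's actual flows. The bisection solver is one simple way to compute IRR, not the method any particular fund administrator uses. It shows why a delayed exit drags on IRR even when the multiple is unchanged.

```python
def dpi(distributions: float, paid_in: float) -> float:
    """Distributions to paid-in: cash actually returned per dollar invested."""
    return distributions / paid_in


def tvpi(distributions: float, residual_value: float, paid_in: float) -> float:
    """Total value to paid-in: realized plus unrealized value per dollar invested."""
    return (distributions + residual_value) / paid_in


def npv(rate: float, flows: list[float]) -> float:
    """Net present value of annual flows, where flows[t] occurs at the end of year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(flows))


def irr(flows: list[float], lo: float = -0.99, hi: float = 10.0) -> float:
    """IRR by bisection; assumes a single sign change, so NPV falls as the rate rises."""
    f_lo = npv(lo, flows)
    for _ in range(200):
        mid = (lo + hi) / 2
        f_mid = npv(mid, flows)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid  # root lies above mid
        else:
            hi = mid  # root lies at or below mid
    return (lo + hi) / 2


# The post's reported net multiples imply roughly 0.5x of paid-in is still unrealized.
print(f"unrealized (TVPI - DPI): {4.6 - 4.1:.1f}x")

# Hypothetical: $1 in at year 0, $4 back at year 7 versus year 11. Same 4x multiple.
early_exit = [-1.0] + [0.0] * 6 + [4.0]
late_exit = [-1.0] + [0.0] * 10 + [4.0]
print(f"DPI either way: {dpi(4.0, 1.0):.1f}x")
print(f"IRR, exit in year 7:  {irr(early_exit):.1%}")
print(f"IRR, exit in year 11: {irr(late_exit):.1%}")
```

The same 4x comes out to roughly 21.9% IRR with a year-7 exit and roughly 13.4% with a year-11 exit. That gap is the "patience premium" in reverse.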

Returns were highly concentrated. Eight companies—Upstart, Lyft, Scopely, Blue Bottle Coffee, Maker Studios, Gumroad, Reddit, and Kickstarter—drove nearly all distributions.

Together, these accounted for just $0.8M of invested capital but returned $37.6M—an average multiple of 45x. The remaining 42 investments, representing $7.2M, returned only $2.0M (a 0.3x multiple).

Within those eight, outcomes varied widely: one company alone delivered 73% of all cash returned. Adding the next three brought the cumulative share past 90%. Multiples ranged from 1.4x to 115x—illustrating just how concentrated and variable even a “winning” subset can be.
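The concentration figures can be checked with a few lines of arithmetic on the numbers reported in the post (in $M). There is one small wrinkle. Pooling the top eight gives $37.6M / $0.8M = 47x rather than the quoted 45x average. This is presumably because $0.8M is rounded, or because the post averages per-company multiples. The conclusion is unchanged. The gross multiple on deployed capital computed here is also not directly comparable to the 4.1x net DPI, which is measured net of fees and carry.

```python
# Figures as reported in the post, in $M.
top8_in, top8_out = 0.8, 37.6
rest_in, rest_out = 7.2, 2.0

total_in = top8_in + rest_in     # matches the $8M deployed
total_out = top8_out + rest_out  # total cash returned

print(f"top-8 pooled multiple: {top8_out / top8_in:.0f}x")              # 47x
print(f"remaining 42 multiple: {rest_out / rest_in:.2f}x")              # 0.28x
print(f"top-8 share of cash returned: {top8_out / total_out:.1%}")      # 94.9%
print(f"gross multiple on deployed capital: {total_out / total_in:.2f}x")  # 4.95x
```

The 94.9% share is the "~95% of all returns" cited in the portfolio lessons.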

Portfolio lessons:

Power law: Eight companies drove ~95% of all returns. One contributed 73% on its own.

Capital efficiency: Less than $1M into the top eight generated $37.6M back.

Check size ≠ upside: Some of the biggest winners were sub-$100K checks, while some larger bets in the tail went to zero. Limiting ticket size can cap downside without necessarily hampering fund performance.

Winner variance: Even among the winners, multiples ranged from low single digits up to well over 100x, underscoring that not every winner needs to be a moonshot but a few big outliers can matter tremendously.

We believe the biggest risk in early-stage VC isn’t failure. It’s missing (or mis-sizing) the outlier. In Fund I, eight companies drove nearly all distributions. One check alone accounted for more than 70% of DPI. This is the power law at work.

Collaborative’s story now spans 15 years with four early funds in harvest mode. Three rank in PitchBook’s top quartile with two in the top decile by DPI. In each, a small number of companies drove the bulk of returns. Perhaps these will make for future posts.

Until then, I hope this serves as a reminder to take venture performance narratives based on unrealized funds with a grain of salt. Some trends are real and worth watching. But many of the loudest signals may fade or reverse as funds mature. Until they’re fully played out, their stories are still being written.

10 Brutal Truths From Coatue About AI and Who Gets Left Behind: The Great Separation

Saastr • Jason Lemkin • June 27, 2025

Technology•AI•Growth•Investment•Startups•Venture Capital

Leading crossover VC firm Coatue recently put together its highly detailed East Meets West Conference overview of AI, Growth and Tech.

Much of it we already knew, but it’s a very good collection of data and optimism on AI + B2B going forward.

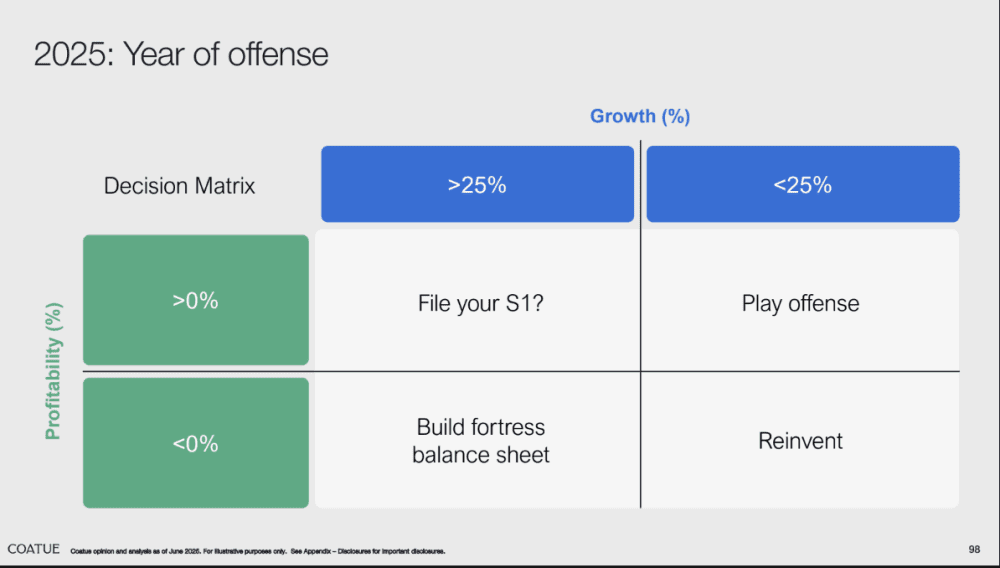

1: 2025 is the “Year of Offense” – Growth Trumps Everything

Coatue’s decision matrix for 2025 is brutally simple and reveals the new rules of the game:

Growing >25% + Profitable? → Play offense aggressively

Growing <25% + Profitable? → File your S-1 immediately

Growing >25% + Unprofitable? → Build fortress balance sheet

Growing <25% + Unprofitable? → Reinvent your business
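Coatue's matrix is simple enough to express as a lookup. The following is a minimal Python sketch. The function name and the fractional `growth_rate` parameter are illustrative choices. The slide as described splits only on >25% and <25%, so treating exactly 25% as the slower bucket is an assumption.

```python
def coatue_playbook(growth_rate: float, profitable: bool) -> str:
    """Map (growth, profitability) to the 2025 playbook quadrant.

    growth_rate is year-over-year revenue growth as a fraction (0.30 = 30%).
    Assumption: exactly 25% is treated as the '<25%' bucket.
    """
    fast = growth_rate > 0.25
    if profitable:
        return "Play offense aggressively" if fast else "File your S-1 immediately"
    return "Build fortress balance sheet" if fast else "Reinvent your business"


print(coatue_playbook(0.40, profitable=True))
print(coatue_playbook(0.10, profitable=False))
```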

Why This Matters: The window for going public with lower growth rates is wide open. Public markets are rewarding ANY profitable growth right now. Companies that hesitate and wait for “perfect metrics” will miss the easiest IPO environment in years.

Action Items: If you’re profitable and growing, don’t wait. If you’re unprofitable, get to breakeven fast or raise enough capital to survive the next wave. There’s no middle ground anymore.

2: Growth Gets 13x Revenue Multiples vs 5x for Slow Growth – The Great Separation

The valuation gap between fast and slow growers has never been wider:

>25% Growth Companies: 13x revenue multiples (based on just 8 companies – that’s how rare they are)

<25% Growth Companies: 4-5x revenue multiples (based on 163 companies)

The Scarcity Factor: Only 5% of public software companies are growing >25% today, down from 26% in 2021

Historical Context: We’ve gone from 17% median revenue growth (2021) to just 9% today. High-growth companies aren’t just getting premium valuations – they’re becoming unicorns in public markets.

The Brutal Reality: Growth isn’t just valuable – it’s becoming extinct. If you’re growing fast, you’re literally in the top 5% of all software companies. Public markets are treating you like the rare asset you are.

Action Items: If you’re growing >25%, leverage this scarcity for maximum valuation. If you’re growing <25%, understand you’re competing with 95% of the market for scraps.

3: The AI Coding Boom Generated $1.3B in Net-New ARR in Just 12 Months

The Most Explosive Category Growth in Software History

The AI coding market didn’t just grow – it exploded from roughly $300M to $1.6B ARR in a single year:

Starting point (June 2024): ~$300M ARR (primarily GitHub Copilot)

Today (June 2025): ~$1.6B ARR across the ecosystem

Net new revenue generated: $1.3B ARR in 12 months

Key players driving growth:

AI Co-Pilots: GitHub Copilot, Cursor, Windsurf

Code Enhancement: Augment Code, Cognition, Tabnine

Developer Environments: Replit, Lovable, StackBlitz

Developers are the highest-value users – willing to pay $20-100+ per month per seat

Immediate ROI is measurable – 30%+ productivity gains translate directly to cost savings

Network effects are massive – better AI attracts more developers, creating more training data

Bottom-up adoption – developers choose tools, then companies buy enterprise licenses

The Anthropic Parallel: While AI coding went from $300M to $1.6B ARR, Anthropic went $0→$1B ARR in 21 months, then $1B→$2B in 3 months, then $2B→$3B in just 2 months. Speed of execution in AI is unlike anything we’ve seen.
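The growth figures in this section are easy to sanity-check. The following short Python sketch uses only the approximate numbers quoted above. The monthly rates are simple compound-growth arithmetic, not figures Coatue or Anthropic reported.

```python
# AI coding ARR, in $B, as quoted (approximate).
arr_june_2024 = 0.3
arr_june_2025 = 1.6

net_new = arr_june_2025 - arr_june_2024
yoy_growth = arr_june_2025 / arr_june_2024 - 1
print(f"net new ARR: ${net_new:.1f}B")             # $1.3B
print(f"year-over-year growth: {yoy_growth:.0%}")  # 433%


def monthly_rate(start: float, end: float, months: int) -> float:
    """Constant compound monthly growth rate that turns start into end."""
    return (end / start) ** (1 / months) - 1


# Anthropic milestones as quoted: $1B -> $2B in 3 months, $2B -> $3B in 2 months.
for start, end, months in [(1.0, 2.0, 3), (2.0, 3.0, 2)]:
    print(f"${start:.0f}B -> ${end:.0f}B in {months} months: "
          f"~{monthly_rate(start, end, months):.0%} per month")
```

The implied Anthropic rates come out to roughly 26% and 22% per month, compounding.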

What This Means for Traditional SaaS: Your growth benchmarks are obsolete. While you’re celebrating 100% YoY growth, AI companies are achieving 500%+ growth rates and creating entirely new billion-dollar categories in months.

Action Items: If you’re building dev tools and not AI-first, you’re already obsolete. If you’re in any other SaaS category, study this playbook – AI integration isn’t optional anymore, it’s table stakes.

4: Private Capital is Unlimited for Top Companies – OpenAI’s $40B Raise Bigger Than All 2018-2024 Combined

The Winner-Take-All Capital Market

The concentration of venture funding has reached extreme levels:

OpenAI’s $40B raise in 2025 is larger than the top fundraises from 2018, 2019, 2020, 2021, 2022, 2023, and 2024 combined

Top 10 companies now get 52% of all venture funding (up from 16% in 2015)

Historical context: We’ve never seen this level of capital concentration in venture history

What This Creates: A two-tier market where the best companies can raise unlimited capital and stay private indefinitely, while everyone else fights for the remaining 48% of funding across thousands of companies.

The Feedback Loop: Top companies use unlimited capital to hire the best talent, build better products, and grow faster – creating an insurmountable moat against competitors with limited resources.

Action Items: Either be exceptional enough to access tier-1 capital, or build a capital-efficient business that doesn’t need massive funding. The middle tier is disappearing.

5: Microsoft Hit Peak Headcount While Revenue Grows – AI Drives Real Productivity

The Efficiency Revolution is Here

Microsoft’s transformation proves AI productivity gains are real, not hype:

Peak employment: Microsoft’s headcount peaked and is now declining

Revenue growth continues: While reducing headcount, revenue keeps growing

30% of Microsoft’s code is now generated by AI

AppLovin case study: Revenue per employee more than doubled, from $3.6M to $7.6M, while headcount stayed flat

Why This Matters: We’re entering an era where the best companies will generate more revenue with fewer people. This isn’t just cost-cutting – it’s a fundamental shift in how value is created.

The Competitive Advantage: Companies that embrace AI-driven productivity will have 2x+ the revenue per employee of their competitors, creating massive profit margin advantages.

Action Items: Measure and optimize revenue per employee aggressively. Implement AI tools across all functions, not just engineering. The companies that figure this out first will have unbeatable unit economics.

The AI Agent Chasm

Thomvest • Alex Rohrbach • June 27, 2025

Technology•AI•Agents•LowCode•VentureCapital•Venture Capital

Are we ready to make the jump from prototype to real-world VC workflows?

I. Introduction

Late Monday night. A coordination agent prompts a competitive analysis agent to assess the landscape of cross-border payment platforms. The financial agent runs a market-sizing tool and CAGR calculator to complete the financial model. The coordination agent packages everything into a predefined template, and by 6 a.m., the investment memo hits each partner’s inbox, two hours before the investment committee (IC) meets. Sounds like sci-fi? This is essentially Project Castellana, a prototype multi-agent system built by Alde González to automate memo writing. Other proofs of concept, like Virat Singh’s AI-driven hedge fund, are expanding our concept of the possible.
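The orchestration described above, where a coordinator fans work out to specialist agents and assembles their results into a predefined template, can be sketched in plain Python. This is not Project Castellana's code: every function name, the memo template, and the market figures are hypothetical, and each "agent" is a deterministic stub where a real system would call an LLM and its tools.

```python
def cagr(start: float, end: float, years: float) -> float:
    """CAGR calculator tool: compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# Hypothetical predefined memo template the coordinator fills in.
MEMO_TEMPLATE = (
    "INVESTMENT MEMO: {sector}\n"
    "Competitive landscape: {landscape}\n"
    "Market size today: ${market_now:.1f}B\n"
    "Implied market CAGR: {cagr:.1%}\n"
)


def competitive_analysis_agent(sector: str) -> str:
    # Stub: a real agent would prompt an LLM and call search tools here.
    return f"(placeholder) key players in {sector}"


def financial_agent(market_now: float, market_future: float, years: int) -> dict:
    # Stub market-sizing step, followed by the CAGR tool.
    return {"market_now": market_now, "cagr": cagr(market_now, market_future, years)}


def coordination_agent(sector: str, market_now: float,
                       market_future: float, years: int) -> str:
    """Dispatch to specialist agents, then package results into the template."""
    landscape = competitive_analysis_agent(sector)
    financials = financial_agent(market_now, market_future, years)
    return MEMO_TEMPLATE.format(sector=sector, landscape=landscape, **financials)


# Hypothetical inputs: a $10B market doubling over five years.
memo = coordination_agent("cross-border payments", 10.0, 20.0, 5)
print(memo)
```

Keeping the template separate from the agents mirrors the "predefined template" step: the specialists can change without changing the memo's shape.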

So are we ready to make the jump from prototype to real-world workflows in venture capital?

Nvidia has called 2025 the “year of AI agents.” But in tech, especially among VCs, bold declarations sometimes crumble under closer inspection. Is this another case of overhype? Can a scrappy business user actually spin up an AI agent that delivers real value?

We set out to explore what’s possible with minimal training, all while balancing our full-time roles. Think serious side projects by non-technical users. Can low-code tools meaningfully enhance venture workflows today?

Using a range of platforms, we built agents to research, assess, and synthesize startup prospects, and others to draft interview guides for customer discovery. Along the way, we found what worked (like off-the-shelf templates) and what didn’t (like inconsistent user interfaces and data pipelines).

We discovered that a lot of our workflow can be automated, but also that the baseline had shifted significantly: what we could achieve off-the-shelf with ChatGPT-4o and Deep Research was often sufficient.

Here’s what we did and what we learned.

II. Methodology

We started by clarifying what we meant by an “agent.” For us, an agent is an entity in the virtual world that makes decisions under uncertainty. You can’t just define steps 1 through 10, run them a hundred times, and expect the same outcome every time. Agency is required to navigate ambiguity, which gives rise to non-deterministic outcomes.

Relevance AI frames it well:

“Agentic automation is a new way to solve a large amount of these ‘undefinable’ tasks. Those where a model is able to predict what steps to take and how to connect them in a generalized but highly scalable way.”

In other words, every decision is predicted, not pre-defined.
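The difference between "pre-defined" and "predicted" can be made concrete with a small Python sketch. A fixed pipeline runs steps 1 through N in order every time; an agent loop asks a policy which step to take next. Here a seeded random choice is a hypothetical stand-in for the model's prediction, used only to show that the order is decided at run time; the tool names are illustrative.

```python
import random
from typing import Callable, Optional

State = dict
Step = Callable[[State], State]
Policy = Callable[[State, list], Optional[str]]


def fixed_pipeline(state: State, steps: list) -> State:
    """Deterministic automation: the same steps in the same order, every run."""
    for step in steps:
        state = step(state)
    return state


def agent_loop(state: State, tools: dict, choose_next: Policy,
               max_steps: int = 10) -> State:
    """Agentic automation: at each turn a policy predicts the next tool to call.

    choose_next stands in for an LLM call; returning None means "done".
    """
    trace: list = []
    for _ in range(max_steps):
        name = choose_next({**state, "trace": trace}, list(tools))
        if name is None:
            break
        state = tools[name](state)
        trace.append(name)
    return {**state, "trace": trace}


def make_random_policy(seed: int) -> Policy:
    """Hypothetical stand-in for a model: picks any unused tool, in a seed-dependent order."""
    rng = random.Random(seed)

    def choose_next(state: State, tool_names: list) -> Optional[str]:
        remaining = [t for t in tool_names if t not in state["trace"]]
        return rng.choice(remaining) if remaining else None

    return choose_next


# Hypothetical research steps; each returns a new state with one more note.
tools = {
    "search_news": lambda s: {**s, "notes": s["notes"] + ["news"]},
    "size_market": lambda s: {**s, "notes": s["notes"] + ["market size"]},
    "list_competitors": lambda s: {**s, "notes": s["notes"] + ["competitors"]},
}

start = {"notes": []}
print(fixed_pipeline(start, list(tools.values()))["notes"])
for seed in (1, 2):
    print(agent_loop(start, tools, make_random_policy(seed))["trace"])
```

The fixed pipeline always prints the same order; the agent loop's order may differ from run to run, which is the non-determinism the definition above describes.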

We explored a range of low-code and natural-language platforms, including Relevance AI, Flowise, CrewAI, and a few prompt-based solutions like Octagon’s VC Agents and Wale’s Venture GPT. Overall, we were impressed by what we could achieve with minimal training.

We found it useful to group platforms into three categories:

Prompt-based: Create and manage agents entirely through natural-language instructions. The best example is GPT Builder. Octagon and Wale provide off-the-shelf solutions. Relevance AI offers a natural-language-based builder.

Low-code: Use visual interfaces (e.g., drag-and-drop, flowcharts, block builders) to define logic with minimal coding. Flowise, Relevance AI, and CrewAI offer low-code solutions.

Code: Full developer platforms where you write and control the agent’s logic, memory, integrations, and architecture. LangChain and CrewAI stand out here.

Some platforms, like Relevance AI, fall across multiple categories. You can generate an agent with a natural-language prompt or build it from scratch.

As we used these platforms, we scored them on four dimensions:

Setup ease: How quickly and clearly we could get started, from onboarding to launching the first working agent.

Data integration breadth: How well the platform connects with external data sources and APIs.

Output value: How useful or insightful the agent’s output was.

Output consistency: How reliably the agent produced accurate, repeatable results across similar tasks.
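Output consistency and accuracy can be scored by running the same task several times. Below is a minimal Python sketch, assuming each run returns a short text answer: consistency is the share of runs that match the most common (normalized) answer, and accuracy is the share that match a known-correct answer. The function names and sample outputs are hypothetical, and exact matching after normalization is a deliberately crude choice; free-form research output would need a looser similarity measure.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(text.lower().split())


def consistency(outputs: list) -> float:
    """Share of runs that agree with the most common answer."""
    if not outputs:
        raise ValueError("need at least one run")
    counts = Counter(normalize(o) for o in outputs)
    return counts.most_common(1)[0][1] / len(outputs)


def accuracy(outputs: list, expected: str) -> float:
    """Share of runs that match a known-correct answer."""
    if not outputs:
        raise ValueError("need at least one run")
    target = normalize(expected)
    return sum(normalize(o) == target for o in outputs) / len(outputs)


# Hypothetical outputs from four runs of the same "latest round" lookup.
runs = ["Series A, $12M", "series a, $12M", "Series A,  $12M", "Seed, $3M"]
print(consistency(runs), accuracy(runs, "Series A, $12M"))
```

Separating the two scores matters: an agent can be perfectly consistent and still consistently wrong.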

But the real question we kept coming back to was simple: Would we use this in our day-to-day?

In some cases, the answer was yes. For example, we found ourselves returning to Wale’s Venture GPT for quick, lightweight research. It was fast, consistent, and good in a pinch. Other tools offered more flexibility or depth but didn’t necessarily end up in our workflow.

III. Takeaways

These are our five takeaways after testing a range of platforms and building agent workflows. Some patterns emerged across platforms, and while we saw real potential, we also encountered consistent friction.

1. There’s a chasm between prompt-based and low-code workflows.

Our biggest learning: the jump from writing prompts to building functional workflows is wider than it looks. We could quickly spin up prompt-based agents to research or summarize a topic, but the results were thin. Getting to something genuinely useful took a lot more work. Despite what our Twitter feeds may suggest, the gap between “demo” and “daily use” is still significant.

For example, even a relatively simple flow, pulling values from a spreadsheet of investors, required configuring a document store, converting the data to vector embeddings, managing API credentials, and linking everything to a chat model. To do this confidently, you need a solid understanding of inputs, outputs, and chaining logic. That’s intuitive for someone with a computer science background, but far less so for a generalist. This may be a “no-code” solution, but you still need to think like a developer and have a mental model to reason through the system being built.
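The pieces of that flow (document store, embeddings, retrieval, prompt for a chat model) fit in a short Python sketch, which may help build the mental model. This is a toy under stated assumptions: the spreadsheet rows and column names are hypothetical, the "embedding" is a bag-of-words count rather than a real embedding model, and the final call to a chat model (where API credentials come in) is left out.

```python
import csv
import io
import math
from collections import Counter

# Hypothetical spreadsheet of investors; column names are illustrative.
SHEET = """name,stage,focus
Acme Ventures,Seed,fintech payments
Birch Capital,Series A,climate software
Cobalt Partners,Seed,developer tools
"""


def embed(text: str) -> Counter:
    """Toy embedding: term counts. A real flow would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_store(sheet: str) -> list:
    """Document store: each row becomes a record plus its vector."""
    rows = csv.DictReader(io.StringIO(sheet))
    return [(row, embed(" ".join(row.values()))) for row in rows]


def retrieve(store: list, query: str, k: int = 1) -> list:
    """Return the k rows most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [row for row, _ in ranked[:k]]


def build_prompt(question: str, rows: list) -> str:
    """Chaining step: retrieved rows become context for the chat model."""
    context = "\n".join(f"{r['name']} ({r['stage']}): {r['focus']}" for r in rows)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = build_store(SHEET)
top = retrieve(store, "seed fintech")
print(build_prompt("Which seed investors focus on fintech?", top))
```

Each function corresponds to one box a low-code canvas would ask you to wire up, which is why the same reasoning about inputs and outputs is needed either way.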

2. It’s hard to beat the new baseline, an LLM.

We were often impressed by what these agents could do. But we also found ourselves reaching for ChatGPT-4o and Deep Research again and again. The truth is, those tools have become incredibly fast, reliable, and easy to use. For many research tasks, a well-structured GPT prompt gave us everything we needed in minutes. That doesn’t mean the agent platforms weren’t valuable — they offered flexibility, depth, and automation potential — but for one-off analysis and quick synthesis, an LLM often set the bar.

This distinction became apparent when we thought about research agents versus action agents. We defined research agents as agents designed to read, synthesize, and explain — exactly what ChatGPT excels at today. Alternatively, we defined action agents as agents built to do something: send an email, update a CRM, trigger a workflow, or run a process in the background. That’s where platform-based agents shine. They integrate with tools, run on schedules, and move information between systems. ChatGPT was often our go-to for research tasks, while platforms like Relevance or CrewAI became more useful when we needed agents that could take actions in the real world.
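The research/action split shows up clearly in code: a research agent returns text, while an action agent turns a structured decision into a side effect. Below is a minimal Python sketch of the action side, assuming the model emits a JSON tool call. The tool names, JSON shape, and in-memory CRM and outbox are hypothetical stand-ins and do not reflect Relevance AI's or CrewAI's actual formats.

```python
import json
from typing import Callable

OUTBOX: list = []   # stands in for an email service
CRM: dict = {}      # stands in for a CRM


def send_email(to: str, subject: str) -> str:
    OUTBOX.append({"to": to, "subject": subject})
    return f"queued email to {to}"


def update_crm(company: str, stage: str) -> str:
    CRM.setdefault(company, {})["stage"] = stage
    return f"{company} moved to {stage}"


# Registry of actions the agent is allowed to take.
TOOLS: dict = {"send_email": send_email, "update_crm": update_crm}


def execute(action_json: str) -> str:
    """Run one tool call expressed as JSON, like structured output from a model."""
    action = json.loads(action_json)
    tool: Callable[..., str] = TOOLS.get(action["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {action['tool']}")
    return tool(**action["args"])


print(execute('{"tool": "update_crm", "args": {"company": "ExampleCo", "stage": "Diligence"}}'))
print(execute('{"tool": "send_email", "args": {"to": "partners@example.com", "subject": "ExampleCo memo"}}'))
```

Restricting the agent to a registry of named tools is one common way to keep real-world actions auditable.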

3. Code is sometimes easier to troubleshoot than low-code tools.

This one surprised us. We expected low-code tools to be the easiest entry point, and that was often true. But when things break, it’s a different story. These platforms each use their own evolving terminology, layouts, and logic flows. We often turned to LLMs for help (e.g., “How do I add a credential?” or “What’s the difference between an agent and a workflow?”). In Python, the advice was usually reliable — libraries might evolve, but the language itself is predictable. With low-code tools, though, an LLM might tell you to click the three dots in the top right corner… but the three dots don’t exist! That’s because the UI and structure vary so widely across platforms — and they change fast. Ironically, we often had more clarity and control when troubleshooting directly in code.

4. Templates are the best way to get started.

Almost every platform offered templates, and these made a big difference. Templates showed how everything connected and gave us a starting point.

Relevance AI stood out here, offering “Inventor”, a natural-language agent builder. We could describe what we wanted, and it generated a usable draft agent. We were able to tweak from there instead of starting cold. A pretty magical experience.

5. Pipelines are the hardest part — and the most important.

Without access to real data, it’s hard to build an agent that’s better than ChatGPT, especially for research workflows. But getting your data in (e.g., authentication, ingestion, context formatting) feels harder than it should be. We didn’t find that the templates made it much easier to access Harmonic or Crunchbase data. Even with an API key in hand, connecting third-party or proprietary data took more technical skill than we expected. For many workflows, this was the limiting factor.
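The three pipeline stages named above can be separated into small functions. Below is a minimal Python sketch that makes no network calls and does not use Harmonic's or Crunchbase's real APIs: the environment variable name, the Bearer header scheme, and the JSON field names are all hypothetical, so check your provider's documentation for the actual authentication format and response shape.

```python
import json
import os


def auth_headers(env_var: str = "DATA_API_KEY") -> dict:
    """Authentication: read the key from the environment instead of hard-coding it."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"set {env_var} before running the pipeline")
    return {"Authorization": f"Bearer {key}"}  # header scheme is an assumption


def ingest(payload: str) -> list:
    """Ingestion: parse a JSON response and drop records without a name."""
    records = json.loads(payload).get("companies", [])
    return [r for r in records if r.get("name")]


def to_context(records: list, max_items: int = 20) -> str:
    """Context formatting: compact, consistent lines a model can read."""
    lines = [
        f"- {r['name']} | stage: {r.get('stage', 'unknown')} | raised: {r.get('total_raised', 'n/a')}"
        for r in records[:max_items]
    ]
    return "Companies:\n" + "\n".join(lines)


# Hypothetical API response; one record is malformed on purpose.
SAMPLE_PAYLOAD = json.dumps({"companies": [
    {"name": "ExampleCo", "stage": "Seed", "total_raised": "$3M"},
    {"name": "", "stage": "Series A"},
    {"name": "DemoPay", "stage": "Series A"},
]})

# headers = auth_headers()  # would be passed to your HTTP client's request call
print(to_context(ingest(SAMPLE_PAYLOAD)))
```

Capping the number of records in the context is one simple guard against overflowing the model's context window.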

We came away encouraged by what’s possible, but clear-eyed about where things stand. Agent platforms are evolving quickly and offer real utility — especially for action-oriented workflows — but they’re not yet plug-and-play for most business users. Meanwhile, tools like ChatGPT continue to raise the baseline for what’s possible with a simple prompt. The challenge ahead isn’t just building more capable agents — it’s making them more usable, adaptable, and integrated into real workflows. Closing that gap, between capability and usability, is where the real opportunity lies.

Education

UCLA student shares how he was using ChatGPT

YouTube • OpenAI • June 25, 2025

Technology•AI•ChatGPT•StudentExperience•Education

IPO

Wealthfront Files to IPO at $340,000,000+ ARR. IPOs are Back!

SaaStr • Jason Lemkin • June 25, 2025

Finance•Investment•Fintech•IPO•WealthManagement

The Wealthfront IPO offers five key lessons for SaaS founders on building a $2B+ fintech with 40%+ margins, showcasing how a pure-digital wealth management platform achieved profitability ahead of peers and positioned itself for a landmark IPO.

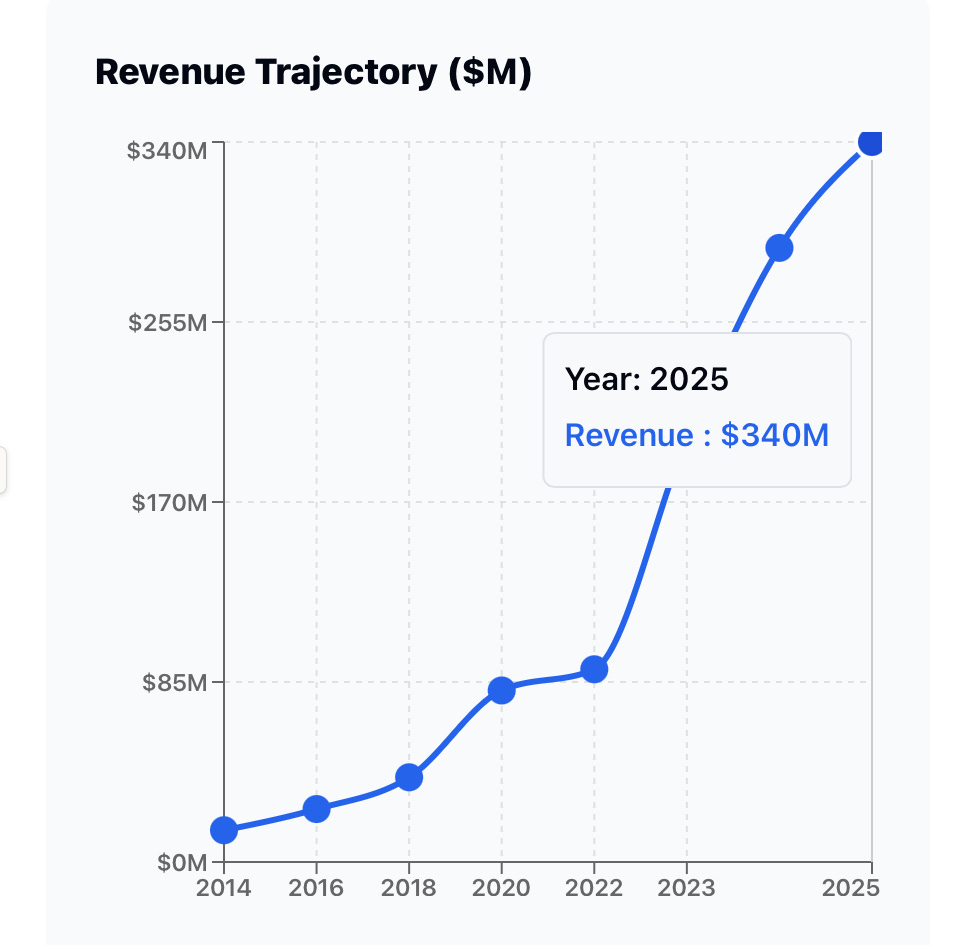

By the numbers, Wealthfront in 2025 reports a $340M+ annual run rate, up 70% from $200M in 2023. It manages over $80B in client assets across more than 1 million accounts and has maintained 40%+ EBIT margins since 2023. Efficiency is notable: 330 employees oversee $80B+, and revenue has grown 57x against only a 3x increase in headcount, which works out to over $1M in revenue per employee. Unit economics reveal an $80K average client balance and 50% organic growth through referrals.
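The growth figures above can be checked with a few lines of Python. This sketch assumes the $200M and $340M figures are two years apart and treats them as comparable annual figures, which the source does not state precisely; the variable names are illustrative.

```python
def annualized(total_multiple: float, years: float) -> float:
    """Convert a total growth multiple into a compound annual rate."""
    return total_multiple ** (1 / years) - 1


revenue_2023 = 200e6
revenue_2025 = 340e6
employees = 330

total_growth = revenue_2025 / revenue_2023 - 1                # 70% over two years
cagr_2023_2025 = annualized(revenue_2025 / revenue_2023, 2)  # about 30.4% per year
revenue_per_employee = revenue_2025 / employees              # about $1.03M
rpe_multiple = 57 / 3  # 57x revenue on 3x headcount = 19x revenue per employee

print(f"{total_growth:.0%} total, {cagr_2023_2025:.1%} per year")
print(f"${revenue_per_employee / 1e6:.2f}M per employee, {rpe_multiple:.0f}x per-employee growth")
```

The last line makes explicit what the 57x and 3x figures imply together: revenue per employee grew roughly 19-fold over the same period.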

In valuation and funding, Wealthfront is currently valued at over $2B, as implied by a recent share buyback. It has raised $274M across eight rounds since 2008, with the last funding round being a $75M Series E in 2018, and has confidentially filed for an IPO in June 2025.

Market-wise, Wealthfront ranks as the fourth-largest robo-advisor by assets under management, operating in a $6.6B+ global robo-advisory market growing at a 30.5% CAGR. Its pure-digital strategy distinguishes it from hybrid competitors. Founded in 2008, it took 17 years to reach IPO, reflecting a new normal in fintech timelines.

The first lesson: Stick to your core strategy even when others pivot. Unlike competitors who added human advisors, Wealthfront doubled down on pure automation, scaling profitably. Its headcount grew only threefold while revenue grew 57x in the past decade, highlighting the scalability of its approach.

Second, independence proved invaluable. Wealthfront walked away from a $1.4B acquisition offer in 2022, choosing to grow independently. This decision allowed the company to pursue a $3B+ IPO, potentially delivering greater long-term value.

Third, profitability before scale pressure: Wealthfront achieved 40%+ EBIT margins, while many fintech peers remain unprofitable. Being cash flow positive and consistently profitable positioned it well for the public markets, which increasingly favor sustainable growth over growth at all costs.

Fourth, customer success drives growth: Half of new clients originate from referrals, showcasing genuine organic growth fueled by customer satisfaction. Clients maintain an average $80K balance and have collectively saved over $1 billion in advisory fees compared to traditional advisors.

Finally, timing market entry matters. IPO activity in the robo-advisor sector has been minimal, and Wealthfront stands to be the first major pure-digital wealth management company to go public. Favorable market conditions, including a nearly 50% rise in the S&P 500 over three years, enhance its opportunity.

The IPO serves as a market validation play, testing if pure-digital financial services can command premium public market valuations amid skepticism toward direct-to-consumer wealth models. It also represents a category creation opportunity, establishing a blueprint for similar fintech companies. Wealthfront differentiates through tax-optimization services and a low 0.25% management fee, fostering customer loyalty in a market dominated by giants like Vanguard and Schwab.
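To put the 0.25% fee in dollar terms, here is a small Python sketch applied to the $80K average balance cited earlier. The 1% comparison rate for a traditional advisor is an assumption for illustration, not a figure from the source; fees are expressed in basis points (1 bp = 0.01%) so the arithmetic stays exact.

```python
def annual_fee(balance: float, fee_bps: int) -> float:
    """Annual advisory fee for a balance, with the rate given in basis points."""
    return balance * fee_bps / 10_000


average_balance = 80_000
wealthfront_fee = annual_fee(average_balance, 25)    # 0.25% management fee
traditional_fee = annual_fee(average_balance, 100)   # assumed 1% advisor fee

print(f"${wealthfront_fee:,.0f} vs ${traditional_fee:,.0f} per year, "
      f"saving ${traditional_fee - wealthfront_fee:,.0f}")
```

At the average balance, the gap under this assumption is a few hundred dollars per client per year, the kind of saving that sums to the aggregate fee savings mentioned above.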

The bottom line for founders: Wealthfront’s journey emphasizes strategic patience, profitability over growth-at-all-costs, independence, customer value, and market timing. Its story exemplifies how a focus on sustainable, profitable growth in a pure-digital model can yield a compelling path to IPO.

Wealthfront’s valuation analysis suggests a range from a conservative $3B-3.5B to a bull case of $5B-6B, influenced by profitability, efficiency, market leadership, and growth acceleration. Risks include investor skepticism of direct-to-consumer models, dependence on market performance, competition with established players, and interest rate sensitivity. Favorable market conditions and a reopening fintech IPO window support a targeted valuation of $4B-5B, a premium justified by strong margins and business efficiency.
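One way to read these valuation ranges is as multiples of the $340M run rate. Below is a short Python sketch of that arithmetic; it simply divides each valuation by the run rate and treats the run rate as a stand-in for annual revenue, which is an assumption rather than a claim about how the source's analysis was built.

```python
RUN_RATE = 340e6  # $340M+ annual run rate from the figures above

# Valuation scenarios from the text, in dollars (low, high).
scenarios = {
    "conservative": (3.0e9, 3.5e9),
    "targeted": (4.0e9, 5.0e9),
    "bull": (5.0e9, 6.0e9),
}


def implied_multiple(valuation: float, revenue: float) -> float:
    """Valuation divided by revenue run rate."""
    return valuation / revenue


for name, (low, high) in scenarios.items():
    print(f"{name}: {implied_multiple(low, RUN_RATE):.1f}x to "
          f"{implied_multiple(high, RUN_RATE):.1f}x run rate")
```

On this basis, the targeted $4B-5B range corresponds to roughly 12x-15x the run rate.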

Interview of the Week

The $200 billion dilemma: Is Bill Gates helping or harming Africa?

Keenon • Andrew Keen • June 24, 2025

Business•Management•Philanthropy•Development•Africa•Interview of the Week