Contents

Post Election

AI

Tech

Venture Capital

Andrew Keen and Nick Carr

DeepSeek R1 is AI’s Sputnik moment.

Editorial: AI’s Sputnik Moment

Last week DeepSeek emerged, and Andrew and I discussed it in our video conversation. On Monday the stock market crashed. Below is a short segment that ends with Andrew stating “it could mean a sharp correction for the markets.” We never give investment advice, but this was spot on.

Here is the clip.

This week we have been bombarded with further discussion about DeepSeek. It has become clear that the hedge fund that developed DeepSeek has, over time, invested in about 50,000 NVIDIA GPUs costing around $1.5bn. That is far short of Stargate’s $500 billion target but still too much for most players.

And as if last week’s news wasn’t enough, we now have another new model, Tülu 3 from the Allen Institute (https://decrypt.co/303703/remember-deepseek-two-new-ai-models-say-theyre-even-better), that is seemingly even better and even more open source.

Both Stratechery and SemiAnalysis have great analysis of DeepSeek (see below).

So what does it all mean?

I think that depends on who you are.

For OpenAI it means that there is competition. Sam Altman already acknowledged the need to speed up their release schedule and stay ahead. Large language models are not dead. They are the basis on which all smaller models are created. But large models can now be built more cheaply and are good enough for many use cases. The cost of training new models will not decline but the cost of building derivative models has fallen off a cliff. Open source will become a real solution for many businesses.

For Meta it is a very concerning moment. Meta’s Llama is the prime open source model, along with Alibaba’s Qwen. Now DeepSeek has open sourced its Llama equivalent - V3 - and its reasoning o1 equivalent - R1. This gives developers simple and cheaper choices that are as good as or better than Llama. It threatens Meta’s entire strategy.

For Microsoft it means that the dependence on OpenAI is a thing of the past. Its rapid embrace of DeepSeek (see below) is a great example of its desire to reduce reliance and costs. It also probably means that Microsoft does not need to build its own super expensive model.

For Google it is more bad news. Google has to build its own models. That will not get cheaper. And it can’t do what Microsoft does and rely on third parties.

Amazon, like Microsoft, is able to incorporate third-party efforts. Costs can go down.

For Nvidia the impact is probably overstated. The large buyers still need to buy Nvidia GPUs and train new large models with hundreds of billions of parameters. OpenAI, Google, Amazon, Microsoft and the rest will all remain customers.

For Grok/Elon Musk it is both good and bad news. Good that OpenAI has to stay on its toes. Bad that cheap and effective models are coming. Grok 3 is soon to be launched and the bar has been raised very high now.

For AI users this is only good. More models, better models, cheaper models. And they come with “reasoning” and the ability to act as agents, not just chatbots. Customers too will benefit. The pile of cash available to purchase AI is large, and the number of platforms, apps and services available is growing exponentially. This will be a market worth trillions of dollars, and it will now get there more rapidly.

Marc Andreessen described DeepSeek as a Sputnik moment for AI. Sputnik triggered the US to accelerate spending and achievement. The same will be true here.

Essays of the Week

An investing revolution is coming. The U.S. isn’t ready for it.

Crypto technology can democratize access to opportunities offered by early-stage private companies.

January 28, 2025 By Vlad Tenev

Vlad Tenev is the chief executive and co-founder of Robinhood Markets, a financial services company.

Looking to invest in some of the world’s most exciting companies? Good luck.

OpenAI, valued at $157 billion, is still private. So is SpaceX, at $350 billion. Not to mention Canva, Revolut, Stripe, Anthropic — each worth tens of billions.

That doesn’t mean these companies don’t have investors. They do: a small circle of wealthy backers with access to early-stage investment in private companies. Many of those insiders are sitting on profits as high as 1,000 times their initial investment or more, which everyday investors can’t partake in.

This investment gap is worsening as companies increasingly avoid going public; the United States has roughly half as many public companies as it did in 1996. Meanwhile, “accredited investor” rules, which generally restrict private-market investments to those with a net worth over $1 million or income of over $200,000, shut roughly 80 percent of U.S. households out of the private market.

There is a solution that can open this door — and usher in the most inclusive investment revolution since stock trading shifted from physical trading floors to electronic systems. Yes, it’s crypto. Stay with me, even if you’re skeptical.

When many hear “crypto,” they think its chief use case is meme coins, whether of presidents, dogs or frogs. To understand the coming trading revolution, however, we should start thinking of crypto in a different way: as a technology that enables the partitioning and trading of all assets, including real-world ones such as private companies.

Here’s how this can work: Crypto’s blockchain technology allows for the fast, easy and secure creation of digital tokens, which can confer ownership claims to something of value. The power of this process, called tokenization, lies in the flexibility of the technology to divide and distribute rights to almost anything so that they are tradable like a stock. Real-world assets, such as private companies, can be tokenized with only minor changes to the existing legal documents that govern ownership claims to these assets.

Through a similar process, you could tokenize a Picasso, the Washington Wizards, carbon credits or a favorite musician’s publishing rights. Anyone with a mobile phone could trade any tokenized asset in any quantity 24/7 — a technological step-change improvement over our current stock markets.

That’s where the investing revolution begins. Tokenizing private-company stock would enable retail investors to invest in leading companies early in their life cycles, before they potentially go public at valuations of more than $100 billion. This would also benefit the companies themselves, enabling them to draw additional capital by tapping into a global crypto retail market that is growing increasingly sophisticated and investment-savvy, without sacrificing the private-company protections they are used to — such as employee vesting and stock holding requirements.

There are, of course, ways to open up access to private markets without crypto, including through specialized secondary trading platforms such as EquityZen…

Migration Is Remaking Our World — and We Don’t Yet Understand It

Published: 2025-01-27 | Reading Time: 4 min | Domain: nytimes.com

Opinion Columnist

The admonition that we must remember the past to avoid repeating it has always struck me as strange. If human history teaches us anything, it is that the unexpected keeps happening, and responding to utterly new circumstances with the tools and ideas of the past can lead just as easily to disaster.

Nowhere does this seem more true than in two major story lines that I have been watching closely over the past decade. The first is one none of us can avoid: the global surge in migration and the new era of hard-right politics it has spurred in some of the wealthiest countries in the world.

The other has been a much quieter saga, but has recently come to the fore as the data have become undeniable: Across much of the globe, fertility rates are plunging at an alarming rate. Last month McKinsey released a startling report that concluded that most countries, not just the wealthiest ones, could see their growth slow drastically as their populations sink.

These trends intertwine in ways that are both obvious yet underexamined. One data point illustrates the rapidity and inexorability of this trend. For decades, Mexicans have come to the United States in search of work and opportunity. But in 2023, Mexico’s birthrate slipped just below that of the United States, and today Mexicans make up a diminishing share of the U.S. immigrant population…

Something Extraordinary Is Happening All Over the World

We are living in an age of mass migration.

Millions of people from the poor world are trying to cross seas, forests, valleys and rivers, in search of safety, work and some kind of better future. About 281 million people now live outside the country in which they were born, a new peak of 3.6 percent of the global population according to the International Organization for Migration, and the number of people forced to leave their country because of conflict and disaster is at about 50 million — an all-time high. In the past decade alone, the number of refugees has tripled and the number of asylum seekers has more than quadrupled. Taken together, it is an extraordinary tide of human movement.

The surge of people trying to reach Europe, the United States, Britain, Canada and Australia has set off a broad panic, reshaping the political landscape. All across the rich world, citizens have concluded — with no small prompting by right-wing populists — that there is too much immigration. Migration has become the critical fault line of politics. Donald Trump owes his triumphant return to the White House in no small part to persuading Americans, whose country was built on migration, that migrants are now the prime source of its ills.

But these vituperative responses reveal a paradox at the heart of our era: The countries that malign migrants are, whether they recognize it or not, in quite serious need of new people. Country after country in the wealthy world is facing a top-heavy future, with millions of retirees and far too few workers to keep their economies and societies afloat. In the not-so-distant future, many countries will have too few people to sustain their current standard of living.

The right’s response to this problem is fantastical: expel the migrants and reproduce the natives. Any short-term economic pain, they contend, must be borne for the sake of safeguarding national identity in the face of the oncoming horde — a version of the racist “great replacement” theory that was once beyond the pale but has become commonplace. But we can see how this approach is playing out, in a laboratory favored by Trump and his ilk.

In Hungary, object of much right-wing admiration, the government of Viktor Orban’s twin obsessions are excluding migrants and raising the country’s anemic birthrate. But reality has proved to be stubborn. Hungary has made almost no progress on the latter, and on the former, the government has been courting guest workers in the face of a chronic labor crisis. That’s despite Orban having declared, in the teeth of the Syrian migrant crisis in 2016, that “Hungary does not need a single migrant for the economy to work or the population to sustain itself or for the country to have a future.”

Hungarians, especially young, skilled and ambitious ones, disagree — and are voting with their feet by themselves becoming migrants. Faced with a weak economy, 57 percent of young Hungarians said in a recent survey that they planned to seek work abroad in the next decade; just 6 percent said they definitely planned to stay in Hungary. One-third of those who leave the country have a college degree, another survey found, and nearly 80 percent are below 40 years old. The government has spent millions to try to lure young Hungarians back home, with little to show so far. Demographers say that the population could drop to 8.5 million by 2050, a loss of about a million people.

Orban’s Hungary should be a cautionary tale for other nations, not a model. But its trajectory tells us a lot. Change is always hard, and the more rapid and unexpected the change, the more difficult it is to accept. We are lousy at predicting how many humans there should be and where they should live; the timing and geography of demographic shifts is often off-kilter to human needs. Migration messily brings both difficulties to the fore, offering both a challenge and an opportunity. It also eludes easy fixes and lazy characterizations.

Yet despite migration’s centrality to our politics and our world, nobody really understands it….

Lina Khan warns of ‘catastrophic consequences’ if Trump gives free hand to private equity

Published: 2025-01-27 | Reading Time: 3 min | Domain: ft.com

Lina Khan has warned of “catastrophic consequences” for America if Donald Trump’s antitrust officials fail to scrutinise private equity groups that are buying up chunks of the US economy.

The recently departed chair of the US Federal Trade Commission told the Financial Times that private equity groups posed a threat to the country’s healthcare system.

Given “the stakes of our healthcare markets, it’s extraordinarily important that we are staying vigilant here”, said Khan.

“If enforcers want to decide that they want to look the other way, that’s going to have, I fear, catastrophic consequences for Americans.”

Wall St has cheered the departure of the aggressive trustbuster, a member of a new generation of progressive officials appointed by Joe Biden who cracked down on anti-competitive conduct across the US economy from Big Tech to private equity. After leaving as FTC chair on Monday, Khan warned against reverting to what she sees as lax antitrust enforcement that for decades let businesses grow unchecked.

Khan cautioned that “in our democracy right now there is an open question” on whether “monopolists in extraordinarily powerful firms are going to be able to corrupt the political process and interfere with legitimate law enforcement”.

Her comments echo the alarm sounded by Biden during his farewell address, in which he warned that an “oligarchy is taking shape in America” that risks damaging democracy. Biden railed against an emerging “tech industrial complex” for holding a “dangerous concentration” of wealth and power in the country.

His speech was broadly seen as a broadside against business magnates who have cozied up to US President Donald Trump, including Tesla boss Elon Musk and other Big Tech CEOs.

Tech chief executives, including Musk, Amazon executive chair and founder Jeff Bezos and Meta chief executive Mark Zuckerberg, sat in prominent seats in front of the president’s cabinet nominees at Trump’s inauguration this week.

Amazon and Meta, whose FTC trials will begin in October 2026 and April, respectively, each donated $1mn to Trump’s inaugural fund, alongside other tech groups.

Big Tech was a critical pillar of Khan’s agenda. She launched an investigation into Microsoft’s cloud business as well as an inquiry into the partnerships between cloud providers and generative AI companies.

In private equity, a key focus was roll-ups in the healthcare sector. She argued that these roll-ups — in which companies purchase and merge several businesses in the same sector — and “strip and flip” models — where the acquired groups’ assets are sold off — often leave those businesses indebted and weakened.

“I heard a flood of concern from healthcare workers, from ER doctors . . . about the private equity roll-ups that were resulting in worse quality care, higher prices,” Khan said.

“These are just market realities that are not going away,” she added.

Just days before Khan’s resignation, the FTC reached a settlement with Welsh, Carson, Anderson, and Stowe that limits the buyout group’s involvement with a business it created that has acquired more than a dozen anaesthesiology practices in Texas. The FTC alleged the deals were aimed at raising prices and quashing competition.

Welsh Carson said it reached the agreement — which includes no admission of wrongdoing or monetary penalties — after the FTC “threatened to re-litigate” in its in-house court claims that were dismissed by a federal judge last year.

Khan has also ordered JAB Holdings to divest vet clinics as a condition for two proposed acquisitions that the agency said could have created monopolies. JAB has said the outcome of discussions with the regulator “was in line with what we anticipated”.

But not all FTC cases have come to an end, and the new administration has the power to withdraw or soften challenges launched by Khan.

The ex-chair argued that companies facing FTC trials were trying to get a better deal from Trump’s administration.

“This type of jostling is something that we’re all seeing in clear display,” said Khan.

Bill Gates Wants To 'Tax The Robots' That Take Your Job – And Some Say It Could Fund Universal Basic Income To Replace Lost Wages

Source: Benzinga | Published: 2025-01-28 | Reading Time: 1 min | Domain: benzinga.com

Back in 2017 – long before ChatGPT became a household name and artificial intelligence started raising existential questions – Bill Gates floated a bold idea: tax the robots. The Microsoft cofounder suggested that companies deploying automation to replace human workers should pay taxes on those machines, just as they do on human wages. At the time, it was a strikingly simple yet provocative proposal aimed at tackling an issue most people didn't even realize was coming.

Fast-forward to today when fears about AI replacing jobs are no longer sci-fi speculation but a looming reality. Gates' idea now seems eerily prophetic. Automation has made massive inroads into industries ranging from manufacturing to logistics, and the resulting economic implications have sparked a global debate. Suddenly, the idea of taxing robots has gone from a hypothetical thought experiment to a serious policy consideration.

The Case for a Robot Tax

In a 2017 interview with Quartz, Gates explained his rationale in clear terms: If a human worker earning $50,000 annually is taxed, why wouldn't a robot performing the same job face a similar levy? He proposed using the revenue generated from such taxes to fund social programs, retrain displaced workers and create new jobs in fields requiring human empathy like elder care and education.

"You cross the threshold of job replacement all at once," Gates explained, referencing industries like warehouse work and driving already on the brink of automation. His argument wasn't about punishing innovation but rather managing its societal impacts. By slowing automation "just a bit," Gates believed communities and governments could better adapt to the economic disruption. He placed the responsibility squarely on governments, stating that businesses alone couldn't address rising inequities.

Stanford lecturer Kartik Gada has taken a more optimistic approach, arguing that technological deflation – where goods and services become cheaper as technology advances – could fund UBI without increasing individual taxes. His ATOM publication even suggests that deflationary pressures created by automation might naturally offset the costs of a universal income program.

The Criticism: Innovation vs. Regulation

Not everyone is on board with taxing robots. Critics argue that such a tax could stifle innovation by discouraging companies from investing in automation. They contend that slowing technological progress would put countries at a competitive disadvantage globally. Others propose alternative solutions like universal basic dividends funded by the profits of tech companies as a less intrusive way to redistribute wealth.

Despite these critiques, automation is accelerating. Industrial robot orders have reached record highs, and AI is creeping into more job sectors than ever before. The COVID-19 pandemic supercharged the trend, with companies like Amazon doubling down on robotics to keep warehouses operational during labor shortages.

A Debate That's Far From Over

As automation continues to reshape industries, Gates' robot tax remains one of the most intriguing and polarizing ideas. While some see it as a way to balance innovation with societal needs, others fear it could hinder progress. What's clear is that the conversation Gates started in 2017 is more relevant than ever.

The question isn't just whether robots should be taxed but how society can navigate a future where machines increasingly take on roles once held by humans. Whether through robot taxes, UBI or some yet-to-be-discovered solution, one thing is certain: how we think about work, taxation and social safety nets will have to change.

I Study Financial Markets. The Nvidia Rout Is Only the Start.

Source: NYT > Top Stories | Published: 2025-01-28 | Reading Time: 1 min | Domain: nytimes.com

By Mihir A. Desai

Dr. Desai is a professor at Harvard Business School and Harvard Law School.

During Monday’s stock market swoon, Nvidia, the artificial intelligence giant, lost nearly $600 billion in value, the biggest single-day loss for a public company on record. How could the fortunes of one of our leading companies fall so far so suddenly? While some will seek answers in the promising A.I. start-up coming out of China or the vicissitudes of trade policy, these movements speak to deeper changes in our financial markets that can best be explained, oddly enough, by revisiting ancient mythology.

The image of the ouroboros, a serpent eating its own tail, is a remarkably durable and pervasive motif. Ancient Chinese, Egyptian, European and Latin American civilizations seemed captivated by the image or ones like it, variously symbolizing the cyclic nature of life, the totality of the universe or fertility. Today, the more resonant lesson comes from the self-cannibalism of the ouroboros, which helps us understand the most significant financial puzzle of our day.

Like the ouroboros, I believe Big Tech is eating itself alive with its component companies throwing more and more cash at investments in one another that are most likely to generate less and less of a return. Monday’s correction shows that our financial markets — and possibly your retirement portfolio — may be starting to reflect an understanding of this dynamic.

Even after Monday’s dip, the disjunction in valuations between Big Tech — sometimes referred to as the Magnificent 7 of Microsoft, Apple, Amazon, Nvidia, Tesla, Meta and Alphabet — and the rest of the stock market remains staggering. The Magnificent 7 still constitute more than 30 percent of the market capitalization of the S&P 500 (up from just under 10 percent a decade ago). When you compare their stock prices with their earnings or sales, the traditional way to measure the valuation of a share, our tech Goliaths trade at ratios that are two to three times those of the Unmagnificent 493.

OpenAI in Talks for Huge Investment Round Valuing It Up to $300 Billion

Published: 2025-01-30 | Reading Time: 1 min | Domain: wsj.com

Updated Jan. 30, 2025 5:31 pm ET

OpenAI is in early talks to raise up to $40 billion in a funding round that would value the ChatGPT maker as high as $300 billion, according to people familiar with the matter.

SoftBank would lead the round and is in discussions to invest between $15 billion and $25 billion. The remaining amount would come from other investors.

SoftBank in Talks to Invest Up to $25 Billion in OpenAI

Source: NYT > Technology | Published: 2025-01-30 | Reading Time: 2 min | Domain: nytimes.com

OpenAI, the San Francisco artificial intelligence company that has been on a yearslong money-raising frenzy, is in talks with the Japanese conglomerate SoftBank for an investment up to $25 billion, according to three people familiar with the negotiations.

Some of that money could be used to cover OpenAI’s commitment to Stargate, the $100 billion data center project announced at the White House last week, the people said. But the money would be separate from the investment SoftBank is already putting into that project.

The sources, who requested anonymity because the talks were confidential, stressed that the terms of the investment are not yet final.

Stargate, a joint venture of SoftBank, OpenAI and the software company Oracle, could result in $500 billion of investment in computing infrastructure, the companies have said.

The negotiations were reported earlier by the Financial Times.

OpenAI started the A.I. boom in late 2022 with the release of its online chatbot, ChatGPT. But the company has had an unusually tumultuous few years since then.

Executives are still trying to repair OpenAI’s reputation after its board of directors unexpectedly fired its chief executive, Sam Altman, about a year after ChatGPT was released. He was reinstated five days later, but OpenAI has lost several prominent employees since then, including Ilya Sutskever, its chief scientist and a co-founder.

In October, OpenAI completed a $6.6 billion fund-raising deal that valued the company at $157 billion, nearly doubling the high-profile company’s valuation from just nine months earlier. SoftBank was part of that deal.

In December, OpenAI unveiled new A.I. technology called OpenAI o3. But not long after, a little-known Chinese start-up called DeepSeek shocked the tech industry with the release of an A.I. system that could match leading A.I. products made in the United States.

The Chinese company said it built its new A.I. technology at a lower price and with fewer hard-to-get computer chips than its American competitors, challenging an industrywide belief that bigger and better A.I. would cost many billions of dollars. OpenAI said on Wednesday that it was investigating whether DeepSeek may have improperly harvested OpenAI’s data to help build its own systems.

(The New York Times has sued OpenAI and its partner, Microsoft, accusing them of copyright infringement of news content related to A.I. systems. OpenAI and Microsoft have denied those claims.)

Since DeepSeek asserted that it could build A.I. more affordably, there have been questions about the wisdom of investing hundreds of billions of dollars in new data centers. But many experts believe massive amounts of computing power will continue to provide companies like OpenAI with an edge in the market.

With more chips, they can explore new ways of building artificial intelligence. In other words, more chips can still give companies a technical and competitive advantage. More chips will also be needed to operate the new “reasoning” A.I. models like OpenAI o3. These require more computing power when people and businesses use them.

Will DeepSeek Burst VC’s AI Bubble?

Source: Crunchbase News | Published: 2025-01-27 | Reading Time: 4 min | Domain: news.crunchbase.com

For many investors in artificial intelligence, the week started with a jolt as a Chinese AI app sent tech stocks plummeting and likely left many privately held startups shaking.

DeepSeek, birthed by a China-based hedge fund, claims to have created AI models that rival even those of OpenAI — but at much lower cost and using less energy.

The news that the U.S. may be falling behind in the AI arms race sent shares of many publicly traded tech companies nosediving on Monday. AI giant Nvidia’s shares dropped more than 15% at certain points of trading, falling back to a price point it has not seen since last fall. The tech-heavy Nasdaq Composite also plunged about 3% in early afternoon trading as investors started to sell off tech stocks on concerns China has usurped the AI crown from the U.S.

Venture dollars

Public-market investors surely are not the only ones watching the ramifications of the DeepSeek news closely.

Nearly a third of all global venture funding last year went to companies in AI-related fields as funding to those startups reached over $100 billion, per Crunchbase data. The fourth quarter alone saw more than $42 billion invested into AI-related startups, per Crunchbase.

That was nearly twice as much money as was invested in Q3 2024 — which was a record at the time. It also was about 3.5x the amount invested in Q4 2023.

That means there likely were many VC firms watching the DeepSeek news blitz this weekend very closely, wondering just what effect the Chinese upstart will have on their portfolios.

Just last week we examined several firms with the most AI-related investments last year. Names including Andreessen Horowitz, Lightspeed Venture Partners and Thrive Capital dominated the list. Those firms — the ones with the most money on the biggest bets — are likely more curious than ever if the U.S. is indeed king of AI.

“This is a game changer,” Umesh Padval, a venture partner at Thomvest Ventures, said in an interview. Padval has invested in AI startups such as large language model builder Cohere.

Padval said he sees DeepSeek as mainly a net positive for the AI industry, as it should only increase AI adoption and quicken the development cycle pace. However, it could be bad news for some of the foundational model startups that have raised billions of dollars at sky-high valuations — especially considering DeepSeek claims to have developed its model for about $6 million.

The biggest of the big

The foundational model startups that have raised billions of dollars at sky-high valuations are also likely watching the DeepSeek news closely and wondering what the mania around the China-based startup will mean for their next fundraise or secondary offering.

Just last October, OpenAI locked up a $6.6 billion raise at a post-money valuation of $157 billion led by Thrive Capital. The round made the ChatGPT creator one of the most valuable private companies in the world.

In November, xAI raised $6 billion in a funding round valuing it at $50 billion, The Wall Street Journal reported. The new round includes investment from the Qatar Investment Authority, Valor Equity Partners, Andreessen Horowitz and Sequoia Capital.

Those startups, along with others such as Mistral AI and Anthropic — which is reportedly taking in a fresh $1 billion investment from previous investor Google — likely will watch closely through the next several days to see DeepSeek’s next steps.

“I think the DeepSeek news is a real wake-up call for some startups who have raised like crazy,” said Padval, adding a few startups likely will have to look at their fundraising habits. This likely will become a tug-of-war in the AI race between China and the U.S., he said, but now costs and profitability will become bigger issues for some startups to grapple with.

The future of AI

The DeepSeek news also comes just days after the White House announced its new AI Stargate Project centered on the three Big Tech names: OpenAI, SoftBank and Oracle.

The project is anticipated to help build out $100 billion to $500 billion in AI datacenters and infrastructure, and is a key piece of the new administration’s plan to fuel the U.S. economy’s growth through the booming AI industry.

DeepSeek’s emergence could scuttle some of those plans and expectations.

However, it is much too early to know anything for sure, including whether the Chinese company did indeed build cheaper and better AI models.

There also assuredly will be concerns about personal data with DeepSeek being a China-based firm. Such concerns are already at the core of the U.S.’ current issues with TikTok, the video sharing platform that has turned into a political hot potato due to its ties to China.

Much still has to be learned and sorted out, but it does seem possible we are heading into a much different AI world than many investors envisioned.

DeepSeek's R1: What Actually Matters for AI Infrastructure

Source: Data Gravity | Published: 2025-01-27 | Reading Time: 7 min | Domain: datagravity.dev

Announced last week, DeepSeek's R1 triggered a $600B drop in NVIDIA's market value and a 15-20% decline across semiconductor stocks, while AI application companies saw their shares rise. The core innovation isn't just about cost - it's about how AI models are trained. R1 broke past the limits of human-curated training data by using AI-generated examples verified through automated evaluation. When the model solves a math problem, for instance, the system checks both the answer and the reasoning process. Good examples then feed back into training, creating a self-improving loop.
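The loop described above can be sketched in a few lines of Python. This is a toy illustration only, not DeepSeek's pipeline: `propose_solution`, `verify` and `collect_verified_examples` are hypothetical names, the "model" is a stub that gets every third attempt wrong, and the task is simple addition. What it shows is the filtering step, where only samples whose answer and reasoning both pass an automated check are kept as training data.

```python
def propose_solution(a, b, attempt):
    """Hypothetical stand-in for a model: returns (reasoning, answer).

    Every third attempt is deliberately off by one to simulate mistakes.
    """
    answer = a + b
    if attempt % 3 == 2:
        answer += 1
    reasoning = f"{a} + {b} = {answer}"
    return reasoning, answer


def verify(a, b, reasoning, answer):
    """Automated evaluation: check the answer and the stated reasoning."""
    expected = a + b
    return answer == expected and reasoning == f"{a} + {b} = {expected}"


def collect_verified_examples(problems, samples_per_problem=4):
    """Sample several attempts per problem and keep only the verified ones."""
    kept = []
    for a, b in problems:
        for attempt in range(samples_per_problem):
            reasoning, answer = propose_solution(a, b, attempt)
            if verify(a, b, reasoning, answer):
                kept.append(
                    {"problem": (a, b), "reasoning": reasoning, "answer": answer}
                )
    return kept


# In a real system the kept examples would be added to the next training round.
examples = collect_verified_examples([(2, 3), (10, 7), (41, 1)])
print(len(examples), "verified examples kept")
```

In this sketch the verifier is exact because arithmetic has a known answer; domains without an automatic checker would need a different kind of evaluation.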

DeepSeek and Model Distillation?

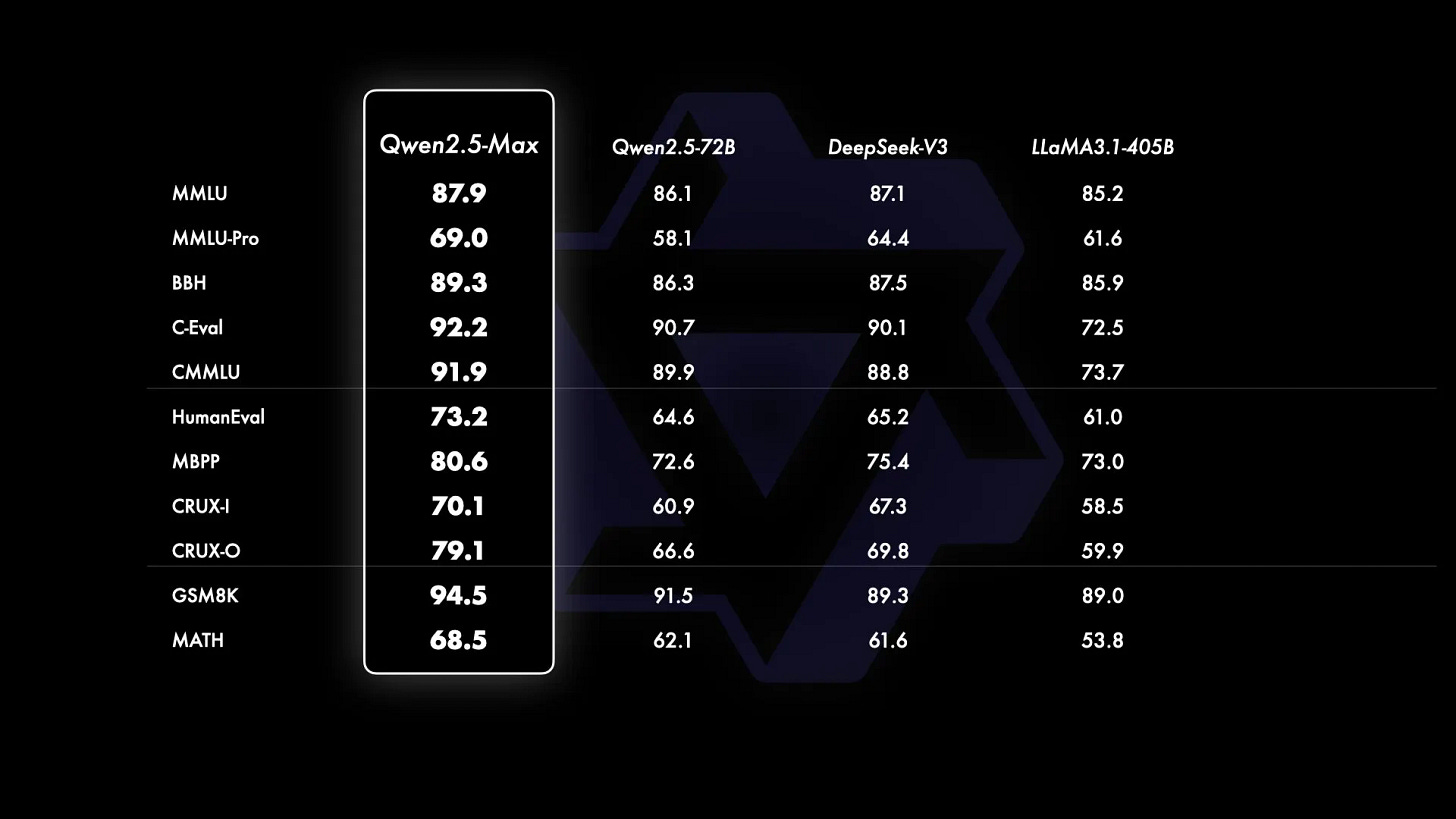

DeepSeek's R1 demonstrates how smaller companies can reverse engineer state-of-the-art AI models through four key technical approaches: distilling knowledge from existing models like GPT-4, avoiding computationally expensive sparse architectures (like Mixture of Experts), implementing aggressive memory optimizations (especially in key-value caches), and using straightforward reward functions for training. Their most innovative step was using AI-generated training examples verified by automated evaluation functions - breaking past the limits of human-curated data. The benchmarks are impressive:

This threatens to reduce the value of massive AI research investments from companies like OpenAI, Meta, and Anthropic, potentially affecting projects like the $500B Stargate datacenter initiative. Being the first to market made ChatGPT the giant it is today, but it’s extremely expensive: for OpenAI, $7B on training and inference last year. Will the first mover continue to get paid for the research innovation? Or will knockoffs undercut their pricing power?

Put more simply, DeepSeek is the pioneering example of model distillation: the process of creating smaller, more efficient models from larger ones, preserving much of their reasoning power while reducing computational demands. This means top AI research may not be as proprietary as imagined.
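For readers who want a concrete picture of distillation, here is a minimal sketch of the classic soft-label formulation, in which a student is trained to match a teacher's temperature-softened output distribution. This is an assumption for illustration only: the article does not say which distillation recipe DeepSeek used, and the function names and logit values are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    # Temperature > 1 flattens the distribution, exposing the teacher's
    # relative preferences among the non-top answers.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    # KL divergence from the teacher's softened distribution to the student's.
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # disagrees with the teacher

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The loss is zero when the two distributions match and grows as the student drifts from the teacher, so minimizing it pulls the smaller model toward the larger one's behavior.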

Technical Progress

DeepSeek claims R1 cost only $5.7M to train - a fraction of what U.S. companies typically spend, with OpenAI spending $7B on training and inference in 2024 and Anthropic also in the billions. However, this number needs context. While DeepSeek reports using 10,000 A100 GPUs, industry experts like Scale AI's Alexandr Wang estimate they actually used closer to 50,000 NVIDIA Hopper GPUs - putting their true compute usage in line with U.S. companies.

The real advances are in efficiency:…

DeepSeek R1 is now available on Azure AI Foundry and GitHub

Source: Microsoft Azure Blog | Published: 2025-01-30 | Reading Time: 3 min | Domain: azure.microsoft.com

DeepSeek R1 is now available in the model catalog on Azure AI Foundry and GitHub, joining a diverse portfolio of over 1,800 models, including frontier, open-source, industry-specific, and task-based AI models. As part of Azure AI Foundry, DeepSeek R1 is accessible on a trusted, scalable, and enterprise-ready platform, enabling businesses to seamlessly integrate advanced AI while meeting SLAs, security, and responsible AI commitments—all backed by Microsoft’s reliability and innovation.

Accelerating AI reasoning for developers on Azure AI Foundry

AI reasoning is becoming more accessible at a rapid pace, transforming how developers and enterprises leverage cutting-edge intelligence. As DeepSeek mentions, R1 offers a powerful, cost-efficient model that allows more users to harness state-of-the-art AI capabilities with minimal infrastructure investment.

One of the key advantages of using DeepSeek R1 or any other model on Azure AI Foundry is the speed at which developers can experiment, iterate, and integrate AI into their workflows. With built-in model evaluation tools, they can quickly compare outputs, benchmark performance, and scale AI-powered applications. This rapid accessibility—once unimaginable just months ago—is central to our vision for Azure AI Foundry: bringing the best AI models together in one place to accelerate innovation and unlock new possibilities for enterprises worldwide.

Develop with trustworthy AI

We are committed to enabling customers to build production-ready AI applications quickly while maintaining the highest levels of safety and security. DeepSeek R1 has undergone rigorous red teaming and safety evaluations, including automated assessments of model behavior and extensive security reviews to mitigate potential risks. With Azure AI Content Safety, built-in content filtering is available by default, with opt-out options for flexibility. Additionally, the Safety Evaluation System allows customers to efficiently test their applications before deployment. These safeguards help Azure AI Foundry provide a secure, compliant, and responsible environment for enterprises to confidently deploy AI solutions.

How to use DeepSeek in model catalog

If you don’t have an Azure subscription, you can sign up for an Azure account here.

Search for DeepSeek R1 in the model catalog.

Open the model card in the model catalog on Azure AI Foundry.

Click on deploy to obtain the inference API and key and also to access the playground.

You should land on the deployment page that shows you the API and key in less than a minute. You can try out your prompts in the playground.

You can use the API and key with various clients.

DeepSeek Debates: Chinese Leadership On Cost, True Training Cost, Closed Model Margin Impacts

Source: SemiAnalysis | Published: 2025-01-31 | Reading Time: 14 min | Domain: semianalysis.com

The DeepSeek Narrative Takes the World by Storm

DeepSeek took the world by storm. For the last week, DeepSeek has been the only topic that anyone in the world wants to talk about. As it currently stands, DeepSeek daily traffic is now much higher than Claude, Perplexity, and even Gemini.

But to close watchers of the space, this is not exactly “new” news. We have been talking about DeepSeek for months (each link is an example). The company is not new, but the obsessive hype is. SemiAnalysis has long maintained that DeepSeek is extremely talented and the broader public in the United States has not cared. When the world finally paid attention, it did so in an obsessive hype that doesn’t reflect reality.

We want to highlight that the narrative has flipped from last month. Then, the claim was that scaling laws were broken, a myth we dispelled; now the claim is that algorithmic improvement is too fast, and this too is somehow bad for Nvidia and GPUs.

Scaling Laws – O1 Pro Architecture, Reasoning Training Infrastructure, Orion and Claude 3.5 Opus “Failures”

The narrative now is that DeepSeek is so efficient that we don’t need more compute, and that everything now has massive overcapacity because of the model changes. While Jevons paradox too is overhyped, Jevons is closer to reality: the models have already induced demand, with tangible effects on H100 and H200 pricing.

DeepSeek and High-Flyer

High-Flyer is a Chinese hedge fund and an early adopter of AI in its trading algorithms. They realized early the potential of AI in areas outside of finance as well as the critical insight of scaling. They have been continuously increasing their supply of GPUs as a result. After experimenting with models on clusters of thousands of GPUs, High-Flyer made an investment in 10,000 A100 GPUs in 2021, before any export restrictions. That paid off. As High-Flyer improved, they realized that it was time to spin off “DeepSeek” in May 2023 with the goal of pursuing further AI capabilities with more focus. High-Flyer self-funded the company as outside investors had little interest in AI at the time, with the lack of a business model being the main concern. High-Flyer and DeepSeek today often share resources, both human and computational.

DeepSeek has now grown into a serious, concerted effort and is by no means a “side project” as many in the media claim. We are confident that their GPU investments account for more than $500M, even after considering export controls.

The GPU Situation

We believe they have access to around 50,000 Hopper GPUs, which is not the same as 50,000 H100s, as some have claimed. There are different variations of the H100 that Nvidia made in compliance with different regulations (H800, H20), with only the H20 currently available to Chinese model providers. Note that H800s have the same computational power as H100s, but lower network bandwidth.

We believe DeepSeek has access to around 10,000 of these H800s and about 10,000 H100s. Furthermore, they have orders for many more H20s, with Nvidia having produced over 1 million of the China-specific GPU in the last 9 months. These GPUs are shared between High-Flyer and DeepSeek. They are used for trading, inference, training, and research. For more specific detailed analysis, please refer to our Accelerator Model.

Our analysis shows that the total server CapEx for DeepSeek is almost $1.3B, with a considerable cost of $715M associated with operating such clusters. Similarly, all AI Labs and Hyperscalers have many more GPUs for training than they commit to an individual training run….

DeepSeek FAQ

Source: Stratechery by Ben Thompson | Published: 2025-01-27 | Reading Time: 26 min | Domain: stratechery.com

It’s Monday, January 27. Why haven’t you written about DeepSeek yet?

I did! I wrote about R1 last Tuesday.

I totally forgot about that.

I take responsibility. I stand by the post, including the two biggest takeaways that I highlighted (emergent chain-of-thought via pure reinforcement learning, and the power of distillation), and I mentioned the low cost (which I expanded on in Sharp Tech) and chip ban implications, but those observations were too localized to the current state of the art in AI. What I totally failed to anticipate were the broader implications this news would have to the overall meta-discussion, particularly in terms of the U.S. and China.

Is there precedent for such a miss?

There is. In September 2023 Huawei announced the Mate 60 Pro with a SMIC-manufactured 7nm chip. The existence of this chip wasn’t a surprise for those paying close attention: SMIC had made a 7nm chip a year earlier (the existence of which I had noted even earlier than that), and TSMC had shipped 7nm chips in volume using nothing but DUV lithography (later iterations of 7nm were the first to use EUV). Intel had also made 10nm (TSMC 7nm equivalent) chips years earlier using nothing but DUV, but couldn’t do so with profitable yields; the idea that SMIC could ship 7nm chips using their existing equipment, particularly if they didn’t care about yields, wasn’t remotely surprising — to me, anyways.

What I totally failed to anticipate was the overwrought reaction in Washington D.C. The dramatic expansion in the chip ban that culminated in the Biden administration transforming chip sales to a permission-based structure was downstream from people not understanding the intricacies of chip production, and being totally blindsided by the Huawei Mate 60 Pro. I get the sense that something similar has happened over the last 72 hours: the details of what DeepSeek has accomplished — and what they have not — are less important than the reaction and what that reaction says about people’s pre-existing assumptions.

So what did DeepSeek announce?

The most proximate announcement to this weekend’s meltdown was R1, a reasoning model that is similar to OpenAI’s o1. However, many of the revelations that contributed to the meltdown, including DeepSeek’s training costs, actually accompanied the V3 announcement over Christmas. Moreover, many of the breakthroughs that undergirded V3 were actually revealed with the release of the V2 model last January.

Is this model naming convention the greatest crime that OpenAI has committed?

Second greatest; we’ll get to the greatest momentarily.

Let’s work backwards: what was the V2 model, and why was it important?

The DeepSeek-V2 model introduced two important breakthroughs: DeepSeekMoE and DeepSeekMLA. The “MoE” in DeepSeekMoE refers to “mixture of experts”. Some models, like GPT-3.5, activate the entire model during both training and inference; it turns out, however, that not every part of the model is necessary for the topic at hand. MoE splits the model into multiple “experts” and only activates the ones that are necessary; GPT-4 was a MoE model that was believed to have 16 experts with approximately 110 billion parameters each.
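A minimal sketch of the routing idea may help here: a gate scores every expert, but only the top few are actually run. This is generic top-k routing under simplifying assumptions (scalar inputs, hand-picked gate scores, hypothetical names), not DeepSeekMoE itself.

```python
import math

def top_k_route(gate_scores, k=2):
    # Pick the k highest-scoring experts and normalize their weights
    # with a softmax over just those k scores.
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    m = max(gate_scores[i] for i in top)
    exps = [math.exp(gate_scores[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_scores, k=2):
    # Only the selected experts are evaluated; the rest cost nothing.
    return sum(w * experts[i](x) for i, w in top_k_route(gate_scores, k))

# Four toy "experts" operating on a single number.
experts = [lambda x: x, lambda x: 2 * x, lambda x: 0 * x, lambda x: 4 * x]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # hypothetical gate output for one token

print(top_k_route(gate_scores))            # experts 1 and 3, weight 0.5 each
print(moe_forward(3.0, experts, gate_scores))  # 0.5 * 6.0 + 0.5 * 12.0 = 9.0
```

In a real model the gate scores come from a learned layer and the experts are feed-forward networks, but the compute saving has the same source: two of four experts run here, and the other two are skipped.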

DeepSeekMoE, as implemented in V2, introduced important innovations on this concept, including differentiating between more finely-grained specialized experts, and shared experts with more generalized capabilities. Critically, DeepSeekMoE also introduced new approaches to load-balancing and routing during training; traditionally MoE increased communications overhead in training in exchange for efficient inference, but DeepSeek’s approach made training more efficient as well.

DeepSeekMLA was an even bigger breakthrough. One of the biggest limitations on inference is the sheer amount of memory required: you both need to load the model into memory and also load the entire context window. Context windows are particularly expensive in terms of memory, as every token requires both a key and corresponding value; DeepSeekMLA, or multi-head latent attention, makes it possible to compress the key-value store, dramatically decreasing memory usage during inference.
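To get a feel for the memory numbers, here is a back-of-the-envelope calculation. The model dimensions are hypothetical, and the "latent" cache is a deliberately simplified picture of compression (one small vector per token per layer); the actual multi-head latent attention design has more moving parts.

```python
def kv_cache_bytes(n_layers, n_heads, head_dim, seq_len, bytes_per_value=2):
    # Factor of 2: every token stores one key and one value vector
    # per head, per layer.
    return 2 * n_layers * n_heads * head_dim * seq_len * bytes_per_value

def latent_cache_bytes(n_layers, latent_dim, seq_len, bytes_per_value=2):
    # Simplified compressed cache: one latent vector per token, per layer.
    return n_layers * latent_dim * seq_len * bytes_per_value

# Hypothetical model: 32 layers, 32 heads of size 128, 4,096-token context,
# 16-bit values.
full = kv_cache_bytes(32, 32, 128, 4096)
compressed = latent_cache_bytes(32, 512, 4096)

print(full / 2**30, "GiB")        # 2.0 GiB
print(compressed / 2**20, "MiB")  # 128.0 MiB
print(full // compressed, "x smaller")  # 16 x smaller
```

The full cache grows linearly with context length, so a longer window multiplies the 2 GiB figure directly, which is why shrinking the per-token footprint matters so much for inference.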

I’m not sure I understood any of that.

The key implications of these breakthroughs — and the part you need to understand — only became apparent with V3, which added a new approach to load balancing (further reducing communications overhead) and multi-token prediction in training (further densifying each training step, again reducing overhead): V3 was shockingly cheap to train. DeepSeek claimed the model training took 2,788 thousand H800 GPU hours, which, at a cost of $2/GPU hour, comes out to a mere $5.576 million.
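The arithmetic behind that figure is simple enough to check directly. The variable names below are illustrative; the two inputs are the numbers quoted above.

```python
gpu_hours = 2_788_000   # "2,788 thousand H800 GPU hours"
usd_per_gpu_hour = 2    # assumed rental price quoted above

training_cost_usd = gpu_hours * usd_per_gpu_hour
print(f"${training_cost_usd:,}")  # $5,576,000
```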

That seems impossibly low.

DeepSeek is clear that these costs are only for the final training run, and exclude all other expenses; from the V3 paper:….

Remember DeepSeek? Two New AI Models Say They’re Even Better

Source: Decrypt | Published: 2025-01-30 | Reading Time: 1 min | Domain: decrypt.co

The Allen Institute for AI and Alibaba have unveiled powerful language models that challenge DeepSeek's dominance in the open-source AI race.

AI companies used to measure themselves against industry leader OpenAI. No more. Now that China’s DeepSeek has emerged as the frontrunner, it’s become the one to beat.

On Monday, DeepSeek turned the AI industry on its head, causing billions of dollars in losses on Wall Street while raising questions about how efficient some U.S. startups, and venture capital, actually are.

Now, two new AI powerhouses have entered the ring: The Allen Institute for AI in Seattle and Alibaba in China; both claim their models are on a par with or better than DeepSeek V3.

The Allen Institute for AI, a U.S.-based research organization known for the release of a more modest vision model named Molmo, today unveiled a new version of Tülu 3, a free, open-source 405-billion parameter large language model.

Meanwhile, China isn’t resting on DeepSeek’s laurels.

Amid all the hubbub, Alibaba dropped Qwen 2.5-Max, a massive language model trained on over 20 trillion tokens.

The Chinese tech giant released the model during the Lunar New Year, just days after DeepSeek R1 disrupted the market.

Benchmark tests showed Qwen 2.5-Max outperformed DeepSeek V3 in several key areas, including coding, math, reasoning, and general knowledge, as evaluated using benchmarks like Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond.

The model demonstrated competitive results against industry leaders like GPT-4o and Claude 3.5 Sonnet, according to the model’s card.

Alibaba made the model available through its cloud platform with an OpenAI-compatible API, allowing developers to integrate it using familiar tools and methods.

Ai2 releases Tülu 3, a fully open-source model that bests DeepSeek v3, GPT-4o with novel post-training approach

Source: VentureBeat | Published: 2025-01-30 | Reading Time: 4 min | Domain: venturebeat.com

The open source model race just keeps on getting more interesting.

Today, The Allen Institute for AI (Ai2) debuted its latest entrant in the race with the launch of its open source Tülu 3 405B parameter large language model (LLM). The new model not only matches Open AI’s GPT-4o’s capabilities but also surpasses DeepSeek’s V3 model across critical benchmarks.

This isn’t the first time Ai2 has made bold claims about a new model. In Nov. 2024 the company released its first version of Tülu 3, which had both 8 and 70 billion parameter versions. At the time, Ai2 claimed the model was up to par with the latest GPT-4 model from OpenAI, Anthropic’s Claude and Google’s Gemini. The big difference is that Tülu 3 is open source. Ai2 had also claimed back in Sept. 2024 that its Molmo models were able to beat GPT-4o and Claude on some benchmarks.

While benchmark performance data is interesting, what’s perhaps more useful is the training innovations that enable the new Ai2 model.

Pushing post-training to the limit

The big breakthrough for Tülu 3 405B is rooted in an innovation that first appeared with the initial Tülu 3 release in 2024. That release utilizes a combination of advanced post-training techniques to get better performance.

With the Tülu 3 405B model, those post-training techniques have been pushed even further, using an advanced post-training methodology that combines supervised fine-tuning, preference learning, and a novel reinforcement learning approach that has proven exceptional at larger scales.

“Applying Tülu 3’s post-training recipes to Tülu 3-405B, our largest-scale, fully open-source post-trained model to date, levels the playing field by providing open fine-tuning recipes, data, and code, empowering developers and researchers to achieve performance comparable to top-tier closed models,” Hannaneh Hajishirzi, senior director of NLP Research at Ai2 told VentureBeat.

Advancing the state of open source AI post-training with RLVR

Post-training is something that other models, including DeepSeek v3, do as well.

The key innovation that helps to differentiate Tülu 3 is Ai2’s Reinforcement Learning from Verifiable Rewards (RLVR) system.

Unlike traditional training approaches, RLVR uses verifiable outcomes—such as solving mathematical problems correctly—to fine-tune the model’s performance. This technique, when combined with Direct Preference Optimization (DPO) and carefully curated training data, has enabled the model to achieve better accuracy in complex reasoning tasks while maintaining strong safety characteristics.
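The core of a verifiable reward is that it can be computed by a program rather than judged by a person or a learned reward model. A minimal sketch, assuming the answer is expected on the last line of the model's output (the function name and the answer format are assumptions, not Ai2's implementation):

```python
def verifiable_reward(model_output, expected_answer):
    # Reward is 1.0 only if the final line of the output exactly matches
    # the known-correct answer; everything else scores 0.0.
    lines = model_output.strip().splitlines()
    final = lines[-1].strip() if lines else ""
    return 1.0 if final == expected_answer.strip() else 0.0

print(verifiable_reward("6 * 7 means six sevens.\n42", "42"))  # 1.0
print(verifiable_reward("6 * 7 is about forty.\n41", "42"))    # 0.0
```

Because the score is binary and checkable, the reinforcement learning step has nothing to game except actually getting the answer right.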

Key technical innovations in the RLVR implementation include:

Efficient parallel processing across 256 GPUs

Optimized weight synchronization

Balanced compute distribution across 32 nodes

Integrated vLLM deployment with 16-way tensor parallelism

The RLVR system showed improved results at the 405B parameter scale compared to smaller models. The system also demonstrated particularly strong results in safety evaluations, outperforming DeepSeek V3, Llama 3.1 and Nous Hermes 3. Notably, the RLVR framework’s effectiveness increased with model size, suggesting potential benefits from even larger-scale implementations.

How Tülu 3 405B compares to GPT-4o and DeepSeek v3

The model’s competitive positioning is particularly noteworthy in the current AI landscape.

Tülu 3 405B not only matches the capabilities of GPT-4o but also outperforms DeepSeek v3 in some areas, particularly on safety benchmarks.

Across a suite of 10 AI benchmark evaluations, including safety benchmarks, Ai2 reported that the Tülu 3 405B RLVR model had an average score of 80.7, surpassing DeepSeek V3’s 75.9. Tülu, however, is not quite as good as GPT-4o, which scored 81.6. Overall, the metrics suggest that Tülu 3 405B is at the very least extremely competitive with GPT-4o and DeepSeek V3 across the benchmarks.

Why open source AI matters and how Ai2 is doing it differently

What makes Tülu 3 405B different for users though is how Ai2 has made the model available.

There is a lot of noise in the AI market about open source. DeepSeek says it’s open source and so is Meta’s Llama 3.1, which Tülu 3 405B also outperforms.

With both DeepSeek and Llama the models are freely available for use; there is some, but not all code available…

$13b Run Rate & Doubling by @ttunguz

Source: tomtunguz.com | Published: 2025-01-30 | Reading Time: 2 min | Domain: tomtunguz.com

Microsoft announced earnings yesterday & the data painted a brilliant picture for the future of AI.

Greater than 30% annual growth in back-to-back quarters is sensational for a $100b run rate business. Microsoft is projecting similar for next quarter. The AI subset is on a $13b run rate, more than double last year.

Azure other cloud services revenue grew 31%. Azure growth included 13 points from AI services, which grew 157% year-over-year, and was ahead of expectations even as demand continued to be higher than our available capacity… In Azure, we expect Q3 revenue growth to be between 31% and 32% in constant currency driven by strong demand for our portfolio of services.

Data center capacity remains the limiting factor, but that constraint should subside by end of year.

We have more than doubled our overall data center capacity in the last 3 years, and we have added more capacity last year than any other year in our history…And while we expect to be AI capacity constrained in Q3, by the end of FY ‘25, we should be roughly in line with near-term demand given our significant capital investments.

RPOs, or remaining performance obligations, are customer prepurchases of Azure compute that haven’t yet been used. $300b of RPO is basically the next 2 years of Azure revenue already committed.

As we shared last week, we are thrilled OpenAI has made a new large Azure commitment…We have, and I think we talked about it, close to $300 billion of RPO.

AI performance gains in software are 5x more effective than those in hardware. DeepSeek’s announcements last week underscore the point.

On inference, we have typically seen more than 2x price performance gain for every hardware generation and more than 10x for every model generation due to software optimizations. And as AI becomes more efficient and accessible, we will see exponentially more demand.

Smaller models will be run on consumer hardware. NPUs (neural processing units) are newer consumer chips that run AI. Apple, Qualcomm, Intel, Samsung & others offer them. As models become more powerful, they will run on less expensive equipment. It’s interesting to see DeepSeek mentioned specifically here:

We also see more and more developers from Adobe and CapCut to WhatsApp build apps that leverage built-in NPUs. And they will soon be able to run DeepSeek’s R1 distilled models locally on Copilot+ PCs as well as the vast ecosystem of GPUs available on Windows. And beyond Copilot+ PCs, the most powerful AI workstation for local development is a Windows PC running WSL2 powered by NVIDIA RTX GPUs.

200,000 engineers are building AI in the 2 months since AI Foundry launched. There are 150m engineers on GitHub, so overall AI penetration remains very small.

Azure AI Foundry features best-in-class tooling run times to build agents, multi-agent apps, AIOps, API access to thousands of models. Two months in, we already have more than 200,000 monthly active users. All up, GitHub now is home to 150 million developers, up 50% over the past 2 years.

Darwin's Finches in AI by @ttunguz

Source: tomtunguz.com | Published: 2025-01-29 | Reading Time: 1 min | Domain: tomtunguz.com

Darwin’s finches sprinted to evolve when food sources changed. For decades, nothing—then sudden transformation. AI follows the same pattern & it’s accelerating.

Every morning, I wake up wondering what breakthrough will propel the ecosystem forward.

Last week, it was DeepSeek v2. This morning, a Hugging Face researcher announced that he could induce reasoning, the major advance of the last three months, in one of the smallest models, a 3B parameter model.

The pace of innovation hasn’t stalled. The S-curve growth in model performance continues. But faster!

The first birds of AI soared with sheer scale—massive datasets and vast transformer architectures.

Their children specialized, dividing large models into smaller, more efficient Mixture of Experts (MoE) architectures.

Their grandchildren now do something even more remarkable: narrating their reasoning, self-correcting, and improving their own responses.

Here’s a rough timeline of how AI evolution has compressed in just the last two years:

GPT-4 set a new performance benchmark in ELO, an overall AI benchmark. Claude 3 surpassed it 366 days later.

GPT-4 Turbo set the next high bar, which Gemini 1.5 Pro matched just 11 days later.

GPT-4.0, the first model with true deep reasoning, arrived in September 2024. Google’s Gemini 2 Deep Reasoning matched it 141 days later.

This year, DeepSeek achieved the same level of reasoning—this time in just 41 days.

We’ve gone from year-long leaps to breakthroughs happening within weeks. The trend is clear: compression of progress. What once took years now takes months, then weeks, and soon, perhaps, mere days.

At this rate, the next AI revolution might not just arrive in the next quarter—it might arrive by the time you wake up tomorrow.

Who knows what that bird will look like?

Apple’s gross margin hits record as services business keeps growing

KEY POINTS

Apple’s gross margin climbed to 46.9% in the latest quarter, topping the prior record of 46.6% reached in the period ended March.

Services, which includes App Store purchases, payments and advertising, is becoming a bigger part of the overall business, lifting Apple’s profit margin.

Sales of iPhones declined slightly from a year earlier.

Apple is struggling to squeeze growth out of its flagship iPhone unit, but its profit margin keeps going up thanks to a flourishing services business.

In its fiscal first-quarter earnings report on Thursday, Apple reported a gross margin (the profit left after accounting for the cost of goods sold) of 46.9%. That’s the highest on record, surpassing the 46.6% margin the company recorded in the period ending March 2024.

For Apple, services includes App Store purchases, advertising, payments, AppleCare support and other subscription offerings. The growth in those products has offset a slowdown in sales of the iPhone and a saturation in the global smartphone market.

The “services business in general in aggregate is accretive to the overall company margin,” Apple CFO Kevan Parekh said on the earnings call after the report.

In the current quarter, Apple said its gross margin will be between 46.5% and 47.5%.

iPhone sales slipped almost 1% in the latest quarter from a year earlier, as the company reported weakness in Greater China. Total revenue rose almost 4% to $124.3 billion.

Services revenue rose about 14% to $26.34 billion, beating analysts’ estimates. The business now accounts for roughly 21% of Apple’s overall revenue. Last quarter, Apple announced that its services unit had turned into a $100 billion a year business.

“We were thrilled to bring customers our best-ever lineup of products and services during the holiday season,” CEO Tim Cook said in the press release.

Cook’s emphasis on services has transformed Wall Street’s view of a company that’s been defined over the decades by its iconic devices. For many years in the iPhone era, Apple’s gross margin would predictably come in at between 38% and 39%, reflecting the company’s tight grip over its supply chain and its pricing power in the market.

Amazon Raises Its Ad Spending on Elon Musk’s X, in Major Reversal

Published: 2025-01-30 | Reading Time: 1 min | Domain: wsj.com

Shift occurs after many brands cut advertising on the platform over hate-speech concerns; Apple considers a return to site

Amazon is ramping up ad spending on Elon Musk’s X, according to people familiar with the situation, a major shift after pulling much of its advertising more than a year ago, when many brands had concerns about hate speech on the platform.

Amazon Chief Executive Andy Jassy was involved in the decision, which could result in the company spending significantly more on X. Apple, which pulled all of its ad dollars from X in late 2023, in recent weeks has had discussions about testing out ads on the platform, according to a person familiar with the situation.

Some large companies that have cut or zeroed out advertising on X are re-evaluating their stances in a changing political and social climate. Musk, X’s owner, has championed a lighter touch in online content moderation and has emerged as one of the most powerful people in President Trump’s orbit. Business and world leaders have worked to improve relations with him, given his elevated role in Washington.

Musk is leading the Department of Government Efficiency, a group that has planned to eliminate $2 trillion in government spending. He contributed roughly a quarter of a billion dollars to a super political-action committee he started to help re-elect Trump.

Ad buyers said that some brands that are returning to X are doing so at spending levels that are still well below their spending before Musk acquired the company, then known as Twitter, for $44 billion in 2022.

The return of advertisers to X would bolster its balance sheet at a critical moment. The investment banks that lent Musk cash for the acquisition have struggled to offload that debt from their books. They are now arranging for a sale of senior debt at 90 to 95 cents on the dollar, The Wall Street Journal reported. Selling the debt will be easier if the company’s finances improve.

What flavor of capital do you want?

Source: SignalRank Update | Published: 2025-01-30 | Reading Time: 5 min | Domain: signalrankupdate.substack.com

This is not a post about politics. But the US election illuminated how capital has become personified, reflecting the values and outlook of individuals (not firms). So the election feels like a good place to start to understand this shift from firm-level brands to individual platforms.

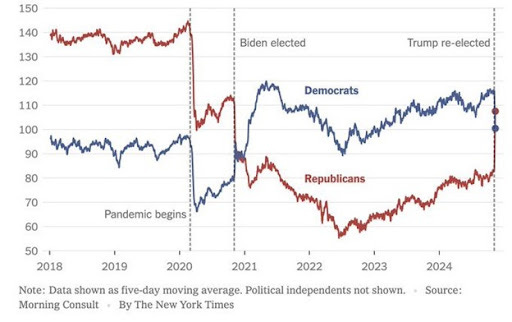

Social media has weaponized information to such an extent that society is now struggling to agree on basic facts. This chart (Figure 1) on consumer sentiment by party identification would be funny if it weren’t so concerning. Consumer sentiment has become so polarized that views on the economy function as a kind of political Rorschach test.

Figure 1. Consumer sentiment by party identification, 2018-24

The politicization of information has now infected capital too. The recent election illuminated glaringly different interpretations of the world within the VC community.

It is particularly intriguing that VCs have engaged in more forthright political discourse in this election cycle on both sides, as one would imagine that VCs are looking for the best founders regardless of their political affiliation. Y Combinator’s Paul Graham tweeted about how “VCs have the strongest incentive not to discriminate of any group in the world.” He went on to argue that “if multiple companies founded by Martians with eyes on stalks started growing really fast, VCs would be falling over one another to invest in more companies founded by Martians with eyes on stalks. You know they would.”

Yet here we are. On team Trump, we had Doug Leone, David Sacks, Keith Rabois and Peter Thiel. On team Harris, we had Reid Hoffman, Vinod Khosla, Ron Conway and Aileen Lee. Pick your fighter.

The most fascinating dynamics are where firms were divided at the personal level. This could be interpreted as a hedge of sorts, to avoid aggravating entrepreneurs of one tribe or another. You could also argue that it represents a firm-level maturity where individuals can disagree agreeably under the same umbrella. A16Z had Trump-supporting Marc Andreessen pitted against Ben Horowitz, who switched at the last minute to Harris. The partner meetings at Lux Capital must be tense given the opposite positions taken on Gaza by Josh Wolfe and Bilal Zuberi (who has subsequently left to found his own firm). Tim Draper sidestepped an election position entirely by endorsing both candidates. Nice…

Interview of the Week

Startup of the Week

ElevenLabs, the hot AI audio startup, confirms $180M in Series C funding at a $3.3B valuation

Source: TechCrunch | Published: 2025-01-30 | Reading Time: 1 min | Domain: techcrunch.com

ElevenLabs, one of the more popular startups working in the field of AI audio, said Thursday that it has raised a Series C round of $180 million, valuing the company at $3.3 billion post-money. a16z and ICONIQ Growth are co-leading the investment. Rumors of the fundraise were first reported by TechCrunch. The final numbers confirm […]
