This week’s video transcript summary is here. You can click on any bulleted section to see the actual transcript. Thanks to Granola for its software - Transcript and Summary

Editorial

This week’s Essays section describes one reality from two emotional angles: glass half full for people building with AI every day, glass half empty for people watching labor markets, status hierarchies, and institutions bend in real time. It is the same glass, but different viewers see very different things in it: one sees something drinkable, the other definitely does not.

The same data supports both reactions.

Here is my thesis: fear is an understandable signal, but a bad operating system for this moment, because the tools released in the past two weeks take the capability from good to beyond belief.

On the half-full side, the capability jump is real. The move from GPT-5.2-Codex to 5.3, and from Opus 4.5 to 4.6, feels less like iteration and more like a threshold. Matt Shumer calls it a “discontinuity” in Something Big Is Happening, and I think that word fits. If you are actively shipping product, the practical difference is obvious: more tasks complete end to end, less scaffolding, shorter cycles from idea to working system.

I built a prediction market for venture capital in one day using SignalRank data and OpenAI’s Codex app for Mac, which has now replaced Claude Code for me.

That is why phrases like “vibe coding” are no longer internet jokes. We now have a production workflow where architecture planning and product specification start to outrun implementation as the scarce skill.

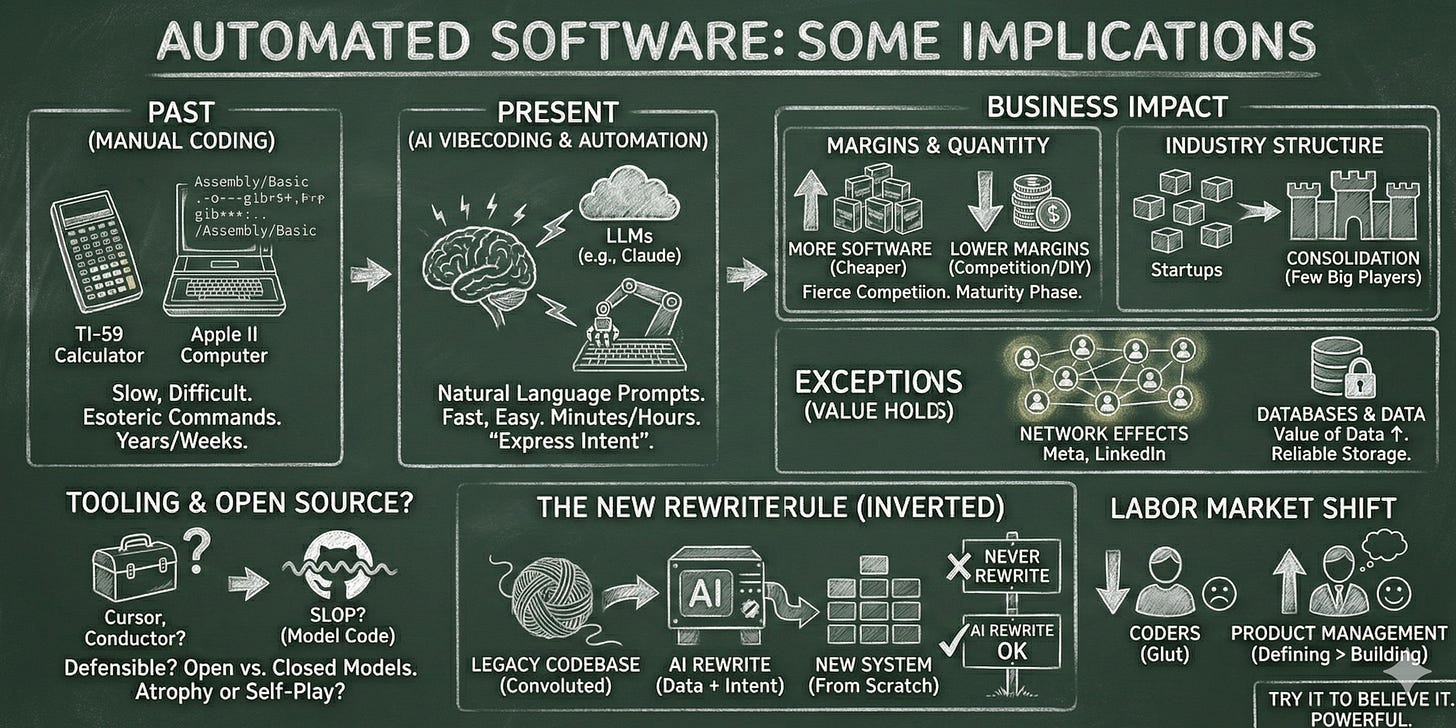

Albert Wenger makes the same point from another angle in Automated Software: Some Implications: as software gets cheaper to produce, the bottleneck shifts toward intent, distribution, and trust. I see that shift every week now. The center of gravity is moving from writing code to deciding what should exist, how it should behave, and who is accountable when it fails.

On the half-empty side, the fear is not irrational. The Atlantic’s America Isn’t Ready for What AI Will Do to Jobs calls adoption a “race condition,” and that is exactly the dynamic many workers and managers are feeling. You do not need to believe in sudden mass unemployment to see the pressure. If your competitor cuts cycle time by half, your choice is not philosophical. You adapt, or you lose share.

Noah Smith’s title, You Are No Longer the Smartest Type of Thing on Earth, captures the emotional core. It reads like a provocation, but it is also a diagnosis of status shock. For decades, many of us in tech were paid for cognitive scarcity. Now models are eroding that scarcity in front of us, and not gradually. That can produce anxiety even for people who are net beneficiaries of the tools.

So why do I think fear and trepidation are the wrong instinct? Why is the glass drinkable and half full?

Because fear narrows the aperture at exactly the moment we need wider context and better judgment. It pushes people into two equally unhelpful camps: denial (“this is overhyped”) or fatalism (“nothing can be done”). Neither is true. What is true is that we now have much more agency over outcomes than fear admits, and much less time than denial assumes.

Om Malik’s Mad Money & The Big AI Race is useful here because it replaces drama with discipline. His core warning to AI companies and investors is simple: valuation headlines are not moats, and model leadership can rotate fast if “developers can switch quickly.” That is not a reason to panic. It is a reason to focus on what actually compounds: distribution, trust, workflow embedding, and decision quality under uncertainty.

A fair counterargument is that fear may be the only force strong enough to trigger institutional response. If leaders are too optimistic, they underinvest in retraining, social insurance, and guardrails.

I do agree with the concern, but using fear this way treats us as children in need of clever manipulation. I would separate fear as an alert from fear as a strategy. Alerts are useful. Strategy built on dread usually produces brittle policy, performative regulation, and bad product decisions.

For investors, founders and operators, the preferable posture is neither boosterism nor retreat. It is serious adaptation: redesign jobs around human judgment plus machine throughput, measure where quality actually improves, and retrain teams before displacement becomes a headline.

For investors, it means underwriting transition risk, not just revenue growth. For policymakers, it means treating this as market-structure and workforce-transition planning now, not after the dislocation.

The delicious glass-half-full and undrinkable glass-half-empty readings are both true, because they describe different time horizons.

In the short run, capability gains feel exhilarating for builders like me and frightening for everyone exposed to rapid change and a feeling of losing agency.

In the longer run, the question is whether we can convert this jump into broad productivity without social fracture. That outcome is not predetermined. Agency will determine outcomes.

My open question this week: the step-change is already here, so can we match it with a step-change in individual and institutional learning? I think we can, but only if we resist the temptation to make fear our worldview instead of our signal.

Contents

Essays

Automated Software: Some Implications

Author: Albert Wenger Date: 2026-02-07 Publication: Continuations

Wenger opens with a personal arc from early computing to present-day vibecoding and argues that automated software has flipped the cost and speed curve of building. When the constraint disappears, software production explodes, and the primary skill shifts from syntax to intent: describing what you want, iterating on outcomes, and shipping faster than any traditional team cycle.

He then draws the business implications: if software becomes cheap, competitive pressure rises and high-margin SaaS economics compress. The ability to build in-house becomes a credible alternative for many buyers, so the old moat of proprietary code weakens, pushing the industry toward consolidation and more mature, lower-margin dynamics.

The exceptions are the businesses whose defensibility is not the code itself. Network effects still create durable advantage, and databases remain valuable because data quality and reliability become even more central as code commoditizes. In the tooling layer, he is skeptical that workflows like IDEs or coding assistants can build lasting moats when models are the real power center, leaving the long-run balance between closed and open models as the key open question.

Read more: Automated Software: Some Implications

Something Big Is Happening

Author: Matt Shumer Date: 2026-02-09 Publication: matt shumer

Shumer frames February 5, 2026 as a discontinuity: the day OpenAI and Anthropic shipped models that made everything earlier feel obsolete. He says his own work has shifted from hands-on implementation to pure specification, with the AI delivering finished output rather than drafts that need heavy edits. That personal story is his proxy for a wider labor shift now reaching beyond tech.

The essay argues that coding was the strategic wedge: once AI could build software, it could help build the next generation of AI, creating a feedback loop of faster self-improvement. He points to model docs and lab statements as evidence that AI is already meaningfully contributing to its own development, which accelerates the compounding effect.

He then turns outward: adoption is moving faster than most people realize, free-tier users are behind the frontier, and the task lengths that models can complete are growing quickly. The consequence, in his view, is near-term displacement across many white-collar fields and an urgent need for individuals and institutions to adapt rather than debate whether the curve is real.

Read more: Something Big Is Happening

The Fall of the Nerds

Author: Noah Smith Date: 2026-02-05 Publication: Noahpinion

Noah Smith opens with a market signal: a software stock selloff driven by fears that AI is eroding SaaS business models. He argues the basic SaaS bargain has been to sell access to a stable of expert engineers who can implement customer needs. AI breaks that scarcity by letting non-experts produce functional software at a fraction of the cost.

He calls this shift “vibe coding,” and describes how tools like Claude Code are making full application creation accessible to novices. As the tools improve, the amount of technical detail required from users approaches zero, and the craft of software engineering starts to resemble factory management more than artisan work.

Smith does not claim software expertise disappears; rather, he expects experts to stay in the loop to fix flaws, maintain systems, and advise on architecture and security. But the job’s social status and economic premium change. In his framing, the broader human-capital economy that elevated technical elites may be ending, with major implications for how economies, cities, and labor markets are organized.

Read more: The Fall of the Nerds

America Isn’t Ready for What AI Will Do to Jobs

Author: Josh Tyrangiel Date: 2026-02-10 Publication: The Atlantic

The Atlantic’s report argues that AI adoption is moving from speculation to competitive necessity. If one firm can use AI to deliver the same work cheaper and faster, competitors are forced to adopt or justify premium human labor, turning AI into a race condition rather than a discretionary upgrade.

The story interviews economists and executives who see a widening gap between official data and on-the-ground signals. Some argue that traditional objections about job creation no longer justify complacency, and that policymakers should run scenario planning for mass displacement, cascading defaults, and demand shocks instead of assuming a smooth transition.

It also highlights a new silence among CEOs, who have gone quiet about job impacts even as they make internal plans. Reid Hoffman describes three executive camps: dabblers, performative AI leaders, and quiet transformers already planning major changes. Gina Raimondo and labor leaders warn that a pure efficiency race could produce widespread harm unless companies invest in retraining and transition paths at scale.

Read more: America Isn’t Ready for What AI Will Do to Jobs

AI Ads, the End of SaaS, and the Future of Media

Author: John Collison and Ben Thompson Date: 2026-02-12 Publication: Cheeky Pint

In a wide-ranging interview, Ben Thompson traces how Stratechery’s paid model evolved from aggregation-era distribution advantages and why he still sees ads as the dominant monetization engine for consumer tech. He argues the industry’s cultural aversion to advertising is mostly performative and ignores the reality that ads are what make mass-market products free.

On AI, Thompson extends aggregation theory: in a world of intelligent interfaces, demand aggregation and distribution power remain decisive. That means the key question is who owns the user relationship and intent, not who trained the best model. He expects the ad-versus-subscription debate for AI interfaces to end in an inevitable convergence on ads for scale.

He also pushes back on “SaaS is canceled” narratives, arguing that packaging changes do not erase the need for software businesses. The real shift is in how software is discovered, sold, and bundled as agentic systems handle more workflows, while media economics are reshaped by new distribution and advertising dynamics.

Read more: AI Ads, the End of SaaS, and the Future of Media

You Are No Longer the Smartest Type of Thing on Earth

Author: Noah Smith Date: 2026-02-13 Publication: Noahpinion

Smith uses a rabbit-versus-tiger metaphor to argue that humanity’s historic advantage in intelligence is ending. AI may not think like humans, but its performance across math, science, and complex tasks already exceeds that of most people, and functional capability is what matters.

He points to rapid gains in agentic task length and the rise of vibe coding as signs that AI is taking over large portions of software work, with humans increasingly supervising rather than creating. That shift, he argues, is just the opening act.

The piece emphasizes the scale of investment and compute now being poured into AI, and the likelihood that self-improving systems and robotics will accelerate capability even further. His conclusion is stark: society is heading into a world where humans are no longer the smartest entities, and we must learn to live safely alongside far more capable systems.

Read more: You Are No Longer the Smartest Type of Thing on Earth

AI

Mad Money & The Big AI Race

Author: Om Malik Date: 2026-02-13 Publication: Om.co

Om Malik breaks down Anthropic’s latest mega-round with a focus on valuation quality rather than headline size. He compares Anthropic’s enterprise-heavy growth profile against OpenAI’s consumer-led economics and calls out the biggest unresolved risk: developers can switch model providers quickly when performance changes.

The piece adds needed discipline to this week’s funding narrative. Instead of assuming capital scale equals defensibility, it asks whether current revenue acceleration is durable and whether enterprise distribution can hold if model leadership keeps rotating.

Read more: Mad Money & The Big AI Race

OpenAI Starts Testing Ads in ChatGPT

Author: Emma Roth Date: 2026-02-09 Publication: The Verge

OpenAI has begun testing clearly labeled ads in ChatGPT, placing them in a separate area beneath conversations. The experiment targets free users and lower-cost tiers, reflecting the company’s push to diversify beyond subscriptions without inserting ads into answers themselves.

The report ties the move to a broader marketing and business-model fight. Anthropic used its Super Bowl ad to position itself as anti-ads, while OpenAI’s leadership has framed ads as a pragmatic path to scale. OpenAI says advertisers will not influence responses, and that ad personalization will not expose user conversations.

The timing underscores the company’s rapid cadence: ads arrive alongside a new model release and continued growth in usage. The test marks the first concrete step toward an ad-supported AI stack that could look much more like Google than SaaS.

Read more: OpenAI Starts Testing Ads in ChatGPT

FT: Anthropic’s Breakout Moment

Author: Financial Times staff Date: 2026-02-08 Publication: Financial Times

The FT reports that Anthropic has pulled ahead in enterprise adoption, with revenue and backlog growth reframing it as the “safer” AI bet compared with OpenAI. The piece highlights Claude Code’s traction, the scale of its funding round, and how investors are now valuing AI firms as labor-replacement platforms rather than classic SaaS. It is a crisp snapshot of how institutional capital is re-ranking the AI stack.

Read more: FT: Anthropic’s Breakout Moment

Of course they’re putting ads in AI

Author: Bryan Kim Date: 2026-02-09 Publication: a16z News

A16z argues that ads are the default mechanism for scaling consumer platforms, and that AI will follow the same path. Subscriptions alone cannot reach billions of users, especially when the majority of AI usage is low-value productivity tasks that are hard to monetize directly.

The piece uses OpenAI’s usage data to show that most queries are for general assistance rather than high-value coding, explaining why only a minority will pay. Ads are positioned as the way to bring free access to the long tail while still funding expensive inference costs.

It then outlines likely ad formats for AI: intent-based ads, contextual offers, and transactionable ads where the assistant completes purchases. The core claim is that advertising is not a moral anomaly for AI, but the natural end state for consumer-scale adoption across labs.

Read more: Of course they’re putting ads in AI

Opus 4.6 vs. Codex 5.3 and the Post-Benchmark Era

Author: Nathan Lambert Date: 2026-02-09 Publication: Interconnects

Lambert compares Anthropic’s Opus 4.6 and OpenAI’s Codex 5.3 through daily workflow friction rather than benchmark scores. He finds Codex has closed much of the gap and can edge ahead on complex coding, but Opus remains the more dependable, low-babysitting assistant across mixed tasks.

The core argument is that benchmark deltas now matter less than trust and usability. In his experience, model leadership is increasingly about the quality of end-to-end work and the smoothness of agentic workflows, not leaderboard performance.

He calls this the post-benchmark era: users will need to test models in real contexts, often using multiple systems side by side. The competitive edge shifts to product design, orchestration, and feedback loops rather than raw model scores.

Read more: Opus 4.6 vs. Codex 5.3 and the Post-Benchmark Era

What Is Claude? Anthropic Doesn’t Know, Either

Author: Gideon Lewis-Kraus Date: 2026-02-12 Publication: The New Yorker

Gideon Lewis-Kraus takes readers inside Anthropic’s interpretability efforts, emphasizing that modern language models are massive numerical systems whose internal reasoning remains opaque. The article argues that the public reaction to talking machines reflects both awe and confusion about what “intelligence” even means.

Researchers are probing Claude with psychology-style experiments, neuron analyses, and behavioral tests, hoping to map consistent internal features and understand why it responds the way it does. The piece contrasts hype-driven beliefs in machine consciousness with skeptical critiques that see LLMs as elaborate pattern systems.

The central takeaway is epistemic: we do not yet know how these systems work, and that ignorance should shape governance and deployment. The question is not only whether Claude is intelligent, but whether our own definitions of intelligence and mind are adequate for the world we are building.

Read more: What Is Claude? Anthropic Doesn’t Know, Either

AI could transform the economy by year’s end

Author: Eric Levitz Date: 2026-02-11 Publication: Vox

Vox frames AI adoption as an exponential story, citing METR data that the length of tasks models can complete has been doubling roughly every seven months. The article draws a COVID-era analogy: early signals look small, then compound into a systemic shock that most people failed to anticipate.

At the same time, it presents reasons for caution. AI systems remain error-prone, organizational inertia slows deployment, and regulated sectors may lag. There is also uncertainty about whether exponential gains will persist or plateau as technical and economic limits appear.

Even with those caveats, the piece argues that the near-term impact on white-collar work is likely substantial. If models keep improving at current rates, the economy could face a rapid productivity and labor transition before institutions are prepared.
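To make the doubling arithmetic concrete, here is a minimal sketch of what a fixed seven-month doubling time would imply if it simply continued. The function name `growth_factor` and the chosen horizons are illustrative assumptions, not anything from the Vox piece or from METR, and the result is a mechanical extrapolation rather than a forecast; the article itself notes the trend may plateau.

```python
# Illustrative only: compound growth implied by a fixed doubling time.
# DOUBLING_MONTHS reflects the "roughly every seven months" figure cited above;
# the helper name and the horizons below are hypothetical choices for this sketch.
DOUBLING_MONTHS = 7.0


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Return the multiple by which task length grows after `months`."""
    return 2.0 ** (months / doubling_months)


if __name__ == "__main__":
    for months in (7, 12, 14, 24):
        print(f"{months:>2} months: about {growth_factor(months):.1f}x")
```

Under that assumption, task length grows a little more than threefold in a year and roughly tenfold in two, which is why small early signals can compound into the kind of shock the article describes.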

Read more: AI could transform the economy by year’s end

The Model People Loved Too Much

Author: Marco Quiroz-Gutierrez Date: 2026-02-10 Publication: Fortune

OpenAI is retiring GPT-4o on February 13, and the user backlash reveals how quickly people form emotional bonds with models. Fortune and the Wall Street Journal document stories of users treating 4o as a companion, with some describing it as a mental health lifeline.

The lesson is that “model personality” is now a product surface. Deprecating a warm, conversational model in favor of a more controlled successor isn’t just a technical upgrade — it’s a relationship reset that creates real trust and wellbeing risk when users rely on the system.

Read more: The Model People Loved Too Much

Amazon Engineers Revolt: 1,500 Push for Claude Code Over Internal Kiro

Author: Business Insider staff Date: 2026-02-11 Publication: Business Insider

Business Insider reports that Amazon is pushing employees toward Kiro, its internal coding assistant, while limiting the use of Anthropic’s Claude Code for production work. That policy has triggered internal criticism, especially from engineers who feel Claude Code is better or who must sell it to customers through Bedrock.

The piece highlights a strategic contradiction: Amazon is one of Anthropic’s biggest investors and a major Claude distribution partner, yet it restricts internal use without approval. Internal forums show roughly 1,500 employees endorsing formal adoption of Claude Code, calling Kiro’s forced adoption a “survival mechanism.”

Executives defend the policy as a security and standards decision, arguing that Kiro improves efficiency and is already used by the majority of engineers. The story underscores the tension between platform strategy, product trust, and the credibility of selling external tools that the company does not fully endorse internally.

Read more: Amazon Engineers Revolt: 1,500 Push for Claude Code Over Internal Kiro

Spotify says its best developers haven’t written a line of code since December, thanks to AI

Author: Sarah Perez Date: 2026-02-12 Publication: TechCrunch

During its earnings call, Spotify said its top engineers have effectively moved above the code layer, with AI now handling most implementation work. The company framed this as a productivity inflection point rather than a novelty, tied to a year of rapid feature shipping.

Spotify described an internal system called Honk that uses Claude Code to deliver end-to-end changes. Engineers can request fixes or features from a phone, receive a testable build via Slack, and merge to production before arriving at the office.

The company also emphasized defensibility: its unique music preference data is not easily replicated by scraping the web, which makes AI acceleration more valuable for Spotify than for firms without proprietary signals. The story reads as a concrete case study of AI-driven development at scale.

Read more: Spotify says its best developers haven’t written a line of code since December, thanks to AI

Venture

Too Big to Succeed

Author: Dan Gray Date: 2026-02-08 Publication: The Odin Times

Dan Gray argues that venture capital’s brand-driven consolidation is starving the emerging-manager ecosystem even as those funds often outperform. Performance is not persistent across fund generations, yet LPs are retreating to large, established firms, leaving first- and second-time managers underfunded.

He cites data showing a sharp decline in capital raised by new funds and a contraction in the number of active VC firms. This trend, he argues, reduces competitive pressure and narrows the innovation surface area, especially outside major capital hubs.

The essay calls for a renewed commitment to small, operator-led funds with differentiated access and independent judgment. Without that pipeline, venture becomes more fragile and less capable of discovering unconventional or regional opportunities.

Read more: Too Big to Succeed

SVB: $340 Billion in VC, But Fewer Deals Than Any Year This Decade

Author: Jason Lemkin Date: 2026-02-08 Publication: SaaStr

SaaStr’s summary of SVB’s State of the Markets highlights a stark split: 2025 saw massive dollars invested but fewer deals. A tiny fraction of companies captured a disproportionate share of capital, while the long tail faced shrinking access to funding.

The report frames this as two separate venture industries. At the top, mega-rounds in late-stage private companies dominate, often generating returns through fee economics. At the bottom, early-stage fundraising has become harder, with smaller rounds and more caution from investors.

It also notes that revenue thresholds for raising have moved higher across stages while growth rates have slowed. The implication for founders is to plan for longer, harder fundraising cycles and to avoid being misled by headline capital totals.

Read more: SVB: $340 Billion in VC, But Fewer Deals Than Any Year This Decade

AngelList Fund Benchmarks Report 2025

Author: Abe Othman and Meredith Luera Date: 2026-02-12 Publication: AngelList

AngelList’s 2025 report offers an early look at 2024-vintage funds, suggesting they show stronger early TVPI than the flatlining 2021–2023 cohorts. The data points to a possible recovery driven by more rational entry valuations after the correction.

The report also emphasizes wide operational variance among emerging managers. GP commitments vary dramatically across quartiles, and fundraising timelines range from just a couple of months to more than two years, underscoring how uneven fund formation has become.

A notable threshold appears around $20 million in fund size, above which audits are more common and endowments begin to show up as significant LPs. The takeaway is that institutional readiness and operational discipline are increasingly decisive for managers seeking larger pools of capital.

Read more: AngelList Fund Benchmarks Report 2025

Labor

Silicon Valley Can’t Import Talent. So It’s Exporting Jobs

Author: Rest of World staff Date: 2026-02-09 Publication: Rest of World

Rest of World reports that tighter H-1B policies are pushing US tech giants to scale hiring in India rather than importing talent. Hiring across Meta, Amazon, Microsoft, Google, and others has risen sharply, with AI, cloud, and cybersecurity roles making up a large share of new postings.

The story ties the shift to policy changes that made visas more expensive and approvals harder, changing the economics of bringing workers to the US. Research cited in the piece shows companies often respond to visa rejections by offshoring high-skilled roles, with India a primary destination.

The result is a structural rebalancing of tech labor: Bengaluru and other Indian hubs are becoming central to global R&D capacity, supported by large corporate investments and the growth of global capability centers. The export of jobs is not a temporary workaround; it is becoming the default response to immigration constraints.

Read more: Silicon Valley Can’t Import Talent. So It’s Exporting Jobs

AI Burnout: The People Who Embrace AI the Most Are Burning Out First

Author: Connie Loizos Date: 2026-02-09 Publication: TechCrunch

TechCrunch highlights research suggesting that AI adoption can intensify workloads rather than ease them. In a closely observed 200-person company, employees were not pressured to do more, but AI made additional tasks feel doable, so work expanded into evenings and weekends.

The study challenges the prevailing narrative that AI will deliver effortless productivity gains. Instead, it found that output expectations rise as tools improve, creating a treadmill effect where time saved is immediately reallocated to more work.

The piece argues that without explicit boundaries and management changes, AI risks turning organizations into burnout machines. The human cost becomes the hidden downside of productivity gains that are celebrated in boardrooms.

Read more: AI Burnout: The People Who Embrace AI the Most Are Burning Out First

IBM will hire your entry-level talent in the age of AI

Author: Rebecca Szkutak Date: 2026-02-12 Publication: TechCrunch

IBM plans to triple entry-level hiring in the US in 2026, a counter-signal to narratives about AI eliminating junior roles. Company leaders say the jobs will be redesigned to emphasize customer engagement and judgment rather than routine coding.

The move is framed as a long-term workforce strategy: even if AI automates parts of today’s entry-level work, companies still need a pipeline that grows into senior roles. Cutting junior hiring might boost short-term efficiency but damages institutional capacity over time.

IBM’s stance suggests a more nuanced future where AI reshapes the content of entry-level jobs rather than eliminating them outright. It positions the company as betting on skill development and human-facing work as the foundation of future enterprise capability.

Read more: IBM will hire your entry-level talent in the age of AI

Infrastructure

Meta’s Icepocalypse: Data Centers Meet Winter

Author: Justine Calma Date: 2026-02-11 Publication: The Verge

The Verge reports from North Louisiana, where a winter storm exposed the fragility of power infrastructure just as Meta builds its biggest data center. Residents near the site experienced multi-day outages, raising fears about what happens when the energy-hungry facility goes online.

Meta’s $27 billion project will rely on new gas plants and is expected to consume several times the electricity used by New Orleans. Local advocates worry that grid upgrades and fuel price spikes will push utility bills higher, shifting AI’s infrastructure costs onto communities.

The story frames the data center boom as a political and economic test: promises of investment and jobs against the risk of higher energy costs and reliability issues. The “icepocalypse” becomes a tangible preview of the strain AI infrastructure can place on local power systems.

Read more: Meta’s Icepocalypse: Data Centers Meet Winter

Amazon Builds an AI Content Marketplace

Author: Lucas Ropek Date: 2026-02-10 Publication: TechCrunch

TechCrunch reports that Amazon is exploring a marketplace where publishers could license content directly to AI companies. The plan, first reported by The Information, would formalize a scalable path for training data and model access at a time when copyright lawsuits continue to mount.

Amazon did not confirm the specifics but emphasized its broad partnerships with publishers. The concept mirrors Microsoft’s Publisher Content Marketplace, positioning licensing as a standardized revenue stream rather than one-off deals.

If built, the marketplace could rewire the economics of data rights. Publishers would become suppliers in a structured market, while AI companies gain legal access at scale. The open question is whether such revenue can offset the traffic losses caused by AI-generated summaries and answers.

Read more: Amazon Builds an AI Content Marketplace

Regulation

Section 230 turns 30 as it faces its biggest tests yet

Author: Lauren Feiner Date: 2026-02-08 Publication: The Verge

The Verge marks Section 230’s 30th anniversary by charting how a law that once protected fledgling internet platforms is now a political lightning rod. The statute’s core shield for user-generated content and its Good Samaritan clause are under attack from lawmakers and litigants who want platforms to bear more liability.

The piece highlights new legislative efforts to sunset the law, including proposals that would force Congress to revisit platform immunity. Advocates argue the law enables harms from social media and algorithmic amplification, while defenders warn that repeal would chill speech and harm smaller platforms.

The debate is no longer theoretical: courts are being asked to narrow the statute, and policymakers are split between reform and preservation. Section 230’s future will shape not just social media, but how AI-generated content and platform governance are handled in the next decade.

Read more: Section 230 turns 30 as it faces its biggest tests yet

Meta’s “Name Tag”: Facial Recognition Returns to Smart Glasses

Author: Kashmir Hill and Mike Isaac Date: 2026-02-13 Publication: The New York Times

The New York Times reports Meta is preparing a facial-recognition feature, “Name Tag,” for Ray-Ban smart glasses that can identify people nearby and surface profile information in real time. The reporting suggests rollout timing was discussed in a way that could reduce early public scrutiny.

This story raises the governance bar for AI hardware: the core issue is not chatbot output quality but ambient surveillance in daily life. It reframes regulation as a deployment and civil-liberties problem where interface design, consent, and context matter as much as model capability.

Read more: Meta’s “Name Tag”: Facial Recognition Returns to Smart Glasses

Politics

Anthropic’s $20M Super PAC: The AI Policy War Goes Electoral

Author: Emily Wilkins Date: 2026-02-12 Publication: CNBC

Anthropic is taking the AI policy fight into elections, backing a super PAC that will support candidates favoring stronger AI regulation. The move makes explicit what has been implicit all week: model labs are no longer competing only on products and business models; they are also competing on the rules that govern them. For readers tracking the governance trajectory of AI, this is the clearest sign yet that the industry is becoming a political actor.

Read more: Anthropic’s $20M Super PAC: The AI Policy War Goes Electoral

OpenAI’s President Gave Millions to Trump. He Says It’s for Humanity

Author: Maxwell Zeff Date: 2026-02-12 Publication: WIRED

WIRED reports that OpenAI president Greg Brockman has become a major political donor, giving $25 million to the pro-Trump MAGA Inc. super PAC and another $25 million to the bipartisan AI-focused PAC Leading the Future, with an additional $25 million pledge for 2026. Brockman argues the donations serve OpenAI’s mission to ensure AI benefits humanity.

The story portrays the move as a sharp shift for a previously low-profile donor, driven in part by public skepticism of AI. Brockman says backing pro-AI candidates is necessary even if the politics are unpopular, framing the effort as “team humanity” rather than corporate interest.

The donations have sparked backlash, including the QuitGPT campaign and internal discomfort at OpenAI. The company insists the giving is personal, but the episode highlights how AI leadership is now deeply entangled with electoral politics and public trust.

Read more: OpenAI’s President Gave Millions to Trump. He Says It’s for Humanity

Interview of the Week

Can Billionaire Backlash Save Democracy?

Author: Andrew Keen Date: 2026-02-12 Publication: Keen On America

Andrew Keen interviews Oxford political scientist Pepper Culpepper about his book “Billionaire Backlash,” which argues that corporate scandals can catalyze democratic reform. The thesis is that scandals surface “latent opinion,” turning vague frustration into actionable political demand for regulation.

Culpepper cites cases like Cambridge Analytica, which helped drive California privacy law, and Samsung’s bribery scandal, which contributed to Korea’s Candlelight Protests. He contrasts these outcomes with China’s authoritarian crackdowns, which can punish individuals but still erode trust rather than rebuild it.

The episode stresses the role of policy entrepreneurs who convert outrage into legislation. In that view, billionaire backlash is not just a destabilizing force; it can be the trigger that forces institutions to renew themselves.

Read more: Can Billionaire Backlash Save Democracy?

Startup of the Week

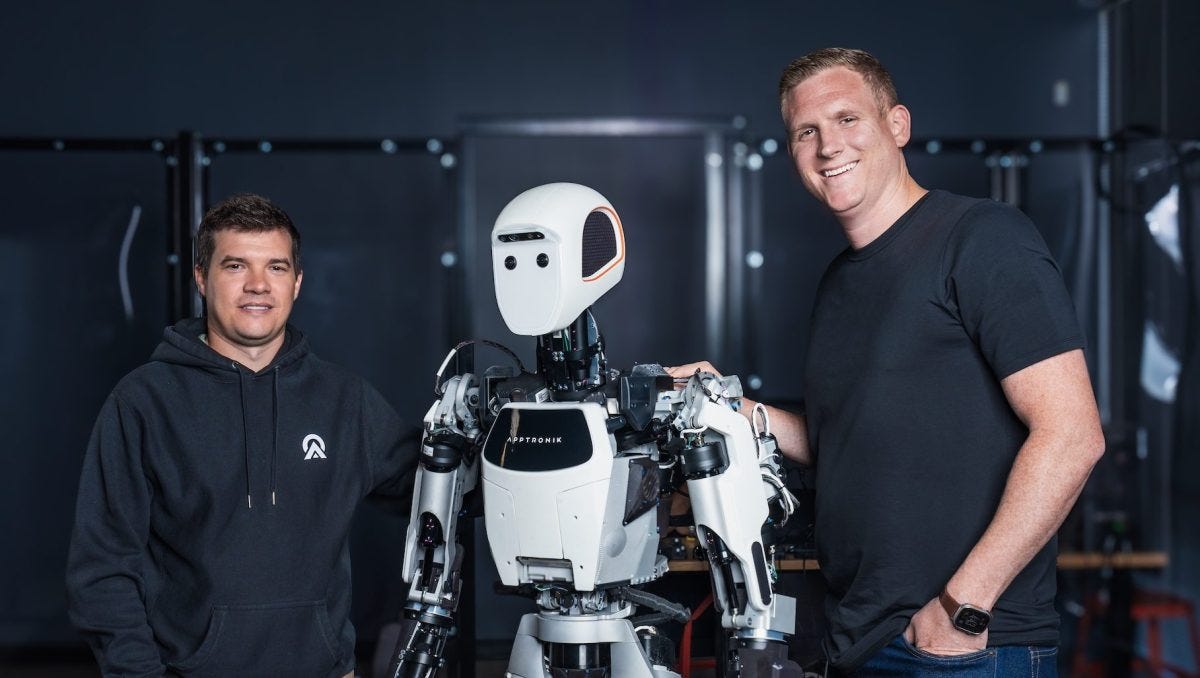

Apptronik — Humanoid Robots at Scale

Author: Julie Bort Date: 2026-02-11 Publication: TechCrunch

Apptronik has reopened its Series A to reach $935 million, with TechCrunch estimating a post-money valuation around $5.3 billion. The company says demand from investors was strong enough to expand the round multiple times, with Google, Mercedes-Benz, and B Capital leading new funding.

The startup, a University of Texas spinout, is building humanoid robots like Apollo for industrial tasks such as warehouse logistics and factory work. It has partnerships with Google DeepMind and GXO, positioning itself as a leader in embodied AI rather than pure software automation.

The funding highlights investor conviction that physical-world automation is the next frontier. Apptronik’s roots in the NASA-DARPA Robotics Challenge and its long development timeline underscore how capital-intensive and technically hard the humanoid category remains.

Read more: Apptronik — Humanoid Robots at Scale

Post of the Week

Mad Money & The Big AI Race

Author: Om Malik Date: 2026-02-13 Publication: Om.co

Om Malik’s piece is the cleanest reset on this week’s AI funding noise. Instead of treating billion-dollar rounds as proof of durable advantage, he separates financing momentum from underlying defensibility and asks the question most people are dodging: how sticky is model leadership when developers can switch quickly?

He frames Anthropic’s giant raise as a signal worth respecting but not romanticizing. Enterprise-heavy growth can look safer than consumer volatility, but both paths still depend on fast-moving model quality and distribution control. That keeps the strategic center of gravity on switching costs, product lock-in, and ownership of user demand, not headline valuation.

This is why it is my post of the week: it puts discipline back into the conversation at exactly the moment the market is tempted by narrative over structure. If you want one link that explains the difference between scale and staying power, start here.

Read more: Mad Money & The Big AI Race

A reminder for new readers. Each week, That Was The Week includes a collection of selected essays on critical issues in tech, startups, and venture capital.

I choose the articles based on their interest to me. The selections often include viewpoints I can't entirely agree with. I include them if they make me think or add to my knowledge. Click on the headline, the contents section link, or the ‘Read more’ link at the bottom of each piece to go to the original.

I express my point of view in the editorial and the weekly video.