From the Editor: Andrew is traveling this week, so there is no video. In its place is a short snippet from 20VC with Harry Stebbings, Rory O’Driscoll and Jason M. Lemkin 🦄, which forms part of this week’s venture section alongside an article by Rob Hodgkinson and Jason M. Lemkin 🦄.

Contents

Essays

AI

Media

Venture

a16z Raises $10BN in New Funds & Mercor Raises $350M at a $10BN Valuation

Sequoia Capital: why the next Rockefellers are building AI startups right now

And the winner of Startup Battlefield at Disrupt 2025 is: Glīd

#287: Inside RAISE Global 2025 — How Fund Models Are Evolving

Silicon Valley chip start-up raises $100mn to take on TSMC and ASML

Sequoia: Today we’re launching our newest set of Early Stage funds

Crypto

Startup of the Week

Post of the Week

Editorial: Can OpenAI Shape Our Future?

Two facts from this week tell the whole story.

First, Microsoft locked in a 27% stake and commercial rights to OpenAI’s frontier models through 2032, as OpenAI restructured and left its nonprofit holding a stake valued at $130B.

Second, Chinese open models—led by Qwen—overtook U.S. peers in downloads, with Airbnb’s Brian Chesky saying:

“We’re relying a lot on Alibaba’s Qwen. It’s very good. It’s also fast and cheap… we use OpenAI’s latest models, but we typically don’t use them that much in production because there are faster and cheaper models.”

Here’s the tension we need to name and understand. Capitalism builds big, fast systems. Open source and the long tail democratize and discipline them. One does not replace the other. Innovation needs both.

The big private foundation models are consolidating because scale wins on timelines that matter.

Microsoft’s 27% stake, OpenAI’s restructuring, and Sam Altman’s public clock (an AI research “intern” by 2026 and a “researcher” by 2028, backed by an ambition to add roughly one gigawatt of AI compute per week) are not vibes; they’re industrial policy by private contract.

The money is real: a16z’s new $10B, Mercor’s $10B valuation for the human-in-the-loop engine behind ChatGPT, global data center buildouts from São Paulo to Saigon, and even atoms-based plays like TechCrunch Disrupt winner Glīd (moving steel boxes faster) and Substrate’s $100M particle-accelerator challenge to EUV lithography. You can dislike concentration; you can’t deny its execution speed, capital-raising capability, and scale.

The open-source LLM builders are rebelling in productive ways. Qwen’s surge and DeepMind’s model show the counter-thesis: smaller, efficient models can be “good enough,” fast, and cheap, which is often the right call in production.

Creators are voting with their feet (top Substack writers to Patreon; podcasters to TV), and the long tail of software is about to mirror YouTube’s history: LLMs turn “anyone with an idea” into an app publisher. This is how we keep the big companies honest. Technology empowers competitors.

The real argument is not large for-profit LLM companies vs. open source; it’s how to distribute the vast wealth that AI can catalyze.

Zoom’s Eric Yuan says AI should deliver a three- or four-day week. I would add that this trends toward a three-, two-, one-, and eventually zero-day week over the next two to three decades.

On the other hand, the FT warns retailers that agentic commerce can disintermediate their customer relationships, while Fast Company reminds us that “AI abstinence won’t work.”

If we let the large LLM companies drive wealth creation and earn a lot of revenue, but leave the gains in their hands, we will have built the wrong future. Perhaps surprisingly, a certain D. Trump suggested this week that the US government should take 10% ownership of all AI companies (and beyond) and preserve that wealth for the people. A mechanism to capture the benefits of AI for all is needed, and not only for US citizens.

Whether we can trust government to act as custodian for the benefit of the people is a separate discussion. But a mechanism is required, and 10% would be a smallish starting point.

Which is why the week’s most important idea is predistribution, not philanthropy. The $130B gift to OpenAI’s founding nonprofit is notable—but foundation giving is not a social contract. We need durable, auditable rails that share upside as capability compounds. Here are some of the ideas surfacing:

Universal Basic Credit, not just UBI, is one idea raised this week: citizen equity in the rails (compute, models, and data), so that dividends grow with usage. Giving individuals ownership of that growth is the interesting element.

A value exchange between AI and the web: links to relevant content and products are surfaced inside AI answers, so the resulting traffic drives revenue for the sites they point to and the AI is paid a portion.

Industrial policy with teeth: public co‑ownership in strategic AI infrastructure, not just subsidies, to translate capex into national dividends.

What to watch next

Retailer data sovereignty in agentic commerce: who owns the customer when the agent acts?

Policy pilots for Universal Basic Credit and AI‑dividend funds.

Bottom line: Capitalism will build the AI and grow GDP worldwide. Open source will complement it and constrain ownership of customers. Policy must make sure the gains show up in our time, not just on one balance sheet. If we really believe AI can shrink the workweek, and it can, then we owe ourselves mechanisms that pay everyone when the machines do the work.

Essay

Universal basic credit would create a fair AI economy

FT • October 31, 2025

Essay•AI•Economy•Universal Basic Credit•Inequality

The rapid advancement of artificial intelligence necessitates a fundamental rethinking of economic policy, with universal basic credit emerging as a more effective alternative to traditional universal basic income. This approach focuses on predistribution rather than redistribution, aiming to close the inequality gap by providing citizens with access to capital and productive assets before wealth becomes concentrated. As AI systems automate more cognitive work and generate substantial economic value, the traditional labor-based distribution model becomes increasingly inadequate for ensuring broad-based prosperity.

The Predistribution Advantage

Universal basic credit represents a shift from reactive wealth redistribution to proactive asset distribution. Unlike universal basic income, which transfers cash after wealth has been accumulated by a small segment of society, universal basic credit would provide citizens with direct stakes in productive AI systems and other capital assets. This approach recognizes that the fundamental challenge of AI-driven economies isn’t necessarily scarcity of goods, but rather the distribution of ownership and access to the means of production.

The predistribution model addresses several critical shortcomings of traditional redistribution systems. By giving people ownership stakes from the outset, it creates sustainable wealth-building pathways rather than temporary consumption support. This approach also aligns incentives more effectively, as citizens become direct beneficiaries of economic growth and technological advancement rather than passive recipients of government transfers.

Implementation Framework

Several implementation models have been proposed for universal basic credit, each with distinct advantages. One approach involves creating sovereign wealth funds that would hold equity in major AI companies and distribute dividends to all citizens. Another model suggests providing every adult with a starter portfolio of AI-related assets that could grow over time. A third approach focuses on giving citizens direct access to AI tools and computing resources they could use for entrepreneurial activities.

The common thread across these models is the recognition that in an AI-dominated economy, traditional work-based income may become insufficient for many people. By ensuring everyone has a stake in the capital that drives economic growth, universal basic credit could prevent the extreme wealth concentration that characterized previous technological revolutions while maintaining incentives for innovation and productivity.

Economic and Social Implications

This approach could fundamentally reshape how societies think about work, value creation, and economic participation. Rather than viewing AI primarily as a threat to employment, universal basic credit reframes technological advancement as an opportunity to democratize wealth creation. It acknowledges that in an economy where AI systems perform much of the productive work, traditional employment relationships may need to be supplemented with alternative forms of economic participation.

The transition to such a system would require careful design to avoid unintended consequences. Key considerations include balancing universal access with performance incentives, ensuring the system doesn’t discourage productive work where it remains valuable, and creating mechanisms that allow for individual choice and entrepreneurship. Properly implemented, universal basic credit could create a more resilient economic foundation where technological progress benefits society broadly rather than concentrating wealth among a small technological elite.

Venture Industrial Policy

Credistick • October 26, 2025

Venture•Essay

The concept of venture industrial policy represents a strategic shift in how governments are approaching economic development and technological competitiveness. Many countries are actively developing policies aimed at fostering domestic venture capital ecosystems, with the explicit goal of closing the significant gap with the United States. This approach recognizes that traditional industrial policy, focused on established industries, must evolve to address the unique needs of high-growth technology startups and the venture capital that fuels them.

The European Context and Growth-Capital Gap

In the European Union, a central premise driving this policy shift is the identification of a substantial “growth-capital gap” within its private markets. This gap refers to the critical shortage of funding available for scaling companies that have moved beyond the initial seed or early-stage funding rounds but are not yet ready for public markets or acquisition. This funding void is seen as a fundamental constraint limiting the potential of EU-based companies to achieve global scale, thereby hindering the region’s overall economic dynamism and its ability to compete in key technology sectors.

Strategic Objectives and Policy Mechanisms

Venture industrial policy moves beyond simple subsidy models to create more sophisticated, market-oriented interventions. The core objective is to create positive-sum games where public capital catalyzes rather than crowds out private investment. Key mechanisms being explored and deployed include:

The establishment of fund-of-funds that invest in private venture capital firms, thereby increasing the total capital available for startups.

Co-investment schemes where government-backed entities invest alongside private venture capitalists, de-risking deals for private investors.

Regulatory reforms aimed at making it easier for institutional investors like pension funds and insurance companies to allocate capital to the venture capital asset class.

Direct investment in strategic sectors deemed critical for national or regional security and economic sovereignty, such as semiconductors, artificial intelligence, and quantum computing.

Implications and Global Competition

This trend signifies a new phase in global economic competition, where technological leadership is increasingly intertwined with national security and economic prosperity. The proactive stance of various governments challenges the notion of venture capital as a purely market-driven phenomenon, reframing it as a strategic industry worthy of state support. The success or failure of these policies will have profound implications for the global distribution of technological innovation, influencing which companies and which regions dominate the next wave of technological change. The ultimate test will be whether these state-backed ecosystems can generate self-sustaining, profitable venture markets that produce world-leading companies without creating market distortions or dependency on continued public support.

AI abstinence won’t work

Fast Company • October 27, 2025

AI•Tech•LLMs•Cognitive Offloading•Digital Wellbeing•Essay

As AI oozes into daily life, some people are building walls to keep it out for a host of compelling reasons. There’s the anxiety about a technology that requires an immense amount of energy to train and contributes to runaway carbon emissions. There are the myriad privacy concerns: At one point, some ChatGPT conversations were openly available on Google, and for months OpenAI was obligated to retain user chat history amid a lawsuit with The New York Times. There’s the latent ickiness of its manufacturing process, given that the task of sorting and labeling this data has been outsourced and underappreciated. Lest we forget, there’s also the risk of an AI oopsie, including all those accidental acts of plagiarism and hallucinated citations. Relying on these platforms seems to inch toward NPC status—and that’s, to put it lightly, a bad vibe.

Then there’s that matter of our own dignity. Without our consent, the internet was mined and our collective online lives were transformed into the inputs for a gargantuan machine. Then the companies that did it told us to pay them for the output: a talking information bank spring-loaded with accrued human knowledge but devoid of human specificity. The social media age warped our self-perception, and now the AI era stands to subsume it.

Amanda Hanna-McLeer is working on a documentary about young people who eschew digital platforms. She says her greatest fear of the technology is cognitive offloading through, say, apps like Google Maps, which, she argues, have the effect of eroding our sense of place. “People don’t know how to get to work on their own,” she says. “That’s knowledge deferred and eventually lost.” As we give ourselves over to large language models, we’ll relinquish even more of our intelligence.

Exposure avoidance

The movement to avoid AI might be a necessary form of cognitive self-preservation. Indeed, these models threaten to neuter our neurons (or at least how we currently use them) at a rapid pace. A recent study from the Massachusetts Institute of Technology found that active users of LLM tech “consistently underperformed at neural, linguistic, and behavioral levels.”

People are taking steps to avoid exposure. There’s the return of dumbphones, high school Luddite clubs, even a TextEdit renaissance. A friend who is single reports that antipathy toward AI is now a common feature on dating app profiles: not using the tech is a “green flag.” A small group of people claim to avoid using the technology entirely.

But as people unplug from AI, we risk whittling the overwhelming challenge of the tech industry’s influence on how we think down to a question of consumer choice. Companies are even building a market niche targeted toward the people who hate the tech.

Even less effective might be cultural signifiers, or showy—perhaps unintentional—declarations of individual purity from AI. We know the false promise of abstinence-only approaches. There’s real value in prioritizing logging off, and cutting down on individual consumption, but it won’t be enough to trigger structural change, Hanna-McLeer tells me.

Our age of kings

Noahpinion • October 26, 2025

Essay•GeoPolitics•Authoritarianism•Media•Democracy

In the contemporary global landscape, a distinct trend has emerged where strongman leaders are gaining prominence, a phenomenon driven by the profound societal disruptions caused by new media technologies. This dynamic represents a fundamental shift in the relationship between political authority and information dissemination. The central argument posits that these leaders are essentially offering a “cure” for the chaos and fragmentation of the digital public square, but that this proposed solution—characterized by centralized control and authoritarian tendencies—is ultimately more damaging than the initial problem it seeks to solve.

The Disruption of New Media

The rise of social media, algorithm-driven news feeds, and the 24-hour news cycle has fundamentally dismantled traditional information gatekeepers. This has led to several critical consequences:

Erosion of Shared Reality: The proliferation of niche information bubbles and echo chambers has made it increasingly difficult for societies to agree on a common set of facts, undermining democratic discourse.

Amplification of Outrage: The economic models of new media platforms often incentivize content that generates high engagement, which frequently means promoting outrage, misinformation, and tribal conflict over nuanced debate.

Weakened Institutions: Traditional institutions, including the mainstream press and political parties, have seen their authority decline as they compete with a vast, unregulated digital arena for public attention.

This environment of perpetual information warfare and social anxiety creates a powerful demand for order and simplicity. Citizens overwhelmed by complexity and conflict become more receptive to leaders who promise to cut through the noise and restore national cohesion and purpose.

The Strongman’s Appeal

In this context, the strongman leader presents themselves as the antidote to chaos. They offer a return to a perceived golden age of stability and national pride, often by employing a specific toolkit:

Centralizing Power: They systematically weaken checks and balances, targeting independent judiciaries, legislatures, and media to consolidate decision-making.

Promoting a Singular Narrative: By controlling state media and intimidating independent press, they enforce a single, state-sanctioned version of events, positioning themselves as the sole arbiter of truth.

Identifying Scapegoats: They often channel public frustration toward designated enemies, both foreign and domestic, to foster internal unity and deflect blame from governance failures.

This approach can be superficially appealing, as it replaces the exhausting cognitive load of navigating a complex information ecosystem with the simplicity of a single, commanding voice. The strongman promises to resolve the “disease” of informational overload by imposing a rigid, top-down order.

Why the Cure is Worse

However, the analysis concludes that this authoritarian “cure” is far more perilous than the disease of media disruption. While new media creates fragmentation, the strongman’s solution leads to systemic oppression and the erosion of fundamental freedoms. The trade-off is not between chaos and order, but between a messy, open society and a controlled, closed one. The strongman model sacrifices pluralism, dissent, and individual rights for the sake of a forced and fragile stability. It addresses the symptoms of societal breakdown not by healing the underlying causes—such as economic inequality or social distrust—but by silencing the patients.

Ultimately, the challenge for modern democracies is to develop resilience and new forms of social cohesion that can withstand the pressures of the digital age without resorting to authoritarian shortcuts. Building robust, trustworthy institutions and fostering media literacy may be a more difficult and protracted path, but it is the only one that preserves liberal democratic values. The age of kings is a regression, not a solution, offering a false comfort that comes at the ultimate price of liberty.

The future of the web is the history of YouTube

a16z • Anish A • October 28, 2025

Essay•AI•SoftwareDevelopment•YouTube•ContentCreation

Fifteen years ago, if you asked “what are smart people doing on weekends?” a really good answer would’ve been “making YouTube videos.”

YouTube celebrated its 20th anniversary earlier this year, and while we all enjoy a good reminiscence of the early videos that made us laugh, we don’t always appreciate how countercultural it was to actually be a YouTuber in those early days. Even after the YouTube Partner Program launched in 2007, the idea that you could earn a living from a channel seemed far-fetched for a while. The world already had so much video content, from the major studios down through the long tail of the 110th channel in your cable bundle: how could there possibly be any commercial demand for even more?

We now know, in hindsight, that the world was actually short video 15 years ago. And we know that because of what’s happened on YouTube since. When anyone with a camera and an editing suite can find their audience, we discover all kinds of channels and businesses - from Hot Ones to Mr Beast to Dwarkesh - that obviously deserve prominent placement in our content universe. The supposed “long tail” was so much bigger than anyone could’ve known.

Maybe this is the right historical guide for thinking about LLMs, web apps, and the future of the internet. YouTube changed content forever by collapsing “creatively making content” and “running a microbusiness” into a straightforward sequence of steps. You still needed to be creative and driven, but the rest got a lot simpler. So why shouldn’t we see the same thing happen with software?

The web has always been great at facilitating permissionless creation by anyone. But it wasn’t until LLMs that the definition of “anyone” changed from “developers” to “literally anyone with an idea and access to a coding agent.” Five years from now, we might look back and realize that the world was short software, because the only people equipped to build it were engineers. In other words, this is the YouTube moment for the rest of the internet.

LLMs finally make it possible to build niche software and apps that previously would not have made it to market. You wouldn’t hire a bunch of engineers to build a product for 100 people, but you can build (and monetize!) smaller-TAM products using app-gen and coding tools.

Long-tail web apps will be built by a particular kind of “professional”, and YouTubers are the best template we have for what that kind of professional looks like.

Paul Bakaus has pointed out that when people talk about the internet, they’re actually talking about three distinct things: the content layer of the web, which consists of blogs, sites like YouTube and Substack, and traditional publishers; the commerce layer of the web, which consists of marketplaces like Amazon and Shopify stores; and the app layer of the web, which for the majority of the internet’s history consisted of “serious” cloud-based software, like enterprise platforms and social networks.

The Vinci Code: How AI is Turning Everyone into James Bond

Keenon • Andrew Keen • October 28, 2025

Essay•AI•Espionage•National Security•China

Anthony Vinci’s provocative thesis in “The Fourth Intelligence Revolution” argues that artificial intelligence is fundamentally transforming espionage to the point where every citizen must become a modern-day James Bond to protect national security. As AI democratizes intelligence capabilities, Vinci contends that traditional boundaries between professional spies and ordinary citizens are dissolving, creating a landscape where everyone is both a potential target and an intelligence asset.

The Core Argument: A New Intelligence Paradigm

Vinci identifies what he calls the “Fourth Intelligence Revolution”, a fundamental shift driven by AI technology and the escalating geopolitical competition between the United States and China. This revolution extends intelligence operations beyond traditional military and diplomatic spheres into economics, technology, and everyday civilian life. The central premise is that intelligence is no longer the exclusive domain of government agencies: it has become accessible to, and now directly affects, ordinary citizens.

Economic Espionage as the New Battleground

One of Vinci’s most significant predictions involves the rise of economic espionage as the defining feature of modern intelligence competition. He argues that America must develop capabilities in economic intelligence gathering - a domain where it has historically been reluctant to operate. This shift represents a fundamental change from traditional espionage focused on military secrets to one centered on markets, infrastructure, and intellectual property.

AI Versus AI: The Future of Espionage

Vinci envisions a future where espionage becomes largely automated, with artificial intelligence systems spying on and countering other AI systems. He describes this as a world where “our machines will spy on their machines,” transforming the traditional spy-versus-spy dynamic into algorithm-versus-algorithm competition. This technological arms race represents the next phase in intelligence evolution.

The Citizen as Intelligence Operative

Perhaps the most striking aspect of Vinci’s argument is his assertion that every citizen must now develop basic intelligence skills. In the digital era, individuals have become primary targets for state and non-state actors seeking to collect data, influence behavior, and manipulate information. This requires ordinary people to adopt counter-intelligence practices traditionally reserved for professional operatives.

China as the Central Adversary

While acknowledging threats from Russia and other autocracies, Vinci positions China as America’s primary intelligence adversary. He points to platforms like TikTok and widespread cyber-hacking operations as evidence of Beijing’s strategy to shape global perceptions and exploit American data. This makes Vinci’s framework as much about information warfare as traditional espionage.

The author of the article expresses skepticism about Vinci’s alarmist perspective, describing it as “paranoia layered upon paranoia layered upon more paranoia” that borrows heavily from Cold War thinking. However, the analysis acknowledges that Vinci’s background as both a “spook watcher and player” lends credibility to his warnings about the transformative impact of AI on global intelligence operations.

Bring back liberal nationalism

Noahpinion • October 28, 2025

Essay•GeoPolitics•LiberalNationalism•Democrats•Polling

Thesis and Context

The author argues that the American left should “rediscover the Americanism of FDR and JFK,” calling for a renewed liberal nationalism that fuses progressive goals with a shared national identity. Framed as an urgent political intervention, the piece contends that despite widespread disapproval of the current administration’s performance, Democrats are failing to capitalize—and may even be losing the narrative war—because the public does not associate them with patriotic competence or mainstream ideas.

Diagnosis of Governance and Public Mood

The article opens with a searing critique of the administration’s record: “Tariffs are punishing the U.S. economy,” “Masked federal agents are rampaging through the streets of American cities,” “Corruption is rampant,” and public health leaders “don’t even believe in the germ theory of disease.” This litany is used to set up a paradox: even as governance appears chaotic and illiberal, the president’s approval “keeps slipping” (attributed to Nate Silver) but not in ways that translate into a decisive advantage for Democrats. The broader implication is that poor performance in office is not automatically producing an electoral realignment.

The Polling Paradox

Three referenced data points deepen the puzzle:

Approval parity: The two parties are “neck and neck” in approval, with the GOP slightly ahead—counter to expectations of a thermostatic backlash to overreach (visualized via Nate Silver).

Extremity perception: Despite rhetoric about a “third term,” ending birthright citizenship, and press crackdowns, the GOP is perceived as “no more extreme than the Dems” (attributed to G. Elliott Morris).

Issue ownership: Polling suggests the public “prefers Republican ideas over Democratic ones on almost all issues” (attributed to Reuters).

Together, these signals suggest that the median voter does not view Democrats as the safe, centrist, or patriotic alternative. The piece portrays this gap as both strategic and existential: the party positioned to check illiberal tendencies is failing to persuade the electorate that it is mainstream.

Argument for Liberal Nationalism

Against this backdrop, the author urges the left to reclaim an affirmative national story anchored in historically successful Democratic leadership. Invoking FDR and JFK, the essay implies a politics that is unapologetically American—optimistic about national capability, confident in democratic institutions, and willing to pursue ambitious public projects. Rather than ceding “nationalism” to illiberal or exclusionary narratives, liberal nationalism would bind social solidarity, civic equality, and economic dynamism to a shared civic identity. The implicit claim is that such a frame can realign perceptions of Democratic ideas from “extreme” to “common-sense American,” rebuild trust, and translate disapproval of governing failures into durable majorities.

Implications

If the public’s issue preferences and extremity perceptions are misaligned with Democrats’ self-understanding, messaging and policy need a national frame that resonates beyond partisan subcultures. Liberal nationalism offers that bridge: it situates social insurance, competent governance, and equal citizenship within a story of American renewal—one that can counter illiberal nationalism on its own symbolic terrain while mobilizing a broad coalition.

Key takeaways:

Governance failures alone won’t deliver a backlash; identity and mainstream signaling matter.

Polls indicate parity or GOP edge on approval, extremity perceptions, and issue preferences.

Reclaiming patriotic language and national purpose may shift the perceived center of gravity.

FDR/JFK-style Americanism is presented as a proven template for broad-based legitimacy.

Without a unifying national story, Democrats risk remaining out of step with the median voter.

AI

Microsoft to Get 27% Stake of OpenAI, AI Model Access

Bloomberg • October 28, 2025

AI•Funding•Microsoft•OpenAI•Partnership

Microsoft has solidified its position as a dominant force in the artificial intelligence sector by finalizing a landmark agreement with OpenAI. The deal gives Microsoft a 27% ownership stake in the pioneering AI research company, valuing OpenAI at approximately $500 billion. The stake is worth about $135 billion, making this one of the largest corporate partnerships in the technology industry’s history, and the deal fundamentally reshapes the competitive landscape of AI development.
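As a quick consistency check on the reported figures (a simple calculation that takes the rounded 27% stake and the approximate $500 billion valuation at face value), the implied value of Microsoft’s stake is:

```latex
V_{\text{stake}} \approx 0.27 \times \$500\ \text{billion} = \$135\ \text{billion}
```

This matches the roughly $135 billion cited; any small gap against other reports would come from rounding in the stake percentage and the valuation.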

Key Agreement Terms

The partnership extends far beyond a simple financial investment. A cornerstone of the deal is the guaranteed technology access Microsoft secures for its products and services. The agreement locks in Microsoft’s rights to integrate OpenAI’s AI models and underlying technology until 2032. This long-term horizon provides Microsoft with unprecedented stability and a clear roadmap for embedding advanced AI across its entire ecosystem, including Azure cloud services, the Office productivity suite, and the Windows operating system.

Most notably, Microsoft’s rights now extend to models developed after OpenAI declares Artificial General Intelligence (AGI), a declaration that under the new terms must be verified by an independent expert panel. This clause is particularly significant, as AGI refers to AI systems with human-like cognitive abilities across a wide range of tasks. Securing commercial rights to such transformative technology could give Microsoft a potentially insurmountable competitive advantage, allowing it to leverage these capabilities while other companies are still developing foundational AI models.

Strategic Implications and Market Impact

This deepened alliance has profound implications for the global AI race. By cementing its relationship with the leader in generative AI, Microsoft ensures it remains at the forefront of the industry, directly countering competitors like Google and Amazon. The deal effectively makes OpenAI’s groundbreaking research and product development a core, integrated component of Microsoft’s future growth strategy.

For OpenAI, the partnership provides the immense capital required to fund the extraordinary computational costs of training ever-larger AI models. It also offers a direct and powerful route to commercialization through Microsoft’s vast global customer base. However, this closer alignment with a single corporate partner raises questions about the future of OpenAI’s original mission of developing AI for the benefit of humanity, a structure that included a capped-profit arm to balance commercial and ethical considerations.

The agreement signals a new phase of maturity and consolidation in the AI industry, where access to frontier models may become concentrated among a few tech giants with the resources to fund their development. This could accelerate AI adoption across enterprises but also invites increased regulatory scrutiny regarding market competition and the concentration of powerful AI capabilities.

Sam, Jakub, and Wojciech on the future of OpenAI with audience Q&A

Youtube • OpenAI • October 29, 2025

AI•Tech•AGI•Compute•AIResearch

Overview

OpenAI leaders Sam Altman, Jakub Pachocki, and Wojciech Zaremba discuss the company’s research trajectory, product direction, and governance in a wide‑ranging livestream with audience Q&A. They frame progress as a staged path from today’s helpful assistants toward systems that autonomously contribute to scientific discovery, while emphasizing safety guardrails and responsible deployment. Altman positions the session as a shift from abstract AGI talk to concrete, testable milestones that the public can track over the next 24–30 months. (tomsguide.com)

Research roadmap and milestones

The team sets two public targets: by September 2026, an “automated AI research intern” running on hundreds of thousands of GPUs; by March 2028, a “true automated AI researcher” capable of independently generating and testing hypotheses. They stress these are goals, not guarantees, but argue transparency about targets is in the public interest. This reframes AGI as measurable capability, not a label. (gigazine.net)

They describe how research “intern” capabilities would span reading literature, proposing experiments, and coordinating tools with minimal hand‑holding, with the 2028 stage expected to execute multi‑step research programs end‑to‑end. (techradar.com)

Compute, scale, and costs

Altman sketches an “infrastructure factory” ambition to add roughly one gigawatt of AI compute per week over time, with a cost curve that could bring the price of an additional gigawatt down toward $20 billion within five years. He references current multiyear commitments on the order of $1.4 trillion tied to about 30 GW of new capacity, underscoring the capital intensity behind the roadmap and the importance of efficiency gains. (businessinsider.com)
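The figures in this paragraph can be sanity-checked with a few lines of arithmetic. The sketch below is a back-of-envelope calculation, not OpenAI’s own model: it assumes the ~$1.4 trillion in commitments maps entirely onto the ~30 GW of capacity (the commitments may cover more than construction) and that a year has 52 building weeks. Under those assumptions the commitments imply roughly $47 billion per gigawatt today, so reaching ~$20 billion would mean cutting unit cost by more than half, and a pace of 1 GW per week at that target price would still cost on the order of $1 trillion a year.

```python
# Figures quoted in the summary above; all approximate.
COMMITMENTS_USD = 1.4e12      # ~$1.4 trillion in cited commitments
COMMITTED_GW = 30             # ~30 GW of new capacity
TARGET_USD_PER_GW = 20e9      # long-run aspiration: ~$20B per GW
GW_PER_WEEK = 1               # "infrastructure factory" ambition

def implied_cost_per_gw(total_usd: float, gigawatts: float) -> float:
    """Average dollars per gigawatt implied by a total commitment."""
    return total_usd / gigawatts

def annual_build(gw_per_week: float, usd_per_gw: float) -> tuple:
    """Gigawatts added and dollars spent per year at a steady weekly pace."""
    gw_per_year = gw_per_week * 52
    return gw_per_year, gw_per_year * usd_per_gw

current = implied_cost_per_gw(COMMITMENTS_USD, COMMITTED_GW)
gw_year, spend_year = annual_build(GW_PER_WEEK, TARGET_USD_PER_GW)

print(f"Implied cost today: ${current / 1e9:.1f}B per GW")
print(f"Cut needed to reach the target: {1 - TARGET_USD_PER_GW / current:.0%}")
print(f"At 1 GW/week and $20B/GW: {gw_year:.0f} GW/year, ${spend_year / 1e12:.2f}T/year")
```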

They argue the investment thesis rests on demand from hundreds of millions of weekly ChatGPT users and millions of developers, who convert capability advances into real applications—evidence the speakers cite to justify continued scale‑up. (techcrunch.com)

Safety, policy, and product choices

In Q&A, Altman reiterates that adult users should have more control but not unlimited freedom; he walks back a past example about “erotica” and uses a sharp analogy—comparing totally unbounded access to “selling heroin”—to clarify OpenAI’s stance that safety restrictions will persist alongside expanded controls for verified adults. (businessinsider.com)

On model lifecycle, he acknowledges attachment to older systems (e.g., GPT‑4o) but makes no promises about indefinite availability, balancing user preference with the need to retire legacy models as capabilities and safety improve. (businessinsider.com)

The conversation reiterates a product vision of ChatGPT as a super‑assistant and customizable platform, with automation aimed first at accelerating legitimate research and complex workflows rather than chasing a single “AGI moment.” (tomsguide.com)

What it means

If achieved, the 2026 and 2028 milestones would move AI from assistant to collaborator, with implications for R&D productivity, IP creation, and the scientific labor market. But the plan presumes sustained progress on reliability, attribution, and oversight, and hinges on building—and paying for—unprecedented compute. (techradar.com)

The messaging signals OpenAI’s intent to be measured on deliverables, not definitions. Public targets create accountability but also raise expectation risk: missing dates could dent confidence, while meeting them would reset what researchers and businesses consider “table stakes” for AI. (gigazine.net)

Key takeaways

Two public targets: “research intern” by Sep 2026; autonomous “researcher” by Mar 2028. (gigazine.net)

Massive scale‑up: toward 1 GW/week of new compute; long‑run cost aspiration ≈ $20B/GW; cited commitments ≈ $1.4T for ~30 GW. (businessinsider.com)

Product direction: ChatGPT as super‑assistant and platform; progress judged by real research outcomes, not AGI rhetoric. (tomsguide.com)

Policy stance: more control for verified adults, with persistent safety limits; no guarantee to keep legacy models indefinitely. (businessinsider.com)

Why AI is a double-edged sword for retailers

Ft • October 31, 2025

AI•ECommerce•Retail•Technology•Data•Strategy

Artificial intelligence represents a transformative but complex opportunity for the retail industry, creating what industry experts describe as a “double-edged sword” for businesses navigating this new technological landscape. The emergence of agentic commerce—where AI systems autonomously make purchasing decisions on behalf of consumers—promises significant rewards but also introduces substantial risks, particularly concerning data ownership and competitive advantage.

The Promise of Agentic Commerce

Agentic commerce systems are positioned to revolutionize retail by automating consumer purchasing decisions. These AI agents can analyze preferences, monitor prices, track inventory, and execute transactions with minimal human intervention. The potential efficiency gains for both consumers and retailers are substantial, with early adopters reporting significant improvements in customer satisfaction and operational efficiency. Industry analysts project that businesses successfully implementing these systems could see double-digit percentage increases in sales conversion rates while simultaneously reducing marketing costs.
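As a rough illustration of the “monitor prices, track inventory, execute transactions” loop described above, here is a minimal sketch of a single purchase decision. The `Offer` and `Preference` types and the `choose_purchase` helper are hypothetical names invented for this example; a real agent would learn preferences and call retailer or payment APIs rather than filter a hard-coded list.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Offer:
    sku: str          # product identifier
    price: float      # current listed price
    in_stock: bool    # whether the retailer reports available inventory

@dataclass
class Preference:
    sku: str          # what the consumer wants
    max_price: float  # the most the consumer has authorized the agent to pay

def choose_purchase(pref: Preference, offers: List[Offer]) -> Optional[Offer]:
    """Return the cheapest in-stock offer within budget, or None to keep waiting."""
    eligible = [
        o for o in offers
        if o.sku == pref.sku and o.in_stock and o.price <= pref.max_price
    ]
    return min(eligible, key=lambda o: o.price, default=None)

# Usage with made-up offers:
offers = [
    Offer("coffee-1kg", 21.50, True),   # in stock but over budget
    Offer("coffee-1kg", 18.00, False),  # cheapest, but out of stock
    Offer("coffee-1kg", 19.90, True),   # eligible
]
pick = choose_purchase(Preference("coffee-1kg", 20.00), offers)
print(pick)
```

Returning `None` models the agent waiting for a better price or a restock instead of buying; keeping that rule explicit is one way to make an agent’s behavior auditable.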

The technology enables hyper-personalized shopping experiences at scale, with AI systems capable of anticipating needs before consumers even recognize them. This proactive approach to commerce represents a fundamental shift from reactive marketing strategies to predictive relationship management, creating new opportunities for customer loyalty and lifetime value optimization.

Data Dilemmas and Strategic Risks

The implementation of agentic commerce systems requires retailers to share extensive consumer data with AI platforms, creating a critical strategic vulnerability. As one retail technology executive noted, “The very data that gives you competitive advantage today could become the foundation for your competitors’ advantage tomorrow.” This data sharing creates dependency on third-party AI systems that may eventually leverage the accumulated information to compete directly with their retail partners.

The concentration of consumer insights within AI platforms raises concerns about market consolidation and the potential erosion of retail differentiation. Smaller retailers face particular challenges, as they may lack the resources to develop proprietary AI systems while simultaneously risking their unique market positions by adopting third-party solutions. This creates a paradox where the technology that promises competitive advantage could ultimately homogenize retail offerings across the market.

Implementation Challenges and Strategic Considerations

Successfully navigating the AI transformation requires careful strategic planning and significant investment in both technology and talent. Retailers must balance the immediate benefits of third-party AI solutions against the long-term value of developing proprietary capabilities. Organizations that treat AI as merely another technology implementation rather than a fundamental business transformation risk missing the broader strategic implications.

The most successful implementations appear to be those that maintain strategic control over core customer relationships while leveraging AI for operational enhancements. This hybrid approach allows retailers to benefit from AI efficiencies without completely ceding their competitive positioning to technology providers. Companies are also finding value in developing AI governance frameworks that clearly delineate which data and decisions can be delegated to automated systems versus those requiring human oversight.
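A governance framework of the kind described above can start as an explicit routing rule. The sketch below assumes a hypothetical allow-list of data fields a retailer is willing to share with a third-party agent and a hypothetical spending threshold above which a person must approve; the names (`route_decision`, `SHAREABLE_FIELDS`, `AUTO_APPROVE_LIMIT`) and the 500.0 limit are illustrative, not drawn from any real framework.

```python
from enum import Enum
from typing import Set

class Route(Enum):
    AUTOMATE = "automate"          # agent may act alone
    HUMAN_REVIEW = "human_review"  # a person must approve first
    BLOCK = "block"                # request falls outside policy entirely

# Hypothetical policy values, for illustration only.
SHAREABLE_FIELDS = {"sku", "quantity", "delivery_window"}
AUTO_APPROVE_LIMIT = 500.0

def route_decision(amount: float, fields_requested: Set[str]) -> Route:
    """Decide how an agent-initiated action is handled under the policy."""
    if not fields_requested <= SHAREABLE_FIELDS:
        # The agent wants data the retailer keeps in-house (e.g. purchase history).
        return Route.BLOCK
    if amount > AUTO_APPROVE_LIMIT:
        # Within data policy, but large enough to need a human sign-off.
        return Route.HUMAN_REVIEW
    return Route.AUTOMATE

print(route_decision(120.0, {"sku", "quantity"}))
print(route_decision(900.0, {"sku"}))
print(route_decision(50.0, {"sku", "purchase_history"}))
```

Checking the data rule before the spending limit means a restricted-data request is refused outright, whatever its size, rather than landing in a human review queue.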

Future Implications and Industry Evolution

The retail industry stands at an inflection point where early AI adoption decisions may determine market leadership for the coming decade. The companies that successfully harness AI’s potential while mitigating its risks will likely emerge as the dominant players in their respective categories. However, the rapid evolution of AI capabilities means that today’s cutting-edge implementation could become tomorrow’s strategic liability if not properly managed.

Industry observers suggest that the most sustainable approach involves developing modular AI strategies that can adapt as the technology and competitive landscape evolve. This requires ongoing investment in organizational capabilities and maintaining flexibility in technology partnerships. The retailers that thrive in this new environment will be those that view AI not as a simple tool but as a core component of their business strategy, continuously balancing innovation with strategic control.

Marc Andreessen and Ben Horowitz on the State of AI

Youtube • a16z • October 31, 2025

AI•Technology•VentureCapital•Investment•Innovation

Marc Andreessen and Ben Horowitz provide a comprehensive analysis of the current state and future trajectory of artificial intelligence, drawing from their extensive experience in technology investing. The discussion covers the technological breakthroughs, market dynamics, and societal implications of AI development, offering insights into why this technological shift represents one of the most significant in modern history.

Current AI Landscape and Breakthroughs

The conversation highlights the remarkable pace of AI advancement, particularly in large language models and generative AI. Andreessen emphasizes that we’re witnessing “the single most important technological breakthrough of our lifetimes,” comparing it to the invention of the computer, internet, and mobile computing combined. The founders discuss how AI capabilities have progressed from narrow applications to general reasoning abilities, with models now demonstrating creative problem-solving across multiple domains. They note the exponential improvement in model performance despite relatively modest increases in parameter count, suggesting fundamental architectural improvements.

Investment Philosophy and Market Opportunities

Andreessen and Horowitz outline their firm’s investment strategy, which focuses on identifying “foundational models” and “application layer” companies. They distinguish between infrastructure plays—companies building the underlying AI technology—and application companies that leverage these models to solve specific business problems. The discussion reveals their preference for “full-stack” approaches where companies control both the AI technology and user experience, creating sustainable competitive advantages. They emphasize the importance of proprietary data moats and network effects in building defensible AI businesses.

Technical Challenges and Scaling Laws

The conversation delves into the technical constraints facing AI development, including compute limitations, energy requirements, and data scarcity. Andreessen explains the concept of “scaling laws” and how they’ve driven performance improvements, but also notes emerging challenges as models grow larger. They discuss the ongoing research into more efficient architectures, training methods, and inference optimization. The founders highlight the critical importance of chip design and manufacturing capacity, noting that AI progress remains tightly coupled with semiconductor advancement.
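Scaling laws are usually stated as power laws. As one illustrative form, Kaplan et al. (2020) fit language-model loss against parameter count N as L(N) = (N_c / N)^α, with α around 0.076. The sketch below assumes that pure power law (ignoring data and compute limits) and shows why returns diminish: the constant N_c cancels in ratios, and under this exponent each doubling of parameters trims loss by only about 5%.

```python
def loss_ratio(scale_factor: float, alpha: float = 0.076) -> float:
    """Ratio L(k * N) / L(N) under a pure power law L(N) = (N_c / N) ** alpha.

    N_c cancels out of the ratio, so only the exponent matters.
    The default alpha is roughly the parameter-count exponent reported by
    Kaplan et al. (2020) and is used here for illustration only.
    """
    if scale_factor <= 0:
        raise ValueError("scale_factor must be positive")
    return scale_factor ** (-alpha)

for k in (2, 10, 100):
    print(f"{k:>4}x parameters -> loss multiplied by {loss_ratio(k):.3f}")
```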

Regulatory Environment and Societal Impact

Both founders express concerns about potential regulatory overreach that could stifle AI innovation. They argue that current regulatory proposals often misunderstand the technology’s capabilities and development trajectory. Horowitz emphasizes that “the worst outcome would be to regulate AI based on science fiction scenarios rather than actual capabilities.” They discuss the balance between safety considerations and innovation pace, suggesting that market competition and technical safety research provide more effective safeguards than premature regulation.

Future Outlook and Industry Evolution

Looking forward, Andreessen and Horowitz predict several key trends: the emergence of specialized AI models for specific industries, the integration of AI into enterprise software stacks, and the development of new interaction paradigms beyond chat interfaces. They anticipate significant productivity gains across knowledge work, creative industries, and scientific research. The discussion also covers the potential for AI to create entirely new categories of applications and services that are difficult to imagine with current technological constraints.

The founders conclude by emphasizing the transformative potential of AI while acknowledging the challenges ahead. They see current AI capabilities as merely the beginning of a multi-decade technological revolution that will reshape industries, create new economic opportunities, and address complex global challenges through accelerated scientific discovery and problem-solving capabilities.

Charts of the Week: Open model of choice?

A16z • a16z New Media • October 31, 2025

AI•Tech•OpenSource•China•HVAC

Steve Hsu coined the “skull graph moment” as the point at which an upstart overtakes an incumbent with a rate-and-pace-of-change that not only closes a previously “insurmountable” gap, but begins to create a new one, in favor of the challenger.

US-made open models just recently had something of a skull-graph moment with respect to Chinese models. For ~1.5 years, US models enjoyed a pretty fat lead as the global “open model of choice,” at least measured by downloads, but in March 2025 that began to change: Chinese model adoption began to accelerate rapidly.

In a span of less than 6 months, cumulative downloads of Chinese open models had not only overtaken US models but had begun to open a widening lead. A good chunk of that overtaking is driven by Qwen’s popularity as an open-source model (at the expense of Llama).
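The “skull graph moment” above is a statement about cumulative totals: the challenger starts behind, crosses over, then pulls away. A minimal sketch of detecting that crossover is below; `crossover_period` is a hypothetical helper, and the monthly figures are made up for illustration, not the a16z download data.

```python
from itertools import accumulate
from typing import Optional, Sequence

def crossover_period(challenger: Sequence[float], incumbent: Sequence[float]) -> Optional[int]:
    """Index of the first period in which the challenger's cumulative total
    moves from behind to ahead of the incumbent's, or None if that never happens."""
    was_behind = False
    running = zip(accumulate(challenger), accumulate(incumbent))
    for idx, (c_total, inc_total) in enumerate(running):
        if c_total < inc_total:
            was_behind = True
        elif c_total > inc_total and was_behind:
            return idx
    return None

# Made-up monthly downloads (not the a16z data): a flat incumbent
# and a challenger whose monthly downloads keep growing.
incumbent = [10, 10, 10, 10, 10, 10]
challenger = [2, 4, 8, 16, 24, 30]
print(crossover_period(challenger, incumbent))
```

Requiring the challenger to have been behind first distinguishes an overtake from a series that simply led all along; the widening gap afterward shows up in the running totals (84 vs 60 by the last month of this toy series).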

For example, Brian Chesky just told the world “we’re relying a lot on Alibaba’s Qwen. It’s very good. It’s also fast and cheap . . . we use OpenAI’s latest models, but we typically don’t use them that much in production because there are faster and cheaper models.”

To be fair, US model adoption has reaccelerated recently, as well, but the point stands: the race for model dominance is definitely on (and just getting started). The other lesson here is that, given how quickly the ecosystem is evolving, the competitive landscape today will (probably) look nothing like the landscape one year from now (let alone five years from now). In all events, the time to build is now, same as it ever was, only more so.

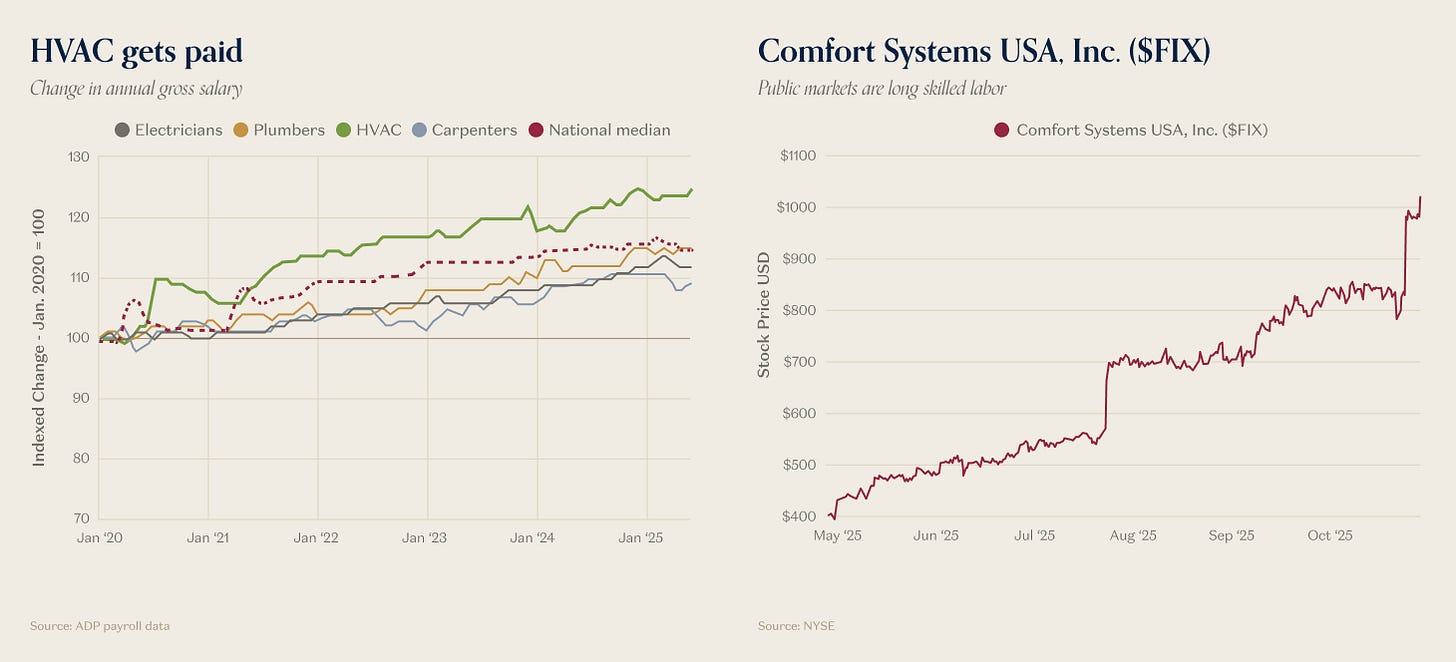

While everyone knows the skilled trades are in short supply, no skilled trade is more bottlenecked than HVAC.

HVAC wages have risen ~25% since 2020, more than double the nationwide increase in median gross salary (and more than double the gains for other skilled trades such as electricians, plumbers and carpenters).

Public markets have noticed, as well. The share price of Comfort Systems USA (which supplies skilled labor to big industrial projects) steps up with every earnings release, so its chart looks more like a staircase than a typical price chart.

Secular aging and the AI Capex Supercycle have made it an increasingly good time to be skilled in the world of atoms. The college wage premium may have gone flat, but if you know a thing or two about industrial cooling, you can write your own check.

Why AI is a double-edged sword for retailers

Ft • October 31, 2025

AI•ECommerce•Retail•Data•Strategy

The integration of artificial intelligence into retail, often termed “agentic commerce,” presents a transformative yet precarious frontier for the industry. This model involves AI agents autonomously making purchasing decisions on behalf of consumers, promising immense efficiency gains but at the potential cost of ceding critical strategic data.

The Promise of Agentic Commerce

The potential pay-offs for retailers who successfully implement agentic commerce systems are substantial. AI agents can streamline the entire shopping journey, from product discovery to checkout, reducing friction and increasing conversion rates. For consumers, this means a highly personalized and convenient experience where their preferences are anticipated and fulfilled automatically. For businesses, it unlocks new levels of operational efficiency in inventory management, dynamic pricing, and targeted marketing, potentially leading to a significant boost in sales and customer loyalty.

The Data Dilemma

However, this automated future comes with a significant trade-off: data sovereignty. To function effectively, AI agents require deep, uninterrupted access to a consumer’s preferences, purchasing history, and behavioral data. By integrating these third-party AI systems into their platforms, retailers are effectively handing over their most valuable asset—proprietary customer data. This creates a fundamental tension, as the very data that fuels the AI’s effectiveness is also the core intellectual property that defines a retailer’s competitive advantage. Ceding control of this data flow could inadvertently empower the AI platform providers, potentially reducing retailers to mere fulfillment endpoints in a value chain they no longer control.

Strategic Implications for Retail

This dynamic forces retail executives into a critical strategic decision. The allure of increased sales through hyper-personalization is powerful, but it must be weighed against the long-term risk of disintermediation. A retailer could find its customer relationship managed and owned by an external AI agent, weakening its brand and making it vulnerable to being swapped out for a competitor offering a better price or faster delivery. The central challenge becomes how to harness the power of AI-driven automation without surrendering the customer relationship and the rich data that underpins it.

The future of retail will likely be shaped by how companies navigate this double-edged sword. The winners will be those who develop a sophisticated data strategy that leverages AI for customer benefit while retaining control over their core data assets and preserving the integrity of their direct-to-consumer relationships.

Zoom CEO Eric Yuan says AI will shorten our workweek

Techcrunch • Sarah Perez • October 27, 2025

AI•Work•Jobs•FutureOfWork•Productivity

Zoom CEO Eric Yuan has made a bold prediction about the future of work, forecasting that artificial intelligence will enable a significant reduction in the standard workweek to just three or four days within the next few years. This vision positions AI not as a job replacement threat, but as a powerful productivity tool that will augment human capabilities and automate routine tasks, thereby freeing up employee time for more strategic and creative work.

The AI-Augmented Workforce

Yuan’s perspective suggests a fundamental shift in how companies will leverage technology. Instead of focusing solely on cost-cutting through automation, the emphasis will be on using AI to enhance human productivity to such a degree that the same amount of work can be accomplished in fewer hours. This involves AI handling administrative burdens, summarizing meetings, managing emails, and generating reports, which currently consume a substantial portion of the knowledge worker’s day. The core idea is that by offloading these tasks to AI, employees can concentrate on higher-value activities that require human judgment, empathy, and innovation.
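The arithmetic behind a shorter week is simple if output is assumed to scale linearly with hours worked and hours per day stay fixed (both simplifying assumptions, not claims from Yuan). Doing five days of work in four requires 25% more output per hour, or equivalently AI absorbing 20% of the week’s tasks outright; a three-day week requires about 67% more output per hour, or offloading 40%. The helper names below are hypothetical.

```python
STANDARD_DAYS = 5

def required_productivity_gain(target_days: float, standard_days: float = STANDARD_DAYS) -> float:
    """Fractional increase in output per hour needed to finish a standard
    week's work in target_days, with hours per day held fixed."""
    return standard_days / target_days - 1

def share_of_work_to_offload(target_days: float, standard_days: float = STANDARD_DAYS) -> float:
    """Fraction of the standard week's tasks AI would have to absorb entirely,
    assuming people do the remaining tasks at their current speed."""
    return 1 - target_days / standard_days

for days in (4, 3):
    print(f"{days}-day week: +{required_productivity_gain(days):.0%} output per hour, "
          f"or offload {share_of_work_to_offload(days):.0%} of tasks")
```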

Implications for Business and Society

The move toward a shorter workweek would have profound implications across multiple domains. For businesses, it could lead to a more satisfied, focused, and productive workforce, potentially reducing burnout and improving retention. For employees, it promises a better work-life balance and more personal time. This transition would also necessitate a re-evaluation of corporate metrics, moving away from measuring hours worked toward measuring outcomes and results achieved. Such a change aligns with growing calls for improved mental health and well-being in the workplace, suggesting that technology could be a catalyst for a more humane approach to professional life.

Zoom’s Strategic Direction

This vision is not merely theoretical for Yuan; it is central to Zoom’s product strategy. The company has been aggressively integrating AI features into its platform, such as AI-powered meeting summaries, email composition tools, and intelligent scheduling assistants. These developments are concrete steps toward realizing the productivity gains that would make a shorter workweek economically viable for organizations. By building the tools that automate routine collaboration overhead, Zoom is positioning itself at the forefront of this workplace transformation.

The prediction underscores a broader, optimistic narrative about AI’s role in society, where technology serves to enhance human potential and quality of life rather than diminish it. Challenges around implementation, equity, and economic structuring remain, but when the CEO of a major workplace-software company makes this forecast, it suggests the shift may be closer than it once seemed.

It’s Not Just Rich Countries. Tech’s Trillion-Dollar Bet on AI Is Everywhere.

Wsj • October 26, 2025

AI•Funding•Globalization•DevelopingEconomies•Infrastructure

The global artificial intelligence revolution is no longer confined to wealthy nations and Silicon Valley tech giants. A massive trillion-dollar investment wave is spreading AI development and adoption across developing countries worldwide, representing a fundamental shift in how artificial intelligence is being created and deployed.

Global AI Investment Patterns

Major technology companies are making unprecedented investments in AI infrastructure across emerging markets. Microsoft, Google, and Amazon are collectively spending billions to establish data centers and cloud computing facilities in countries throughout Southeast Asia, Latin America, and Africa. These investments are not merely about expanding market reach but represent strategic positioning for the next phase of global AI development.

The scale of commitment is staggering, with projections indicating over $1 trillion in AI-related infrastructure spending across developing economies by 2030. This includes everything from semiconductor manufacturing facilities in Vietnam and Malaysia to advanced computing clusters in Brazil and Saudi Arabia. The geographical diversification marks a significant departure from previous technology cycles that primarily benefited established innovation hubs.

Local Innovation Ecosystems

Beyond infrastructure, there’s growing investment in homegrown AI talent and startups across the developing world. Countries like Nigeria, Indonesia, and Colombia are seeing rapid growth in their local AI sectors, with venture capital flowing into startups focused on solving region-specific challenges. These include agricultural optimization for smallholder farmers, healthcare diagnostics for rural populations, and financial services tailored to local needs.

The development of culturally relevant AI models trained on local languages and contexts represents a crucial aspect of this trend. Rather than simply adopting Western-developed AI systems, researchers and entrepreneurs in these regions are building solutions that understand local dialects, cultural nuances, and specific economic conditions. This approach ensures that AI benefits are more evenly distributed and relevant to diverse populations.

Economic and Social Implications

The widespread adoption of AI in developing economies carries profound implications for global economic patterns. Nations that successfully integrate AI into their industrial and service sectors could leapfrog traditional development stages, similar to how mobile banking transformed financial inclusion in parts of Africa. However, this transition also presents challenges regarding job displacement and the need for massive workforce retraining.

Government policies are evolving rapidly to accommodate this technological shift. Several countries have established national AI strategies that include education reforms, research funding, and regulatory frameworks designed to foster innovation while addressing potential societal impacts. The competition to become regional AI hubs is driving policy innovation and international partnerships.

Future Outlook and Challenges

The democratization of AI technology faces several significant hurdles. Access to advanced computing resources remains constrained by export controls and high costs, while talent migration continues to challenge local ecosystem development. Additionally, concerns about data sovereignty and the environmental impact of energy-intensive AI infrastructure require careful management.

Despite these challenges, the trend toward global AI adoption appears irreversible. The combination of decreasing technology costs, increasing digital literacy, and strategic government investments suggests that AI’s transformative impact will be felt across the economic spectrum. This development could potentially reshape global economic hierarchies and create new centers of technological innovation outside traditional hubs.

The trillion-dollar bet on AI across developing nations represents more than just financial investment—it signifies a fundamental reordering of technological capability and economic potential. As these ecosystems mature, they’re likely to produce innovative applications and business models that could eventually influence global AI development trends, creating a more diverse and resilient technological landscape worldwide.

Silicon Valley called — the 1990s are back

Ft • October 26, 2025

AI•Funding•VentureCapital•MarketTrends•TechnologyCycles

The current artificial intelligence boom is drawing striking comparisons to the dot-com era of the 1990s, creating a sense of déjà vu in Silicon Valley. While the underlying technology is fundamentally different, the market dynamics, investment frenzy, and cultural phenomena share remarkable similarities with the period that preceded the 2000 crash. The rapid proliferation of AI startups, massive funding rounds, and sky-high valuations are creating an environment that veteran tech observers find both exhilarating and concerning.

Market Parallels and Investment Frenzy

Venture capital investment in AI has reached unprecedented levels, with billions flowing into companies developing large language models, AI infrastructure, and applications. This mirrors the late-1990s internet boom when investors rushed to fund any company with a “.com” in its name. The fear of missing out is driving valuations to levels that many analysts consider unsustainable, with some AI startups achieving unicorn status in record time despite having minimal revenue.

The investment landscape shows several key similarities:

• Explosive funding rounds for pre-revenue companies

• Intense competition among venture firms to back promising AI startups

• Sky-high valuations based on potential rather than current performance

• Rapid hiring and talent acquisition wars, particularly for AI researchers

Technological Differences and Sustainability

Despite the market parallels, significant differences exist between the two technological revolutions. The internet boom was primarily about creating new distribution channels and business models, while the AI revolution represents a fundamental advancement in computational capability and problem-solving. AI technologies are demonstrating tangible productivity improvements across numerous industries, from healthcare diagnostics to software development.

Current AI infrastructure requires substantially more capital than early internet companies needed, creating higher barriers to entry but also potentially more sustainable competitive advantages for established players. The computational resources, data requirements, and specialized talent needed to develop cutting-edge AI models mean that successful companies may be harder to displace than their dot-com predecessors.

Regulatory and Economic Context

The current regulatory environment presents both challenges and stabilizing factors that were absent during the dot-com era. Antitrust scrutiny, data privacy regulations, and AI-specific legislation in development could temper some of the wilder excesses while also creating compliance hurdles. The Federal Reserve and other central banks are more attuned to asset bubbles than they were in the 1990s, potentially allowing for earlier intervention if markets become overheated.

Global competition, particularly from China, adds another dimension that was less pronounced during the first internet boom. National security concerns and export controls on advanced semiconductors have created a more fragmented technological landscape, with different countries pursuing sovereign AI capabilities.

Cultural and Workforce Impacts

The cultural response to AI mirrors the internet era in its mixture of utopian optimism and dystopian anxiety. Visionaries predict AI will solve humanity’s greatest challenges, while critics warn of job displacement, misinformation, and loss of human agency. The workforce is experiencing similar disruption, with new highly-paid specializations emerging while other roles face obsolescence.

The current cycle differs in that many tech leaders lived through the dot-com bust and may apply those hard-won lessons to navigate the present boom. However, the psychological drivers of market cycles—greed, fear, and herd behavior—remain unchanged, suggesting that while the technology is different, human nature continues to follow familiar patterns.

Google DeepMind Developers: How Nano Banana Was Made

Youtube • a16z • October 28, 2025

AI•Tech•MachineLearning•GoogleDeepMind•ModelDevelopment

The development of Nano Banana represents a fascinating case study in AI model creation, showcasing how Google DeepMind developers approached building a compact yet powerful language model. This project emerged from the recognition that while massive models like GPT-4 demonstrate impressive capabilities, there’s significant value in creating smaller, more efficient models that can run on consumer hardware while maintaining substantial reasoning abilities.

Project Origins and Philosophy

The Nano Banana project began as an experiment to push the boundaries of what’s possible with smaller parameter counts. Developers focused on creating a model that could deliver meaningful performance while being accessible to users without specialized hardware. The name “Nano Banana” itself reflects this approach: “nano” indicates the small size, while “banana” represents something approachable and familiar rather than intimidating technical terminology.

The team emphasized that smaller models require more thoughtful architecture decisions and training approaches. As one developer noted, “With large models, you can sometimes brute-force performance through scale, but with nano-scale models, every architectural decision matters significantly.” This philosophy drove the team to optimize every component of the model architecture, from attention mechanisms to activation functions.

Technical Innovations and Training Approach

Several key technical innovations contributed to Nano Banana’s success. The developers implemented novel training techniques including:

Curriculum learning strategies that gradually increased complexity

Knowledge distillation from larger models

Advanced data filtering and preprocessing

Optimized tokenization approaches for smaller vocabularies
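Knowledge distillation is a general technique, and the summary does not say how the Nano Banana team implemented it. As a rough illustration only, the sketch below shows the common Hinton-style formulation: a student model is trained on a blend of the true label and the teacher’s temperature-softened output distribution. The function names, the temperature of 2.0 and the 50/50 weighting (`alpha`) are illustrative assumptions, not details from the talk.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature yields a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def cross_entropy(probs, label):
    """Negative log-likelihood of the true class."""
    return -math.log(probs[label])

def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """Blend of hard-label cross-entropy and soft-target KL divergence.

    temperature and alpha are illustrative defaults (assumptions), not tuned values.
    """
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Scale by T^2 so the soft-target term keeps a comparable magnitude as T changes.
    soft_term = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_term = cross_entropy(softmax(student_logits), label)
    return alpha * hard_term + (1 - alpha) * soft_term

# Hypothetical logits for a three-class example.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.2, 0.3]
print(round(distillation_loss(student, teacher, label=0), 4))
```

The soft targets carry information the hard label does not (for instance, which wrong answers the teacher considers nearly right), which is why distillation is a natural fit when a small model has to learn efficiently from a larger one.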

The training data selection proved particularly crucial. Rather than using massive, unfiltered datasets, the team focused on high-quality, diverse content that would maximize learning efficiency. They emphasized that “data quality beats quantity when working with constrained parameter budgets,” a principle that guided their careful curation process.

Performance and Applications

Despite its compact size, Nano Banana demonstrates surprising capabilities across various tasks including reasoning, coding assistance, and creative writing. The developers shared that the model performs particularly well on logical reasoning tasks and can maintain coherent conversations over extended contexts. Performance benchmarks show it competing favorably with models several times its size in specific domains.

The team highlighted several practical applications where Nano Banana excels:

On-device AI assistance without cloud dependency

Educational tools for students and developers

Prototyping and experimentation platforms

Cost-effective deployment for small businesses

Development Challenges and Solutions

Creating an effective small model presented unique challenges. The developers discussed how they addressed the limited parameter budget through techniques like parameter sharing, efficient attention mechanisms, and specialized activation functions. They also implemented careful regularization to prevent overfitting, which becomes more problematic with smaller models.

One developer explained, “The biggest challenge was balancing capability with size constraints. We had to make strategic trade-offs about what capabilities to prioritize and which to sacrifice.” This involved extensive experimentation with different architectural variations and training methodologies to find the optimal balance.

Future Implications and Industry Impact

The success of Nano Banana suggests a promising direction for AI development: creating specialized, efficient models rather than continually scaling up parameter counts. This approach could democratize AI access by making powerful models available to users without expensive hardware or cloud computing budgets.

The developers believe that nano-scale models will play an increasingly important role in the AI ecosystem, particularly for edge computing, privacy-sensitive applications, and cost-constrained deployments. As one team member summarized, “Not every application needs a giant model, and having effective smaller options expands what’s possible with AI technology.”

OpenAI Restructures as For-Profit Company

Nytimes • Cade Metz • October 28, 2025

AI•Funding•CorporateGovernance•Restructuring

OpenAI has announced a fundamental restructuring of its corporate organization, transitioning from a nonprofit-controlled entity to a for-profit company. This landmark shift resolves a long-standing and complex governance structure that has been a source of internal tension and external scrutiny. The most significant financial detail of the move is that the original nonprofit that controlled OpenAI will receive a monumental $130 billion stake in the new for-profit entity.

Restructuring Details and Governance Shift

The core of the change involves dismantling the unique “capped-profit” model that OpenAI pioneered. Previously, the company was governed by a nonprofit board whose mission was to ensure that artificial general intelligence (AGI) would be developed and deployed safely for the benefit of humanity. This structure was initially created to align the company’s operations with its founding charter’s safety-centric principles, even as it sought massive capital to fund its ambitious research. The new structure simplifies this arrangement, placing the company on a more conventional corporate footing, albeit with an unprecedented financial settlement to the original controlling nonprofit.

Implications of the $130 Billion Stake

The $130 billion stake granted to the nonprofit represents one of the largest transfers of equity value in corporate history. The arrangement appears designed to give the original mission-oriented entity permanent, substantial financial resources. That endowment could finance safety research, policy advocacy, or other beneficial AI initiatives, acting as a counterweight to the commercial incentives of the for-profit arm. This suggests a compromise aimed at preserving the company’s founding ideals while unlocking its full commercial potential.

Broader Industry Impact and Future Trajectory

This restructuring signals a new phase for the AI industry, where the lines between mission-driven research and commercial imperatives are being redrawn. For OpenAI, the move likely simplifies future fundraising, provides clearer equity structures for employee compensation, and could pave the way for eventual public market offerings. It also resolves investor uncertainties that stemmed from the previous governance model, where the nonprofit board held ultimate control, including the power to dismiss executives.

The decision underscores the immense capital requirements for competing at the forefront of AI development and the pressure on even the most idealistic organizations to adopt traditional corporate models. As OpenAI continues to develop and deploy increasingly powerful AI systems, this new structure will be closely watched as a blueprint for how other AI firms might balance profitability with their stated missions to benefit humanity.

Big tech’s big gamble

Ft • October 27, 2025

AI•Funding•Tech•Investment•Market Dynamics

The technology industry is currently engaged in an unprecedented financial gamble as major corporations pour billions into artificial intelligence development with uncertain returns. This massive investment surge represents one of the largest capital allocations in modern technological history, raising fundamental questions about market sustainability and the willingness of investors to finance what amounts to a high-stakes technological arms race.

Investment Scale and Market Dynamics

The scale of AI investment has reached staggering proportions, with technology giants allocating resources that dwarf those of previous technological cycles. Companies are redirecting substantial portions of their capital expenditure toward AI infrastructure, including specialized computing hardware, data center expansion, and research talent acquisition. This reallocation is occurring despite unclear pathways to profitability and significant questions about when, or if, these investments will generate meaningful returns for shareholders.

The market’s tolerance for this level of spending appears to be testing historical boundaries, with investors showing remarkable patience given the transformative potential of AI technologies. However, this patience is not unlimited, and analysts are beginning to question how long companies can sustain these investment levels without demonstrating clearer revenue generation or cost savings from their AI initiatives.

Strategic Implications and Competitive Pressures

Several key factors are driving this investment frenzy:

• First-mover advantage: Companies fear being left behind in what many perceive as a foundational technological shift comparable to the internet’s emergence

• Infrastructure requirements: The computational demands of advanced AI systems necessitate massive upfront investment in specialized hardware and cloud capacity

• Talent acquisition: The war for AI expertise has driven compensation packages to extraordinary levels, adding significant operational costs

• Regulatory positioning: Companies are investing heavily to shape future regulatory frameworks and establish market dominance before potential restrictions emerge

The competitive dynamics have created a classic prisoner’s dilemma where companies feel compelled to match their rivals’ spending, regardless of immediate business justification. This has led to what some analysts describe as an “AI investment bubble” characterized by spending that may not align with near-term market realities.
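The prisoner’s dilemma logic can be made concrete with a two-firm payoff table. The sketch below uses made-up payoffs (arbitrary units of long-run value, not figures from the FT article) in which each firm chooses to “restrain” or “spend”. With these assumed numbers, spending is the best response whatever the rival does, so mutual spending is the only equilibrium, even though both firms would be better off if both held back.

```python
from itertools import product

# Hypothetical payoffs (firm_a, firm_b) for two rival firms; illustrative only.
PAYOFFS = {
    ("restrain", "restrain"): (3, 3),  # both protect margins
    ("restrain", "spend"): (0, 5),     # the spender captures the market
    ("spend", "restrain"): (5, 0),
    ("spend", "spend"): (1, 1),        # both burn capital, neither gains an edge
}
STRATEGIES = ("restrain", "spend")

def best_response(rival_choice):
    """Firm A's payoff-maximizing choice given Firm B's choice."""
    return max(STRATEGIES, key=lambda mine: PAYOFFS[(mine, rival_choice)][0])

def nash_equilibria():
    """Strategy pairs where neither firm gains by switching on its own."""
    equilibria = []
    for a, b in product(STRATEGIES, repeat=2):
        a_ok = PAYOFFS[(a, b)][0] >= max(PAYOFFS[(x, b)][0] for x in STRATEGIES)
        b_ok = PAYOFFS[(a, b)][1] >= max(PAYOFFS[(a, y)][1] for y in STRATEGIES)
        if a_ok and b_ok:
            equilibria.append((a, b))
    return equilibria

for rival in STRATEGIES:
    print(f"If the rival chooses {rival!r}, the best response is {best_response(rival)!r}")
print("Equilibria:", nash_equilibria())
```

The exact numbers do not matter; any payoffs with the same ordering (winning alone > mutual restraint > mutual spending > losing alone) produce the same trap.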

Market Reactions and Financial Considerations

Financial markets have shown mixed reactions to this spending surge. While some investors remain bullish about AI’s long-term potential, others express concern about the erosion of profit margins and the diversion of resources from core business operations. The tension between growth expectations and fiscal discipline has created challenging dynamics for corporate leadership teams.

The fundamental question facing the industry revolves around timing and scalability—how quickly can these investments be translated into commercially viable products and services, and at what scale must they operate to justify their costs? Current spending patterns suggest companies are betting on rapid adoption across multiple sectors, though evidence of widespread, profitable implementation remains limited.

Future Outlook and Industry Impact

The outcome of this massive gamble will likely reshape the technology landscape for decades. Success could cement the dominance of current market leaders and create new technological paradigms, while failure might lead to significant write-downs and strategic reassessments. The intermediate period will test both corporate balance sheets and investor patience as the industry navigates this transitional phase.

The broader economic implications extend beyond technology companies to affect capital markets, energy infrastructure due to AI’s substantial power requirements, and global competition patterns. How this investment cycle resolves will influence not only corporate fortunes but also national technological competitiveness and the pace of AI integration across economic sectors.

Media

Podcasters Look Beyond Audio and YouTube to TV

Bloomberg • October 30, 2025

Media•Broadcasting•Podcasting•Television•ContentLicensing

The podcasting industry is undergoing a significant transformation as creators and production companies increasingly look beyond their traditional audio roots and even the established video platform of YouTube, setting their sights on the television market. This strategic pivot is driven by the dual forces of a declining linear TV landscape and the rising star power of podcast hosts who have cultivated massive, dedicated audiences. As traditional television networks grapple with changing viewer habits, they are actively seeking fresh, pre-vetted content with built-in fanbases, making popular podcasts an attractive and lower-risk acquisition target.

The Driving Forces Behind the Shift

Several key factors are fueling this migration to television. The most prominent is the saturation and intense competition within the audio-only and digital video spaces. While platforms like Spotify and YouTube have been lucrative for top creators, breaking through the noise has become increasingly difficult. Television, particularly streaming services, offers a new frontier for growth and a chance to reach demographics that may not be active podcast listeners.

Furthermore, the economic model is compelling. Licensing a successful podcast to a television network or streaming service provides a substantial, upfront financial windfall for creators, often far exceeding what they can earn from advertising and subscriptions alone. It also transforms a single intellectual property into a multi-platform franchise, significantly increasing its overall value. For networks, adapting a podcast is a data-informed decision; they are essentially greenlighting a show with a proven concept and a ready-made marketing engine in the form of the podcast’s existing audience.

Key Players and Landmark Deals

This trend is not merely theoretical; it is already manifesting in high-profile deals across the industry. Major media companies and streaming platforms are leading the charge. For instance, Netflix has successfully adapted podcasts like “Archive 81” into series, while Amazon has transformed “Homecoming” from a fictional podcast into a critically acclaimed show.

Beyond fictional narratives, non-fiction and interview-based podcasts are also making the leap. A prime example is the deal for the “Huberman Lab” podcast, hosted by Stanford neurobiology professor Dr. Andrew Huberman. The show, which delves into science and health, was licensed for a television adaptation, signaling that educational and expert-driven content has a place in this new ecosystem. Similarly, talk and interview formats are being developed for television, capitalizing on the host’s celebrity and interviewing prowess to create a visual talk show.

Implications for the Media Landscape