Contents

Editorial: Who’s For Free Speech? Jimmy Kimmel, ABC and the Future of Media

Essays

AI

Venture

Your VCs May Be Giving Up on You Right About Now. Don’t Let That Stop You.

US listings market bursts back to life with busiest week in 4 years

Robinhood plans to launch a startups fund open to all retail investors

UK smartphone maker Nothing raises $200mn to take on Apple and Samsung

OpenAI surges to first place as Forge's Private Mag 7 hit $1.2 trillion

IPOs Fire Up Even More in September: This Week It’s Netskope and StubHub

Apollo in Talks for Private Markets Partnership With Schroders

Robinhood Expands Private Equity Push With New Venture Capital Fund

Crypto

GeoPolitics

Regulation

Media

Interview of the Week

Startup of the Week

Post of the Week

Editorial: Who’s For Free Speech? Jimmy Kimmel, ABC and the Future of Media

This has been the week of free speech. The brutal assassination of Charlie Kirk framed the recent past. Stopping him from speaking by murdering him was the killer’s method. “Hate speech” was what the killer accused Kirk of.

The divided opinions in social media ranged from support for the assassin through to calls to violence by Kirk’s supporters, notably articulated by an over-reacting Elon Musk at one point during a live speech to people on the Tommy Robinson march in London.

In almost all cases one side wants to characterize the other as conveying “hate speech” and seeks to close down that speech using a variety of methods.

Then Jimmy Kimmel weighed in. During a somewhat confusing monologue he seemed to accuse MAGA supporters of killing Charlie Kirk (“one of their own”). Very quickly the regulator of media went online to tell Kimmel’s employer, ABC, to deal with this the “easy way” or the “hard way”. Within hours Kimmel’s show was taken off the air “indefinitely”. Kimmel was essentially cancelled due to the regulator’s influence on ABC.

Kimmel has not yet taken to YouTube or Substack to create his own channel, as others have done, but one imagines that cannot be too far from his thoughts.

All of this raises the question of free speech. How far should society go in protecting speech? For me, all speech should be protected. The need for protection is greatest when I disagree with the speech. Hate speech itself should therefore be especially protected. Democracy cannot exist if speech is regulated. It is a zero-sum point.

If it makes you angry, as much speech does, then articulate opposition to it. More speech is the antidote to speech you dislike.

The rise of personal media on YouTube, Substack and social media enables all speech to find a home and an audience, and that is just great.

Meanwhile a new issue is emerging. Should AI have freedom of speech?

This week the issue of how much scope AI has to learn is front and center.

In the ‘Interview of the Week’ with Andrew Keen, Marshall Poe of The New Books Network states:

“AI companies appear to scrape podcast content without permission or payment, then repackage it as their own product,” and later, “The cost of that theft has gone to zero.”

This framing of reality is deeply flawed. As an observer of the ecosystem with decades of experience in tech I can say for sure that AI does not steal and repackage content. Even a shallow understanding of how AI works is enough to render the claim ridiculous. AI reads, listens to and views content just as a human does. The courts have so far found this to be the case. AI is not copying, repackaging or stealing. It is learning.

On those rare occasions when AI learns on stolen content, as with Anthropic and the pirated books, it has been severely punished. But that behavior is not typical of AI.

We all need to turn down the dial on demonizing those we disagree with and also on AI. The success of America - as Noah Smith argues in Noahpinion this week - is founded on an absolute definition of the first amendment. Nothing is outside the scope of that - not hate speech, not misinformation, not disinformation, not opinions of any kind. Cancelling speech or a speaker is advocated on all sides of the political spectrum. It is an intolerant and insecure reaction to disagreement. Assassination is thankfully rare, but not zero. Firing or suspension is less rare. Both are abominations and an affront to civilised society.

Of course, if speech promotes illegal action that then occurs, it enters a space of law, and both the illegal actions and the original incitement can be punished under existing laws. The speech is no longer protected. But the incitement has to be explicit, direct and proven. Not implied.

So …. that is my thought for the week.

Essays

Without free speech, America is nothing

Noahpinion • September 19, 2025

Essay•Regulation•FreeSpeech•First Amendment•FCC

“If the freedom of speech is taken away, then dumb and silent we may be led, like sheep to the slaughter.” — George Washington

“Our liberty depends on the freedom of the press, and that cannot be limited without being lost.” — Thomas Jefferson

“Why should freedom of speech and freedom of press be allowed? Why should a government…allow itself to be criticized? I would not allow opposition by lethal weapons. Ideas are much more fatal things than guns. Why should any man be allowed to…disseminate pernicious opinions calculated to embarrass the government?” — Vladimir Lenin

Donald Trump returned to power promising to restore freedom of speech in America. In his inaugural speech on January 20, he declared:

After years and years of illegal and unconstitutional federal efforts to restrict free expression, I also will sign an executive order to immediately stop all government censorship and bring back free speech to America.

And in February, JD Vance said:

Our own government encouraged private companies to silence people...Under Donald Trump's leadership, we may disagree with your views, but we will fight to defend your right to offer it in the public square.

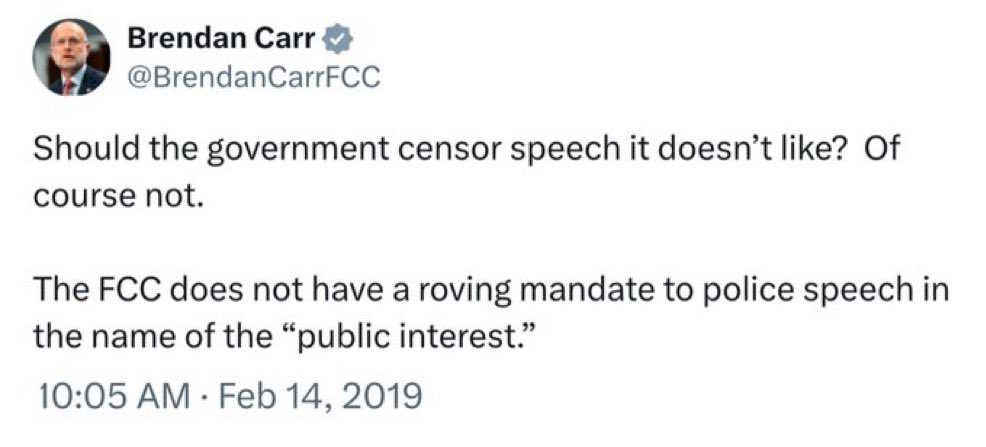

And in a now-deleted tweet from 2019, Brendan Carr, whom Trump would later name to lead his FCC, offered similar sentiments:

Why were the MAGA folks talking so passionately about freedom of speech? Because for years, conservatives felt as if that freedom was under attack by the progressive movement, and later by the Biden administration.

Since the mid-2010s, progressives on social media had tried to ruin the reputation and careers of people who said things they considered racist, transphobic, or otherwise problematic. Social media platforms were successfully pressured to “deplatform” various figures on the right, including Donald Trump himself after the January 6th attacks. Some rightists were even cut off from bank accounts and other essential services.

Progressives argued that none of this was a violation of freedom of speech. They claimed that freedom of speech is entirely defined by the First Amendment, and involves only government restrictions on speech. If private companies took away your bank account or kicked you off of the country’s main social media platforms, that was merely social ostracism, not a violation of any freedom. Conservatives retorted that if private companies have the ability to make modern life unlivable for people whose speech they don’t like, that takes away their freedom of speech.

I agree with the conservatives on this one; government is not the only organization that has the power to restrict freedom. I thought the centrist liberals who signed the Harper’s Letter in support of free speech — and were pilloried by progressives for doing so — were pretty heroic.

Anyway, then came the Biden administration’s war on disinformation. The administration tried to pressure social media companies into taking down certain posts, and was eventually halted from doing this by the courts. Biden’s Department of Homeland Security created a “Disinformation Governance Board” that conservatives feared would become a propaganda ministry. (I side with the Biden administration on this latter example; having the government contradict things people say is not a violation of free speech.)

After years of this, conservatives and rightists started to think of themselves as the guardians of free speech, fighting back against a smothering progressive orthodoxy. For many, this belief was sincere. But for the Trump administration, it proved to be nothing more than a cheap slogan.

In the days since the assassination of Charlie Kirk, the administration has encouraged companies to fire people who celebrated the murder. JD Vance said: “When you see someone celebrating Charlie's murder, call them out — and, hell, call their employer.” And in fact, many employers have been firing people for saying the wrong thing about Kirk. Trump and other GOP politicians have echoed the call for people who insult Kirk to be fired.

This is a right-wing version of “cancel culture”, and it’s perfectly legal, just like it was when progressives did it. I do believe this is a restriction on freedom of speech, but it’s not a violation of the First Amendment.

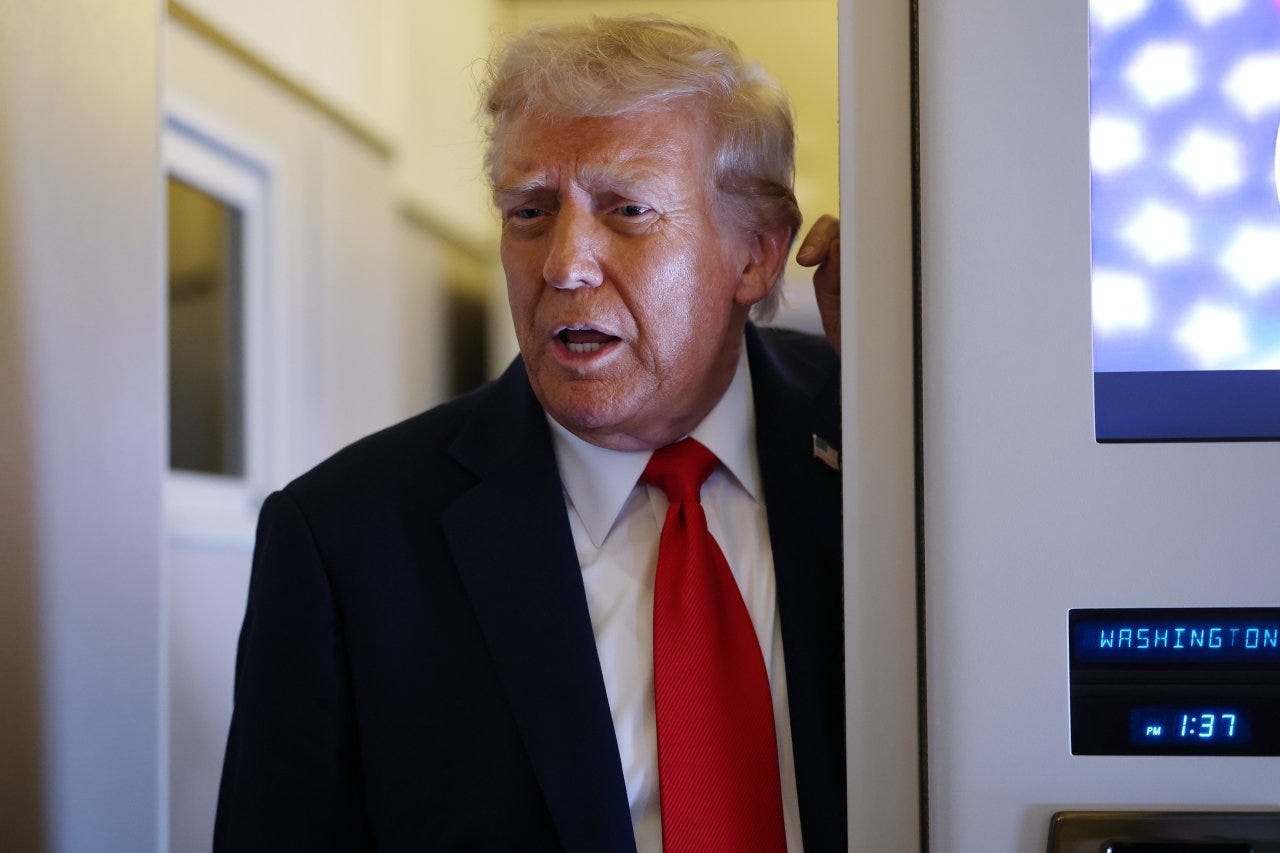

But that was only the beginning. Over the last few days, the Trump administration has begun to use government power to persecute people for saying things it doesn’t like. Jimmy Kimmel, a late-night comedy show host, accused the MAGA movement of trying to make political hay out of Kirk’s murder (which appears to be true), and claimed that the killer was actually a rightist (which appears to be false). In response, Brendan Carr, Trump’s FCC Chair, threatened ABC and its local affiliates:

FCC chairman Brendan Carr has threatened to take action against ABC after Jimmy Kimmel said in a monologue that “the MAGA gang” was attempting to portray Charlie Kirk‘s assassin as “anything other than one of them.”

Appearing on Benny Johnson’s podcast on Wednesday, Carr suggested that the FCC has “remedies we can look at.”

“We can do this the easy way or the hard way,” Carr said. “These companies can find ways to change conduct and take action, frankly, on Kimmel or there’s going to be additional work for the FCC ahead.”

ABC and the local stations, worried about retaliation from the administration, immediately cancelled Kimmel’s show.

The government forcing a private company to cancel a comedy program it doesn’t like already constitutes the most significant attack on press freedom in a generation. But the Trump administration has signaled that this is only the beginning of a much broader, deeper government campaign to stamp out opinions it doesn’t like.

Attorney General Pam Bondi declared that freedom of speech doesn’t extend to “hate speech” — something radical progressives have long argued, and conservatives have long resisted.

Conservatives got very mad at Bondi, and she backtracked, saying that “hate speech” won’t be prosecuted after all. But then Trump came out and declared his desire to revoke the FCC licenses of TV networks that oppose his administration, and Carr appeared to endorse some version of the idea ….

Total Concentration

Mailchi • September 15, 2025

Essay•Venture

As “virtue is proved by its contrary,” so too are the benefits of diversification proven by the costs of hyper-concentration.

There is a belief among many investors, including prominent stars like Warren Buffett, that diversification “makes very little sense for anyone who knows what they are doing.” Some even go as far as to advocate for hyper-concentration, based on Buffett’s view that “if you find three wonderful businesses in your life, you’ll get very rich. And if you understand them, bad things aren’t going to happen to those three.”

On the other hand, Nobel Prizes have been awarded to financial economists whose research insights were based on diversified portfolios of hundreds, sometimes thousands, of stocks. In addition to contributing to our understanding of how financial markets function, these economists have made their mark on Wall Street. Multitrillion-dollar investment firms like Vanguard and BlackRock have been built upon their research, compounding wealth for millions of investors along the way.

So it seems we have competing truth claims. Either diversification is “di-worsification” that should only be practiced by the ignorant, or it is a “free lunch” that improves investment outcomes without increasing risk, as claimed by the academics. It cannot be both.

We set out to evaluate these competing truth claims in the context of equity investing by comparing the best arguments for concentration against the evidence from decades of empirical data. The most forceful arguments for hyper-concentration, as articulated by the investment process consultant Alpha Theory in their Concentration Manifesto, fall along three lines:

Conviction: Any manager with a decent system for security selection should believe more attractive stocks have higher expected returns, so holdings should be concentrated in a handful of the most attractive names.

Mental capital: Every portfolio manager has limited time; fewer holdings allow deeper research and understanding of each investment.

Diversification is for allocators: Managers should maximize expected returns and leave risk management to allocators who can diversify across multiple concentrated managers.

Our simulations led us to a few simple conclusions. First, extreme concentration increases risk and decreases expected return. Second, the core task of investing is making predictions about the future; neither doing more work nor having higher conviction leads to better predictive accuracy. Third, allocators to concentrated portfolios face a greater risk from survivorship bias in manager selection.

Extreme Concentration Increases Risk and Decreases Expected Return

The most important thing to understand about highly concentrated portfolios is the relationship between concentration and volatility. And volatility has a direct negative impact on returns through a phenomenon called volatility drag. Consider this: a portfolio down 10% in year one and up 10% in year two has lost 1% of value; down 20% then up 20% loses 4%; down 30% then up 30% loses 9%. Linear changes in volatility drive squared losses in total return. These extreme swings are more likely in hyper-concentrated portfolios. Diversification is an easy path to reducing idiosyncratic risk.
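The arithmetic behind volatility drag can be checked directly. The sketch below is illustrative only: the function names `two_year_value` and `volatility_drag` are hypothetical, and it assumes a symmetric loss followed by an equal percentage gain, as in the examples above. Because (1 - x)(1 + x) = 1 - x², the loss grows with the square of the swing.

```python
def two_year_value(swing: float) -> float:
    """Value of $1 after falling by `swing` in year one and rising by `swing` in year two."""
    return (1 - swing) * (1 + swing)


def volatility_drag(swing: float) -> float:
    """Fraction of starting value lost over the two years; algebraically equal to swing squared."""
    return 1 - two_year_value(swing)


if __name__ == "__main__":
    # Reproduces the three cases in the text: 10%, 20% and 30% swings.
    for swing in (0.10, 0.20, 0.30):
        print(f"down {swing:.0%}, up {swing:.0%}: loses {volatility_drag(swing):.0%}")
```

Doubling the swing from 10% to 20% quadruples the loss (1% to 4%), which is the sense in which linear changes in volatility produce squared losses.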

Oracle Pops, From Databases to AI, Oracle and OpenAI

Stratechery • Ben Thompson • September 15, 2025

Essay•AI•Oracle•OpenAI•Nvidia

Oracle Pops

Oracle shares soared after the company disclosed several multibillion‑dollar cloud contracts and reported roughly $455 billion in remaining performance obligations for the quarter ended August 31, up more than fourfold year over year, with management signaling additional large customers could push the figure above $500 billion. The stock recorded its biggest single‑day percentage gain since the early 1990s. Beyond the headline numbers, what matters is whether those obligations ultimately become revenue.

Oracle has a “colorful” history around recognizing future obligations, but this time investors are rewarding the scale of unrealized commitments, not prematurely recognized revenue. The essential question is execution: will customers consume the contracted capacity on schedule and at expected margins?

From Databases to AI

Oracle’s technical positioning predates the LLM boom. Exadata, launched in 2008 and later integrated end‑to‑end after the Sun acquisition, was engineered for low‑latency, deterministic performance, with RDMA, high‑performance ethernet, and a software‑defined SmartNIC offloading networking. When Oracle brought that architecture to OCI, existing customers could lift‑and‑shift and preserve on‑prem performance characteristics. Those same attributes—massive scale, tight networking, and predictable latency—map directly to training large models.

Crucially, Oracle is model‑ and chip‑neutral on the product side yet all‑in on Nvidia for hardware. That neutrality builds trust with AI customers, while Nvidia favoritism helps secure GPU supply, translating into sellable capacity.

Oracle and OpenAI

OpenAI has emerged as the signature customer, reportedly committing about $300 billion of compute over roughly five years starting in 2027, demanding power on the scale of multiple gigawatts. The concentration is risky—Oracle’s cloud capex already presses cash flow—but the potential upside is enormous, and founder‑CTO Larry Ellison’s appetite for bold bets underpins the strategy. Investors may also see Oracle as the most straightforward public proxy for OpenAI’s growth.

Meta Ray-Ban Display, Why Less is More, Price and the Neural Band

Stratechery • Ben Thompson • September 18, 2025

Essay•AI•Meta•RayBanDisplay•NeuralBand

Meta has unveiled its first Ray-Ban glasses with a built-in screen. The $799 Ray-Ban Display places a display in the right lens to show texts, video calls, turn-by-turn directions, and visual answers from Meta AI. It can double as a camera viewfinder and surface music controls. A new control system centers on a neural wristband: pinch to select, slide a thumb to scroll, double-tap to summon Meta’s voice assistant, or twist your hand to adjust volume.

Live captions appear in real time, including translations; video calls let wearers see the caller while sharing their own point of view. Users can reply by sending audio or dictating a response, with in‑air handwriting promised later. Sales start September 30 with the wristband included, in two sizes and two colors, with early support for Messenger, WhatsApp, and a Spotify-powered music app. The monocular display offers a 20‑degree field of view at 600×600 resolution and 30–5,000 nits of brightness. The glasses record 1080p video and run about six hours per charge; the case adds roughly 30 hours.

I haven’t tried them hands‑on. These are not Orion: Orion is a standalone device with displays in both lenses and a puck running a full OS; Ray‑Ban Displays pair with your smartphone and function more like a heads‑up display than full AR.

Is OpenAI the 'Amazon of AI'? Or the AOL?

Regenerator1 • Henry Blodget • September 18, 2025

Essay•AI•OpenAI•Nvidia•Amazon

OpenAI, Nvidia, and other early AI leaders look like sure-fire long-term AI winners. History suggests they might not be — even if the AI industry itself goes on to be even bigger and more lucrative than anyone expects.

If AI industry development plays out like that of the Internet and other major technologies, two things will happen:

Almost all of today’s AI companies will fail

Almost all of today’s investments in companies and infrastructure will be lost

That doesn’t mean that it’s dumb for startups, investors, and corporations to invest in AI right now. AI is a colossal opportunity. And in the early years of profoundly disruptive tech booms, it’s risky to miss out.

Also, the only way to learn is by trying (experimenting and iterating). The people and companies that learn will be the ones who will win, even if most early experiments fail.

But when I say “almost all of today’s AI companies will likely fail,” I mean it.

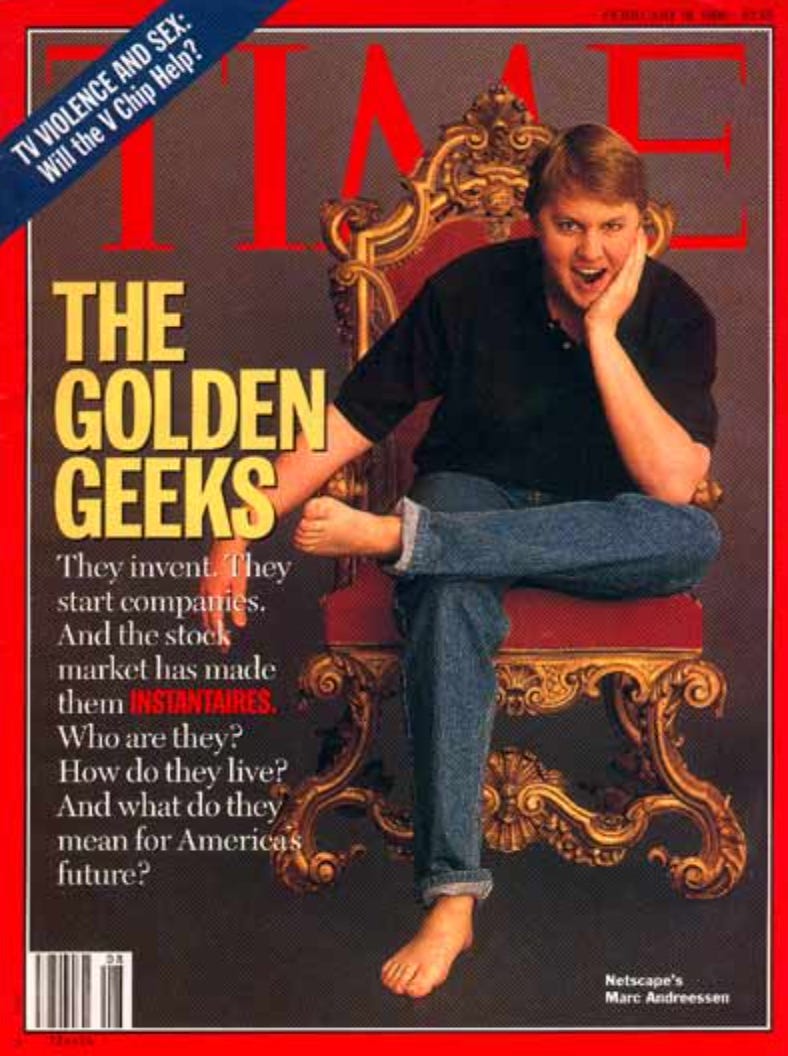

Unless the early AI leaders are vastly more successful over the long haul than the Internet companies that went public in 1994-2001, almost none will lead the industry or produce vast shareholder returns long-term (unless bought after the crash).

How the early Internet leaders fared

Early investors and entrepreneurs made fortunes on Internet investments from 1994 to 2000.

If they held on past 2000, however, most of them lost most of their gains.

Out of hundreds of Internet companies that went public from 1994-2001, only a handful ever regained their bubble highs.

The Internet investors and companies that made lasting fortunes were the ones that got in and cashed out early (before the crash) or bought in after the crash, when Internet assets were available for pennies on the dollar.

Only one Internet company I can think of — ONE — has delivered a compelling long-term return from its bubble peak — as well as, of course, a gargantuan one from its post-crash bottom. (Two if you count Microsoft, but Microsoft was already public and wasn’t a pure-play “Internet company.”)

That company, not surprisingly, is Amazon.

As you might recall, Amazon’s stock went bananas after its 1997 IPO. It soared from $0.09 (split-adjusted) to a 1999 high of $5.69, up more than 60X. It then collapsed to $0.30 in 2002, down about 95%.

If you owned the stock from the peak to the trough — as I did — that sucked.

Happily, over the following decades, Amazon made all those losses back, and then some. Amazon is now up 42X from its bubble peak!
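As a rough check on those multiples, here is a minimal sketch using only the split-adjusted prices quoted above. The helper names are hypothetical, and the "implied" current price is simply the bubble peak multiplied by 42, not a quoted market price.

```python
IPO_PRICE = 0.09      # split-adjusted price after the 1997 IPO, as quoted in the text
PEAK_PRICE = 5.69     # 1999 bubble high, as quoted in the text
TROUGH_PRICE = 0.30   # 2002 low, as quoted in the text
MULTIPLE_FROM_PEAK = 42  # "up 42X from its bubble peak", as quoted in the text


def multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


def drawdown(peak: float, trough: float) -> float:
    """Fractional decline from peak to trough."""
    return 1 - trough / peak


if __name__ == "__main__":
    implied_price = PEAK_PRICE * MULTIPLE_FROM_PEAK  # derived here, not from the source
    print(f"IPO to peak: {multiple(IPO_PRICE, PEAK_PRICE):.1f}X")
    print(f"Peak to trough: down {drawdown(PEAK_PRICE, TROUGH_PRICE):.1%}")
    print(f"Implied price at 42X the peak: ${implied_price:.2f}")
    print(f"Trough to implied price: {multiple(TROUGH_PRICE, implied_price):.0f}X")
```

On these figures the run-up is a little over 63X and the collapse a little under 95%, consistent with the rounded numbers in the text. The gap between the multiple from the peak and the much larger multiple from the trough is the point about buying after the crash.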

"Bubble lessons" for the AI era...

Regenerator1 • Henry Blodget • September 13, 2025

Essay•AI•AIBubble

There are striking parallels between today’s AI boom and prior tech development cycles. So here are three lessons from the last big one (the Internet):

1) Most of today’s AI companies will fail,

2) Most of today’s AI investments will lose money, and/but

3) It can be as risky to miss out as to go all-in.

The consensus is that we’re in an “AI bubble.”

In other words, a gold-rush boom that will be followed by an epic bust — and then, hopefully, world-changing long-term growth.

If so, AI is following a similar development pattern as the Internet, railroads, canals, cars, computers, and other major technologies.

To wit:

A big opportunity attracts capital, ideas, and experimentation and makes early entrants rich

The flood of capital and talent creates ever-increasing competition (supply), which, at some point, gets way ahead of demand

Experiments fail, returns tank, and the “bubble” bursts

Then, over many years, assuming the opportunity wasn’t a mass hallucination, the “right-sized” industry develops in pace with customer demand… and the scale of success and returns often exceeds even the wildest bubble dreams.

This is what happened with the Internet. It’s what happened with railroads, canals, cars, aviation, TV, et al. And it’s likely what will happen with AI.

Why does it happen this way?

Why can’t we just enjoy lucrative booms without having to deal with the pain, wreckage, and losses (money and jobs) of busts?

Because:

We don’t know what will work until we try

We don’t know when (or even if) booms will turn to busts — and it’s risky to miss out

Technology changes, but people don’t

A giant R&D lab

One thing that’s happening in AI right now is that entrepreneurs, investors, and executives are throwing money and effort at the AI opportunity to figure out what will work.

In the old days, most experiments like these were conducted in the R&D departments of big companies (Bell Labs or Xerox PARC) or by solo inventors (Thomas Edison).

Now they’re done in public, by startups, VCs, and early adopters.

This is a normal and, ultimately, productive process. It leads to a few successes… and a whole lot of failures.

Thomas Edison tried thousands of ideas and materials before he figured out how to make a lightbulb. Famously, he viewed this not as “failing,” but experimenting. Jeff Bezos has the same attitude. It worked for him, too.

These days, Edison’s experiments would be conducted by startups and funded by VC firms and forward-thinking management teams.

What seems certain is that AI is a very powerful innovation. It will have a radical impact on the future. It will transform industries and economies and produce vast companies and fortunes — just as electric lights, the Internet, and other major technologies did.

So everyone is understandably excited about it.

But…

No one knows exactly what the AI-powered future will look like.

No one knows which handful of companies will invent enduring AI lightbulbs and which thousands of others will have bad ideas, take wrong turns, execute poorly, get leapfrogged, or otherwise, as Edison put it, “successfully find 10,000 ways that will not work.”

And, on the customer side, no one really knows what to do with AI.

So we’re all throwing money and effort at the opportunity and trying to figure it out.

iPhones 17 and the Sugar Water Trap

Stratechery • Ben Thompson • September 10, 2025

Essay•AI•Apple•iPhone

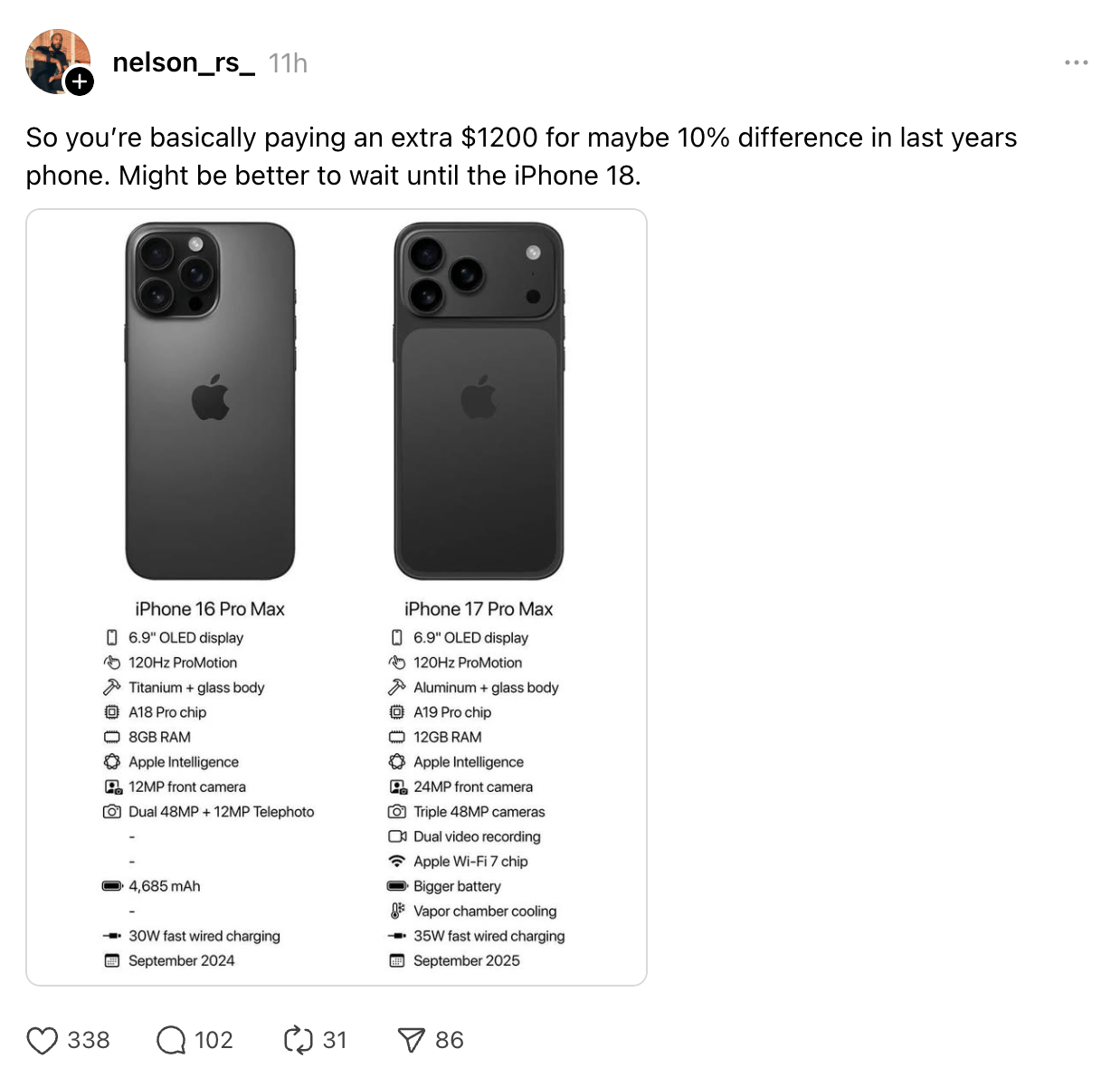

I think the new iPhones are pretty great.

The base iPhone 17 finally gets some key features from the Pro line, including the 120Hz ProMotion display (the lack of which stopped me from buying the most beautiful iPhone ever). The iPhone Air, meanwhile, is a marvel of engineering: transforming the necessary but regrettable camera bump into an entire module that houses all of the phone’s compute is Apple at its best, and reminiscent of how the company elevated the necessity of a front camera assembly into the Dynamic Island, a genuinely useful user interface component. The existence of the iPhone Air, meanwhile, seems to have given the company permission to fully lean into the “Pro” part of the iPhone Pro.

This is honestly very confusing to me: the content of the post is totally contradicted by the image! Just look at the features listed:

There is a completely new body material and design

There is a new faster chip, with GPUs actually designed for AI workloads (a reminder that Apple’s neural engine was designed for much more basic machine learning algorithms, not LLMs)

There is a 50% increase in RAM

This is a lot more than a 10% difference over last year’s phone! Basically every aspect of the iPhone Pro got better, and did I mention the Stratechery orange?

I could stop there, playing the part of the analytics nerd, smugly asserting my list of numbers and features to tell everyone that they’re wrong, and, when it comes to the core question of the year-over-year improvement in iPhone hardware, I would be right! I think, however, that the widespread insistence that this was a blah update — even when the reality is otherwise — exposes another kind of truth: people are calling this update boring not because the iPhones 17 aren’t great, but because Apple no longer captures the imagination.

AI

DeepMind CEO Says AI Could Shorten Drug Discovery From Years to Months

Bloomberg • September 11, 2025

AI•Tech•DrugDiscovery•IsomorphicLabs•AlphaFold

Developing new medicines typically takes years and is plagued by high failure rates. The head of Alphabet’s artificial intelligence efforts says advances in AI could compress that timeline to under a year.

In a Bloomberg Television interview, Demis Hassabis said he aims to reduce discovery cycles to months, potentially even faster, as AI models mature.

Hassabis oversees Google DeepMind and Isomorphic Labs, the Alphabet unit focused on drug discovery that launched in 2021. Isomorphic has signed partnerships with Eli Lilly & Co. and Novartis AG.

Drugmakers have promoted AI-driven discovery as a way to speed patient access to therapies, lower development costs, and respond more quickly to health emergencies. Computing advances now let algorithms parse enormous molecular datasets. Still, no AI-designed drug has yet succeeded in a clinical trial or reached patients.

In January, Hassabis said clinical testing of AI-designed drugs would begin by the end of the year. It has not begun. Speaking from London in early September, he said the company has shown initial proof points toward trials but offered no timing update.

Isomorphic Labs was created to commercialize AlphaFold, DeepMind’s system for predicting protein structure and behavior. Hassabis and DeepMind scientist John Jumper shared the 2024 Nobel Prize in chemistry with a U.S. professor for related work. Researchers are now developing a more advanced AlphaFold capable of reasoning beyond protein–protein interactions.

Hassabis has previously suggested Isomorphic could ultimately build a business exceeding $100 billion in value. Earlier this year, the unit raised $600 million in financing led by Thrive Capital. Current efforts center on cancer and immune disorders, areas viewed as relatively amenable to translating algorithmic models into clinical outcomes, according to Rebecca Paul, director of medicinal drug design.

Paul told Bloomberg Television that AI-originated medicines could turn many cancers into manageable chronic conditions, while stressing that firm timelines remain uncertain.

Isomorphic’s collaboration with Novartis has grown from three to six therapeutic targets, with both sides citing strong progress but withholding additional detail.

Nvidia and OpenAI to back major UK investment in artificial intelligence

FT • September 11, 2025

AI•Funding•SovereignAI

Overview

Nvidia and OpenAI are preparing to support a large-scale investment program to expand artificial intelligence infrastructure across the U.K., with an announcement expected during next week’s high-profile U.S. delegation visit. The plan centers on accelerating the build-out of new data centers and compute capacity in Britain, reflecting a broader “sovereign AI” push among U.S. allies to host their own advanced model training and inference capabilities rather than relying solely on overseas infrastructure.

What’s being proposed

Backing for multiple U.K. data center projects that could be worth billions over time, focused on providing high-density, GPU-rich capacity for AI training and enterprise-scale inference.

Collaboration under discussion with London-based Nscale Global Holdings, a data center developer positioned to deploy facilities equipped with Nvidia’s latest GPU platforms.

A staged rollout: early commitments are expected first, with additional capacity, partners, and locations to follow as power, grid connections, and planning approvals are secured.

Who’s involved

Nvidia is expected to provide the systems stack—GPUs (Blackwell generation for training and inference), networking, and software—while encouraging cloud and colocation partners to stand up “AI factories.”

OpenAI’s participation signals demand-side certainty for advanced compute and model services, supporting utilization of newly built capacity.

U.K. partners include Nscale for development; other global cloud and infrastructure players may be added as projects scale.

Why it matters

Sovereign AI capacity: Hosting cutting-edge compute domestically is increasingly seen as strategic infrastructure—vital for national security, industrial competitiveness, and research leadership.

Economic multiplier: New data centers catalyze supply chains (power, cooling, construction, grid upgrades) and downstream AI adoption across sectors like healthcare, finance, and public services.

Talent and ecosystem: The U.K. already punches above its weight in AI research and startups; local compute can reduce bottlenecks, speed experimentation, and retain talent.

Policy and market context

The U.K. government has prioritized AI-led growth, seeking to scale public-sector compute and streamline planning for data center development while crowding in private capital.

Nvidia’s CEO Jensen Huang has called the U.K. an “incredible place to invest” and a “Goldilocks circumstance,” praising its AI talent base yet noting the need for more domestic infrastructure to match its research strengths.

Global backdrop: Countries and blocs (U.S., EU, Gulf states) are racing to secure AI compute, power, and cooling at scale, with long lead times on grid connections and specialized equipment.

Implications and risks

Power and sustainability constraints: Securing reliable, low-carbon electricity and modernizing grid connections will be decisive; projects will be paced by energy availability as much as capital.

Supply chain tightness: Advanced GPUs, high-bandwidth memory, and networking gear remain supply-constrained; sequencing deliveries and facility readiness is a critical execution risk.

Regulatory and local approvals: Community engagement, land use, water usage, and heat reuse will shape timelines and social license to operate.

Ecosystem effects: Expanded compute could accelerate U.K. model labs and enterprise AI adoption, while also attracting international AI firms seeking European capacity. It may pressure incumbents to announce rival builds or partnerships, potentially lowering compute costs over time.

Key takeaways

A major U.S.–U.K. AI infrastructure tie-up is set to be unveiled, pairing Nvidia’s hardware/software stack with OpenAI’s demand and U.K. developers’ build-out capacity.

The initiative aligns with the U.K.’s strategy to onshore critical AI infrastructure, leveraging its research base and startup ecosystem.

Execution will hinge on power, planning, and supply chain timing—but, if realized, could materially boost Britain’s AI readiness and competitiveness.

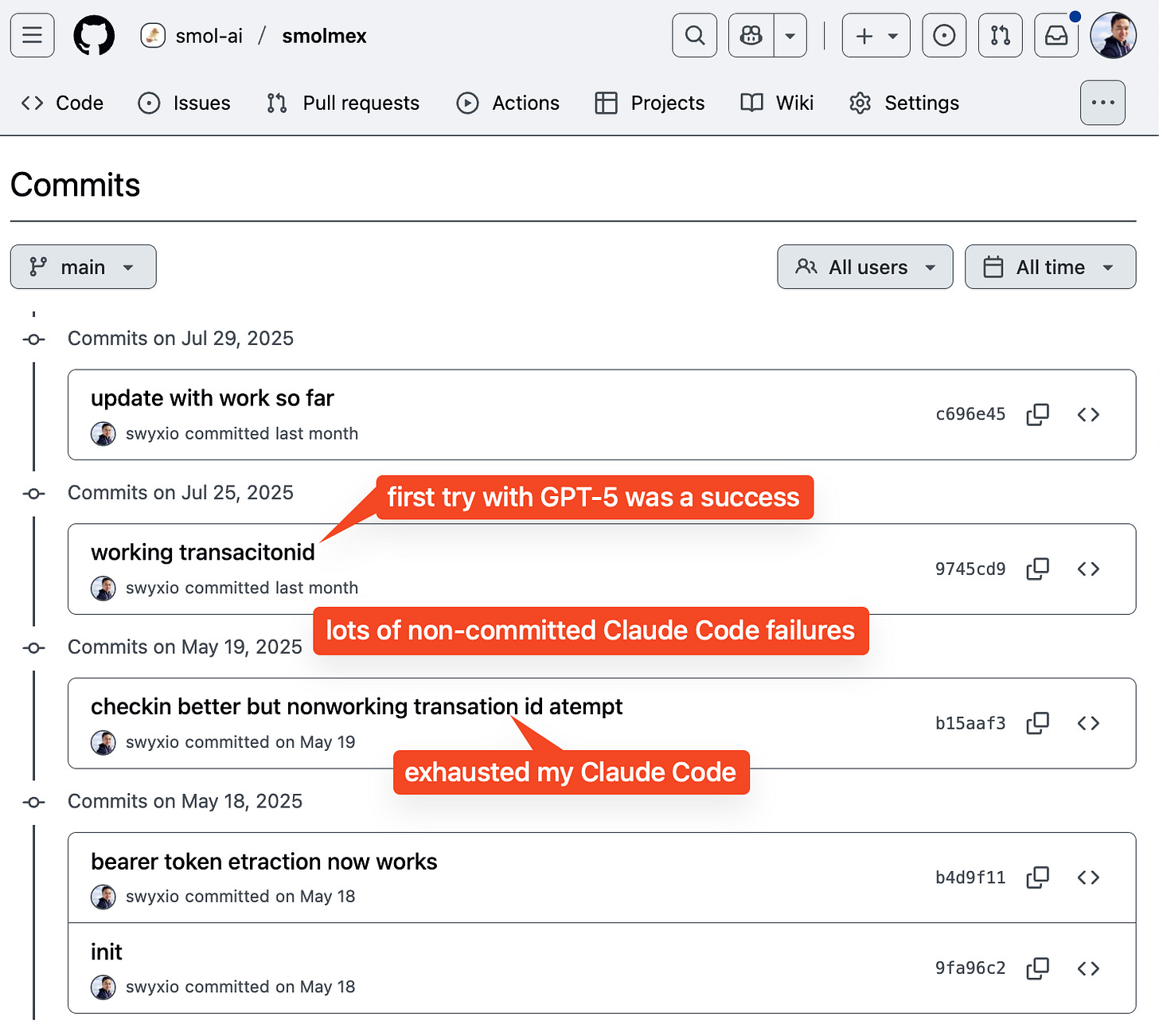

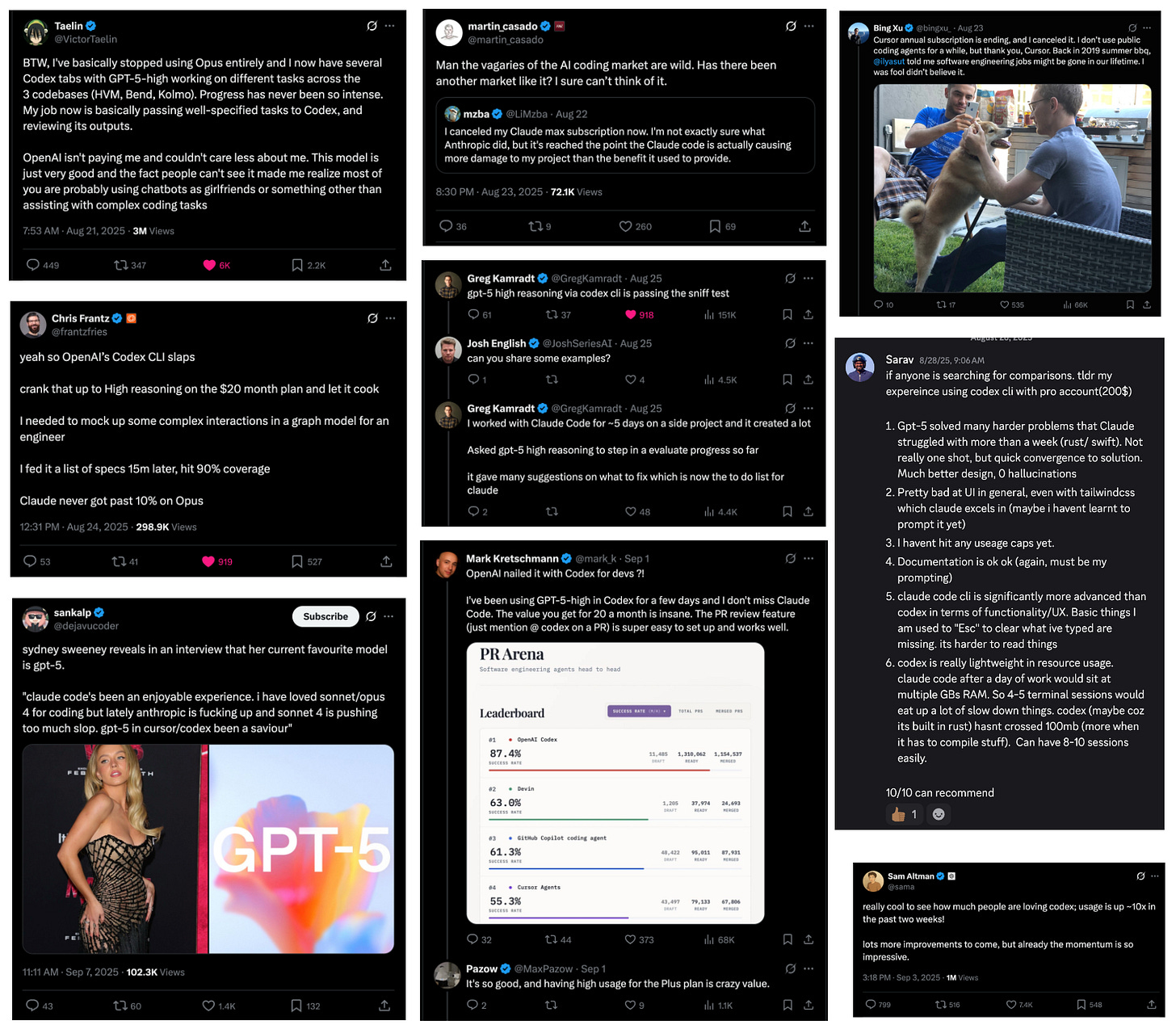

How GPT5 + Codex took over Agentic Coding — ft. Greg Brockman, OpenAI

Latent • September 15, 2025

AI•Tech•AgenticCoding

This is the last in our GPT-5 coverage of the vibes, bootstrapping, vision, and Router.

The new GPT-5-Codex launches today, capping off perhaps the most intense month of vibe shifts in Coding Agents in recent memory.

For a little over a year now, starting with Claude 3.5 Sonnet in June, 3.7 Sonnet and Claude Code in Feb, and Claude 4 in May, Anthropic has enjoyed an uncontested dominance in coding use cases, leading to an epic run-up to $5B in revenue (10% of which is Claude Code) and a $183B valuation, adding $122B in market cap.

That seems to have ignited a fire in OpenAI, which of course shipped the original 2021 Codex that kicked off GitHub Copilot, and started to reprioritize coding abilities in o1 and GPT 4.1. GPT-5-Codex’s 74.5% on SWE-bench (full 500) is kind of a wash vs GPT-5 thinking’s 74.9% (477 task subset), so what is the cause of this major shift in GPT-5 sentiment? Well, for one, the Codex team has been COOKING.

Factor 1: Many Faces, One Agent

As Greg says in today’s podcast, MANY people pitched in: “At the beginning of the year, we set a company goal of an agentic software engineer by the end of the year.” The original A-SWE agentic harness was called 10X and lived in the terminal, but since launching the new Codex CLI and then “ChatGPT Codex” (now Codex Cloud) and then the IDE extension (now at 800k installs after 2.5 weeks) and GitHub code review bot, there is now a complete set of interfaces to match all needs.

Although perhaps causing the least fanfare, the @codex code review bot might have the highest utility because of its very tight scoping: “the big bottleneck… was the amount of review,” so OpenAI focused on a “very high signal Codex mode” that reads intent, checks dependencies, and surfaces issues even top reviewers might miss without hours of deep thought. Released internally first, it became a safety net that accelerated teams—including Codex—tremendously.

Factor 2: Better Post-Training Qualities

Variable Grit. Thibault Sottiaux: “One of the things that this model exhibits is an ability to go on for much longer and to really have that grit that you need on these complex refactoring tasks. But at the same time, for simple tasks, it actually comes way faster at you.” OpenAI has seen it work “up to seven hours for very complex refactorings,” alongside substantial work on code quality—optimized for what people use GPT-5 within Codex for.

Codex and the future of coding with AI — the OpenAI Podcast Ep. 6

Youtube • OpenAI • September 15, 2025

AI•Tech•Codex•CodeGeneration•DeveloperTools

Overview

This episode explores how AI coding systems like Codex are reshaping software creation—from translating natural language into runnable code to assisting with debugging, tests, and documentation. The discussion frames Codex as a “pair programmer” that augments human problem-solving rather than replacing it, and examines where the technology is reliable today, where it remains brittle, and what it could unlock as models improve and toolchains become more integrated.

What Codex Does Well Today

Converts task descriptions into code snippets and scaffolding across popular languages and frameworks.

Accelerates “glue code” and boilerplate generation, freeing developers to focus on architecture, product logic, and edge cases.

Suggests tests, docstrings, and inline comments, improving readability and maintainability.

Performs context-aware completions in IDEs, referencing nearby files, APIs, and patterns.

Aids exploration by offering multiple solution paths, which developers can refine or combine.

Limits, Failure Modes, and Safety

Hallucinations: Confident but incorrect code can slip in, especially around unfamiliar APIs or edge-case semantics.

Security: Generated code may introduce injection risks, unsafe defaults, or dependency vulnerabilities if left unscreened.

Licensing and provenance: Teams need clarity on acceptable training data, reuse policies, and internal code exposure.

Determinism vs. creativity: Stochastic outputs must be corralled by tests, linters, and style guides to ensure predictability.

Domain specificity: Performance degrades on highly specialized stacks without adequate examples and feedback loops.

How Teams Integrate Codex

Shift-left testing: Treat model outputs as proposals; immediately wrap with unit tests, type checks, and static analysis.

Human-in-the-loop review: Code review remains central—developers curate, annotate, and enforce standards.

Prompt patterns: Clear intent, constraints, and examples yield better code; teams maintain prompt libraries like they do utilities.

Tool orchestration: Combining Codex with package managers, build systems, CI, and deployment scripts creates end-to-end acceleration.

Telemetry and feedback: Logging failures and fixes helps tune prompts and informs where the assistant adds or subtracts value.

Impact on Roles and Skills

Developers: Less time on boilerplate; more on system design, data modeling, performance, and UX. Reading and critiquing AI-generated code becomes a core competency.

Newcomers: Lower barrier to entry via guided scaffolding and explanation, but stronger need for fundamentals to detect subtle defects.

Leads/Architects: Increased leverage through codified patterns, reusable prompts, and automated code transformations across codebases.

Educators: Emphasis shifts to testing, verification, security, and computational thinking over syntax memorization.

Evaluation and Quality Assurance

Benchmarks alone are insufficient; real-world metrics include diff quality, defect escape rates, time-to-PR, and post-merge incidents.

“Spec-first” workflows—writing tests and contracts up front—provide objective gates for accepting model outputs.

Runtime validation (property-based tests, fuzzing) catches corner cases that generative models often miss.
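To make the property-based idea concrete, here is a minimal sketch using only the Python standard library. The function `dedupe_preserve_order` and the helper `check_properties` are hypothetical names invented for illustration; they are not from the episode. The sketch shows how a handful of invariants, checked over many random inputs, can act as an objective gate for a model-written function.

```python
import random


def dedupe_preserve_order(items):
    """Return items with duplicates removed, keeping first occurrences in order."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def check_properties(func, trials=500, seed=0):
    """Run func on random integer lists and assert invariants that must always hold."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    for _ in range(trials):
        data = [rng.randint(-5, 5) for _ in range(rng.randint(0, 20))]
        out = func(data)
        assert len(out) == len(set(out))   # no duplicates remain
        assert set(out) == set(data)       # nothing lost, nothing invented
        assert func(out) == out            # applying it twice changes nothing
        # elements appear in the order they were first seen in the input
        assert out == sorted(set(data), key=data.index)
    return trials


if __name__ == "__main__":
    print(check_properties(dedupe_preserve_order), "random cases passed")
```

A dedicated property-testing library would also shrink failing inputs to a minimal case; the hand-rolled loop here is only meant to show the shape of the check.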

Design Principles for Responsible Use

Guardrails by default: Safe templates, least-privilege patterns, parameterized queries, and vetted crypto/storage practices.

Transparency: Make AI assistance visible in diffs and review tools; attribute where code came from.

Iterative prompting: Start with small, verifiable steps; prefer patch-style changes to large, sweeping rewrites.

Data hygiene: Keep secrets out of prompts; use redaction and secure contexts for sensitive code.
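As one illustration of a "guardrail by default", the sketch below shows a parameterized query using Python's built-in `sqlite3` module. The `users` table, the `find_user` function, and the sample data are assumptions made up for this example. The point is that the driver binds user input as data, so an injection-style string is treated as a literal username rather than as SQL.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user(conn, username):
    """Look up a user with a parameterized query; the value is bound, not interpolated."""
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    print(find_user(conn, "alice"))             # matches the real row
    print(find_user(conn, "alice' OR '1'='1"))  # treated as a literal name, no match
```

Building the same query with string formatting would let the second input rewrite the WHERE clause, which is the kind of unsafe default a review template or linter rule can flag.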

Looking Ahead

The conversation highlights a trajectory from suggestion engines to agentic systems that can plan multi-step changes, run tests, call tools, and open pull requests—while remaining under developer supervision. As models become better at reasoning and environment interaction, the bottleneck moves from “can the model write code?” to “can the team specify intent, constraints, and safety property checks well enough?” Organizations that pair Codex-like systems with rigorous specs, observability, and governance will capture the bulk of productivity gains without compromising reliability or security.

Key Takeaways

Treat AI code as high-speed drafts; enforce quality with tests and reviews.

Invest in prompts, patterns, and guardrails like you would in frameworks and libraries.

Measure impact with engineering metrics tied to reliability, not just lines generated.

Upskill teams on verification, security, and system design to fully realize benefits.

When AI Writes Code, Who Secures It?

Oreilly • September 15, 2025

AI•Tech•SoftwareSecurity

In early 2024, a striking deepfake fraud case in Hong Kong brought the vulnerabilities of AI-driven deception into sharp relief. A finance employee was duped during a video call by what appeared to be the CFO—but was, in fact, a sophisticated AI-generated deepfake. Convinced of the call’s authenticity, the employee made 15 transfers totaling over $25 million to fraudulent bank accounts before realizing it was a scam.

This incident exemplifies more than just technological trickery—it signals how trust in what we see and hear can be weaponized, especially as AI becomes more deeply integrated into enterprise tools and workflows. From embedded LLMs in enterprise systems to autonomous agents diagnosing and even repairing issues in live environments, AI is transitioning from novelty to necessity. Yet as it evolves, so too do the gaps in our traditional security frameworks—designed for static, human-written code—revealing just how unprepared we are for systems that generate, adapt, and behave in unpredictable ways.

Beyond the CVE Mindset

Traditional secure coding practices revolve around known vulnerabilities and patch cycles. AI changes the equation. A line of code can be generated on the fly by a model, shaped by manipulated prompts or data—creating new, unpredictable categories of risk like prompt injection or emergent behavior outside traditional taxonomies.

A 2025 Veracode study found that 45% of all AI-generated code contained vulnerabilities, with common flaws like weak defenses against XSS and log injection. (Some languages performed more poorly than others. Over 70% of AI-generated Java code had a security issue, for instance.) Another 2025 study showed that repeated refinement can make things worse: After just five iterations, critical vulnerabilities rose by 37.6%.

To keep pace, frameworks like the OWASP Top 10 for LLMs have emerged, cataloging AI-specific risks such as data leakage, model denial of service, and prompt injection. They highlight how current security taxonomies fall short—and why we need new approaches that model AI threat surfaces, share incidents, and iteratively refine risk frameworks to reflect how code is created and influenced by AI.

Easier for Adversaries

Perhaps the most alarming shift is how AI lowers the barrier to malicious activity. What once required deep technical expertise can now be done by anyone with a clever prompt: generating scripts, launching phishing campaigns, or manipulating models. AI doesn’t just broaden the attack surface; it makes it easier and cheaper for attackers to succeed without ever writing code.

In 2025, researchers unveiled PromptLocker, the first AI-powered ransomware. Though only a proof of concept, it showed how theft and encryption could be automated with a local LLM at remarkably low cost: about $0.70 per full attack using commercial APIs—and essentially free with open source models. That kind of affordability could make ransomware cheaper, faster, and more scalable than ever.

This democratization of offense means defenders must prepare for attacks that are more frequent, more varied, and more creative. The Adversarial ML Threat Matrix, founded by Ram Shankar Siva Kumar during his time at Microsoft, helps by enumerating threats to machine learning and offering a structured way to anticipate these evolving risks. (He’ll be discussing the difficulty of securing AI systems from adversaries at O’Reilly’s upcoming Security Superstream.)

Silos and Skill Gaps

Developers, data scientists, and security teams still work in silos, each with different incentives. Business leaders push for rapid AI adoption to stay competitive, while security leaders warn that moving too fast risks catastrophic flaws in the code itself.

These tensions are amplified by a widening skills gap: Most developers lack training in AI security, and many security professionals don’t fully understand how LLMs work. As a result, the old patchwork fixes feel increasingly inadequate when the models are writing and running code on their own.

The rise of “vibe coding”—relying on LLM suggestions without review—captures this shift. It accelerates development but introduces hidden vulnerabilities, leaving both developers and defenders struggling to manage novel risks.

From Avoidance to Resilience

AI adoption won’t stop. The challenge is moving from avoidance to resilience. Frameworks like the Databricks AI Security Framework (DASF) and the NIST AI Risk Management Framework provide practical guidance on embedding governance and security directly into AI pipelines, helping organizations move beyond ad hoc defenses toward systematic resilience. The goal isn’t to eliminate risk but to enable innovation while maintaining trust in the code AI helps produce.

Transparency and Accountability

Research shows AI-generated code is often simpler and more repetitive, but also more vulnerable, with risks like hardcoded credentials and path traversal exploits. In other words, AI-generated code is more likely to introduce high-risk security vulnerabilities. Without observability tools such as prompt logs, provenance tracking, and audit trails, developers can’t ensure reliability or accountability.

AI’s opacity compounds the problem: A function may appear to “work” yet conceal vulnerabilities that are difficult to trace or explain. Without explainability and safeguards, autonomy quickly becomes a recipe for insecure systems. Tools like MITRE ATLAS can help by mapping adversarial tactics against AI models, offering defenders a structured way to anticipate and counter threats.
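For readers less familiar with path traversal, one of the flaw classes named above, here is a minimal sketch of a guard in Python. The function name `safe_join` and the directory layout are hypothetical. It assumes the application serves files from a single base directory and rejects any user-supplied path that resolves outside it.

```python
from pathlib import Path


def safe_join(base_dir, user_path):
    """Resolve user_path under base_dir, refusing anything that escapes it."""
    base = Path(base_dir).resolve()
    # resolve() collapses ".." segments and follows symlinks before the check
    candidate = (base / user_path).resolve()
    if candidate != base and base not in candidate.parents:
        raise ValueError(f"path escapes base directory: {user_path!r}")
    return candidate


if __name__ == "__main__":
    print(safe_join("/srv/app/uploads", "reports/q3.txt"))
    try:
        safe_join("/srv/app/uploads", "../../etc/passwd")
    except ValueError as exc:
        print("rejected:", exc)
```

A function that simply concatenates the two strings would appear to "work" in a demo, which is exactly the kind of concealed flaw that review and audit trails are meant to surface.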

Looking Ahead

Securing code in the age of AI requires more than patching: it means breaking silos, closing skill gaps, and embedding resilience into every stage of development. The risks may feel familiar, but AI scales them dramatically. Frameworks like the Databricks AI Security Framework (DASF) and the NIST AI Risk Management Framework provide structures for governance and transparency, while MITRE ATLAS maps adversarial tactics and real-world attack case studies, giving defenders a structured way to anticipate and mitigate threats to AI systems.

The choices we make now will determine whether AI becomes a trusted partner—or a shortcut that leaves us exposed.

Alphabet market value exceeds $3tn

Ft • September 15, 2025

AI•Tech•Alphabet•MarketCap•StockMarket

Overview

Alphabet’s market value surpassing $3 trillion marks a pivotal moment for Big Tech and capital markets, signaling investor conviction that the company’s core ad engine, fast‑growing cloud franchise, and accelerating artificial intelligence strategy can translate into sustained cash flow and durable competitive advantages. The move places Alphabet among a very small cohort of companies that have crossed this threshold, underscoring how AI narratives and earnings delivery have concentrated equity returns in a handful of mega‑caps. It also intensifies scrutiny of how AI will be monetized across Search, YouTube, Cloud, and the Android ecosystem—and what that means for the broader market’s leadership and risk profile.

What’s driving the re‑rating

AI integration at scale: Alphabet is weaving generative AI across its stack—from Gemini‑powered Search and Workspace to developer tools and model APIs—creating multiple monetization levers (ad relevance and formats, premium features, usage‑based AI services, and enterprise subscriptions).

Cloud profitability and mix shift: Google Cloud’s transition from years of investment to consistent operating profits has improved visibility on margins. AI infrastructure (TPUs, GPUs), managed services, and data platforms create higher‑value workloads and stickier enterprise relationships.

YouTube’s dual engine: Advertising remains resilient while subscription products (YouTube Premium, YouTube TV) provide recurring revenue and partially de‑risk cyclicality, with Shorts and connected‑TV ad demand expanding the surface area for growth.

Capital returns and discipline: Large buybacks and operating leverage from data‑center utilization support per‑share value, even as capex rises for AI infrastructure.

Market structure implications

Concentration and index mechanics: A $3tn Alphabet increases its weight in major indices, amplifying passive flows and options activity. This can add momentum during risk‑on periods but raises the market’s sensitivity to a narrow set of earnings reports and AI headlines.

Valuation signaling: Crossing the threshold often attracts incremental mandates that screen by market cap and liquidity, potentially lowering the equity risk premium for mega‑caps while raising it for smaller peers competing for capital.

Competitive landscape

Platform advantages: Alphabet’s data reservoirs (Search queries, YouTube engagement, Android device telemetry) and distribution give it low‑friction channels to deploy AI features and gather feedback loops that improve models.

Intensifying rivalry: Microsoft’s OpenAI collaboration, Apple’s platform‑level AI rollouts, and Nvidia’s ecosystem leadership in AI compute pressure Alphabet to execute quickly. Differentiation will hinge on model quality, safety, cost efficiency, and developer experience.

Cost curves and moats: Long‑term edge may depend on owning compute (custom TPUs), optimizing serving costs, and aligning models tightly with high‑intent user interactions where monetization is clearest (commercial search, shopping, travel, local services).

Policy and risk considerations

Antitrust proceedings: Ongoing cases in search distribution and ad tech, along with EU digital market rules, could reshape default settings, data access, or business practices. Remedies might increase traffic acquisition costs or require product changes that affect ad yield.

AI governance: As generative features expand, Alphabet faces content quality, attribution, and safety obligations. Missteps could draw regulatory penalties or brand‑safety concerns from advertisers.

Capex intensity: Data‑center build‑outs, power procurement, and supply‑chain constraints for advanced chips create execution risk. Returns depend on utilization, pricing power, and conversion of AI usage into high‑margin revenue streams.

Why it matters beyond Alphabet

Signal for the AI cycle: The milestone validates investor expectations that AI will become a broad‑based productivity layer, not simply an R&D expense. It encourages enterprises to accelerate AI adoption, benefiting cloud vendors, chipmakers, and application startups aligned to Alphabet’s ecosystem.

Advertising’s evolution: If AI‑enhanced Search and YouTube increase relevance and conversion, ad budgets may consolidate further into platforms with the richest intent data—raising the bar for challengers and reshaping the ad‑tech landscape.

Capital allocation ripple effects: Higher valuations and cash flows give Alphabet greater flexibility for M&A, infrastructure commitments, and shareholder returns, influencing competitive dynamics in cloud, cybersecurity, and developer tools.

Key takeaways

Alphabet’s $3tn valuation reflects confidence in AI‑driven monetization across Search, YouTube, and Cloud, coupled with improving profitability and sustained buybacks.

Market concentration rises as mega‑caps capture a larger share of index weight and investor attention, increasing both momentum potential and systemic earnings risk.

Execution on AI quality, cost, and safety—and navigation of regulatory headwinds—will determine whether the premium is durable.

The milestone reinforces AI as a multi‑year capital cycle spanning chips, data centers, and software, with Alphabet positioned as a central platform for enterprise and consumer use cases.

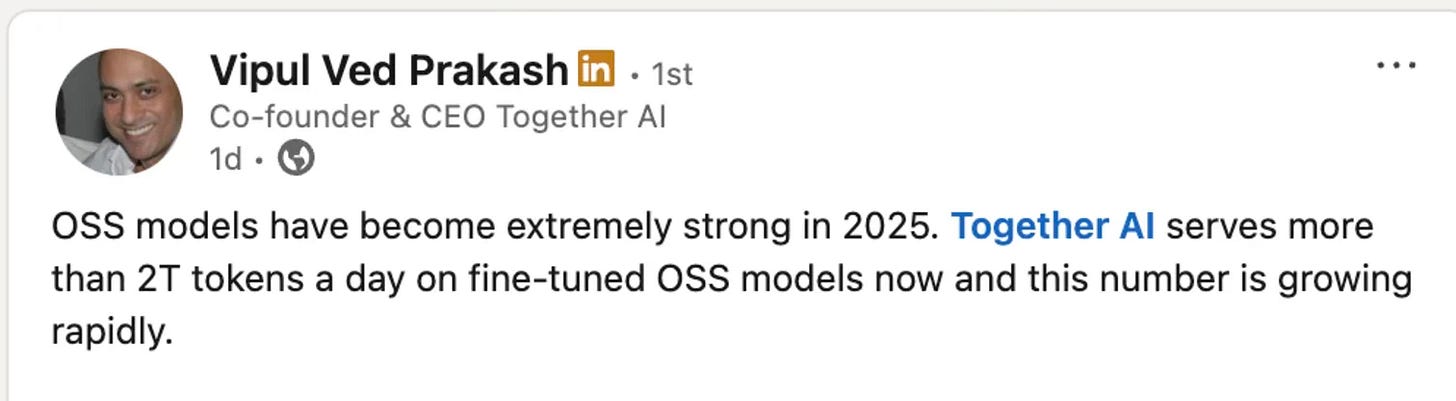

Beyond a Trillion : The Token Race

Tomtunguz • September 17, 2025

AI•Data•Tokens•Inference•Google

One trillion tokens per day. Is that a lot?

“And when we look narrowly at just the number of tokens served by Foundry APIs, we processed over 100t tokens this quarter, up 5x year over year, including a record 50t tokens last month alone.”

In April, Microsoft shared a statistic, revealing their Foundry product is processing about 1.7t tokens per month.

Yesterday, Vipul shared Together.ai is processing 2t of open-source inference daily.

In July, Google announced a staggering number :

“At I/O in May, we announced that we processed 480 trillion monthly tokens across our surfaces. Since then we have doubled that number, now processing over 980 trillion monthly tokens, a remarkable increase.”

Google processes 32.7t daily, 16x more than Together & 574x more than Microsoft Foundry’s April volume.
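The comparison above can be checked with a few lines of arithmetic. This is a rough sketch that assumes a 30-day month (the post does not say which convention it uses); the variable names are illustrative. The quoted 50t-per-month Foundry figure is included alongside the 1.7t April figure for comparison.

```python
DAYS_PER_MONTH = 30  # assumption: the post does not state its month length

# Figures as stated in the text, in trillions of tokens
google_monthly_t = 980.0
together_daily_t = 2.0
foundry_april_monthly_t = 1.7    # "about 1.7t tokens per month"
foundry_quoted_monthly_t = 50.0  # "a record 50t tokens last month alone"

google_daily_t = google_monthly_t / DAYS_PER_MONTH
vs_together = google_daily_t / together_daily_t
vs_foundry_april = google_monthly_t / foundry_april_monthly_t
foundry_quoted_daily_t = foundry_quoted_monthly_t / DAYS_PER_MONTH

if __name__ == "__main__":
    print(f"Google daily: {google_daily_t:.1f}t")
    print(f"Google vs Together: {vs_together:.1f}x")
    print(f"Google vs Foundry (April figure): {vs_foundry_april:.0f}x")
    print(f"Foundry daily from the quoted 50t month: {foundry_quoted_daily_t:.2f}t")
```

Under these assumptions the Google daily figure and the 16x multiple reproduce, and the Foundry multiple lands within rounding distance of the 574x cited. The quoted 50t month works out to roughly 1.7t per day, so the multiple is sensitive to whether the 1.7t figure is read as monthly or daily.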

From these figures, we can draw a few hypotheses :

Open-source inference is a single-digit fraction of inference. It’s unclear what fraction of Google’s inference tokens are from their open source models like Gemma. But, if we assume Anthropic & OpenAI are 5t-10t tokens per day & all closed-source, plus Azure is roughly similar in size, then open-source inference is likely around 1-3% of total inference.

Agents are early. Microsoft’s data point suggests the agents within GitHub, Visual Studio, Copilot Studio, & Microsoft Fabric contribute less than 1% of overall AI inference on Azure.

With Microsoft expected to invest $80 billion compared to Google’s $85 billion in AI data center infrastructure this year, the AI inference workloads of each company should increase significantly both through hardware coming online & algorithmic improvements.

“Through software optimization alone, we are delivering 90% more tokens for the same GPU compared to a year ago.”

Microsoft is squeezing more digital lemonade from their GPUs & Google must be doing something similar.

When will we see the first 10t or 50t AI tokens processed per day? It can’t be far off now.

Venture

Your VCs May Be Giving Up on You Right About Now. Don’t Let That Stop You.

Saastr • September 12, 2025

Venture

One of the best parts of YC Demo Day is catching up with VCs you haven’t seen in a while. And this time I had several sobering conversations with top VCs that made it clear:

Many VCs have now just quietly given up on their slow-growth portfolio companies.

Especially the ones at $50m, $100m+ ARR that are now growing sub-20%, even sub-10%.

The reasoning? They’ve been hoping for growth to reaccelerate these past 2+ years, and now feel they need to focus 100% of their bandwidth on their “go-forward AI portfolio.”

It’s bleak. It’s pragmatic. And it’s the reality in an AI-first world.

The New VC Math: AI or Bust

Here’s what multiple VCs told me:

“Look, we gave these companies 24+ months to learn, adjust, and reboot. If they haven’t figured it out by now, it’s just tough. Our LPs want to see hypergrowth AI investments, not zombie SaaS companies burning through runway at 10% growth.”

The conversation usually ended with some variation of: “Consolidation is the only outcome for most $100M+ pre-AI B2B companies.”

Ouch.

But it might be exactly the final catalyst you need.

Why This Might Be the Best Thing That Ever Happened to You

First, let’s be real about what “VC support” actually meant for many of these companies.

I’ve seen too many founders mistake VC hand-holding for actual business building. When your investors are constantly available for “strategic guidance” and follow-on funding discussions, it’s easy to lose sight of the fundamentals:

Building a product customers actually can’t live without

Creating efficient, repeatable sales processes

Achieving genuine product-market fit (not just “good enough” fit)

Developing sustainable unit economics

Second, VC abandonment forces brutal prioritization.

When you know there’s no safety net, you stop playing it safe. You can’t afford to run 47 experiments simultaneously or chase every shiny growth hack. You focus on what actually moves the needle.

Third, it eliminates the “growth at all costs” pressure that may have been killing you slowly and/or forcing bad decisions.

Without VCs pushing for hockey stick growth, you can focus on building a sustainable, profitable business. Or at least, doing what it really takes to reboot your company so it really can get back to growth.

The Playbook for VC-Abandoned Companies (That Actually Works)

1. Embrace the Never Raise Again Mentality

Assume you can never raise again. Start with these fundamentals:

Audit every dollar. I mean every dollar. That $50K/month in “growth tools” you’re not actually using? Gone. The 12-person marketing team generating 30 qualified leads per month? Time for some hard conversations.

Focus on cash flow, not only growth metrics. At least, until growth gets back to venture rates. Your new North Star isn’t ARR growth; it’s being cash flow positive. This mindset shift alone will transform how you make decisions.

2. Double Down on Your Best Customers

Forget about TAM expansion and new market penetration. Your survival depends on the customers you already have.

Conduct deep customer interviews. Not NPS surveys—actual conversations. Find out what they’d be willing to pay 2x for. What features would make your product indispensable?

Build retention into everything. Every product decision, every customer success initiative, every pricing change should be evaluated through one lens: Does this make it harder for customers to leave?

3. And Also … Refound Your Startup for the AI Age

This isn’t about slapping “AI-powered” onto your existing product. It’s about fundamentally rethinking your business through the lens of what’s actually possible now.

From $1M to $500M in 17 Months 📈

Youtube • 20VC with Harry Stebbings • September 15, 2025

Venture

Overview

A fast-paced clip distills how an AI services startup vaulted from $1 million to $500 million in revenue in just 17 months. The guest, Brendan Foody (CEO), frames the business as solving “talent allocation in the AI economy,” positioning the company as the fastest-growing in history and signaling a rumored $10 billion valuation with top-tier investors on the cap table. The segment previews themes explored in a longer conversation: hypergrowth mechanics, sustainability of AI revenue, the pitfalls of today’s evaluation benchmarks, and what investors should prioritize when judging AI companies. (thetwentyminutevc.com)

What Drove the Surge

Strategic market timing: The company claims it “quadrupled since Scale was acquired,” referencing a wave of enterprise customers rethinking data-labeling/talent vendors after Meta took a 49% stake in Scale AI—an industry jolt that spurred defections from major buyers and reshaped demand across providers. This exogenous shock appears to have accelerated pipeline velocity and contract size for rivals like Mercor. (thetwentyminutevc.com)

Clear positioning among data/talent providers: The clip nods to differentiation across Scale, Surge, Mercor, and Turing—implying that defensibility hinges on quality, throughput, and domain specialization rather than commodity annotation. The emphasis is on outcomes for model performance and enterprise deployment speed, not just price per task. (thetwentyminutevc.com)

Capital and culture as accelerants: Backing from elite funds (including Benchmark, Felicis, and Emergence) provided credibility and resources to blitzscale. The founder underscores an intense execution culture (“9-9-6”) that compresses cycles on product, GTM, and operations—controversial but presented as inseparable from outlier outcomes. (thetwentyminutevc.com)

What to Measure (and What Not To)

Skepticism of benchmarks: The speaker argues many AI evaluation benchmarks are “BS,” easily gamed and weakly correlated with customer value. Instead, he urges investors and operators to prioritize production usage, revenue durability, and real-world error reduction over leaderboard deltas. This is intertwined with a broader claim that near-term margins can mislead in AI services that mix humans-in-the-loop with automation. (thetwentyminutevc.com)

Revenue sustainability vs. headline growth: The segment spotlights durability—renewals, expansion, and payback—as the truer signal in AI than short bursts of ARR. The implication is that superior workflow integration and data feedback loops build compounding advantages that keep gross margins and retention improving over time. (thetwentyminutevc.com)

Notable Lines

“You cannot create a $10BN company without 9-9-6 work culture.” The founder ties extreme cadence to speed in shipping, iteration, and customer capture, acknowledging trade-offs but asserting necessity for category-definers. (thetwentyminutevc.com)

“We literally have too much money; we cannot spend it.” The line suggests capital abundance post-breakout, shifting the constraint from cash to talent, operational excellence, and selectivity in bets. (thetwentyminutevc.com)

Implications

For founders: Category inflection points—like Meta’s Scale AI move—create windows for decisive customer capture. Teams ready with enterprise-grade workflows, clear SLAs, and specialist talent can convert market turbulence into step-function growth. (reuters.com)

For investors: Traditional diligence comfort (benchmarks, neat cohort curves) can lag reality in fast-moving AI. Prioritize production references, latency/quality metrics in live settings, and the vendor’s ability to absorb demand spikes without degrading output. (thetwentyminutevc.com)

For the AI data/talent stack: Consolidation and conflicts will keep reshaping buyer choices. Vendors that balance quality, security, and neutrality may be best positioned as hyperscalers and model labs diversify supplier exposure. (reuters.com)

Key Takeaways

Hypergrowth can be catalyzed by external shocks; preparedness turns them into durable share gains. (reuters.com)

Benchmarks and early margins are second-order; customer outcomes and revenue durability lead. (thetwentyminutevc.com)

Culture and cadence are treated as non-negotiables for outlier performance—even if polarizing. (thetwentyminutevc.com)

Capital is abundant at breakout stage; disciplined allocation and operational scaling become the bottleneck. (thetwentyminutevc.com)

The AI data/talent market is in flux; neutrality and trust are competitive advantages. (reuters.com)

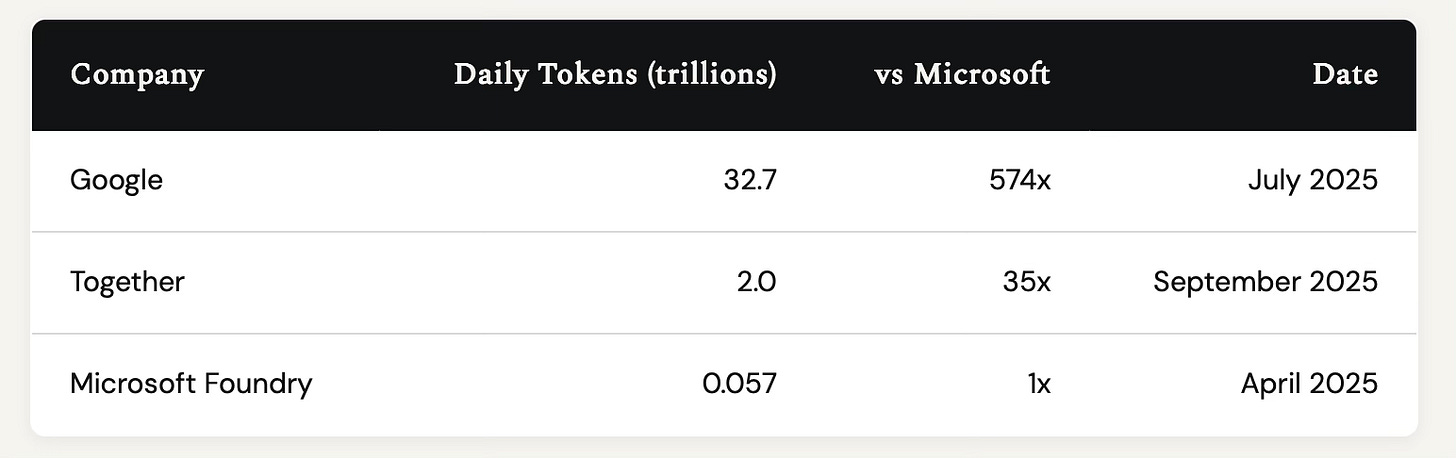

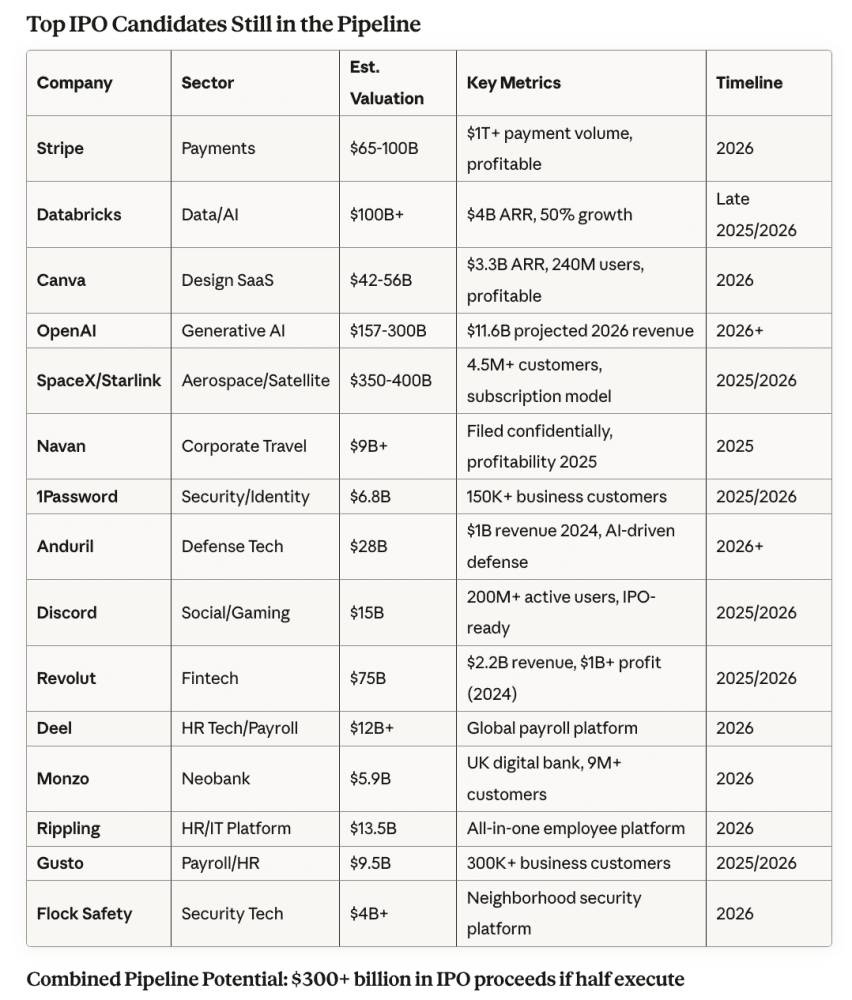

The Great IPO Awakening: Are We Back?

Saastr • September 15, 2025

Venture

What’s happening

The piece argues that the IPO market has decisively reawakened after a prolonged lull, highlighting that last week was the “busiest IPO stretch since 2021,” with six companies collectively raising $4.4 billion in five days. This burst of issuance is framed as more than a tentative reopening: the “IPO window isn’t just cracking open—it’s swinging wide.” The author’s core message is practical and urgent: strong companies should consider listing now while investors are receptive, but approach pricing conservatively to ensure aftermarket stability and long-run credibility.

Why this moment matters

Signal versus noise: A cluster of sizable offerings in a single week suggests broad-based demand rather than a one-off success, potentially resetting market psychology after a multi-year drought.

Backlog release: Years of delayed exits have created pent-up supply among late-stage private companies; the current window could catalyze a queue of filings and launches.

Valuation discipline: Despite improved sentiment, the emphasis on “conservative pricing” implies investors remain price-sensitive and reward fundamentals over froth.

Playbook for founders and late-stage boards

Move if you’re ready: Companies with durable growth, efficient unit economics, and clean cohorts should accelerate timetables to capitalize on the window.

Prioritize quality over maximum proceeds: Favor building a supportive, long-term shareholder base and momentum over squeezing out the last turn of valuation at pricing.

Prepare for scrutiny: Public-market investors will examine gross margin quality, cash burn trajectory, path to operating leverage, and predictability of revenue more tightly than during 2020–2021.

Implications for VCs and LPs

Liquidity returns: A functioning IPO market rebalances venture portfolios, enabling distributions and recycling capital into new vintages.

Mark-to-market reality check: Public comps will set reference prices for late-stage holdings; disciplined pricing on IPO day can reduce painful post-listing compressions.

Sector rotation: Fundamentals-driven appetite may favor resilient categories (infrastructure, vertical software, cybersecurity, fintech with proven unit economics) over story-driven growth.

Risks and watch items

Sustainability: One busy week does not guarantee a cycle; macro shifts, rate volatility, or a few high-profile post-IPO underperformers could chill sentiment quickly.

Aftermarket performance: The true test will be 30–90 days post-pricing. Stable trading validates valuation and encourages the next wave; weak trading can slam the window shut.

Execution readiness: Companies that haven’t built public-ready forecasting, controls, and IR infrastructure risk missing this window or stumbling post-listing.

Key takeaways

“Busiest IPO stretch since 2021”: six deals and $4.4B raised in five days underscore a real reopening.

The window appears “wide,” but success depends on conservative pricing and strong fundamentals.

Founders should act with urgency and discipline; VCs should expect improved liquidity but prepare for valuation realism set by public markets.

US listings market bursts back to life with busiest week in 4 years

FT • September 13, 2025

Venture

The US listings market has sprung back to life, recording its busiest week in four years as a cluster of companies priced offerings and began trading on the NYSE and Nasdaq. Proceeds ran into the billions, a clear step-up from the sporadic single‑name deals that characterized much of the post‑2021 drought. Bankers pointed to healthier risk appetite, steadier equity indices and narrower volatility as factors enabling issuers to test demand at valuations that balance growth ambitions with public‑market discipline.

Investor reception was generally constructive, with books covered multiple times and several debuts trading above their offer price on day one. Unlike the frothier cycle of 2020–21, price discovery has skewed conservative, with issuers prioritizing durable aftermarket support over headline‑grabbing “pops.” Order books showed a deeper mix of long‑only funds alongside hedge funds, a shift ECM desks say should reduce churn and help companies meet early lock‑up milestones without destabilizing trading.

The revival has been broad‑based. Beyond technology, healthcare and consumer names featured, alongside financial and industrial issuers, suggesting the window is not confined to a single theme. Syndicates highlighted the return of cornerstone and anchor allocations to underpin deal quality, while discretionary retail participation, though smaller than in the meme‑stock era, added incremental demand on platforms offering directed share programs.

Attention now turns to the pipeline. With investor meetings already scheduled through late September and into October, exchanges and underwriters expect a steady cadence of mid‑ to large‑cap offerings if macro conditions hold. The key watch‑items are rate expectations, earnings revisions and any flare‑ups in election‑year volatility. For now, the momentum shift is tangible: a reopened route to public capital, renewed competition between listing venues, and a clearer path for mature private companies to transition to the public markets.

Robinhood plans to launch a startups fund open to all retail investors

TechCrunch • September 15, 2025

Venture

Robinhood announced Monday it has filed an application with the U.S. Securities and Exchange Commission to launch a new publicly traded fund that will hold shares of startups.

The idea behind the “Robinhood Ventures Fund I” is to give every retail investor a way to make money on the hottest startups before they go public.

While the current version of the application is public, Robinhood hasn’t filled in the fine print yet. This means we don’t know how many shares it plans to sell, nor other details like the management fee it plans to charge. It’s also unclear which startups it hopes this fund will eventually hold. The paperwork says it “expects” to invest in aerospace and defense, AI, fintech, robotics as well as software for consumers and enterprises.

Robinhood’s big pitch is that retail investors are being left out of the gains that are amassed by startup investors like VCs. That’s true to an extent. “Accredited investors” — or those with a net worth large enough to handle riskier investments — already have a variety of ways of buying equity in startups, such as with venture firms like OurCrowd.

Retail investors who are not rich enough to be accredited have more limited options. There are funds similar to what Robinhood has proposed, including Cathie Wood’s ARK Venture Fund, a mutual fund which holds stakes in companies like Anthropic, Databricks, OpenAI, SpaceX, and others.

Robinhood’s last such effort was controversial. The trading company launched what it called private “tokenized” stocks in the EU earlier this year, implying these tokens gave retail investors the ability to make money from shares of private companies like OpenAI. However, OpenAI denounced the product, pointing out that buyers of these tokens were not actually buying OpenAI stock — tokenized or otherwise. They were simply buying tokens pegged to prices of a private company’s stock.

This new closed-end “Ventures Fund I” is a more classic, mutual fund-style approach. We also don’t yet know when Robinhood’s new fund will be available. Robinhood, which is in a quiet period, declined to comment.

UK smartphone maker Nothing raises $200mn to take on Apple and Samsung

FT • September 15, 2025

Venture

London-based smartphone maker Nothing has raised $200mn in fresh funding as it pushes to become a rare challenger to Apple and Samsung in the global handset market.

The financing values the five-year-old start-up at about $1.8bn including the new capital, giving the company more firepower to develop devices that lean heavily on artificial intelligence.

Founder and chief executive Carl Pei said the group generated about $500mn in sales last year, a rise of roughly 150 per cent from the prior year. Nothing has sold around 7mn devices to date across its smartphones, headphones and earbuds, and is on track to reach about $1bn in revenue this year, he added.
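The figures Pei quotes can be cross-checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not a reported breakdown: it assumes "roughly 150 per cent" means exactly 150%, treats the rounded dollar amounts as exact, and uses variable names invented for illustration.

```python
# Approximate figures quoted in the article, in USD millions.
sales_last_year = 500             # "about $500mn in sales last year"
growth_last_year = 1.50           # "roughly 150 per cent" rise (assumed exact)
revenue_target_this_year = 1000   # "about $1bn in revenue this year"

# A 150% rise means last year's sales were 2.5x the prior year's sales.
implied_prior_year_sales = sales_last_year / (1 + growth_last_year)

# Growth required to move from last year's sales to this year's target.
implied_growth_this_year = revenue_target_this_year / sales_last_year - 1

print(f"Implied prior-year sales: ~${implied_prior_year_sales:.0f}mn")
print(f"Implied growth this year: ~{implied_growth_this_year:.0%}")
```

On these assumptions, sales two years ago were around $200mn, and reaching $1bn this year would mean roughly doubling revenue, a slower pace than the prior year's 150% rise.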

Pei said investors were persuaded by the company’s growth metrics and execution, arguing that the fundraising reflected performance rather than hype.

Nothing has carved out a niche in the cut-throat consumer electronics market with distinctive hardware and user interface design at more accessible prices than its largest rivals, helping it attract younger, design-conscious buyers.

Despite the momentum, the company remains a small player in a market that ships more than a billion smartphones annually, underscoring the scale of the challenge ahead.

The latest round was led by Tiger Global, with participation from Qualcomm Ventures, Indian entrepreneur Nikhil Kamath and existing backers including Tony Fadell and Alphabet’s GV, according to people familiar with the deal.

Pei said the fresh capital would support exploration of AI-native devices that blend software and hardware more tightly, suggesting new product categories may emerge as data and context become central to consumer experiences.

OpenAI surges to first place as Forge's Private Mag 7 hit $1.2 trillion

YouTube • CNBC Television • September 19, 2025

Venture

Overview

The segment highlights that OpenAI has surged to the top position within Forge’s “Private Magnificent 7,” a curated cohort of the most valuable and influential late-stage private companies. The group’s aggregate valuation has reached approximately $1.2 trillion, underscoring the scale private markets have achieved and the degree to which artificial intelligence is driving investor interest. The discussion frames this milestone as both a barometer of secondary-market pricing and broader sentiment toward late-stage growth assets amid a renewed risk-on environment.

Key takeaways

OpenAI now ranks first in Forge’s Private Mag 7, reflecting strong market demand for AI leaders in private secondary markets.

The cohort’s combined valuation of about $1.2 trillion signals a recovery and expansion in private-market risk appetite after earlier pullbacks.

Investor interest is concentrating in AI platform companies with visible enterprise adoption, licensing revenue, and ecosystem effects.

Secondary-market prints are increasingly used as reference points for valuation, liquidity planning, and IPO readiness across late-stage portfolios.

The milestone may influence benchmarking for limited partners, crossover funds, and structured liquidity providers.

What the $1.2 trillion figure implies

Crossing the $1.2 trillion threshold suggests that the most watched late-stage private companies are approaching public mega-cap scale collectively, even without traditional IPOs. For allocators, it means a small number of names can have outsized impacts on portfolio valuations, distributions, and capital calls. For founders and employees, higher secondary valuations can unlock more flexible liquidity programs and extend private lifecycles, delaying the need for a public listing while still rewarding early stakeholders.

Why OpenAI leads the pack

OpenAI’s ascent reflects several reinforcing dynamics: enterprise adoption of AI assistants and developer platforms; expanding monetization through subscriptions, APIs, and partnerships; and perceived defensibility from model scale, data flywheels, and distribution. The company also benefits from a broad ecosystem of applications and integrations, which can translate into durable revenue growth expectations. In secondary markets, that narrative often commands premium multiples relative to non-AI peers, propelling OpenAI above other high-profile private companies in this cohort.

Impact on private-market behavior

Forge’s index-level visibility provides a reference for price discovery, which can tighten bid-ask spreads and increase transaction throughput for late-stage shares. As benchmarks rise, more employees and early investors may opt to sell small allocations, increasing float and improving price transparency. Crossover and hedge funds tracking “private-to-public” pipelines may recalibrate models for potential listings, while venture firms could face higher follow-on costs to maintain pro rata in premium AI names.

Risks and what to watch

Concentration risk is elevated: a handful of AI leaders are absorbing disproportionate capital, leaving less room for diversified bets. If revenue ramps or unit economics disappoint, re-rating could be swift given the reliance on forward expectations. Watch for signals such as expanded secondary volumes, new primary rounds with clear price discovery, and indications of public-market readiness (audits, governance upgrades, and profitability pathways). Also monitor broader macro factors—rates, equity multiples, and regulatory scrutiny—that influence late-stage tech valuations and exit timing.

Bottom line

OpenAI’s move to the top of the Private Mag 7 and the cohort’s $1.2 trillion aggregate valuation encapsulate a pivotal moment for late-stage private markets: AI-led names are setting the tone for pricing, liquidity, and exit strategies. For investors, the message is clear—AI platform leaders are the current gravity well of private-market value, but concentrated expectations raise the stakes for execution, transparency, and eventual public-market performance.

IPOs Fire Up Even More in September: This Week It’s Netskope and StubHub

SaaStr • Jason Lemkin • September 19, 2025

Venture

The IPO Market Has Awoken: This Week, StubHub and Netskope Lead Tech’s Return to Public Markets

After years of IPO drought, September 2025 delivered the most concentrated IPO activity since November 2021, with over $6 billion raised across multiple landmark debuts. From last week’s historic $4.4 billion surge featuring Klarna’s $1.37B fintech comeback to this week’s dual $800M+ offerings from StubHub and Netskope, September represents more than isolated success stories—it signals the definitive return of risk appetite and growth stock demand that B2B leaders have been waiting for.

The big takeaway? The window is open again, but only for companies with real fundamentals. September’s IPO calendar tells the story of a market that rewards disciplined valuations, proven business models, and sustainable unit economics over pure growth narratives.

September 2025: The Great IPO Awakening

September 2025 will be remembered as the month the tech IPO market truly awakened for the first time since late 2021. With over $6 billion raised across landmark debuts, this represents the most concentrated IPO activity since the 2021 peak, but with fundamentally different characteristics.

IPO Timeline: 2025 Recovery vs. 2021 Boom

2025 Major IPOs by Month

2025 Total Through September: $25+ billion raised, 246 total IPOs

Key Differences from 2021:

Conservative Pricing: Most companies priced at or above range but with realistic valuations

Fundamental Focus: Revenue growth, path to profitability, and unit economics matter