A reminder for new readers. That Was The Week includes a collection of my selected readings on critical issues in tech, startups, and venture capital. I selected the articles because they are of interest to me. The selections often include things I entirely disagree with. But they express common opinions, or they provoke me to think. The articles are sometimes long snippets to convey why they are of interest. Click on the headline, contents link or the ‘More’ link at the bottom of each piece to go to the original. I express my point of view in the editorial and the weekly video below.

Congratulations to this week’s chosen creators: @mariogabriele, @edzitron, @JasonrShuman, @alexeheath, @pierce, @kyle_l_wiggers, @lawrencebonk, @alex, @PeterJ_Walker, @psawers, @bheater, @levie, @nikitabier

Contents

Editorial: The Robots Are Coming

Mark Zuckerberg on Llama 3 and Open Source

Aaron Levie and Nikita Bier on Lina Khan

Editorial

Mario Gabriele inspires this week’s headline and lead essay. His essay is titled “The Robotics Renaissance” and argues that we are about to enter an “automation supercycle”.

We are entering a robotics renaissance.

Over the next decade, intelligent, embodied androids will permeate industrial activities and aspects of everyday life, from assembling cars to folding laundry. The impending robot “population boom” is the result of technological breakthroughs, intense investor appetite, labor cost arbitrage, and long-standing demographic trends.

His essay highlights the shortage of working-age people caused by aging populations:

America doesn’t have a large enough working-age population to support its young and elderly. The increase in the US’s “dependency ratio” is not unique – many other advanced countries face similar slumps. While immigration is one solution, robots are another.

The catalyst for the essay is that recent developments in AI and the dexterity of robots are coming together to create some unique opportunities.

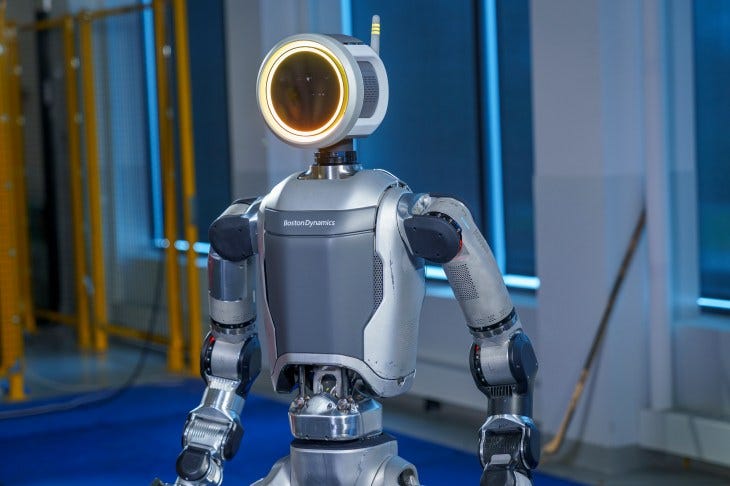

Boston Dynamics demonstrated a highly mobile and agile prototype, which is featured below as “Startup of the Week”:

The emergence of generalized robots coincides with the steady march of AI. Meta released its Llama 3 models as open source last week, including the all-important model weights. Developers now have free access to models that cost upwards of $10 billion to train. In this week’s earnings call, Meta stated its intent to spend big on future AI models, leading to a punishing decline in its share price.

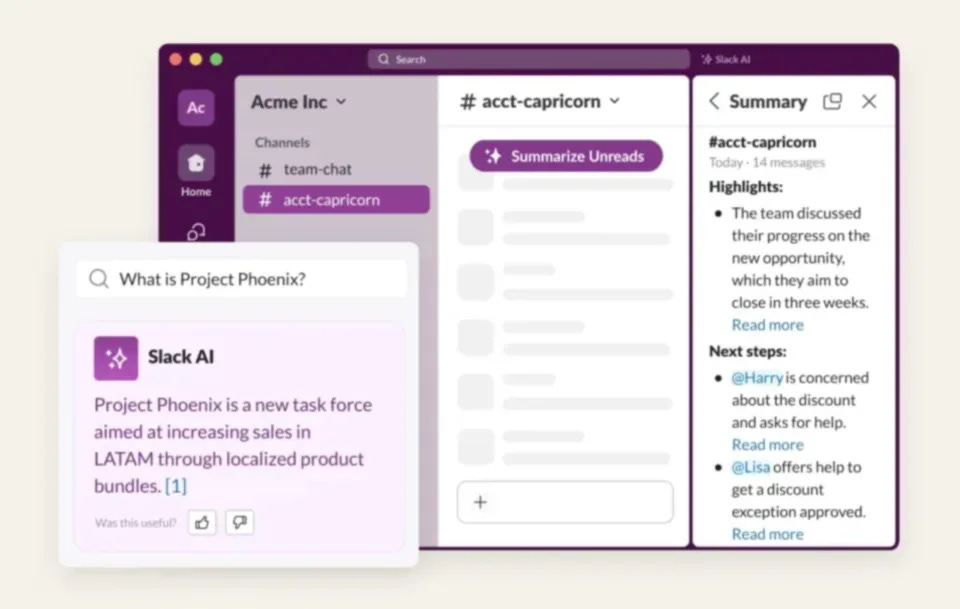

Trends such as Snowflake’s release of a coding co-pilot and Slack’s integration of AI demonstrate how quickly these new capabilities are being made available to the entire planet.

So, yes, it is quite likely that robots are coming. And they are likely to take on tasks that today require humans alone. I consider this a win for humanity in our never-ending goal to reduce the working day while boosting our living standards. For that to be true, the innovation has to be maintained, and the benefits of the wealth created have to be socially distributed.

The former is inevitable, the latter less so.

The robots are coming, and I bet TikTok will not leave the USA soon. The passage of a bill forcing the sale of TikTok, with the threat of a ban if the sale does not happen, will likely be challenged in court. TikTok will prevail. You heard it here first.

Essays of the Week

The Robotics Renaissance

Why we’re entering into an automation supercycle.

MARIO GABRIELE, APR 25, 2024

We are entering a robotics renaissance.

Over the next decade, intelligent, embodied androids will permeate industrial activities and aspects of everyday life, from assembling cars to folding laundry. The impending robot “population boom” is the result of technological breakthroughs, intense investor appetite, labor cost arbitrage, and long-standing demographic trends.

Why is this happening?

Modern AI models have changed the game

The same deep learning and neural techniques that have powered the generative AI revolution are helping robotics overcome one of its major limitations: a lack of training data. In the past, if you wanted to teach a robot to pick up a yellow block and place it in a blue bin, it had to learn through trial and error or extensive videos. New foundation models allow robots to learn from online text, images, and videos.

Generative AI can create new data to tackle edge cases

One of the challenges of operating in the physical world is the sheer volume of strange, niche possibilities. While AI thrives in decoding the fixed rules of chess or language patterns, it has historically struggled to account for real-world edge cases. Not only do new models help with this kind of “thinking,” they can also create new, relevant data to train on. Researchers can effectively train robots on visual scenarios created by generative AI.

Investors are looking for the next bonanza

Venture capitalists have aggressively invested in AI over the past few years, pouring billions into companies making new foundation models or building applications on top of them. As some of those well-capitalized startups close their doors, there is a growing itch to find the next great AI opportunity. Robotics is the beneficiary.

Robots aren’t that fussy about pay

A recent MIT study attempted to paint a rosy portrait of AI’s impact on the workforce by saying “only” 23% of wages paid would be “economically viable” to automate. That’s a lot! That figure will only increase as hardware costs decline and robots become increasingly intelligent. California’s decision to raise the minimum wage for fast food workers to $20 an hour and other similar initiatives may accelerate the shift.

Major economies lack sufficient working humans

America doesn’t have a large enough working-age population to support its young and elderly. The increase in the US’s “dependency ratio” is not unique – many other advanced countries face similar slumps. While immigration is one solution, robots are another.

What can new robots do?

Google’s RT-2 model best demonstrates what this new class of robotics is capable of. When an engineer prompted Google’s test robot to select the “extinct animal” from a range of dollar store figurines it had never seen before, it correctly selected a plastic dinosaur. The model’s ability to adapt to a new environment with new variables, generalize, and reason is novel and opens up huge possibilities. Modern robots are no longer constrained to a narrow pre-programmed problem.

Above: Google's RT-2 in action (Google)

Beyond RT-2, a range of robotics makers are demonstrating commercial utility across sectors from logistics to manufacturing to construction.

Who are the major players?

Google’s cutting-edge RT-2 model demonstrates the search monolith’s impressive AI research bona fides. Its DeepMind division is driving the technology forward and will continue to play a vital role. While we wouldn’t expect Google worker robots any time soon, autonomous vehicle subsidiary Waymo illustrates how the company applies artificial intelligence in the physical world.

OpenAI disbanded its robotics division in 2021, citing a lack of progress. It remains an important player in the space, investing in startups like Figure and Physical Intelligence. After being cut loose, OpenAI’s robotics team founded Covariant, a maker of a robotics “brain” and physical setups for sorting items, assembling them into kits, and more.

Amazon is a long-time customer and acquirer in the robotics space. After acquiring Kiva Systems for $775 million in 2012, the firm created a dedicated Amazon Robotics division that has continued investing in the space and trialing new solutions. Amazon is conducting one such trial with Agility, maker of the “Digit” humanoid robot, which it touts as “the first human-centric, multi-purpose robot made for logistics work.” Agility seems confident there’s demand for its products, announcing a plan to produce 10,000 per year via a new “RoboFab” in Oregon.

Tesla’s “Optimus” robot attracted skepticism when first unveiled, but it seems to be improving quickly. Earlier this year, Elon Musk shared a video of Optimus gently folding a shirt, displaying its dexterity. On a recent earnings call, Musk said he expected Optimus to be operational in Tesla’s factories before the end of the year and available for purchase by 2025. (Musk’s timelines are not always the most reliable.) Given Musk’s gift for galvanizing technical talent to solve a hard problem, it would be foolish to underestimate his efforts.

Figure, 1X, Agility, Apptronik, and Sanctuary are all building humanoid robots, some of which are finally ready to leave their home factory and find gainful employment. Figure’s 5’6” worker is headed to BMW’s production lines and, as mentioned, Agility is sending its units off to Amazon. Apptronik’s androids are slated for a longer journey: via a partnership with NASA, the company intends to send its robots to space to assist astronauts.

What about Boston Dynamics?

Though no one makes better sizzle reels of robot parkour, Boston Dynamics has struggled to find commercial applications for its dexterous machines. In many ways, they represent the pinnacle of old-world robotics: extremely well-tuned machines that struggle to generalize or solve new problems.

Who else should you keep an eye on?

Monumental is reversing the construction industry’s “productivity collapse” with non-humanoid robots. The Netherlands-based startup recently raised $25 million to develop its automated bricklayers further and disrupt this massive, sleepy industry. In the long run, Monumental hopes its technology will reduce housing costs and reignite the production of beautiful, well-crafted buildings.

..Lots More

The Man Who Killed Google Search

EDWARD ZITRON, APR 23, 2024, 14 MIN READ

This is the story of how Google Search died, and the people responsible for killing it.

The story begins on February 5th 2019, when Ben Gomes, Google’s head of search, had a problem. Jerry Dischler, then the VP and General Manager of Ads at Google, and Shiv Venkataraman, then the VP of Engineering, Search and Ads on Google properties, had called a “code yellow” for search revenue due to, and I quote, “steady weakness in the daily numbers” and a likeliness that it would end the quarter significantly behind.

For those unfamiliar with Google’s internal scientology-esque jargon, let me explain. A “code yellow” isn’t, as you might think, a crisis of moderate severity. The yellow, according to Steven Levy’s tell-all book about Google, refers to — and I promise that I’m not making this up — the color of a tank top that former VP of Engineering Wayne Rosing used to wear during his time at the company. It’s essentially the equivalent of DEFCON 1 and activates, as Levy explained, a war room-like situation where workers are pulled from their desks and into a conference room where they tackle the problem as a top priority. Any other projects or concerns are sidelined.

In emails released as part of the Department of Justice’s antitrust case against Google, Dischler laid out several contributing factors — search query growth was “significantly behind forecast,” the “timing” of revenue launches was significantly behind, and a vague worry that “several advertiser-specific and sector weaknesses” existed in search.

I should note that I’ve previously — and erroneously — referred to the “code yellow” as something that Gomes raised as a means of calling attention to the proximity of Google’s ads side getting too close to search. The truth is much grimmer — the Code Yellow was the rumble of the Rot Economy, with Google’s revenue arm sounding the alarm that its golden goose wasn’t laying enough eggs. Gomes, a Googler of 19 years that built the foundation of modern search engines, should go down as one of the few people in tech that actually fought for a real principle, destroyed by and replaced with Prabhakar Raghavan, a computer scientist class traitor that sided with the management consultancy sect. More confusingly, one of the problems was that there was insufficient growth in “queries,” as in the amount of things people were asking Google. It’s a bit like if Ford decided that things were going poorly because drivers weren’t putting enough miles on their trucks.

Anyway, a few days beforehand on February 1 2019, Kristen Gil, then Google’s VP Business Finance Officer, had emailed Shashi Thakur, then Google’s VP of Engineering, Search and Discover, saying that the ads team had been considering a “code yellow” to “close the search gap [it was] seeing,” vaguely referring to how critical that growth was to an unnamed “company plan.” To be clear, this email was in response to Thakur stating that there is “nothing” that the search team could do to operate at the fidelity of growth that ads had demanded.

(Editor’s note: If you read those emails, start from the bottom and work your way up).

Shashi forwarded the email to Gomes, asking if there was any way to discuss this with Sundar Pichai, Google’s CEO, and declaring that there was no way he’d sign up to a “high fidelity” business metric for daily active users on search. Thakur also said something that I’ve been thinking about constantly since I read these emails: that there was a good reason that Google’s founders separated search from ads.

On February 2, 2019, just one day later, Thakur and Gomes shared their anxieties with Nick Fox, a Vice President of Search and Google Assistant, entering a multiple-day-long debate about Google’s sudden lust for growth. The thread is a dark window into the world of growth-focused tech, where Thakur listed the multiple points of disconnection between the ads and search teams, discussing how the search team wasn’t able to finely optimize engagement on Google without “hacking engagement,” a term that means effectively tricking users into spending more time on a site, and that doing so would lead them to “abandon work on efficient journeys.” In one email, Fox adds that there was a “pretty big disconnect between what finance and ads want” and what search was doing.

When Gomes pushed back on the multiple requests for growth, Fox added that all three of them were responsible for search, that search was “the revenue engine of the company,” and that bartering with the ads and finance teams was potentially “the new reality of their jobs.”

On February 6th 2019, Gomes said that he believed that search was “getting too close to the money,” and ended his email by saying that he was “concerned that growth is all that Google was thinking about.”

On March 22 2019, Google VP of Product Management Darshan Kantak would declare the end of the code yellow. The thread mostly consisted of congratulatory emails until Gomes responded congratulating the team, saying that the plans architected as part of the code would do well throughout the year.

Prabhakar Raghavan, then Google’s Head of Ads and the true mastermind behind the code yellow, would respond curtly, saying that the current revenue targets were addressed “by heroic RPM engineering” and that “core query softness continued without mitigation” — a very clunky way of saying that despite these changes, query growth wasn’t happening.

Big Winners and Bold Concentration: Unveiling the Secret Portfolio Returns of Leading Venture Funds

Jason Shuman

General Partner at Primary

April 22, 2024

An exclusive inside look into how funds who have returned more than 10X to LPs make and lose money.

In 2015, Chris Dixon wrote a post in partnership with Horsley Bridge about the Babe Ruth Effect in Venture Capital. Last year I shined some light on that data with the following tweet.

The overwhelming response led me to reach out to some of our own Limited Partners (LPs) to pull some updated data on the Power Law in VC and shine some light on the differences between Good Funds they’ve looked at (3.53X MOIC average) vs. Great (17.95X MOIC average, all over 10X) funds. This dataset incorporated returns data from more than 5,700 companies and more than 150 mature VC funds.

The reality is that venture math is not spoken about nearly enough. More specifically, VCs don’t discuss the required unicorn or home run hit rate that drives top decile returns OR the concentration of capital and returns that is prevalent amongst the top performing managers.

While a new crop of managers have entered the world of seed investing and many push the narrative of it being a game of luck, some of the data below might argue otherwise.

I want to thank the team at Stepstone, one of the top institutional LPs in the industry who have been incredible partners to us, for sharing some of their anonymized data with me to help drive the industry forward.

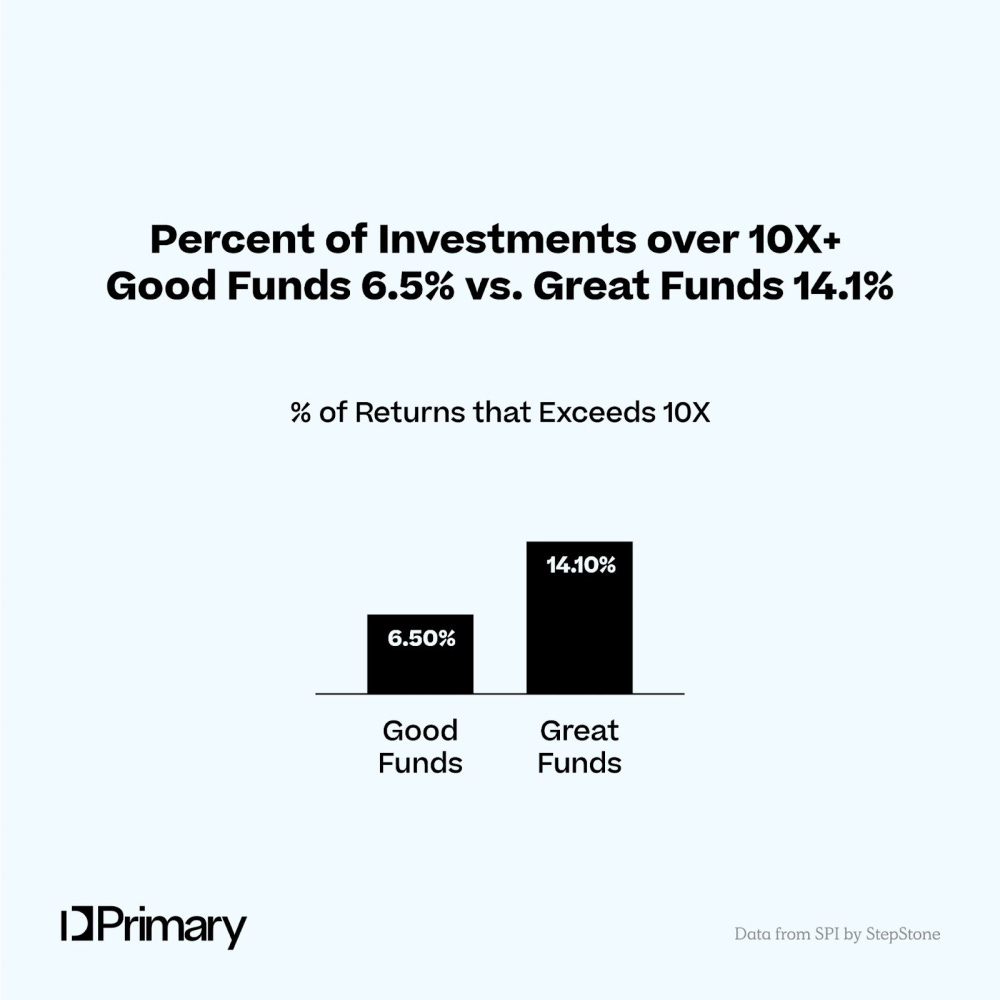

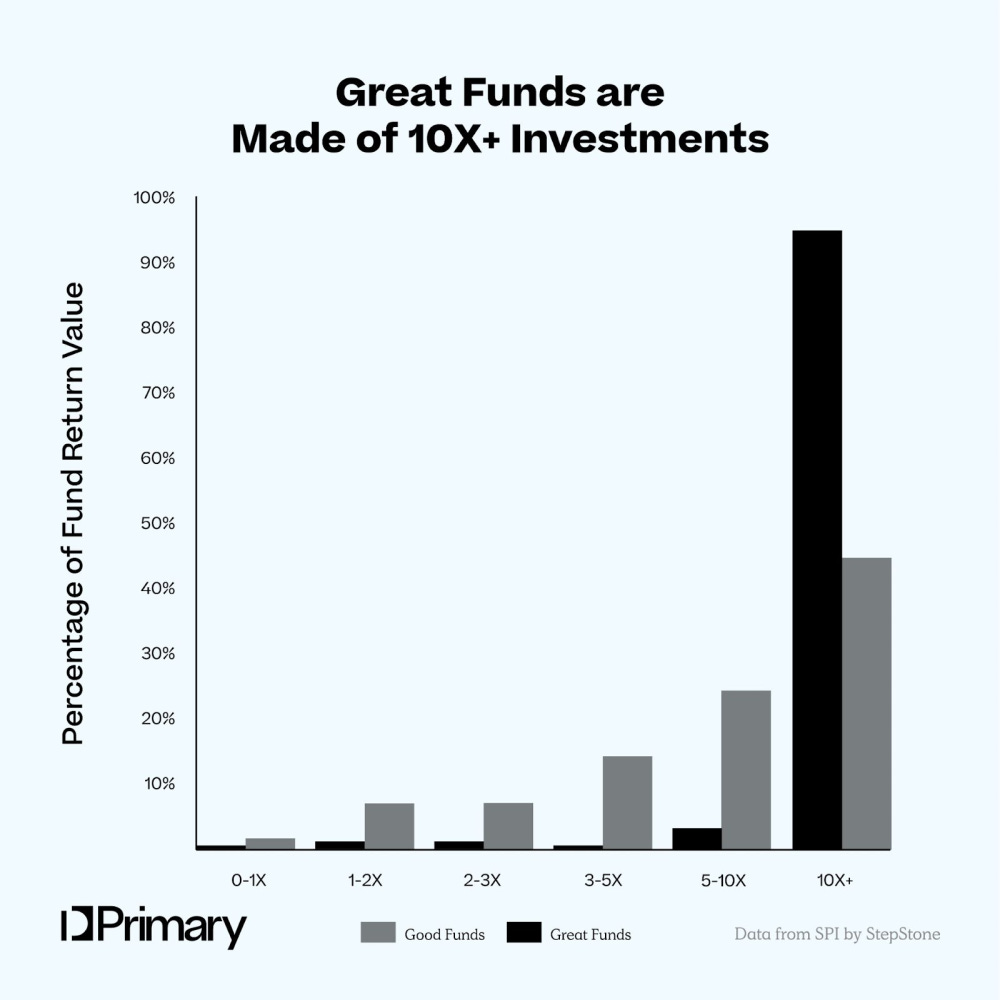

Great Funds hit home runs over 2X more than Good Funds

10X+ funds get 10X+ returns on 14.1% of their companies.

When we’ve modeled out how to return 5X net to LPs, meaning how much you send home to LPs after fees and carry, we’ve found time and time again that your unicorn hit rate needs to be close to 10-15%.

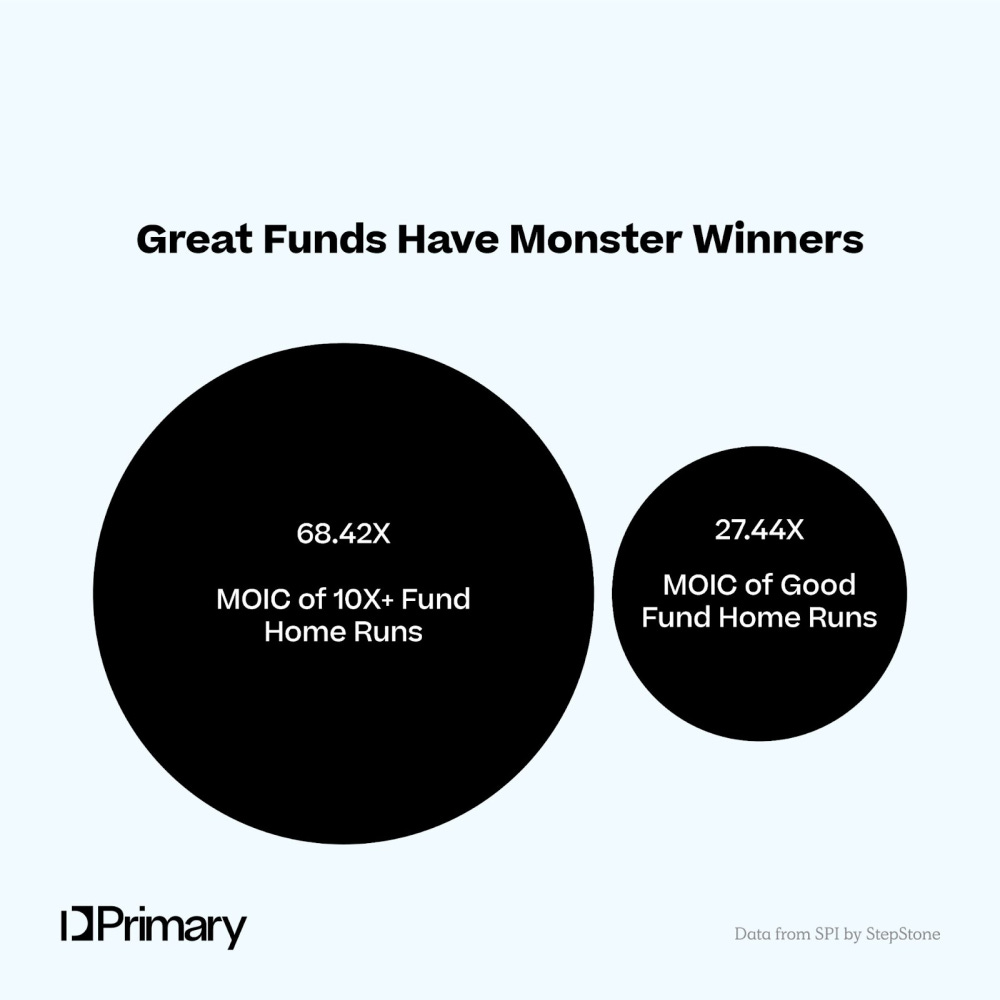

And Great Fund “Winners” are nearly 2.5X larger than Good Fund winners

Great Funds see an average MOIC of 68.42X on their 10X+ investments vs. Good Funds at 27.44X. This shows that not only are they winning, but they’re winning big.

While our analysis shows that a 10-15% unicorn hit rate is how you get to 5X net funds, the obvious outlier is when you are able to invest in something that becomes a decacorn or something worth multiple billions of dollars which could end up being equivalent of hitting 2, 3 or 10 unicorns!

Nearly all the returns are driven by the 10X+ investments for Great Funds, proving the VC power law…

91% of Great Fund Returns come from 14% of the Companies

Similar to Chris Dixon’s dataset from Horsley Bridge, we find that Great Funds not only have more home runs, they have home runs of greater magnitude. What this chart shows is the Power Law of venture capital in full effect.

Great funds get 91% of their returns from 14% of their investments, while Good funds only get 40.3% of their returns from 10X+ investments, 25.1% from 5-10X and 15% from 3-5X.

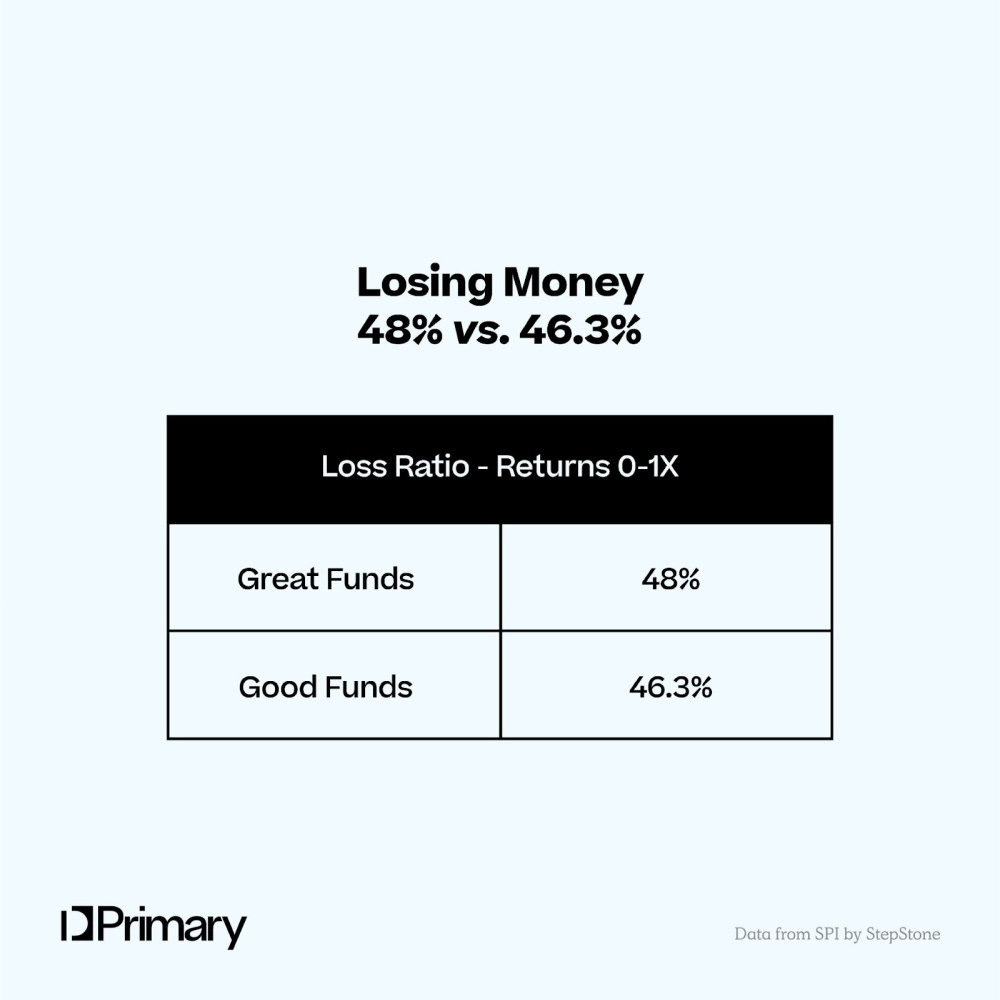

Across the board early-stage VCs are losing money almost half the time

Great Funds lose money just a little bit more than Good Funds

Another interesting stat is loss ratio. What we found in the data is that Great Funds tend to lose money slightly more than good funds, but that both lose money >46% of the time showing us that even the best tend to lose money on nearly half their bets.

Concentrating Capital - Great Funds know how to double down

But one of the most interesting pieces of information we found through our analysis is that Great Funds know how to concentrate capital exceptionally well.

Great Funds invested 38.7% of their capital in 23.1% of their investments, which generated over 5X+. 23.9% of that capital went into their 10X+ investments.
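
The figures quoted above can be cross-checked with a little arithmetic. The sketch below is not from the original article. It assumes the 23.9% is a share of total fund capital (the wording is ambiguous) and that the 68.42X average on 10X+ investments is capital-weighted. The function name `bucket_return_share` is hypothetical.

```python
def bucket_return_share(capital_share: float, bucket_moic: float, fund_moic: float) -> float:
    """Fraction of a fund's total return produced by one bucket of investments.

    Assumes bucket_moic is a capital-weighted average, so that
    capital_share * bucket_moic is the number of "turns" of fund MOIC
    the bucket contributes.
    """
    return capital_share * bucket_moic / fund_moic


# Figures quoted in the article for Great Funds.
GREAT_FUND_MOIC = 17.95      # average fund-level MOIC
GREAT_CAPITAL_IN_10X = 0.239 # assumed: share of total capital in 10X+ investments
GREAT_MOIC_ON_10X = 68.42    # average MOIC on 10X+ investments

great_share = bucket_return_share(GREAT_CAPITAL_IN_10X, GREAT_MOIC_ON_10X, GREAT_FUND_MOIC)
print(f"Share of Great Fund returns from 10X+ investments: {great_share:.1%}")
```

Under those assumptions the result is roughly 91%, which lines up with the headline claim that 91% of Great Fund returns come from their 10X+ investments.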

Good Funds on the other hand invested 18.6% of their capital in 14.4% of their investments that generated 5X+.

Assuming you hold all other variables constant, if the Good Funds had concentrated capital the exact same way that the Great Funds did, their returns would have gone from 3.53X MOIC to >8X MOIC.
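
The article does not spell out how this counterfactual was computed. One way to reproduce it, as a sketch only, is to back out Good-fund multiples for three buckets (10X+, 5-10X, everything else) from the quoted figures, then re-weight them with the Great-fund capital split. The bucket names, the assumption that the quoted multiples are capital-weighted, and the reading of 23.9% as a share of total capital are all assumptions made here.

```python
# Good-fund figures quoted in the article.
GOOD_MOIC = 3.53
RETURN_SHARE_10X = 0.403      # share of returns from 10X+ investments
RETURN_SHARE_5_10X = 0.251    # share of returns from 5-10X investments
MOIC_ON_10X = 27.44           # average MOIC on 10X+ investments
GOOD_CAPITAL_5X_PLUS = 0.186  # share of capital in 5X+ investments

# Great-fund capital weights quoted in the article.
GREAT_CAPITAL_5X_PLUS = 0.387
GREAT_CAPITAL_10X = 0.239     # assumed to be a share of total capital


def good_fund_bucket_moics():
    """Back out capital-weighted MOICs for the three Good-fund buckets."""
    turns_10x = GOOD_MOIC * RETURN_SHARE_10X
    turns_5_10x = GOOD_MOIC * RETURN_SHARE_5_10X
    turns_rest = GOOD_MOIC - turns_10x - turns_5_10x

    # Implied capital in 10X+ deals, given their quoted average multiple.
    capital_10x = turns_10x / MOIC_ON_10X
    capital_5_10x = GOOD_CAPITAL_5X_PLUS - capital_10x
    capital_rest = 1.0 - GOOD_CAPITAL_5X_PLUS

    return {
        "10x_plus": MOIC_ON_10X,
        "5_to_10x": turns_5_10x / capital_5_10x,
        "rest": turns_rest / capital_rest,
    }


def reweighted_moic(bucket_moics, weights):
    """Fund MOIC if capital were spread across buckets according to weights."""
    return sum(weights[name] * bucket_moics[name] for name in weights)


great_weights = {
    "10x_plus": GREAT_CAPITAL_10X,
    "5_to_10x": GREAT_CAPITAL_5X_PLUS - GREAT_CAPITAL_10X,
    "rest": 1.0 - GREAT_CAPITAL_5X_PLUS,
}

counterfactual = reweighted_moic(good_fund_bucket_moics(), great_weights)
print(f"Good funds with Great-fund concentration: {counterfactual:.2f}X")
```

With these inputs the re-weighted multiple lands a little under 8.5X, consistent with the ">8X" figure, though the article's own method may differ.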

This >2X difference in capital concentration can drive some serious improvement in returns (or downside) AND can reflect a manager's ability to both identify their winners early and earn the right to continue to invest in them along the way.

Ultimately, this dataset has left us thinking deeply about the following:

How early can you truly identify your winners and how does that impact your concentration strategy/portfolio construction?

How can you ensure that you'll be able to earn the right to concentrate?

You need to take big swings

You can't be afraid to lose money

Rising seed prices are likely to impact even the best fund returns by a couple of turns.

Video of the Week

AI of the Week

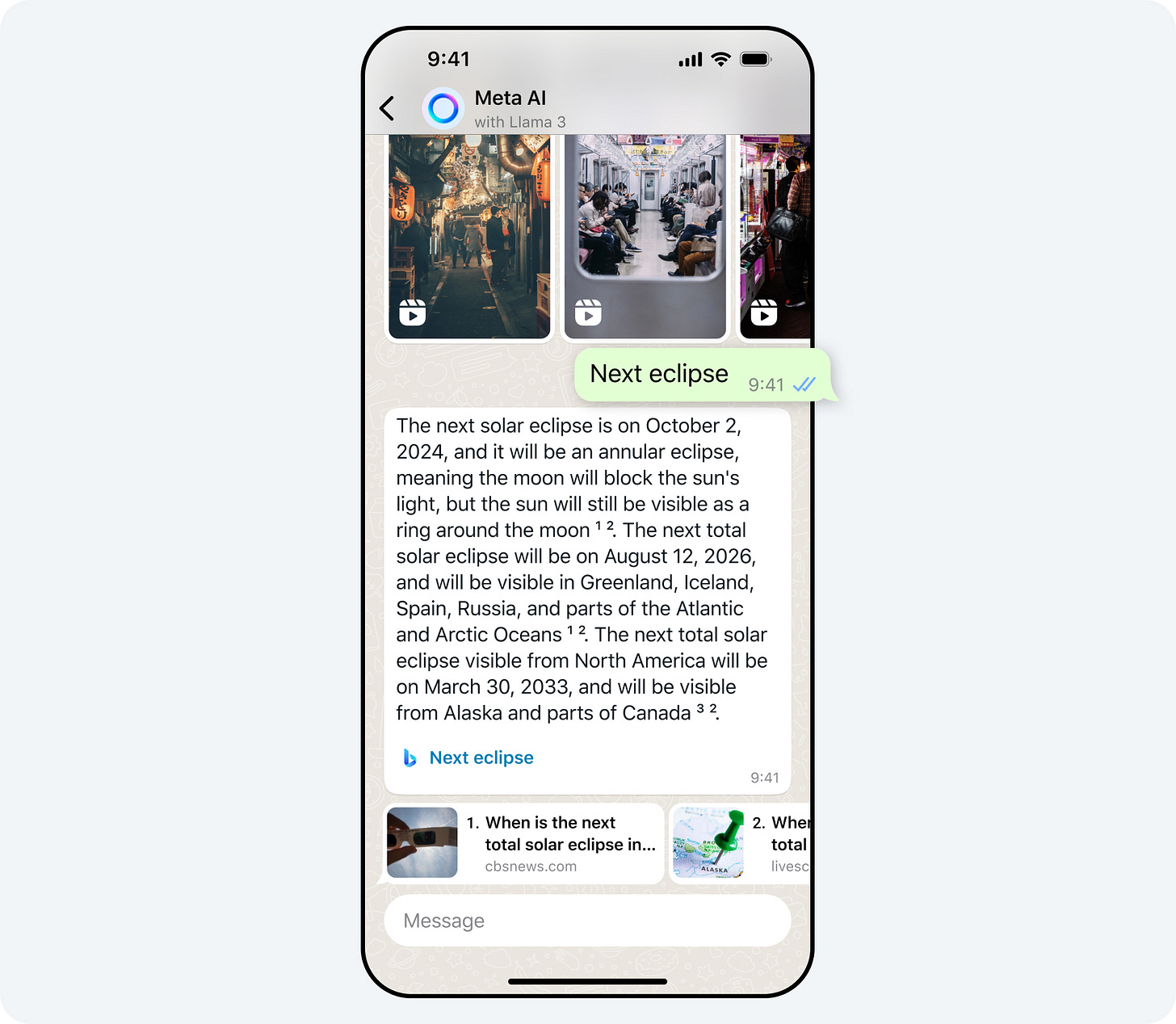

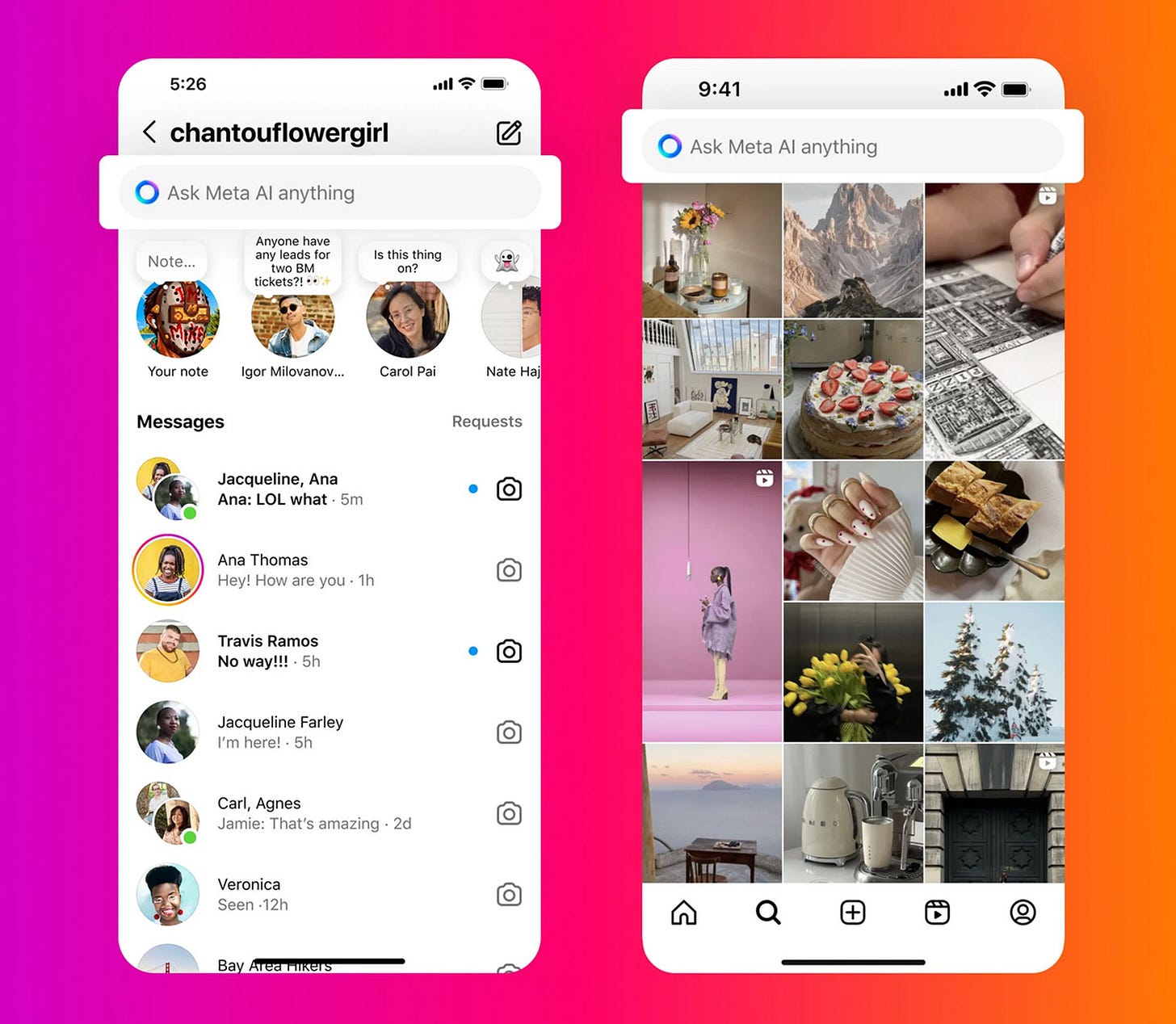

Meet Your New Assistant: Meta AI, Built With Llama 3

April 18, 2024

Takeaways

A better assistant: Thanks to our latest advances with Meta Llama 3, we believe Meta AI is now the most intelligent AI assistant you can use for free – and it’s available in more countries across our apps to help you plan dinner based on what’s in your fridge, study for your test and so much more.

More info: You can use Meta AI in feed, chats, search and more across our apps to get things done and access real-time information, without having to leave the app you’re using.

Faster images: Meta AI’s image generation is now faster, producing images as you type, so you can create album artwork for your band, decor inspiration for your apartment, animated custom GIFs and more.

Built with Meta Llama 3, Meta AI is one of the world’s leading AI assistants, already on your phone, in your pocket for free. And it’s starting to go global with more features. You can use Meta AI on Facebook, Instagram, WhatsApp and Messenger to get things done, learn, create and connect with the things that matter to you. We first announced Meta AI at last year’s Connect, and now, more people around the world can interact with it in more ways than ever before.

We’re rolling out Meta AI in English in more than a dozen countries outside of the US. Now, people will have access to Meta AI in Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia and Zimbabwe — and we’re just getting started.

Thanks to our latest advances with Meta Llama 3, Meta AI is smarter, faster and more fun than ever before.

Make Meta AI Work for You

Planning a night out with friends? Ask Meta AI to recommend a restaurant with sunset views and vegan options. Organizing a weekend getaway? Ask Meta AI to find concerts for Saturday night. Cramming for a test? Ask Meta AI to explain how hereditary traits work. Moving into your first apartment? Ask Meta AI to “imagine” the aesthetic you’re going for and it will generate some inspiration photos for your furniture shopping.

We want Meta AI to be available when you’re trying to get things done at your computer too, so we’re rolling out meta.ai (the website) today. Struggling with a math problem? Need help making a work email sound more professional? Meta AI can help! And you can log in to save your conversations with Meta AI for future reference.

Seamless Search Integration in the Apps You Know and Love

Meta AI is also available in search across Facebook, Instagram, WhatsApp and Messenger. You can access real-time information from across the web without having to bounce between apps. Let’s say you’re planning a ski trip in your Messenger group chat. Using search in Messenger you can ask Meta AI to find flights to Colorado from New York and figure out the least crowded weekends to go – all without leaving the Messenger app.

Meta AI in Feed

You can also access Meta AI when you’re scrolling through your Facebook Feed. Come across a post you’re interested in? You can ask Meta AI for more info right from the post. So if you see a photo of the northern lights in Iceland, you can ask Meta AI what time of year is best to check out the aurora borealis.

Spark Your Creativity With Meta AI’s Imagine Feature

We’re making image generation faster, so you can create images from text in real-time using Meta AI’s Imagine feature. We’re starting to roll this out today in beta on WhatsApp and the Meta AI web experience in the US.

You’ll see an image appear as you start typing — and it’ll change with every few letters typed, so you can watch as Meta AI brings your vision to life.

The images generated are also now sharper and higher quality, with a better ability to include text in images. From album artwork, to wedding signage, birthday decor and outfit inspo, Meta AI can generate images that bring your vision to life faster and better than ever before. It’ll even provide helpful prompts with ideas to change the image, so you can keep iterating from that initial starting point.

And it doesn’t stop there. Found an image you love? Ask Meta AI to animate it, iterate on it in a new style or even turn it into a GIF to share with friends.

With our most powerful large language model under the hood, Meta AI is better than ever. We’re excited to share our next-generation assistant with even more people and can’t wait to see how it enhances people’s lives. While these updates are specific to Meta AI in Facebook, Instagram, WhatsApp, Messenger and on the web, Meta AI is also available in the US on Ray-Ban Meta smart glasses — and coming to Meta Quest. We’ll have more to share in the weeks to come, so stay tuned!

Meta’s battle with ChatGPT begins now

Meta’s AI assistant is being put everywhere across Instagram, WhatsApp, and Facebook. Meanwhile, the company’s next major AI model, Llama 3, has arrived.

By Alex Heath, a deputy editor and author of the Command Line newsletter. He has over a decade of experience covering the tech industry.

Apr 18, 2024, 8:59 AM PDT

ChatGPT kicked off the AI chatbot race. Meta is determined to win it.

To that end: the Meta AI assistant, introduced last September, is now being integrated into the search box of Instagram, Facebook, WhatsApp, and Messenger. It’s also going to start appearing directly in the main Facebook feed. You can still chat with it in the messaging inboxes of Meta’s apps. And for the first time, it’s now accessible via a standalone website at Meta.ai.

For Meta’s assistant to have any hope of being a real ChatGPT competitor, the underlying model has to be just as good, if not better. That’s why Meta is also announcing Llama 3, the next major version of its foundational open-source model. Meta says that Llama 3 outperforms competing models of its class on key benchmarks and that it’s better across the board at tasks like coding. Two smaller Llama 3 models are being released today, both in the Meta AI assistant and to outside developers, while a much larger, multimodal version is arriving in the coming months.

The goal is for Meta AI to be “the most intelligent AI assistant that people can freely use across the world,” CEO Mark Zuckerberg tells me. “With Llama 3, we basically feel like we’re there.”

In the US and a handful of other countries, you’re going to start seeing Meta AI in more places, including Instagram’s search bar.

The Meta AI assistant is the only chatbot I know of that now integrates real-time search results from both Bing and Google — Meta decides when either search engine is used to answer a prompt. Its image generation has also been upgraded to create animations (essentially GIFs), and high-res images now generate on the fly as you type. Meanwhile, a Perplexity-inspired panel of prompt suggestions when you first open a chat window is meant to “demystify what a general-purpose chatbot can do,” says Meta’s head of generative AI, Ahmad Al-Dahle.

While it has only been available in the US to date, Meta AI is now being rolled out in English to Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe, with more countries and languages coming. It’s a far cry from Zuckerberg’s pitch of a truly global AI assistant, but this wider release gets Meta AI closer to eventually reaching the company’s more than 3 billion daily users.

There’s a comparison to be made here to Stories and Reels, two era-defining social media formats that were both pioneered by upstarts — Snapchat and TikTok, respectively — and then tacked onto Meta’s apps in a way that made them even more ubiquitous.

Some would call this shameless copying. But it’s clear that Zuckerberg sees Meta’s vast scale, coupled with its ability to quickly adapt to new trends, as its competitive edge. And he’s following that same playbook with Meta AI by putting it everywhere and investing aggressively in foundational models.

“I don’t think that today many people really think about Meta AI when they think about the main AI assistants that people use,” he admits. “But I think that this is the moment where we’re really going to start introducing it to a lot of people, and I expect it to be quite a major product.”

“Compete with everything out there”

The new web app for Meta AI.

Image: Meta

Today Meta is introducing two open-source Llama 3 models for outside developers to freely use. There’s an 8-billion parameter model and a 70-billion parameter one, both of which will be accessible on all the major cloud providers. (At a very high level, parameters dictate the complexity of a model and its capacity to learn from its training data.)

Google is combining its Android and hardware teams — and it’s all about AI

Under Rick Osterloh, a new platforms and devices team will be dedicated to bringing AI to your phone, your TV, and everything else that runs Android.

By David Pierce, editor-at-large and Vergecast co-host with over a decade of experience covering consumer tech. Previously, at Protocol, The Wall Street Journal, and Wired.

Apr 18, 2024, 8:26 AM PDT

AI is taking over at Google, and the company is changing in big ways to try to make it happen even faster. Google CEO Sundar Pichai announced substantial internal reorganizations on Thursday, including the creation of a new team called “Platforms and Devices” that will oversee all of Google’s Pixel products, all of Android, Chrome, ChromeOS, Photos, and more. The team will be run by Rick Osterloh, who was previously the SVP of devices and services, overseeing all of Google’s hardware efforts. Hiroshi Lockheimer, the longtime head of Android, Chrome, and ChromeOS, will be taking on other projects inside of Google and Alphabet.

This is a huge change for Google, and it likely won’t be the last one. There’s only one reason for all of it, Osterloh says: AI. “This is not a secret, right?” he says. Consolidating teams “helps us to be able to do full-stack innovation when that’s necessary,” Osterloh says. He uses the example of the Pixel camera: “You had to have deep knowledge of the hardware systems, from the sensors to the ISPs, to all layers of the software stack. And, at the time, all the early HDR and ML models that were doing camera processing… and I think that hardware / software / AI integration really showed how AI could totally transform a user experience. That was important. And it’s even more true today.”

Osterloh offers GPUs as another example: Google has poured resources into its Tensor products to keep up with Nvidia and others, and keeping hardware and software close together and aware of each other’s work makes it easier to improve quickly. This is the sort of thing you can try to do with two teams, he says, but when it’s a single team with a single leader and a single goal, everything can just be faster.

As we talk, Osterloh and Lockheimer are seated in Lockheimer’s office, talking to me on Google Meet. The two men have been friends and colleagues for decades and swear up and down that this change is not the result of some internal power struggle. (Even when I bring it up as a joke, they both loudly and quickly shoot me down.) They’ve been talking with Pichai about making this shift for more than two years, Lockheimer says, and now finally felt like the right time.

Snowflake releases a flagship generative AI model of its own

Kyle Wiggers (@kyle_l_wiggers), 6:00 AM PDT, April 24, 2024

All-around, highly generalizable generative AI models were the name of the game once, and they arguably still are. But increasingly, as cloud vendors large and small join the generative AI fray, we’re seeing a new crop of models focused on the deepest-pocketed potential customers: the enterprise.

Case in point: Snowflake, the cloud computing company, today unveiled Arctic LLM, a generative AI model that’s described as “enterprise-grade.” Available under an Apache 2.0 license, Arctic LLM is optimized for “enterprise workloads,” including generating database code, Snowflake says, and is free for research and commercial use.

“I think this is going to be the foundation that’s going to let us — Snowflake — and our customers build enterprise-grade products and actually begin to realize the promise and value of AI,” CEO Sridhar Ramaswamy said in a press briefing. “You should think of this very much as our first, but big, step in the world of generative AI, with lots more to come.”

An enterprise model

My colleague Devin Coldewey recently wrote about how there’s no end in sight to the onslaught of generative AI models. I recommend you read his piece, but the gist is: Models are an easy way for vendors to drum up excitement for their R&D and they also serve as a funnel to their product ecosystems (e.g. model hosting, fine-tuning and so on).

Arctic LLM is no different. Snowflake’s flagship model in a family of generative AI models called Arctic, Arctic LLM — which took around three months, 1,000 GPUs and $2 million to train — arrives on the heels of Databricks’ DBRX, a generative AI model also marketed as optimized for the enterprise space.

Slack rolls out its AI tools to all paying customers

The features include thread summaries and conversational search.

Lawrence Bonk, Thu, Apr 18, 2024

Slack just rolled out its AI tools to all paying users, after releasing them to a select subset of customers earlier this year. The company’s been teasing these features since last year and, well, now they’re here.

The AI auto-generates channel recaps to give people key highlights of stuff they missed while away from the keyboard or smartphone, for keeping track of important work stuff and office in-jokes. Slack says the algorithm that generates these recaps is smart enough to pull content from the various topics discussed in the channel. This means that you’ll get a paragraph on how plans are going for Jenny’s cake party in the conference room and another on sales trends or whatever.

There’s something similar available for threads, which are smaller conversations between one or a few people. The tool will recap any of these threads into a short paragraph. Customers can also opt into a daily recap for any channel or thread, delivered each morning.

Another interesting feature is conversational search. The various Slack channels stretch on forever and it can be tough to find the right chat when necessary. This allows people to ask questions using natural language, with the algorithm doing the actual searching.

These tools aren’t just for English speakers, as Slack AI now offers Japanese and Spanish language support. Slack says it’ll soon integrate some of its most-used third-party apps into the AI ecosystem. To that end, integration with Salesforce’s Einstein Copilot is coming in the near future.

It remains to be seen if these tools will actually be helpful or if they’re just more excuses to put the letters “AI” in promotional materials. I’ve been on Slack a long time and I haven’t encountered too many scenarios in which I’d need a series of auto-generated recaps, as longer conversations are typically relegated to one-on-one meetings, emails or video streams. However, maybe this will change how people use the service.

News of the Week

House Passes TikTok Ban Bill: What It Means for TikTok Users

APR 22, 2024, JEREMY GRAY

The House of Representatives, led by a Republican majority, passed legislation on Saturday that will ban the popular social media app TikTok in the United States if its Chinese owner does not sell its stake in the next year.

The TikTok ban, part of a larger foreign aid package, will go to the Senate. If passed there, it will then land on President Biden’s desk. The package includes support for Ukraine and Israel and is a public priority for Biden and other leading Democrats. The Senate is expected to pass the bill, although it may include some

This latest measure follows an earlier attempt to force ByteDance Ltd. to sell off its interest in TikTok, which also passed the House before stalling in the Senate.

Like that earlier legislation, the new one received overwhelming bipartisan support in the House of Representatives. The new measure passed through the House by a 360 to 58 vote — margins not often seen in the heavily polarized House.

Even though the bill has passed the House and is expected to pass the Senate, its path through Congress is but one hurdle. TikTok’s parent company is expected to present legal challenges to any legislation that forces it to sell its share in the platform.

“We will not stop fighting and advocating for you,” said TikTok CEO Shou Zi Chew in a video posted on TikTok last month. “We will continue to do all we can, including exercising our legal rights, to protect this amazing platform that we have built with you.”

Following the House’s vote on Saturday, TikTok released a new statement on Sunday, emphasizing the threat to free speech that a forced sale may pose.

“It is unfortunate that the House of Representatives is using the cover of important foreign and humanitarian assistance to once again jam through a bill that would trample the free speech rights of 170 million Americans,” TikTok explains in a statement.

This echoes the company’s stance on state-level attempts to ban or curtail access to TikTok when ByteDance also relied on First Amendment-type arguments.

The American Civil Liberties Union agrees with ByteDance, having urged the Senate to reject the TikTok bill in March.

“Make no mistake: the House’s TikTok bill is a ban, and it’s blatant censorship. Today, the House of Representatives voted to violate the First Amendment rights of more than half of the country. The Senate must reject this unconstitutional and reckless bill,” said Jenna Leventoff, senior policy counsel at ACLU, in March.

Unsurprisingly, lawmakers in favor of forcing China-based ByteDance to sell its stake in TikTok argue that the move has little to do with censorship and free speech but is concerned entirely with national security. This is something legislators have been trying to achieve for more than a year.

However, given that some bill proponents, such as Democratic Senator Mark Warner, chairman of the Senate Intelligence Committee, argue that TikTok can be leveraged as a propaganda tool by the Chinese government, especially against young Americans, the issues of national security and free speech are clearly intertwined.

Legal experts expect that if challenged, lawmakers may struggle to defend their bill. They must show that TikTok’s parent company, ByteDance, does pose a legitimate national security threat and that any potential First Amendment violations are due to provable government interests. Lawmakers must also demonstrate that their legislation is very narrowly focused to deal only with these concerns if they can be established.

Democratic Representative Ro Khanna has expressed doubt that a TikTok ban could survive legal challenges.

In any event, the groundwork for a TikTok ban has passed its first test — getting House support. Even if the measure receives Senate approval and is signed into law by the President, it will still be some time before TikTok is sold or taken offline in the U.S.

Andreessen Horowitz’s $7.2B new funds for a ‘new era’

Alex Wilhelm, Theresa Loconsolo / 7:57 AM PDT•April 17, 2024

What is worth $11 billion and wants to go to Mars to collect rocks? NASA’s mission to Mars to collect rocks that was expected to cost $11 billion and take ages. So, the U.S. space agency is throwing the doors open to get more input, and that means that startups are looking at an opportunity that is truly out of this world.

But that’s not the only thing going on. Today’s Equity episode is focused on all things startups, which means we also got to chat through Two Chairs’ recent and massive Series C, Quilt’s heat pump work and fundraise, and several IPO updates. Here’s hoping that after Ibotta and Rubrik get out the door, more IPOs follow.

Also on the show today was a grip of venture capital news. Bay Bridge Ventures is raising a $200 million climate fund — it has lots of good company there, given rising LP interest in climate tech more generally — and a SpaceX alum is building a new VC firm that we covered.

To close, the massive, gobsmackingly big $7.2 billion worth of new funds from a16z. We dug into their breakdown on the podcast, but the short version is that it appears that the venture slowdown has not managed to impede the venture firm’s golden touch when it comes to fundraising. Hit play, let’s have some fun!

State of Pre-Seed: Q1 2024

Author: Peter Walker, Published date: April 23, 2024

Fundraising for pre-seed companies stayed robust in Q1 2024, dominated by SAFEs and convertible notes; valuation caps remained flat.

Executive summary

What is a pre-seed round anyway? Many startups will begin their fundraising journeys in this stage, but there are no consensus definitions in this part of the venture market.

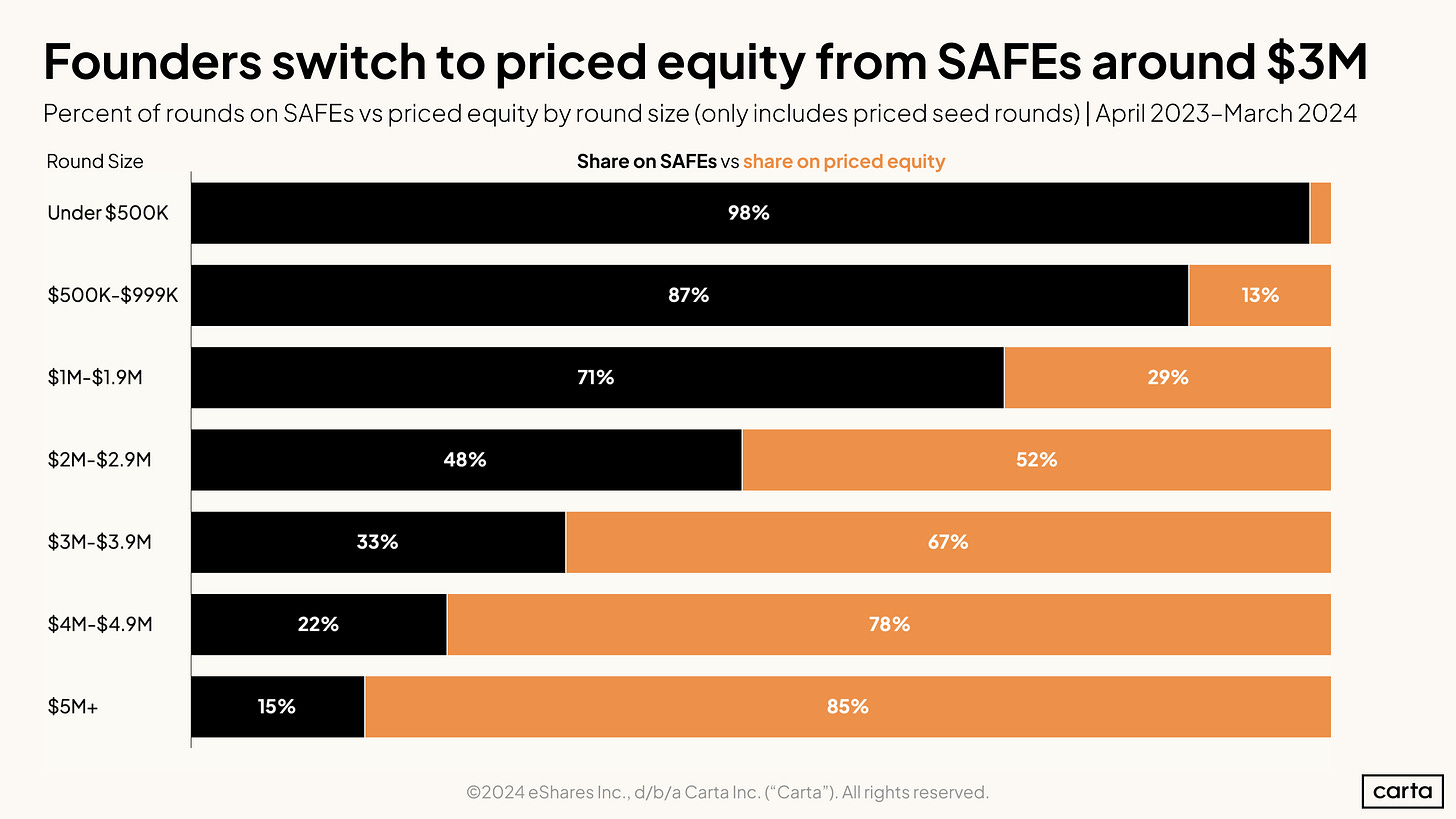

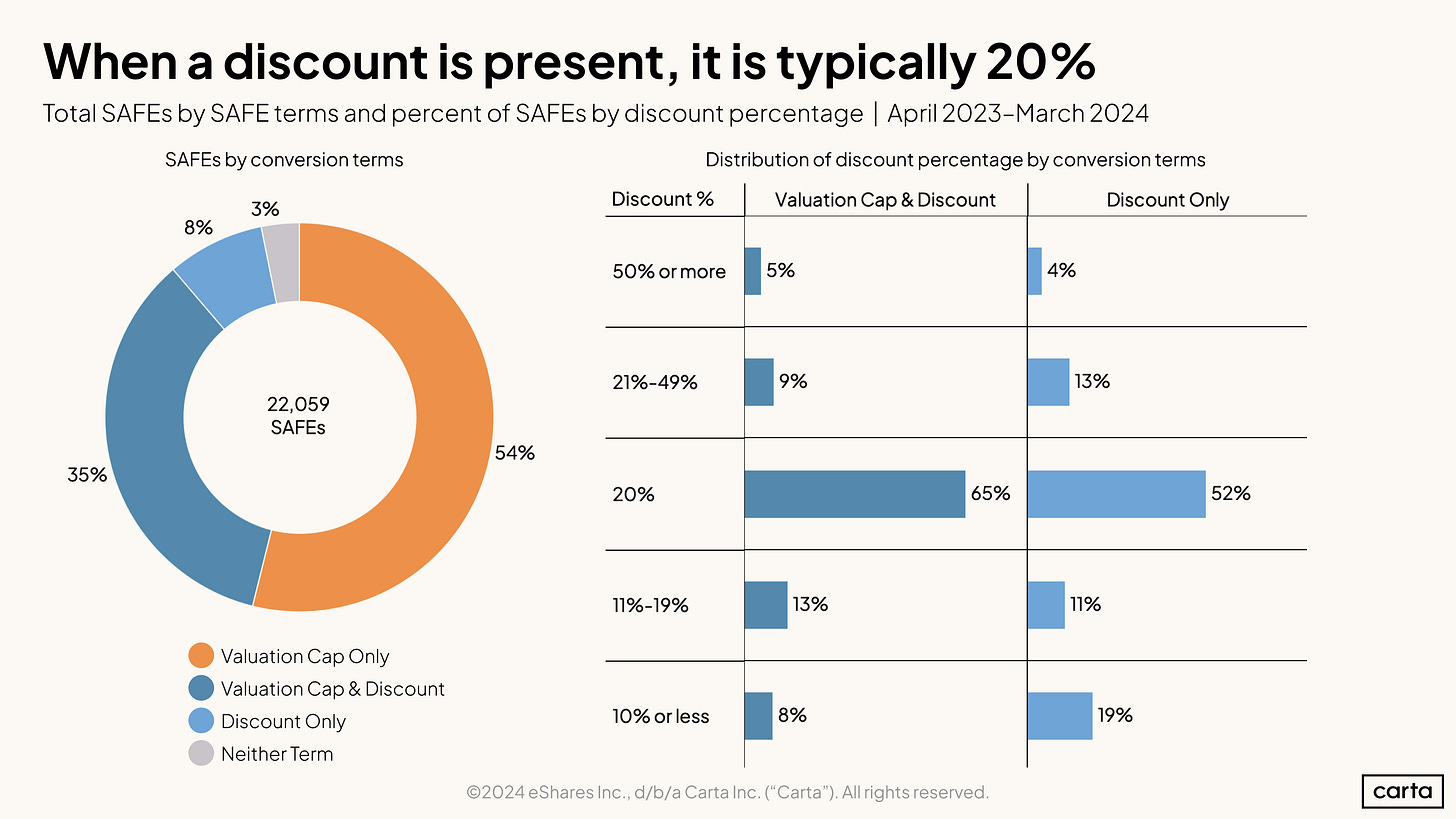

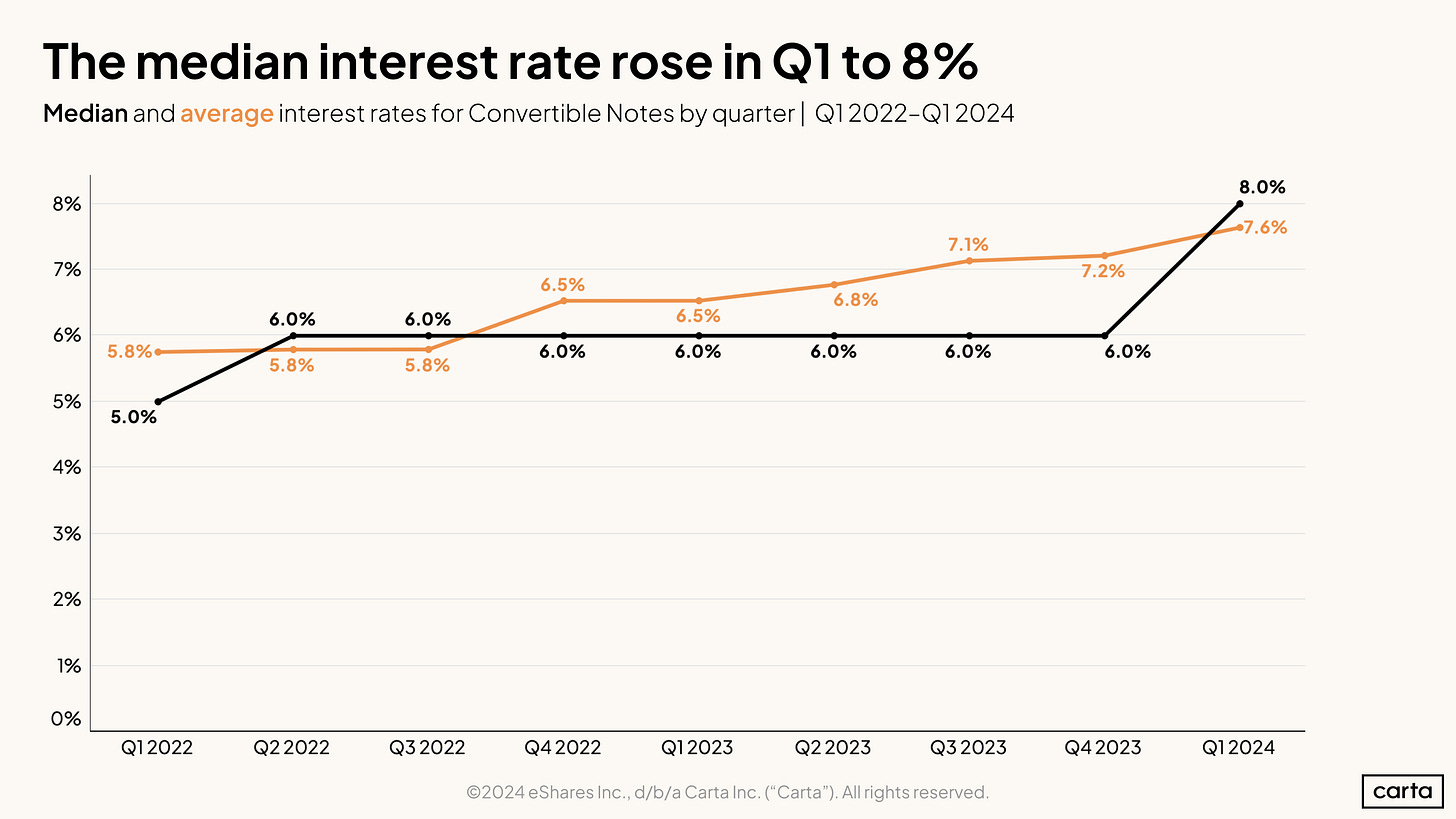

There is one clear trend: Companies at this stage are fundraising using SAFEs (Simple Agreement for Future Equity) and convertible notes as opposed to the standard priced venture rounds.

Given this trend, we think our data set can help founders make sense of the ambiguity. Since 2020, companies on the Carta cap table platform have signed 101,865 individual SAFEs and convertible notes before raising any priced funding. That’s $14.5 billion invested into the earliest startups.

Of course, founders on Carta who have yet to raise a million dollars have access to our cap table platform for free through Carta Launch, which also includes tools to create and fund SAFEs in a few clicks.

If you’d like a high-res deck of all the slides below PLUS five extra graphics breaking down SAFE rounds by industry—download that here.

Q1 highlights

SAFEs have eaten more of the early-stage market: SAFEs are now the preferred investment instrument for all rounds under $3 million.

Valuation caps stayed flat in Q1: The SAFE round valuation caps have shifted less frequently than the full priced round valuations.

Small checks made an impact: 41% of checks in SAFE rounds under $1 million were below $25,000.

Key trends

SAFEs

Convertible notes

Google fires 28 employees after sit-in protest over controversial Project Nimbus contract with Israel

Googlers in Sunnyvale entered Google Cloud CEO Thomas Kurian's office

Paul Sawers @psawers / 3:41 AM PDT•April 18, 2024

Google has terminated the employment of 28 staff following a prolonged sit-in protest at the company’s Sunnyvale and New York offices.

The employees were protesting against Project Nimbus, a $1.2 billion cloud computing contract inked by Google and Amazon with the Israeli government and its military three years ago. The controversial project, which reportedly also provides Israel with the full suite of Google Cloud’s artificial intelligence and machine learning technology, allegedly has strict contractual stipulations that prevent Google and Amazon from bowing to boycott pressure. This effectively means that they must continue providing services to Israel no matter what.

Conflict

Employees at Google have protested and publicly chastised the contract since 2021, but as the Israel-Palestine conflict continues to escalate in the wake of last October’s attacks by Hamas, this unrest is spilling further into the workforces of corporations deemed not only to be helping Israel, but also actively profiteering from the conflict.

While the latest rallies included demonstrations outside Google’s Sunnyvale and New York offices, as well as Amazon’s Seattle HQ, protestors went one step further by going inside the buildings, including the office of Google Cloud CEO Thomas Kurian.

In a statement issued to TechCrunch via anti-Big Tech advocacy firm Justice Speaks, Hasan Ibraheem, a Google software engineer participating in the New York City sit-in protest, said that by providing cloud and AI infrastructure to the Israeli military, Google is “directly implicated in the genocide of the Palestinian people.”

“It’s my responsibility to do everything I can to end this contract even while Google pretends nothing is wrong,” Ibraheem said. “The idea of working for a company that directly provides infrastructure for genocide makes me sick. We’ve tried sending petitions to leadership but they’ve gone ignored. We will make sure they can’t ignore us anymore. We will make as much noise as possible. So many workers don’t know that Google has this contract with the IOF [Israel Offensive Forces]. So many don’t know that their colleagues have been facing harassment for being Muslim, Palestinian and Arab and speaking out. So many people don’t realize how complicit their own company is. It’s our job to make sure they do.”

Nine Google workers were also arrested and forcibly removed from the company’s offices: four in New York and five in Sunnyvale. A separate statement issued by Justice Speaks on behalf of the “Nimbus nine” protestors said that they had demanded to speak with Kurian, but their request was denied.

Startup of the Week

Boston Dynamics’ Atlas humanoid robot goes electric

A day after retiring the hydraulic model, Boston Dynamics' CEO discusses the company’s commercial humanoid ambitions

Brian Heater @bheater / 6:07 AM PDT•April 17, 2024

Atlas lies motionless in a prone position atop interlocking gym mats. The only soundtrack is the whirring of an electric motor. It’s not quiet, exactly, but it’s nothing compared to the hydraulic jerks of its ancestors.

As the camera pans around the robot’s back, its legs bend at the knees. It’s a natural movement, at first, before crossing into an uncanny realm, like something out of a Sam Raimi movie. The robot, which appeared to be lying on its back, has effectively switched positions with this clever bit of leg rotation.

As Atlas fully stands, it does so with its back to the camera. Now the head spins around 180 degrees, before the torso follows suit. It stands for a moment, offering the camera its first clear view of its head — a ring light forming the perimeter of a perfectly round screen. Once again, the torso follows the head’s 180, as Atlas walks away from the camera and out of frame.

A day after retiring the hydraulic version of its humanoid robot, Boston Dynamics just announced that — like Bob Dylan before it — Atlas just went electric.

The pace is fast, the steps still a bit jerky — though significantly more fluid than many of the new commercial humanoids to which we’ve been introduced over the past couple of years. If anything, the gait brings to mind the brash confidence of Spot, Atlas’ cousin whose branch on the evolutionary tree forked off from the humanoid a few generations ago.

All-new Atlas

The new version of the robot is virtually unrecognizable. Gone is the top-heavy torso, the bowed legs and plated armor. There are no exposed cables anywhere to be found on the svelte new mechanical skeleton. The company, which has warded off reactionary complaints of robopocalypse for decades, has opted for a kinder, gentler design than both the original Atlas and more contemporary robots like the Figure 01 and Tesla Optimus.

The new robot’s aesthetic more closely matches that of Agility’s Digit and Apptronik’s Apollo. There’s a softer, more cartoonish design to the traffic-light-headed robot. It’s the “All New Atlas,” according to the video. Boston Dynamics has bucked its own trend by maintaining the research name for a product it will be positioning toward commercialization. SpotMini became Spot. Handle became Stretch. For now, however, Atlas is still Atlas.

“We might revisit this when we really get ready to build and deliver in quantity,” Boston Dynamics CEO Robert Playter tells TechCrunch. “But I think for now, maintaining the branding is worthwhile.”