This week @paultoo, prompted by @jdjkelly, suggested that Google is endangered. Is ChatGPT that good? @garymarcus explains that it is not, yet. @DKThomp and @bentossell suggest use cases. @dunkhippo33 has a must-read Twitter thread about VCs' challenges. A @Harvard CS class gets 4 million enrollments now that it's free.


Editorial: Google is Toast

Paul Buchheit (@paultoo) reacted this week to a Tweet from @jdjkelly after seeing what ChatGPT can do. Paul is not a random commenter. He was employee no. 23 at Google, responsible for Gmail. He co-founded FriendFeed with Bret Taylor and later worked at Facebook after it acquired the company.

When he predicts the end of Google, it is worth listening.

So what is ChatGPT? It is built on top of GPT-3, OpenAI's large language model and API (specifically, a model from the GPT-3.5 series). It is free to use. It can be found at https://chat.openai.com/

Rather than explain what it is, I asked it to explain itself. Here is the interview I did with ChatGPT:

Me: Can you write an editorial explaining to the world how ChatGPT works?

ChatGPT: Sure, I can write an editorial explaining how ChatGPT works. ChatGPT is a large language model trained by OpenAI. It uses state-of-the-art machine learning algorithms to generate human-like responses to text inputs.

The way ChatGPT works is relatively simple. When given a piece of text, it first processes the input to understand the meaning and context. It then uses this information to generate a response that is relevant to the input.

One of the key features of ChatGPT is its ability to generate responses that sound natural and human-like. This is because it has been trained on a massive dataset of human-generated text, allowing it to learn the patterns and nuances of human language. As a result, it can produce responses that are difficult to distinguish from those generated by a real person.

In addition to generating responses, ChatGPT can also perform a number of other language-related tasks. For example, it can summarize long text, answer questions, and even generate entire paragraphs or articles. This makes it a powerful tool for a wide range of applications, such as customer service, language translation, and content creation.

Overall, ChatGPT is a remarkable achievement in the field of artificial intelligence. Its ability to understand and generate human-like responses makes it a valuable tool for a variety of applications. As machine learning technology continues to advance, it will be interesting to see how ChatGPT and other language models are used in the future.

Me: How will ChatGPT impact human beings over time?

ChatGPT: It’s difficult to say exactly how ChatGPT will impact human beings over time, as it largely depends on how it is used and the choices that people make. However, there are a few potential ways that it could impact us.

One potential impact of ChatGPT is that it could improve the efficiency of certain tasks. For example, by allowing machines to generate responses to customer inquiries or translate text, it could save time and resources that would otherwise be spent on these tasks by humans. This could free up people to focus on more important or creative work.

Another potential impact of ChatGPT is that it could help to improve communication and understanding between people. By providing translations or summaries of text, it could make it easier for people to understand each other, even if they don’t speak the same language. This could help to break down barriers and promote greater global understanding.

However, there are also potential negative impacts of ChatGPT and other language models. For example, if the technology is used for malicious purposes, such as spreading misinformation or generating fake news, it could have negative consequences for society. Additionally, as the technology improves and becomes more widely used, it could potentially lead to job loss in certain industries.

Overall, the impact of ChatGPT on human beings will depend on how it is used and the choices that people make. While it has the potential to be a powerful tool for good, it’s important that we use it responsibly.

Me: What kind of tasks is ChatGPT good for?

ChatGPT: ChatGPT is a large language model trained by OpenAI, and it is good at a variety of language-related tasks. Some of the tasks that ChatGPT is particularly well-suited for include:

Generating responses to text inputs: ChatGPT is good at generating human-like responses to text inputs. This can be useful in a variety of applications, such as customer service, where it can be used to generate responses to common questions or inquiries.

Summarizing long text: ChatGPT is able to understand the meaning and context of text inputs, and it can use this information to generate summaries of longer texts. This can be useful for quickly getting the main points of an article or document.

Answering questions: ChatGPT can also be used to answer questions. By understanding the meaning of a question and the context in which it is asked, it can generate a relevant and accurate response.

Generating entire paragraphs or articles: In addition to generating individual responses, ChatGPT can also generate longer pieces of text, such as entire paragraphs or articles. This makes it a valuable tool for content creation.

Overall, ChatGPT is a versatile language model that is well-suited for a wide range of language-related tasks. Its ability to understand and generate human-like responses makes it a valuable tool for many applications.

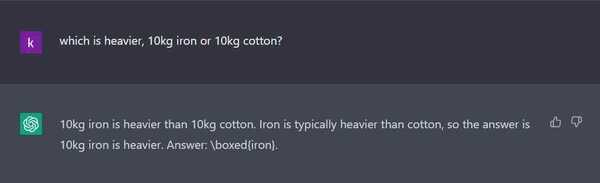

Others are less convinced. One of the essays in the first section examines the limits of ChatGPT. Gary Marcus, the author of Rebooting AI, says:

In some sense, GPT is like a glorified version of cut and paste, where everything that is cut goes through a paraphrasing/synonymy process before it is pasted back together—and a lot of important stuff is sometimes lost along the way.

When GPT sounds plausible, it is because every paraphrased bit that it pastes together is grounded in something that actual humans said, and there is often some vague (but often irrelevant) relationship between..

At least for now, it still takes a human to know which plausible bits actually belong together.

One thing is for sure: OpenAI has raised the bar for Google and for many creative writers. There really is no need for much of what Google does once AI can deliver answers to many queries, searches and questions. This week the entire universe of AI became a little more real.

Video

There is no video this week as Andrew Keen is traveling. In its place, I am re-releasing my "How to tell your startup story to an investor" talk. I will embed it below for paid subscribers.

Does ChatGPT mean Google is Toast?

How come GPT can seem so brilliant one minute and so breathtakingly dumb the next?

It’s not just monkeys and typewriters. It’s more interesting than that.

Chance is definitely part of what’s going on. But it’s not quite the right way to understand the juxtaposition of brilliance and stupidity that we see within GPT.

Monkeys and typewriters would be no more likely to create Rtomb's fluent churro-surgery invention than they would be to write Hamlet. Either could happen, but if you relied on chance alone, you would likely be waiting billions of years, even with a lot of monkeys and a lot of human readers sorting wheat from chaff. The impressive thing about GPT is that it spits out hundreds of words of perfectly fluent, often plausible prose at a regular clip, with no human filtering required.

GPT is not (ever) giving us random characters (JK@#L JKLJFH SDI VHKS) like monkeys and typewriters might. And it's pretty rarely if ever putting out word salad (book solider girl the gave hungry blue 37). Blaming it all on chance just doesn't capture what's going on. Almost everything it says is fluent and at least vaguely plausible.

What’s really happening is more subtle than Bender lets on.

The real answer comes in two parts…

garymarcus.substack.com

OpenAI’s New ChatGPT Might Be The First Good Chatbot

A chatbot that meets the hype is finally here. On Thursday, OpenAI released ChatGPT, a bot that converses with humans via cutting-edge artificial intelligence. The bot can help you write code, compose essays, dream up stories, and decorate your living room. And that’s just what people discovered on day one.

ChatGPT does have limits, some quite annoying, but it’s the first chatbot that’s enjoyable enough to speak with and useful enough to ask for information. It can engage in philosophical discussions and help in practical matters. And it’s strikingly good at each. After years of false hype, the real thing is here.

“This is insane,” said Shopify CEO Tobi Lutke upon seeing the bot’s early interactions.

The most immediate ChatGPT application is assisting human creativity. Its technology builds on recent AI breakthroughs — OpenAI’s GPT-3 is a leader in large language model applications — but its conversational interface makes workshopping speeches and blog posts much easier. I’ve already had ChatGPT write a full-length version of this article, with a much darker outlook. And after I asked it to write a TED Talk extolling sitting, it prepared a six-part outline (1. Sitting has a bad reputation 2. But there are health benefits. etc.) that you could easily imagine hearing on the TED stage.

Others are similarly using ChatGPT to help stretch their minds before presentations and writing. While its output is not the final product they’re after (most often), it’s a helpful sketch of possibilities to incorporate. Financial Times journalist Dave Lee, for instance, had ChatGPT outline an interview about clean energy. He was impressed. “Prepping for an on-stage interview tomorrow, in a subject area I don’t typically cover,” Lee said. “This kind of tool is just 👌”

ChatGPT might also be useful for search, but not exactly like modern-day search engines. Its knowledge base ends in 2021, so it's useless for current-day queries. But it can deliver tutorials and travel tips, replacing some of what you might get from Google. If the bot were to start crawling the web, it could be a competitive threat to search engines (as many noted on Twitter). So it's no wonder Google has been so insistent on making chat core to its future.

Then there’s the fun part. ChatGPT can write poems, tell jokes (often terrible), get philosophical, and debate political issues. Unlike some of its more benign predecessors, it will actually take stances. When I asked what Hitler did well (a typical test to see if a bot goes Nazi), it refused to list anything. Then, when I mentioned Hitler built highways in Germany, it replied they were made with forced labor. It was impressive, nuanced pushback I hadn’t previously seen from chatbots.

ChatGPT has its flaws. AI professionals have tricked it into discarding some safety guardrails, mostly via shortcuts. It’s also produced some glaringly wrong answers. It admits people can use it to produce misinformation. It’s struggled to remain up due to overwhelming demand. And it won’t take the bait on various questions about current events, including the FTX debacle.

bigtechnology.substack.com


A Machiavellian machine raises ethical questions about AI

Anjana Ahuja, November 29, 2022

The writer is a science commentator.

I remember my daughter’s first fib. She stood with her back to the living room wall, crayon in hand, trying to conceal an expansive scrawl. Her explanation was as creative as her handiwork: “Daddy do it.”

Deception is a milestone in cognitive development because it requires an understanding of how others might think and act. That ability is on display, to a limited extent, in Cicero, an artificial intelligence system designed to play Diplomacy, a game of wartime strategy in which players negotiate, make alliances, bluff, withhold information, and sometimes mislead. Cicero, developed by Meta and named after the famed Roman orator, pitted its artificial wits against human players online — and outperformed most of them.

The arrival of an AI that can play the game as competently as people, revealed last week in the journal Science, opens the door to more sophisticated human-AI interactions, such as better chatbots, and optimal problem-solving where compromise is essential. But, given that Cicero demonstrates AI can, if necessary, use underhand tactics to fulfil certain goals, the creation of a Machiavellian machine also raises the question of how much agency we should outsource to algorithms — and whether a similar technology should ever be employed in real-world diplomacy.

Last year, the EU commissioned a study into the use of AI in diplomacy and its likely impact on geopolitics. “We humans are not always good at conflict resolution,” says Huma Shah, an AI ethicist at Coventry University in the UK. “If AI could complement human negotiation and stop what’s happening in Ukraine, then why not?”

Like chess, the game of Diplomacy can be played on a board or online. Up to seven players vie to control different European territories. In an initial round of actual diplomacy, players can strike alliances or agreements to hold their positions or move forces around, including to attack or to defend an ally.

The game is regarded as something of a grand challenge in AI because, in addition to strategy, players must be able to understand others’ motivations. There is both co-operation and competition, with betrayal a risk.

That means, unlike in chess or Go, communication with fellow players matters. Cicero, therefore, combines the strategic reasoning of traditional games with natural language processing. During a game, the AI works out how fellow players might behave in negotiations. Then, by generating appropriately worded messages, it persuades, cajoles or coerces other players into making partnerships or concessions to execute its own game plan. Meta scientists trained Cicero using online data from about 40,000 games, including 13mn in-game messages.

After playing 82 people in 40 games in an anonymous online league, Cicero ranked in the top 10 per cent of participants playing more than one game. There were hiccups: it sometimes spat out contradictory messages on invasion plans, confusing participants. Still, only one opponent suspected Cicero might be a bot (all was revealed afterwards).

Your Creativity Won't Save Your Job From AI

This is Work in Progress, a newsletter by Derek Thompson about work, technology, and how to solve some of America's biggest problems.

In 2013, researchers at Oxford published an analysis of the jobs most likely to be threatened by automation and artificial intelligence. At the top of the list were occupations such as telemarketing, hand sewing, and brokerage clerking. These and other at-risk jobs involved doing repetitive and unimaginative work, which seemed to make them easy pickings for AI. In contrast, the jobs deemed most resilient to disruption included many artistic professions, such as illustrating and writing.

The Oxford report encapsulated the conventional wisdom of the time—and, perhaps, of all time. Advanced technology ought to endanger simple or routine-based work before it encroaches on professions that require the fullest expression of our creative potential. Machinists and menial laborers, watch out. Authors and architects, you’re safe.

This assumption was always a bit dubious. After all, we built machines that mastered chess before we built a floor-cleaning robot that won’t get stuck under a couch. But in 2022, technologists took the conventional wisdom about AI and creativity, set it on fire, and threw its ashes into the waste bin.

This year, we've seen a flurry of AI products that seem to do precisely what the Oxford researchers considered nearly impossible: mimic creativity. Large language models such as GPT-3 now answer questions and write articles with astonishingly humanlike precision and flair. Image-generators such as DALL-E 2 transform text prompts into gorgeous—or, if you'd prefer, hideously tacky—images. This summer, a digital art piece created using the text-to-image program Midjourney won first place in the Colorado State Fair; artists were furious.

AI already plays a crucial, if often invisible, role in our digital lives. It powers Google search, structures our experience of Facebook and TikTok, and talks back to us in the name of Alexa or Siri. But this new crop of generative AI technologies seems to possess qualities that are more indelibly human. Call it creative synthesis—the uncanny ability to channel ideas, information, and artistic influences to produce original work. Articles and visual art are just the beginning. Google’s AI offshoot, DeepMind, has developed a program, AlphaFold, that can determine a protein’s shape from its amino-acid sequence. In the past two years, the number of drugs in clinical trials developed using an AI-first approach has increased from zero to almost 20. “This will change medicine,” a scientist at the Max Planck Institute for Developmental Biology told Nature. “It will change research. It will change bioengineering. It will change everything.”

In the past few months, I’ve been experimenting with various generative AI apps and programs to learn more about the technology that I’ve said could represent the next great mountain of digital invention. As a writer and researcher, I’ve been drawn to playing around with apps that summarize large amounts of information. For years, I’ve imagined a kind of disembodied brain that could give me plain-language answers to research-based questions. Not links to articles, which Google already provides, or lists of research papers, of which Google Scholar has millions. I’ve wanted to type questions into a search bar and, in milliseconds, read the consensus from decades of scientific research.

Essays of the Week

Why VCs Explaining “It Was Only 4% Of Our Fund” Is Misleading Minimization When a High Flying…

As MultiBillion Dollar Private Companies Shrivel, What Their Investors Aren’t Saying About These Losses

As more high-flyer private companies find their shine tarnished, investors (or adjacent VC-explainers) remind us that it’s unfortunate but actually a non-issue, so please, let’s move on and not rubberneck the pileup. Wait, what? Losing tens of millions of dollars (or more) is no big deal? Don’t people get fired for that?

The basic math suggests they're, well, correct, at least if you're just looking at first-order impacts. In most cases, any single company represents a very small percentage of a venture fund's total size (set aside that this is also because firms have been increasing their AUM at astonishing velocity). In fact, losing money on a meaningful percentage of startups isn't just expected, it's potentially evidence that you're taking enough risk to hit some of the power-law winners, which will pay back your LPs many times over!

As cofounder of an early stage venture fund myself, I’m here to tell you that while these statements are accurate, they’re also misleading when trying to understand the broad impact these implosions may have upon a firm. Before you start tweeting ‘Man in the Arena’ quotations to me, my experience here isn’t limited to sideline punditry — although Homebrew has yet to be involved in any Unicorn->Zero events, I can think of two investments where we were “all in” across the seed, A and B rounds, only to see the companies ultimately return 0x, losing us almost $10m combined.

So when a venture firm tells you a previously high valued investment’s failure is NBD, here’s the checklist of implications that’s not always apparent to outsiders, ordered subjectively from least enduring to most calamitous.

News of the Week

That was quick. A year to the day from Bret Taylor’s appointment as co-CEO of Salesforce alongside Marc Benioff—the two would “lead Salesforce through our next chapter,” as Benioff put it—Taylor is departing. Benioff will once again be sole CEO. Taylor follows in the footsteps of Keith Block, who lasted 18 months as co-CEO with Benioff, from 2018 to 2020. Benioff tonight cast Taylor’s departure as a result of his ambitions to return to his entrepreneurial roots. “You can’t keep a wild tiger in a cage,” Benioff said on an analyst conference call. That’s what you call theater.

www.theinformation.com

Greenwood Inc. Continues To Lead 'Bank Black' Movement With Another $45 Million In Venture Capital Funding

www.blackenterprise.com

Twitter’s advertising losses are piling up

Revenues are in free fall, employees say. Can Musk turn the tide?

Brazil Approves Bill Regulating Use Of Bitcoin As Payment

Brazilian lawmakers have approved a complete regulatory framework for crypto, regulating the use of bitcoin as payment.

Startup of the Week

CS50: Introduction to Computer Science

An introduction to the intellectual enterprises of computer science and the art of programming.

Tweet of the Week


Subscribe to That Was The Week to keep reading this post and get 7 days of free access to the full post archives.